Abstract

At CRYPTO ’12, Landecker et al. introduced the cascaded LRW2 (or CLRW2) construction and proved that it is a secure tweakable block cipher up to roughly \( 2^{2n/3} \) queries. Recently, Mennink has presented a distinguishing attack on CLRW2 in \( 2n^{1/2}2^{3n/4} \) queries. In the same paper, he discussed some non-trivial bottlenecks in proving tight security bound, i.e., security up to \( 2^{3n/4} \) queries. Subsequently, he proved security up to \( 2^{3n/4} \) queries for a variant of CLRW2 using 4-wise independent AXU assumption and the restriction that each tweak value occurs at most \( 2^{n/4} \) times. Moreover, his proof relies on a version of mirror theory which is yet to be publicly verified. In this paper, we resolve the bottlenecks in Mennink’s approach and prove that the original CLRW2 is indeed a secure tweakable block cipher up to roughly \( 2^{3n/4} \) queries. To do so, we develop two new tools: First, we give a probabilistic result that provides improved bound on the joint probability of some special collision events, and second, we present a variant of Patarin’s mirror theory in tweakable permutation settings with a self-contained and concrete proof. Both these results are of generic nature and can be of independent interests. To demonstrate the applicability of these tools, we also prove tight security up to roughly \( 2^{3n/4} \) queries for a variant of DbHtS, called DbHtS-p, that uses two independent universal hash functions.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Tweakable Block Ciphers: A tweakable block cipher (or TBC for short) is a cryptographic primitive that has an additional public indexing parameter called tweak in addition to the usual secret key of a standard block cipher. This means that a tweakable block cipher, \( {\widetilde{E}}: {\mathcal {K}}\times {\mathcal {T}}\times {\mathcal {M}}\rightarrow {\mathcal {M}}\), is a family of permutations on the plaintext/ciphertext space \( {\mathcal {M}}\) indexed by two parameters: the secret key \( k \in {\mathcal {K}}\) and the public tweak \( t \in {\mathcal {T}}\). Liskov, Rivest and Wagner formalized the concept of TBCs in their renowned work [1]. Tweakable block ciphers are more versatile than a standard block cipher and find a broad range of applications, most notably in authenticated encryption schemes, such as TAE [1], \( \mathsf {\Theta } \mathsf {CB}\) [2] (TBC-based generalization of the OCB family [2,3,4]), PIV [5], COPA [6], SCT [7] (used in Deoxys [7, 8]) and AEZ [9]; and message authentication codes, such as PMAC_TBC3K and PMAC_TBC1K [10], PMAC2x and PMACx [11], ZMAC [12], NaT and HaT [13], ZMAC+ [14], DoveMAC [15]. Apart from this, TBCs have also been employed in encryption schemes [16,17,18,19,20,21].

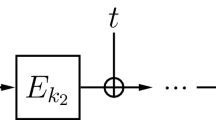

Birthday-Bound Secure TBCs: Although there are some TBC constructions designed from scratch, notably Deoxys-BC [8] and Skinny [8, 22], still the wide availability of secure and well-analyzed block ciphers make them perfect candidates for constructing TBCs. Liskov et al. [1] proposed two constructions for TBCs based on a secure block cipher. The second construction, called LRW2, is defined as follows:

where E is a block cipher, k is the block cipher key and h is an XOR universal hash function. The LRW2 construction is strongly related to the \( \textsf {XEX} \) construction by Rogaway [2] and its extensions by Chakraborty and Sarkar [23], Minematsu [24] and Granger et al. [25]. All these schemes are inherently birthday-bound secure due to the internal hash XOR collisions, i.e., the adversary can choose approx. \( 2^{n/2} \) queries in such a way that there will be two queries (t, m) and \( (t',m') \) with \( m \oplus h(t) = m' \oplus h(t') \). This leads to a simple distinguishing event \( c \oplus c' = m \oplus m' \).

Beyond-Birthday-Bound Secure TBCs: Landecker et al. [26] first suggested the cascading of two independent LRW2 instances to get a beyond-birthday-bound (BBB) secure TBC, called CLRW2, i.e.,

They proved that CLRW2 is a secure TBC up to approx. \( 2^{2n/3} \) queries. Later on, Procter [27] pointed out a flaw in the security proof of CLRW2. The proof was subsequently fixed by both Landecker et al. and Procter to recover the claimed security bounds. Lampe and Seurin [28] studied the \( \ell \ge 2 \) independent cascades of LRW2 and proved that it is secure up to approx. \( 2^{\frac{\ell n}{\ell +2}} \) queries. They further conjectured that the \( \ell \) cascade is secure up to \(2^{\frac{\ell n}{\ell +1}} \) queries. Recently, Mennink [29] has showed a \(2n^{1/2}2^{3n/4} \)-query attack on CLRW2. In the same paper, he also proved security up to \( 2^{3n/4} \) queries, albeit for a variant of CLRW2 with strong assumptions on the hash functions and restrictions on tweak repetitions.

All of the above constructions are proved to be secure in standard model. However, there are TBC constructions in public random permutation and ideal cipher model as well. In [13], Cogliati, Lampe and Seurin introduced the tweakable Even–Mansour construction and its cascaded variant. They showed that the two-round construction is secure up to approx. \( 2^{2n/3} \) queries. A simple corollary of this result also gives security of CLRW2 up to \( 2^{2n/3} \) queries. The bound is tight in the ideal permutation model as one can simply fix the tweak and use the \( 2^{2n/3} \) queries attack on key alternating cipher by Bogdanov et al. [30]. Some notable BBB secure TBC constructions in the ideal cipher model include Mennink’s \(\tilde{\textsf {F}}[1]\) and \(\tilde{\textsf {F}}[2]\) [31, 32], Wang et al. 32 constructions [33] and their generalization, called XHX, by Jha et al. [34]. All of these constructions are at most birthday-bound secure in the sum of key size and block size.Footnote 1 Recently, Lee and Lee [35] have proved that a two-level cascade of XHX, called XHX2, achieves BBB security in terms of the sum of key size and block size.

1.1 Recent Developments in the Analysis of CLRW2

In [29], Mennink presented an improved security analysis of CLRW2. The major contribution was an attack in approx. \(n^{1/2}2^{3n/4} \) queries. The attack works by finding four queries \( (t,m_1,c_1) \), \( (t',m_2,c_2) \), \( (t,m_3,c_3) \) and \((t',m_4,c_4) \) such that

This leads to a simple distinguishing attack since, in case of CLRW2,

happens with probability 1, given \( \texttt {AltColl} \) holds. In contrast, this happens with probability close to \( 1/2^n \) for an ideal tweakable random permutation.

Following on the insights from the attack, Mennink [29] also gives a security proof of the same order for a variant of CLRW2. Basically, the proof bounds the probability that the above-given four equations hold. Additionally, inspired by [36], Patarin’s mirror theory [37,38,39] is used which requires a bound on the probability of some more bad events. The major bottleneck in proving the security beyond \( 2^{2n/3} \) queries comes from two directions:

First, there is no straightforward way of proving the upper bound of the probability of occurrence of \( \texttt {AltColl}\) to \(\frac{q^4}{2^{3n}} \), where q is the number of queries. This is due to two reasons: (1) the adversary has full control over the tweak usages and (2) the hash functions are just 2-wise independent XOR universal.

Second, mirror theory was primarily developed to lower bound the number of solutions to equations arising for some random system which is trying to mimic a random function. This is not the case here, and as we will see in later sections, the mirror theory bound is directly dependent on tweak repetitions.

In order to bypass the two bottlenecks, the following assumptions are made in [29]:

- 1.

The hash functions are 4-wise independent AXU.

- 2.

The maximum number of tweak repetitions is restricted to \( 2^{n/4} \).

- 3.

A limited variant of mirror theory result is true for \( q < 2^{3n/4} \).

Among the three assumptions, the first two are at least plausible. But the last assumption is questionable as barring certain restricted cases, the proof of mirror theory has many gaps which are still open or unproved, as has been noted in [40, 41].

1.2 Contributions of this Work

In light of the above discussion, we revisit the proof strategy of [29] (see Sect. 3), explicitly considering each of the issues. We show that all three assumptions used in [29] are dispensable. In order to do so, we develop some new tools which are described below:

- 1.

The Alternating Events Lemma We derive a generic tool (see Sect. 4) to bound the probability of events of the form \( \texttt {AltColl} \). In CLRW2 analysis, only a special case is required, where the randomness comes from two independent universal hash functions.

- 2.

Mirror Theory in Tweakable Permutation Setting We adapt the mirror theory line of argument (see Sect. 5) to get suitable bounds in tweakable permutation setting. This is a generalization of the existing mirror theory result in function setting.

Using the above-mentioned tools, we prove that CLRW2 is secure up to approx. \( 2^{3n/4} \) queries (see Sect. 6). Our result, in combination with the attack in [29] (see supplementary material B), gives the tight (up to a logarithmic factor) security of CLRW2.

As a side result on the application of our tools, we also prove tight security up to roughly \( 2^{3n/4} \) queries for a variant of DbHtS [42], called \(\textsf {DbHtS-p}\), that uses two independent universal hash functions (see Sect. 7).

Here, we explicitly remark that our bound on CLRW2 is not derivable from the recent result on XHX2 [35].

2 Preliminaries

Notational Setup: For \( n \in {{\mathbb {N}}}\), [n] denotes the set \( \{1,2,\ldots ,n\} \), \( \{0,1\}^n \) denotes the set of bit strings of length n, and \( {\textsf {Perm}}(n) \) denotes the set of all permutations over \( \{0,1\}^n \). For \( n,\kappa \in {{\mathbb {N}}}\), \({\textsf {BPerm}}(\kappa ,n) \) denotes the set of all families of permutations \( \pi _k := \pi (k,\cdot ) \in {\textsf {Perm}}(n) \), indexed by \(k \in \{0,1\}^{\kappa } \). We sometimes extend this notation, whereby \( {\textsf {BPerm}}(\kappa ,\tau ,n) \) denotes the set of all families of permutations \( \pi _{(k,t)} \), indexed by \( (k,t) \in \{0,1\}^\kappa \times \{0,1\}^\tau \). For \( n,r \in {{\mathbb {N}}}\), such that \( n \ge r \), we define the falling factorial \( (n)_r :=n!/(n-r)! = n(n-1)\cdots (n-r+1) \).

For \( q \in {{\mathbb {N}}}\), \( x^q \) denotes the q-tuple \((x_1,x_2,\ldots ,x_q) \), and \( {\widehat{x}}^q \) denotes the set \(\{x_i : i \in [q]\} \). By an abuse of notation, we also use \( x^q \) to denote the multiset \( \{x_i : i \in [q]\} \) and \( \mu (x^q,x') \) to denote the multiplicity of \( x' \in x^q \). For a set \( {\mathcal {I}}\subseteq [q] \) and a q-tuple \( x^q \), \( x^{{\mathcal {I}}} \) denotes the tuple \( (x_i)_{i \in {\mathcal {I}}} \). For a pair of tuples \( x^q \) and \(y^q\), \( (x^q,y^q) \) denotes the 2-ary q-tuple \(((x_1,y_1),\ldots ,(x_q,y_q)) \). An n-ary q-tuple is defined analogously. For \( q \in {{\mathbb {N}}}\), for any set \( {\mathcal {X}}\), \( ({\mathcal {X}})_q\) denotes the set of all q-tuples with distinct elements from \({\mathcal {X}}\). For \( q \in {{\mathbb {N}}}\), a 2-ary tuple \( (x^q,y^q) \) is called permutation compatible, denoted \({x^q}\leftrightsquigarrow {y^q} \), if \(x_i = x_j \iff y_i =y_j \). Extending notations, a 3-ary tuple \((t^q,x^q,y^q) \) is called tweakable permutation compatible, denoted by \( ({t^q}, {x^q})\leftrightsquigarrow ({t^q}, {y^q}) \), if \( (t_i,x_i) = (t_j,x_j) \iff (t_i,y_i) = (t_j,y_j) \). For any tuple \( x^q \in {\mathcal {X}}^q \), and for any function \( f: {\mathcal {X}}\rightarrow {\mathcal {Y}}\), \( f(x^q) \) denotes the tuple \((f(x_1),\ldots ,f(x_q)) \). We use shorthand notation \(\exists ^*\) to represent the phrase “there exists distinct.”

We use the conventions: Uppercase and lowercase letters denote variables and values, respectively, and Serif font letters are used to denote random variables, unless stated otherwise. For a finite set \( {\mathcal {X}}\), \( \textsf {X} \leftarrow \!\!{\$}\,{\mathcal {X}}\) denotes the uniform and random sampling of \( \textsf {X} \) from \({\mathcal {X}}\).

2.1 Some Useful Inequalities

Definition 2.1

For \( r \ge s \), let \( a = (a_i)_{i \in [r]} \) and \( b = (b_j)_{j \in [s]} \) be two sequences over \( {{\mathbb {N}}}\). We say that acompresses tob, if there exists a partition \( {\mathcal {P}}\) of [r] such that \( {\mathcal {P}}\) contains exactly s cells, say \( {\mathcal {P}}_1,\ldots ,{\mathcal {P}}_s \), and \( \forall i\in [s],~~b_i = \sum _{j \in {\mathcal {P}}_i}a_j \).

Proposition 1

For \( r \ge s \), let \( a=(a_i)_{i \in [r]} \) and \( b=(b_j)_{j \in [s]} \) be sequences over \( {{\mathbb {N}}}\), such that a compresses to b. Then for any \( n \in {{\mathbb {N}}}\), such that \( 2^n \ge \sum _{i=1}^{r} a_i \), we have \( \prod _{i=1}^{r}(2^n)_{a_i} \ge \prod _{j=1}^{s}(2^n)_{b_j} \).

In [34, Proof of Lemma 3], the authors refer to a variant of Proposition 1. We remark that this variant [34, Fact 1] is in fact false. However, [34, Proof of Lemma 3] implicitly used Proposition 1 and hence stands correct.

Proposition 2

For \( r \ge 2 \), let \( c=(c_i)_{i\in [r]} \) and \( d =(d_i)_{i\in [r]}\) be two sequences over \( {{\mathbb {N}}}\). Let \(a_1,a_2,b_1,b_2 \in {{\mathbb {N}}}\), such that \( c_i \le a_j \), \( c_i + d_i \le a_j + b_j \) for all \( i \in [r] \) and \( j \in [2] \), and \(\sum _{i=1}^{r}d_i = b_1+b_2 \). Then, for any \( n \in {{\mathbb {N}}}\), such that \( a_j + b_j \le 2^n \) for \(j \in [2] \), we have \(\prod _{i=1}^{r} (2^n-c_i)_{d_i} \ge (2^n-a_1)_{b_1}(2^n-a_2)_{b_2}\).

Proposition 2 is quite intuitive, in the sense, that the starting value in each of the falling factorial terms on the left is at least as much as the starting values on the right, and the total number of terms is same on both the sides. The formal proofs of Proposition 1 and 2 are given in supplementary material A.

2.2 (Tweakable) Block Ciphers and Random Permutations

A block cipher with key size \( \kappa \) and block size n is a family of permutations \( {E}\in {\textsf {BPerm}}(\kappa ,n) \). For \( k \in \{0,1\}^\kappa \), we denote \( {E}_k(\cdot ) := {E}(k,\cdot ) \) and \({E}^{-1}_k(\cdot ) := {E}^{-1}(k,\cdot ) \). A tweakable block cipher with key size \( \kappa \), tweak size \( \tau \) and block size n is a family of permutations \( {\widetilde{E}}\in {\textsf {BPerm}}(\kappa ,\tau ,n) \). For \( k \in \{0,1\}^\kappa \) and \( t \in \{0,1\}^\tau \), we denote \({\widetilde{E}}_k(t,\cdot ) := {\widetilde{E}}(k,t,\cdot ) \) and \( {\widetilde{E}}^{-1}_k(t,\cdot ) :={\widetilde{E}}^{-1}(k,t,\cdot ) \). Throughout this paper, we fix \(\kappa ,\tau ,n \in {{\mathbb {N}}}\) as the key size, tweak size and block size, respectively, of the given (tweakable) block cipher.

We say that \( \Pi \) is an (ideal) random permutation on block space \( \{0,1\}^n\) to indicate that \( \Pi \leftarrow \!\!{\$}\,{\textsf {Perm}}(n) \). Similarly, we say that \( {{\widetilde{\Pi }}}\) is an (ideal) tweakable random permutation on tweak space \( \{0,1\}^\tau \) and block space \(\{0,1\}^n\) to indicate that \( {{\widetilde{\Pi }}}\leftarrow \!\!{\$}\,{\textsf {BPerm}}(\tau ,n) \).

2.3 (T)SPRP Security Definitions

In this paper, we assume that the distinguisher is non-trivial, i.e., it never makes a duplicate query, and it never makes a query for which the response is already known due to some previous query. For instance, say an oracle gives bidirectional access (permutation P with inverse). If the adversary has made a forward call x and gets response \( y = P(x) \). Then, making an inverse query y is redundant. Note that such redundancies are necessary in certain security games, most notably in indifferentiability, where the adversary can use these redundancies to catch a simulator. Let \({{\mathbb {A}}}(q,t) \) be the class of all non-trivial distinguishers limited to q oracle queries and t computations.

(Tweakable) Strong Pseudorandom Permutation (SPRP): The SPRP advantage of distinguisher \( {{\mathscr {A}}} \) against \( {E}\) instantiated with a key \({\textsf {K}} \leftarrow \!\!{\$}\,\{0,1\}^\kappa \) is defined as

The SPRP security of \( {E}\) is defined as \(\displaystyle \mathbf {Adv}^{{\textsf {sprp}}}_{{E}}(q,t) := \max _{{{\mathscr {A}}} \in {{\mathbb {A}}}(q,t)} \mathbf {Adv}^{{\textsf {sprp}}}_{E}({{\mathscr {A}}}) \). Similarly, the TSPRP advantage of distinguisher \( {{\mathscr {A}}} \) against \({\widetilde{E}}\) instantiated with a key \( {\textsf {K}} \leftarrow \!\!{\$}\,\{0,1\}^\kappa \) is defined as

The TSPRP security of \( {\widetilde{E}}\) is defined as \(\displaystyle \mathbf {Adv}^{{\textsf {tsprp}}}_{{\widetilde{E}}}(q,t) := \max _{{{\mathscr {A}}} \in {{\mathbb {A}}}(q,t)} \mathbf {Adv}^{{\textsf {tsprp}}}_{{\widetilde{E}}}({{\mathscr {A}}}) \).

2.4 The Expectation Method

Let \( {{\mathscr {A}}} \) be a computationally unbounded and deterministic distinguisher that tries to distinguish between two oracles \({\mathcal {O}}_0 \) and \( {\mathcal {O}}_1 \) via black box interaction with one of them. We denote the query–response tuple of \({{\mathscr {A}}}\)’s interaction with its oracle by a transcript \( \omega \). This may also include any additional information the oracle chooses to reveal to the distinguisher at the end of the query–response phase of the game. We denote by  (res.

(res.  ) the random transcript variable when \( {{\mathscr {A}}} \) interacts with \( {\mathcal {O}}_1 \) (res. \({\mathcal {O}}_0 \)). The probability of realizing a given transcript \(\omega \) in the security game with an oracle \( {\mathcal {O}}\) is known as the interpolation probability of \( \omega \) with respect to \( {\mathcal {O}}\). Since \( {{\mathscr {A}}} \) is deterministic, this probability depends only on the oracle \( {\mathcal {O}}\) and the transcript \( \omega \). A transcript \( \omega \) is said to be attainable if

) the random transcript variable when \( {{\mathscr {A}}} \) interacts with \( {\mathcal {O}}_1 \) (res. \({\mathcal {O}}_0 \)). The probability of realizing a given transcript \(\omega \) in the security game with an oracle \( {\mathcal {O}}\) is known as the interpolation probability of \( \omega \) with respect to \( {\mathcal {O}}\). Since \( {{\mathscr {A}}} \) is deterministic, this probability depends only on the oracle \( {\mathcal {O}}\) and the transcript \( \omega \). A transcript \( \omega \) is said to be attainable if  . The expectation method (stated below) is quite useful in obtaining improved bounds in many cases [43,44,45]. The H-coefficient technique due to Patarin [46] is a simple corollary of this result where the \(\epsilon _{{\textsf {ratio}}}\) is a constant function.

. The expectation method (stated below) is quite useful in obtaining improved bounds in many cases [43,44,45]. The H-coefficient technique due to Patarin [46] is a simple corollary of this result where the \(\epsilon _{{\textsf {ratio}}}\) is a constant function.

Lemma 2.1

(Expectation Method [43]) Let \( \varOmega \) be the set of all transcripts. For some \( \epsilon _{\textsf {bad} }> 0 \) and a nonnegative function \({\epsilon _{\textsf {ratio} }}: \varOmega \rightarrow [0,\infty ) \), suppose there is a set \( \varOmega _\textsf {bad} \subseteq \varOmega \) satisfying the following:

;

;For any \( \omega \notin \varOmega _\textsf {bad} \), \( \omega \) is attainable and

.

.

Then for an distinguisher \( {{\mathscr {A}}} \) trying to distinguish between \( {\mathcal {O}}_1 \) and \( {\mathcal {O}}_0 \), we have the following bound on its distinguishing advantage:

2.5 Patarin’s Mirror Theory

In [37], Patarin defines mirror theory as a technique to estimate the number of solutions of linear systems of equalities and linear non-equalities in finite groups. In its most general case, the mirror theory proof is tractable up to the order of \( 2^{2n/3} \) security bound, but it readily becomes complex and extremely difficult to verify, as one aims for the optimal bound [40, 41]. We remark here that this in no way suggests that the result is incorrect, and in future, we might even get some independent verifications of the result.

We restrict ourselves to the binary field \( {{\mathbb {F}}}_{2}^{n}\) with \( \oplus \) as the group operation. We will use the Mennink and Neves interpretation [36] of mirror theory. For ease of understanding and notational coherency, we sometimes use different parametrization and naming conventions. Let \( q \ge 1 \) and let \({\mathcal {L}}\) be the system of linear equations

where \( Y^q \) and \( V^q \) are unknowns, and \( \lambda ^q \in (\{0,1\}^n)^q \) are knowns. In addition, there are (in)equality restrictions on \( Y^q \) and \( V^q \), which uniquely determine \({\widehat{Y}}^q \) and \( {\widehat{V}}^q \). We assume that \({\widehat{Y}}^q \) and \( {\widehat{V}}^q \) are indexed in an arbitrary order by the index sets \( [q_Y] \) and \( [q_V] \), where \( q_Y =|{\widehat{Y}}^q| \) and \( q_V = |{\widehat{V}}^q| \). This assumption is without any loss of generality as this does not affect the system \({\mathcal {L}}\). Given such an ordering, we can view \( {\widehat{Y}}^q \) and \({\widehat{V}}^q \) as ordered sets \( \{Y'_1,\ldots ,Y'_{q_Y}\} \) and \(\{V'_1,\ldots ,V'_{q_V}\} \), respectively. We define two surjective index mappings:

It is easy to verify that \( {\mathcal {L}}\) is uniquely determined by \((\varphi _Y,\varphi _V,\lambda ^q) \), and vice versa. Consider a labeled bipartite graph \( {\mathcal {G}}({\mathcal {L}}) = ([q_Y],[q_V],{\mathcal {E}}) \) associated with \( {\mathcal {L}}\), where \( {\mathcal {E}}= \{(\varphi _Y(i),\varphi _V(i),\lambda _i) : i \in [q]\} \), \(\lambda _i\) being the label of edge. Clearly, each equation in \({\mathcal {L}}\) corresponds to a unique labeled edge (assuming no duplicate equations). We give three definitions with respect to the system \({\mathcal {L}}\) using \({\mathcal {G}}({\mathcal {L}})\).

Definition 2.2

(cycle-freeness) \({\mathcal {L}}\) is said to be cycle-free if and only if \( {\mathcal {G}}({\mathcal {L}}) \) is acyclic.

Definition 2.3

(\(\xi _{\max }\)-component) Two distinct equations (or unknowns) in \( {\mathcal {L}}\) are said to be in the same component if and only if the corresponding edges (res. vertices) in \( {\mathcal {G}}({\mathcal {L}}) \) are in the same component. The size of any component \( {\mathcal {C}}\) in \( {\mathcal {L}}\), denoted \( \xi ({\mathcal {C}}) \), is the number of vertices in the corresponding component of \({\mathcal {G}}({\mathcal {L}})\), and the maximum component size is denoted by \(\xi _{\max }({\mathcal {L}})\) (or simply \( \xi _{\max } \)).

Definition 2.4

(non-degeneracy) \({\mathcal {L}}\) is said to be non-degenerate if and only if there does not exist a path of even length at least 2 in \( {\mathcal {G}}({\mathcal {L}}) \) such that the labels along the edges on this path sum up to zero.

Theorem 2.1

(Fundamental Theorem of Mirror Theory [37]) Let \({\mathcal {L}}\) be a system of equations over the unknowns \(({\widehat{Y}}^q, {\widehat{V}}^q) \), that is, (i) cycle-free, (ii) non-degenerate and (iii) \( \xi _{\max }^2 \cdot \max \{q_Y,q_V\} \le 2^n/67 \). Then, the number of solutions \((y_1,\ldots ,y_{q_Y},v_1,\ldots ,v_{q_V}) \) of \( {\mathcal {L}}\), denoted \(h_q \), such that \( y_i \ne y_j \) and \( v_i \ne v_j \) for all \(i\ne j \), satisfies

A proof of this theorem is given in [37]. As mentioned before, the proof is quite involved with some claims remaining open or unproved. On the other hand, the same paper contains results for various other cases. For instance, for \(\xi =2\), several sub-optimal bounds have been shown. By sub-optimal, we mean that a factor of \( (1-\epsilon ) \), for some \( \epsilon > 0 \), is multiplied to the right-hand side of Eq. (3). Inspired by this, we give the following terminology which will be useful in later references to mirror theory.

For \( \xi \ge 2 \), \( \epsilon > 0 \), we write \((\xi ,\epsilon )\)-restricted mirror theory theorem to denote the mirror theory result in which the number of solutions, \( h_q \), of a system of equations with \( \xi _{\max } = \xi \), satisfies \( h_q \ge \left( 1-\epsilon \right) \frac{(2^n)_{q_Y}(2^n)_{q_V}}{2^{nq}} \).

Mirror theory has been primarily used for bounding the pseudorandomness of sum of permutations [37, 40, 47, 48] with respect to a random function. For instance, suppose we sample elements in \({\widehat{Y}}^q \) and \( {\widehat{V}}^q \) as outputs of two independent random permutations \( \Pi _1 \) and \( \Pi _2 \), respectively, over \(q_Y\) and \( q_V \) distinct inputs, respectively. Let \({\textsf {pr}}_{1} \) be the probability of realizing the system of equations \( {\mathcal {L}}\) and \( {\textsf {pr}}_{0} \) be the probability of realizing the q-tuple \( \lambda ^q \) through random function outputs over q distinct inputs. Then, it is easy to see that \( {\textsf {pr}}_{1} = h_q/(2^n)_{q_Y}(2^n)_{q_V}\) and \( {\textsf {pr}}_{0} = 1/2^{nq} \). Clearly, the above-given lower bound on \( h_q \) implies that \( {\textsf {pr}}_{1} \ge (1 - \epsilon ) {\textsf {pr}}_{0} \). When combined with the H-coefficient technique, we get an \( \epsilon \) term in the distinguishing advantage bound for sum of random permutations. Here, \( \epsilon \) can be viewed as the degree of deviation from random function behavior. This is precisely the reason that one finds terms of the form \( (2^n)_{q_Y}(2^n)_{q_V} \) and \( 2^{nq} \) in mirror theory bounds. We refer the readers to [36, 37] for a more detailed exposition on the aim and motivations behind mirror theory.

In [29], \( (4,{3q}/{2^{n}}) \)-restricted mirror theory theorem is used. In Sect. 5, we study the \( (\xi ,{q^4}/{2^{3n}}) \) case, for \( \xi \le 2^n/2q \) and present a variant of mirror theory suitable for tweakable permutation scenario.

3 Revisiting Mennink’s Improved Bound on CLRW2

We first describe the notion of \( \ell \)-wise independent XOR universal hash functions as given in [29]. This notion will be used for the description of CLRW2 (for \( \ell = 2 \)), as well as Mennink’s improved bound on CLRW2 (for \( \ell = 4 \)).

Definition 3.1

For \( \ell \ge 2 \), \( \epsilon \ge 0 \), a family of functions \({\mathcal {H}}= \{h: \{0,1\}^\tau \rightarrow \{0,1\}^n\} \) is called an \(\ell \)-wise independent XOR universal hash up to the bound \(\epsilon \), denoted \( \epsilon \text {-AXU}_{\ell } \), if for any \( j \in \{2,\ldots ,\ell \}\), any \( t^j \in (\{0,1\}^\tau )_{j} \) and a \(\delta ^{j-1} \in (\{0,1\}^n)^{j-1} \), we have

For \( \ell = 2 \), this is nothing but the notion of AXU hash functions, first introduced by Krawczyk [49] and later by Rogaway [50]. In [29], the author suggested a simple \( \text {AXU}_\ell \) hash function family using finite field arithmetic for small domain (\( \tau =n \)). Basically, the hash function family is defined as follows

for \( h = (h_1,\ldots ,h_{\ell -1}) \), where \( \odot \) denotes field multiplication operator with respect to some irreducible polynomial over the binary field \( {{\mathbb {F}}}_{2}^{n}\). For \( \ell =2 \), this yields the popular polyhash function. In general, this function requires \(\ell -1 \) keys and \( \ell -1 \) field multiplications to achieve \(2^{-n}\text {-AXU}_\ell \). Alternatively, secure block ciphers can also be used to construct \( (2^{n}-\ell +1)^{-1}\text {-AXU}_\ell \) hash functions over sufficiently large domains.

3.1 Description of the Cascaded LRW2 Construction

Let \( {E}\in {\textsf {BPerm}}(\kappa ,n) \) be a block cipher. Let \( {\mathcal {H}}\) be a hash function family from \( \{0,1\}^\tau \) to \( \{0,1\}^n\). We define the tweakable block cipher \( \textsf {LRW2} [{E},{\mathcal {H}}] \), based on the block cipher \( {E}\) and the hash function family \( {\mathcal {H}}\), by the following mapping: \( \forall (k,h,t,m) \in \{0,1\}^\kappa \times {\mathcal {H}}\times \{0,1\}^\tau \times \{0,1\}^n\),

For \( \ell \in {{\mathbb {N}}}\), the \( \ell \)-round cascaded LRW2 construction, denoted \( \textsf {CLRW2} [{E},{\mathcal {H}},\ell ] \), is a cascade of \(\ell \) independent LRW2 instances, i.e., \( \textsf {CLRW2} [{E},{\mathcal {H}},\ell ] \) is a tweakable block cipher, based on the block cipher \( {E}\) and the hash function family \( {\mathcal {H}}\), defined as follows: \( \forall (k^\ell ,h^\ell ,t,m) \in \{0,1\}^{\kappa \ell } \times {\mathcal {H}}^\ell \times \{0,1\}^\tau \times \{0,1\}^n\),

The two-round CLRW2 was first analyzed by Landecker et al. [26], whereas the \( \ell > 2 \) case was studied by Lampe and Seurin [28]. Since we mainly focus on the \(\ell = 2 \) case, we use the nomenclatures, CLRW2 and cascaded LRW2, interchangeably with two-round CLRW2. Figure 1 gives a pictorial description of the cascaded LRW2 construction. Throughout the rest of the paper, we use the notations from Fig. 1 in the context of CLRW2.

In [26] the CLRW2 construction was shown to be a BBB secure (up to \( 2^{2n/3} \) queries) TSPRP, provided the underlying block cipher is an SPRP, and the hash function families are AXU.

3.2 Mennink’s Proof Approach

The proof in [29] applies H-coefficient technique coupled with mirror theory. The main focus is to identify a suitable class of bad events on \( (x^q,u^q) \), where q is the number of queries, which makes mirror theory inapplicable. Crudely, the bad events correspond to cases where for some query there is no randomness left (in the sampling of \( y^q \) and \( v^q \)) in the ideal world. Given a good transcript, mirror theory is applied to bound the number of solutions of the system of equation \( \{Y_i \oplus V_i = \lambda _i : i \in [q]\} \), where \( Y_i \) and \( V_i \) are unknowns satisfying \( {x^q}\leftrightsquigarrow {Y^q} \) and \( {V^q}\leftrightsquigarrow {u^q} \), and \( \lambda ^q \) is fixed. The proof relies on three major assumptions:

Assumption 1. \( {\mathcal {H}}\) is \( \text {AXU}_4 \) hash function family.

Assumption 2. For any \( t' \in \{0,1\}^\tau \), \(\mu _{t'} = \mu (t^q,t') \le \gamma = 2^{n/4} \).

Assumption 3. \( \left( 4,\frac{3q}{2^{n}}\right) \)-restricted mirror theory theorem is correct.

Transcript Graph: A graphical view on \( x^q \) and \( u^q \) was used to characterize all bad events. Basically, each transcript is mapped to a unique bipartite graph on \( x^q,u^q \), as defined in Definition 3.2.

Definition 3.2

(Transcript Graph) A transcript graph \( {\mathcal {G}}= ({\mathcal {X}},{\mathcal {U}},{\mathcal {E}}) \) associated with \((x^q,u^q) \), denoted \( {\mathcal {G}}(x^q,u^q) \), is defined as \( {\mathcal {X}}:=\{(x_i,0) : i \in [q]\};~ {\mathcal {U}}:= \{(u_i,1) : i \in [q]\};~\text {and } {{\mathcal {E}}} := \{((x_i,0),(u_i,1)) : i \in [q]\}\). We also associate the value \( \lambda _i = h_1(t_i) \oplus h_2(t_i)\) with edge \( ((x_i,0),(u_i,1)) \in {\mathcal {E}}\).

Note that the graph may not be simple, i.e., it can contain parallel edges. For all practical purposes, we may drop the 0 and 1 for \( (x,0) \in {\mathcal {X}}\) and \( (u,1) \in {\mathcal {U}}\), as they can be easily distinguished from the context and notations. Further, for some \( i,j \in [q] \), if \( x_i = x_j \) (or \( u_i=u_j \)), then they share the same vertex \( x_i = x_j = x_{i,j} \) (or \( u_i = u_j =u_{i,j} \)). The event \( x_i=x_j \) and \( u_i=u_j \), although extremely unlikely, will lead to a parallel edge in \( {\mathcal {G}}\). Finally each edge \( (x_i,u_i) \in {\mathcal {E}}\) corresponds to a query index \( i \in [q] \), so we can equivalently view (and call) the edge \( (x_i,u_i) \) as index i. Figure 2 gives an example graph for \( {\mathcal {G}}\).

Bad Transcripts: A transcript graph \({\mathcal {G}}(x^q,u^q) \) is called bad if:

- 1.

it has a cycle of size \( = 2 \).

- 2.

it has two adjacent edges i and j such that \(\lambda _i \oplus \lambda _j = 0 \).

- 3.

it has a component with number of edges \( \ge 4 \).

All subgraphs in Fig. 2, except the first two from left, are considered bad in [29]. Conditions 1 and 2 correspond to the cases which might lead to degeneracy in the real world. Condition 3 may lead to a cycle of length \( \ge 4 \) edges. The non-fulfillment of conditions 1, 2 and 3 satisfies the cycle-free and non-degeneracy properties required in mirror theory. It also bounds \( \xi _{\max } \le 4 \). Conditions 1 and 2 contribute small and insignificant terms and can be ignored from this discussion. We focus on the major bottleneck, i.e., condition 3. The subgraphs corresponding to condition 3 are given in Fig. 3. Configurations (D), (E) and (F) are symmetric to (A), (B) and (C). So we can study (A), (B) and (C), and the other three can be similarly analyzed.

Bottleneck 1: Bound on the probability of (A), (C), (D) and (F)—This can be divided into two parts:

- (a)

Configuration (A) arises for the event

$$\begin{aligned} \exists ^{*}i,j,k,l \text { such that } x_i = x_j = x_k = x_\ell . \end{aligned}$$This event is upper bounded to \( q^4\epsilon ^3 \) using assumption 1 on hash functions. Similar argument holds for (D).

- (b)

Configuration (C) (similarly for F) arises for the event

$$\begin{aligned} \exists ^{*}i,j,k,\ell \in [q] \text { such that } x_i = x_j = x_k \wedge u_k = u_\ell . \end{aligned}$$In this case, we can apply assumption 1 (even \( \text {AXU}_3 \) would suffice) to get an upper bound of \( q^4\epsilon ^3 \).

Bottleneck 2: Bound on the probability of (B)—Configuration (B) arises for the event

This is probably the trickiest case, which requires assumption 2, i.e., restriction on tweak repetition. Specifically, consider the case \( t_i = t_k \) and \( t_j = t_\ell \). This is precisely the case exploited in Mennink’s attack on CLRW2 [29] (see supplementary material B). In this case, for a fixed \( i,j,k,\ell \) the probability is bounded by \( \epsilon ^2 \). There are at most \( q^2 \) choices for (i, j), at most \((\mu _{t_i}-1) \) choices for k and a single choice for \( \ell \) given i, j and k. Thus, the probability is bounded by \(q^2\gamma \epsilon ^2 \) (using assumption 2). Similar argument holds for (E).

Bottleneck 3: Mirror theory bound—The final hurdle is the use of mirror theory in computation of real-world interpolation probability, which requires assumption 3. Yet another issue is the nature of the mirror theory bound. A straightforward application of mirror theory bound leads to a term of the form

in the ratio of interpolation probabilities (as required for H-coefficient technique), where \( \sum _{t' \in {\widehat{t}}^q} \mu _{t'} = q \). The boxed expression (particularly, the numerator in the expression) is of main interest. In the worst case, \( \mu _{t'} =O(q) \), which gives a lower bound of the form \( 1-{q^2}/{2^n} \) for the boxed expression. But using assumption 2, we get a lower bound of \( 1-{q\gamma }/{2^n} \) as \( \mu _{t'} \le \gamma \).

3.2.1 Severity of the Assumptions in [29]

Among the three assumptions, assumptions 1 and 2 are plausible in the sense that real-life use cases exist for assumption 2 and practical instantiations are possible for assumption 1. Another point of note is the fact that \( \gamma < 2^{n/4} \) is imposed due to bottleneck 3. Otherwise, a better bound of \( \gamma < 2^{n/2} \) could have been used. While assumptions 1 and 2 are plausible to a large extent, assumption 3 is disputable. This is because no publicly verifiable proof exists for the generalized mirror theory. In fact, the proof for a special case of mirror theory also has some unproved gaps and mistakes. See Remark 1 for one such issue.

Although the proof in [29] requires the above-mentioned assumptions, the proof approach seems quite simple, and in some cases, it highlights the bottlenecks in getting tight security. In the remainder of this paper, we aim to resolve all the bottlenecks discussed here, while relaxing all the assumptions made in [29]. Specifically, bottleneck 2 is resolved using the tools from Sect. 4, and bottlenecks 1 and 3 are resolved using the tools from Sects. 4 and 5, and a careful application of the expectation method in Sect. 6.

4 Results on (Multi)Collisions in Universal Hash

Let \( {\mathcal {H}}= \{h\ |\ h: {\mathcal {T}}\rightarrow {\mathcal {B}}\} \) be a family of functions. A pair of distinct elements \( (t, t') \) from \( {\mathcal {T}}\) is said to be colliding for a function \( h \in {\mathcal {H}}\), if \( h(t) =h(t') \). A family of functions \( {\mathcal {H}}= \{h\ |\ h: {\mathcal {T}}\rightarrow {\mathcal {B}}\} \) is called an \( \epsilon \)-universal hash if for all \( t \ne t' \in {\mathcal {T}}\),

Throughout this section, we fix \( t^q = (t_1, \ldots , t_q) \in ({\mathcal {T}})_q \). For a randomly chosen hash function \({\textsf {H}} \leftarrow \!\!{\$}\,{\mathcal {H}}\), the probability of having at least one colliding pair in \( t^q \) is at most \( {q \atopwithdelims ()2} \cdot \epsilon \). This is straightforward from the union bound.

4.1 The Alternating Collisions and Events Lemmata

Suppose \( {\mathcal {H}}\) is an \( \epsilon \)-universal hash, and \({\textsf {H}}_1,{\textsf {H}}_2 \leftarrow \!\!{\$}\,{\mathcal {H}}\) are two independently drawn universal hash functions. Then, by applying independence and union bound, we have

Now we go one step further. We would like to bound the probability of the following event:

For any fixed distinct i, j, k and l, we cannot claim that the probability of the event \( {\textsf {H}}_1(t_i) ={\textsf {H}}_1(t_j) \ \wedge \ {\textsf {H}}_2(t_j) ={\textsf {H}}_2(t_k)\ \wedge \ {\textsf {H}}_1(t_k) ={\textsf {H}}_1(t_l)\) is \(\epsilon ^3\) as the first and last events are no longer independent. Now, we show how we can get an improved bound even in the dependent situation. In particular, we prove the following lemma.

Lemma 4.1

(Alternating Collisions Lemma) Suppose \(\textsf {H} _1,\textsf {H} _2 \leftarrow \!\!{\$}\,{\mathcal {H}}\) are two independently drawn \( \epsilon \) universal hash functions and \( t^q \in ({\mathcal {T}})_q \). Then,

Proof

For any \( h \in {\mathcal {H}}\), we define the following useful set:

Let us denote the size of the above set by \( I_h \). So, \( I_h \) is the number of colliding pairs for the hash functions h. We also define a set \( {\mathcal {H}}_{\le } = \{h: I_h \le \frac{1}{\sqrt{\epsilon }} \} \) which collects all hash functions having a small number of colliding pairs. We denote the complement set by \( {\mathcal {H}}_> \). Now, by using double counting of the set \( \{(h, i,j) : h(t_i) = h(t_j)\} \) we get

Basically for every h, we have exactly \( I_h \) choices of (i, j), and so, the size of the set \( \{(h, i,j) : h(t_i) = h(t_j)\} \) is exactly \( \sum _h I_h \). On the other hand, for any \( 1 \le i <j \le q \), there are at most \( \epsilon \cdot |{\mathcal {H}}| \) hash functions h, such that \( (t_i, t_j) \) is a colliding pair for h. This follows from the definition of the universal hash function. From Eq. (8) and the definition of \({\mathcal {H}}_{\le } \), we have

Let \( \texttt {E} \) denote the event that there exist distinct i, j, k and l such that \( {\textsf {H}}_1(t_i) ={\textsf {H}}_1(t_j)\ \wedge \ {\textsf {H}}_1(t_k) ={\textsf {H}}_1(t_l)\ \wedge \ {\textsf {H}}_2(t_j) ={\textsf {H}}_2(t_k) \). Now, we proceed to bound the probability of this event.

First, we justify inequality 1. Given \( {\textsf {H}}_1 = h \), the probability of the event \( \texttt {E} \) is same as the probability of the following event:

There are at most \( I_h^2 \) pairs of pairs and for each pair of pairs and the collision probability of \( {\textsf {H}}_2(t_j) = {\textsf {H}}_2(t_k) \) is at most \( \epsilon \). So probability of the above event can be at most \( \min \{1, I_h^2\cdot \epsilon \} \). Now, we justify inequality 2 using two facts. First, \( {\textsf {H}}_1 \leftarrow \!\!{\$}\,{\mathcal {H}}\), i.e., \({\Pr _{}\left[ {{\textsf {H}}_1 = h}\right] } = |{\mathcal {H}}|^{-1} \) for all \( h \in {\mathcal {H}}\). Second, for all \( h \in {\mathcal {H}}_{\le } \), \( I_h \le 1/\sqrt{\epsilon } \). Inequality 3 follows from Eq. (9). \(\square \)

Now, we generalize the above result for a more general setting. The proof of the result is similar to the previous proof, and hence, we skip it (given in supplementary material C).

Lemma 4.2

(Alternating Events Lemma) Let \( \textsf {X} ^q =(\textsf {X} _1,\ldots , \textsf {X} _q) \) be a q-tuple of random variables. Suppose for all \( i < j \in [q] \), \(\texttt {E} _{i,j} \) are events associated with \( \textsf {X} _i \) and \(\textsf {X} _j\), possibly dependent. Each event holds with probability at most \( \epsilon \). Moreover, for any distinct \( i, j, k, l \in [q] \), \(\texttt {F} _{i,j, k, l} \) are events associated with \(\textsf {X} _i \), \( \textsf {X} _j\), \(\textsf {X} _k \) and \( \textsf {X} _l \), which holds with probability at most \( \epsilon ' \). Moreover, the collection of events \((\texttt {F} _{i,j, k, l})_{i,j,k,l} \) is independent with the collection of event \((\texttt {E} _{i,j})_{i,j} \). Then,

Note that Lemma 4.1 is a direct corollary of the above lemma. (The event \( \texttt {E}_{i,j} \) denotes that \( (t_i, t_j) \) is a colliding pair of \( {\textsf {H}}_1 \) and \( \texttt {F}_{i,j,k,l} \) denotes that \( (t_j, t_k) \) is a colliding pair of \( {\textsf {H}}_2 \).)

4.2 Expected Multicollisions in Universal Hash

Suppose \( {\mathcal {H}}\) is an \( \epsilon \)-universal hash, and \({\textsf {H}} \leftarrow \!\!{\$}\,{\mathcal {H}}\). Let \( {\textsf {X}}^q ={\textsf {H}}(t^q) \). We define an equivalence relation \(\sim \) on [q] as: \( \alpha \sim \beta \) if and only if \({\textsf {X}}_\alpha = {\textsf {X}}_\beta \) (i.e., \(\sim \) is simply the multicollision relation). Let \({\mathcal {P}}_1,{\mathcal {P}}_2,\ldots ,{\mathcal {P}}_r \) denote those equivalence classes of [q] corresponding to \( \sim \), such that \( {\nu }_i =|{\mathcal {P}}_i| \ge 2 \) for all \( i \in [r] \). In the following lemma, we present a simple yet powerful result on multicollisions in universal hash functions.

Lemma 4.3

Let \( \textsf {C} \) denote the number of colliding pairs in \( \textsf {X} ^q \). Then, we have

Proof

For \( i \in [r] \), each of the \( {\nu _i \atopwithdelims ()2} \) pairs in \(({\mathcal {P}}_i)_2 \) correspond to 1 colliding pair. And each colliding pair belongs to \( ({\mathcal {P}}_i)_2 \) for some \( i \in [r] \), as equality implies that the corresponding indices are related by \(\sim \). Thus, we have

The result follows from the fact that \( {\textsf {Ex} _{}\left[ {{\textsf {C}}}\right] } \le {q \atopwithdelims ()2} \epsilon \). \(\square \)

Lemma 4.3 results in a simple corollary given below, which was independently proved in [51].

Corollary 4.1

Let \( {\nu }_{\max } = \max \{{\nu }_i:i\in [r]\} \). Then, for some \(a\ge 2 \), we have

Proof

We have

where the last inequality follows from Markov’s inequality. \(\square \)

Dutta et al. [52] proved a weakerFootnote 2 variant of Corollary 4.1, using an elegant combinatorial argumentation.

5 Mirror Theory in Tweakable Permutation Setting

As evident from bottleneck 3 of Sect 3.2, a straightforward application of mirror theory bound would lead to a sub-optimal bound. In order to circumvent this sub-optimality, Mennink [29] used a restriction on tweak repetitions (assumption 2 of Sect. 3.2). Specifically, a bound of the form \( O(q/2^{3n/4}) \) requires \( \mu (t^q,t') <2^{n/4}\) for all \( t' \in {\widehat{t}}^q \), where \( t^q \) denotes the q-tuple of tweaks used in the q queries. In order to avoid this assumption, we need a different approach.

A closer inspection of the mirror theory proof reveals that we can actually avoid the restrictions on tweak repetitions. In fact, rather surprisingly, we will see that tweak repetitions are actually helpful in the sense that mirror theory bound is good. In the remainder of this section, we develop a modified version of mirror theory, apt for applications in tweakable permutation settings.

5.1 General Setup and Notations

Isolated and Star Components: In an edge-labeled bipartite graph \( {\mathcal {G}}= ({\mathcal {Y}},{\mathcal {V}},{\mathcal {E}}) \), an edge \( (y,v,\lambda ) \) is called isolated edge if both y and v have degree 1. A component \( {\mathcal {S}}\) of \( {\mathcal {G}}\) is called star, if \(\xi ({\mathcal {S}}) \ge 3 \) and there exists a unique vertex v in \({\mathcal {S}}\) with degree \( \xi ({\mathcal {S}})-1 \). We call v the center of \({\mathcal {S}}\). Further, we call \( {\mathcal {S}}\) a \( {{\mathcal {Y}}}_{\text {-}\star }\) (res. \( {{\mathcal {V}}}_{\text {-}\star }\)) component if its center lies in \( {\mathcal {Y}}\) (res. \( {\mathcal {V}}\)).

The System of Equation: Following the notations and definitions from Sect. 2.5, consider a system of equation \( {\mathcal {L}}\)

such that each component in \( {\mathcal {G}}({\mathcal {L}}) \) is either an isolated edge or a star. Let \( c_1 \), \( c_2 \) and \( c_3 \) denote the number of components of isolated, \( {{\mathcal {Y}}}_{\text {-}\star }\) and \( {{\mathcal {V}}}_{\text {-}\star }\) types, respectively. Let \( q_1 \), \( q_2 \) and \( q_3 \) denote the number of equations of isolated, \( {{\mathcal {Y}}}_{\text {-}\star }\) and \( {{\mathcal {V}}}_{\text {-}\star }\) types, respectively. Therefore, \( c_1 = q_1 \).

Note that the equations in \( {\mathcal {L}}\) can be arranged in any arbitrary order without affecting the number of solutions. For the sake of simplicity, we fix the ordering in such a way that all isolated edges occur first, followed by the star components.

Now, our goal is to give a lower bound on the number of solutions of \( {\mathcal {L}}\), such that the \( Y'_i \) values are pairwise distinct and \(V'_i\) values are pairwise distinct. More formally, we aim to prove the following result.

Theorem 5.1

Let \( {\mathcal {L}}\) be the system of linear equations as described above with \( q < 2^{n-2} \) and \( \xi _{\max }q \le 2^{n-1} \). Then, the number of tuples \( (y_1,\ldots ,y_{q_Y},v_1,\ldots ,v_{q_V}) \) that satisfy \( {\mathcal {L}}\), denoted \( h_q \), such that \( y_i \ne y_j \) and \(v_i \ne v_j \), for all \( i \ne j \), satisfies:

where \( \eta _{j} = \xi _{j}-1 \) and \( \xi _{j} \) denotes the size (number of vertices) of the jth component, for all \(j\in [c_1+c_2+c_3] \).

We note here that the bound in Theorem 5.1 is parametrized in q and \(\xi \). This is a bit different from the traditional mirror theory bounds. Further, we note that the bounds in Theorem 5.1 becomes \( 1-O(q^4/2^{3n}) \), when the value of \( \sum _{i=1}^{c_2+c_3}\eta ^2_{c_1+i} \) is \(O(q^2/2^{n}) \). When we apply this result to CLRW2 and \(\textsf {DbHtS-p}\), we can show that the expected value of the term is indeed \(O(q^2/2^{n})\) (a good time to revisit Lemma 4.3). Corollary 5.1 is useful for random function setting.

Corollary 5.1

Let \( {\mathcal {L}}\) be the system of linear equations as described above with \( q < 2^{n-2} \) and \( \xi _{\max }q < 2^{n-1} \). Then, the number of tuples \( (y_1,\ldots ,y_{q_Y},v_1,\ldots ,v_{q_V}) \) that satisfy \({\mathcal {L}}\), denoted \( h_q \), such that \( y_i \ne y_j \) and \( v_i \ne v_j \), for all \( i \ne j \), satisfies:

where \( \eta _{j} = \xi _{j}-1 \) and \( \xi _{j} \) denotes the size (number of vertices) of the jth component, for all \(j\in [c_1+c_2+c_3] \).

Note the difference between the expressions given in Theorem 5.1 and Corollary 5.1. Looking back at the discussion given toward the end of Sect. 2.5, one can see the motivation behind the denominator given in Corollary 5.1. Since we aim to apply mirror theory in tweakable permutation setting, the denominator is changed accordingly in Theorem 5.1.

The proof of Theorem 5.1 uses an inductive approach similar to the one in [37]. We postpone the complete proof to supplementary material D.

6 Tight Security Bound of CLRW2

Based on the tools, we developed in Sects. 4 and 5, and we now show that the CLRW2 construction achieves security up to the query complexity approximately \( 2^{3n/4} \). Given Mennink’s attack [29] (see supplementary material B) in roughly these many queries, we can conclude that the bound is tight.

Theorem 6.1

Let \( \kappa ,\tau ,n \in {{\mathbb {N}}}\) and \( \epsilon > 0 \). Let \( {E}\in {\textsf {BPerm} }(\kappa ,n) \), and let \( {\mathcal {H}}\) be an \( (\{0,1\}^\tau ,\{0,1\}^n,\epsilon ) \)-AXU hash function family. Consider

For \( q \le 2^{n-2} \) and \( t > 0 \), the TSPRP security of \(\text {\textsf {CLRW2}}[{E},{\mathcal {H}}] \) against \( {{\mathbb {A}}}(q,t) \) is given by

where \( t' = c(t + qt_{{\mathcal {H}}}) \), \( t_{{\mathcal {H}}} \) being the time complexity for computing the hash function \( {\mathcal {H}}\), \( c > 0 \) is a constant depending upon the computation model, and

On putting \( \epsilon = 1/2^n \), in Eq. (10) and further simplifying, we get

Corollary 6.1

For \( \epsilon = \frac{1}{2^n} \), we have

Specifically, the advantage bound is meaningful up to \( q \approx 2^{\frac{3n}{4}-1.43} \) queries.

The proof of Theorem 6.1 employs the expectation method coupled with an adaptation of \( (2^n/2q,q^4/2^{3n}) \)-restricted mirror theory [37] in tweakable permutation settings. While our use of mirror theory is somewhat inspired by its recent use in [29], in contrast to [29], we apply a modified version of mirror theory and that too for a restricted subset of queries. The complete proof of Theorem 6.1 is given in the remainder of this section.

6.1 Initial Step

Consider the instantiation \( \textsf {CLRW2} [{E}_{{\textsf {K}}_1},{E}_{{\textsf {K}}_2},{\textsf {H}}_1,{\textsf {H}}_2] \) of \( \textsf {CLRW2} [{E},{\mathcal {H}}] \), where \( {\textsf {K}}_1\), \({\textsf {K}}_2\), \( {\textsf {H}}_1\), \({\textsf {H}}_2 \) are independent and \( ({\textsf {K}}_1, {\textsf {K}}_2) \leftarrow \!\!{\$}\,(\{0,1\}^{\kappa })^2 \), \( ({\textsf {H}}_1,{\textsf {H}}_2) \leftarrow \!\!{\$}\,{\mathcal {H}}^2 \). As the first step, we switch to the information-theoretic setting, i.e., we replace \( ({E}_{{\textsf {K}}_1},{E}_{{\textsf {K}}_2}) \) with \( (\Pi _1,\Pi _2) \leftarrow \!\!{\$}\,{\textsf {Perm}}(n)^2 \). For the sake of simplicity, we write the modified instantiation \( \textsf {CLRW2} [\Pi _1,\Pi _2,{\textsf {H}}_1,{\textsf {H}}_2] \) as \( \textsf {CLRW2} \), i.e., without any parametrization. This switching is done via a standard hybrid argument that incurs a cost of \( 2\mathbf {Adv}^{{\textsf {sprp}}}_{{E}}(q,t') \) where \( t' = O(t + qt_{{\mathcal {H}}}) \). Thus, we have

So, in Eq. (12), we have to give an upper bound on \( \mathbf {Adv}^{{\textsf {tsprp}}}_{\textsf {CLRW2}}(q) \). At this point, we are in the information-theoretic setting. In other words, we consider computationally unbounded distinguisher \( {{\mathscr {A}}} \). Without loss of generality, we assume that \( {{\mathscr {A}}} \) is deterministic and non-trivial. Under this setup, we are now ready to apply the expectation method.

6.2 Oracle Description

The two oracles of interest are: \( {\mathcal {O}}_1 \), the real oracle, that implements \( \textsf {CLRW2} \); and \( {\mathcal {O}}_0 \), the ideal oracle, that implements \( {{\widetilde{\Pi }}}\leftarrow \!\!{\$}\,{\textsf {BPerm}}(\tau ,n) \). We consider an extended version of these oracles, the one in which they release some additional information. We use notations analogously as given in Fig. 1 to describe the transcript generated by \( {{\mathscr {A}}} \)’s interaction with its oracle.

6.2.1 Description of the Real Oracle, \( {\mathcal {O}}_1 \):

The real oracle \( {\mathcal {O}}_1 \) faithfully runs \( \textsf {CLRW2} \). We denote the transcript random variable generated by \( {{\mathscr {A}}} \)’s interaction with \( {\mathcal {O}}_1 \) by the usual notation  , which is a 10-ary q-tuple

, which is a 10-ary q-tuple

defined as follows: The initial transcript consists of \( ({\textsf {T}}^q,{\textsf {M}}^q,{\textsf {C}}^q) \), where for all \( i \in [q] \):

\( {\textsf {T}}_i \): ith tweak value, \( {\textsf {M}}_i \): ith plaintext value, \( {\textsf {C}}_i \): ith ciphertext value

where \( {\textsf {C}}^q = \textsf {CLRW2} ({\textsf {T}}^q,{\textsf {M}}^q) \). At the end of the query–response phase, \( {\mathcal {O}}_1 \) releases some additional information \( ({\textsf {X}}^q,{\textsf {Y}}^q,{\textsf {V}}^q,{\textsf {U}}^q,{\lambda }^q,{\textsf {H}}_1,{\textsf {H}}_2) \), where for all \( i \in [q] \):

\( ({\textsf {X}}_i,{\textsf {Y}}_i) \): ith input–output pair for \( \Pi _1 \),

\( ({\textsf {V}}_i,{\textsf {U}}_i) \): ith input–output pair for \( \Pi _2 \),

\( {\lambda }_i \): ith internal masking, \( {\textsf {H}}_1,{\textsf {H}}_2 \): the hash keys.

Note that \( {\textsf {X}}^q \), \( {\textsf {U}}^q \) and \( {\lambda }^q \) are completely determined by the hash keys \( {\textsf {H}}_1,{\textsf {H}}_2 \) and the initial transcript \( ({\textsf {T}}^q,{\textsf {M}}^q,{\textsf {C}}^q) \). But, we include them anyhow to ease the analysis.

6.2.2 Description of the Ideal Oracle, \( {\mathcal {O}}_0 \):

The ideal oracle \( {\mathcal {O}}_0 \) has access to \( {{\widetilde{\Pi }}}\). Since \( {\mathcal {O}}_1 \) releases some additional information, \( {\mathcal {O}}_0 \) must generate these values as well. The ideal transcript random variable  is also a 10-ary q-tuple

is also a 10-ary q-tuple

defined below. Note that we use the same notation to represent the variables of transcripts in the both worlds. However, the probability distributions of these would be determined from their definitions. The initial transcript consists of \( ({\textsf {T}}^q,{\textsf {M}}^q,{\textsf {C}}^q) \), where for all \( i \in [q] \):

\( {\textsf {T}}_i \): ith tweak value, \( {\textsf {M}}_i \): ith plaintext value, \( {\textsf {C}}_i \): ith ciphertext value,

where \( {\textsf {C}}^q = {{\widetilde{\Pi }}}({\textsf {T}}^q,{\textsf {M}}^q) \). Once the query–response phase is over \( {\mathcal {O}}_0 \) first samples \( ({\textsf {H}}_1,{\textsf {H}}_2) \leftarrow \!\!{\$}\,{\mathcal {H}}^2 \) and computes \( {\textsf {X}}^q,{\textsf {U}}^q,{\lambda }^q \), where for all \( i \in [q] \):

\( {\textsf {X}}_i := {\textsf {H}}_1({\textsf {T}}_i) \oplus {\textsf {M}}_i \), \( {\textsf {U}}_i := {\textsf {H}}_2({\textsf {T}}_i) \oplus {\textsf {C}}_i \), \( {\lambda }_i := {\textsf {H}}_1({\textsf {T}}_i) \oplus {\textsf {H}}_2({\textsf {T}}_i) \).

This means that \( {\textsf {X}}^q \), \( {\textsf {U}}^q \) and \( {\lambda }^q \) are defined honestly. Given the partial transcript  we wish to characterize the hash key \( {\textsf {H}} := ({\textsf {H}}_1,{\textsf {H}}_2) \) as good or bad. We write \( {\mathcal {H}}_{{\textsf {bad}}} \) for the set of bad hash keys, and \( {\mathcal {H}}_{\textsf {good}}= {\mathcal {H}}^2{\setminus }{\mathcal {H}}_{{\textsf {bad}}} \). We say that a hash key \( {\textsf {H}} \in {\mathcal {H}}_{{\textsf {bad}}} \) (or \( {\textsf {H}} \) is bad) if and only if one of the following predicates is true:

we wish to characterize the hash key \( {\textsf {H}} := ({\textsf {H}}_1,{\textsf {H}}_2) \) as good or bad. We write \( {\mathcal {H}}_{{\textsf {bad}}} \) for the set of bad hash keys, and \( {\mathcal {H}}_{\textsf {good}}= {\mathcal {H}}^2{\setminus }{\mathcal {H}}_{{\textsf {bad}}} \). We say that a hash key \( {\textsf {H}} \in {\mathcal {H}}_{{\textsf {bad}}} \) (or \( {\textsf {H}} \) is bad) if and only if one of the following predicates is true:

- 1.

\({\texttt {H}}_1\):\( \exists ^{*}i,j \in [q] \) such that \( {\textsf {X}}_i = {\textsf {X}}_j \wedge {\textsf {U}}_i = {\textsf {U}}_j \).

- 2.

\({\texttt {H}}_2\):\( \exists ^{*}i,j \in [q] \) such that \( {\textsf {X}}_i = {\textsf {X}}_j \wedge {\lambda }_i = {\lambda }_j \).

- 3.

\(\texttt {H}_3\):\( \exists ^{*}i,j \in [q] \) such that \( {\textsf {U}}_i = {\textsf {U}}_j \wedge {\lambda }_i = {\lambda }_j \).

- 4.

\(\texttt {H}_4\):\( \exists ^{*}i,j,k,\ell \in [q] \) such that \( {\textsf {X}}_i = {\textsf {X}}_j \wedge {\textsf {U}}_j = {\textsf {U}}_k \wedge {\textsf {X}}_k = {\textsf {X}}_\ell \).

- 5.

\(\texttt {H}_5\):\( \exists ^{*}i,j,k,\ell \in [q] \) such that \( {\textsf {U}}_i = {\textsf {U}}_j \wedge {\textsf {X}}_j = {\textsf {X}}_k \wedge {\textsf {U}}_k = {\textsf {U}}_\ell \).

- 6.

\(\texttt {H}_6\):\( \exists k \ge 2^n/2q, \exists ^{*}i_1,i_2,\ldots ,i_{k} \in [q] \) such that \( {\textsf {X}}_{i_1} = \cdots = {\textsf {X}}_{i_{k}} \).

- 7.

\(\texttt {H}_7\):\( \exists k \ge 2^n/2q, \exists ^{*}i_1,i_2,\ldots ,i_{k} \in [q] \) such that \( {\textsf {U}}_{i_1} = \cdots = {\textsf {U}}_{i_{k}} \).

Case 1.\( {\textsf {H}} \)is bad: If the hash key \( {\textsf {H}} \) is bad, then \( {\textsf {Y}}^q \) and \( {\textsf {V}}^q \) values are sampled degenerately as \( {\textsf {Y}}_i = {\textsf {V}}_i = 0 \) for all \( i \in [q] \). It means that we sample without maintaining any specific conditions, which may lead to inconsistencies.

Case 2.\( {\textsf {H}} \)is good: To characterize the transcript corresponding to a good hash key, it will be useful to study a graph, similar to the one in Sect. 3, associated with \( ({\textsf {X}}^q,{\textsf {U}}^q) \). Specifically, we consider the random transcript graph \( {\mathcal {G}}({\textsf {X}}^q,{\textsf {U}}^q) \) arising due to \( {\textsf {H}} \in {\mathcal {H}}_{\textsf {good}}\). Lemma 6.1 and Fig. 4 characterize the different types of possible components in \( {\mathcal {G}}({\textsf {X}}^q,{\textsf {U}}^q) \). Note that type 2, type 3, type 4 and type 5 graphs are the same as configurations (A), (D), (C) and (F) of Fig. 3, for \( \ge 4 \) edges. These graphs are considered as bad in [29], whereas we allow such components.

Enumerating all possible types of components of a transcript graph corresponding to a good hash key: type 1 is the only possible component of size \( = 1 \) edge; type 2 and type 3 are \( {\mathcal {X}}_{\text {-}\star } \) and \( {\mathcal {U}}_{\text {-}\star } \) components, respectively; type 4 and type 5 are the only possible components that are not isolated or star (can have degree 2 vertices in both \( {\mathcal {X}}\) and \( {\mathcal {U}}\))

Lemma 6.1

The transcript graph \( {\mathcal {G}}\) corresponding to \( (\textsf {X} ^q,\textsf {U} ^q) \) generated by a good hash key \( \textsf {H} \) has the following properties:

- 1.

\( {\mathcal {G}}\) is simple, acyclic and has no isolated vertices.

- 2.

\( {\mathcal {G}}\) has no two adjacent edges i and j such that \( {\lambda }_i \oplus {\lambda }_j = 0 \).

- 3.

\( {\mathcal {G}}\) has no component of size \( > 2^n/2q \) edges.

- 4.

\( {\mathcal {G}}\) has no component such that it has 2 distinct degree 2 vertices in \( {\mathcal {X}}\) or \( {\mathcal {U}}\).

In fact, the all possible types of components of \( {\mathcal {G}}\) are enumerated in Fig. 4.

The proof of Lemma 6.1 is elementary and given in supplementary material E for the sake of completeness.

In what follows, we describe the sampling of \( {\textsf {Y}}^q \) and \( {\textsf {V}}^q \) when \( {\textsf {H}} \in {\mathcal {H}}_{\textsf {good}}\). We collect the indices \( i \in [q] \) corresponding to the edges in all type 1, type 2, type 3, type 4 and type 5 components, in the index sets \( {\mathcal {I}}_{1} \), \( {\mathcal {I}}_{2} \), \( {\mathcal {I}}_{3} \), \( {\mathcal {I}}_{4} \) and \( {\mathcal {I}}_{5} \), respectively. Clearly, the five sets are disjoint, and \( [q] = {\mathcal {I}}_{1} \sqcup {\mathcal {I}}_{2} \sqcup {\mathcal {I}}_{3} \sqcup {\mathcal {I}}_{4} \sqcup {\mathcal {I}}_{5} \). Let \( {\mathcal {I}}= {\mathcal {I}}_{1} \sqcup {\mathcal {I}}_{2} \sqcup {\mathcal {I}}_{3} \). Consider the system of equation

where \( Y_i = Y_j \) (res. \( V_i = V_j \)) if and only if \( {\textsf {X}}_i ={\textsf {X}}_j \) (res. \( {\textsf {U}}_i ={\textsf {U}}_j \)) for all \( i,j \in [q] \). The solution set of \( {\mathcal {L}}\) is precisely the set

Given these definitions, the ideal oracle \( {\mathcal {O}}_0 \) samples \( ({\textsf {Y}}^q,{\textsf {V}}^q) \) as follows:

\( ({\textsf {Y}}^{{\mathcal {I}}},{\textsf {V}}^{{\mathcal {I}}}) \leftarrow \!\!{\$}\,{\mathcal {S}}\), i.e., \( {\mathcal {O}}_0 \) uniformly samples one valid assignment from the set of all valid assignments.

Let \( {\mathcal {G}}{\setminus } {\mathcal {I}}\) denote the subgraph of \( {\mathcal {G}}\) after the removal of edges and vertices corresponding to \( i \in {\mathcal {I}}\). For each component \( {\mathcal {C}}\) of \( {\mathcal {G}}{\setminus } {\mathcal {I}}\):

Suppose \( ({\textsf {X}}_i,{\textsf {U}}_i) \in {\mathcal {C}}\) corresponds to the edge in \( {\mathcal {C}}\), where both \( {\textsf {X}}_i \) and \( {\textsf {U}}_i \) have degree \( \ge 2 \). Then, \( {\textsf {Y}}_i \leftarrow \!\!{\$}\,\{0,1\}^n\) and \( {\textsf {V}}_i = {\textsf {Y}}_i \oplus {\lambda }_i \).

For each edge \( ({\textsf {X}}_{i'},{\textsf {U}}_{i'}) \ne ({\textsf {X}}_i,{\textsf {U}}_i) \in {\mathcal {C}}\), either \( {\textsf {X}}_{i'} = {\textsf {X}}_i \) or \( {\textsf {U}}_{i'} = {\textsf {U}}_i \). Suppose, \( {\textsf {X}}_{i'} = {\textsf {X}}_i \). Then, \( {\textsf {Y}}_{i'} = {\textsf {Y}}_i \) and \( {\textsf {V}}_{i'} = {\textsf {Y}}_{i'} \oplus {\lambda }_{i'} \). Now, suppose \( {\textsf {U}}_{i'} = {\textsf {U}}_i \). Then, \( {\textsf {V}}_{i'} = {\textsf {V}}_i \) and \( {\textsf {Y}}_{i'} = {\textsf {V}}_{i'} \oplus {\lambda }_{i'} \).

At this point,  is completely defined. In this way, we maintain both the consistency of equations of the form \( {\textsf {Y}}_i \oplus {\textsf {V}}_i = {\lambda }_i \) (as in the case of real world) and the permutation consistency within each component, when \( {\textsf {H}} \in {\mathcal {H}}_{\textsf {good}}\). However, there might be collisions among \( {\textsf {Y}} \) or \( {\textsf {V}} \) values from different components.

is completely defined. In this way, we maintain both the consistency of equations of the form \( {\textsf {Y}}_i \oplus {\textsf {V}}_i = {\lambda }_i \) (as in the case of real world) and the permutation consistency within each component, when \( {\textsf {H}} \in {\mathcal {H}}_{\textsf {good}}\). However, there might be collisions among \( {\textsf {Y}} \) or \( {\textsf {V}} \) values from different components.

6.3 Definition and Analysis of Bad Transcripts

Given the description of the transcript random variable corresponding to the ideal oracle, we can define the set of transcripts \( \varOmega \) as the set of all tuples \( \omega = (t^q,m^q,c^q,x^q,y^q,v^q,u^q,\lambda ^q,h_1,h_2) \), where \( t^q \in (\{0,1\}^\tau )^q \); \( m^q,c^q,y^q,v^q \in (\{0,1\}^n)^q \); \( (h_1,h_2) \in {\mathcal {H}}^2 \); \( x^q = h_1(t^q) \oplus m^q \); \( u^q = h_2(t^q) \oplus c^q \); \( \lambda ^q = h_1(t^q) \oplus h_2(t^q) \); and \( ({t^q}, {m^q})\leftrightsquigarrow ({t^q}, {c^q}) \).

Our bad transcript definition is inspired by two requirements:

- 1.

Eliminate all \( x^q \), \( u^q \) and \( \lambda ^q \) tuples such that both \( y^q \) and \( v^q \) are trivially restricted by way of linear dependence. For example, consider the condition \( \texttt {H}_2 \). This leads to \( y_i = y_j \), which would imply \( v_i = y_i \oplus \lambda _i = y_j \oplus \lambda _j = v_j \). Assuming \( i > j \), \( v_i \) is trivially restricted (\( = v_j \)) by way of linear dependence. This may lead to

as \( u_i \) may not be equal to \( u_j \).

as \( u_i \) may not be equal to \( u_j \). - 2.

Eliminate all \( x^q \), \( u^q \), \( y^q \), \( v^q \) tuples such that

or

or  .

.

Among the two, requirement 2 is trivial as \( {x^q}\leftrightsquigarrow {y^q} \) and \( {u^q}\leftrightsquigarrow {v^q} \) are always true for real-world transcript. Requirement 1 is more of a technical one that helps in the ideal world sampling of \( y^q \) and \( v^q \).

Bad Transcript Definition: We first define certain transcripts as bad depending upon the characterization of hash keys. Inspired by the ideal world description, we say that a hash key \( (h_1,h_2) \in {\mathcal {H}}_{{\textsf {bad}}} \) (or \( (h_1,h_2) \) is bad) if and only if the following predicate is true:

We say that \( \omega \) is hash-induced bad transcript, if \( (h_1,h_2) \in {\mathcal {H}}_{\textsf {bad}} \). We write this event as \( \texttt {BAD-HASH}\), and by a slight abuse of notations,Footnote 3 we have

This takes care of the first requirement. For the second one, we have to enumerate all the conditions which might lead to  or

or  . Since we sample degenerately when the hash key is bad, the transcript is trivially inconsistent in this case. For good hash keys, if \( x_i=x_j \) (or \( u_i=u_j \)) then we always have \( y_i=y_j \) (res. \( v_i=v_j \)); hence, the inconsistency would not arise. So, given that the hash key is good, we say that \( \omega \) is sampling-induced bad transcript, if one of the following conditions is true:

. Since we sample degenerately when the hash key is bad, the transcript is trivially inconsistent in this case. For good hash keys, if \( x_i=x_j \) (or \( u_i=u_j \)) then we always have \( y_i=y_j \) (res. \( v_i=v_j \)); hence, the inconsistency would not arise. So, given that the hash key is good, we say that \( \omega \) is sampling-induced bad transcript, if one of the following conditions is true:

For some \( \alpha \in [5] \) and \( \beta \in \{\alpha ,\ldots ,5\} \), we have

\(\texttt {Ycoll}_{{\alpha }{\beta }}:\exists i \in {\mathcal {I}}_{\alpha }, j \in {\mathcal {I}}_{\beta } \), such that \( x_i \ne x_j \wedge y_i = y_j \), and

\(\texttt {Vcoll}_{{\alpha }{\beta }}:\exists i \in {\mathcal {I}}_{\alpha }, j \in {\mathcal {I}}_{\beta } \), such that \( u_i \ne u_j \wedge v_i = v_j \),

where \( {\mathcal {I}}_i \) is defined as before in Sect. 6.2. By varying \( \alpha \) and \( \beta \) over all possible values, we get all 30 conditions which might lead to  or

or  . Here, we remark that some of these 30 conditions are never satisfied due to the sampling mechanism prescribed in Sect. 6.2. These are \( \texttt {Ycoll}_{{1}{1}} \), \( \texttt {Ycoll}_{{1}{2}} \), \( \texttt {Ycoll}_{{1}{3}} \), \( \texttt {Ycoll}_{{2}{2}} \), \( \texttt {Ycoll}_{{2}{3}} \), \( \texttt {Ycoll}_{{3}{3}} \), \( \texttt {Vcoll}_{{1}{1}} \), \( \texttt {Vcoll}_{{1}{2}} \), \( \texttt {Vcoll}_{{1}{3}} \), \( \texttt {Vcoll}_{{2}{2}} \), \( \texttt {Vcoll}_{{2}{3}} \) and \( \texttt {Vcoll}_{{3}{3}} \). We listed them here only for the sake of completeness. We write the combined event that one of the 30 conditions hold as \( \texttt {BAD-SAMP}\). Again by an abuse of notations, we have

. Here, we remark that some of these 30 conditions are never satisfied due to the sampling mechanism prescribed in Sect. 6.2. These are \( \texttt {Ycoll}_{{1}{1}} \), \( \texttt {Ycoll}_{{1}{2}} \), \( \texttt {Ycoll}_{{1}{3}} \), \( \texttt {Ycoll}_{{2}{2}} \), \( \texttt {Ycoll}_{{2}{3}} \), \( \texttt {Ycoll}_{{3}{3}} \), \( \texttt {Vcoll}_{{1}{1}} \), \( \texttt {Vcoll}_{{1}{2}} \), \( \texttt {Vcoll}_{{1}{3}} \), \( \texttt {Vcoll}_{{2}{2}} \), \( \texttt {Vcoll}_{{2}{3}} \) and \( \texttt {Vcoll}_{{3}{3}} \). We listed them here only for the sake of completeness. We write the combined event that one of the 30 conditions hold as \( \texttt {BAD-SAMP}\). Again by an abuse of notations, we have

Finally, a transcript \( \omega \) is called bad, i.e., \( \omega \in \varOmega _{{\textsf {bad}}} \), if it is either a hash- or a sampling-induced bad transcript. All other transcripts are called good. It is easy to see that all good transcripts are attainable (as required in the H-coefficient technique or the expectation method).

Bad Transcript Analysis: We analyze the probability of realizing a bad transcript in the ideal world. By definition, this is possible if and only if one of \( \texttt {BAD-HASH}\) or \( \texttt {BAD-SAMP}\) occurs. So, we have

Lemma 6.2 upper bounds \( \epsilon _{\textsf {hash} }\) to \( 2q^2\epsilon ^2 + 2q^2\epsilon ^{1.5} + 16q^4\epsilon 2^{-2n} \) and Lemma 6.3 upper bounds \( \epsilon _{{\textsf {samp}}}\) to \( 9q^4\epsilon ^22^{-n} \). Substituting these values in Eq. (15), we get

Lemma 6.2

\( \displaystyle {\epsilon _{\textsf {hash} }} \le 2q^2\epsilon ^2 + 2q^2\epsilon ^{1.5} + \frac{16q^4\epsilon }{2^{2n}} \).

Proof

Using Eqs. (13) and (15), we have

\( {\texttt {H}}_1\) is true if for some distinct i, j both \( {\textsf {X}}_i = {\textsf {X}}_j \) and \( {\textsf {U}}_i = {\textsf {U}}_j \). Now \( {\textsf {T}}_i = {\textsf {T}}_j \implies {\textsf {M}}_i \ne {\textsf {M}}_j \). Hence, \( {\textsf {X}}_i \ne {\textsf {X}}_j \) and \( {\texttt {H}}_1\) is not true. So suppose \( {\textsf {T}}_i \ne {\textsf {T}}_j \). Then for a fixed i, j we get an upper bound of \( \epsilon ^2 \) as \( {\mathcal {H}}\) is \( \epsilon \)-AXU, and we have at most \( {q \atopwithdelims ()2} \) pairs of i, j. Thus, \( {\Pr _{}\left[ {{\texttt {H}}_1}\right] } \le {q \atopwithdelims ()2}\epsilon ^2 \). Following a similar line of argument, one can bound \( {\Pr _{}\left[ {{\texttt {H}}_2}\right] } \le {q \atopwithdelims ()2}\epsilon ^2 \) and \( {\Pr _{}\left[ {\texttt {H}_3}\right] } \le {q \atopwithdelims ()2}\epsilon ^2 \).

In the remaining, we bound the probability of \( \texttt {H}_4\) and \( \texttt {H}_6\), while the probability of \( \texttt {H}_5\) and \( \texttt {H}_7\) can be bounded analogously. For any function \( f: \{0,1\}^\tau \in \{0,1\}^n\), let \( f': \{0,1\}^\tau \times \{0,1\}^n\rightarrow \{0,1\}^n\) be defined as \( f'(t,m) = f(t) \oplus m \). So \( {\textsf {X}}_i = {\textsf {H}}'_1({\textsf {T}}_i,{\textsf {M}}_i) \), and \( {\textsf {U}}_i = {\textsf {H}}'_2({\textsf {T}}_i,{\textsf {C}}_i) \), for all \( i \in [q] \). It is easy to see that \( {\textsf {H}}'_b \) is \( \epsilon \)-universal if \( {\textsf {H}}_b \) is \( \epsilon \)-AXU for \( b \in [2] \). Using the renewed description, \( \texttt {H}_4\) is true if for some distinct \( i,j,k,\ell \),

Since \( (t_i,m_i) \ne (t_j,m_j) \) and \( (t_i,c_i) \ne (t_j,c_j) \) for distinct i and j, we can apply the alternating collisions lemma of Lemma 4.1 to get \( {\Pr _{}\left[ {\texttt {H}_4}\right] } \le q^2\epsilon ^{1.5} \).

For \( \texttt {H}_6\), we have

where \( k \ge 2^n/2q \). Since \( (t_{i_{j}},m_{i_{j}}) \ne (t_{i_l},m_{i_l}) \) for all \( j \ne l \), we can apply Corollary 4.1 with \( a = 2^n/2q \) to get \( {\Pr _{}\left[ {\texttt {H}_6}\right] } \le \frac{8q^4\epsilon }{2^{2n}} \). \(\square \)

Lemma 6.3

\( \displaystyle \epsilon _{{\textsf {samp}}}\le \frac{9q^4\epsilon ^2}{2^n} \).

Proof

Using Eqs. (14) and (15), we have

We bound the probabilities of the events on the right-hand side in groups as given below:

\({{1.\hbox { Bounding } \sum _{\alpha \in [3],\beta \in \{\alpha ,\ldots ,3\}}{\Pr _{}\left[ {\texttt {Ycoll}_{{\alpha }{\beta }}}\right] }+{\Pr _{}\left[ {\texttt {Vcoll}_{{\alpha }{\beta }}}\right] } }}\): Recall that the sampling of \( {\textsf {Y}} \) and \( {\textsf {V}} \) values is always done consistently for indices belonging to \( {\mathcal {I}}= {\mathcal {I}}_1 \sqcup {\mathcal {I}}_2 \sqcup {\mathcal {I}}_3 \). Hence,

\({{2.\hbox { Bounding } \sum _{\alpha \in [3],\beta \in \{4,5\}} {\Pr _{}\left[ {\texttt {Ycoll}_{{\alpha }{\beta }}}\right] }+{\Pr _{}\left[ {\texttt {Vcoll}_{{\alpha }{\beta }}}\right] } }}\): Let us consider the event \( \texttt {Ycoll}_{{1}{4}} \), which translates to existing indices \( i \in {\mathcal {I}}_{1} \) and \( j \in {\mathcal {I}}_{4} \) such that \( {\textsf {X}}_i \ne {\textsf {X}}_j \wedge {\textsf {Y}}_i = {\textsf {Y}}_j \). Since \( j \in {\mathcal {I}}_{4} \), there must exist \( k,\ell \in {\mathcal {I}}_{4}{\setminus }\{j\} \), such that one of the following happens

We analyze the first case, while the other two cases can be similarly bounded. To bound the probability of \( \texttt {Ycoll}_{{1}{4}} \), we can thus look at the joint event

Note that the event \( {\textsf {Y}}_i = {\textsf {Y}}_j \) is independent of \( {\textsf {X}}_j = {\textsf {X}}_k \wedge {\textsf {U}}_k = {\textsf {U}}_\ell \), as both \( {\textsf {Y}}_i \) and \( {\textsf {Y}}_j \) are sampled independent of the hash key. Thus, we get

where the last inequality follows from the uniform randomness of \( {\textsf {Y}}_j \) and the AXU property of \( {\textsf {H}}_1 \) and \( {\textsf {H}}_2 \). The probability of the other two cases is similarly bounded to \( q{q \atopwithdelims ()3}\frac{\epsilon ^2}{2^n} \), whence we get

We can bound the probabilities of \( \texttt {Ycoll}_{{2}{4}} \), \( \texttt {Ycoll}_{{3}{4}} \), \( \texttt {Ycoll}_{{\alpha }{5}} \), \( \texttt {Vcoll}_{{\alpha }{4}} \) and \( \texttt {Vcoll}_{{\alpha }{5}} \), for \( \alpha \in [3] \), in a similar manner as in the case of \( \texttt {Ycoll}_{{1}{4}} \). So, we skip the argumentation for these cases and summarize the probability for this group as

\({{3.\hbox { Bounding } \sum _{\alpha \in \{4,5\},\beta \in \{\alpha ,5\}}{\Pr _{}\left[ {\texttt {Ycoll}_{{\alpha }{\beta }}}\right] }+{\Pr _{}\left[ {\texttt {Vcoll}_{{\alpha }{\beta }}}\right] } }}\): Consider the event \( \texttt {Ycoll}_{{4}{4}} \), which translates to there exists distinct indices \( i,j \in {\mathcal {I}}_4 \) such that \( {\textsf {X}}_i \ne {\textsf {X}}_j \wedge {\textsf {Y}}_i = {\textsf {Y}}_j \). Here, as \( i,j \in {\mathcal {I}}_4 \), there must exist \( k,\ell \in {\mathcal {I}}_4{\setminus }\{j\} \) such that one of the following happens

The analysis of these cases is similar to 2 above. So, we skip it and provide the final bound

The probabilities of all the remaining events in this group can be bounded in a similar fashion.

The result follows by combining Eqs. (17–19), followed by some algebraic simplifications. \(\square \)

6.4 Good Transcript Analysis

From Sect. 6.2, we know the types of components present in the transcript graph corresponding to a good transcript \( \omega \) are exactly as in Fig. 4. Let \( \omega = (t^q,m^q,c^q,x^q,y^q,v^q, u^q,\lambda ^q,h_1,h_2) \) be the good transcript at hand. From the bad transcript description of Sect. 6.3, we know that for a good transcript \( ({t^q}, {m^q})\leftrightsquigarrow ({t^q}, {c^q}) \), \( {x^q}\leftrightsquigarrow {y^q} \), \( {v^q}\leftrightsquigarrow {u^q} \) and \( y^q \oplus v^q = \lambda ^q \).

We add some new parameters with respect to \( \omega \) to aid our analysis of good transcripts. For \( i \in [{5}] \), let \( c_i(\omega ) \) and \( q_i(\omega ) \) denote the number of components and number of indices (corresponding to the edges), respectively, of type i in \( \omega \). Note that \( q_1(\omega ) = c_1(\omega ) \), \( q_i(\omega ) \ge 2c_i(\omega ) \) for \( i \in \{2,3\} \), and \( q_i(\omega ) \ge 3c_i(\omega ) \) for \( i \in \{4,5\} \). Obviously, for a good transcript \( q = \sum _{i=1}^{5} q_i(\omega ) \). For all these parameters, we will drop the \( \omega \) parametrization whenever it is understood from the context.

Interpolation probability for the real oracle: In the real oracle, \( ({\textsf {H}}_1,{\textsf {H}}_2) \leftarrow \!\!{\$}\,{\mathcal {H}}^2 \), \( \Pi _1 \) is called exactly \( q_1+c_2+q_3+2c_4+q_5-c_5 \) times and \( \Pi _2 \) is called exactly \( q_1+q_2+c_3+q_4-c_4+2c_5 \) times. Thus, we have

Interpolation probability for the ideal oracle: In the ideal oracle, the sampling is done in parts:

- I.:

\({{\widetilde{\Pi }}}\)sampling: Let \( (t'_1,t'_2,\cdots ,t'_r) \) denote the tuple of distinct tweaks in \( t^q \), and for all \( i \in [r] \), let \( a_i = \mu (t^q,t'_i) \), i.e., \( r \le q \) and \( \sum _{i=1}^{r} a_i = q \). Then, we have

$$\begin{aligned} {\Pr _{}\left[ {{{\widetilde{\Pi }}}(t^q,m^q)=c^q}\right] } \le \frac{1}{\prod _{i = 1}^{r}(2^n)_{a_i}}. \end{aligned}$$- II.:

Hash key sampling: The hash keys are sampled uniformly from \( {\mathcal {H}}^2 \), i.e., \( {\Pr _{}\left[ {({\textsf {H}}_1,{\textsf {H}}_2)=(h_1,h_2)}\right] } = \frac{1}{|{\mathcal {H}}|^2} \).

- III.:

Internal variables sampling: The internal variables \( {\textsf {Y}}^q \) and \( {\textsf {V}}^q \) are sampled in two stages.

- (A).:

type 1, type 2 and type 3 sampling: Recall the sets \( {\mathcal {I}}_{1} \), \( {\mathcal {I}}_{2} \) and \({\mathcal {I}}_{3} \), from Sect. 6.3. Consider the system of equation

$$\begin{aligned} {\mathcal {L}}= \{{\textsf {Y}}_i \oplus {\textsf {V}}_i = \lambda _i~:~i \in {\mathcal {I}}\}. \end{aligned}$$Let \( (\lambda '_1,\lambda '_2,\cdots ,\lambda '_s) \) denote the tuple of distinct elements in \( \lambda ^{\mathcal {I}}\), and for all \( i \in [s] \), let \( b_i = \mu (\lambda ^{\mathcal {I}},\lambda '_i) \). Figure 4 shows that \( {\mathcal {L}}\) is cycle-free and non-degenerate. Further, \( \xi _{\max }({\mathcal {L}}) \le 2^n/2q \), since the transcript is good. So, we can apply Theorem 5.1 to get a lower bound on the number of valid solutions, \( |{\mathcal {S}}| \) for \( {\mathcal {L}}\). Using the fact that \( ({\textsf {Y}}^{{\mathcal {I}}},{\textsf {V}}^{{\mathcal {I}}}) \leftarrow \!\!{\$}\,{\mathcal {S}}\) and Theorem 5.1, we have

$$\begin{aligned} {\Pr _{}\left[ {({\textsf {Y}}^{{\mathcal {I}}},{\textsf {V}}^{{\mathcal {I}}}) = (y^{{\mathcal {I}}},v^{{\mathcal {I}}})}\right] } \le \frac{\prod _{i=1}^{s}(2^n)_{b_i}}{\zeta (\omega )(2^n)_{q_1+c_2+q_3}(2^n)_{q_1+q_2+c_3}}, \end{aligned}$$where

$$\begin{aligned} \zeta (\omega ) = \left( 1 - \frac{13q^4}{2^{3n}} - \frac{2q^2}{2^{2n}} - \left( \sum _{i=1}^{c_2+c_3}\eta ^2_{c_1+i}\right) \frac{4q^2}{2^{2n}}\right) . \end{aligned}$$- (B).:

type 4 and type 5 sampling: For the remaining indices, one value is sampled uniformly for each of the components, i.e., we have

$$\begin{aligned} {\Pr _{}\left[ {\left( {\textsf {Y}}^{[q] {\setminus } {\mathcal {I}}},{\textsf {V}}^{[q] {\setminus } {\mathcal {I}}}\right) = \left( y^{[q] {\setminus } {\mathcal {I}}},v^{[q] {\setminus } {\mathcal {I}}}\right) }\right] } = \frac{1}{(2^n)^{c_4+c_5}}. \end{aligned}$$

By combining I, II, III, and rearranging the terms, we have

(21)