Abstract

In this paper, we study the problem of zero-shot sketch-based image retrieval (ZS-SBIR), which is challenging because of the modal gap between sketch and image and the semantic inconsistency between seen categories and unseen categories. Most of the previous methods in ZS-SBIR, need external semantic information, i.e., texts and class labels, to minimize modal gap or semantic inconsistency. To tackle the challenging ZS-SBIR without external semantic information which is labor intensive, we propose a novel method of learning the visual correspondences between different modalities, i.e., sketch and image, to transfer knowledge from seen data to unseen data. This method is based on a transformer-based dual-pathway structure to learn the visual correspondences. In order to eliminate the modal gap between sketch and image, triplet loss and Gaussian distribution based domain alignment mechanism are introduced and performed on tokens obtained from our proposed structure. In addition, knowledge distillation is introduced to maintain the generalization capability brought by the vision transformer (ViT) used as the backbone to build the model. The comprehensive experiments on three benchmark datasets, i.e., Sketchy, TU-Berlin and QuickDraw, demonstrate that our method achieves superior results compared to baselines on all three datasets without external semantic information.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Zero-shot sketch-based image retrieval (ZS-SBIR) is from the Sketch-based image retrieval (SBIR) [8, 12], that the sketch is used as a query to retrieval relevant images from the gallery. In SBIR, the training and testing data are from the same categories, and the biggest challenge in addressing SBIR is the modal gap between sketch and image. SBIR with zero-shot setting, called as ZS-SBIR, is proposed due to the data scarcity problem of human sketches and human-annotated samples [7, 23]. The zero-shot setting means that the training categories called as seen categories and the testing categories called as unseen categories are disjoint, and this setting brings up a new issue of semantic inconsistency between seen categories and unseen categories.

In recent years, in order to address the issue of semantic inconsistency, most of the research on ZS-SBIR has focused on incorporating external semantic information to facilitate the transfer of knowledge from seen categories to unseen categories or to alleviate the modal gap between different modalities. At the very beginning, a series of research [4, 7, 15] aims to bridge the seen and unseen classes through semantic embeddings. In general, these semantic embeddings are obtained through annotations. But these annotations require additional human labor costs to obtain. Then, some methods [11, 16, 17] based on pretrained convolutional neural networks (CNN) or pretrained vision transformer (ViT), have utilized knowledge distillation to preserve the powerful representation capability for transferring knowledge from seen categories to unseen categories. Although these methods using knowledge distillation have achieved great success, they still need external semantic information, such as class labels. However, these methods can also result in poorly discriminative features for retrieval due to knowledge distillation. To solve the problem brought by knowledge distillation, they have utilized class labels to make the features more discriminative. Recently, a method called ZSE [9] has been proposed. Compared to previous methods, it has a different perspective on addressing ZS-SBIR: the visual correspondence between sketch and image. It achieves superior performance using a transformer-based model, without relying on any external semantic information such as texts or class labels. However, it designs a kernel-based relation network to learn the relationships between sketches and images at every pair and ignores the relationship at higher levels, such as class-level and modality-level. And it also fails to preserve the generalization capability brought by the ViT used as the backbone to build the model.

Motivated by the above observations, we propose a method that shares the same idea as ZSE to address ZS-SBIR without external semantic information by learning the visual correspondence between sketch and image. Specifically, as shown in Fig. 1, we design a dual-pathway transformer-based structure corresponding to sketches and images, respectively. This structure takes data from the two modalities as input in order to establish local correspondences between them. In addition, a triplet loss is used for preliminary alignment between retrieval tokens from sketch and image. In order to maintain the relationship at various levels, such as instance-level, class-level and modality-level, a distribution alignment loss is employed to prevent the alignment of data only at the instance-level. Besides, a teacher ViT is employed to perform knowledge distillation on the self-attention modules using the instance-level kd loss to maintain the generalization capability brought by the ViT for constructing the self-attention modules. Extensive experiments on three benchmark datasets of ZS-SBIR verify the superiority of our method.

We summarize our contributions in this paper as follows:

-

1.

We propose a novel method based on a transformer-based dual-pathway structure to learn the visual correspondence between sketch and image to address ZS-SBIR. The method achieves superior performance in ZS-SBIR without any external semantic information which requires extra human labor cost.

-

2.

We propose a distribution alignment loss, which aligns the data from sketch and image in a global view and maintains the relationships between sketch and image at various levels, such as instance-level, class-level and modality-level.

-

3.

We introduce knowledge distillation using the instance-level kd loss, which preserve the generalization capability brought by the ViT used as the backbone to build the model.

2 Related Work

2.1 Zero-Shot Sketch-Based Image Retrieval (ZS-SBIR)

ZS-SBIR is a challenging task that must simultaneously address the inherent modal gap and the semantic inconsistency. Pioneering researches [4, 7, 15] in ZS-SBIR are inspired by the knowledge transfer mechanism in zero-shot learning, has used the semantic embeddings obtained from the labeled category-level texts extracted from the text-based model, to support knowledge transfer from the source domain (seen categories) to the target domain (unseen categories).

Liu et al. [11] used the CNN-based model pretrained in ImageNet as the backbone to map the data from different modalities to the semantic space of the pretrained model, and knowledge distillation was introduced for the first time to preserve the rich semantic information brought by the pretrained model to transfer knowledge. Tian et al. [16] also used knowledge distillation and selected DINO [1] as the backbone, which has a strong ability to detect global structural information. In addition, they proposed the hypersphere learning framework to align the data from different modalities.

Recently, Lin et al. [9] proposed ZSE that differed from previous work in that it thought a cross-modal matching problem such as ZS-SBIR as the comparisons of groups of key local patches, which had the advantage of not requiring external semantic knowledge and achieved superior performances in ZS-SBIR. In this paper, we adopt the same idea of ZSE to address ZS-SBIR by learning a local visual match between sketch and photo, but we perform the match from a global perspective and use knowledge distillation to prevent catastrophic forgetting.

2.2 Vision Transformer(ViT)

Transformer [19] was originally proposed for machine translation and has achieved tremendous success in many fields of artificial intelligence, such as natural language processing and computer vision. ViT [6] comes from the idea of applying Transformer structures to computer vision, and is a transformer-based image classification model with excellent representational capabilities and strong transferability that can be applied to other vision tasks such as object detection and semantic segmentation.

Subsequently, several researches [2, 5] have found that reasoning based on cross attention was shown effective on image classification, few-shot learning and sketch segment matching, they have tried to learn visual correspondence at different levels between different images by cross-attention mechanism. In this paper, we use the ViT with powerful representation ability to build self-attention module and use it to produce visual tokens that correspond to the most informative local regions, and a cross-attention module followed by self-attention module to learn a local visual match between sketch and photo.

The overview of our proposed method. A dual-pathway structure is designed to learn visual correspondences between sketches and images. Triplet Loss is applied to retrieval tokens for preliminary alignment. Distribution Alignment Loss eliminates the domain gap between sketch and image by aligning their different latent feature distributions. Instance-level KD Loss is used for the Pseudo ImageNet Label produced by the Self-Attention Module or Teacher ViT, to preserve generalization.

3 Methodology

In this section, following the overall scheme of our proposed framework which is illustrated in Fig. 1, we explain the main modules and learning objectives of our method in detail.

3.1 Cross-Domain ViT

To establish patch-to-patch correspondences between sketches and images, as shown in Fig. 1, we design a dual-pathway structure corresponding to sketches and images, respectively. Specifically, sketches and images are first used to obtain tokens have rich visual information independently within the modality through the corresponding self-attention module, and then interact with tokens from other modality through the corresponding cross-attention module to establish local correspondences between tokens from the two modalities. Furthermore, triplet loss is applied to our proposed method for preliminary alignment of different modalities tokens from the dual-pathway structure.

Self-attention Module. We select ViT to build the self-attention module. ViT is composed of L layers of multi-head self-attention (MSA) and Feed-Forward Network (FFN) blocks. The inputs of ViT are first resized to a fixed resolution. Subsequently, each input is divided into a sequence of patches of fixed resolution. A learnable class token cls for image recognition is added. We replaced the cls with a learnable retrieval token \(x_{ret}\) to obtain the global features of an image or sketch for retrieval. All tokens including \(x_{ret}\) are \(d-\)dimensional token and they are \(X_{0}=(x_{ret},x_{1},\dots ,x_{n})\), \(x_{i}\) is the visual token and n is the number of visual tokens. For the \(i-\)th layer of MSA and FFN, the MSA module has \((W_{q},W_{k},W_{v})\), which project the same token into Queries, Keys and Values, and the whole process of MSA can be formulated as follows:

where \(X_{i-1}\) is the output of the last layer or \(X_{0}\) and \(\psi \) is softmax operation, the feed-forward process can be formulated as follows:

where LN is layer normalization.

Cross-Attention Module. We use the method proposed by ZSE [9] to build cross-attention module. Each cross-attention module takes visual tokens and \(x_{ret}\) from all modalities as inputs to build the pairwise connections between tokens from the two modalities. In detail, the sketch Query \(Q_{s}\) and the image Query \(Q_{p}\) are swapped, and the cross-modal attention of the sketch can be formulated as:

Similarly, the cross-modal attention of the image can be formulated as:

Triplet Loss. To align retrieval tokens from different modalities, i.e., sketch and image, we use triplet loss to make the sketch retrieval token \(x_{ret}^{s+}\) close to the image retrieval token \(x_{ret}^{p+}\) that has the same class, and away from the image retrieval token \(x^{p-}_{ret}\) that has different class, and the triplet loss for a batch of N can be formulated as:

where m is the margin.

3.2 Gaussian Distribution Based Domain Alignment

Wang et al. [20] propose that different domains datasets with different latent feature distributions can be aligned under the guidance of the Gaussian prior. This alignment can build a common feature space for the datasets from different domains and the common space has the discriminative features to achieve excellent performance in eliminating the domain gap between different domains. So, we adopt a Gaussian prior to guide the alignment between the sketch and image in order to alleviate the domain gap between them, specifically we follow the method proposed by Wu et al. [22] and utilize the Kullback-Leibler divergence to align \(x^{s}_{ret}\) of sketches and \(x^{p}_{ret}\) of images with a common Gaussian distribution. With our proposed dual-pathway structure, a batch of retrieval tokens \(X_{ret} = \{ x_{ret}^{i} \in R^{d} \}^{N}\) representing the global visual information of sketches or images can be generated from a batch of training samples. We sample a batch of random features \(F = \{ f_{i} \in R^{d} \}^{N}\) from Gaussian distribution \(\mathcal N(0,1)\) simultaneously and the distribution alignment loss \(\mathcal L_{da}\) can be formulated as:

where KL is the Kullback-Leibler divergence. By applying \(\mathcal L_{da}\) to both \(x^{s}_{ret}\) and \(x^{p}_{ret}\), the distributions of the two modalities can be aligned under the guidance of Gaussian distribution and the domain gap between sketch and image is indirectly mitigated.

3.3 Instance-Level Knowledge Distillation

Since ViT is pretrained on large-scale image dataset, e.g., ImageNet, it has a powerful discrimination capability to provide probability vectors containing fine-grained semantic information for the input images. However, when ViT is used as the backbone to build the self-attention module and finetuned in a much smaller ZS-SBIR dataset, its rich knowledge originally learned from ImageNet is eliminated. Inspired by the method proposed by Tian et al. [17], we introduce instance-level knowledge distillation to our method. More specifically, as the Fig. 1 is illustrated, we use the teacher ViT and self-attention module to produce probability vectors, which are originally used to predict the categories from ImageNet and we dub them as pseudo ImageNet label. Then, we let self-attention module to mimic teacher ViT’s response by aligning the pseudo ImageNet label. However, this alignment operation is only applied to images because of the domain gap between the sketches and images from ImageNet.

Given an image \(p_{i}\), it is fed into the teacher and the self-attention module to obtain pseudo ImageNet label \(e^{t}_{i}\) and \(e^{s}_{i}\), and the instance-level knowledge distillation loss \(\mathcal L_{ikd}\) can be formulated as:

3.4 Overall Objective

Finally, the full objective function of our method can be formulated as:

where \(\lambda _{1}\), \(\lambda _{2}\) and \(\lambda _{3}\) are weight factors to balance the contributions of \(\mathcal L_{tri}\), \(\mathcal L_{da}\) and \(\mathcal L_{ikd}\), respectively.

4 Experiments

4.1 Datasets and Setup

Datasets. We evaluate our method on three benchmark datasets for ZS-SBIR, i.e., Sketchy [14], TU-Berlin [8] and QuickDraw [4]. Sketchy has 12,500 natural images and 75,471 sketches in 125 categories. Liu et al. [10] extended it by adding another 60,502 natural images to alleviate the data imbalance between two modalities. There are two kinds of seen and unseen class divisions for Sketchy, we refer to them as Sketchy and Sketchy-NO. The former one [10] randomly selects 25 classes as unseen classes, and the latter one [23] selects 21 classes which do not overlap with the classes in ImageNet. TU-Berlin has 13,419 natural images and 20,000 sketches in 250 categories. Zhang et al. [24] extended it by adding another 204,489 natural images. One seen and unseen class division [15] is widely used for TU-Berlin and it selects 30 categories as unseen classes. QuickDraw has 330,000 sketches and 204,000 images in 110 categories. QuickDraw has a seen and unseen class division [4] and this division also selects 30 classes that do not overlap with the classes in ImageNet.

Implementation Details. We use PyTorch as an implementation framework to implement our method with a Geforce RTX2080ti GPU. The ViT pretrained on ImageNet-1K is used to build the self-attention module, which consists of 12 layers of MSA and FFN blocks and a fully-connected layer to produced 1000 dimensional pseudo ImageNet labels. The cross-attention module only contains one layer. The dimension of retrieval tokens and visual tokens is 768. The input size of the sketch or image is \(224 \times 224\). AdamW is used as the optimizer and the learning rate is \(10^{-5}\). The batch size is set as 64 with 2 gradient accumulation steps. To obtain \((x_{ret}^{s+}, x_{ret}^{p+}, x_{ret}^{p-})\) for the triplet loss, each batch consists of 32 sketches from the same category and 32 images from two categories, and half of the images in the batch have the same category as the sketches. Epoch is set to 30 for training the model. \(\lambda _{1}\), \(\lambda _{2}\) and \(\lambda _{3}\) are set to 2.0, 0.1 and 1.0 in all experiments. In the test phase, we use the retrieval tokens for retrieval.

Evaluation Protocol. Following the previous works [11] in ZS-SBIR, we utilize precision (Prec@k) and mean average precision (mAP@k) as the evaluation protocols for fair comparisons. In all experiments, these evaluation protocols are computed using cosine similarity as the distance metric (Table 1).

4.2 Comparison with the State-of-the-Arts

Comparison Methods. We compared our method with some baselines, including ZSIH [15], SEM-PCYC [7], DOODLE [4], SAKE [11], PDFD [3], DSN [21], RPKD [17], SBTKNet [18], Sketch3T [13], TVT [16], and ZSE [9]. There are two retrieval approaches in which ZSE is used and we compare our method with both approaches for a fair comparation with ZSE. One is using the matching scores of the sketch and image output from the relation network, referred to as ZSE-RN. The other is using retrieval tokens for retrieval, referred to as ZSE-Ret. It is worth noting that all methods, except ours and ZSE, utilize external semantic information, such as text or class labels.

Overall Results. We evaluate our method on Sketchy, Sketchy-NO, TU-Berlin and QuickDraw, and compare the experimental results with other baselines in the table. Compared to the state-of-the-art ZSE, we surpass it in most of the results, which highlights the superiority of our efficient Gaussian distribution based domain alignment and knowledge distillation for preserving knowledge.

When compared to other methods that use external semantic information, our method significantly outperforms them on Sketchy and TU-Berlin. We also have the best mAP@all result on QuickDraw. The results on Sketchy-NO are slightly worse than some of them, because the unseen categories of Sketchy-NO do not overlap with the classes in ImageNet and it is tough to improve the results without any external semantic information. All of this shows the effectiveness of our method since we do not utilize any external sematic information.

4.3 Further Analysis

Ablation Study. We evaluate the effect of each loss term our method used by ablating one of them in the training phase. The experimental results are shown in Table 2, where “w/o” means the ablating behavior. From the comparison of each variant and our full model, we can draw the following conclusion: 1) \(\mathcal L_{tri}\) is the most substantial one among the three losses, which directly aligns the retrieval tokens used for retrieval, since the variant without \(\mathcal L_{tri}\) drops significantly and preforms worse than other variants. 2) The performance of the variant without \(\mathcal L_{da}\) indicates that adopting Gaussian prior to guide the alignment between sketch and image can alleviate the domain gap between them. 3) The variant without \(\mathcal L_{ikd}\) performs slightly worse than the full model. This observation shows that \(\mathcal L_{ikd}\) can make the backbone retain the extensive knowledge learned from the large-scale ImageNet.

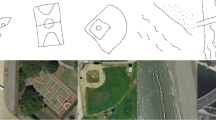

Retrieval Results. As shown in Fig. 2, we visualize the top 10 retrieved results of sketches queries, where correct and incorrect candidates are marked with checkmarks and crosses, respectively. The majority of the top 10 images retrieved using our approach resemble the query sketches in terms of the overall object pose and shape characteristics, even the incorrect retrieved results have a similar shape to the queries. This observation proves the validity of our method.

Visualization of Embeddings. As shown in Fig. 3, we evaluate the effect of our method in semantic alignments across modalities by utilizing the t-SNE tool. We conduct this visualization on both seen and unseen data from Sketchy. From Fig. 3, we can find that our method successfully clusters seen data from different modalities. To some extent, the unseen data can also cluster together by our method. Furthermore, most of the categories are properly separated regardless of modalities. This observation is a good indication of the effectiveness of our method in aligning different modal data and demonstrates a strong generalization ability to align data from unseen classes.

5 Conclusion

This paper tackled ZS-SBIR by focusing on learning a local visual correspondence between sketch and photo. We proposed a transformer-based dual-pathway model to learn the local visual correspondence between sketch and photo. In this way, the semantic inconsistency is minimized. In order to eliminate the modal gap, triplet loss and distribution alignment loss were introduced to align the data from different modalities. Furthermore, knowledge distillation was introduced to maintain the generalization capability. We conducted extensive experiments on three benchmark datasets, and our method achieves competitive results without external semantic information compared to the baselines. In the future, we will focus on addressing ZS-SBIR by exploring a more effective solution to learn the visual correspondence between sketch and photo.

References

Caron, M., et al.: Emerging properties in self-supervised vision transformers. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), pp. 9650–9660, October 2021

Casey, E., Pérez, V., Li, Z.: The animation transformer: visual correspondence via segment matching. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), pp. 11323–11332, October 2021

Deng, C., Xu, X., Wang, H., Yang, M., Tao, D.: Progressive cross-modal semantic network for zero-shot sketch-based image retrieval. IEEE Trans. Image Process. 29, 8892–8902 (2020). https://doi.org/10.1109/TIP.2020.3020383

Dey, S., Riba, P., Dutta, A., Llados, J., Song, Y.Z.: Doodle to search: practical zero-shot sketch-based image retrieval. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2179–2188 (2019)

Doersch, C., Gupta, A., Zisserman, A.: Crosstransformers: spatially-aware few-shot transfer. In: Proceedings of the 34th International Conference on Neural Information Processing Systems. NIPS’20, Curran Associates Inc., Red Hook, NY, USA (2020)

Dosovitskiy, A., et al.: An image is worth \(16 \times 16\) words: transformers for image recognition at scale (2021)

Dutta, A., Akata, Z.: Semantically tied paired cycle consistency for zero-shot sketch-based image retrieval. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2019

Eitz, M., Hildebrand, K., Boubekeur, T., Alexa, M.: An evaluation of descriptors for large-scale image retrieval from sketched feature lines. Comput. Graph. 34(5), 482–498 (2010)

Lin, F., Li, M., Li, D., Hospedales, T., Song, Y.Z., Qi, Y.: Zero-shot everything sketch-based image retrieval, and in explainable style. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 23349–23358, June 2023

Liu, L., Shen, F., Shen, Y., Liu, X., Shao, L.: Deep sketch hashing: fast free-hand sketch-based image retrieval. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2862–2871 (2017)

Liu, Q., Xie, L., Wang, H., Yuille, A.L.: Semantic-aware knowledge preservation for zero-shot sketch-based image retrieval. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), October 2019

Saavedra, J.M., Barrios, J.M.: Sketch based image retrieval using learned keyshapes (LKS). In: British Machine Vision Conference (2015). https://api.semanticscholar.org/CorpusID:11324587

Sain, A., Bhunia, A.K., Potlapalli, V., Chowdhury, P.N., Xiang, T., Song, Y.Z.: Sketch3t: test-time training for zero-shot SBIR. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 7452–7461 (2022). https://api.semanticscholar.org/CorpusID:247762119

Sangkloy, P., Burnell, N., Ham, C., Hays, J.: The sketchy database: learning to retrieve badly drawn bunnies. ACM Trans. Graph. (TOG) 35(4), 1–12 (2016)

Shen, Y., Liu, L., Shen, F., Shao, L.: Zero-shot sketch-image hashing. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3598–3607 (2018)

Tian, J., Xu, X., Shen, F., Yang, Y., Shen, H.T.: TVT: three-way vision transformer through multi-modal hypersphere learning for zero-shot sketch-based image retrieval. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 36, no. 2, pp. 2370–2378, June 2022. https://doi.org/10.1609/aaai.v36i2.20136, https://ojs.aaai.org/index.php/AAAI/article/view/20136

Tian, J., Xu, X., Wang, Z., Shen, F., Liu, X.: Relationship-preserving knowledge distillation for zero-shot sketch based image retrieval. In: Proceedings of the 29th ACM International Conference on Multimedia, pp. 5473–5481. MM ’21, Association for Computing Machinery, New York, NY, USA (2021). https://doi.org/10.1145/3474085.3475676

Tursun, O., Denman, S., Sridharan, S., Goan, E., Fookes, C.: An efficient framework for zero-shot sketch-based image retrieval. Pattern Recognit. 126, 108528 (2022). https://doi.org/10.1016/j.patcog.2022.108528, https://www.sciencedirect.com/science/article/pii/S0031320322000097

Vaswani, A., et al.: Attention is all you need. In: Advances in Neural Information Processing Systems, pp. 5998–6008 (2017)

Wang, J., Chen, J., Lin, J., Sigal, L., de Silva, C.W.: Discriminative feature alignment: improving transferability of unsupervised domain adaptation by gaussian-guided latent alignment. Pattern Recognit. 116, 107943 (2021). https://doi.org/10.1016/j.patcog.2021.107943, https://www.sciencedirect.com/science/article/pii/S0031320321001308

Wang, Z., Wang, H., Yan, J., Wu, A., Deng, C.: Domain-smoothing network for zero-shot sketch-based image retrieval. ArXiv abs/2106.11841 (2021). https://api.semanticscholar.org/CorpusID:235593135

Wu, Y., Song, K., Zhao, F., Chen, J., Ma, H.: Distribution aligned feature clustering for zero-shot sketch-based image retrieval (2023)

Yelamarthi, S.K., Reddy, S.K., Mishra, A., Mittal, A.: A zero-shot framework for sketch based image retrieval. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11208, pp. 316–333. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01225-0_19

Zhang, H., Liu, S., Zhang, C., Ren, W., Wang, R., Cao, X.: Sketchnet: sketch classification with web images. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1105–1113 (2016)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Gao, Z., Wang, K. (2024). Cross-Modal Visual Correspondences Learning Without External Semantic Information for Zero-Shot Sketch-Based Image Retrieval. In: Lu, H., Cai, J. (eds) Artificial Intelligence and Robotics. ISAIR 2023. Communications in Computer and Information Science, vol 1998. Springer, Singapore. https://doi.org/10.1007/978-981-99-9109-9_34

Download citation

DOI: https://doi.org/10.1007/978-981-99-9109-9_34

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-9108-2

Online ISBN: 978-981-99-9109-9

eBook Packages: Computer ScienceComputer Science (R0)