Abstract

In the last decade, generative models have seen widespread use for their ability to generate diverse artefacts in an increasingly simple way. Historically, the use of evolutionary computation as a generative model approach was dominant, and recently, as a consequence of the rise in popularity and amount of research being conducted in artificial intelligence, the application of evolutionary computation to generative models has broadened its scope to encompass more complex machine learning approaches. Therefore, it is opportune to propose a term capable of accommodating all these models under the same umbrella. To address this, we propose the term evolutionary generative models to refer to generative approaches that employ any type of evolutionary algorithm, whether applied on its own or in conjunction with other methods. In particular, we present a literature review on this topic, identifying the main properties of evolutionary generative models and categorising them into four different categories: evolutionary computation without machine learning, evolutionary computation aided by machine learning, machine learning aided by evolutionary computation and machine learning evolved by evolutionary computation. Therefore, we systematically analyse a selection of prominent works concerning evolutionary generative models. We conclude by addressing the most relevant challenges and open problems faced by current evolutionary generative models and discussing where the topic’s future is headed.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

1 Introduction

In recent years, the growth of interest in artificial intelligence research and development has sparked the development of increasingly more complex systems in many fields. In particular, generative models have seen widespread use in the fields of machine learning and evolutionary computation for their ability to generate new instances that follow the probabilistic distribution of a set of pre-existing ones. Due to their recent advancements and impressive results, generative models have become a hot topic. Their outputs can be diverse and applied to a broad range of application domains, e.g. image, music, text, engineering and game design.

Within generative machine learning, the proposal of generative adversarial neural networks (GANs) in 2014 by Goodfellow et al. [72] dramatically changed the landscape in research on generative models by proposing an effective adversarial way of training them. Consequently, GANs quickly gained acclamation to the extent of being coined as “the most interesting idea in the last 10 years in Machine Learning” by Yann LeCun. However, before the advent of GANs, evolutionary computation was the de facto approach employed in generative modelling [171]. With the rapid surge of interest around GANs and other deep learning generative approaches, most machine learning-based approaches surpassed what most evolutionary computation generative approaches and models have achieved. This was the case until the last few years when a paradigm shift happened propelled by the maturity of the field of evolutionary machine learning, where methods from evolutionary computation and machine learning are combined as a unified solution.

This chapter surveys the work on evolutionary generative models. We propose this term to identify generative models that use evolutionary computation in any part of the generative process. This definition of evolutionary generative model means that a substantial body of the surveyed work includes plain evolutionary computation approaches that date back to the 1980s, although authors such as Richard Dawkins and Karl Sims have never described their work as evolutionary generative models. Given the increasing adoption of evolutionary machine learning techniques by the research community as well as the rise of the popularity of generative models, the study of evolutionary generative models is ever more frequent in the literature. Despite this, to the best of our knowledge, there is still no comprehensive review focussed on the existing literature for evolutionary generative models. Therefore, in this chapter, we survey the existing literature related to this type of model and analyse existing work from both a historical and scientific point of view.

During the survey, we found that the evolutionary generative model can be divided into four different categories based on the role of machine learning in the generative process: evolutionary computation without machine learning, evolutionary computation aided by machine learning, machine learning aided by evolutionary computation, and machine learning evolved by evolutionary computation. The division along the four categories is central to the structure and narrative of this chapter.

The document outline is as follows. In Sect. 10.2, we introduce the core concepts and definitions regarding evolutionary generative models and outline the main properties of these models, thus providing a knowledge base for the chapter and defining its scope. Then, in Sect. 10.3, we propose a taxonomy to classify evolutionary generative models. Next, in Sect. 10.4, we provide a brief historical overview to contextualise research on this type of generative model, describing the most relevant works and presenting a visual timeline. The following four Sects. 10.5, 10.6, 10.7 and 10.8, analyse the collected papers of the four defined categories. Then, in Sect. 10.9, we overview open problems in the area of evolutionary generative models and identify new research opportunities. Finally, Sect. 10.10 lays the closing remarks by enumerating the main contributions, opportunities and the conclusion of this chapter.

2 Fundamentals

We define an evolutionary generative model as a generative model that employs evolutionary computation in any part of the generative process. Although the evolutionary part of this definition is explicit, we believe that generative model may have different interpretations.

When we look at the broader concept of generative model and generative modelling, we can encounter different definitions. For instance, in machine learning, generative models are considered models capable of generating new data instances. More formally, given a set of data instances X and a set of labels Y, generative models capture the joint probability p(X, Y), or just p(X) if there are no labels. Therefore, a generative model describes how a dataset is generated in terms of a probabilistic model that can create instances from a distribution similar to the training data instances. In statistics, a generative model is a statistical model of the joint probability distribution p(X, Y) of a given observable variable X and target variable Y. In graphic design, a generative process is concerned with the creation of an algorithm, or model that produces multiple designs that instantiate a concept. Considering these definitions and our analysis of varied works in this area, we can define a common ground in what concerns the specifications that characterise generative models.

A generative model typically implements an algorithmic process that generates outputs that serve a certain objective or concept. This way, the generated outputs tend to follow a given distribution within an objective class, thus forming a space where the outputs share common characteristics. In addition, the generative model can often receive input information that influences the generative process and consequently changes the outputs. This generative process can be implemented using evolutionary computation or enhanced by it.

During our analysis of existing evolutionary generative models, we identified five properties which we consider relevant to study in each model: application domain, evolutionary algorithm, population, representation and fitness. These 1properties are outlined in Fig. 10.1 and described in the following paragraphs.

Domain corresponds to the application domain of the model being analysed: image, music, text or other applications such as engineering, architecture and even less common disciplines such as dance [59] and culinary recipes [152]. It can be argued that this property pertains to the applications of the model and not to the model itself. However, as far as evolutionary computation is concerned, we found that most algorithms follow specific representations and parameterisations according to the application domain.

Evolutionary Algorithm establishes which evolutionary paradigm is employed by the evolutionary generative model, namely, genetic algorithms, genetic programming or evolutionary strategies.

Population relates to the fact of the generative model having a single or multiple populations of individuals being evolved. Within approaches with multiple populations, we have two different types: cooperative and adversarial. In a cooperative setup, multiple populations collaborate to achieve a common goal, where typically each population specialises in a specific task or sub-problem, and their combined efforts contribute to the overall solution. On the other hand, in an adversarial setup, different populations are evolved and compete with each other.

Representation refers to the structure of the genotype. Based on the categorisation of evolutionary art systems by Machado [123], we generalised the categorisation and divided the representations used in the analysed works into three main types: descriptive, parametric and procedural. In a descriptive representation, we evolve values that directly define the properties of each individual. In a parametric representation, we also evolve values, but they are used as input parameters for a model that generates each individual. This way, individuals are defined explicitly by the parametric model and the properties of these individuals are controlled by varying the parameter values of the model, which are encoded in the genotype. In a procedural representation, on the other hand, we evolve a sequence of procedures or rules, which can be seen as a model that is then used to generate the individual. In summary, while descriptive and parametric representations are data oriented, as they encode values, procedural representations are process oriented, since the evolutionary algorithm evolves, for instance, mathematical expressions, networks or rules.

Fitness evaluation in the generative process is crucial, and according to the literature, there are several ways of doing it and it depends on many factors. However, in this chapter, we are mainly interested in whether the fitness evaluation changes throughout the evolutionary process or not. Thus, we divided the surveyed works into two categories: static and dynamic. In the case of static fitness, if the individual is the same, no change should occur to its fitness throughout the evolutionary process. As for dynamic fitness, we consider cases where the output of the evaluation may vary during the evolutionary process, i.e. when different fitness values can be assigned to the same individual in different moments of the evolutionary process. Among the approaches that implement dynamic fitness, we can find, for instance, those that employ user feedback to guide the evolutionary process, i.e. interactive evolutionary computation approaches. We consider that fitness assignment based on user feedback, i.e. interactive evolutionary computation, is dynamic.

All these properties helped to structure and sort the analysed works, as can be seen later in this chapter, in Tables 10.1 and 10.4, which contain the analysed evolutionary generative models.

3 Taxonomy

We propose a taxonomy to classify evolutionary generative models into four categories according to the existence and role of machine learning in the generative model. The proposed categories are described next.

Evolutionary computation without machine learning—systems where the generative model only uses evolutionary computation with no intervention of any kind of machine learning technique. The modelling is purely based on evolutionary computation and the evaluation typically involves user guidance, hardwired fitness functions or a set of rules and conditions.

Evolutionary computation aided by machine learning—systems that combine evolutionary computation and machine learning, where the utilisation of machine learning enhances the capability of the evolutionary generative model. For instance, using the activation of a machine learning classifier to assign fitness and guide evolution towards outputs that maximise the classifier activation or using a clustering technique to aid in the selection of evolved individuals.

Machine learning aided by evolutionary computation—systems which are machine learning-based and where evolutionary computation is employed to enhance the capability of the machine learning part. For instance, neuroevolution approaches fall into this category; the same applies to approaches that explore the latent space of a GAN to generate suitable outputs or use evolutionary computation instead of gradient optimisation to train or fine-tune generative machine learning models.

Machine learning evolved by evolutionary computation—systems in which evolutionary computation evolves entire machine learning generative models or entire machine learning components. For instance, neuroevolution of augmenting topologies (NEAT) evolves artificial neural networks and their components using a genetic algorithm or the evolution of populations of GANs.

4 Historical Overview

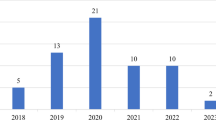

This section provides a brief historical overview of key publications in each of the four proposed categories for evolutionary generative models. In particular, in each of the following subsections, we summarise the publication timeline of each category while presenting a table containing several examples and their main properties. Figure 10.2 presents a timeline for the four proposed categories.

Timeline showing all publications on evolutionary generative models cited in this chapter. Publications are ordered chronologically (oldest at the top and most recent at the bottom) and divided horizontally by category (from left to right: evolutionary computation without machine learning, evolutionary computation aided by machine learning, machine learning aided by evolutionary computation and machine learning evolved by evolutionary computation). Each publication is represented with a rectangle containing the corresponding reference number. The colour of each rectangle indicates the number of citations of the corresponding publication according to the scale shown at the bottom of the figure (darker colours indicate a greater number of citations). The number of citations was queried to Google Scholar on June 7, 2023

4.1 Evolutionary Computation Without Machine Learning

This category of evolutionary generative models is the one with the highest volume of publications (please refer to Fig. 10.2), which is explained by the fact that before the popularisation of GANs and neuroevolution, evolutionary computation approaches represented the early instances of evolutionary generative models.

The biomorphs presented by Dawkins [52] in his book The Blind Watchmaker, in 1986, were one of the earliest examples of using evolutionary computation to create images using user interaction, basically setting the start for interactive evolutionary computation. Afterwards, in 1991, Sims [185] employed user-guided evolution to create images, solid textures and animations encoded as Lisp-based expressions of mathematical functions, variables and constants, also referred to as symbolic expressions. The idea of using symbolic expressions in evolutionary algorithms had previously been explored by Koza [100] in 1990 and would eventually give rise to the nowadays prolific field of genetic programming. Despite gaining popularity later on, the approach of using evolutionary computation for image generation was not yet common in the computer science community at this point in time (see Fig. 10.2). That would change, however, in 1992 with the work by Todd and Latham [199], who popularised evolutionary art in general with their work on the generation of 2D and 3D images and by making it available to the general public. In line with this idea, in 1999, Draves [58] created the collaborative abstract artwork “Electric Sheep” that evolved fractal flames, a generalisation and refinement of the iterative function systems. Several researchers were experimenting with varied representations for evolutionary algorithms. Bentley and Kumar [14] explored the importance of different embryogenesis processes, i.e. the mapping from the genotype representation to phenotype, in the same generative design problem demonstrating the impact of having a direct genotype on phenotype mapping on the final solutions and evolution with distinct solutions using a genetic algorithm or genetic programming.

Given the impact and amount of publications regarding evolutionary computation for image generation, we can conclude that the first half of the 1990s sparked more interest in evolutionary art in general following its appearance. This interest was eventually picked back up around the turn of the millennium, with many works in evolutionary computation being published (see Fig. 10.2). Within this period, it is worth mentioning the work by Lewis [104] concerning aesthetic evolutionary design with network data flows, a direct acyclic graph representation of transformations on geometric primitives for generating specific types of images such as cartoon faces and human figure geometry. Greenfield [78] used the image generation approach of Sims [185] with a bio-inspired coevolutionary fitness scheme, inspired by hosts and parasites dynamics, to instantiate an evolutionary simulation for the generation of aesthetic image tiles. Aupetit et al. [7] created an interactive genetic algorithm to evolve parameters for an ant colony optimisation approach to generate paintings. Hart [80] proposed new mutation operators for expression-based evolutionary image generation and tree alignments schemes for improving the morphing between two individuals, thus enabling a smoother animation process and expanding the possible solutions for the cross-dissolve process between individuals [186]. Genetic programming was used extensively around this time, with a few more notable examples to be mentioned. Di Paola and Gabora [56] tried to evolve a portrait of Charles Darwin using genetic programming, an endeavour that presented many hurdles, such as handling fitness values plateaus which proved difficult to overcome. The work by den Heijer and Eiben [54] used a scalable vector graphics format as a genetic representation of their genetic programming approach for the evolution of abstract images. Outside of expression-based image generation, we should mention the work by Collomesse [35], which performed non-photorealistic rendering by generating images with pre-processed and parameterised brush strokes based on a starting image. To the best of our knowledge, although many genetic programming approaches have done it before [185], this work represents one of the first efforts in using genetic algorithms to evolve filters from existing images. In 2010, Machado et al. [131] presented an evolutionary engine that allows the evolution of context-free grammars using a graph representation and operators.

The early use of genetic algorithms in the generation and evolution of music was explored by Jacob [95], who employed an interactive system to assign fitness in music composition, and by Biles [18], who introduced the framework Genjam to generate Jazz solos. It is important to note that these first studies in music generation mainly focussed on the evolution of melodic progressions. One of the first studies specifically targeting metric and rhythm was performed by Horowitz [92] in 1994. Furthermore, around this period, the use of expression-based methods to create music was introduced by Spector and Alpern [188] with a system to create Bebop music using genetic programming. These first efforts were later picked up and extended upon, which saw the introduction of new frameworks and techniques. It is also worth mentioning the work by de la Puente et al. [53] in the early 2000s, which, to the best of our knowledge, corresponds to the first application of a grammatical evolution algorithm to music composition. Moreover, Donnelly and Sheppard [57] proposed the use of a genetic algorithm to evolve four-part melodic harmonies, and frameworks such as DarwinTunes [122] were introduced using genetic programming tree-like representation to represent full songs. In addition to this publication, it is important to mention MetaCompose [180], a framework still used nowadays, consisting of a graph-based chord sequence generator, as well as a melody and accompaniment generator. More recently, a system that has been gaining interest is Evocomposer [166]. The novelty of this framework is the hybrid evaluation function capable of multi-objective optimisation to solve a four-voice harmonisation problem, i.e. given a music line as input, the algorithm must generate the remaining three accompanying voices.

Concerning the domain of text generation, the body of existing research seems to be more sparse. At the beginning of the millennium, Manurung et al. [140] presented the first efforts in applying evolutionary techniques to the development of an English poetry generation system using genetic programming. Manurung [139] would later extend upon this research in poetry generation, this time employing grammatical evolution. Although poetry seems to be the preferred text type for generative systems, it is worth highlighting pioneers in other genres, such as the work by Montero and Araki [150] in 2006, who has worked on the generation of dialogue in natural language. Also in the mid-2000s, Hervás et al. [82] proposed an evolutionary approach to assist in the generation of alliterative text that preserves the meaning of a given input sentence. Gervás [70] used an evolutionary algorithm coupled with his “wishful automatic Spanish poet” (WASP) system for poetry generation.

Lastly, beyond the image and music domains, we should also note the integration of evolutionary techniques with other types of domains of applications throughout the timeline. It is the case of the work by Frade et al. [66], which uses genetic programming for the evolution of realistic video game terrain. Additionally, the work of Shaker et al. [183] used grammatical evolution for the generation of Super Mario Bros. levels. Aside from the efforts of creating gaming assets, Browne and Maire [26] presented an approach to evolve interesting game designs from a human perspective with the Ludi framework. Still, in the domain of game design, the work by Hoover et al. [87] represents one of the first systems using evolutionary techniques to develop multiple aspects of a level, including visuals, audio and gameplay, along with the work by Cook et al. [40] with the ANGELINA framework.

4.2 Evolutionary Computation Aided by Machine Learning

After summarising the timeline of evolutionary generative models that use solely evolutionary computation, we now move on to evolutionary generative models that, in addition to evolutionary computation, also use machine learning on any part of the generative process.

The machine learning approaches have aided evolutionary generative models mostly in two aspects: fitness assignment or auxiliary representations for selecting individuals. Starting with the latter, Saunders and Gero [176], in the early 2000s, proposed a system that used a self-organising map to categorise each generated image and acted as a short-term memory of their agent-based and evolutionary generative model. This is one of the first examples of using an auxiliary representation to help the evolutionary process. Nevertheless, based on our collection of works, most of the work done on evolutionary generative models aided by machine learning mostly uses machine learning to evaluate or assist in evaluating generated individuals. Until the early 1990s, the evolution of images had been done by resorting to user interaction to assign fitness to individuals, i.e. an interactive evolutionary computation approach. Albeit effective, interactive fitness assignment is tied to problems such as user fatigue or inconsistent evaluation, and it is hard to scale. The work by Baluja et al. [12] aimed to change this paradigm by proposing an automatic fitness assignment scheme based on the evaluation of images using machine learning, more precisely, an artificial neural network, to automate the evolutionary process. However, this approach only had partial success, the main problem being the employed artificial neural network was not able to generalise and distinguish between different visually striking image features and properly guide the evolutionary process. Most works at that time tended to use interactive fitness assignment schemes or opted for a semi-automatic form with the help of machine learning approaches, as is the case of the incorporation of an artificial neural network to aid fitness assignment in the enhanced GenJam framework proposed by Biles et al. [17].

In 2008, Machado et al. [133] experimented with automatic fitness assignment using artificial neural networks, similar to the work by Baluja et al. but promoting an adversarial scenario between an artificial neural network and the evolutionary computation approach. The artificial neural network was trained to discriminate among images previously generated by the evolutionary approach and famous paintings. Then the evolutionary approach was used to generate images that the artificial neural network classifies as paintings. The images created throughout the evolutionary run were added to the training set, and the process was repeated. The iterative process led to the refinement of the classifier forcing the evolutionary approach to explore different types of images after each refinement. Based on this work, Correia et al. [41] used a machine learning approach trained with multiple object detectors to evolve figurative images consistently, i.e. images that contain a certain object according to the machine learning approach. Although different types of images generated resemble the object, some of the images evaluated by the machine learning approaches yielded high confidence but did not resemble it or were exploits of the machine learning approaches that did not correspond to the intended object. The work was later extended by Machado et al. [134], generating images that were ambiguous from a human and machine learning approach perspective. Following up on the idea of having fitness assignment schemes with machine learning approaches and their exploits, the work by Nguyen et al. [156] demonstrated how easily state-of-the-art machine learning approaches based on deep artificial neural networks can be fooled, i.e. classify images with high confidence of belonging to a certain class, when in reality the images do not resemble that class from a human perspective. The authors have shown that it is possible while using a simple genetic algorithm with a direct encoding or using compositional pattern-producing networks (CPPNs) to generate images that were unrecognisable by humans but recognised by deep neural networks with high confidence. Despite its exploits, machine learning intervention in fitness assignment schemes proliferated and is still one of the most used approaches for automating the fitness assignment process.

The idea of using machine learning to assist with fitness assignments was also used in other types of applications. For sound generation, the work by Johanson and Poli [96] presented the generation of music with automatic fitness using an artificial neural network to rate the generated music. Manaris et al. [137] proposed a hybrid approach that saw the combination of evolutionary techniques along with machine learning to compose and analyse music. In terms of text generation, akin to Manurung et al. [140], Levy [103] explored evolutionary techniques coupled with machine learning for poetry generation, generation of stories with plot induction by McIntyre and Lapata [148] and Al-Najjar and Hämäläinen [2] worked with the generation of culturally satirical movie titles. As an example of an evolutionary system employing machine learning for other applications, we include the work by Morris et al. [152] concerning evolutionary food recipes using a genetic algorithm and an artificial neural network trained on a starting set of recipes.

4.3 Machine Learning Aided by Evolutionary Computation

In this subsection, we shift our focus to evolutionary generative models that are machine learning-based and where evolutionary computation is employed to improve the capability of the machine learning part.

As can be seen in Fig. 10.2, this is the section with the least entries among all the categories. This can be mostly related to the fact that the generative models based on machine learning gained traction around 2014 with the advent of GANs, and most of the researchers working on evolutionary approaches started to explore models that combined the machine learning models with the evolutionary ones. Before 2014, the work by Manaris et al. [136] introduced in 2011 the Monterey Mirror, a system that uses a genetic algorithm to evolve musical phrases provided by a Markov model.

After 2014, in this category of evolutionary generative models, most publications pertain to latent variable evolution, a term initially coined by Bontrager et al. [22] to refer to the evolutionary exploration of a given latent space. Latent spaces in generative machine learning are intermediate compressed versions of the original inputs, e.g. bottleneck layer of auto-encoders. Thus, these compressed versions, which are typically composed of numerical vectors, encode original samples and by sampling the latent space and decoding via the generative model, we can explore samples that were learnt by the generative model. This exploration by sampling and decoding vectors of the latent space holds potential that has been researched until today. For instance, in the work of Bontrager et al. [22], the authors trained a GAN to generate fingerprints capable of matching a high percentage of users and afterwards explored the generated latent space of the model using covariance matrix adaptation evolution strategy (CMA-ES). In a different setting, Fernandes et al. [64] explored the latent space to evolve groups of diverse images using genetic algorithms and multi-dimensional archive of phenotypic elites (MAP-elites). In the context of a procedural content generation scenario and following similar procedures, Volz et al. [210] generated game levels using latent variable evolution with CMA-ES and a GAN. Moreover, in the work by Schrum et al. [178], instead of directly evolving latent vectors, it evolves parameters for CPPNs that will, in turn, generate latent vectors for GANs.

4.4 Machine Learning Evolved by Evolutionary Computation

Lastly, we overview the timeline of the last category of evolutionary generative models, in which evolutionary computation is used to evolve entire machine learning models or entire machine learning components.

A significant instance in the literature of machine learning models evolved by evolutionary computation for generative purposes would be the NEAT framework in 2002 by Stanley and Miikkulainen [191]. NEAT represented one of the first neuroevolution approaches capable of automatically evolving and adjusting the topology and weights of an artificial neural network. Stanley [190] would later extend upon his work by proposing CPPNs and evolving these networks with the NEAT method. Thus, in 2008, the CPPN-NEAT approach was made publicly available in the form of an online evolutionary tool named Picbreeder [181], capable of guiding evolution using an interactive fitness assignment scheme. Furthermore, Dubbin and Stanley [59] generated dance moves using the CPPN-NEAT model.

Around the same time, Togelius et al. [200] explored a competitive coevolutionary generative model for racing car controllers. These early studies regarding controllers led the way to the evolutionary exploration of game design features, which was mostly set in motion by Togelius et al. [201] with the evolution of controllers to play Super Mario Bros. Other works followed, such as the generation of shapes in video games by Liapis et al. [111] and the evolution of gameplay in the work of Cardona et al. [30], using competitive coevolution to evolve agents for both the player and the non-player characters in the classic game Pac-Man.

In the realm of music, we mention the work by Bell [13] in the generation of musical compositions by evolving populations of Markov models with a genetic algorithm. Despite this, and perhaps motivated by a saturation of research concerning traditional machine learning methods to improve the performance of GANs, the end of the last decade saw an increase in evolutionary machine learning research mostly based on coevolution. Aside from neuroevolution being central in the same timeline, evolutionary approaches that operate to evolve entire generative models, such as GANs, have a high computational cost. Here the advantages of the optimisation aspect of evolutionary computation were to be explored while minimising the computational cost of an already demanding training process of the state-of-the-art generative machine learning models.

Extending upon the GAN scheme, in 2018, Garciarena et al. [68] presented a method for generating Pareto set approximations of generators and discriminators. In this method, the authors resort to the coevolution of generators and discriminators where a single individual represents both components. In the same year, the Lippizanner framework was proposed by Al-Dujaili et al. [1] as the first system encompassing a spatial approach to coevolution where a grid of cells was used for evolving groups of GANs. Moreover, in 2019, Wang et al. [211] proposed the evolutionary GAN (E-GAN) model, which consisted of the evolution of a population of generators within a GAN model. This approach was later enhanced by Costa et al. [49] with the proposal of coevolutionary GAN, a model based on coevolution where an additional population of discriminators is evolved alongside the population of generators. Additionally, Toutouh et al. [203] extended the Lippizanner framework by incorporating the generator training method from the E-GAN model, thus effectively combining variation operators and population-level approaches to improve the diversity of GANs.

5 Evolutionary Computation Without Machine Learning

In the last section, we provided a historical overview and timeline of the works in each category. In this section, as well as in the three following ones, we will cover the collected works on evolutionary generative models. We start by analysing the works that only use evolutionary algorithms. Table 10.1 enumerates a series of evolutionary generative models which we consider to belong to the category evolutionary computation without machine learning. The listed publications are grouped by application domain (first column) and then sorted by year (second column) and by authors (third column).

Our analysis begins with applications in the image domain, including shape generation, 3D modelling and video generation, among others. Afterwards, we delve into sound applications, covering everything from sound effects to full-fledged music composition. Next, the most relevant works in text generation are analysed, and we finish the section with other applications which do not fit into the mentioned application domains. Each of the works analysed in each domain is further subdivided by the type of evolutionary algorithm used.

When it comes to using evolutionary computation as an image generative model, the two most common approaches are by far genetic programming and genetic algorithm, with genetic programming taking a slight preference within the literature analysed (see Table 10.1). Most of the approaches that employ genetic programming do so by evolving symbolic expressions that generate images through the process of feeding coordinates as input. This way, to evaluate an individual, each pixel coordinate is fed into the expression that will generate a colour (typically a tuple of values representing RGB channels) of which the output image, i.e. the individual, will be composed of. These approaches have been applied to the generation of a diverse range of image types, including aesthetic imagery [76,77,78, 80, 126, 172, 185, 186, 207, 214], fractals as iterated function systems [58] or the generation of specific image classes [9, 16, 55, 56, 124, 125, 169]. Other works define the features of an output image iteratively: vector graphics [54], stochastic sequencing of points to generate iterated function systems [31], evolving image filters [154] and the evolution of 2D renders of 3D compositions [129]. Regarding the type of fitness function, for the purposes of image generation with genetic programming, we observe that there are more static than dynamic (see Table 10.1), mostly due to automation of the generative model via hardwired fitness functions.

Akin to genetic programming, genetic algorithms were also used to generate abstract imagery [37, 52, 187]. Aside from image generation, genetic algorithm-based generative systems were used to generate drawings of a specific category by evolving properties of primitives such as coordinates, rotation and size. Line drawings are perhaps the simpler examples of these approaches. Within fractals, Ventrella [208] used a genetic algorithm to explore different configurations of the Mandelbrot set. Baker and Seltzer [11] evolved lines either using line drawings coordinates or by evolving agents that live on a canvas and spread their raster until they die. This aspect of agents having an energy limit to operate was a typical aspect of agent-based approaches [79, 145, 175].

The evolution of vector graphics is also explored in genetic algorithms and evolutionary strategies for image generation [15]. Aside from vectors, similar works made use of other primitives. Examples include examples of evolving images directly bitmaps via transformations and distortions [74], the generation of cartoon faces [104, 157], emojis [50], letterings [161], posters [167], the evolution of renderings based on existing images (non-photorealistic rendering) [35, 132] and the evolution of context-free grammars [131]. Animation is another field where genetic algorithms stand out, with some works evolving fractals [5, 6, 24] and screensavers [58].

Other works demonstrated the interplay between the image and music domains by generating images based on musical input directly [217] or based on systems evolved using music [170]. Other authors developed meta-models by evolving the parameters of these algorithms [7, 121] or resorted to an indirect way of generating the outputs, e.g. Greenfield [75], although not concerning image generation directly, produced controllers for drawing robots that, in turn, created visually appealing imagery.

Readers interested in a thorough analysis of evolutionary computation for the purposes of image generation are encouraged to read the works by Galanter [67], Romero and Machado [171] as well as Lewis [105].

Akin to image generation, genetic algorithms and genetic programming are again the most prolific techniques in the generation of sound, with genetic algorithms taking a slight preference within the literature reviewed. Concerning genetic programming, many works deal with the generation of musical melodies [97, 164], some of them of a particular style such as Jazz [188]. However, the largest body of research includes both the generation of rhythm along with accompanying melodies [51, 85, 101, 102, 122, 138, 147, 193]. Some of these are music guided towards specific properties of sound and musical score [51], while others focus on the methods behind the compositional process [193]. Other works resort to grammatical evolution for the generation of music that has been applied mainly to musical composition [3, 184], including melody formation [53, 115, 116, 119, 168] as well as live coding [117].

Regarding genetic algorithms, a number of works dealt with the generation of specific parts of music such as the melody [19, 71, 151, 196] and the rhythm [92]. Some studies delved into the problem of harmony production, i.e. given a musical line/voice, the system is tasked with the creation of accompanying lines/voices to have a complete musical piece with chords for each input note [57, 165, 166], with the harmonisation of bass lines being a recurring theme, in particular [165].

However, most works in this section tackle the broader problem of automated musical composition [61, 69, 93, 98, 159, 180], some of these deal with the generation of responses to given musical phrases instead of generating sound from the ground up [18]. It is worth noting that in musical composition, many approaches tackle different problems, such as generating variations from existing pieces, thematic bridging and live coding. The generation of variations deals with the composition of pieces given an existing source [4, 95, 197]. On the other hand, thematic bridging concerns the generation of lines capable of seamlessly transitioning between two given musical phrases [91]. More recently, thematic bridging was also explored using evolutionary strategies in the work by Martínez-Rodríguez [142], who proposed fitness as a weighted neighbourhood average distance metric between a solution and the target. More applications include live coding, which tackles the use of domain-specific languages for generating new musical patterns in a live concert setting [84].

Generally, we can observe that most systems for sound generation based on genetic algorithms and genetic programming implement static fitness assignments, with user-defined fitness being a minority. Still, in music composition, more recent works try to gather inspiration from different domains. An example of this is the work by Santos et al. [174], which bridges the fields of image and music generation by using images as a source of inspiration for the compositional process.

The above-mentioned works illustrate examples from the literature for evolutionary computation in music. Readers who seek a complete analysis of the application of evolutionary computation to music should refer to the following surveys by Todd and Werner [198], McCormack [146], Loughran and O’Neill [118] and Eigenfeldt et al. [60].

The body of work analysed regarding the application of evolutionary computation for text generation is rather lacking compared to the domains of image and sound. Nevertheless, the genetic algorithm is by far the most used approach, with applications to the generation of poetry [70, 139,140,141], dialogue (i.e. natural language generation) [150], as well as alliterative text [82]. In particular, it is worth mentioning the work by Manurung [139], where the evolution of grammars was used to generate poetry. For the more curious reader, a survey by Oliveira [158] analyses automated poetry, which goes beyond the scope of evolutionary computation.

Finally, we list works that one way or another concern the generation of artefacts that do not fit the categories of either images, sound or text. Here it is worth mentioning the automatic evolution of designs for Lace Knitting Stitch Patterns by Ekárt [62], and the modelling of terrain maps by Frade et al. [66], both resorting to genetic programming techniques. Moreover, the works dealing with 3D [28, 177] and shape design [153] are worth noting. Similar to what was verified for sound, text and other applications, most of these applications implement fixed fitness assignment as well. Lastly, another avenue where evolutionary computation has been applied is to aid game design. In this context, we include Ludi, a general game system introduced by Browne and Maire [26] to synthesise and evaluate new games capable of captivating the interest of human players, as well as the use of a \((1+1)\) evolutionary strategy for the generation of decks of cards by Cardona et al. [29]. Regarding genetic algorithms, we can mention the works by Cook et al. [40] and Cook and Colton [39] in the automated and cooperative coevolution of game design. Still pertaining to game design, some other examples consist in the co-generation of game levels alongside a human designer [120] as well as the evolution of level design to increase the feeling of in-game tension [114]. Refer to the survey of Liapis [109] for a thorough analysis of the applications of evolutionary computation and procedural content generation, among other techniques to the generation of game design.

In terms of representation used in the analysed evolutionary generative models, we found instances of the different types of representation, with a clear predominance of procedural representations (70 procedural, 23 descriptive and 19 parametric representations).

6 Evolutionary Computation Aided by Machine Learning

This section includes approaches where models are inherently evolutionary, but their functionality or performance is improved with machine learning approaches. A common example is an approach where the evolutionary model uses machine learning in their fitness assignment or one of the components of the evolutionary computation model is replaced by machine learning. Table 10.2 presents examples of evolutionary generative models, which we consider to belong to the category evolutionary computation aided by machine learning. The listed publications are grouped by application domain (first column) and then sorted by year (second column) and by authors (third column).

Similar to Sect. 10.5, the use of genetic programming in this section mainly pertains to the generation of specific image classes/types [41, 127, 128, 134, 143, 195, 209] or to aesthetic imagery [12, 42]. In addition to this, genetic programming methods for the transformation of existing images have also been presented, such as the evolution of image pipelines by Correia et al. [45]. Machado et al. [133] and Correia et al. [42] explored the stylistic change in evolutionary art in a framework similar to GANs using an adaptive classifier along with a genetic programming engine. Similarly, Baeta et al. [10] proposed another example of a system having a competition between isolated components, where the generator of a standard deep convolutional GAN is replaced by a genetic programming approach.

Regarding genetic algorithms for the image domain, examples of machine learning for the fitness assignment phase include the use of artificial neural networks either by evolving false positive images [156], false negatives [44] or even to perform data augmentation [32, 43]. Lopes et al. [113] and Martins et al. [144] also employed a genetic algorithm guided by a machine learning model to evolve vector graphics, and stencils for typefaces, respectively. Similar to the work by Bergen and Ross [15] (see previous section), both modalities of fitness function (static and dynamic) were explored, the latter as a separate experiment and the former by mixing a static target function with user-defined options. Graph evolution is another example of the application of genetic programming to image generation, as exemplified by Vinhas et al. [209].

Concerning genetic programming, there are examples for the generation of melodies [96, 163], including Jazz [188], and the generation of rhythm with accompanying melodic tracks, explored by Manaris et al. [137] as well as Spector and Alpern [189]. Using genetic algorithms, many works dealt with the generation of specific parts of music such as melody [71] and rhythm [27]. Other works focussed solely on harmony generation [162], i.e. musical responses, instead of generating sound from scratch. Like in the category evolutionary computation without machine learning, automated music composition is also addressed in this section with the work of Biles et al. [17], who used artificial neural networks for fitness assignment. Although almost all examples of the application of genetic algorithms to music generation dealt with music, the broader exploration of audio, in general, was addressed by Ianigro and Bown [94].

Relating to text generation, a genetic algorithm was used by Levy [103] for the purposes of poetry generation with an artificial neural network as a measure of adaptive fitness. Other examples include the study of satire generation by Winters and Delobelle [213] and by Al-Najjar and Hämäläinen [2]. Finally, examples of other applications include the works by Liapis [108] for the evolution of colour palettes, the evolution of scene elements by Colton [36] and the introduction of a system for generating novel culinary recipes by Morris et al. [152].

In this section, most of the analysed works in terms of representation have representativity of the three types of representation but with a prevalence of the procedural ones (20 procedural, 9 descriptive and 6 parametric representations).

7 Machine Learning Aided by Evolutionary Computation

In this section, we include the approaches where evolutionary computation is used to enhance the functionality or performance of the machine learning model. As an example, latent variable evolution belongs in this section because these approaches use evolutionary computation to explore a latent space previously built using a machine learning model. Table 10.3 contains examples of evolutionary generative models which we consider to belong to the category machine learning aided by evolutionary computation. The listed publications are grouped by application domain (first column) and then sorted by year (second column) and by authors (third column).

The idea of latent variable evolution was introduced by Bontrager et al. [22, 23] to explore the latent space of inputs to the generator network of a GAN using a CMA-ES approach to generate fingerprints that maximise the number of imposter matches. Concerning genetic algorithms, Schrum et al. [178] evolved parameters for CPPNs, which in turn generated a variety of different latent vectors for GANs.

Other examples concerning the application of genetic algorithms to latent variable evolution include the work by Grabe et al. [73], who used Gaussian mixture models to explore possible latent vectors to evolve distinct images, along with interactive methods to perform the evolutionary search by Zaltron et al. [215], among others [21, 64, 135]. Outside of latent variable evolution but still pertaining to image generation, Colton [38] also proposed a system to generate image filters using a genetic algorithm with MAP-elites or the work by Korde et al. [99], which uses an evolutionary algorithm to train in the initial iterations to stabilise the weights before using normal optimisation techniques to train GANs.

Concerning other applications, an approach combining genetic algorithms and Markov chains was proposed by Manaris et al. [136] to evolve musical phrases. Volz et al. [210] worked on the generation of game levels using latent variable evolution with adversarial training. Moreover, the work by O’Reilly et al. [160] in cyber-attacks and defence mechanisms using genetic programming in an adversarial setting, among other techniques, explored generating and evolving executable adversarial programs. Lastly, we mention the work of Liapis et al. [110] for the creation of suitable spaceships for arcade-style games using CPPN-NEAT and novelty search.

One of the most noticeable aspects of this section lies in the fact that, when compared to other ones, the body of work concerned with aiding machine learning with evolutionary computation is significantly less, especially when compared to Sect. 10.5. Moreover, in terms of representation, we can observe that most approaches are parametric, with just a few being procedural (in total, there are 3 procedural, 11 parametric and no descriptive representations). This is because the analysed works mostly use latent variable evolution, which evolves parameters to generative machine learning models.

8 Machine Learning Evolved by Evolutionary Computation

This section addresses approaches where evolutionary computation is used to evolve one or more populations of generative machine learning models. We present direct applications of evolutionary computation to machine learning or parts thereof. These applications are direct in the sense that the evolutionary computation techniques are applied as is, i.e. without modifications, over the set of machine learning individuals. As an example, here we include the NEAT-like approaches because the NEAT algorithm evolves a population of artificial neural networks. Table 10.4 presents examples of evolutionary generative models which we consider to belong to the category machine learning evolved by evolutionary computation. The listed publications are grouped by application domain (first column) and then sorted by year (second column) and by authors (third column).

Most of the research within the literature concerning machine learning models evolved by evolutionary computation has to do with the evolution of artificial neural networks and CPPNs [190], and with the use of neuroevolution techniques such as NEAT [191]. Because most fixed-topology neuroevolution models typically generate artificial neural networks to be used for classification tasks, such approaches are not within the scope of this section. For literature regarding the use of evolutionary approaches in supervised and unsupervised learning, see Sects. 1.6 and 2.5, respectively.

There have been a number of works inspired by the CPPN-NEAT framework proposed by Stanley [190] to generate images by augmenting and evolving a CPPN [63, 181, 182, 206, 216]. It is worth noting that some of the mentioned works have been applied to the GAN model, as is the case with the replacement of a standard deep convolutional GAN generator with a CPPN evolved with NEAT, proposed by Ekern and Gambäck [63]. Another example related to GANs is the work by Turhan and Bilge [206], which combined a variational auto-encoder with the pixel-wise generative capability of CPPNs to create a GAN-variational auto-encoder model, i.e. a GAN with an inference mechanism in the form of an extra encoder network. Similar to Sect. 10.6, despite most models in this section not exhibiting competition, these last two studies can be identified as having a competition between isolated components [63, 206].

Apart from NEAT approaches, but still within the context of genetic algorithms, Wang et al. [211] proposed E-GANs, which consisted in the evolution of populations of generators. Similar works also used GANs to evolve samples from the generator [34]. Costa et al. [49] worked on the coevolution of sub-populations of generators and discriminators based on CoDeepNEAT [149] with multiple variants, e.g. one based on novelty search with local competition [47]. The technique of evolving both the generator and the discriminator was applied in other works, both with different sub-populations for each component [33] and in a single population where each individual represents a tuple consisting of both components [68].

Regarding coevolutionary approaches, we can identify a sub-category of models dealing with spatial coevolution. This idea was first proposed by Al-Dujaili et al. [1] with the introduction of the Lipizzaner framework using a grid of cells where GANs are allowed to evolve and communicate. Other works expanded on Lipizzaner by hybridising it with E-GAN and Lipizzaner to increase solution diversity [203] and proposing a ring topology for the spatial cell grid instead of a toroidal one [205]. Other works apply the same idea by scaling the framework in the context of a high-performance computing setting for the medical domain [65] or by further exploring the features of Lipizzaner [81, 204]. Lastly, although during our literature review, we did not find a substantial body of work in this section outside of the image domain, it is interesting to note the evolution of Markov chains in the realm of music using genetic algorithms [13], an approach later explored further by Scirea [179] for the generation of music from scientific papers.

In the realm of music generation, many of the studies analysed here also employ NEAT to evolve CPPNs. These approaches typically use functional scaffolding, a technique that exploits the fact that a pattern of notes and rhythms in different instrumental parts of the same song is functionally related. These studies were initially performed by Hoover et al. [88] and Hoover and Stanley [89], who started by presenting a framework called NEAT Drummer for the generation of drum patterns, which was later extended to the application of full-fledged music composition [86, 90]. Concerning text generation, we point out the work of Liu et al. [112] pertaining to the creation of a category-aware model employing a genetic algorithm to evolve a population of GANs.

Albeit not many, there are a few noteworthy works in the scope of this section that are concerned with the generation of artefacts not pertaining to imagery or music. It is the case with the generation of shapes in video games by Liapis et al. [111], as well as other work exploring the coevolution of controllers [30, 200]. Furthermore, Dubbin and Stanley [59] generated dance moves using the CPPN-NEAT model.

As shown in Table 10.4, genetic algorithms account for the vast majority of approaches in this section (31 out of 32 papers), with only one using genetic programming to design both the structure and connectivity of the convolutional neural networks [192]. As a matter of fact, the standard NEAT framework representation is used in almost half of the models analysed herein and it is procedural by definition (in total there are 33 procedural, 2 parametric and no descriptive representations). Lastly, regarding the fitness assignment, we conclude that most approaches are dynamic, especially the ones in an adversarial setting.

9 Open Problems and Challenges

Throughout the history of evolutionary generative models, several problems have been tackled progressively. It is the case with the fitness assignment of early evolutionary computation as discussed in Sect. 10.4. The first explorations of the generative capabilities of evolutionary computation models required the user to select the outputs for the evolutionary generative model. For many years, and especially in the fields of evolutionary art and music generation, user fitness assignment was the de facto technique. However, researchers noted that having the user in the loop with the system presented an enormous bottleneck that had to be addressed for the field to progress, and thus some papers started tackling this issue [12]. Nowadays, even though interactive systems are still very prolific, the most standard way of dealing with fitness assignment is to have some automatic algorithm, be it analytical, statistical (such as Markov models and classifiers) or indirectly informed in some other way. This section addresses some of these problems along with the strides that have recently been taken in addressing them.

Throughout this survey, we have identified the following challenges when dealing with evolutionary generative models.

9.1 How to Represent the Space of Solutions Generated by the Model?

GANs and other generative machine learning approaches are characterised by having a latent space, from which one selects a sample from that space, inputs it into the model and generates the output solution. Moreover, GANs allow for the conditional exploration of solutions through algebraic vector operations such as addition, subtraction and interpolation, to mention a few, which will, in turn, ease the exploration of such solutions. However, most evolutionary generative models, especially those that apply evolutionary computation directly (see Sect. 10.5), do not aggregate solutions in such an organised latent space. Therefore, a way to better represent the space of generated solutions is oftentimes needed. In this scope, several viable alternatives are considered throughout the literature. Although not latent spaces, spatial representations of the solutions have been constructed by resorting to an archive that stores solutions according to their novelty [42, 144] or by employing manifold learning algorithms to aggregate solutions on a space with lower dimensionality with techniques such as t-distributed stochastic neighbour embedding (t-SNE) [48]. Another trending solution is to build a latent space by combining a variational auto-encoder into the model by training an additional network that learns to encode an image into a smaller latent vector. These are called the GAN-variational auto-encoder approaches [206]. At the moment, this challenge differentiates evolutionary computation without machine learning and evolutionary computation aided by machine learning approaches from machine learning aided by evolutionary computation and machine learning evolved by evolutionary computation because by using machine learning as a generative approach, typically the machine learning model will have the latent space with a solution space to be drawn. In the case of evolutionary computation-based approaches, we have solutions being evolved but they are independent solutions, unstructured, and not contained in a defined space, which can be seen as a drawback.

9.2 How to Navigate the Generated Space of Solutions?

This challenge concerns the exploration of all feasible solutions that can be generated by the model. Within the models that organise their solutions in a latent space, an effective way of exploring these solutions is to perform latent variable evolution directly over this space. Because a latent vector is the genotype representation of latent variable evolution, the types of algorithms used are mostly genetic algorithms [64, 73], and evolutionary strategies in some cases [22] (see Sect. 10.7 for a thorough analysis). For models where a latent space does not exist, the exploration of the solutions space cannot be done directly, and thus other methods need to be employed to aid solution exploration during evolution, e.g. exploration with variation operators or novelty search [110, 209]. Such methods typically involve hand-crafting specific variation operators to fence off the bias of the models. This can be seen, for instance, in the work by Li et al. [107] with the proposal of a new crossover operator for the E-GAN model and in the implementation of a mutation operator with features tailored to ease the process of interactive 3D design by Byrne et al. [28].

9.3 How to Evaluate the Generative Capabilities of a Model?

In this challenge, we are concerned with evaluating the generative capabilities of the model by itself without comparing it to similar generative models, whether evolutionary or not. This problem is often difficult to tackle as it depends a lot on the desired output of the model and the process itself. For instance, when it comes to music composition, Ng and Jordan [155] used genetic programming to examine evolving compositional processes instead of a musical piece. As further explained by Loughran and O’Neill [118], it is imperative to look beyond the generated output of a model when investigating the ability of the system to compose. This way, as analysed by Li et al. [106], there are two ways to evaluate a music composer: by evaluating the music it composes or by evaluating the composition process. This idea can be extended outside the specific domain of music generation. Given the difficulties in evaluating isolated solutions, we might see more works where models evaluate the generative process itself and not only the output solutions.

9.4 How to Compare Evolutionary and Non-evolutionary Models?

Following the challenge of isolated evaluation, we turn our attention to the comparison of performance between different generative models, namely, between generative models with and without evolutionary computation. It is demonstrated that in adversarial generative models, the loss function is not stable enough to be taken as a comparative measure of generative performance [46]. In fact, it is shown that even simple metrics such as the inception score, which applies the Inception Model [194] to every generated image in order to get the conditional probability distribution of classes in the original data [173], fails to capture similarities between generated images adequately. For this reason, the Frechét inception distance metric [83] has been proposed. Although the Frechét inception distance was specifically developed to evaluate the performance of GANs, it can be applied to any image generation model. The Frechét inception distance directly compares the statistics of real samples with the statistics of generated ones, allowing for the capture of features used for a collection of both real and generated images gathered from training the network on image classification tasks. One major shortcoming of the inception score and Frechét inception distance scores is that they fail to determine whether the proposed model is overfitting or underfitting [20]. This problem pertains not only to evolutionary generative models but also to standard generative models. To overcome this hurdle, metrics such as the Reconstruction Error [212] and the Kernel Inception Distance were introduced [20]. Even though applying these metrics in evolutionary generative models still seems very rare, we posit that using these more advanced metrics will become more widespread in the study of evolutionary generative models as research in the field keeps taking off.

9.5 How to Improve the Computational Efficiency and Scalability of Evolutionary Generative Models?

Generally speaking, when it comes to improving computational efficiency, there are two ways a model can be optimised: through macro-optimisations, i.e. algorithmic improvements in our case, or through micro-optimisations, which in computer science typically means small statement-level optimisations to the source code. However, by micro-optimisations, we refer to the adaption of the model to run in different hardware according to the demands of operations in its algorithm. As an example, the work by Turhan and Bilge [206] tackles the problem of efficiently generating high-resolution versions of handwritten digits, which is a slow task in conventional GAN-variational auto-encoder models. The proposed model converges more efficiently and thus faster than the baseline models by allowing the feature-wise reconstruction of a GAN-variational auto-encoder hybrid model instead of the standard pixel-wise reconstruction approach. This is a typical example of an algorithmic improvement. Another noteworthy example in the category of computational performance is TensorGP [9]. TensorGP is a genetic programming engine that not only performs algorithmic optimisations by changing the internal representation of symbolic expressions from the traditional tree graph to a directed acyclic graph, thus avoiding code re-execution but also has the option to evaluate expressions in dedicated hardware if available. This way, while most of the evolutionary stages such as variation operators, selection and other control mechanisms run on the central processing unit (CPU), the evaluation can take place in graphics processing units (GPUs) or tensor processing units (TPUs), which are capable of vectorising the pipeline of genetic programming operations, something that is still not implemented in many off-the-shelf genetic programming engines [8]. With the continued slowing down of Moore’s law and GPU computing still on the rise, it makes sense to expect an increase in the number of frameworks using parallel capable hardware not only in evolutionary generative models but in generative models.

Coupled with the problem of computational efficiency, there is the problem of complexity associated with the generative process. The early works by Sims [185] in image generation using symbolic expressions are straightforward examples of low computational cost in terms of the generative process. As a counter-example, the work by Correia et al. [45] in enhancing image pipelines is fairly heavier than most generative processes because the evolutionary generative model encompasses more transformation steps. In this work, genetic programming was used to evolve image filters that, aside from requiring the traditional evaluation of the individual tree graphs, are then applied to a predefined image, which is, in turn, evaluated using an external classifier for fitness assignment. Overall, it seems that as time passes by, generative models tend to complexify, demanding more computational resources and thus becoming harder to scale and deal with.

9.6 How to Improve the Interaction of Evolutionary Generative Models?

Another aspect that we believe to be extremely relevant is the way we interact with evolutionary generative models. Not all generative models are easy to use for the average user, offering a poor user experience which will probably limit their reach to a broad audience. Therefore, it is essential to explore effective ways to control and visualise the different elements of an evolutionary generative model. This goes beyond facilitating a given action. Interaction can help to understand a certain functionality or aspect of the models that are often very technical.

For instance, although interfaces for large language models such as ChatGPTFootnote 1 have been trending as of late, the generative pre-trained transformer (GPT) model from which ChatGPT was built is not widely known despite having been created earlier [25]. The main reason for this lies in the fact that ChatGPT has a simple, natural and inviting user interface. Likewise, Midjourney,Footnote 2 a system which offers a model for the generation of images from natural language descriptions in the form of text prompts, is another example of an increasingly popular artificial intelligence service. Both examples have the benefit of being easily accessible: ChatGPT through the OpenAI website and Midjourney through a server hosted on Discord, a popular chat application.

Historically, interfaces in evolutionary generative models were created to aid the user to interact with them, control the evolutionary process, preview individuals and assign fitness [124, 181]. Other systems allow the designing of fitness functions [130, 144], a process that poses challenges in terms of interpretability and explainability to its design. There are other systems that allow for inspecting and navigation of the outputs [80, 143, 182], which also poses challenges from the implementation and user interaction perspectives.

However, the majority of evolutionary generative models possess neither this level of user-friendliness nor accessibility. In fact, most evolutionary generative model frameworks use their own interfaces, with different layouts and sometimes unintuitive functionality, lacking both generalisation and scalability.

We believe that the interaction with these models can be planned not only with human users in mind but also with other computational systems with which they can be integrated, following the example of nowadays machine learning-based systems. This can be done by developing APIs that allow for the development of new systems while promoting their modularity. The solution of using APIs solves the decoupling of the generative model, however, does not solve the part of adding a standardised experience for the interaction with the generative model which can be seen as an open problem.

9.7 How to Increase the Availability of Evolutionary Generative Model Systems?

As a final open challenge, we address the availability of the implemented systems to the general public. Within the surveyed literature, the number of papers with publicly source-code repositories is relatively scarce. Truth be told, this is not a problem specific to the field of generative models or even to artificial intelligence but one that is common in most research fields. Albeit a big challenge, websites such as huggingface.com and paperswithcode.com are a good step forward as they help disseminate and organise machine learning and artificial intelligence papers alike, along with their respective code. Moreover, we believe that having open access to new methods and their respective implementations is especially important to advance the field of evolutionary generative models.

Having identified the core challenges and future trends in evolutionary generative models, it is clear that some are inherently more difficult to tackle than others. Challenges such as the availability of implemented evolutionary generative model systems are more of an ongoing problem that, arguably, is not expected to be solved so soon as it is pervasive in many other fields. Similarly, despite recent breakthroughs, technical challenges such as the construction of organised solution spaces or the evaluation of the generative capabilities of a model are non-obvious challenges that, due to being very dependent on the type of evolutionary generative model, will likely keep posing challenges as the topic of evolutionary generative models evolves, and new approaches are proposed. In contrast, challenges such as bottleneck mitigation and overhead reduction can arguably be easier to implement in the sense that they typically consist of general techniques that can be applied to a wide range of models. For instance, with the continuous rise in parallel computing, techniques as well as research and development investments in hardware with vectorisation technologies are increasing. Nonetheless, instead of dwelling on the many hurdles surrounding this topic, it is also worth praising the number of positive efforts being put in place to help advance and develop research on evolutionary generative models.

10 Conclusions

In this chapter, we presented a comprehensive survey on evolutionary generative models, analysing their main properties, along with a taxonomy to categorise these models. The lack of a well-established categorisation of these models in the literature led us to identify four main categories of evolutionary generative models: evolutionary computation without machine learning, evolutionary computation aided by machine learning, machine learning aided by evolutionary computation and machine learning evolved by evolutionary computation.

The importance of this contribution is twofold. First, it aims to standardise or at least provide a basis for further classifications of evolutionary generative models while easing the process of analysing the existing body of research. Second, we collected and categorised some of the most prominent literature concerning evolutionary generative models. We are aware that the work in evolutionary generative models is extensive, and therefore it is not feasible to carry out an exhaustive survey of all existing approaches.

Early instances of evolutionary generative models started as plain evolutionary computation approaches. With the growth in the research and adoption of machine learning seen within the past decades, the evolutionary computation approaches evolved into models incorporating machine learning techniques. For this reason, we have performed an in-depth listing of applications, surveys, position papers and other research pertaining to and spanning from the beginnings of the use of evolutionary computation as a generative model to the state-of-the-art approaches. Overall, more than 200 publications ranging from plain evolutionary computation systems to evolutionary generative machine learning for the generation of images, music, text, as well as other domains were explored and classified. Each publication was classified according to the main properties that we have identified, namely, application domain, evolutionary algorithm, population, representation and fitness.

Furthermore, the extent of the present study made it possible to identify open problems and challenges for evolutionary generative models. Namely, challenges such as the need for adequate metrics for evaluating and validating generative performance, the aggregation of the generated outputs in a single, organised solution space as well as the search for well-suited operators capable of efficiently navigating through the associated solutions space is at the forefront of current open problems. It is worth noting that the growth of evolutionary generative models is tied to the maturity of the field of evolutionary machine learning, which, as an emerging field, is primarily propelled by the transparency and availability of the implemented systems to the general public. This challenge is in no way less relevant than the above-mentioned ones.

With the emergence of more promising methods such as GANs and other deep learning techniques, the use of evolutionary computation in the context of generative models might seem lost at first sight. However, based on recent trends demonstrated throughout this chapter, the future of the topic seems to point towards the symbiosis between both fields, where the power of machine learning interplays with the representation flexibility of evolutionary computation to improve the generative performance and computational efficiency of current and future evolutionary generative models.

References

Al-Dujaili, A., Schmiedlechner, T., O’Reilly, U.M.: Towards distributed coevolutionary GANs. In: Association for the Advancement of Artificial Intelligence (AAAI) 2018 Fall Symposium (2018)

Al-Najjar, K., Hämäläinen, M.: A master-apprentice approach to automatic creation of culturally satirical movie titles. In: Krahmer, E., Gatt, A., Goudbeek, M. (eds.) Proceedings of the 11th International Conference on Natural Language Generation, Tilburg University, The Netherlands, pp. 274–283. Association for Computational Linguistics (2018). Accessed from 5–8 Nov 2018

Albarracn-Molina, D.D., Raglio, A., Rivas-Ruiz, F., Vico, F.J.: Using formal grammars as musical genome. Appl. Sci. 11(9) (2021)

Alvarado, F.H.C., Lee, W.H., Huang, Y.H., Chen, Y.S.: Melody similarity and tempo diversity as evolutionary factors for music variations by genetic algorithms. In: Cardoso, F.A., Machado, P., Veale, T., Cunha, J.M. (eds.) Proceedings of the Eleventh International Conference on Computational Creativity, ICCC 2020, Coimbra, Portugal, pp. 251–254. Association for Computational Creativity (ACC) (2020). 7–11 Sept 2020

Angeline, P.J.: Evolving fractal movies. In: Proceedings of the 1st Annual Conference on Genetic Programming, pp. 503–511. MIT Press (1996)