Abstract

Deep learning (DL) has achieved state-of-the-art performance in medical imaging. However, these disease-detection techniques focus only on improving classification or prediction accuracy without quantifying the uncertainty in a decision. Knowing how much confidence to place in a computer-based clinical diagnosis is essential for gaining clinicians' trust in the technology and, in turn, for improving treatment. The COVID-19 pandemic has had a significant impact on many aspects of society, including air pollution. In some areas, lockdowns and reduced economic activity led to temporary reductions in air pollution levels, while in others, increased residential heating and cooking raised indoor air pollution. Detecting COVID-19 in chest X-ray (CXR) images is critical for diagnosis, assessment, and treatment. However, handling diagnostic uncertainty in a report is a difficult yet unavoidable task for radiologists. In this work, we use deep convolutional neural networks (DCNNs) to improve the diagnostic performance of combined human–machine decision-making. This is critical in distinguishing COVID-19 patients from those without the infection, where the cost of an error is very high. Specifically, we use the Inception V3, VGG-16, and VGG-19 architectures.

6.1 Introduction

Deep learning (DL) has made significant advances in medical imaging and diagnostics. In recent years, DL algorithms have been used to build computer vision models that accurately classify and detect diseases such as lung diseases, breast cancer metastasis, skin conditions, and diabetic retinopathy. DL has also been used to enhance medical images, such as X-ray and PET/CT scans, to improve accuracy and detect subtle changes that may indicate disease. It is therefore essential to estimate uncertainty in clinical imaging as additional information that guides predictions and improves the reliability of decision-making. COVID-19, caused by the SARS-CoV-2 virus and informally called the coronavirus, has disrupted much of the world. We know little about the best way to reach a diagnosis, which precautions are advisable, or which treatments work best in specific situations. We all need to join forces to bring this virus under control.

By all, we mean humans and machines. Technology is critical to help us through this, and artificial intelligence (AI) has a particular role to play. The current framework for identifying COVID-19-positive patients (for example, limited image data sources with expert-labeled datasets) is inadequate, and manual detection is time-consuming. With the rising number of cases worldwide, DL-based solutions are expected to be developed soon and integrated into clinical practice to provide cost-effective, accurate, and easily performed automated detection of COVID-19 in support of screening. However, despite their strong performance, DL models often make overconfident predictions (Ghoshal and Tucker 2020).

Our goal is not to achieve a state-of-the-art approach but rather to use DCNNs to improve the diagnostic performance of combined human–machine decision-making. This is important in distinguishing COVID-19 patients from those without the disease, where the cost of an error is very high. Hence, it is vital to estimate the uncertainty in a model's predictions to avoid COVID-19 misdiagnoses. In this paper, we examine how DCNNs can estimate uncertainty in DL to improve the diagnostic performance of human–machine decisions, using publicly available COVID-19 CXR datasets, and show that the estimated uncertainty in a prediction correlates strongly with classification accuracy, thus enabling the identification of false predictions or unknown cases (Ghoshal and Tucker 2020). Early detection and accurate diagnosis of diseases are critical for successful treatment and management. Medical imaging, such as X-rays, CT scans, and MRI, can provide valuable information about a patient's health and can often detect signs of disease at an early stage. A potential concern with using deep learning algorithms for medical imaging and diagnostics is overfitting, a common problem in machine learning where a model becomes too closely tied to its training data and consequently performs poorly on unseen data. In medical imaging, overfitting can occur when a deep learning model is trained on a limited dataset: the model may perform well on the training data but poorly on new, unseen images. This can lead to incorrect diagnoses and false-positive results, which can have serious consequences for patient care. The quality and quantity of data should also be improved to make the results clinically meaningful. Hence, more datasets combining clinically collected CXR and CT images should be used. DL has become the standard approach in combating COVID-19.
However, the imaging data in COVID-19 applications may have incomplete, ambiguous, and inaccurate labels, which makes it challenging to train accurate segmentation and diagnostic networks. In such cases, weakly supervised DL methods could be used. Labeling medical imaging data for deep learning algorithms can be time-consuming and resource-intensive, because each image must be manually annotated by a human expert who identifies and labels the relevant features and structures within it. Multi-center studies of COVID-19 should also be promoted. Follow-up is essential for diagnosing COVID-19 and assessing treatment. Although relevant studies are still limited, we believe that methods from other related studies could be borrowed: (1) AI-based methods for the prognosis of other diseases could inform the follow-up study of COVID-19 (Xu et al. 2019; Kourou et al. 2015; Kim et al. 2019; Hao et al. 2019); (2) follow-up inside and outside hospitals could be combined into long-term tracking of COVID-19 patients; and (3) multidisciplinary integration, i.e., medical imaging (Wang et al. 2018), natural language processing, and oncology and fusion (Yuan et al. 2019), could benefit the overall follow-up design for COVID-19 (Shi et al. 2020). Detecting COVID-19 in chest X-rays (CXR) is challenging for several reasons. One of the main difficulties is the low contrast between the lungs and the surrounding tissue; the ribs can also obscure some of the relevant features. Limited data is a further obstacle, particularly for DL-based approaches, since DL is notoriously data-hungry. Addressing data imbalance is a key challenge in developing deep learning algorithms for COVID-19 detection using CXR.
Data imbalance refers to a situation where the number of samples in one class (e.g., COVID-19 positive cases) is much smaller than in the other class (e.g., COVID-19 negative cases). This can negatively impact the performance of deep learning algorithms, as they may be biased towards the majority class. To address this issue, researchers have proposed several strategies for improving the performance of CXR-based COVID-19 screening models. These include oversampling the minority class, undersampling the majority class, and using synthetic data augmentation techniques to artificially increase the size of the minority class (Pang et al. 2019; Zhang et al. 2020).
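Of the strategies listed above, random oversampling is the simplest to illustrate. The sketch below duplicates randomly chosen minority-class samples (with replacement) until every class is as large as the biggest one; the file names and labels are hypothetical. It should be applied to the training split only, after the train/test split, so that duplicated images cannot leak into evaluation.

```python
import random
from collections import Counter
from typing import List, Sequence, Tuple

def random_oversample(items: Sequence[str], labels: Sequence[str],
                      seed: int = 0) -> Tuple[List[str], List[str]]:
    """Duplicate randomly chosen samples of each smaller class until every
    class has as many samples as the largest class."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_items, out_labels = list(items), list(labels)  # keep all originals first
    for cls, n in counts.items():
        pool = [x for x, y in zip(items, labels) if y == cls]
        extra = [rng.choice(pool) for _ in range(target - n)]  # sampling with replacement
        out_items.extend(extra)
        out_labels.extend([cls] * len(extra))
    return out_items, out_labels

# Hypothetical training split: 2 COVID-19 images vs. 6 non-COVID images.
files = ["c1.png", "c2.png"] + [f"n{i}.png" for i in range(6)]
labels = ["covid"] * 2 + ["other"] * 6
new_files, new_labels = random_oversample(files, labels)
print(Counter(new_labels))
```

Oversampling only repeats existing images, so it is often combined with augmentation (flips, small rotations) to reduce the risk of overfitting to the duplicated minority cases.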

Several studies have demonstrated the use of imaging techniques, such as X-rays or CT, to detect characteristic manifestations of the novel coronavirus (Xu et al. 2020; Ng et al. 2020). Recent studies suggest using chest radiography in pandemic zones for the rapid screening of COVID-19 (Ai et al. 2020). Screening of radiography images can thus serve as an alternative to the PCR method, which it occasionally exceeds in sensitivity (Fang et al. 2020). The main bottleneck for radiologists analyzing radiography images is the visual detection of subtle findings. This calls for intelligent methods that can automatically extract useful insights from CXR images that are characteristic of COVID-19. Several studies have shown the ability of neural networks (NNs), especially convolutional neural networks (CNNs), to accurately detect the presence of COVID-19 in CT scans (Gozes et al. 2020). However, the datasets are often not publicly available, which limits access for the broader research community and hinders further development of classification methods on standardized benchmarks. We use state-of-the-art methods toward these goals and show that human intervention in training can improve model performance and reduce training time (Ghoshal and Tucker 2020).
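The uncertainty estimation motivated in this introduction can be made concrete with predictive entropy. The sketch below assumes, in the spirit of Ghoshal and Tucker (2020), that several stochastic forward passes (for example, with dropout left active at test time) have already produced class-probability vectors for one image; the helper names and probability values are hypothetical, and this is not the pipeline used in this chapter.

```python
import math
from typing import List

def mean_probs(samples: List[List[float]]) -> List[float]:
    """Average the class-probability vectors from T stochastic forward passes."""
    t = len(samples)
    return [sum(s[k] for s in samples) / t for k in range(len(samples[0]))]

def predictive_entropy(samples: List[List[float]]) -> float:
    """Entropy (in nats) of the averaged predictive distribution.

    Higher values mean the passes disagree or are individually unsure,
    which can be used to flag a case for radiologist review.
    """
    p = mean_probs(samples)
    return -sum(pk * math.log(pk) for pk in p if pk > 0.0)

# Hypothetical outputs of 3 passes over a 2-class problem (COVID-19, other).
confident = [[0.97, 0.03], [0.95, 0.05], [0.96, 0.04]]
uncertain = [[0.80, 0.20], [0.30, 0.70], [0.55, 0.45]]

print(round(predictive_entropy(confident), 3))
print(round(predictive_entropy(uncertain), 3))
```

A threshold on this value, chosen on validation data, could route high-entropy cases to a human reader, which is one way to realize the combined human–machine workflow described above.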

6.2 Related Works

In 2020, Shi et al. (2020) published a paper discussing the use of artificial intelligence (AI) in the context of COVID-19. The authors highlighted the potential of AI to provide safe, accurate, and efficient imaging methods for COVID-19 diagnosis and treatment. They reviewed intelligent imaging methods, clinical analysis, and pioneering research in detail, covering the entire pipeline of AI-empowered imaging applications for COVID-19, and used two imaging modalities, X-ray and CT, to show the effectiveness of AI-enabled clinical imaging for COVID-19. In Ghoshal and Tucker (2020), a Bayesian DL classifier was trained using transfer learning on COVID-19 CXR images to estimate model uncertainty. Their study showed a strong correlation between model uncertainty and prediction accuracy. The aim of Farooq and Hafeez (2020) is to build open-source and open-access datasets and present an accurate CNN framework for distinguishing COVID-19 cases from other pneumonia cases. That work uses state-of-the-art training techniques, including progressive resizing, cyclical learning rate finding, and discriminative learning rates, to train fast and accurate residual NNs. Zhang et al. (2020) aimed to develop a new deep anomaly detection model for rapid and reliable screening of COVID-19. Deep learning models for anomaly detection have gained popularity in recent years because they can detect patterns and deviations in large amounts of data, which makes them well suited to medical image analysis. They collected 100 CXR images of 70 patients confirmed with COVID-19 from a GitHub repository to evaluate model performance. Nguyen (n.d.) reviewed the various AI techniques used in different applications in the fight against the COVID-19 pandemic and highlighted the key roles of current AI research. Gozes et al. (2020) developed AI-based automated CT image analysis tools for the detection, quantification, and tracking of COVID-19 and showed that they can distinguish coronavirus patients from those who do not have the disease. Zhu et al. (2020) introduced VHP (Virus-Host Prediction) to predict potential virus hosts using DL. According to their findings, 2019-nCoV has infectivity close to that of other human coronaviruses, namely the severe acute respiratory syndrome coronavirus (SARS-CoV), Bat SARS-like coronaviruses, and the Middle East respiratory syndrome coronavirus (MERS-CoV). Considering the radiographic changes of COVID-19 in CT images, Wang et al. (2020) set out to develop a DL method that could extract COVID-19's graphical features to provide a clinical diagnosis ahead of the pathogenic test, thereby saving critical time for disease control. Sujath et al. (2020) applied logistic regression (LR), multilayer perceptron (MLP), and vector autoregression (VAR) models to the COVID-19 Kaggle data to forecast the epidemiological trend and growth rate of COVID-19 cases in India. The authors aimed to use machine learning to better understand the spread of the COVID-19 pandemic in India and to provide insights into its future evolution. To detect COVID-19 from 3D CT images, a weakly supervised DL-based software system was developed (Zheng et al. 2020). The lung region of each patient was segmented using a pre-trained UNet, and the segmented 3D lung region was fed into a 3D deep neural network (DNN) to predict the probability of COVID-19.

In 2019, Alonso et al. presented COVID-MobileXpert, a lightweight deep neural network (DNN)-based application for point-of-care COVID-19 screening using chest X-ray (CXR) images. The authors aimed to develop a portable, user-friendly screening tool that can be easily deployed in a variety of settings, including remote and resource-limited areas. They designed and implemented a novel three-player knowledge transfer and distillation (KTD) framework consisting of a pre-trained attending physician (AP) network that extracts CXR imaging features from a large-scale lung disease CXR dataset, a fine-tuned resident fellow (RF) network that learns the essential CXR imaging features to discriminate COVID-19 from pneumonia and normal cases using a small number of COVID-19 cases, and a trained lightweight medical student (MS) network that performs on-device COVID-19 screening.
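The KTD loss itself is not described here. As a hedged illustration of the general teacher–student idea behind it, the sketch below implements the standard soft-target (Hinton-style) distillation loss; the temperature, weighting, and three-class setup (COVID-19, pneumonia, normal) are assumptions and not details of COVID-MobileXpert.

```python
import math
from typing import List

def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    top = max(scaled)                         # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      label: int, temperature: float = 4.0, alpha: float = 0.5) -> float:
    """alpha * cross-entropy on the hard label
    + (1 - alpha) * T^2 * KL(teacher_soft || student_soft).
    The T^2 factor keeps soft-target gradients on a comparable scale as T changes."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    hard_ce = -math.log(softmax(student_logits)[label])
    return alpha * hard_ce + (1.0 - alpha) * temperature ** 2 * kl

# Hypothetical logits for the classes (COVID-19, pneumonia, normal).
teacher = [4.0, 1.0, 0.5]   # e.g. a larger, already-trained network
student = [2.0, 1.5, 0.2]   # e.g. a lightweight on-device network being trained
print(round(distillation_loss(student, teacher, label=0), 4))
```

Minimizing this loss pushes the small network to match both the true label and the teacher's relative confidence across classes, which is what lets a compact model inherit knowledge from a larger one.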

6.3 Experiment Setup

Analyzing biomedical images and extracting results about disease prevalence or severity is challenging. Combining DL with traditional methods improves classification and supports earlier decision-making. When this process is paired with visual representation, it becomes accessible to researchers from all disciplines (Godec et al. 2019). Conventional machine learning was used in the early stages of this research, but DL, a branch of machine learning, has gained popularity because of how effectively it extracts useful information from data. NNs are at the core of DL; their structure is loosely modeled on the interconnected neurons of the human brain, and, by analogy with message passing in the nervous system, they process the input data efficiently. Deep convolutional neural networks (DCNNs) are a type of artificial neural network commonly used in medical imaging tasks, including COVID-19 detection. DCNNs are designed to automatically learn and extract features from large amounts of image data. They consist of multiple layers of convolution, activation, and pooling operations and can detect patterns and correlations in images.
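The three operations named above (convolution, activation, pooling) can be sketched in plain Python on a toy single-channel image. This is a teaching sketch with a hand-set kernel; in a DCNN the kernel values are learned, and real frameworks operate on batched, multi-channel tensors.

```python
from typing import List

Matrix = List[List[float]]

def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """2D cross-correlation (what DL frameworks call 'convolution'), stride 1, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(fmap: Matrix) -> Matrix:
    """Element-wise ReLU activation: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool2x2(fmap: Matrix) -> Matrix:
    """2x2 max pooling with stride 2 (a trailing odd row/column is dropped)."""
    return [[max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
             for j in range(0, len(fmap[0]) - 1, 2)]
            for i in range(0, len(fmap) - 1, 2)]

# Toy 5x5 "image" containing a vertical edge, and a hand-set vertical-edge kernel.
image = [[0, 0, 1, 1, 1]] * 5
kernel = [[-1, 0, 1]] * 3
features = max_pool2x2(relu(conv2d_valid(image, kernel)))
print(features)
```

The kernel responds strongly where intensity rises from left to right, and pooling keeps the strongest response while shrinking the map; stacking many such learned filters is what lets a DCNN build up from edges to lesion-like patterns.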

6.3.1 Dataset Description

The Kaggle COVID-19 CXR and CT scan dataset, compiled from leading web sources, treats image quality as its primary consideration, which supports better findings. The sources used for compilation are radiopaedia.org, Figure1.com, and the Italian Society of Medical and Interventional Radiology. Each image comes with attributes such as the patient identification number, the offset of infection (in days), sex, age, finding, survival, the view of the X-ray or CT scan (based on patient positioning during imaging), the date and location of acquisition, and clinical notes. Our work concentrated on the images and their findings. Based on the type of pneumonia, images are broadly classified into ARDS, Pneumocystis spp., Streptococcus spp., SARSr-CoV-1 (SARS), and SARSr-CoV-2 (COVID-19). The collection also includes images from previous years, and the COVID-19 images come from scans of critically ill patients. ARDS stands for acute respiratory distress syndrome, a lung condition that affects all age groups. SARS stands for severe acute respiratory syndrome and CoV for coronavirus; SARSr-CoV-1 and SARSr-CoV-2 denote two distinct SARS-related coronaviruses. SARS-CoV-2 is responsible for the current outbreak, popularly called COVID-19 (Cohen et al. 2020; Bachir 2020; Abidin et al. 2018).
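Because the work uses only the images and their findings, a natural first step is to count the images per finding, which also exposes the class imbalance discussed in the introduction. The sketch below parses a small, made-up excerpt of a CSV metadata file; the rows are illustrative only, and the column names `filename`, `finding`, `modality`, and `view` are assumptions that should be checked against the actual file.

```python
import csv
import io
from collections import Counter

# Hypothetical rows in the style of the dataset's metadata file (not real records).
METADATA = """filename,finding,modality,view
img001.jpg,COVID-19,X-ray,PA
img002.jpg,ARDS,X-ray,AP
img003.jpg,COVID-19,CT,Axial
img004.jpg,SARS,X-ray,PA
img005.jpg,Streptococcus,X-ray,AP Supine
"""

def class_counts(text: str, modality: str = "") -> Counter:
    """Count images per finding, optionally restricted to one imaging modality."""
    rows = csv.DictReader(io.StringIO(text))
    return Counter(r["finding"] for r in rows if not modality or r["modality"] == modality)

print(class_counts(METADATA))
print(class_counts(METADATA, modality="X-ray"))
```

In practice the text would be read from the downloaded metadata file instead of an inline string.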

6.3.2 DCNN

In our work, we used Inception V3, VGG-16, and VGG-19 as image embedders. Inception V3 was developed by Google, and the VGG networks by Oxford's Visual Geometry Group. Transfer learning is the key idea behind the DCNN methods considered in our work: an NN model trained on one problem is reused for another, related problem. As a result, training is faster, and accuracy can improve with the number of models and iterations (Lagunas and Garces 2018).
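The transfer-learning idea (reuse a network trained on one task as a fixed feature extractor and train only a small classifier on top) can be sketched without any DL framework. In the sketch below, `frozen_embedder` is a hypothetical stand-in for a pretrained DCNN such as Inception V3, and for brevity the trainable head is a logistic regression rather than the two-hidden-layer network described in Sect. 6.3.3; the toy "images", labels, learning rate, and epoch count are all assumptions.

```python
import math
from typing import Callable, List, Sequence, Tuple

def frozen_embedder(image: Sequence[float]) -> List[float]:
    """Hypothetical stand-in for a pretrained network with frozen weights.
    Here it is a fixed feature map: mean intensity and intensity range."""
    return [sum(image) / len(image), max(image) - min(image)]

def train_head(embed: Callable, images, labels, lr: float = 0.5,
               epochs: int = 200) -> Tuple[List[float], float]:
    """Train only a logistic-regression head on top of the frozen embeddings."""
    feats = [embed(x) for x in images]  # computed once: the embedder never changes
    w, b = [0.0] * len(feats[0]), 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = 1.0 / (1.0 + math.exp(-(sum(wi * fi for wi, fi in zip(w, f)) + b)))
            g = p - y  # gradient of the log-loss with respect to the logit
            w = [wi - lr * g * fi for wi, fi in zip(w, f)]
            b -= lr * g
    return w, b

def predict(embed: Callable, w: List[float], b: float, image: Sequence[float]) -> int:
    logit = sum(wi * fi for wi, fi in zip(w, embed(image))) + b
    return 1 if logit > 0 else 0

# Toy "images" (flattened pixel intensities): bright = class 1, dark = class 0.
images = [[0.9, 0.8, 0.85], [0.7, 0.9, 0.8], [0.1, 0.2, 0.15], [0.2, 0.1, 0.05]]
labels = [1, 1, 0, 0]
w, b = train_head(frozen_embedder, images, labels)
print([predict(frozen_embedder, w, b, x) for x in images])
```

Because only the head is trained, the costly embedding step runs once per image, which is why transfer learning with frozen embedders trains quickly even on modest hardware.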

6.3.2.1 Inception V3

Inception V3 was designed for image recognition to address the limitations of Inception V1 and V2. It is a 48-layer-deep neural network whose configuration is fairly complex. It contains many convolution and max-pooling layers together with fully connected layers. Its building blocks include convolutional filters, average pooling, max pooling, concatenation, dropout, softmax, and fully connected layers (Esteva et al. 2017); these appear as small blocks in the Inception V3 part of Fig. 6.1.

6.3.2.2 VGG-16

VGG-16 is a 16-layer DCNN architecture. In ILSVRC 2014 (the ImageNet challenge), the VGG architecture won first place in localization and second place in classification. It is still regarded as one of the best vision model architectures for image processing. Rather than relying on many hyperparameters, it consistently uses 3 × 3 convolution filters with stride 1 and same padding, and 2 × 2 max-pooling layers with stride 2. The entire architecture repeats this arrangement of convolution and max-pooling layers. It ends with three fully connected layers, the last of which is followed by a softmax for the output. The number 16 in VGG-16 refers to its 16 weight layers (13 convolutional and 3 fully connected). The network is large, with about 138 million parameters (Kolar et al. 2018).
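The "16 weight layers" and the roughly 138 million parameters can be checked with a short calculation. The sketch assumes the standard ImageNet setting (224 × 224 RGB input, 4096-unit hidden fully connected layers, 1000 output classes); these sizes are assumptions of the sketch, not of the chapter's transfer-learning setup.

```python
from typing import List, Union

# VGG-16 convolutional configuration: numbers are output channels of 3x3 conv
# layers (stride 1, same padding); "M" is a 2x2 max pool with stride 2.
VGG16: List[Union[int, str]] = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
                                512, 512, 512, "M", 512, 512, 512, "M"]

def vgg_param_count(cfg, in_channels: int = 3, image_size: int = 224,
                    num_classes: int = 1000) -> int:
    """Count weights + biases of a VGG-style network with three fully connected layers."""
    total, channels, size = 0, in_channels, image_size
    for layer in cfg:
        if layer == "M":
            size //= 2                                 # pooling halves height and width
        else:
            total += 3 * 3 * channels * layer + layer  # 3x3 kernels plus one bias per filter
            channels = layer
    fc_sizes = [channels * size * size, 4096, 4096, num_classes]
    for n_in, n_out in zip(fc_sizes, fc_sizes[1:]):
        total += n_in * n_out + n_out                  # dense weights plus biases
    return total

print(vgg_param_count(VGG16))
```

Most of the parameters sit in the first fully connected layer (7 × 7 × 512 inputs to 4096 units). The same function applies to VGG-19 if blocks three to five get four convolutions each instead of three, giving 16 convolutional and 3 fully connected weight layers.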

6.3.2.3 VGG-19

VGG-19 is a 19-layer variant of the VGG DCNN architecture and requires about 19.6 billion FLOPs per forward pass. The name VGG refers to the Visual Geometry Group at Oxford. VGG succeeded AlexNet and is widely used as a standard classification architecture for many datasets; it has also proved useful in facial recognition. The architecture consists of 16 convolutional layers, five max-pooling layers, three fully connected layers, and one softmax layer (Toğaçar et al. 2019).

6.3.3 Parameters Information

The workflow begins with dataset preparation and the application of the embedders, followed by stratified 10-fold cross-validation. The NN has two hidden layers of 100 neurons each. The solvers Adam, SGD, and L-BFGS-B are each combined with the activation functions tanh, identity, logistic, and ReLU, with training run for 200 iterations and a regularization value of 0.01 in a replicable training environment.
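Stratified 10-fold cross-validation keeps the class proportions of the whole dataset in every fold, which matters here because the pneumonia classes are imbalanced. A minimal sketch of the fold assignment, assuming string class labels; the fixed random seed plays the role of the replicable training environment, and the class counts in the example are made up. Each fold serves once as the test set while the other nine form the training set.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence

def stratified_kfold(labels: Sequence[str], k: int = 10, seed: int = 0) -> List[List[int]]:
    """Split sample indices into k folds that each keep roughly the overall class
    proportions: shuffle each class, then deal its indices round-robin."""
    rng = random.Random(seed)  # fixed seed -> replicable splits
    by_class: Dict[str, List[int]] = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    folds: List[List[int]] = [[] for _ in range(k)]
    offset = 0
    for y in sorted(by_class):
        idxs = by_class[y]
        rng.shuffle(idxs)
        for pos, idx in enumerate(idxs):
            folds[(offset + pos) % k].append(idx)
        offset += len(idxs)  # continue dealing where the previous class stopped
    return folds

# Hypothetical label distribution: 50 COVID-19, 20 SARS, 10 ARDS images.
labels = ["COVID-19"] * 50 + ["SARS"] * 20 + ["ARDS"] * 10
folds = stratified_kfold(labels, k=10)
for test_idx in folds[:2]:
    train_idx = [i for f in folds if f is not test_idx for i in f]
    print(len(train_idx), len(test_idx))
```

Continuing the round-robin across classes keeps every fold within one sample of the same size.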

6.3.3.1 Adam

Adam is an adaptive learning-rate optimization algorithm designed for training deep neural networks. It was proposed in 2014 and presented at the ICLR conference in 2015. The algorithm computes an individual adaptive learning rate for each parameter from estimates of the first and second moments of the gradients.
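The moment estimates that give Adam its per-parameter learning rates can be written in a few lines. The sketch below applies the standard Adam update, with the commonly used defaults β1 = 0.9, β2 = 0.999, and ε = 1e-8, to a one-dimensional quadratic; the learning rate, step count, and test function are illustrative assumptions.

```python
import math
from typing import Callable

def adam_minimize(grad: Callable[[float], float], x0: float, lr: float = 0.1,
                  beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-8,
                  steps: int = 1000) -> float:
    """Minimize a 1-D function with Adam, given its gradient."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g          # first-moment (mean) estimate
        v = beta2 * v + (1 - beta2) * g * g      # second-moment (uncentred variance) estimate
        m_hat = m / (1 - beta1 ** t)             # bias corrections for the zero initialisation
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)  # per-parameter adaptive step
    return x

# f(x) = (x - 3)^2 has gradient 2(x - 3) and its minimum at x = 3.
print(adam_minimize(lambda x: 2 * (x - 3), x0=0.0))
```

Dividing by the square root of the second-moment estimate makes the step size roughly independent of the gradient's scale, which is why Adam usually needs little learning-rate tuning.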

6.3.3.2 Stochastic Gradient Descent (SGD)

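SGD is usually much faster than batch gradient descent because it performs a parameter update for every training example:

$$\theta = \theta - \eta \cdot \nabla_\theta J\big(\theta; a^{(i)}, b^{(i)}\big)$$

where θ denotes the model parameters, η the learning rate, J the loss function, and {a(i), b(i)} a single training example (input and target).

These frequent updates have high variance, which makes the loss function fluctuate heavily. The fluctuation can help SGD jump to new and potentially better local minima (Ruder 2016).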
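The per-example update rule can be sketched on a toy linear-regression problem. Here J is the squared error of a linear model, and the data, learning rate η (`lr`), and epoch count are assumptions for illustration. With noiseless data SGD settles on the true coefficients; with noisy data the iterates keep fluctuating, as described above.

```python
import random
from typing import List, Tuple

def sgd_linear_regression(data: List[Tuple[float, float]], lr: float = 0.05,
                          epochs: int = 100, seed: int = 0) -> Tuple[float, float]:
    """Fit b ≈ w * a + c with one parameter update per training example (a(i), b(i))."""
    rng = random.Random(seed)
    w, c = 0.0, 0.0
    examples = list(data)
    for _ in range(epochs):
        rng.shuffle(examples)                 # visit examples in a new random order each epoch
        for a, b in examples:
            err = (w * a + c) - b             # derivative of J = 0.5 * err^2 w.r.t. the prediction
            w -= lr * err * a                 # theta <- theta - eta * grad J(theta; a(i), b(i))
            c -= lr * err
    return w, c

# Noiseless toy data generated from b = 2a + 1.
data = [(a / 10, 2 * (a / 10) + 1) for a in range(-10, 11)]
print(sgd_linear_regression(data))
```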

6.3.3.3 Limited-Memory Broyden–Fletcher–Goldfarb–Shanno Algorithm with Bounds (L-BFGS-B)

L-BFGS-B minimizes a nonlinear function f of N variables subject to simple bounds:

$$\min_{x \in \mathbb{R}^N} f(x) \quad \text{subject to} \quad l \le x \le u$$

The vectors l and u hold the lower and upper bounds on the variables. The algorithm is suitable for large problems. Its memory requirement is roughly (12 + 2m)N, where m is the number of BFGS updates kept in memory and N is the dimension of the model space. In practice, m = 5 is a typical choice. The number of multiplications per iteration ranges from 4mN + N when no bounds are applied to m²N when all variables are bounded. Each variable can be given its own bounds, or none. The suffix B denotes this bound-constrained extension of L-BFGS (Mokhtari and Ribeiro 2015).
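As a worked example, substituting the typical choice m = 5 into the counts quoted above gives:

```latex
\begin{aligned}
\text{memory} &\approx (12 + 2m)N = (12 + 2 \cdot 5)N = 22N,\\
\text{multiplications per iteration} &\in [\,4mN + N,\; m^2 N\,] = [\,21N,\; 25N\,] \quad (m = 5).
\end{aligned}
```

For an illustrative model with N = 10^6 parameters (an assumed size), 22N is 2.2 × 10^7 stored numbers, about 176 MB in double precision (8 bytes each), which is why the limited-memory variant remains practical where full BFGS, with its N × N matrix, would not.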

6.3.3.4 tanh

The hyperbolic tangent, tanh(x) = (e^x − e^(−x))/(e^x + e^(−x)), generally performs better than the logistic sigmoid function. It is useful for classification between two classes and is used in feed-forward networks. Its range is (−1, 1).

6.3.3.5 Identity

The identity function, f(x) = x, returns the same value that was passed as its argument. It is also called an identity relation, identity map, or identity transformation. Its range is (−∞, +∞).

6.3.3.6 Logistic

The logistic (sigmoid) function, σ(x) = 1/(1 + e^(−x)), is used where the output is a probability, so its values lie between 0 and 1. The function is monotonic and differentiable.

6.3.3.7 ReLU

The rectified linear unit, f(x) = max(0, x), is a fast-learning activation function that has become one of the most successful and widely used. It offers better performance and generalization in DL than the sigmoid and tanh activation functions. ReLU preserves the properties of linear models by being nearly linear (piecewise linear), which makes it easy to optimize. Its range is [0, ∞) (Cao et al. 2018).
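The four activation functions compared in the experiments can be written directly from their definitions. The sketch below does so for scalar inputs; a real network applies them element-wise to tensors.

```python
import math

def identity(x: float) -> float:
    return x                                  # range (-inf, inf)

def logistic(x: float) -> float:
    """Numerically stable sigmoid 1 / (1 + e^{-x}); range (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)                           # avoids overflow of e^{-x} for large negative x
    return z / (1.0 + z)

def tanh(x: float) -> float:
    return math.tanh(x)                       # range (-1, 1), zero-centred

def relu(x: float) -> float:
    return max(0.0, x)                        # range [0, inf)

for f in (identity, logistic, tanh, relu):
    print(f.__name__, [round(f(x), 3) for x in (-2.0, 0.0, 2.0)])
```

Note that tanh is a rescaled logistic function, tanh(x) = 2σ(2x) − 1, which is why it behaves like a zero-centred sigmoid.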

6.3.4 Evaluation and Classification

The evaluation metrics obtained by applying these parameters to the DCNN pipelines are the area under the ROC curve (AUC), classification accuracy (CA), F1 score, precision, and the confusion matrix. Scatter plots derived from the confusion matrices, together with the regression line, support our results (Nwankpa et al. 2018).
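Apart from AUC, which requires predicted scores rather than only predicted labels, the metrics listed above can be derived from a confusion matrix. The sketch below computes classification accuracy and support-weighted precision, recall, and F1 for a multiclass matrix; weighting by class support is an assumption (macro averaging is an equally common choice), and the example matrix is made up rather than taken from this chapter's results.

```python
from typing import Dict, List

def metrics_from_confusion(cm: List[List[int]]) -> Dict[str, float]:
    """cm[i][j] = number of samples of true class i predicted as class j.
    Returns classification accuracy plus support-weighted precision, recall, and F1."""
    n = len(cm)
    total = sum(map(sum, cm))
    correct = sum(cm[i][i] for i in range(n))
    prec_w = rec_w = f1_w = 0.0
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))   # column sum
        actual_k = sum(cm[k])                            # row sum (class support)
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        f1 = 2 * p * r / (p + r) if p + r else 0.0
        weight = actual_k / total
        prec_w += weight * p
        rec_w += weight * r
        f1_w += weight * f1
    return {"CA": correct / total, "precision": prec_w, "recall": rec_w, "F1": f1_w}

cm = [[8, 2],
      [1, 9]]   # hypothetical 2-class matrix
print(metrics_from_confusion(cm))
```

With support weighting, recall always equals classification accuracy, so precision and F1 are the informative extra numbers.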

Figures 6.2, 6.3, and 6.4 show the values for Inception V3 with each solver and activation function. In Fig. 6.2, Adam yields a high AUC with all activation functions, and CA and F1 are perfect for Adam with ReLU and logistic activation. Precision is comparatively high for all combinations. In Fig. 6.3, SGD gives good AUC, CA, F1, and precision with ReLU activation. In Fig. 6.4, L-BFGS-B gives the best AUC, CA, F1, and precision with all activation functions. Inception V3 therefore performs best with the L-BFGS-B solver across all activation functions.

Figures 6.5, 6.6, and 6.7 show the values for VGG-16 with each solver and activation function. In Fig. 6.5, AUC and precision are high for Adam with all activation functions, and CA and F1 are perfect for Adam with ReLU. In Fig. 6.6, SGD shows promising AUC and precision with identity activation, and good CA and F1 with ReLU. In Fig. 6.7, L-BFGS-B shows excellent AUC, CA, F1, and precision with all activation functions. VGG-16 also performs best with the L-BFGS-B solver across all activation functions.

Figures 6.8, 6.9, and 6.10 show the values for VGG-19 with each solver and activation function. In Fig. 6.8, all evaluation metrics show good results for Adam with all activation functions, and CA and F1 are perfect for Adam with ReLU. In Fig. 6.9, SGD shows promising AUC and precision with identity activation, and decent CA and F1 with ReLU. In Fig. 6.10, L-BFGS-B shows excellent AUC, CA, F1, and precision, with ReLU and tanh performing better than the identity and logistic activation functions. Overall, VGG-19 also performs best with the L-BFGS-B solver across all activation functions.

Figures 6.11 and 6.12 show the confusion matrix and scatter plot, respectively, for Inception V3. In Fig. 6.11, 127 of 149 records are correctly classified. Two Pneumocystis spp. records, five SARS records, and four Streptococcus spp. records are misclassified as COVID-19, while three COVID-19 records are misclassified as SARS and four as Streptococcus spp. The distribution of these confusion-matrix entries is shown in the scatter plot in Fig. 6.12.

Figures 6.13 and 6.14 show the confusion matrix and scatter plot, respectively, for VGG-16. In Fig. 6.13, 134 of 149 records are correctly classified. Four COVID-19 records are misclassified as ARDS, five as SARS, and three as Streptococcus spp.; two Pneumocystis spp. records are misclassified as ARDS; and five SARS records and two Streptococcus spp. records are misclassified as COVID-19. The distribution of these confusion-matrix entries is shown in the scatter plot in Fig. 6.14.

Figures 6.15 and 6.16 show the confusion matrix and scatter plot, respectively, for VGG-19. In Fig. 6.15, 130 of 149 records are correctly classified. Two records of Pneumocystis spp. and SARS and three records of Streptococcus spp. are misclassified as COVID-19, while five COVID-19 records are misclassified as SARS and two as Streptococcus spp. The distribution of these confusion-matrix entries is shown in the scatter plot in Fig. 6.16.

6.4 Conclusion and Future Work

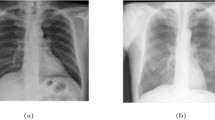

CNNs are an active research area used in many applications to support better decision-making. In the present work, we considered both CXR and CT scans for transfer learning. In the CT modality, axial and coronal views are considered; in the X-ray modality, posteroanterior (PA), anteroposterior (AP), and AP supine (lying down) views are considered. Incorporating similar images and applying the diagnosis will support stronger inferences. With the available images, the mapping to COVID-19 and the accuracy achieved still require fine-tuning. Our system ranks the models by accuracy as Inception V3, then VGG-19, followed by VGG-16.

Among all the DCNNs considered in our system, Inception V3 classified the largest number of records correctly. Additional clinical parameters or features are required to build an even better classifier. Other symptoms, such as the patient's temperature and body aches, and clinical information about the patient's immune system could further increase classification accuracy. At present, identifying infected people quickly is challenging because many infections are asymptomatic. Because the coronavirus mutates, it is very difficult for practitioners to track it at an early stage. Close collaboration among experts from virology, medical practice, radiology, and information and communication technology will help make the system faster and enable treatment at the earliest opportunity. Combining artificial intelligence with bio-inspired computing systems that learn from similar prior cases, with input from experts, could yield the best diagnostic system.

References

Abidin AZ, Deng B, D’Souza AM, Nagarajan MB, Coan P, Wismüller A (2018) Deep transfer learning for characterizing chondrocyte patterns in phase-contrast X-ray computed tomography images of the human patellar cartilage. Comput Biol Med 95:24–33

Ai T, Yang Z, Hou H, Zhan C, Chen C, Lv W, Xia L (2020) Correlation of chest CT and RT-PCR testing in coronavirus disease 2019 (COVID-19) in China: a report of 1014 cases. Radiology 200642:E32

Alonso RJ, Bagland V, Lods B (2019) Convergence to self-similarity for ballistic annihilation dynamics. J Math Pures Appl

Bachir (2020) COVID-19 chest X-ray. Retrieved April 14, 2020, from https://www.kaggle.com/bachrr/covid-chest-xray/kernels

Cao J, Pang Y, Li X, Liang J (2018) Randomly translational activation inspired by the input distributions of ReLU. Neurocomputing 275:859–868

Cohen JP, Morrison P, Dao L (2020) COVID-19 image data collection. arXiv preprint arXiv:2003.11597

Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, Thrun S (2017) Dermatologist-level classification of skin cancer with deep neural networks. Nature 542(7639):115–118

Fang Y, Zhang H, Xie J, Lin M, Ying L, Pang P, Ji W (2020) Sensitivity of chest CT for COVID-19: comparison to RT-PCR. Radiology 200432:E115

Farooq M, Hafeez A (2020) COVID-ResNet: a deep learning framework for screening of COVID19 from radiographs. arXiv preprint arXiv:2003.14395

Ghoshal B, Tucker A (2020) Estimating uncertainty and interpretability in deep learning for coronavirus (COVID-19) detection. arXiv preprint arXiv:2003.10769

Godec P, Pančur M, Ilenič N, Čopar A, Stražar M, Erjavec A, Žagar L (2019) Democratized image analytics by visual programming through the integration of deep models and small-scale machine learning. Nat Commun 10(1):1–7

Gozes O, Frid-Adar M, Greenspan H, Browning PD, Zhang H, Ji W, Siegel E (2020) Rapid AI development cycle for the coronavirus (covid-19) pandemic: initial results for automated detection & patient monitoring using deep learning CT image analysis. arXiv preprint arXiv:2003.05037

Hao J, Kim Y, Mallavarapu T, Oh JH, Kang M (2019) Interpretable deep neural network for cancer survival analysis by integrating genomic and clinical data. BMC Med Genet 12(10):1–13

Kim DW, Lee S, Kwon S, Nam W, Cha IH, Kim HJ (2019) Deep learning-based survival prediction of oral cancer patients. Sci Rep 9(1):1–10

Kolar Z, Chen H, Luo X (2018) Transfer learning and deep convolutional neural networks for safety guardrail detection in 2D images. Autom Constr 89:58–70

Kourou K, Exarchos TP, Exarchos KP, Karamouzis MV, Fotiadis DI (2015) Machine learning applications in cancer prognosis and prediction. Comput Struct Biotechnol J 13:8–17

Lagunas M, Garces E (2018) Transfer learning for illustration classification. arXiv preprint arXiv:1806.02682

Mokhtari A, Ribeiro A (2015) Global convergence of online limited memory BFGS. J Mach Learn Res 16(1):3151–3181

Ng MY, Lee EY, Yang J, Yang F, Li X, Wang H, Hui CKM (2020) Imaging profile of the COVID-19 infection: radiologic findings and literature review. Radiol Cardiothorac Imaging 2(1):e200034

Nguyen TT (n.d.) Artificial intelligence in the battle against coronavirus (COVID-19): a survey and future research directions

Nwankpa C, Ijomah W, Gachagan A, Marshall S (2018) Activation functions: comparison of trends in practice and research for deep learning. arXiv preprint arXiv:1811.03378

Pang G, Shen C, van den Hengel A (2019) Deep anomaly detection with deviation networks. In: Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pp 353–362

Ruder S (2016) An overview of gradient descent optimization algorithms. arXiv preprint arXiv:1609.04747

Shi F, Wang J, Shi J, Wu Z, Wang Q, Tang Z, Shen D (2020) Review of artificial intelligence techniques in imaging data acquisition, segmentation, and diagnosis for COVID-19. arXiv preprint arXiv:2004.02731

Sujath R, Chatterjee JM, Hassanien AE (2020) A machine learning forecasting model for COVID-19 pandemic in India. Stoch Env Res Risk A 34:959–972

Toğaçar M, Ergen B, Cömert Z (2019) A deep feature learning model for pneumonia detection applying a combination of mRMR feature selection and machine learning models. IRBM

Wang X, Peng Y, Lu L, Lu Z, Summers RM (2018) Tienet: text-image embedding network for common thorax disease classification and reporting in chest X-rays. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 9049–9058

Wang S, Kang B, Ma J, Zeng X, Xiao M, Guo J, Xu B (2020) A deep learning algorithm using CT images to screen for Corona Virus Disease (COVID-19). medRxiv

Xu Y, Hosny A, Zeleznik R, Parmar C, Coroller T, Franco I, Aerts HJ (2019) Deep learning predicts lung cancer treatment response from serial medical imaging. Clin Cancer Res 25(11):3266–3275

Xu X, Jiang X, Ma C, Du P, Li X, Lv S, Li Y (2020) Deep learning system to screen coronavirus disease 2019 pneumonia. arXiv preprint arXiv:2002.09334

Yuan J, Liao H, Luo R, Luo J (2019) Automatic radiology report generation based on multi-view image fusion and medical concept enrichment. In: International conference on medical image computing and computer-assisted intervention. Springer, Cham, pp 721–729

Zhang J, Xie Y, Li Y, Shen C, Xia Y (2020) COVID-19 screening on chest X-ray images using deep learning-based anomaly detection. arXiv preprint arXiv:2003.12338

Zheng C, Deng X, Fu Q, Zhou Q, Feng J, Ma H, Wang X (2020) Deep learning-based detection for COVID-19 from chest CT using weak label. medRxiv

Zhu H, Guo Q, Li M, Wang C, Fang Z, Wang P, Xiao Y (2020) Host and infectivity prediction of Wuhan 2019 novel coronavirus using a deep learning algorithm. BioRxiv

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter

Chatterjee, J.M., Sujatha, R. (2023). Detection of COVID-19 Cases from X-Ray and CT Images Using Transfer Learning and Deep Convolution Neural Networks. In: Moy Chatterjee, J., Saxena, S.K. (eds) Artificial Intelligence in Medical Virology. Medical Virology: From Pathogenesis to Disease Control. Springer, Singapore. https://doi.org/10.1007/978-981-99-0369-6_6

DOI: https://doi.org/10.1007/978-981-99-0369-6_6

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-0368-9

Online ISBN: 978-981-99-0369-6

eBook Packages: Biomedical and Life Sciences, Biomedical and Life Sciences (R0)