Abstract

Medical image fusion aims to combine several images from different sources into a single representation that can be used for better diagnosis. Most state-of-the-art image fusion algorithms use the discrete wavelet transform (DWT), but DWT-based fusion introduces a slight blurring. In this work, fusion is carried out in the undecimated discrete wavelet transform (UDWT) domain, which greatly reduces this blurring. UDWT has no decimation step, so wavelet coefficients are generated at every location, allowing dominant features to be detected more reliably. It is a non-orthogonal multi-resolution decomposition. The maximum selection rule is used to merge the low-frequency sub-bands in the UDWT domain, whereas a modified spatial frequency rule is used to combine the high-frequency sub-bands. The proposed approach is implemented in Python, and its advantages are demonstrated and justified. Several quality measures, including peak signal-to-noise ratio (PSNR), entropy, correlation coefficient, global consistency error (GCE) and mean square error (MSE), are used to evaluate the fused images.

Keywords

- Image fusion

- Undecimated discrete wavelet transform

- Correlation coefficient

- Global consistency error

- Mean square error

- Peak signal-to-noise ratio

- Entropy

1 Introduction

1.1 Image Fusion

Image fusion is a technique for integrating several input images into a single output image that describes the scene better than any of the input images alone. It is used to improve image quality while reducing uncertainty and redundancy, which makes medical conditions easier to diagnose. In computer vision, multi-sensor image fusion is the process of combining the significant features of multiple images into a single image. Compared with the input images, the fused image contains more information. The main objective of image fusion is to produce an image that is more informative for human perception and better suited to further vision processing.

1.2 Fusion Categories

Different fusion categories are shown in Fig. 1. Depending on the type of images to be merged, fusion may be divided into the following categories.

- (a) Multi-view fusion

- (b) Multi-temporal fusion

- (c) Multi-focus fusion

- (d) Multi-modality fusion

Multi-view Fusion: The source images come from the same sensing modality and are acquired at the same time, but under different conditions or from different viewpoints. The aim of fusion in this category is to gather the complementary information from all views into the merged image.

Multi-temporal Fusion: The source images come from the same modality but are acquired at different times. The main objective is to detect how the scene changes over time, for example by subtracting two or more images.

Multi-focus Fusion: The source images show the same scene captured with different focus settings. The aim is to produce a fused image that integrates as much in-focus detail as possible from the inputs while preserving the overall content of the scene.

Multi-modality Fusion: Multi-modality fusion integrates images acquired with different imaging techniques to produce a composite image with a wealth of information that can be applied in medical settings.

1.3 Medical Images Used for Fusion

1.3.1 Magnetic Resonance Imaging (MRI)

MRI is a non-invasive imaging technology used to examine the structure and function of the body without exposing patients to potentially harmful ionizing radiation. It is typically used to detect and diagnose disease, to monitor its progression and to characterize abnormal developments. MRI uses strong magnets to produce a powerful magnetic field that forces the protons in the body to align with it. When radiofrequency (RF) energy is pulsed through the patient, the protons are stimulated and spin out of equilibrium, straining against the pull of the magnetic field. When the RF field is switched off, the MRI sensors detect the energy released as the protons realign with the magnetic field. The amount of energy released and the time the protons take to realign vary with the environment and the chemical composition of the molecules. Based on these magnetic characteristics, physicians can distinguish between different tissue types.

Soft tissues and other non-bony parts of the body are particularly well suited to MRI scanning. Unlike computed tomography (CT), MRI does not use X-rays, which produce harmful ionizing radiation. MRI shows the muscles, ligaments and tendons, as well as the brain, spinal cord and nerves, far more clearly than conventional CT and X-ray imaging. It is commonly used to image knee and shoulder disorders. In the brain, MRI can distinguish between grey and white matter and can also identify aneurysms and other abnormalities. When repeated imaging is needed for diagnosis or treatment, especially of the brain, MRI is the imaging modality of choice because it does not use X-rays or other forms of ionizing radiation.

1.3.2 Single Photon Emission Computed Tomography (SPECT)

SPECT is a type of nuclear imaging that uses radioactive tracers (for example fluorine-18, technetium-99m, iodine-123, xenon-133 and thallium-201) and special gamma cameras to examine how well internal organs are functioning. The procedure produces 3D images of the inside of the body from various angles and provides information on how different areas of the body function, so that problems can be readily identified.

A SPECT scan is a nuclear imaging test that combines computed tomography (CT) with a radioactive tracer. The tracer allows doctors to see how blood flows to tissues and organs. It is injected into the bloodstream before the scan. The radio-labelled tracer emits gamma rays that the scanner can detect. The computer collects the gamma-ray information and displays it as CT cross-sections, which are reassembled to produce a three-dimensional representation of the patient's brain. The scanner detects the radioisotopes as they pass through the patient's body. The choice of tracer depends on what the doctor wants to measure. In a tumour examination, for example, radio-labelled glucose (fluorodeoxyglucose, FDG) is used to assess how the tumour metabolizes it (Figs. 2 and 3).

2 Literature Review

Image fusion, which combines several medical imaging modalities, including X-rays, magnetic resonance imaging (MRI), positron emission tomography (PET), magnetic resonance angiography (MRA), computed tomography (CT) and single photon emission computed tomography (SPECT), is becoming increasingly important in modern medicine and health care. Over the last ten years, research on medical data processing and analysis has grown rapidly. An MR image offers information on both healthy and diseased soft tissue, whereas a SPECT image provides information on blood flow to tissues and organs but cannot identify biological changes. Combining SPECT and MRI therefore provides a wealth of information that is useful for diagnosis. The development of new image processing algorithms has given substantial impetus to techniques for extracting detailed information from images. The general goal of computer-assisted diagnostic systems is to allow early detection, illness monitoring and improved treatment. Medical images from several modalities provide more data, which can be combined for improved analysis. The fusion of multimodal medical images creates a single composite image that can be relied on for better analysis and diagnosis.

In medical image processing, the most important research issue is to extract the maximum information by fusing multimodal images. The development of new algorithms in signal and image processing has provided a major impetus for extracting detailed information from images [1]. Pixel averaging [2], gradient pyramid [3], Laplacian pyramid [4], contrast pyramid [5], ratio-of-low-pass pyramid [6], morphological pyramid [7], ripplet transform [8] and the DWT method [9,10,11] are some of the existing methods. Fusing medical images in various combinations supports medical diagnosis and examination. There has been tremendous progress in deep learning, artificial intelligence and bio-inspired optimization techniques, and effective use of these techniques can further improve the efficiency of image fusion algorithms [12]. A thorough survey of medical image fusion techniques is given in [13]. Wavelet transforms such as the discrete wavelet transform (DWT) and the double-density discrete wavelet transform (DDDWT) are compared in [14]. The time-varying characteristics of a 1D signal can be extracted by the wavelet transform [15].

Pixel-by-pixel averaging of two images is the simplest fusion method, but it has drawbacks such as reduced contrast. Pyramid-based decomposition techniques do not account for spatial orientation selectivity, which causes blocking effects and unattractive edges in the fused image. The other family of multi-resolution fusion techniques is wavelet based and uses the discrete wavelet transform (DWT), which preserves the different frequency information in a stable form and allows good localization in both the time and frequency domains. The main disadvantage of DWT is that it lacks shift invariance: a slight shift of the input image results in a significant change in its wavelet coefficients. In medical image analysis it is important to know and preserve the exact location of this information, which is achieved by the undecimated discrete wavelet transform [16].
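The lack of shift invariance can be illustrated with a small sketch. It uses one level of a Haar detail filter, chosen here only for brevity and not necessarily the wavelet used in this work; the function names and the circular boundary handling are illustrative assumptions.

```python
import math

def haar_detail_decimated(signal):
    """One-level Haar detail coefficients with decimation (every second position)."""
    return [(signal[i] - signal[i + 1]) / math.sqrt(2)
            for i in range(0, len(signal) - 1, 2)]

def haar_detail_undecimated(signal):
    """One-level Haar detail coefficients at every position (circular boundary)."""
    n = len(signal)
    return [(signal[i] - signal[(i + 1) % n]) / math.sqrt(2) for i in range(n)]

def energy(coeffs):
    """Sum of squared coefficients."""
    return sum(c * c for c in coeffs)

step = [0, 0, 0, 0, 1, 1, 1, 1]
shifted = step[-1:] + step[:-1]  # the same signal, circularly shifted by one sample

# Decimated: the detail energy depends on where the edge falls relative to the grid.
print(energy(haar_detail_decimated(step)), energy(haar_detail_decimated(shifted)))
# Undecimated: the coefficients merely shift, so their energy is unchanged.
print(energy(haar_detail_undecimated(step)), energy(haar_detail_undecimated(shifted)))
```

With decimation, the edge at an even position produces no detail response at all, while the shifted copy does; without decimation, both signals yield the same set of coefficients up to a shift.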

3 Proposed Method

The proposed technique fuses MRI and SPECT images to produce a fused image that carries more information than either modality alone. Image fusion integrates several input images into a single representation that describes the scene better than any of the input images could on its own; it improves image quality while reducing uncertainty and redundancy, which makes medical conditions easier to diagnose. This section introduces a novel method for combining the low-frequency sub-bands (LFSs) and high-frequency sub-bands (HFSs) of the source images into a single medical image. The main goal of this research is to fuse medical images by taking the features of the images into account.

3.1 Undecimated Discrete Wavelet Transform

The discrete wavelet transform (DWT), known as Mallat's method [17], is based on an orthogonal decomposition of the image onto a wavelet basis, which avoids redundancy of information in the pyramid at each level of resolution. In contrast, the undecimated discrete wavelet transform (UDWT) avoids image decimation and is used in image processing applications including texture classification [18], denoising [19,20,21], pattern recognition and fusion. The UDWT can be discretized using the “à trous” (with holes) algorithm [22].

Some interesting properties of UDWT are:

- The evolution of the wavelet decomposition can be tracked from level to level.

- A separate wavelet coefficient plane is generated at each level of decomposition.

- Wavelet coefficients are generated at every location, allowing more accurate detection of dominant features.

- It is simple to implement.

The “à trous” wavelet transform is a non-orthogonal multi-resolution decomposition that divides the information into low-frequency components (approximation coefficients) and high-frequency components (detail coefficients). In this separation, a low-pass filter \(h(n)\) associated with the scale function is used to generate the following series of scale-based signal approximations:

\[ a_j(k) = \sum_n h(n)\, a_{j-1}\left(k + n\,2^{j-1}\right), \quad j = 1, \ldots, N \tag{1} \]

where j is the scale index, N is the number of scales and \(a_0(k)\) is the original discrete signal \(s(k)\).

The wavelet coefficients are produced by applying the same filtering process with a high-pass filter \(g(n)\) associated with the wavelet function:

\[ w_j(k) = \sum_n g(n)\, a_{j-1}\left(k + n\,2^{j-1}\right) \tag{2} \]

Perfect reconstruction of the data requires two dual filters, \(h_r(n)\) and \(g_r(n)\), which must satisfy the quadrature mirror filter condition:

\[ \sum_n \left[ h_r(n)\, h(l - n) + g_r(n)\, g(l - n) \right] = \delta(l) \tag{3} \]

where \(\delta (l)\) is the Dirac function. Taking the filters \(h_r(n)\) and \(g_r(n)\) equal to the Dirac function \((h_r(n) = g_r(n) = \delta (n))\), \(g(n)\) is deduced from (3) as

\[ g(n) = \delta(n) - h(n) \tag{4} \]

As a result, the wavelet coefficients are obtained simply as the difference between two consecutive approximations:

\[ w_j(k) = a_{j-1}(k) - a_j(k) \tag{5} \]

For an image, this method generates the sequence of approximations via successive convolutions with a filter derived from an auxiliary function called the scaling function:

\[ A_1 = F(A_0), \quad A_2 = F(A_1), \quad \ldots, \quad A_N = F(A_{N-1}) \tag{6} \]

where F represents the scale function. It is common practice to characterize the scale function using a B3 cubic spline, which leads to a convolution with the \(5 \times 5\) mask [20]

\[ \frac{1}{256} \begin{pmatrix} 1 & 4 & 6 & 4 & 1 \\ 4 & 16 & 24 & 16 & 4 \\ 6 & 24 & 36 & 24 & 6 \\ 4 & 16 & 24 & 16 & 4 \\ 1 & 4 & 6 & 4 & 1 \end{pmatrix} \tag{7} \]

As previously stated, the wavelet planes are computed as the difference between two consecutive approximations \(A_{j - 1}\) and \(A_j\):

\[ w_j = A_{j-1} - A_j, \quad j = 1, \ldots, N \tag{8} \]

where \(A_0 = I\) is the original image. The reconstruction formula is

\[ I = \sum_{j=1}^{N} w_j + A_N \tag{9} \]

3.2 Low-Frequency Sub-bands Fusing Using Maximum Selection Rule

The LFS coefficients are fused using the maximum selection rule. Under this rule, the coefficient with the highest absolute value is chosen as the fused coefficient, as shown in Eq. (10):

\[ LFS_F^{i} = \begin{cases} LFS_X^{i}, & \left| LFS_X^{i} \right| \ge \left| LFS_Y^{i} \right| \\ LFS_Y^{i}, & \text{otherwise} \end{cases} \tag{10} \]

where X and Y are the two input images and F denotes the fused coefficient image. \(LFS_F\) is the fused LFS image, and \(LFS_X^{i}\) and \(LFS_Y^{i}\) are the low-frequency sub-bands of the ith region of the LFS image for X and Y, respectively.
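A minimal sketch of the maximum selection rule, applied coefficient by coefficient to two sub-bands stored as nested lists, is given below. The function name and the choice to resolve ties in favour of X are assumptions made for illustration.

```python
def fuse_low_frequency(lfs_x, lfs_y):
    """Keep, at each position, the coefficient with the larger absolute value."""
    return [
        [a if abs(a) >= abs(b) else b for a, b in zip(row_x, row_y)]
        for row_x, row_y in zip(lfs_x, lfs_y)
    ]

lfs_x = [[1, -5], [2, 0]]
lfs_y = [[-3, 4], [2, 1]]
fused_lfs = fuse_low_frequency(lfs_x, lfs_y)
```

Note that the sign of the selected coefficient is preserved; only the comparison uses absolute values.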

3.3 High-Frequency Sub-bands Fusing Using Modified Spatial Frequency

The Eskicioglu et al. [23] suggested spatial frequency (SF) is determined using row and column frequency. The greater the SF [24, 25], the more detailed distinctions and textural changes are seen, reflecting the overall activity level of a picture. The suggested image fusion approach makes use of a modified version of SF. Three frequencies make up modified spatial frequency (MSF): diagonal frequency (DF), column frequency (CF) and row frequency (RF). The MSF is specified for a m × n image F as

where

where

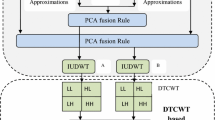
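One common way of combining the three terms, assumed here for illustration, is \(MSF = \sqrt{RF^2 + CF^2 + DF^2}\), where RF and CF are the root-mean-square differences between horizontally and vertically adjacent pixels and DF is the sum of the root-mean-square differences along the two diagonals. The sketch below follows that assumption; the normalization by the number of pixel pairs and the function names are likewise illustrative choices.

```python
import math

def _rms(diffs):
    """Root mean square of a sequence of differences (0.0 if it is empty)."""
    diffs = list(diffs)
    return math.sqrt(sum(d * d for d in diffs) / len(diffs)) if diffs else 0.0

def modified_spatial_frequency(block):
    """MSF of a 2-D block given as a list of equal-length rows."""
    m, n = len(block), len(block[0])
    rf = _rms(block[i][j] - block[i][j - 1] for i in range(m) for j in range(1, n))
    cf = _rms(block[i][j] - block[i - 1][j] for i in range(1, m) for j in range(n))
    main_diag = _rms(block[i][j] - block[i - 1][j - 1]
                     for i in range(1, m) for j in range(1, n))
    anti_diag = _rms(block[i - 1][j] - block[i][j - 1]
                     for i in range(1, m) for j in range(1, n))
    df = main_diag + anti_diag
    return math.sqrt(rf ** 2 + cf ** 2 + df ** 2)

flat = [[4, 4], [4, 4]]
checker = [[0, 1], [1, 0]]
```

A flat block has zero MSF, while a block with strong local variation such as `checker` has a clearly positive value, which is what makes MSF usable as an activity measure.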

3.4 Algorithm

The schematic diagram of the proposed medical image fusion method is given in Fig. 4. The fusion process is carried out in the following order:

- The source medical images X and Y are decomposed using UDWT at level 1 to obtain the LFSs and HFSs.

- The LFS coefficients are fused using the maximum selection rule, as explained in Sect. 3.2, to obtain the fused LFS.

- The HFS coefficients of the two source images are segmented into several regions.

- The modified spatial frequency of the corresponding regions of the segmented HFSs of the two input images is calculated as explained in Sect. 3.3.

- The modified spatial frequencies of the corresponding regions of the two input images are compared to determine which source image is used to build the fused HFS image:

\[ HFS_F^{i} = \begin{cases} HFS_X^{i}, & MSF_X^{i} \ge MSF_Y^{i} \\ HFS_Y^{i}, & \text{otherwise} \end{cases} \]

where \(HFS_F\) is the fused HFS image, and \(MSF_X^{i}\) and \(MSF_Y^{i}\) are the modified spatial frequencies of the ith region of the HFS image for X and Y, respectively.

- The final fused medical image is formed by applying the inverse undecimated discrete wavelet transform to the fused LFSs and HFSs.
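The region-wise selection of high-frequency coefficients can be sketched as follows. The text does not specify how the regions are formed, so square blocks of a fixed size are assumed here. The activity measure is passed in as a function so that an MSF implementation can be supplied; the `energy` function below is only a stand-in to keep the example self-contained.

```python
def fuse_high_frequency(hfs_x, hfs_y, block, activity):
    """Copy each block from whichever sub-band has the higher activity there."""
    rows, cols = len(hfs_x), len(hfs_x[0])
    fused = [[0.0] * cols for _ in range(rows)]
    for r0 in range(0, rows, block):
        for c0 in range(0, cols, block):
            r1, c1 = min(r0 + block, rows), min(c0 + block, cols)
            region_x = [row[c0:c1] for row in hfs_x[r0:r1]]
            region_y = [row[c0:c1] for row in hfs_y[r0:r1]]
            source = hfs_x if activity(region_x) >= activity(region_y) else hfs_y
            for r in range(r0, r1):
                fused[r][c0:c1] = source[r][c0:c1]
    return fused

def energy(region):
    """Stand-in activity measure: sum of squared coefficients."""
    return sum(v * v for row in region for v in row)

hfs_x = [[1, 1, 0, 0], [1, 1, 0, 0]]
hfs_y = [[0, 0, 2, 2], [0, 0, 2, 2]]
fused_hfs = fuse_high_frequency(hfs_x, hfs_y, block=2, activity=energy)
```

In this toy input, the left block is taken from X and the right block from Y, since each is the more active source in its region.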

3.5 Evaluation Metrics [26]

PSNR

The peak signal-to-noise ratio (PSNR) [12, 27] is the ratio between the maximum possible power of an image and the power of the degrading noise that affects the quality of its representation. Computing it requires comparing the image with an ideal, clean image of the greatest possible power. PSNR is frequently used to assess the performance of compressors, filters and similar processing stages; the higher the PSNR, the better the compression or filtering strategy. It is defined as

\[ PSNR = 10 \log_{10} \left( \frac{L^2}{\frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \left( O(i,j) - D(i,j) \right)^2} \right) \]

where L is the highest possible intensity level (the lowest intensity level is assumed to be 0), O is the matrix data of the original image and D is the matrix data of the degraded image. M is the number of pixel rows and i is the row index; N is the number of pixel columns and j is the column index.

Mean Square Error (MSE)

Image quality is also measured using the MSE [23]. Quality and the root mean squared error (RMSE), the square root of the MSE, are inversely related: the error decreases as the quality of the fused image increases. The MSE is defined as follows:

\[ MSE = \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \left( O(i,j) - D(i,j) \right)^2 \]

where O is the matrix data of the original image and D is the matrix data of the degraded image. M is the number of pixel rows and i is the row index; N is the number of pixel columns and j is the column index.
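The MSE and PSNR definitions above can be sketched directly for images stored as nested lists. The function names and the default peak value of 255, which corresponds to 8-bit images, are assumptions for illustration.

```python
import math

def mse(original, degraded):
    """Mean square error between two images of the same size."""
    m, n = len(original), len(original[0])
    total = sum((original[i][j] - degraded[i][j]) ** 2
                for i in range(m) for j in range(n))
    return total / (m * n)

def psnr(original, degraded, peak=255):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    err = mse(original, degraded)
    return math.inf if err == 0 else 10 * math.log10(peak ** 2 / err)

reference = [[0, 255], [255, 0]]
noisy = [[0, 250], [255, 5]]
print(mse(reference, noisy), psnr(reference, noisy))
```

The RMSE mentioned in the text is simply `math.sqrt(mse(reference, noisy))`.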

Entropy (EN)

Entropy is a statistical measure [23] of the randomness in an image. It indicates how much information the image contains, so the entropy of the fused image reflects the amount of information it carries. Because it reflects how much the intensity values vary across the image, entropy reveals the image's degree of unpredictability. Entropy is also useful in image coding, since it gives the lower bound on the average code length per pixel that can be achieved without losing image information. It is therefore directly linked to the quantity of data in the image.
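A minimal sketch of this measure, assuming the usual Shannon entropy of the intensity histogram in bits per pixel, is given below; the function name is illustrative.

```python
import math
from collections import Counter

def image_entropy(image):
    """Shannon entropy (bits per pixel) of the intensity histogram."""
    pixels = [v for row in image for v in row]
    total = len(pixels)
    counts = Counter(pixels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

uniform = [[7, 7], [7, 7]]      # a single grey level: no uncertainty
two_level = [[0, 0], [1, 1]]    # two equally likely grey levels
four_level = [[0, 1], [2, 3]]   # four equally likely grey levels
```

The more evenly the pixel values are spread over distinct intensity levels, the higher the entropy.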

Global Consistency Error (GCE) [20]

GCE measures how consistent one segmentation is with another and is used to judge how closely a segmentation algorithm matches human segmentations. It is designed so that, when two segmentations are compared, the error is very small if one is a refinement of the other, that is, if the two are consistent but one has a higher level of detail. The reason is that humans generally do not produce identical segmentations of the same scene. GCE lies in the range [0, 1], with values closer to zero indicating better segmentation.
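As a sketch, GCE can be computed from two label maps using the definition commonly given in the segmentation literature: for each pixel, the local refinement error is the fraction of its region in one segmentation that lies outside its region in the other, and GCE is the smaller of the two directional sums divided by the number of pixels. The function name and the label-map input format are assumptions, and this is not necessarily the exact procedure used in this work.

```python
from collections import Counter

def global_consistency_error(seg_a, seg_b):
    """GCE between two label maps of the same size (nested lists of labels)."""
    a = [v for row in seg_a for v in row]
    b = [v for row in seg_b for v in row]
    n = len(a)
    size_a, size_b = Counter(a), Counter(b)
    joint = Counter(zip(a, b))  # number of pixels sharing each (label_a, label_b) pair
    # All pixels with the same label pair have the same local refinement error.
    err_ab = sum(cnt * (size_a[la] - cnt) / size_a[la] for (la, lb), cnt in joint.items())
    err_ba = sum(cnt * (size_b[lb] - cnt) / size_b[lb] for (la, lb), cnt in joint.items())
    return min(err_ab, err_ba) / n

coarse = [[0, 0], [0, 0]]
refined = [[0, 0], [1, 1]]      # splits the single coarse region in two
crossing = [[0, 1], [0, 1]]     # cuts across the regions of `refined`
```

A refinement yields zero error, whereas segmentations whose regions cut across each other yield a positive error.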

Correlation Coefficient [19, 27, 28]

The correlation coefficient (r) is a summary metric that expresses the degree to which two images are statistically related. Pearson's r ranges from −1 to 1 in general; here it is adjusted to lie between 0 and 1. A value close to 0 indicates little association between the two images, and the closer the value is to 1, the stronger the relationship between them. A correlation coefficient of 0 means no correlation, that is, the images are unrelated; a correlation coefficient of 1 means perfect correlation.
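A minimal sketch of the underlying Pearson correlation between two images is given below. It returns the raw coefficient in the range −1 to 1; how the value is mapped to the range 0 to 1 is not specified in the text, so no such adjustment is applied here. The function name and the convention of returning 0 for constant images are assumptions.

```python
import math

def correlation_coefficient(img_a, img_b):
    """Pearson correlation between two images of the same size."""
    a = [v for row in img_a for v in row]
    b = [v for row in img_b for v in row]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    denom = math.sqrt(var_a * var_b)
    return cov / denom if denom else 0.0  # constant images: treated as uncorrelated

img = [[1, 2], [3, 4]]
brighter = [[2, 4], [6, 8]]     # same structure, scaled intensities
inverted = [[4, 3], [2, 1]]     # reversed intensities
```

Scaling the intensities leaves the coefficient at 1, which shows that the measure responds to structure and not to absolute brightness.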

4 Results and Discussions

Image fusion combines several input images into one output image that represents the scene more accurately than any of the input images alone. By enhancing image quality and lowering uncertainty and redundancy, it makes medical problems easier to identify. The SPECT image in Fig. 5a provides information about how the brain is functioning but no soft tissue information, while the MRI image in Fig. 5b provides clear soft tissue information but no information on bone or brain function. Hence, when the two images are fused, both brain function and soft tissue information can be seen in the fused output image (Fig. 5e).

The maximum selection rule is applied to combine the low-frequency sub-band (LFS) coefficients of the two input images, and the resulting low-frequency sub-band image is shown in Fig. 5c. Modified spatial frequency is used to combine the high-frequency sub-bands (HFSs) of the two input images, giving the image shown in Fig. 5d. The final fused medical image shown in Fig. 5e is produced by applying the inverse undecimated discrete wavelet transform to the fused LFSs and HFSs. The parameters computed for the fused image (Fig. 5e) with respect to the SPECT image (Fig. 5a) and the MRI image (Fig. 5b) are mean square error (MSE), peak signal-to-noise ratio (PSNR), entropy, global consistency error (GCE) and correlation coefficient. These parameter values are presented in Tables 1 and 2, respectively.

5 Conclusions

In this paper, an approach based on UDWT and modified spatial frequency was implemented for fusing medical images. Medical image fusion is significant in many clinical applications because it can offer more accurate information than a single image. Some of the drawbacks of pixel-level fusion techniques, such as fading effects, are eliminated. The study shows how to use UDWT for medical image fusion in three steps. In the first step, UDWT decomposes the medical images to be fused into sub-images. In the second step, the low-frequency band coefficients are fused using the maximum selection rule, while the high-frequency band coefficients are fused using modified spatial frequency. In the last step, the fused coefficients are used to generate the fused image through the inverse UDWT. By merging LFSs and HFSs, the proposed technique can support better diagnostic decisions. The fused image presents details of both soft and hard tissues and stays close to the tissues seen in the MRI and SPECT images. According to the evaluation metrics, the GCE and the MSE are the smallest and the correlation coefficient is the closest to one.

6 Future Scope

Highly rated medical institutes in India, such as AIIMS Delhi and PGIMER Chandigarh, and a number of other private and government medical colleges use expensive medical image fusion tools purchased from abroad. These tools are unaffordable for small clinics and hospitals, particularly in rural regions. There is therefore a critical need for low-cost indigenous equipment in these locations as an alternative to this high-priced equipment. Designing such computer-assisted instruments could make this technology affordable to those in rural regions of the country.

References

Vijaya Kishore V, Kalpana V (2022) Application tool for assisting diagnosis and forecast progression of abnormality and medical condition of patients. Cardiometry J 22

Mitianoudis N, Stathaki T (2007) Pixel-based and Region-based image fusion schemes using ICA bases. Inf Fusion 8(2):131–142

Burt PJ, Kolczynski RJ (1993) Enhanced image capture through fusion. In: IEEE international conference on computer vision, pp 173–182

Burt PJ, Adelson EH (1983) The Laplacian pyramid as a compact image code. IEEE Trans Commun 31(4):532–540

Toet A, Van Ruyven JJ, Valeton JM (1989) Merging thermal and visual images by a contrast pyramid. Opt Eng 28(7):789–792

Toet A (1989) Image fusion by a ratio of low-pass pyramid. Pattern Recogn Lett 9(4):245–253

Toet A (1989) A morphological pyramidal image decomposition. Pattern Recogn Lett 9(4):255–261

Das S, Chowdhury M, Kundu MK (2011) Medical image fusion based on Ripplet transform type-I. Progress Electromagnet Res B 30:355–370

Li H, Manjunath BS, Mitra SK (1995) Multisensor image fusion using the wavelet transform. Graphical Models Image Process 57(3):235–245

Yang Y, Park DS, Huang S, Rao N (2010) Medical image fusion via an effective wavelet-based approach. EURASIP J Adv Signal Process 2010, article ID 579341

Guihong Q, Dali Z, Pingfan Y (2001) Medical image fusion by wavelet transform modulus maxima. Opt Express 9(4):184–190

Tirupal T, Mohan Chandra B, Kumar Srinivas S (2021) Multimodal medical image fusion techniques—a review. Curr Signal Transduction Therapy 16(2)

James AP, Dasarathy BV (2014) Medical image fusion: a survey of the state of the art. Inf Fusion

Leela Rani D, Bharathi M, Padmaja N (2019) Performance comparison of FFT, DCT, DWT and DDDWT-OFDM in Rayleigh channel. International conference on smart systems and inventive technology (ICSSIT 2019), Francis Xavier Engineering College, Tirunelveli, India. ISBN Number: 978-1-7281-2118-5, 27th to 29th Nov 2019

Fowler JE (2005) The redundant discrete wavelet transform and additive noise. IEEE Signal Process Lett 12(9):629–632

Hemachandra S, Jaya Prakash G, Venkatesh G, Moulik G (2020) Real time analysis of ECG signal using discrete wavelet transform. Int J Adv Sci Technol 29:88–96

Mallat S (1989) A theory for multiresolution signal decomposition: the wavelet representation. IEEE Trans Pattern Anal Mach Intell 11(7):674–693

Unser M (1995) Texture classification and segmentation using wavelet frames. IEEE Trans Image Process 4(11):1549–1560

Vijaya Kishore V, Kalpana V (2021) Impact of noise in the quantification of ILD patterns in lung CT images. Int J Future Gener Commun Netw 14(1):2758–2768

Tallapragada VVS, Manga NA, Kumar GVP et al (2020) Mixed image denoising using weighted coding and non-local similarity. SN Appl Sci 2:997

Venkat Reddy D, Satyanarayana Tallapragada VV, Raghu K, Venkat Naresh M (2020) Hybrid tone mapping with structural and edge-preserving priors. IJAST 29(7):5135–5143

Li X, He M, Roux M (2010) Multifocus image fusion based on redundant wavelet transform. IET Image Proc 4(4):283–293

Eskicioglu A, Fisher P (1995) Image quality measures and their performance. IEEE Trans Commun 43(12):2959–2965

Malfait M, Roose D (1997) Wavelet-based Image denoising using a Markov random field a priori model. IEEE Trans Image Process 6(4):549–565

Li S, Yang B (2008) Multifocus image fusion using region segmentation and spatial frequency. Image Vision Comput 26(7):971–979

Vijaya Kishore V, Kalpana V (2020) Effect of noise on segmentation evaluation parameters. In: Pant M, Kumar Sharma T, Arya R, Sahana B, Zolfagharinia H (eds) Soft computing: theories and applications. Adv Intell Syst Comput 1154. Springer, Singapore. https://doi.org/10.1007/978-981-15-4032-5_41

Pratt WK (2007) Digital image processing, 4th edn. Wiley-Interscience, John Wiley & Sons, Inc.

Vijaya Kishore V, Kalpana V (2020) ROI segmentation and detection of neoplasm based on morphology using segmentation operators. In: Hitendra Sarma T, Sankar V, Shaik R (eds) Emerging trends in electrical, communications, and information technologies. Lecture notes in electrical engineering, vol 569. Springer, Singapore. https://doi.org/10.1007/978-981-13-8942-9_41

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Kalpana, V., Vijaya Kishore, V., Satyanarayana, R.V.S. (2023). MRI and SPECT Brain Image Analysis Using Image Fusion. In: Marriwala, N., Tripathi, C., Jain, S., Kumar, D. (eds) Mobile Radio Communications and 5G Networks. Lecture Notes in Networks and Systems, vol 588. Springer, Singapore. https://doi.org/10.1007/978-981-19-7982-8_48

DOI: https://doi.org/10.1007/978-981-19-7982-8_48

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-7981-1

Online ISBN: 978-981-19-7982-8

eBook Packages: Engineering, Engineering (R0)