Abstract

Successful kidney transplantation requires the interdisciplinary cooperation of surgeons, nephrologists, pharmacists, social workers, nutritionists, and others. It necessitates careful pretransplant evaluation by specialists, thorough immunologic evaluation of recipients and potential donors, and vigilant posttransplant monitoring and care. As with any successful enterprise, the development of transplantation as a treatment for men, women, and children with kidney failure required the coming together of disparate research fields after decades of incremental progress, wrong turns, and outright failures. No account could possibly capture all the people responsible for developing the field of pediatric kidney transplantation as we know it today. In this chapter, we attempt to weave together the stories that led to the hope of transplantation today.

My interest in transplantation began when I was a medical student. I was particularly stimulated by the consistent lack of encouragement and negative response with which my naïve suggestions were met. Taking care of a youngster about my own age with Bright’s disease… I was told by the senior consultant that we would have to make him as comfortable as possible for the two weeks of life which remained. I asked if he could receive a kidney graft and was told no; I then asked why not and was told because it cannot be done. – Roy Calne [1].

1 The Triangulation Technique

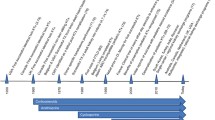

In 1894, French president Sadi Carnot was assassinated in a knife attack in Lyon, France, dying from a lacerated portal vein. General consensus was that the president could not have been saved, but Alexis Carrel, a medical student at the University of Lyon, argued that surgeons should be able to repair blood vessels like any other tissue. In 1896, after Matthieu Jaboulay’s successful repair of a divided carotid artery, Carrel began experiments on techniques for suturing small blood vessels. He obtained needle and thread from a wholesale haberdashery near his home and learned the manual dexterity required for fine work from a local embroideress [2]. Carrel carried out his experiments while working as a house officer and, in 1902, published his first article on vascular anastomosis [3]. Using this technique, he was able to autotransplant the kidney of a dog, replicating a procedure first performed by Emerich Ullmann that same year.

Over the next 10 years, Carrel published extensively on transplantation, reporting that autografts were successful but that allografts eventually failed after a brief period of function, for reasons unknown at the time. By the beginning of World War I, interest in organ transplantation was low. Carrel turned his focus to other areas, including tissue culture and, with Charles A. Lindbergh, the development of the first organ perfusion pump. In 1914, he told the International Surgical Association: “The surgical side of the transplantation of organs is now completed, as we are now able to perform transplantations of organs with perfect ease and with excellent results from an anatomic standpoint. But as yet the methods cannot be applied to human surgery, for the reason that homoplastic transplantations are almost always unsuccessful from the standpoint of functioning organs. All of our efforts must now be directed toward the biologic methods which will prevent the reaction of the organism against foreign tissue and allow the adapting of homoplastic grafts to the hosts” [4].

2 An Immunologic Barrier

Brazilian-born zoologist Peter Medawar was a 24-year-old recent graduate assigned by Thomas Gibson to study whether skin allografts could be used to help treat victims of the Battle of Britain [5]. At the time, skin grafting had been practiced for over 100 years, but it was common knowledge that allografts “cannot be used to form a permanent graft in human beings, except, without a doubt, between monozygotic twins” [6]. Medawar and Gibson reported the case of a 22-year-old woman with severe burns who received multiple rounds of skin autografts and allografts (from her brother). The autografts did well, but the first round of allografts showed evidence of inflammation by day 5 and significant degeneration by day 15. Importantly, the second round of skin allografts degenerated even faster, by day 8 [6]. From this and studies in rabbits, Medawar concluded that “the mechanism by means of which foreign skin is eliminated belongs in broad outline to the category of actively acquired immune reactions,” [7] an early description of allograft rejection.

Six years later, at a cocktail party, Medawar spoke with his colleague Hugh Donald, who was looking for a way to distinguish identical twins from fraternal twins in cattle. Medawar suggested using skin grafts, as fraternal twins should reject grafts from their twin. He enlisted his first graduate student, Rupert Billingham, grandson of a dairy farmer, to help. Medawar initially thought the outcome was predictable, but instead most twin cattle accepted the skin graft, even when the twins were of different sexes. Seeking to explain their results, Medawar went back to Donald, who directed him and Billingham to the research of Frank Lillie on the interconnected placental circulation of twin freemartin cattle and to the work of Ray Owen, who discovered that freemartin cattle carry two red blood cell types – their own and their twin’s [8]. They hypothesized that the twin cattle exchanged blood in utero, leading to donor cell chimerism in adulthood, and that this chimerism would exist for white blood cells as well. This was the first description of immune tolerance [9]. Medawar and Billingham, along with Leslie Brent, went on to confirm their findings in mice (“Thank God we’ve left those cows behind,” Medawar reportedly said) [8], and, while not immediately clinically applicable, their findings raised hopes that the immunologic barrier could be breached in human transplantation [10].

Meanwhile, George Snell was studying tumor transplantation and found that certain genes were associated with the failure of tumors transplanted from one strain of mice to another. His across-the-hall neighbor named them H genes, or “histocompatibility” genes [11]. In France, Jean Dausset had become interested in the biology of blood transfusion while working as a medic in World War II and had published widely on the topic. In 1952, he wondered, “If there existed individual differences carried by red blood cells, why wouldn’t there exist others, carried on white blood cells” [3]. He combined the white blood cells of one individual with the serum of another individual who had received multiple blood transfusions. “With the naked eye, I saw the formation of enormous clumps of agglutinins” [3]. By 1958, Dausset had described the human equivalent of Snell’s H genes, which he initially called “Mac.” Seven years and over 900 skin graft trials later, Dausset, along with Czech researchers Pavol and Dagmar Ivanyi, clarified the genetic region and multiple loci known today as the human leukocyte antigens (HLA) [3].

3 “Science Fiction”: The Beginnings of Human Transplantation

The first attempted human-to-human kidney transplantation was performed by Ukrainian surgeon Yuriy Voronoy in 1933 on a 26-year-old woman with acute kidney injury from mercury poisoning. Voronoy hoped that the immunosuppressive effects of the mercury would allow the graft to survive long enough for the native kidneys to recover. The kidney was transplanted into the right thigh and initially made urine, but after transfusion with blood of a different type, the graft failed, and the patient died 48 hours after surgery [12]. Voronoy tried six more times over the following 16 years; none of the kidneys functioned for any appreciable length of time [4]. In 1945, Charles Hufnagel, David Hume, and Ernest Landsteiner at the Brigham Hospital in Boston transplanted a human kidney to the vessels of a patient’s arm. The kidney functioned only briefly and produced little urine, but the attempt nonetheless spurred interest in transplantation at the Brigham Hospital and elsewhere [13].

In the early 1950s, teams in Boston and Paris began pursuing human kidney transplantation, but results remained poor, with graft survival lasting days to months. In Paris, urologist René Küss initially used kidneys donated by prisoners condemned to death who had consented to postmortem procurement. The donor nephrectomies were performed minutes after decapitation “on the ground, by torchlight…which strongly offended the sensitivity of some of us” [14]. The kidneys made urine transiently, but all recipients died within days to weeks. Across town, Jean Hamburger performed the first living related kidney transplant on a 16-year-old carpenter who had ruptured his solitary right kidney in a fall from scaffolding. “The boy’s mother pleaded with us to attempt to transplant one of her kidneys to her son” [15]. Hoping that the close biologic relationship between mother and son would prevent rejection, the surgeons agreed and performed the procedure on Christmas Eve. The graft functioned immediately, normalizing the boy’s blood urea levels, but on postoperative day 22 the kidney was rejected and the patient died.

In October 1954, David Miller was caring for 23-year-old Richard Herrick at the US Public Health Service Hospital in Brighton, MA. Richard had been diagnosed with chronic kidney disease secondary to chronic nephritis, and his death seemed imminent. Richard’s older brother Van asked Dr. Miller whether he could give a kidney to his brother. Dr. Miller told him it was impossible, but then remembered that Richard had an identical twin brother, Ronald. Aware of ongoing transplant research, he referred the brothers to nephrologist John Merrill at Peter Bent Brigham Hospital in Boston.

Over the next 2 months, the Brigham team grappled with the ethical issues of removing a kidney from a healthy individual, consulting physicians, clergy, lawyers, and insurance actuarial tables before finally leaving the decision up to Ronald. “I had heard of such things,” Ronald remembered, “but it seemed in the realm of science fiction. [We] were caught up in the enthusiasm, but I felt a knot in the pit of my stomach…the only operation I’d ever had before was an appendectomy, and I hadn’t much liked that” [10]. Even Richard, the recipient, had last-minute second thoughts. “Get out of here and go home,” Richard wrote to Ronald the night before the surgery. “I am here, and I am going to stay,” Ronald responded. On December 23, 1954, urologist J. Hartwell Harrison removed Ronald Herrick’s left kidney, and surgeon Joseph Murray transplanted it into Richard Herrick. The kidney functioned immediately; urine “had to be mopped up from the floor” [10]. Richard married his recovery room nurse, had two children, and lived 8 more years before dying in 1962 from recurrence of his original disease. Ronald lived to the age of 79 years. The first successful kidney transplantation was front-page news that rekindled interest in transplant research around the world; seven identical twin transplants would be performed in the next year, including two unsuccessful attempts in children (one due to recurrence of glomerulonephritis and one due to primary nonfunction) [16]. The effect of this new treatment was, however, limited: the immunologic barrier remained, and most patients with kidney disease did not have an identical twin.

4 Breaking the Barrier

Medawar had shown with his skin grafting experiments that immunologic tolerance was possible, and research efforts turned to suppression of the immune system. E. Donnall Thomas had been a hematologist at the Brigham Hospital and was involved, for a short time, in the care of Richard Herrick. In 1955, he and surgeon John Mannick were studying bone marrow transplantation using irradiation at Mary Imogene Bassett Hospital in Cooperstown, New York, where the “cold winters of that upstate New York rural community were conducive to the conduct of research” [17]. In 1959, they reported the successful transplantation of a kidney into a beagle that had received total body irradiation followed by a bone marrow allograft from the kidney donor. The kidney functioned normally until the dog died of pneumonia 49 days later; at autopsy, the kidney pathology was normal [18]. Murray’s team in Boston began experimenting with Thomas and Mannick’s protocol in dogs and in 12 humans. In January 1959, they performed a kidney transplant between 24-year-old John Riteris and his fraternal twin brother, Andrew, using sublethal irradiation. John lived another 27 years before dying of congestive heart failure. The only time he ever discussed the transplant with his brother was in an inscription in a book he gave to Andrew just before his death. It read, “To Andrew – Thanks for the second drink” [10]. The immunologic barrier had been broken for the first time, but this was the only success out of 12 attempts; the infectious complications of irradiation were unacceptably high.

Roy Calne was teaching anatomy at Oxford University in 1956 when he attended a seminar given by Peter Medawar on immunologic tolerance. “He had the audience spellbound with his brilliant oratory and the content of his message,” Calne recalled. “When he finished, a medical student asked if there were a potential application of the work; Medawar’s reply was short, in fact two words, ‘Absolutely none!’” [1]. Calne disagreed and asked his Department Head for a letter of recommendation to join Medawar’s lab. The Department Head replied that Medawar “was a very busy man and since I wanted to be a surgeon I had better go and learn to do hernias” [1]. Calne did so and obtained a residency position at the Royal Free Hospital, which had neither facilities nor funding for research. Nevertheless, Calne obtained permission to begin attempting kidney transplants, first in mice and then in dogs. He had no more success than others. Irradiation had a track record of failure; he wondered whether cancer drugs might prove a viable alternative.

Calne reached out to Ken Porter, a pathologist who had used thiotepa to prolong survival of skin grafts in rabbits. Porter pointed Calne to a paper published that same week by Robert Schwartz and William Dameshek reporting the induction of immunologic tolerance in rabbits treated with 6-mercaptopurine (6-MP). Calne began treating his canine transplant recipients with 6-MP, resulting in some prolonged survivals. Excited by the results, he called Ken Porter: “before I could give him my news, he said ‘You remember the 6-MP experiments, well it did not have any significant effect on rabbit skin allografts so I wouldn’t bother to try it in the dogs’” [1]. Calne published his results in The Lancet in 1960, prompting at least one letter to the editor implying that his results were not accurate. As Calne was a junior surgeon publishing his first paper, the response caused him “some distress.” After some prompting, he contacted Medawar’s office to discuss his results. “[I] very meekly asked if I might sometime have a chance to speak with him. [His secretary] said, ‘I’ll put you through;’ I was protesting, ‘Oh no, no, he’s a very busy man!’ By then I was speaking to the great man who gave me the impression that he had all the time in the world” [1].

Calne’s results sparked interest, and soon Charlie Zukoski and David Hume had published an independent replication of his results. Calne received permission to use 6-MP in clinical kidney transplantation. The first planned case, a woman with polycystic kidney disease whose potential donor had died of a subarachnoid hemorrhage, was cancelled when the donor kidney was also found to be polycystic. “I have never since forgotten the association of polycystic disease with berry aneurysms… It seemed that perhaps transplantation was not meant to start at that time” [1]. Calne took an 18-month research sabbatical in Boston, during which he collaborated with George Hitchings and Gertrude Elion, researchers at Burroughs Wellcome laboratories who were synthesizing purine analogs with a better therapeutic index than 6-MP. The best of these was BW57–322, known today as azathioprine. On his return to St. Mary’s Hospital, Calne and Porter began trialing kidney transplants with azathioprine and steroids.

Jean Hamburger and René Küss were reenergized by the success in Boston and by advances in immunology. Hamburger worked in the same hospital as Jean Dausset, who was responsible for identifying the HLA system. In February 1962, Hamburger performed a kidney transplant, with preoperative irradiation, between an 18-year-old boy with nephronophthisis and his first cousin, who had been selected as donor from among multiple family members using the “leukocyte group” detection available at the time. It was the first successful non-twin transplant. The patient “was so impressed by the whole event that he decided to study medicine and became a cardiologist” [15]. The kidney functioned for 15 years, and the patient was still alive 30 years later with a second transplant. Meanwhile, Küss performed ten transplants using varying combinations of irradiation and 6-mercaptopurine, but only three patients survived [14]. In September 1963, Küss was one of 20–25 transplant surgeons and physicians who met in Washington, DC. “Each of us presented the results of his experience, which overall was fairly disastrous…” with only 10% of grafts surviving 3 months [9, 14]. “The review caused some of us to doubt the real value of renal transplantation when, at the end of the meeting, a newcomer amongst the group of pioneers, Thomas Starzl, unraveled three rolls of paper which he carried under his arm and raised our hopes by presenting his results obtained with azathioprine and cortisone” [14].

Starzl was a late addition to the program, invited only at Will Goodwin’s request. “I felt like someone who had parachuted unannounced from another planet onto turf that was already occupied.” He remembered the “naked incredulity about our results,” [19] which showed 70% graft survival at 1 year. Luckily, he had been warned to bring the patient charts with him as proof. Starzl’s early career had been mired in frustration, his clinical practice hampered by departmental politics and his research presentations ignored or mocked [19]. In December 1961, he had taken a position at the University of Colorado, and by March 1962, his team had successfully performed its first kidney transplant between identical twins.

A few weeks after the public announcement of that transplant, 12-year-old Royal Jones was referred to the University of Colorado, with his mother as a potential donor. Joe Holmes, chief of the University of Colorado nephrology program, agreed to try to maintain Jones on chronic hemodialysis, itself a relatively novel treatment, until the transplant program was ready to attempt a non-twin transplant. Jones’s case “was enough to mobilize an army, and this was exactly what happened” [19]. Laboratory and surgical teams were recruited, anesthesia machines refurbished, and research funds diverted from other programs. The team performed eight to ten dog transplants per day to perfect techniques and study different immunosuppression regimens. A supply of azathioprine, then in clinical trials, was obtained. By the summer of 1962, 20–25% of dogs with kidney transplants were surviving for 100 days after transplant, but Royal was running out of vascular access for dialysis.

On November 24, 1962, Royal received a renal transplant from his mother, with a combination of irradiation, azathioprine, and prednisone for immunosuppression. He was kept in one of the operating rooms for 1 month after transplant to decrease the risk of infection, suffered one episode of early rejection that was reversed with prednisone, and returned to school a few weeks later. His initial allograft lasted 6 years before he required a second transplant, from his father, which survived a further 14 years. Thirty years later, he was still alive and waiting for a third kidney [19]. Between 1962 and 1964, 16 children received kidney transplants in Colorado; ten of them were still alive at 25 years of follow-up [20].

By 1970, there were five published case series of kidney transplantation in children, with a mortality rate (13%) similar to that in adult reports. Nearly all of these were living donor transplants, whose outcomes were significantly better than those of deceased donor grafts. Death was typically caused by infection [21], with the risk appearing to correlate with steroid dose [22]. Most children underwent bilateral nephrectomy and splenectomy either before or at the time of transplant.

5 Youthful Rebellion and Tissue Typing

The discovery by the Dutch team of Jon van Rood, Aad van Leeuwen, and George Eernisse in 1958 that leukocyte antibodies form during pregnancy provided the reagents needed to begin testing potential tissue donors for HLA type, though the initial study results remained uninterpretable until computer analysis became available in the early 1960s. Armed with this new technique, van Rood, van Leeuwen, Ali Schippers, and Hans Bruning traveled to transplant centers around the world – Brussels, Louvain, Edinburgh, Boston, Denver, and Minneapolis – to collect tissue samples from over 100 kidney transplant recipients and their sibling donors. They found that recipients with a perfect HLA match were significantly more likely to survive than those without. Their results led to the founding of Eurotransplant in 1967, the first large-scale effort to apply transplant immunology in clinical transplantation [23]. The initial analyses of Eurotransplant outcomes in 1969 were disappointing. “There was really very little to be said about the effects of matching,” [23] though the results (68% graft survival at 1 year) were much better than those in the International Registry for Kidney Transplantation (approximately 40% graft survival at 1 year). With longer follow-up, however, it became clear that matching led to improved long-term graft survival and decreased lymphocyte infiltration of the kidney, even though only the “Leiden antigens” (A2, A28, and the cross-reactive groups of HLA-B) were being matched.

In 1955, Paul Terasaki was a zoologist studying immune tolerance in chick embryos at the University of California, Los Angeles, when he realized his work was mainly retreading the studies of Billingham, Brent, and Medawar. In search of a new direction, he applied for a research position in Medawar’s lab but was turned down for lack of space. Undeterred, he visited Ray Owen’s laboratory at Caltech, where Brent was spending a year, to get their opinions on his research. A month later, Medawar called Terasaki to offer him a position in his London lab; apparently Brent had put in a good word [24]. After a year in London, Terasaki “somehow came to the conclusion that humoral immunity was more important than cellular immunity,” the exact opposite of Medawar’s research. “To this day, I’m not sure whether this view was simply youthful rebellion” [24].

Terasaki was interested in Jean Dausset’s leukoagglutination test, but felt it was “too capricious” for clinical use. “Many hours in the laboratory were required to learn what would NOT work, despite publications to the contrary” [24]. The lymphocyte microcytotoxicity test, developed with John McClelland in 1964, used a piece of aluminum foil taped to the edge of a cover glass to create an oil chamber. Reagents were limited, so the testing used the smallest volume that could be dispensed – 0.001 mL, or one lambda. By 1970, the microcytotoxicity test was the primary form of tissue typing. Blood samples were shipped to the Terasaki lab using a two-chamber plastic bag with nylon wool in the top. Granulocytes in blood injected into the bag would stick to the nylon wool, while red cells and lymphocytes would fall into the lower chamber, which contained a tampon. Upon arrival in the lab, a large vise was used to squeeze blood out of the tampon for testing. Using this system, nearly every kidney transplant in the United States between 1965 and 1968 underwent typing [24].

6 “It Seemed Too Good to be True”: Pharmacologic Immunosuppression

The search continued for immunosuppressive medications that could decrease or replace steroids and limit their significant side effects. In 1899, Ilya Ilyitch Metchnikov developed antilymphocyte serum (ALS), made by injecting guinea pigs with cells from rat spleen and lymph node. Medawar was enthusiastic about this idea, but clinicians were reluctant to risk their success with azathioprine and steroids. The injection of animal serum into humans was “not a particularly palatable idea, especially when the dosage into the abdomen would be several gallons if experimental information was applied to clinical practice” [19]. Beginning in 1964, K.A. Porter and Yoji Iwasaki used horses to raise the serum and then identified and purified its gamma globulin fraction. The first patients were treated in the summer of 1966 and “could be picked out of a crowd… The ALG (antilymphocyte globulin) was given into the muscles of the buttock and caused such severe pain and swelling that patients constantly walked the floors trying to rid themselves of what felt like a charley horse. They sat crookedly on chairs and formed their own support groups to exchange tall tales, and especially complaints” [19]. The initial study was a success, with reduced rates of early rejection and a 50% decrease in prednisone dose [25]. The concept was further refined by Ben Cosimi, who used newly developed cell culture techniques to produce a monoclonal antilymphocyte antibody, OKT3, first used in 1980 [19]. However, antilymphocyte antibodies could not be used long term because of the inevitable development of an immune reaction to the foreign animal protein and the higher incidence of viral infections and malignancies.

In 1976, Jean Borel, a researcher at the Sandoz pharmaceutical company in Switzerland, presented preclinical data on the potential immunosuppressive effects of a metabolite of the fungus Tolypocladium inflatum called cyclosporine [26]. Meanwhile, in David White’s Cambridge lab, visiting research fellow Alkis Kostakis was approaching 2 years without significant success. Worried that his professors in Greece would be upset if he returned home without any research product, Kostakis switched to studying immunosuppression. Borel had given White a bottle of cyclosporine, which White passed along to Kostakis for experimentation. Two months later, he called Calne, reporting significant prolongation of rat heart allograft survival [1]. “It seemed too good to be true,” so Calne had him repeat the studies. The results were even better when Kostakis dissolved the drug in the olive oil his mother had sent him, “worried that he might starve whilst he was in England” [1]. Initial clinical trials of cyclosporine monotherapy showed outcomes no better than those of the conventional azathioprine-prednisone combination, with more complications, but a regimen combining a lower dose with prednisone proved successful [19]. Cyclosporine was approved for use in the United States in 1983 and resulted in a 20% decrease in 1-year graft loss [27] and lower doses of prednisone [19].

In the years since, the search for better immunosuppressive medications – more targeted, more effective, with fewer side effects – has continued. In 1986, Takenori Ochiai first presented preliminary data on FR900506, a compound produced by Streptomyces tsukubaensis, a bacterium found in soil samples from the base of Mount Tsukuba in Japan. The results of the first clinical trials of this new drug, shortened to FK-506, were so exciting to the public that they were first published by the Pittsburgh Post-Gazette, scooping the formal publication in The Lancet [19]. The original hope that it could work synergistically with cyclosporine, replacing prednisone in a two-drug regimen, was dashed when it was discovered that both medications were calcineurin inhibitors. FK-506 was approved by the US Food and Drug Administration (FDA) in 1994 as tacrolimus. A Cochrane review ultimately found that, relative to cyclosporine, tacrolimus decreased the risk of graft loss by nearly 50% at 3 years [28].

In the 1970s, South African geneticist Anthony Allison and Argentinian biochemist Elsie M. Eugui were studying children with defects in purine metabolism. They noted that de novo purine synthesis is inhibited in adenosine deaminase deficiency, an immunodeficiency, whereas purine salvage pathways are blocked in Lesch-Nyhan syndrome, a neurodevelopmental disorder that leaves immune function intact. Their hypothesis that targeted inhibition of de novo purine synthesis might serve as an immunosuppressant led to the identification of mycophenolate mofetil, an ester derivative of mycophenolic acid, which had been studied as an antibiotic in 1896 but abandoned because of toxicity [29]. Mycophenolate mofetil prolonged allograft survival and reduced the occurrence of graft rejection, and it was approved by the US FDA in 1995. As of 2018, over 95% of pediatric kidney transplants were managed using a combination of tacrolimus and mycophenolate mofetil, with or without prednisone [30].

Surendra Nath Sehgal was studying soil samples from the island of Rapa Nui when he found that isolates of Streptomyces hygroscopicus produced a compound with activity against Candida albicans. Further study, however, showed that the compound had immunosuppressive properties that made it impractical as an antifungal. Undeterred, Sehgal sent the compound to the National Cancer Institute, where it was found to block growth in several tumor cell lines, but the research was dropped in 1982 after a laboratory closure. In 1988, Sehgal successfully advocated for renewed research on this compound, and in 1999, the US FDA unanimously approved sirolimus, also called rapamycin after its island origin [31]. Sirolimus lacks the nephrotoxic side effects of tacrolimus and cyclosporine, making it an attractive option for reducing or eliminating calcineurin inhibitor exposure; however, studies in children showing an increased risk for posttransplant lymphoproliferative disease with a high-dose sirolimus regimen have limited its widespread use [32]. As of 2014, approximately 7.7% of US pediatric renal transplant recipients were using sirolimus at 1 year posttransplant, and studies about its efficacy and side effects in children remain ongoing [33].

7 A Framework for Allocation

In the early 1960s, deceased donation in the United States required that the potential donor’s heartbeat be allowed to stop, after which the medical team would move rapidly to restart circulation and ventilation to preserve kidney oxygenation. Conversely, teams in Sweden and Belgium would continue ventilator support for patients in le coma dépassé (literally “a state beyond coma”). The ethical debate over this practice continued through 1968, when the Ad Hoc Committee of Harvard Medical School, led by medical ethicist Henry Beecher, published “A Definition of Irreversible Coma,” laying out criteria to diagnose brain death. This definition was soon given legal standing in the United States and elsewhere, although its acceptance is not universal [34].

With a definition of “brain death,” there was increased opportunity to obtain higher-quality organs from deceased donors, but early kidney allocation systems were ad hoc, informal networks between hospitals. Paul Taylor, the first organ procurement officer at the University of Denver in the 1960s, would “visit hospitals throughout the region and identify victims of accidents or disease whose organs might still be useable” [19]. After the 1968 guidelines on the dead donor rule, the first organ procurement organization (OPO) in the United States, the New England Organ Bank, was established. OPOs in the United States remained unregulated and often informally run through the 1970s [19]. By 1969, the National Transplant Communications Network was maintaining a file of kidney transplant candidates, with their tissue and blood types, from 61 centers in the United States and Canada. Printouts of these lists were sent to participating centers monthly; installing a computer at each center was thought to be “too great an extravagance” [35]. If a deceased donor kidney became available and could not be used at the procurement hospital or a nearby hospital, the procuring team could consult the list and directly contact the physicians of the potential recipient with the best match to the donor [35]. Clear organ allocation principles were often lacking, and even Starzl’s large Pittsburgh program depended on access to donated corporate jets to fly to procurements. In 1984, the United States passed the National Organ Transplant Act (colloquially known as the “Gore Bill”), establishing a national Organ Procurement and Transplantation Network, providing funding for transplantation medications, and outlawing the sale of human organs (in response to an unpopular proposal by a Virginia physician to establish a kidney brokerage business) [19].

After passage of the Gore Bill, “no one knew what to do with it” [19]. There was no accepted method for allocating organs nationwide. Olga Jonasson chaired a task force that held public hearings for over a year before issuing broad guidelines that rejected discrimination on the basis of gender, race, nationality, or economic class and cautioned against the use of age, lifestyle, and measures of social worth in allocation decisions. These guidelines were incorporated into the original 1986 contract with the United Network for Organ Sharing (UNOS). Under pressure to develop a more detailed system for organ allocation, UNOS largely adopted the “points” system used at the University of Pittsburgh, which had initially been developed in response to accusations of allocation improprieties [19]. Patients waitlisted for a kidney at Pittsburgh were awarded points based on wait time, HLA match, panel reactive antibody (PRA), medical urgency, and, if the kidney’s cold ischemia time exceeded 24 hours at the time of allocation, logistics. Patients with a six-of-six HLA match were given priority. Children younger than 10 years of age or weighing less than 27 kg were placed on a waitlist separate from adults [36].

Pediatric priority has remained a core component of organ allocation in the United States. Initially, children were awarded extra points to minimize wait time; however, pediatric transplantation rates remained lower than desired. In 1998, UNOS instituted a policy in which a child would be moved to the top of the allocation sequence if they had not received a transplant within a predetermined time: 6 months for children aged 0 to 5 years, 12 months for children 6 to 10 years, and 18 months for children 11 to 17 years. Although this increased transplant offers, the offered organs were often declined because of poor quality. In 2005, pediatric candidates were given high priority for kidneys from donors younger than 35 years [37]. This “Share 35” policy significantly shortened pediatric wait times, but there was a concurrent 27% decline in pediatric living donor transplant rates [38]. In 2014, UNOS transitioned to a new kidney allocation system and introduced the Kidney Donor Profile Index (KDPI), which estimates the likely survival of a deceased donor allograft relative to all other deceased donor kidneys. Under this system, children are given priority for the best 35% of kidneys, after multiorgan transplant recipients, recipients with zero HLA mismatches to the donor, prior living donors, and highly sensitized recipients. The new system has reduced the number of pediatric donor kidneys transplanted into pediatric recipients, because the KDPI assigns worse scores to kidneys from donors younger than 18 years. An early analysis showed neither an increase in wait time nor an increase in transplant rate for pediatric recipients under this system, but long-term data are pending [39].

8 “The Greatest Application”: Pediatric Transplantation

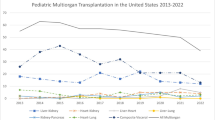

Early on, some questioned whether transplantation in children was ethical given the challenges of pediatric dialysis and the uncertain long-term outcomes of the procedure [40]. Initially, most pediatric transplants used adult-sized kidneys because most were living donor transplants. As deceased donor transplantation became more common, interest turned to transplanting pediatric deceased donor kidneys into children “to increase the number of cadaver kidneys” [41]. Outcomes were dismal. The first two case series included nine children, four of whom died within 2 months [41, 42]. Some programs tried using kidneys donated by anencephalic infants; 43% never functioned, primarily because of vascular thrombosis [43]. As late as 1994, outcomes of deceased donor kidney transplantation in children younger than 5 years were described as “disappointing” [43] and worse than those of older children and adults [44], although transplantation was acknowledged to be the only available treatment for children with end-stage renal disease (ESRD) [44]. In 1992, a report from the North American Pediatric Renal Transplant Cooperative Study showed 1-year graft survival of 63% for recipients of kidneys from donors 0–5 years of age, 73% for donors 6–10 years of age, and 80% for donors older than 10 years [45]. Results were even worse when kidneys from donors younger than 3 years were placed in similarly aged children [46]. When programs shifted to placing larger donor kidneys into children, 1-year deceased donor graft survival improved significantly [43]. As of 2018, pediatric kidney allograft survival was 97% at 1 year and 60.6% at 10 years, generally similar to adult outcomes [30].

With more experience, the particular complications of transplantation in young children emerged. Children younger than 12 years or weighing less than 35 kg may not have room in the iliac fossa for an adult donor kidney, which necessitated the development of intraperitoneal organ placement via a midline incision [47]. Postoperative diuresis may be more severe in an infant or young child; one of Starzl’s first 22 pediatric recipients died of iatrogenic hyponatremia and hyperkalemia on postoperative day 1. Young children clear cyclosporine approximately twice as fast as adults; the rapid improvement in adult outcomes that followed the introduction of cyclosporine was not seen in children until this was recognized in dosing protocols [43].

Steroid side effects were particularly notable in children, especially growth arrest, delayed puberty, cataracts, weight redistribution, acne, and the associated psychological reactions [47]. “Soon the eye clinics were flooded with moon-faced children and young adults who were going blind,” Starzl wrote. Then “the orthopedic clinics were filled with moon-faced kidney recipients whose… bones might break with a movement as slight as a cough. Muscles wasted away” [19]. In a long-term follow-up study of 25 children transplanted at the University of California, San Francisco, between 1964 and 1970 and still alive in 1991, 14 had cataracts and 5 had skeletal problems, mainly aseptic necrosis [48]. Because of these concerns, steroid minimization and avoidance have been studied since the 1970s [43]. Although early studies of these protocols showed reduced hypertension and improved linear growth, they also showed a high rate of acute rejection leading to permanent declines in function or graft failure [49–51]. However, when these studies were repeated using tacrolimus and mycophenolate mofetil for maintenance immunosuppression, graft survival did not differ between protocols with and without steroids [52, 53]. As of 2018, 37.5% of pediatric kidney transplants used a steroid-free immunosuppression protocol [30].

Beyond the basics of patient and graft survival, the central question for pediatric transplantation, given its risks and complications, was whether recipients would be able to enjoy a good quality of life. Even in the earliest days of transplantation, it was apparent that the answer was yes. In 1976, Weil et al. reported follow-up of 57 children who received a kidney transplant between 1962 and 1969. At follow-up, 61% were alive, most of whom had experienced “catchup growth” after transplant and were working or attending school full-time [22]. Similarly, in 1991, Potter et al. reported outcomes of 37 children transplanted between 1964 and 1970. Of the 25 survivors, 18 were employed outside the home or worked as homemakers, and 6 had children of their own. No patient had a Karnofsky Performance Status score below 80% on a scale of 0–100% [48].

Research continues to seek improvements in pediatric kidney transplant outcomes. Clinical trials are investigating novel immunosuppressive therapies and refinements of current protocols. Observational studies are drawing on unprecedented access to large databases, such as that of the North American Pediatric Renal Trials and Collaborative Studies, to provide a more detailed understanding of risk factors, including at the molecular level. New technology has enabled learning health systems, such as the Improving Renal Outcomes Collaborative, that support large-scale quality improvement. A focus on psychosocial contributors to graft survival drives studies of adherence and of the transition to adult care.

“Kidney transplantation burst onto the scene so unexpectedly in the early 1960s that little forethought had been given to its impact on society. Nor had its relation to existing legal, philosophic, or religious systems been considered. Procedures and policies were largely left to the conscience and common sense of the transplant surgeons involved” [19]. The legacy of transplantation is owed to faith in humanity and to people who were willing to persist through failure with grit and determination. Drawing on the lessons of the past, we have much to accomplish as we continue to learn and grow the field of pediatric transplantation.

References

Calne R. Recollections from the laboratory to the clinic. In: Terasaki PI, editor. Hist. transplant. thirty-five recollect. Los Angeles: UCLA Tissue Typing Laboratory; 1991. p. 227–44.

Sade RM. Transplantation at 100 years: Alexis Carrel, pioneer surgeon. Ann Thorac Surg. 2005;80:2415–8.

Simmons J. Doctors and discoveries: lives that created today’s medicine. Houghton Mifflin; 2002.

Hamilton D. Kidney transplantation: a history. In: Morris PJ, editor. Kidney transplant. princ. pract. 4th ed. Philadelphia: W.B. Saunders; 1994. p. 1–7.

Starzl TE. Peter Brian Medawar: father of transplantation. J Am Coll Surg. 1995;180:332–6.

Gibson T, Medawar PB. The fate of skin homografts in man. J Anat. 1943;77(4):299–310.

Medawar PB. The behaviour and fate of skin autografts and skin homografts in rabbits: a report to the war wounds Committee of the Medical Research Council. J Anat. 1944;78:176–99.

Billingham RE. Reminiscences of a “Transplanter”. In: Terasaki PI, editor. Hist. transplant. thirty-five recollect. Los Angeles: UCLA Tissue Typing Laboratory; 1991. p. 75–91.

Barker CF, Markmann JF. Historical overview of transplantation. Cold Spring Harb Perspect Med. 2013;3:a014977. https://doi.org/10.1101/cshperspect.a014977.

Murray JE. Surgery of the soul: reflections on a curious career. Science History Publications for the Boston Medical Library; 2001.

Snell GD. A Geneticist’s recollections of early transplantation studies. In: Terasaki PI, editor. Hist. transplant. thirty-five recollect. Los Angeles: UCLA Tissue Typing Laboratory; 1991. p. 19–36.

Schlich T. The origins of organ transplantation: surgery and laboratory science, 1880–1930. University of Rochester Press; 2010.

Murray JE. Nobel prize lecture: the first successful organ transplants in man. In: Terasaki PI, editor. Hist. transplant. thirty-five recollect. Los Angeles: UCLA Tissue Typing Laboratory; 1991. p. 121–44.

Küss R. Human renal transplantation memories, 1951 to 1981. In: Terasaki PI, editor. Hist. transplant. thirty-five recollect. Los Angeles: UCLA Tissue Typing Laboratory; 1991. p. 37–60.

Hamburger J. Memories of old times. In: Terasaki PI, editor. Hist. transplant. thirty-five recollect. Los Angeles: UCLA Tissue Typing Laboratory; 1991. p. 61–72.

Papalois VE, Najarian JS. Pediatric kidney transplantation: historic hallmarks and a personal perspective. Pediatr Transplant. 2001;5:239–45.

Thomas ED. Allogenic marrow grafting: a story of man and dog. In: Terasaki PI, editor. Hist. transplant. thirty-five recollect. Los Angeles: UCLA Tissue Typing Laboratory; 1991. p. 379–94.

Mannick JA, Lochte HL, Ashley CA, Thomas ED, Ferrebee JW. A functioning kidney homotransplant in the dog. Surgery. 1959;46:821–8.

Starzl TE. The puzzle people: memoirs of a transplant surgeon. University of Pittsburgh Press; 2003.

Starzl TE, Schroter GP, Hartmann NJ, Barfield N, Taylor P, Mangan TL. Long-term (25-year) survival after renal homotransplantation--the world experience. Transplant Proc. 1990;22:2361–5.

Potter D, Belzer FO, Rames L, Holliday MA, Kountz SL, Najarian JS. The treatment of chronic uremia in childhood I. Transplantation. Pediatrics. 1970;45:432.

Weil R, Putnam CW, Porter KA, Starzl TE. Transplantation in children. Surg Clin North Am. 1976;56:467–76.

van Rood JJ, van Leeuwen A, Eernisse J. The Eurotransplant story. In: Terasaki PI, editor. Hist. transplant. thirty-five recollect. Los Angeles: UCLA Tissue Typing Laboratory; 1991. p. 511–238.

Terasaki PI. Histocompatibility. In: Terasaki PI, editor. Hist. transplant. thirty-five recollect. Los Angeles: UCLA Tissue Typing Laboratory; 1991. p. 497–510.

Starzl TE, Marchioro TL, Porter KA, Iwasaki Y, Cerilli GJ. The use of heterologous antilymphoid agents in canine renal and liver homotransplantation and in human renal homotransplantation. Surg Gynecol Obstet. 1967;124:301–8.

Heusler K, Pletscher A. The controversial early history of cyclosporin. Swiss Med Wkly. 2001;131:299–302.

European Multicentre Trial Group. Cyclosporin in cadaveric renal transplantation: one-year follow-up of a multicentre trial. Lancet. 1983;322:986–9.

Webster AC, Taylor RR, Chapman JR, Craig JC. Tacrolimus versus cyclosporin as primary immunosuppression for kidney transplant recipients. Cochrane Database Syst Rev. 2005; https://doi.org/10.1002/14651858.cd003961.pub2.

Hong JC, Kahan BD. The history of immunosuppression for organ transplantation. In: Current and future immunosuppressive therapies following transplantation. Dordrecht: Springer; 2001. p. 3–17.

Hart A, Smith JM, Skeans MA, et al. OPTN/SRTR 2018 annual data report: kidney. Am J Transplant. 2020;20:20–130.

Samanta D. Surendra Nath Sehgal: a pioneer in rapamycin discovery. Indian J Cancer. 2017;54:697.

McDonald RA, Smith JM, Ho M, et al. Incidence of PTLD in pediatric renal transplant recipients receiving basiliximab, calcineurin inhibitor, sirolimus and steroids. Am J Transplant. 2008;8:984–9.

Pape L. State-of-the-art immunosuppression protocols for pediatric renal transplant recipients. Pediatr Nephrol. 2019;34:187–94.

Ross LF, Thistlethwaite JR. The 1966 Ciba symposium on transplantation ethics. Transplantation. 2016;100:1191–7.

Terasaki PI, Wilkinson G, McClelland J. National transplant communications network. JAMA. 1971;218:1674–8.

Starzl TE, Hakala TR, Tzakis A, Gordon R, Stieber A, Makowka L, Klimoski J, Bahnson HT. A multifactorial system for equitable selection of cadaver kidney recipients. JAMA. 1987;257:3073–5.

Capitaine L, Van Assche K, Pennings G, Sterckx S. Pediatric priority in kidney allocation: challenging its acceptability. Transpl Int. 2014;27:533–40.

Chesley C, Parker W, Ross L, Thistlethwaite JR Jr. Intended and unintended consequences of Share 35 [abstract]. American Transplant Congress; 2013. https://atcmeetingabstracts.com/abstract/intended-and-unintended-consequences-of-share-35/. Accessed 1 Apr 2020.

Nazarian SM, Peng AW, Duggirala B, Gupta M, Bittermann T, Amaral S, Levine MH. The kidney allocation system does not appropriately stratify risk of pediatric donor kidneys: implications for pediatric recipients. Am J Transplant. 2018;18:574–9.

Hutchings RH, Hickman R, Scribner BH. Chronic hemodialysis in a pre-adolescent. Pediatrics. 1966;37:68–73.

Fine RN, Brennan LP, Edelbrock HH, Riddell H, Stiles Q, Lieberman E. Use of pediatric cadaver kidneys for homotransplantation in children. JAMA. 1969;210:477–84.

Kelly WD, Lillehei RC, Aust JB, et al. Kidney transplantation: experiences at the University of Minnesota hospitals. Surgery. 1967;62:704–20.

Fine RN, Ettenger R. Renal transplantation in children. In: Morris PJ, editor. Kidney transplant. princ. pract. 4th ed. Philadelphia: W.B. Saunders; 1994. p. 412–59.

Ettenger RB. Children are different: the challenges of pediatric renal transplantation. Am J Kidney Dis. 1992;20:668–72.

McEnery PT, Stablein DM, Arbus G, Tejani A. Renal transplantation in children: a report of the North American Pediatric Renal Transplant Cooperative Study. N Engl J Med. 1992;326:1727–32.

Arbus GS, Rochon J, Thompson D. Survival of cadaveric renal transplant grafts from young donors and in young recipients. Pediatr Nephrol. 1991;5:152–7.

Starzl TE, Marchioro TL, Porter KA, Faris TD, Carey TA. The role of organ transplantation in pediatrics. Pediatr Clin North Am. 1966;13:381–422.

Potter DE, Najarian J, Belzer F, Holliday MA, Horns G, Salvatierra O. Long-term results of renal transplantation in children. Kidney Int. 1991;40:752–6.

Feldhoff C, Goldman AI, Najarian JS, Mauer SM. A comparison of alternate day and daily steroid therapy in children following renal transplantation. Int J Pediatr Nephrol. 1984;5:11–4.

Derici U, Ayerdem F, Arinsoy T, Reis KA, Dalgic A, Sindel S. The use of granulocyte colony-stimulating factor in a neutropenic renal transplant recipient. Haematologia (Budap). 2002;32:557–60.

Reisman L, Lieberman KV, Burrows L, Schanzer H. Follow-up of cyclosporine-treated pediatric renal allograft recipients after cessation of prednisone. Transplantation. 1990;49:76–80.

Kasiske B, Zeier M, Craig J, et al. KDIGO clinical practice guideline for the care of kidney transplant recipients. Am J Transplant. 2009;9:S1–S155.

Nehus E, Goebel J, Abraham E. Outcomes of steroid-avoidance protocols in pediatric kidney transplant recipients. Am J Transplant. 2012;12:3441–8.

Copyright information

© 2023 Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter

Engen, R.M., Verghese, P.S. (2023). Pediatric Kidney Transplantation: A Historic View. In: Shapiro, R., Sarwal, M.M., Raina, R., Sethi, S.K. (eds) Pediatric Solid Organ Transplantation. Springer, Singapore. https://doi.org/10.1007/978-981-19-6909-6_1

DOI: https://doi.org/10.1007/978-981-19-6909-6_1

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-6908-9

Online ISBN: 978-981-19-6909-6

eBook Packages: Medicine, Medicine (R0)