Abstract

A wireless sensor network (WSN) is a collection of sensor nodes with limited power supply and limited transmission capability. Data packets are forwarded by multi-hop transmission over several possible paths. This paper presents a packet priority scheduling scheme for data delivery in multipath routing, which uses service differentiation to let urgent traffic reach the sink node within an acceptable delay, and decreases the end-to-end delay by distributing the traffic over several paths. During the path construction phase, from the sink node to the source node, the packet priority scheduling multipath routing (PPSMR) uses the remaining energy, available node buffer size, packet reception ratio, number of hops, and delay to select the best next hop. Furthermore, it adopts a packet priority and data forwarding decision, which categorizes the packets into four classes based on reliability and real-time requirements, and allows the source node to make the data forwarding decision according to the priority of the data packet and the path classifier in order to select a suitable path. Results show that PPSMR achieves a lower average delay, lower average energy consumption, and a higher packet delivery ratio than EQSR routing.

Keywords

- Wireless sensor network

- Wireless multimedia sensor network

- Packet priority scheduling

- Multipath routing

- Quality-of-service

1 Introduction

Owing to their broad accessibility, WSN have attracted considerable research interest in recent times [1]. The wide diversity of WSN applications in military and civil fields and the rapid expansion of wireless networks indicate that upcoming network designs should support different kinds of applications with various quality-of-service (QoS) demands [2, 3]. A QoS-based routing protocol aims to give the network the flexibility to balance its traffic while enhancing network performance. Many researchers define QoS routing metrics as a set of restrictions, known as path constraints or wireless link constraints. A link restriction constrains the use of individual links, for instance by delay, while a path restriction expresses an end-to-end QoS demand, for example a data transmission reliability requirement. Therefore, routing mechanisms are needed to discover several paths for the requests of each application.

In several applications, prolonging the network lifetime is regarded as more essential than data quality. Because lifetime depends on reducing energy wastage in the sensor nodes, an energy-aware routing protocol is needed in the WSN [4]. In real-time applications, the data has to be transmitted promptly; otherwise, it may become unusable. In this situation, the network needs a timeliness-aware routing protocol. In other situations, an application may need a reliable routing protocol, because the reliability of data transmission is its most crucial requirement.

Numerous QoS routing protocols proposed for WSN characterize the network with a single metric, such as delay, hop count, or energy, which is used to calculate the routes. Because of the severe energy limitations of WSN, the main motivation of many of these protocols is to ensure energy efficiency and to optimize the lifetime of the network [5,6,7]. Yet, to support numerous and varied QoS requirements, a protocol must describe the network with several metrics, such as delay, energy, and the probability of data loss. It is therefore difficult to discover the best path that satisfies multiple QoS restrictions in an energy-efficient way. Multi-objective or multi-constraint routing differs substantially from routing decisions that use a single link or a single objective constraint, and the problem of finding the best QoS path that meets multiple restrictions has been shown to be NP-complete [8].

Several WSN applications, such as target tracking, environment monitoring, and home automation, are examples of wireless multimedia sensor networks (WMSN), which call for further innovation to resolve the problems of energy efficiency in multimedia processing and communication. The relevant QoS benchmarks include jitter, packet loss, and delay [1, 9]. At the network layer, WMSN provide differentiated service in two main ways, namely multipath routing and traffic scheduling [10]. In traffic scheduling, multimedia packets that are more significant or urgent, or that have higher QoS requirements, are assigned higher priority levels. Multipath routing is usually provided for reliability in WMSN [9, 11] and is deemed an efficient technique for spreading multimedia data packets in WMSN [1]. Nevertheless, most current traffic scheduling mechanisms have been proposed for a single routing path, with only a few operating over multiple paths. Moreover, these works consider only delay, that is, whether traffic is real-time or non-real-time [12, 13]. In WMSN applications, however, the reliability requirement, which is associated with packet loss, is another essential component that should be taken into consideration. For this reason, attention is directed at a traffic scheduling technique across node disjoint multipath routing that can send multimedia data packets in WMSN while taking into account both delay and packet loss. Thus, a method of packet priority scheduling is proposed for data delivery in multipath routing for WSN, known as packet priority scheduling multipath routing (PPSMR). The rest of the paper is structured as follows. Section 2 gives an overview of existing works. Section 3 presents the packet priority scheduling for data delivery in multipath routing in detail. Section 4 deals with the simulation study and performance evaluation with respect to various performance metrics. Section 5 concludes this paper.

2 Related Work

The rapid expansion and deployment of WSN have led to numerous research works on QoS routing-related issues [3, 12, 14, 15]. However, several of these protocols address only a limited set of QoS aspects. For example, in [16], bandwidth utilization is the only QoS metric considered, whereas collision is discussed in [17], where multipath routing is recommended to prolong the lifetime of the network, increase throughput, and reduce latency. Furthermore, a secure and energy-efficient multipath routing for WSN is reported in [18].

In multi-hop wireless networks, geographic (position-based) routing [19] can employ a technique known as greedy forwarding to deliver packets. Direct neighbouring nodes exchange location information within their neighbourhood and choose the sensor node that is nearer to the sink. A sensor node therefore needs to know only the location of its next-hop neighbours, without knowledge of the whole network. For this reason, discovery flooding and state propagation might not be needed beyond a particular hop [20]. However, with traditional greedy forwarding, a transmission may fail if none of the node's neighbours is nearer to the destination, and several constraints may arise when it is deployed in practical networks [21].

Sequential assignment routing (SAR) is a QoS-based routing protocol [22]. SAR constructs trees rooted at the one-hop neighbours of the sink by considering the QoS metrics on every route, the level of energy resources on every route, and the priority level of every packet. Constructing these trees yields several routes, and a single path is then chosen from the existing routes in accordance with the QoS and energy resources of each route. Nevertheless, sensors incur additional overhead from route set-up. The procedure presented in [12] attempts to avoid the overheads associated with SAR by choosing a path from a list of candidate paths that meet the end-to-end delay requirement, while also increasing the throughput for best-effort data traffic. The underlying concept is that every node in the network contains a classifier that detects incoming traffic and directs it to different priority queues for real-time or non-real-time data traffic. A multipath routing protocol is therefore recommended to deliver both best-effort and real-time traffic. The gateway sets a bandwidth ratio as an initial value that represents the bandwidth to be devoted to real-time and non-real-time data traffic on a specific outgoing link, to forestall any congestion that might arise. Every node adjusts the bandwidth and the delay requirement according to the path length and the data traffic. However, the protocol is inefficient because every node needs comprehensive information about the network topology, and additional QoS requirement information is not maintained for real-time data traffic. The protocol also relies on a class-based priority queuing mechanism, which is regarded as extremely complex and costly.

SPEED [23] is a location-based real-time protocol that offers a soft end-to-end deadline guarantee by maintaining a desired delivery speed across the network. Its drawback is that the energy metric was not considered carefully during the protocol design. An extension of SPEED, known as MMSPEED [24], was proposed to provide QoS differentiation in the timeliness and reliability domains of WSN by delivering packets according to specific requirements, such as the required end-to-end delay, so that congestion is reduced and the packet loss rate is lowered. Timeliness is supported by offering several network-wide packet delivery speed options to the various traffic types so that they meet their end-to-end deadlines. Reliability is supported through multipath forwarding, in which the number of delivery paths is regulated according to the required end-to-end reaching probability.

The LQER protocol is a routing protocol that aims to resolve the issues of energy efficiency and QoS [25]. Once the minimum hop field is established, path selection is based on the historical states of the link quality. The link quality estimation approach ensures both reliability and energy efficiency. A multi-objective cross-layer routing protocol for handling resource constraints in WSN has also been proposed [26]. Once the applications assign a weight to every requirement, it computes the cost of every possible route between the source and the sink in order to meet multiple objectives.

In [27], every node uses its own status information and that of its neighbourhood to adjust its routing and MAC layer behaviour, with flexible cost functions at the routing layer and an adaptive duty cycle at the MAC layer. These depend solely on local decisions in order to balance the energy consumption of all nodes. The extra overhead incurred is very small, and the routes are easy to maintain. However, the decisions are made locally without considering the whole path from source to destination.

The multi-constrained QoS multipath routing protocol MCMP [28] uses braided routes to send packets to the sink, in order to enhance the performance of the network at a practical energy cost and to achieve the required QoS benchmarks of delay and reliability. The end-to-end delay is expressed as an optimization problem, which is addressed through an algorithm based on linear integer programming. Nonetheless, routing data across a minimum hop count path to fully assure the required QoS leads to higher energy consumption. ECMP [29] was proposed as an extended version of MCMP. It treats the QoS routing problem as a path-based energy minimization problem constrained by delay, reliability, and energy expenditure.

Numerous routing protocols have been designed to deliver QoS, but none fulfils every requirement: some suit particular application requirements and perform well for them, whereas others are inappropriate for certain applications because of their constraints and performance requirements. Moreover, the packet priority scheduling for node disjoint multipath routing in WMSN that is discussed in this paper has not been considered in any of the aforementioned works.

3 Packet Priority Scheduling for Data Delivery in Node Disjoint Multipath Routing

PPSMR consists of four phases: the initialization phase, the path construction phase, the path failure recovery phase, and the packet priority scheduling and data forwarding decision phase. The first phase makes every sensor node aware of its surrounding neighbour nodes. The second phase determines and classifies the multiple paths from a source node to a sink node based on the calculation of the link cost metric. In the third phase, the path failure recovery phase, the method dynamically changes the path if a node fails while data is being sent on the path. Finally, the packet priority scheduling and data forwarding decision phase schedules packets by priority over the node disjoint multipath routes. This final phase covers the queuing model, explains how more important packets are given precedence over less important packets during transmission, and describes how the data forwarding decision is made from the priority of the data packet and the path classifier. The next subsections give details about the network model and assumptions and then describe the PPSMR phases.

3.1 Network Model and Assumptions

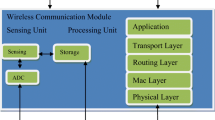

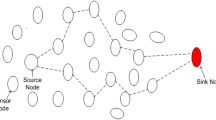

As shown in Fig. 1, the WSN consists of one sink and N sensor nodes and is modelled as an undirected graph G = (S, L, Q), where S is the set of vertices that denote the communicating sensor nodes, L is the set of edges that represent links among nodes, and Q is a nonnegative QoS capacity vector of every edge. The distance of a direct link \(L(s_x, s_y) \in L\) between nodes \(s_x\) and \(s_y\) is \(d_{s_x,s_y}\). A path is a series of links from the source node towards the sink, and P = {path1, path2, …, pathn} is the set of n existing node disjoint paths between the source node and the sink node. The sensor nodes are randomly deployed in a square planar area. During the initialization phase, sensor nodes discover their neighbours by using HELLO beacon messages. The sensor nodes are identical: every sensor has the same transmission range, expends the same amount of power to send a bit of data, and has adequate energy to perform computing, communication, and sensing. The network is dense and fully connected, and data packets are sent from one node to another in a multi-hop manner. Every node has a distinct ID, and all sensor nodes are willing to take part in the communication process by sending data and by monitoring events within their sensing range with an integrated sensor. The gathered information includes video streams, images, audio streams, temperature, noise, humidity, atmospheric pressure, and so on. In the network model, {t1, t2, t3, t4} denote the data categories by level of urgency, namely urgent, highly important, moderately important, and less important traffic. Moreover, the sensor nodes are static, and every node is assumed to know its precise location, the locations of its neighbour nodes inside its communication range, and the location of the sink node.

3.2 Path Discovery in PPSMR

In path discovery, the sink begins to find several paths in order to form a group of neighbours that are capable of sending a data packet from the source node to the sink node. The multiple paths are constructed as node disjoint paths, which means that they share no nodes other than the source and the sink. Node disjoint paths are proposed because they make the most of the available network resources. Furthermore, they are the most fault-tolerant: if a node in a set of node disjoint paths fails, only the path that includes that node is affected, so the diversity of the routes is reduced only slightly. The following subsections explain the steps of the path discovery phase.

3.2.1 Calculation of Link Costs Metrics

In the path discovery phase, a node uses the link cost metric to choose the subsequent hop. Let Mi be the set of neighbours of node i. The link cost metric combines an energy element, the packet reception ratio, the available buffer size, the hop count, and the end-to-end delay factor. This metric can be calculated by Eq. (1).

where j is the node at the next hop, \(E_{{{\text{remain}},j}}\) is the remaining energy of node j, given that \(j \in M_{i}\), \(B_{{{\text{buffer}},j}}\) is the remaining buffer size of node j, and H is the hop count. The delay between two nodes is denoted \(D_{{{\text{link}}_{i,j} }}\), which is the time elapsed from the departure of a data packet at the sending node to its arrival at the receiving node. The delay of a path, \(D_{{{\text{Path}}_{P} }}\), is the sum of the delays of all the intermediate links in the path and is computed using Eq. (2).

\[ D_{{{\text{Path}}_{P} }} = \sum_{(i,j) \in P} D_{{{\text{link}}_{i,j} }} \tag{2} \]

Another link cost metric to consider is the packet reception ratio (PRR). Given that \(D_{{{\text{re}}}}\) is the total number of packets received and \(D_{{{\text{se}}}}\) is the total number of packets sent, each node can calculate the value of PRR using Eq. (3).

\[ {\text{PRR}} = \frac{D_{{{\text{re}}}}}{D_{{{\text{se}}}}} \tag{3} \]

The total cost metric \(CM_{{{\text{total}}}}\) for a path P, involving a group of K nodes, is the sum of all the single link cost metrics \(l_{ij}\) along that path. Thus, the total cost metric can be computed as in Eq. (4).

\[ CM_{{{\text{total}}}} = \sum_{(i,j) \in P} l_{ij} \tag{4} \]
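The quantities described for Eqs. (2) to (4) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names and the sample values are hypothetical, and the per-link cost metrics of Eq. (1) are taken as given inputs rather than computed.

```python
def packet_reception_ratio(received: int, sent: int) -> float:
    """PRR as described for Eq. (3): packets received over packets sent."""
    if sent <= 0:
        raise ValueError("sent must be positive")
    return received / sent


def path_delay(link_delays: list[float]) -> float:
    """Path delay as described for Eq. (2): sum of the link delays."""
    return sum(link_delays)


def total_cost_metric(link_costs: list[float]) -> float:
    """Total cost as described for Eq. (4): sum of the per-link cost metrics."""
    return sum(link_costs)


# Hypothetical three-hop path; all values are invented for illustration.
delays = [0.05, 0.10, 0.05]   # seconds per link
costs = [2.0, 1.5, 2.5]       # per-link cost metrics l_ij, assumed given

print(packet_reception_ratio(45, 50))
print(path_delay(delays))
print(total_cost_metric(costs))
```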

3.2.2 Initialization Phase

In this phase, every node broadcasts a HELLO beacon message to gather adequate information about its neighbours. Every sensor node also maintains and refreshes its neighbour table, which holds information about its neighbour nodes. Figure 2 shows the structure and sequence of the HELLO beacon message. At the end of this phase, every node has adequate information to calculate the link cost metrics for its neighbour nodes.

3.2.3 Path Construction Phase

In this phase, once every node has adequate information to calculate the link cost metrics for its neighbour nodes, the sink node locally calculates its preferred next-hop node by using the link cost metrics and forwards a route request (RREQ) to it. Figure 3 illustrates the format of the RREQ. In the same way, the preferred next-hop node of the sink uses the link cost metrics to calculate locally its own next hop towards the source node and forwards an RREQ to that node. This process continues until the RREQ reaches the source node.

For the other paths, the sink node forwards further RREQ messages to its next-best neighbouring nodes. To keep the paths node disjoint, every node accepts only one RREQ. Therefore, when a node receives more than one RREQ, it accepts only the first and discards all others, as shown in Fig. 4, which highlights the path construction process. In this instance, Node T calculates its preferred next neighbour, which happens to be Node M. Node T generates an RREQ and sends it to Node M. However, Node M is already part of Path 1, and therefore, Node M simply replies to Node T with an INUSE message showing that Node M was previously chosen in a routing path. Node T then consults its neighbour table, calculates the next preferred node, which is Node Y, and forwards an RREQ to it. Node Y accepts the RREQ and continues the process towards the source node. Algorithm 1 highlights the multipath route discovery and path classifier based on the link cost metrics, namely remaining energy, available node buffer size, packet reception ratio, hop count, and end-to-end delay.
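The RREQ and INUSE behaviour described above can be sketched as a greedy, sink-initiated search. This is a minimal illustration and not Algorithm 1 itself: the function name, the `score` table (higher meaning more preferred), the topology, and the choice to abandon an attempt that reaches a dead end are all assumptions.

```python
def build_disjoint_paths(neighbours, score, sink, source, max_paths):
    """Greedy sketch: each intermediate node accepts only one RREQ."""
    in_use = set()   # intermediate nodes that already belong to a path
    paths = []
    # The sink tries its neighbours from most to least preferred.
    first_hops = sorted(neighbours[sink],
                        key=lambda n: score[(sink, n)], reverse=True)
    for hop in first_hops:
        if len(paths) >= max_paths:
            break
        if hop in in_use:
            continue  # this neighbour would answer INUSE
        path = [sink, hop]
        while path[-1] != source:
            current = path[-1]
            # Nodes already in a path (INUSE) or on this walk are skipped.
            candidates = [n for n in neighbours[current]
                          if n not in in_use and n not in path]
            if not candidates:
                path = None  # dead end: abandon this attempt (assumption)
                break
            path.append(max(candidates, key=lambda n: score[(current, n)]))
        if path is not None:
            in_use.update(path[1:-1])   # source and sink stay shared
            paths.append(path[::-1])    # report as source -> sink
    return paths


# Hypothetical topology loosely following the Node T / M / Y example.
neighbours = {
    "sink": ["B", "T"], "B": ["sink", "M"], "M": ["B", "T", "source"],
    "T": ["sink", "M", "Y"], "Y": ["T", "source"], "source": ["M", "Y"],
}
score = {
    ("sink", "B"): 0.9, ("sink", "T"): 0.8, ("B", "M"): 0.9,
    ("M", "source"): 0.9, ("M", "T"): 0.2, ("T", "M"): 0.7,
    ("T", "Y"): 0.5, ("Y", "source"): 0.6,
}
paths = build_disjoint_paths(neighbours, score, "sink", "source", max_paths=2)
print(paths)
```

In this example Node T would prefer Node M, but M already belongs to the first path, so T falls back to Node Y, mirroring the INUSE exchange.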

3.2.4 Path Classifier

Once the paths from the source node to the sink node are discovered, every node disjoint path is categorized according to its condition into one of four categories: best path, loss-tolerant path, delay-tolerant path, and non-critical path. When all three conditions, namely energy level, packet reception ratio, and delay, are satisfied on the path, the path is regarded as a best path. When the delay on the path is high, the path is categorized as a delay-tolerant path. When the loss rate on the path is high, the source categorizes the path as a loss-tolerant path. When the path has both a high delay and a high loss rate, it is categorized as a non-critical path.
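The four categories can be expressed as a small decision function. The sketch below is an assumption-laden illustration: it borrows the delay and reliability thresholds quoted in the simulation set-up (0.7 s and 0.6), treats the path packet reception ratio as the reliability measure, and leaves out the energy condition because the text does not state how a path with low energy but acceptable delay and loss is categorized.

```python
def classify_path(path_delay_s: float, path_prr: float,
                  delay_threshold_s: float = 0.7,
                  prr_threshold: float = 0.6) -> str:
    """Categorize a path from its delay and packet reception ratio.

    The threshold defaults are assumptions taken from the simulation
    set-up; the energy condition is deliberately not modelled.
    """
    high_delay = path_delay_s > delay_threshold_s
    high_loss = path_prr < prr_threshold
    if high_delay and high_loss:
        return "non-critical"
    if high_delay:
        return "delay-tolerant"
    if high_loss:
        return "loss-tolerant"
    return "best"


print(classify_path(0.3, 0.95))
print(classify_path(0.9, 0.95))
print(classify_path(0.3, 0.40))
print(classify_path(0.9, 0.40))
```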

3.2.5 Path Failure Recovery Phase

While data is being forwarded on the selected path, if a node along that path is about to fail, the method dynamically selects another node in place of the failing node and keeps forwarding data on the new path. For instance, as shown in Fig. 5, suppose data is sent on the Source-X-M-H-B-Sink path and Node H is about to fail because of its reduced energy. When its energy drops below a certain threshold value, Node H notifies all its neighbours K, G, F, E, and C, and the node with the highest remaining energy is selected. Assume that this is Node K, which is in the routing table of Node H. Node K then forwards connection request messages (CREQ) to the disconnected nodes of the path, i.e. B and M. Once Node B and Node M have received the CREQ, the remaining data is forwarded through Source-X-M-K-B-Sink. The connection request message is shown in Fig. 6.
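The replacement step in this example can be sketched as follows. The function names, the energy values, and the default threshold are hypothetical, and the sketch assumes that the chosen replacement is within range of both disconnected nodes, which the CREQ exchange would confirm in practice.

```python
def is_about_to_fail(remaining_energy_j: float, threshold_j: float = 0.3) -> bool:
    """A node reports impending failure once its energy drops below a threshold."""
    return remaining_energy_j < threshold_j


def replace_failing_node(path, failing, neighbour_energy):
    """Swap `failing` for its neighbour with the highest remaining energy.

    neighbour_energy maps each neighbour of the failing node to its
    remaining energy in joules. Neighbours already on the path are skipped.
    """
    candidates = {n: e for n, e in neighbour_energy.items() if n not in path}
    if not candidates:
        return None  # no replacement available
    replacement = max(candidates, key=candidates.get)
    return [replacement if node == failing else node for node in path]


# Values below are invented; only the node names follow the Fig. 5 example.
path = ["Source", "X", "M", "H", "B", "Sink"]
energy_of_h_neighbours = {"K": 8.2, "G": 6.1, "F": 5.4, "E": 7.0, "C": 4.9}

if is_about_to_fail(0.25):
    new_path = replace_failing_node(path, "H", energy_of_h_neighbours)
    print(new_path)
```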

3.3 Packet Priority Scheduling and Data Forwarding Decision Phase

This section presents the packet priority scheduling and queuing model, which consists of the packet classifier, priority scheduling based on the packet classifier, and traffic allocation. It then proposes a data forwarding decision algorithm that handles packets of several priorities.

3.3.1 Packet Priority Scheduling and Queuing Model

The sensor data may originate from a variety of events that have different levels of significance. Thus, the packet priority scheduling should assign different priorities to different data classes, as shown in Table 1. For instance, urgent packets may be assigned a higher priority than less important packets so that they meet their deadline. The source node therefore assigns the priority of each data packet. The priority is stored in the ‘Packet Priority’ field of the data packet’s header and must remain unchanged until the packet arrives at the sink node. The source node uses the priority of data class table, shown in Table 1, to assign one of four priorities to the packet based on its delay and reliability requirements. Thus, an urgent packet is scheduled for forwarding earlier than a less important packet. In addition, before a source node forwards a packet, all the disjoint routing paths from the source node to the sink are classified according to their condition into best, delay-tolerant, loss-tolerant, and non-critical paths.

Figure 7 shows the queuing model, which is designed specifically for node disjoint paths carrying packets of different priorities. When different types of multimedia traffic arrive at the source node, a receiving buffer queue stores the received data traffic. The source node then determines the degree of significance of every data packet. A packet classifier checks the type of each received packet and forwards it to the appropriate queue. The priority scheduler serves the queues from the highest priority queue to the lowest one, according to how the packets were classified into queues. Moreover, the traffic allocation splits each packet into a number of same-sized sub-packets and then schedules these sub-packets for simultaneous transmission over several paths. At the sink node, the sub-packets are gathered and reassembled, and the original message is recovered.
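A compact way to picture the classifier, the priority scheduler, and the traffic allocation is sketched below. The class and function names are hypothetical, strict priority service is assumed, and zero-padding the last sub-packet to obtain equal sizes is an assumption made only so that the split is well defined.

```python
from collections import deque


class SourceQueues:
    """One FIFO queue per priority class (1 = urgent ... 4 = less important)."""

    def __init__(self):
        self.queues = {p: deque() for p in (1, 2, 3, 4)}

    def classify(self, priority: int, payload: bytes) -> None:
        """Packet classifier: place the packet in the queue of its class."""
        self.queues[priority].append(payload)

    def next_packet(self):
        """Priority scheduler: serve the highest non-empty priority first."""
        for p in (1, 2, 3, 4):
            if self.queues[p]:
                return p, self.queues[p].popleft()
        return None


def split_into_subpackets(payload: bytes, n_paths: int) -> list[bytes]:
    """Traffic allocation: one equal-sized sub-packet per path (zero-padded)."""
    size = -(-len(payload) // n_paths)            # ceiling division
    padded = payload.ljust(size * n_paths, b"\x00")
    return [padded[i * size:(i + 1) * size] for i in range(n_paths)]


def reassemble(subpackets: list[bytes], original_length: int) -> bytes:
    """Sink side: concatenate the sub-packets and drop the padding."""
    return b"".join(subpackets)[:original_length]


queues = SourceQueues()
queues.classify(4, b"temperature reading")
queues.classify(1, b"intrusion alarm")
priority, payload = queues.next_packet()          # the urgent packet leaves first

message = b"x" * 510                              # packet size used in the simulation
parts = split_into_subpackets(message, 4)
print(priority, [len(p) for p in parts])
```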

3.3.2 Data Forwarding Decision

PPSMR defines a data forwarding decision, which permits the source node to choose the most appropriate path according to the path classifier and the priority of the packet. The most suitable of the available paths are chosen to forward the packets based on the QoS conditions of the packet, as shown in Table 1. This is achieved by prioritizing the data packets by importance. As all data packets hold the ‘Packet Priority’ field, which is illustrated in Fig. 9, the source node checks the packet’s priority and takes the data forwarding decision accordingly. Along with the priority, the source node knows the classification of each path after the path construction phase, and it uses this to decide whether a path may carry a data packet of the given priority. The source node makes the data forwarding decision from the packet priority and the path classifier, as given in Table 2.

For instance, in Fig. 8, suppose that the source node wants to send a data packet. Before forwarding, the source node identifies that the packet is of priority 4 and recognizes that the path starting from Node 10 is classified as a best path. Therefore, after referring to Table 2 (this situation falls under Case IV), the source node sends the sub-packets over the available non-critical paths, which start from Node 24 and Node 28. In the same case, it sends a packet of priority 3 over delay-tolerant paths. On the other hand, the source node forwards sub-packets of priority 1 through the available best paths, which start from Node 10 and Node 6, because these paths have a link cost condition good enough to carry urgent packets. However, using the best paths repeatedly depletes the energy of their nodes. It is therefore undesirable to forward moderately important or less important packets, such as priority 3 and priority 4 packets, over the best paths. In this way, the nodes on the best paths are protected from being exhausted by less important data packets, and the best paths are kept available for forwarding urgent packets.
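The pairing of packet priorities with path classes in this example can be sketched as a lookup. The mapping below follows the order given in the simulation set-up (urgent traffic on best paths, highly important on loss-tolerant, moderately important on delay-tolerant, less important on non-critical); the fallback cases of Table 2 are not modelled, and the first-hop node numbers 15 and 19 are hypothetical additions to the nodes named in the text.

```python
# Preferred path class per packet priority (1 = urgent ... 4 = less important).
PREFERRED_CLASS = {
    1: "best",
    2: "loss-tolerant",
    3: "delay-tolerant",
    4: "non-critical",
}


def select_paths(priority: int, classified_paths: dict) -> list:
    """Return the first-hop nodes of all paths whose class matches the priority.

    An empty list means no path of the preferred class exists; what the
    source does then is defined by Table 2 and is not modelled here.
    """
    wanted = PREFERRED_CLASS[priority]
    return [first_hop for first_hop, path_class in classified_paths.items()
            if path_class == wanted]


# Paths keyed by first-hop node; nodes 15 and 19 are hypothetical.
classified_paths = {
    10: "best", 6: "best",
    19: "loss-tolerant", 15: "delay-tolerant",
    24: "non-critical", 28: "non-critical",
}

print(select_paths(4, classified_paths))
print(select_paths(1, classified_paths))
```

Keeping the mapping in a table rather than in branching code makes it straightforward to swap in the full set of Table 2 cases.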

Figure 9 shows the fields of the packet structure: SrcID is the source address, DesID is the destination address, Nhop is the next hop, RID is the route ID, and PP is the 2-bit packet priority field that differentiates the priority of each data packet. If the PP field is equal to 11, the packet is urgent. If the PP field is equal to 10, the packet is highly important. If the PP field is equal to 01, the packet is moderately important. If the PP field is equal to 00, the packet is less important. Seq_Number is the sequence number of the packet each time it is sent out, and the Data load field carries the data. Algorithm 2 presents the proposed data forwarding algorithm based on packet priority and path classifier.
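The header fields and the 2-bit PP encoding can be illustrated with a small data class. Only the PP values and their meanings come from the text; the Python field names, the integer types, and the sample values are assumptions, since the field widths other than PP are not stated.

```python
from dataclasses import dataclass

# PP bit patterns and their meaning, as listed for the packet structure.
PP_LABELS = {
    0b11: "urgent",
    0b10: "highly important",
    0b01: "moderately important",
    0b00: "less important",
}


@dataclass
class DataPacket:
    src_id: int       # SrcID: source address
    des_id: int       # DesID: destination address
    nhop: int         # Nhop: next hop
    rid: int          # RID: route ID
    pp: int           # PP: 2-bit packet priority
    seq_number: int   # Seq_Number: sequence number
    data: bytes       # Data load

    def __post_init__(self):
        if self.pp not in PP_LABELS:
            raise ValueError("PP must be a 2-bit value (0 to 3)")

    def priority_label(self) -> str:
        return PP_LABELS[self.pp]


packet = DataPacket(src_id=1, des_id=0, nhop=10, rid=1,
                    pp=0b11, seq_number=7, data=b"alarm")
print(packet.priority_label())
```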

4 Simulation Set-up

This section presents the simulation set-up used to evaluate the performance of the PPSMR method. PPSMR is implemented in the NS-2 network simulator, an object-oriented, discrete event-driven simulator that also supports an energy model and the IEEE 802.11 standard. The performance of the proposed PPSMR, which handles packet priority scheduling and makes efficient data forwarding decisions, is compared with the EQSR scheme. EQSR is a multipath routing scheme for efficient resource utilization and a QoS routing scheme for real-time and non-real-time data traffic. The simulation considers a square region of 500 m × 500 m in which the WSN is deployed randomly. There is one sink node, which has no power constraints and is placed at the centre of the area, and one source node in the network. The packet rate varies from 1 to 3 packets per second, and the number of sensor nodes varies from 50 to 250 in steps of 50. The simulation time is 500 s. Every node has a fixed transmission range of 25 m. The data packet size is 510 bytes.

Four categories of data traffic are considered in the simulation: (t1) urgent data traffic, (t2) highly important data traffic, (t3) moderately important data traffic, and (t4) less important data traffic. These are forwarded over the best path, loss-tolerant path, delay-tolerant path, and non-critical path, respectively. The delay and reliability threshold values are 0.7 s and 0.6 [30], respectively. Every node is assigned the same initial energy of 10 J, which keeps the simulation time within reasonable bounds, and the minimum threshold energy is set at 0.3 J. Every node uses an omnidirectional antenna, adopts IEEE 802.11 with CSMA/CA, and uses a bandwidth of 250 kbps at the MAC layer, as provided in the NS-2 simulator. The energy consumption for transmission and reception is Eelec = 50 nJ/bit. Table 3 shows the standard parameters used in the simulation.
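To give a feel for these parameters, the short calculation below estimates the electronics energy spent on one 510-byte packet and how many such transmissions fit between the initial energy and the minimum threshold. It is a rough sketch under a strong assumption: only the Eelec term is counted, so amplifier, reception, and idle costs, which the text does not quantify, are ignored.

```python
E_ELEC_J_PER_BIT = 50e-9     # Eelec = 50 nJ/bit
PACKET_BYTES = 510           # data packet size
INITIAL_ENERGY_J = 10.0      # initial energy per node
THRESHOLD_ENERGY_J = 0.3     # minimum threshold energy


def electronics_energy(num_bytes: int) -> float:
    """Energy in joules for the radio electronics to handle num_bytes."""
    return num_bytes * 8 * E_ELEC_J_PER_BIT


per_packet_j = electronics_energy(PACKET_BYTES)      # 4080 bits * 50 nJ
usable_j = INITIAL_ENERGY_J - THRESHOLD_ENERGY_J
max_transmissions = int(usable_j / per_packet_j)     # upper bound only

print(per_packet_j, max_transmissions)
```

Under this simplification a single packet costs about 0.2 mJ, so the figure obtained is an upper bound rather than a lifetime prediction.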

4.1 Metrics and Results Analysis

The performance of PPSMR was evaluated under varying node densities and packet rates. Average end-to-end delay, average packet delivery ratio, and average energy consumption are the metrics used to evaluate the performance of the proposed method.

- Average End-to-End Delay

It is defined as the average time between the moment a data packet is transmitted by the source node, \(t_{{{\text{source}}(i)}}\), and the moment the sink receives the data packet, \(t_{{{\text{sink}}(i)}}\). N is the number of successfully received packets. The average end-to-end delay, D, is given in Eq. (5).

\[ D = \frac{1}{N}\sum_{i=1}^{N} \left( t_{{{\text{sink}}(i)}} - t_{{{\text{source}}(i)}} \right) \tag{5} \]

- Packet Delivery Ratio

The packet delivery ratio (PDR) is the ratio of the number of data packets delivered to the destination node to the total number of packets transmitted from the source node. Given that \(D_{{{\text{re}}}}\) is the total number of data packets received at the sink node and \(D_{{{\text{se}}}}\) is the total number of data packets sent from the source node, PDR is as in Eq. (6).

\[ {\text{PDR}} = \frac{D_{{{\text{re}}}}}{D_{{{\text{se}}}}} \tag{6} \]

-

Average Energy Consumption

The average energy consumption is computed over the entire topology and serves as a measure of the network lifetime. It is the average difference between the initial energy level and the final energy level remaining in every node. Let \(E_{i,i}\) be the initial energy level of node i, \(E_{f,i}\) the final energy level of node i, and N the number of nodes in the simulation. The average energy consumption is then given in Eq. (7).
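From these definitions, Eq. (7) can be written as follows. This is a reconstruction from the stated symbols; the name \(E_{\text{avg}}\) for the left-hand side is an assumption, and N here counts nodes rather than packets:

\[ E_{\text{avg}} = \frac{1}{N}\sum_{i=1}^{N}\left(E_{i,i} - E_{f,i}\right) \tag{7} \]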

4.2 Simulation Results in Average End-to-End Delay

The end-to-end delay is the time taken by a data packet to arrive at the destination, and it is a significant metric in evaluating PPSMR and EQSR. As illustrated in Fig. 10, the average packet delay increases for both PPSMR and EQSR as the network size and the packet arrival rate increase. As shown in Fig. 10a, for urgent data traffic with a network size of 250 nodes at 1 packet per second, the end-to-end delay of the proposed PPSMR is 72% lower than that of EQSR. This is because PPSMR considers the energy factor, number of hops, available buffer size, packet reception ratio, and delay factor to select the best next hop during the path construction phase. Moreover, it classifies the paths according to their condition. In contrast, EQSR does not consider the number of hops when selecting nodes, and it has no path classifier. In this evaluation, the packet arrival rate at the source node is varied, and the delay for t1, t2, t3, and t4 data traffic is considered. In addition, Fig. 10 illustrates that PPSMR effectively differentiates network service by giving (t1) urgent data traffic preferential treatment over the other data traffic (t2, t3, t4), so urgent data traffic consistently experiences a low end-to-end delay. However, EQSR performs better than PPSMR for t4, i.e. less important data traffic, because of the overhead introduced by the queuing model. Additionally, the average delay increases as the data rate increases, because PPSMR processes urgent data traffic first, which results in more queuing delay for less important data traffic at every sensor node.

Figure 10b shows that for urgent data traffic with a network size of 250 nodes at 2 packets per second, the average end-to-end delay of the proposed PPSMR is 68% lower than that of EQSR. Figure 10c shows that for urgent data traffic with a network size of 250 nodes at 3 packets per second, the average end-to-end delay of the proposed PPSMR is 65% lower than that of EQSR.
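The effect of serving urgent traffic first can be illustrated with a minimal strict-priority queue in Python. This is a sketch under assumptions: the paper's queuing model is not specified in this section, so the strict-priority discipline, the FIFO tie-breaking within a class, and the names `PRIORITY` and `PriorityScheduler` are hypothetical.

```python
import heapq
import itertools

# Lower number = served first. The ordering t1 > t2 > t3 > t4 follows the text.
PRIORITY = {"t1": 0, "t2": 1, "t3": 2, "t4": 3}


class PriorityScheduler:
    """Strict-priority queue; FIFO among packets of the same class."""

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()  # tie-breaker keeps FIFO order

    def enqueue(self, traffic_class, packet):
        entry = (PRIORITY[traffic_class], next(self._arrival), packet)
        heapq.heappush(self._heap, entry)

    def dequeue(self):
        """Return the next packet to forward, or None if the queue is empty."""
        if not self._heap:
            return None
        _, _, packet = heapq.heappop(self._heap)
        return packet

    def __len__(self):
        return len(self._heap)
```

Under such a discipline a t4 packet that arrives first still waits behind every queued t1, t2, and t3 packet, which is consistent with the higher queuing delay reported for less important traffic.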
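The metric definitions of Sect. 4.1 and the percentage comparisons quoted in this section can be sketched in Python as follows. The function names and the input layout (dictionaries keyed by packet id, lists of per-node energies) are assumptions made for illustration, and the numbers in the usage note are hypothetical rather than simulation results.

```python
def average_delay(send_times, recv_times):
    """Mean of (t_sink - t_source) over the successfully received packets.

    send_times: {packet_id: time sent by the source}
    recv_times: {packet_id: time received by the sink}, received packets only
    """
    if not recv_times:
        raise ValueError("no packets were received")
    delays = [recv_times[pid] - send_times[pid] for pid in recv_times]
    return sum(delays) / len(delays)


def packet_delivery_ratio(received, sent):
    """Number of packets received at the sink divided by packets sent."""
    if sent <= 0:
        raise ValueError("sent must be positive")
    return received / sent


def average_energy_consumption(initial_j, final_j):
    """Mean of (initial - final) energy over all nodes, in joules."""
    if not initial_j or len(initial_j) != len(final_j):
        raise ValueError("need one initial and one final value per node")
    return sum(e0 - e1 for e0, e1 in zip(initial_j, final_j)) / len(initial_j)


def relative_reduction_percent(baseline, proposed):
    """How much lower `proposed` is than `baseline`, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (baseline - proposed) / baseline * 100.0
```

For example, with a hypothetical baseline delay of 1.0 s and a proposed delay of 0.25 s, `relative_reduction_percent(1.0, 0.25)` gives 75.0, which is how a statement such as "75% lower" would be obtained.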

4.3 Simulation Results in Packet Delivery Ratio

The PDR is the ratio of the total number of packets received at the sink node to the total number of packets transmitted from the source node during the simulation time, and it is a significant metric in evaluating PPSMR and EQSR. Figure 11 illustrates the PDR of the different packet types, and Fig. 11a compares the delivery ratio of PPSMR and EQSR. For urgent data traffic with a network size of 250 nodes at a data rate of 1 packet per second, PPSMR achieves a 54% higher PDR than EQSR. This is because PPSMR utilizes the remaining energy, available buffer size of the node, number of hops, packet reception ratio, and delay to select the best next hop during the path construction phase. Moreover, PPSMR splits each packet into a number of equal-sized fragments and spreads them over a number of node-disjoint paths concurrently, so network congestion is avoided. Following the idea of service differentiation, PPSMR uses a queuing model to handle the different data classes. In contrast, EQSR has no path classifier and processes all packets equally, giving identical preference to all data packet types. Consequently, EQSR fails to attain the required reliability within the deadline. Moreover, as shown in Fig. 11, the PDR clearly differs between packet types. The PDR of urgent packets is higher than that of EQSR routing. The PDR of moderately important packets is higher than that of highly important packets, because moderately important packets are forwarded over delay-tolerant paths, while highly important packets are forwarded over loss-tolerant paths. The PDR of highly important packets is higher than that of less important packets.
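The idea of splitting a packet into equal-sized fragments, sending one fragment per node-disjoint path, and reassembling at the sink can be sketched in Python. This is a simplified illustration under assumptions: it uses plain splitting with zero padding and sequence numbers, it adds no redundancy coding, and the function names are hypothetical rather than part of PPSMR.

```python
def split_into_fragments(payload, n_paths):
    """Split `payload` (bytes) into `n_paths` fragments of equal size.

    The payload is zero-padded so that every fragment has the same length.
    Returns a list of (sequence_number, fragment) tuples, one per path.
    """
    if n_paths <= 0:
        raise ValueError("n_paths must be positive")
    frag_size = -(-len(payload) // n_paths)  # ceiling division
    padded = payload.ljust(frag_size * n_paths, b"\x00")
    return [
        (seq, padded[seq * frag_size:(seq + 1) * frag_size])
        for seq in range(n_paths)
    ]


def reassemble(fragments, original_length):
    """Rebuild the payload from fragments that may arrive out of order."""
    ordered = sorted(fragments, key=lambda item: item[0])
    data = b"".join(chunk for _, chunk in ordered)
    return data[:original_length]  # drop the padding
```

Because each fragment carries its sequence number, the sink can restore the original order even when the paths have different delays.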

Figure 11b shows that in the case of urgent data traffic when the network size is 250 nodes at 2 packets per second, the PDR of the proposed PPSMR is 45% higher than EQSR routing. Figure 11c also shows that in the case of urgent data traffic, when the network size is 250 nodes at 3 packets per second, the PDR of the proposed PPSMR is 39% higher than EQSR routing protocol.

4.4 Simulation Results in Average Energy Consumption

Figure 12 illustrates the average energy consumption and shows that PPSMR is more energy efficient than EQSR. The reason is that PPSMR considers the energy factor, number of hops, available buffer size, packet reception ratio, and delay factor to choose the best next hop during the path construction phase. Moreover, PPSMR splits each packet into a number of equal-sized fragments and spreads them over a number of node-disjoint paths; it is able to reassemble the packet, which decreases the number of retransmissions. PPSMR is also able to recover from path failure. In contrast, EQSR does not consider the number of hops when selecting nodes, and it keeps retransmitting a packet after repeated failures even when a node has broken down. Figure 12a shows that PPSMR performs 24% better than EQSR when the number of nodes is 250 at a data rate of 1 packet per second. Figure 12b shows that PPSMR performs 18% better than EQSR when the number of nodes is 250 at 2 packets per second. Furthermore, Fig. 12c shows that PPSMR performs 10% better than EQSR when the number of nodes is 250 at 3 packets per second.
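A next-hop choice that combines the five factors named above can be sketched in Python. This is only an illustration: the normalisation, the equal weighting, the exclusion of nodes at or below the 0.3 J threshold, and all names (`Neighbor`, `score`, `select_next_hop`) are assumptions, since the actual PPSMR cost function is not given in this section.

```python
from dataclasses import dataclass

INITIAL_ENERGY_J = 10.0  # initial node energy from the simulation setup
MIN_ENERGY_J = 0.3       # minimum energy threshold from the simulation setup


@dataclass
class Neighbor:
    node_id: int
    remaining_energy_j: float
    free_buffer_ratio: float  # free buffer / total buffer, in [0, 1]
    prr: float                # packet reception ratio, in [0, 1]
    hops_to_sink: int
    delay_s: float


def score(n, max_hops, max_delay_s):
    """ASSUMPTION: equal-weight average of five normalised factors."""
    energy = n.remaining_energy_j / INITIAL_ENERGY_J
    hops = 1.0 - n.hops_to_sink / max_hops    # fewer hops is better
    delay = 1.0 - n.delay_s / max_delay_s     # lower delay is better
    return (energy + n.free_buffer_ratio + n.prr + hops + delay) / 5.0


def select_next_hop(neighbors):
    """Return the best-scoring neighbour above the energy threshold."""
    eligible = [n for n in neighbors if n.remaining_energy_j > MIN_ENERGY_J]
    if not eligible:
        return None
    max_hops = max(1, max(n.hops_to_sink for n in eligible))
    max_delay_s = max(n.delay_s for n in eligible) or 1.0
    return max(eligible, key=lambda n: score(n, max_hops, max_delay_s))
```

In this sketch a neighbour with little remaining energy is never selected, however good its link is, which mirrors the intent of avoiding nodes that are about to fail.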

5 Conclusion

In this paper, a packet priority scheduling scheme with data forwarding decision in node-disjoint multipath routing for WSN is proposed. PPSMR uses the remaining energy, available buffer size of the node, packet reception ratio, number of hops, and delay to choose the best next hop during the path construction phase from the sink node to the source node, and it classifies the resulting paths according to their condition. In addition, PPSMR adopts packet priority and data forwarding decision: it categorizes the packets into four data classes according to their real-time and reliability requirements, assigns them different priorities, and allows the source node to make the data forwarding decision based on the priority of the data packet and the path classifier in order to choose a suitable path. Finally, PPSMR uses a queuing model to handle packet priority, which is built on the notion of service differentiation. For different numbers of nodes and packet rates per second, the simulation results show that PPSMR achieves lower average delay, lower average energy consumption, and a higher packet delivery ratio than EQSR routing.

References

Chennakesavula P, Ebenezer J, Murty SAVS (2012) Real-time routing protocols for wireless sensor networks: a survey. Proc Fourth Int Workshop Comput Netw Commun (CoNeC) 71(9):141–158

Rashid B, Rehmani MH (2016) Applications of wireless sensor networks for urban areas: a survey. J Netw Comput Appl 60:192–219

Hamid Z, Hussain F (2014) QoS in wireless multimedia sensor networks: a layered and cross-layered approach. Wireless Pers Commun 75(1):729–757

Boulfekhar S, Benmohammed M (2013) A novel energy efficient and lifetime maximization routing protocol in wireless sensor networks. Wireless Pers Commun 72(2):1333–1349

Abdulla AE, Nishiyama H, Ansari N, Kato N (2014) Energy-aware routing for wireless sensor networks. In: The art of wireless sensor networks, 201–234

Kandris D, Tsagkaropoulos M, Politis I, Tzes A, Kotsopoulos S (2011) Energy efficient and perceived QoS aware video routing over wireless multimedia sensor networks. Ad Hoc Netw 9(4):591–607

Kim D, Kim J, Park KH (2011) An event-aware MAC scheduling for energy efficient aggregation in wireless sensor networks. Comput Netw 55(1):225–240

Ben-Othman J, Yahya B (2010) Energy efficient and QoS based routing protocol for wireless sensor networks. J Parallel Distribut Comput 70(8):849–857

Ukani PT, Parikh V (2017) Routing protocols for wireless multimedia sensor networks: challenges and research Issues. In: Information and communication technology for intelligent systems. Springer International Publishing Cham, 157–164

Alwan H, Agarwal A (2013) MQoSR: a multiobjective QoS routing protocol for wireless sensor networks. Int J Sensor Netw 1–12

Al-Ariki HDE, Swamy MS (2017) A survey and analysis of multipath routing protocols in wireless multimedia sensor networks. Wireless Netw 23(6):1823–1835

Rhee S, Choi H-Y, Lee H-J, Park M-S (2014) Power-aware data transmission for real-time communication in multimedia sensor networks. Int J Distrib Sens Netw 2014:1–12

Sun Y, Ma H, Liu L (2007) Traffic scheduling based on queue model with priority for audio/video sensor networks. In: Proceedings of 2nd international conference on pervasive computing and applications (ICPCA), 709–714

Chen J, Díaz M, Llopis L, Rubio B, Troya JM (2011) A survey on quality of service support in wireless sensor and actor networks: requirements and challenges in the context of critical infrastructure protection. J Netw Comput Appl 34(4):1225–1239

Xin Liu Z, Li Dal L, MA K, Ping Guan X (2013) Balance energy-efficient and real-time with reliable communication protocol for wireless sensor network. The J China Univ Posts Telecommun 20(1):37–46

Alwan H, Agarwal A (2013) Multi-objective QoS routing for wireless sensor networks. Int Conf Comput Netw Commun (ICNC), 1074–1079

Chen Y, Nasser N, El Salti T, Zhang H (2010) A multipath QoS routing protocol in wireless sensor networks. Int J Sensor Netw 7(4):207–216

Nasser N, Chen Y (2007) SEEM: secure and energy-efficient multipath routing protocol for wireless sensor networks. Comput Commun 30(11–12):2401–2412

Mitton N, Razafindralambo T, Simplot-Ryl D (2011) Position-based routing in wireless ad hoc and sensor networks. In: Theoretical aspects of distributed computing in sensor networks, 447–477

Cheng L, Niu J, Cao J, Das SK, Gu Y (2014) QoS aware geographic opportunistic routing in wireless sensor networks. Parallel Distribut Syst Trans IEEE 25(7):1864–1875

Huang P, Wang C, Xiao L (2012) Improving end-to-end routing performance of greedy forwarding in sensor networks. Parallel Distribut Syst Trans IEEE 23(3):556–563

Sohrabi K, Gao J, Ailawadhi V, Pottie GJ (2000) Protocols for self-organization of a wireless sensor network. IEEE Personal Commun J 7(5):16–27

He T, Stankovic JA, Lu C, Abdelzaher T (2003) SPEED: a stateless protocol for real-time communication in sensor networks. In: Proceedings of the 23rd IEEE international conference on distributed computing systems. IEEE, Providence, RI, USA, 46–55

Felemban E, Lee CG, Ekici E (2006) MMSPEED: multipath Multi-SPEED protocol for QoS guarantee of reliability and timeliness in wireless sensor networks. IEEE Trans Mob Comput 5(6):738–753

Chen J, Lin R, Li Y, Sun Y (2008) LQER: a link quality estimation based routing for wireless sensor networks. Sensors 8(2):1025–1038

Villaverde BC, Rea S, Pesch D (2009) Multi-objective cross-layer algorithm for routing over wireless sensor networks. In: Proceedings of the 3rd international conference on sensor technologies and applications (SENSORCOMM ’09), 568–574

Jurdak R, Baldi P, Lopes CV (2007) Adaptive low power listening for wireless sensor networks. IEEE Trans Mob Comput 6(8):988–1004

Huang X, Fang Y (2008) Multiconstrained QoS multipath routing in wireless sensor networks. ACM Wireless Netw 14(4):465–478

Bagula AB, Mazandu KG (2008) Energy constrained multipath routing in wireless sensor networks. In: Proceedings of the 5th international conference on ubiquitous intelligence and computing, 453–467

Agrakhed J, Biradar G, Mytri V (2012) Adaptive multi constraint multipath routing protocol in wireless multimedia sensor network. In: Proceedings of the IEEE international conference on computing sciences (ICCS 2012), 326–331

Acknowledgements

Abdulaleem Ali Almazroi would like to thank Dr. MA Ngadi for his support in his study and also would like to thank Northern Border University in Saudi Arabia for their support in his scholarship.


Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

Cite this paper

Ali Almazroi, A., Ngadi, M.A. (2021). Packet Priority Scheduling for Data Delivery Based on Multipath Routing in Wireless Sensor Network. In: Smys, S., Palanisamy, R., Rocha, Á., Beligiannis, G.N. (eds) Computer Networks and Inventive Communication Technologies. Lecture Notes on Data Engineering and Communications Technologies, vol 58. Springer, Singapore. https://doi.org/10.1007/978-981-15-9647-6_5

DOI: https://doi.org/10.1007/978-981-15-9647-6_5

Publisher Name: Springer, Singapore

Print ISBN: 978-981-15-9646-9

Online ISBN: 978-981-15-9647-6

eBook Packages: Engineering, Engineering (R0)