Abstract

Emergence is one of the most essential features of complex systems. It refers to new collective behaviors that arise from the interaction and self-organization of the elements of a system and that cannot be produced by any single unit. In this Chapter we discuss in depth the basic principles, paradigms, and methods for studying emergence in complex systems, based on nonlinear dynamics and statistical physics. We first develop the foundation and treatment of emergent processes in complex systems, and then illustrate emergence dynamics with two typical phenomena. The first example is the emergence of collective sustained oscillation in networks of excitable elements and in gene regulatory networks. We show how network topology gives rise to the collective oscillation. Using the dominant phase-advanced driving method and the function-weight approach, we reveal the fundamental topologies responsible for generating sustained oscillations, such as Winfree loops and motifs, and identify the oscillation core and the propagation paths. In this case, topology reduction is the key step toward a dimension-reduced description of a complex system. The second example is synchronization: in the presence of multiple periodic motions, different rhythmic dynamics compete and cooperate and may eventually produce coherent or synchronous motion. The microdynamics shows a dimension reduction at the onset of synchronization. We introduce statistical methods to explore synchronization in complex systems as a non-equilibrium transition, with a detailed discussion of the Kuramoto self-consistency approach and the Ott-Antonsen ansatz. We then study the synchronization dynamics of star-networked coupled oscillators and give an analytical description of the transitions among various ordered macrostates. Finally, we summarize the paradigms for studying emergence and complex systems.


Keywords

- Emergence

- Order parameter

- Slaving principle

- Self-sustained oscillation

- Winfree loop

- Synchronization

- Complex networks

4.1 Introduction

The core mission of physics is to understand the basic laws of everything in the universe. The Chinese word for “universe” is “宇宙”, which is composed of two characters, “宇” and “宙”, with distinctly different meanings: “宇” means space, and “宙” refers to time. The ancient Chinese philosopher Shi Jiao (尸佼, also known as 尸子, Shi Zi) of the Warring States period (475–221 B.C.) wrote in his book “Shi Zi” that “四方上下曰宇, 往古来今曰宙” (“the four directions together with above and below are called 宇; all times past and present are called 宙”). Similar expressions also appear in other books such as “Wen Zi: the Nature”, “Zhuang Zi: Geng Sang Chu”, “Huai Nan Zi”, and so on [1]. These citations indicate that the mission of physicists is a deep understanding of the basic laws of space and time, or, in other words, of the common and fundamental laws of change embedded in different systems.

A basic paradigm of natural science developed over the past centuries is reductionism. Its essence is that a system is composed of many elements, each of which can be well understood and physically described. As long as the laws governing the elements are clear, the properties of the system are expected to be understood and reconstructed from them. This program was pursued with great success from the eighteenth to the twentieth century, during which the structure of matter, from molecules and atoms to protons, neutrons, electrons, and quarks, was successively revealed [2].

Reductionism encountered a crisis in the first half of the twentieth century. The development of thermodynamics and statistical physics, especially the discovery of phase transitions such as superconductivity and superfluidity, indicated that these behaviors result from the collective macroscopic effect of molecules and atoms and do not occur at the atomic (microscopic) level. Physicists working on statistical physics interpreted the occurrence of a phase transition as symmetry breaking, which is found extensively in antiferromagnets, ferroelectrics, liquid crystals, and condensed matter in many other states. Philip Anderson connected these ideas with the later findings of quasiparticles, e.g. phonons, which are collective excitations and modes organized by interacting atoms [3]. Collective behaviors formed by interacting units in a system, e.g. transitions among different phases, are termed emergence.

The emergent properties of many-body systems imply the failure of reductionism. Anderson asserted:

The ability to reduce everything to simple fundamental laws does not imply the ability to start from those laws and reconstruct the universe… The behavior of large and complex aggregates of elementary particles, it turns out, is not to be understood in terms of a simple extrapolation of the properties of a few particles. Instead, at each level of complexity entirely new properties appear, and the understanding of the new behaviors requires research which I think is as fundamental in its nature as any other.

Nowadays, emergent behaviors are found extensively in living activities, e.g. in neural systems and brains, and in the formation of proteins, DNA, and genes [4]. These phenomena imply that even if one knows everything about an element, the behavior of a system composed of such elements still cannot be simply predicted from the individual properties. Such systems are called complex systems.

When looked at in detail, complex systems share some common properties, such as cooperation or self-organization, emergence, and adaptation [5]. The process by which organized behavior arises in a system without an internal or external controller or leader is called self-organization. Since simple rules produce complex behavior in hard-to-predict ways, the resulting macroscopic behavior of such systems is sometimes called emergence. A complex system is then often defined as a system that exhibits nontrivial emergent and self-organizing behaviors. The central question of the sciences of complexity is how these emergent self-organized behaviors come about [6].

Emergence is one of the most essential features of complex systems. In this Chapter, we will discuss extensively the basic principle, the paradigm, and the methods of emergence in complex systems based on nonlinear dynamics and statistical physics. We develop the foundation and treatment of emergent processes of complex systems, and then exhibit the emergence dynamics by studying two typical phenomena.

The first example is the emergence of collective sustained oscillation in networks of excitable elements and gene regulatory networks. We show the significance of network topology in leading to the collective oscillation. By using the dominant phase-advanced driving method and the function-weight approach, fundamental topologies responsible for generating sustained oscillations such as Winfree loops and motifs are revealed, and the oscillation core and the propagating paths are identified. In this case, the topology reduction is the key procedure in accomplishing the dimension-reduction description of a complex system.

In the presence of multiple periodic motions, different rhythmic dynamics compete and cooperate and may eventually produce coherent or synchronous motion. The microdynamics shows a dimension reduction at the onset of synchronization. We will introduce statistical methods to explore the synchronization of complex systems as a non-equilibrium transition, and give a detailed discussion of the Kuramoto self-consistency approach and the Ott-Antonsen ansatz. We will then study the synchronization dynamics of star-networked coupled oscillators and give an analytical description of the transitions among various ordered macrostates.

Finally, based on the above discussions, we will summarize the paradigms for studying emergence and complex systems.

4.2 Emergence: Research Paradigms

Emergence refers to the self-organized behavior of a complex system, which occurs under non-equilibrium conditions. This collective feature comes from the cooperation of elements through coupling and cannot be observed at the microscopic level. To reveal emergent dynamics at the macroscopic level, scientists have proposed various theories from microscopic, statistical, and macroscopic viewpoints.

4.2.1 Entropy Analysis and Dissipative Structure

Let us first discuss the possibility of self-organization in non-equilibrium systems from the viewpoint of thermodynamics and statistical physics. This implicitly requires an open system that can exchange matter, energy, and information with its environment, as shown in Fig. 4.1. We focus on the entropy change \(dS\) of an open system during a process. One may decompose the total entropy change \(dS\) into the sum of two contributions:

\[ dS = d_{i} S + d_{e} S, \tag{4.1} \]

where \(d_{i} S\) is the entropy production due to irreversible processes inside the system, and \(d_{e} S\) is the entropy flux due to exchanges with the environment. The second law of thermodynamics implies that

\[ d_{i} S \ge 0, \tag{4.2} \]

where \(d_{i} S = 0\) holds only at thermal equilibrium. If the system is isolated, \(d_{e} S = 0\) and one has \(dS = d_{i} S \ge 0\). In the presence of exchanges with the environment, \(d_{e} S \ne 0\). When such an open system reaches a non-equilibrium steady state (so that \(d_{i} S > 0\)), the total entropy change vanishes, \(dS = 0\), which leads to

\[ d_{e} S = - d_{i} S < 0. \tag{4.3} \]

This means that if there exists a sufficient amount of negative entropy flow, the system can be expected to maintain an ordered configuration. Prigogine and colleagues thus claimed that “Nonequilibrium may be a source of order”, which forms the basis of the dissipative structure theory [7].

The emergence of dissipative structures depends on how far the system deviates from equilibrium. In the small-deviation (linear) regime, the system stays on the thermodynamic branch and the principle of minimum entropy production applies; this regime is well described by theories such as linear response theory and the fluctuation-dissipation theorem. When the system is driven so far from equilibrium that the thermodynamic branch becomes unstable, new branches corresponding to dissipative structures may emerge and replace it [7, 8]. This can be described mathematically in terms of dynamical systems theory [9, 10].

Denote the macrostate of a complex system by \(\vec{u}(t) = (u_{1}, u_{2}, \ldots, u_{n})\); its evolution can be described by

\[ \frac{d\vec{u}}{dt} = \vec{f}\left(\vec{u}, \boldsymbol{\varepsilon}\right), \tag{4.4} \]

where \(\vec{f} = (f_{1}, f_{2}, \ldots, f_{n})\) is a vector of nonlinear functions and \(\boldsymbol{\varepsilon}\) is a set of control parameters. Equation (4.4) is usually a set of coupled nonlinear equations that can be analyzed with the theory of dynamical systems; the stability of possible states and their bifurcations have been studied exhaustively over the past decades. Readers can refer to any textbook on nonlinear dynamics and chaos for details [11, 12].

Now consider spatial effects, i.e. \(u = u(\boldsymbol{r}, t)\). The simplest spatial effect in physics is diffusion, described by Fick’s law as a proportionality between the flux and the concentration gradient:

\[ \boldsymbol{J} = -D \nabla u, \tag{4.5} \]

where \(D\) is the diffusion coefficient. Combined with the conservation of matter, this gives the governing equation of motion

\[ \frac{\partial u}{\partial t} = f\left(u, \boldsymbol{\varepsilon}\right) + D \nabla^{2} u. \tag{4.6} \]

Equation (4.6) is called the reaction–diffusion equation. Alan Turing proposed this equation and the related mechanism in 1952 as a possible chemical basis of biological morphogenesis [13]. The reaction term \(f(u, \boldsymbol{\varepsilon})\) gives the local dynamics, which is the source of spatial inhomogeneity, while the diffusion term tends to erase spatial differences of the state \(u\) and is thus the source of spatial homogeneity. Both mechanisms appear on the right-hand side of Eq. (4.6) and compete, resulting in a self-organized state. Equation (4.6) and related dynamical systems have been explored extensively over the past decades, and rich spatiotemporal patterns and dynamics have been revealed. Readers can consult related reviews and monographs for more information [14,15,16].
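The competition between the two terms of the reaction–diffusion equation can be made concrete with a few lines of code. The following is only a sketch under our own assumptions, not the method used in this chapter: one spatial dimension, zero-flux boundaries, an explicit forward-Euler finite-difference scheme, and a hypothetical logistic reaction term \(f(u, \varepsilon) = \varepsilon u(1-u)\). With \(f = 0\), diffusion alone conserves the total amount of \(u\) and flattens an initial bump; with the hypothetical logistic kinetics switched on, the local reaction, not diffusion, sets the final state (here the whole domain saturates at \(u = 1\)).

```python
# Sketch: explicit finite differences for du/dt = f(u, eps) + D d2u/dx2 in 1D.
# Assumptions (not from the text): zero-flux boundaries, forward Euler,
# and a hypothetical logistic reaction term.


def laplacian_zero_flux(u, dx):
    """Discrete 1D Laplacian; ghost cells copy the boundary values (zero flux)."""
    n = len(u)
    lap = []
    for i in range(n):
        left = u[i - 1] if i > 0 else u[i]
        right = u[i + 1] if i < n - 1 else u[i]
        lap.append((left - 2.0 * u[i] + right) / dx**2)
    return lap


def simulate(u0, f, eps=0.0, D=0.2, dt=1.0, dx=1.0, steps=500):
    """Integrate the reaction-diffusion equation with forward Euler."""
    # The explicit scheme is stable only if D*dt/dx**2 <= 1/2.
    if D * dt / dx**2 > 0.5:
        raise ValueError("time step too large for the explicit scheme")
    u = list(u0)
    for _ in range(steps):
        lap = laplacian_zero_flux(u, dx)
        u = [ui + dt * (f(ui, eps) + D * li) for ui, li in zip(u, lap)]
    return u


def no_reaction(u, eps):
    return 0.0


def logistic(u, eps):
    # Hypothetical local kinetics: growth toward u = 1 at rate eps
    return eps * u * (1.0 - u)


u0 = [1.0 if 20 <= i < 30 else 0.0 for i in range(50)]   # a localized bump
u_diff = simulate(u0, no_reaction)
u_rd = simulate(u0, logistic, eps=0.1)

print("total u, before/after diffusion:", sum(u0), round(sum(u_diff), 6))
print("spread after diffusion:", round(max(u_diff) - min(u_diff), 3))
print("minimum with logistic kinetics:", round(min(u_rd), 3))
```

The zero-flux ghost cells make the discrete fluxes telescope, so the pure-diffusion run conserves the total amount of \(u\) up to round-off; the step-size check guards the usual stability limit of the explicit scheme.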

4.2.2 Slaving Principles and the Emergence of Order Parameters

The study of dissipative structures is, in fact, largely based on the dynamics of macroscopic state variables, so it is very important to select these variables appropriately. Since they are required to reveal the emergence of dissipative structures, they should act as order parameters, similar to those in studies of phase transitions. The concept of the order parameter was first introduced in statistical physics and thermodynamics to describe the emergence of order and the transitions of a thermodynamic system among different macroscopic phases [17,18,19]. It has since been naturally extended to non-equilibrium situations to reveal order out of equilibrium.

Haken and his collaborators proposed synergetics, which focuses on the conditions, features, and evolution laws of self-organization in complex systems with a large number of degrees of freedom, in which the drive of external parameters and the interaction between subsystems lead to spatially, temporally, or functionally ordered structures on a macroscopic scale [20, 21]. The core of synergetics is the slaving principle, which reveals how order parameters emerge from a large number of degrees of freedom through competition and cooperation. As the system approaches the critical point, only a small number of modes (variables) with slow relaxation dominate the macroscopic behavior of the system and characterize its degree of order; these are called the order parameters. The large number of fast-changing modes are governed (slaved) by the order parameters and can be eliminated adiabatically. This yields the basic equations of the order parameters, whose low-dimensional evolution can then be used to study the emergence of various non-equilibrium states, their stability, and their bifurcations or transitions.

To clarify the emergence of order parameters, let us first take a simple two-dimensional nonlinear dynamical system as an example. Consider the following nonlinear differential equations for two variables \((u(t), s(t))\):

\[ \dot{u} = \alpha u - u s, \tag{4.7a} \]

\[ \dot{s} = -\beta s + u^{2}, \tag{4.7b} \]

where \(\alpha\) and \(\beta\) are the linear coefficients, with \(\beta > 0\). Let us take \(\alpha\) as the control parameter. When \(\alpha < 0\), the stationary solution \((u, s) = (0, 0)\) is stable. When \(\alpha\) is tuned slightly above 0, i.e. \(0 < \alpha \ll 1\), the solution \((u, s) = (0, 0)\) becomes unstable, and the new solution

\[ (u_{0}, s_{0}) = \left(\pm\sqrt{\alpha\beta},\ \alpha\right) \tag{4.8} \]

emerges and remains stable. Because the new stable solution (4.8) is still close to (0, 0), Eq. (4.7b) can be solved approximately by integration:

\[ s(t) = \int_{-\infty}^{t} e^{-\beta (t-\tau)} u^{2}(\tau)\, d\tau = \frac{u^{2}(t)}{\beta} - \frac{2}{\beta} \int_{-\infty}^{t} e^{-\beta (t-\tau)} u(\tau)\,\dot{u}(\tau)\, d\tau. \tag{4.9} \]

The first expression is the integral form, and the second follows from integration by parts. Since \(0 < \alpha \ll 1\), the variables \(u, s, \dot{u}, \dot{s}\) are all small but of different orders. A simple scaling analysis gives

\[ u \sim \alpha^{1/2}, \quad s \sim \alpha, \quad \dot{u} \sim \alpha^{3/2}, \quad \dot{s} \sim \alpha^{2}. \tag{4.10} \]

Therefore \(|\dot{u}| \ll |u|\) when \(\alpha \ll 1\), and the second term in the second expression of (4.9) is a higher-order term that can be neglected. One thus obtains the approximate relation

\[ s(t) \approx \frac{u^{2}(t)}{\beta}. \tag{4.11} \]

Comparing (4.11) with (4.7b), one easily finds that this result is equivalent to setting

\[ \dot{s} = 0 \tag{4.12} \]

in (4.7b), which gives \(s(t) = u^{2}(t)/\beta\). Substituting it into (4.7a), one obtains

\[ \dot{u} = \alpha u - \frac{u^{3}}{\beta}. \tag{4.13} \]

This is a one-dimensional dynamical equation and can be easily solved.
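The reduction from two variables to one can be checked numerically. The sketch below assumes the two-variable model \(\dot u = \alpha u - us\), \(\dot s = -\beta s + u^{2}\) (the form consistent with the slaved relation \(s = u^{2}/\beta\)), integrates it with a hand-written classical Runge–Kutta scheme, and compares it with the one-dimensional order-parameter equation \(\dot u = \alpha u - u^{3}/\beta\). The parameter values, step size, and initial condition are arbitrary illustrative choices.

```python
import math


def rk4(f, y, dt, steps):
    """Classical 4th-order Runge-Kutta for dy/dt = f(y); returns the whole trajectory."""
    traj = [list(y)]
    for _ in range(steps):
        k1 = f(y)
        k2 = f([a + 0.5 * dt * k for a, k in zip(y, k1)])
        k3 = f([a + 0.5 * dt * k for a, k in zip(y, k2)])
        k4 = f([a + dt * k for a, k in zip(y, k3)])
        y = [a + dt / 6.0 * (p + 2 * q + 2 * r + w)
             for a, p, q, r, w in zip(y, k1, k2, k3, k4)]
        traj.append(y)
    return traj


alpha, beta = 0.01, 1.0          # 0 < alpha << 1, beta > 0 (illustrative values)


def full(y):
    u, s = y
    return [alpha * u - u * s,    # slow, linearly unstable mode (assumed form of Eq. 4.7a)
            -beta * s + u * u]    # fast, linearly stable mode (assumed form of Eq. 4.7b)


def reduced(y):
    u = y[0]
    return [alpha * u - u**3 / beta]   # s slaved to u: s = u^2 / beta


dt, steps, u0 = 0.5, 4000, 0.01
full_traj = rk4(full, [u0, u0**2 / beta], dt, steps)
red_traj = rk4(reduced, [u0], dt, steps)

u_end, s_end = full_traj[-1]
max_gap = max(abs(a[0] - b[0]) for a, b in zip(full_traj, red_traj))
print(f"u(T) = {u_end:.5f}   sqrt(alpha*beta) = {math.sqrt(alpha * beta):.5f}")
print(f"s(T) - u(T)^2/beta = {s_end - u_end**2 / beta:.1e}")
print(f"max |u_full - u_reduced| over the run = {max_gap:.1e}")
```

Along the whole run the reduced trajectory stays close to the full one, and the fast variable ends on the slaved curve \(s = u^{2}/\beta\), which is exactly what adiabatic elimination predicts.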

The proposition (4.12) is called the adiabatic elimination principle, a commonly used approximation in applied mathematics. This approximation has a profound physical meaning. Eq. (4.12) indicates that, under this condition, the variable \(u(t)\) is a slowly varying, linearly unstable mode called the slow variable, while \(s(t)\) is a fast-changing, linearly stable mode called the fast variable. The essence of (4.12) is that, near the critical point, the fast mode \(s(t)\) relaxes so quickly that it always keeps up with the change of the slow mode \(u(t)\), so the fast variable can be regarded as a function of the slow variable, as shown in (4.11). In other words, the slow mode dominates the evolution of the system, and the fast mode can be removed by adiabatic elimination, leaving only the equation of the slow mode. Therefore, the slow mode determines the dynamical tendency of the system (4.7) in the vicinity of the critical point and can be identified as the order parameter. The emergence of order parameters in a complex system is the central point of the slaving principle proposed by Hermann Haken [21].

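The above discussion exhibits a typical competition between two modes in a two-dimensional dynamical system. The insight embedded in the slaving principle can be naturally extended to complex systems with a large number of competing modes. Consider an n-dimensional dynamical system \(\dot{\boldsymbol{x}} = \boldsymbol{F}(\alpha, \boldsymbol{x})\), where \(\alpha\) is the control parameter. Near the critical point \(\boldsymbol{x} = 0\), the equations of motion can be written in the canonical form

\[ \dot{\boldsymbol{x}} = \mathbf{A}\boldsymbol{x} + \boldsymbol{B}(\boldsymbol{x}, \alpha), \tag{4.14} \]

where \(\boldsymbol{x} = (x_{1}, x_{2}, \ldots, x_{n})^{T}\) is the n-dimensional state vector, \(\mathbf{A} = \mathbf{A}(\alpha)\) is the \(n \times n\) Jacobian matrix, and \(\boldsymbol{B}(\boldsymbol{x}, \alpha)\) is an n-dimensional vector of the nonlinear terms in \(\boldsymbol{x}\). The eigenvalues \(\{\lambda_{i}(\alpha)\}\) of \(\mathbf{A}\) are arranged in descending order of their real parts:

\[ \mathrm{Re}\,\lambda_{1}(\alpha) \ge \mathrm{Re}\,\lambda_{2}(\alpha) \ge \cdots \ge \mathrm{Re}\,\lambda_{n}(\alpha). \]

Assume that \(\boldsymbol{x} = 0\) is a stable solution of (4.14) in a certain parameter regime of \(\alpha\), i.e. the real parts of all eigenvalues are negative:

\[ \mathrm{Re}\,\lambda_{i}(\alpha) < 0, \quad i = 1, 2, \ldots, n. \]

Now tune \(\alpha\) through a critical value \(\alpha_{c}\) at which \(\mathrm{Re}\,\lambda_{1}\) changes sign from negative to positive,

\[ \mathrm{Re}\,\lambda_{1}(\alpha_{c}) = 0, \qquad \mathrm{Re}\,\lambda_{1}(\alpha) > 0 \ \text{for} \ \alpha > \alpha_{c}, \]

while the other eigenvalues \(\{\mathrm{Re}\,\lambda_{2}, \mathrm{Re}\,\lambda_{3}, \ldots, \mathrm{Re}\,\lambda_{n}\}\) remain negative. In this case the solution \(\boldsymbol{x} = 0\) becomes unstable. Assuming \(\mathbf{A}\) is diagonalizable, we introduce the linear transformation matrix \(\mathbf{T}\) whose columns are the eigenvectors of the Jacobian \(\mathbf{A}\), so that the transformed matrix is diagonal:

\[ \mathbf{T}^{-1}\mathbf{A}\mathbf{T} = \mathrm{diag}(\lambda_{1}, \lambda_{2}, \ldots, \lambda_{n}). \]

To distinguish \(\lambda_{1}\) from the other eigenvalues, we relabel them as

\[ \lambda_{u} \equiv \lambda_{1}, \qquad \lambda_{s_{i}} \equiv \lambda_{i+1}, \quad i = 1, 2, \ldots, n-1, \]

where \(\mathrm{Re}\,\lambda_{s_{i}} < 0\). The state vector \(\boldsymbol{x}\) is correspondingly transformed to

\[ \begin{pmatrix} u \\ \boldsymbol{s} \end{pmatrix} = \mathbf{T}^{-1}\boldsymbol{x}, \]

and Eqs. (4.14) can be rewritten as

\[ \dot{u} = \lambda_{u} u + \tilde{B}_{u}(u, \boldsymbol{s}), \tag{4.20a} \]

\[ \dot{\boldsymbol{s}} = \boldsymbol{\Lambda}_{s} \boldsymbol{s} + \tilde{\boldsymbol{B}}_{s}(u, \boldsymbol{s}), \tag{4.20b} \]

where \(\boldsymbol{\Lambda}_{s} = \mathrm{diag}(\lambda_{s_{1}}, \ldots, \lambda_{s_{n-1}})\), \((\tilde{B}_{u}, \tilde{\boldsymbol{B}}_{s})^{T} = \mathbf{T}^{-1}\boldsymbol{B}\), and \(\boldsymbol{s}\) is the (n − 1)-dimensional vector \(\boldsymbol{s} = (s_{1}, s_{2}, \ldots, s_{n-1})^{T}\).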
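For a two-dimensional linear part, the transformation matrix \(\mathbf{T}\) and the split into one slow eigenvalue (called \(\lambda_{u}\) here) and one fast eigenvalue (\(\lambda_{s}\)) can be written out explicitly. The sketch below uses a hypothetical Jacobian \(\mathbf{A}(\alpha) = \begin{pmatrix}\alpha & 1\\ 0.5 & -2\end{pmatrix}\), chosen only because \(\det\mathbf{A} = -2\alpha - 0.5\) vanishes at \(\alpha_{c} = -0.25\), so that \(\lambda_{u}\) crosses zero there while \(\lambda_{s}\) stays negative. It builds \(\mathbf{T}\) from the eigenvectors and checks that \(\mathbf{T}^{-1}\mathbf{A}\mathbf{T}\) is diagonal; it only handles real, distinct eigenvalues.

```python
import math


def mat_mul(X, Y):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def inverse(M):
    """Inverse of a 2x2 matrix."""
    (p, q), (r, s) = M
    det = p * s - q * r
    return [[s / det, -q / det], [-r / det, p / det]]


def eigen_split(A):
    """Eigenvalues in descending order and eigenvector matrix T for a 2x2 matrix.

    Only handles real, distinct eigenvalues and A[0][1] != 0 (true for the example).
    """
    (a, b), (c, d) = A
    tr, det = a + d, a * d - b * c
    disc = tr * tr - 4.0 * det
    if disc <= 0 or b == 0:
        raise ValueError("sketch only covers real, distinct eigenvalues with A[0][1] != 0")
    root = math.sqrt(disc)
    lam_u, lam_s = (tr + root) / 2.0, (tr - root) / 2.0   # Re lam_u >= Re lam_s
    # Column (b, lam - a) solves (A - lam*I) v = 0 when b != 0
    T = [[b, b], [lam_u - a, lam_s - a]]
    return lam_u, lam_s, T


def jacobian(alpha):
    # Hypothetical A(alpha): det A = -2*alpha - 0.5 vanishes at alpha_c = -0.25
    return [[alpha, 1.0], [0.5, -2.0]]


results = {}
for alpha in (-0.5, -0.25, 0.1):
    A = jacobian(alpha)
    lam_u, lam_s, T = eigen_split(A)
    Lam = mat_mul(inverse(T), mat_mul(A, T))      # should equal diag(lam_u, lam_s)
    results[alpha] = (lam_u, lam_s, Lam)
    off = max(abs(Lam[0][1]), abs(Lam[1][0]))
    print(f"alpha={alpha:+.2f}  lam_u={lam_u:+.4f}  lam_s={lam_s:+.4f}  off-diag={off:.1e}")
```

Below \(\alpha_{c}\) both eigenvalues are negative, at \(\alpha_{c}\) the slow one is zero, and above it only \(\lambda_{u}\) is positive: the single-mode instability assumed in the derivation.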

By this procedure one separates the slow mode \(u(t)\) from the other variables through the transformation above, and the remaining variables \(\{s_{i}(t), i = 1, 2, \ldots, n-1\}\) are fast variables satisfying Eq. (4.20b). For the same reason as in the two-dimensional case, one can apply adiabatic elimination to (4.20b),

\[ \dot{\boldsymbol{s}} = 0, \tag{4.21} \]

which leads to the following \(n-1\) equations:

\[ \boldsymbol{\Lambda}_{s} \boldsymbol{s} + \tilde{\boldsymbol{B}}_{s}(u, \boldsymbol{s}) = 0. \tag{4.22} \]

The fast variables \(\boldsymbol{s}\) can be solved (at least in principle) from the \(n-1\) equations (4.22) as functions of the slow variable \(u\), \(\boldsymbol{s} = \boldsymbol{s}(u)\). Inserting \(\boldsymbol{s}(u)\) into Eq. (4.20a), one obtains

\[ \dot{u} = \lambda_{u} u + \tilde{B}_{u}\big(u, \boldsymbol{s}(u)\big). \tag{4.23} \]

This is the one-dimensional equation of motion of the order parameter \(u\), which is much easier to analyze.
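The same elimination works with several fast variables. In the sketch below the nonlinear terms are a hypothetical choice, \(\dot u = \alpha u - u\sum_{i} s_{i}\) and \(\dot s_{i} = -\beta_{i} s_{i} + u^{2}\), so that the linear part is already diagonal (slow eigenvalue \(\alpha\), fast eigenvalues \(-\beta_{i}\)) and Eq. (4.22) can be solved by hand, \(s_{i}(u) = u^{2}/\beta_{i}\). The full four-dimensional system and the reduced one-dimensional order-parameter equation are integrated side by side with a hand-written Runge–Kutta step; all values are illustrative.

```python
import math

alpha = 0.01                # slightly above the critical value alpha_c = 0
betas = [1.0, 2.0, 5.0]     # hypothetical decay rates of the fast modes


def full(y):
    u, s = y[0], y[1:]
    du = alpha * u - u * sum(s)                          # slow mode, cf. Eq. (4.20a)
    ds = [-b * si + u * u for b, si in zip(betas, s)]     # fast modes, cf. Eq. (4.20b)
    return [du] + ds


def slaved(u):
    """Solve Eq. (4.22) for this model: ds_i/dt = 0  =>  s_i = u^2 / beta_i."""
    return [u * u / b for b in betas]


def reduced(y):
    u = y[0]
    return [alpha * u - u * sum(slaved(u))]               # order-parameter equation, cf. Eq. (4.23)


def rk4_step(f, y, dt):
    k1 = f(y)
    k2 = f([a + 0.5 * dt * k for a, k in zip(y, k1)])
    k3 = f([a + 0.5 * dt * k for a, k in zip(y, k2)])
    k4 = f([a + dt * k for a, k in zip(y, k3)])
    return [a + dt / 6.0 * (p + 2 * q + 2 * r + w)
            for a, p, q, r, w in zip(y, k1, k2, k3, k4)]


dt, steps, u0 = 0.2, 10000, 0.01
y_full, y_red, max_gap = [u0] + slaved(u0), [u0], 0.0
for _ in range(steps):
    y_full, y_red = rk4_step(full, y_full, dt), rk4_step(reduced, y_red, dt)
    max_gap = max(max_gap, abs(y_full[0] - y_red[0]))

u_star = math.sqrt(alpha / sum(1.0 / b for b in betas))   # fixed point of the reduced equation
print(f"u_full(T)={y_full[0]:.5f}  u_reduced(T)={y_red[0]:.5f}  u*={u_star:.5f}")
print(f"max |u_full - u_reduced| = {max_gap:.2e}")
```

Three fast variables are slaved to one order parameter: the reduced equation reproduces the slow trajectory and its final state, while each \(s_{i}\) settles on \(u^{2}/\beta_{i}\).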

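When the first \(m > 1\) eigenvalues \(\{\lambda_{1}, \lambda_{2}, \ldots, \lambda_{m}\}\) are degenerate, i.e. they have the same real part,

\[ \mathrm{Re}\,\lambda_{1} = \mathrm{Re}\,\lambda_{2} = \cdots = \mathrm{Re}\,\lambda_{m}, \tag{4.24} \]

these m modes may lose their stability simultaneously at the critical point, and all of them are slow modes, with eigenvalues \(\boldsymbol{\lambda}_{u} = (\lambda_{1}, \lambda_{2}, \ldots, \lambda_{m})\). In this case \(u\) and \(\tilde{B}_{u}\) in (4.23) should be replaced by vectors:

\[ \boldsymbol{u} = (u_{1}, u_{2}, \ldots, u_{m})^{T}, \quad \boldsymbol{s} = (s_{1}, s_{2}, \ldots, s_{n-m})^{T}, \quad \boldsymbol{\Lambda}_{u} = \mathrm{diag}(\lambda_{1}, \ldots, \lambda_{m}), \tag{4.25} \]

and the equations of motion are rewritten as

\[ \dot{\boldsymbol{u}} = \boldsymbol{\Lambda}_{u} \boldsymbol{u} + \tilde{\boldsymbol{B}}_{u}(\boldsymbol{u}, \boldsymbol{s}), \tag{4.26a} \]

\[ \dot{\boldsymbol{s}} = \boldsymbol{\Lambda}_{s} \boldsymbol{s} + \tilde{\boldsymbol{B}}_{s}(\boldsymbol{u}, \boldsymbol{s}). \tag{4.26b} \]

The functions \(\boldsymbol{s}(\boldsymbol{u})\) are obtained by adiabatic elimination, i.e. by setting \(\dot{\boldsymbol{s}} = 0\) in (4.26b). Inserting \(\boldsymbol{s}(\boldsymbol{u})\) into (4.26a) turns Eq. (4.23) into m-dimensional equations of motion:

\[ \dot{\boldsymbol{u}} = \boldsymbol{\Lambda}_{u} \boldsymbol{u} + \tilde{\boldsymbol{B}}_{u}\big(\boldsymbol{u}, \boldsymbol{s}(\boldsymbol{u})\big). \tag{4.27} \]

These are the equations of motion of the order parameters \(\boldsymbol{u} = (u_{1}, u_{2}, \ldots, u_{m})^{T}\). Comparing Eq. (4.27) with Eq. (4.26a), one finds that the two equations have the same form. However, they are essentially different: in Eq. (4.26), the variables \(\boldsymbol{s}\) are independent functions of time t, while in Eq. (4.27) they are functions of the slow variables \(\boldsymbol{u}\), so the number of degrees of freedom of (4.27) is considerably smaller than that of (4.26). In practice, only a very small portion of modes lose their stability at a critical point, so the procedure from Eqs. (4.20) to (4.23) and (4.27) can be regarded as a reduction from high-dimensional dynamics to low-dimensional dynamics governed by only a few order parameters. This is a great dynamical simplification and an important contribution of the slaving principle near the critical point.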

The slaving principle is closely related to the center manifold theorem of dynamical systems theory. Center-manifold reduction is a commonly used method of dimensionality reduction, suitable for studying autonomous dynamical systems near a bifurcation. It exploits the fact that, at the equilibrium, the center manifold is tangent to the center subspace spanned by the eigenvectors whose eigenvalues have zero real part, and it derives the equations of the system restricted to this manifold. For high-dimensional dynamical systems it is difficult to analyze bifurcations directly, so, to grasp the nature of the problem, the center manifold theorem is generally used to reduce the system to lower-dimensional equations.

4.2.3 Networks: Topology and Dynamics

The rise of network science is a great milestone in the exploration of complexity. Early studies of networks started from graph theory in mathematics, which can be traced back to Euler's work on the Königsberg bridge problem. Graph theory proposed a number of useful concepts and laws for analyzing sets composed of vertices and edges. Although the study of networks originates in graph theory, today's network science is an interdisciplinary field covering subjects from physics, chemistry, and biology to economics and even the social sciences. The topics of network science have thus expanded rapidly with the development of science and technology, and contributions from these various disciplines have greatly enriched the field.

An important mission of network science is to give a common understanding of various complex systems from the viewpoint of topology. Behind this mission lies the issue of reducing complexity on the basis of network theory and dynamics. Nowadays, large amounts of data can easily be obtained from complex systems with various measurement techniques. Extracting useful information from these data is essentially a process of reduction, i.e. getting at the truth by discarding redundant information. To perform such an effective reduction of a complex system from its microdynamics, a crucial starting point is to identify an appropriate microscopic description. Two ingredients are indispensable when studying the microdynamics: the unit dynamics and the coupling patterns of the units in a system. We now discuss these two points.

Different systems are composed of units with different properties; this is the fundamental viewpoint of reductionism. For example, a drop of water contains roughly \(10^{21}\) H\(_{2}\)O molecules, the human brain is composed of ~\(10^{11}\) neurons, and heart tissue is composed of ~\(10^{10}\) cardiac cells. These units clearly work with different mechanisms and are described by distinct dynamics, and exploring their mechanisms has been a central topic. For example, a single neuron or cardiac cell works in an accumulate-and-fire manner and can be mimicked by a nonlinear electronic circuit. This dynamical feature is described by a number of excitable models, e.g. the Hodgkin-Huxley model, the FitzHugh-Nagumo model, and so on.
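The excitable behavior of a single unit mentioned above is easy to see numerically. The sketch below uses the FitzHugh-Nagumo equations \(\dot v = v - v^{3}/3 - w + I\), \(\dot w = \epsilon(v + a - bw)\) with commonly used textbook parameters \(a = 0.7\), \(b = 0.8\), \(\epsilon = 0.08\), \(I = 0\) (our assumption, not values from this chapter), integrated with forward Euler. A small kick away from rest simply decays, whereas a kick beyond the threshold triggers one large excursion (a spike) before the unit returns to rest; the isolated unit does not oscillate.

```python
# FitzHugh-Nagumo unit; parameter values are common textbook choices (assumed).
A, B, EPS, I_EXT = 0.7, 0.8, 0.08, 0.0


def fhn(v, w):
    """Right-hand side of the FitzHugh-Nagumo equations."""
    return v - v**3 / 3.0 - w + I_EXT, EPS * (v + A - B * w)


def rest_state():
    """Unique fixed point, found by bisection where the two nullclines intersect."""
    def g(v):
        return v - v**3 / 3.0 - (v + A) / B + I_EXT   # zero at the nullcline crossing
    lo, hi = -2.0, 0.0            # g(lo) > 0 > g(hi) and g is decreasing for these parameters
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        if g(mid) > 0:
            lo = mid
        else:
            hi = mid
    v = 0.5 * (lo + hi)
    return v, (v + A) / B


def peak_after_kick(kick, dt=0.01, t_end=100.0):
    """Perturb v at rest by `kick`, integrate with forward Euler, return the maximum of v."""
    v, w = rest_state()
    v += kick
    peak = v
    for _ in range(round(t_end / dt)):
        dv, dw = fhn(v, w)
        v, w = v + dt * dv, w + dt * dw
        peak = max(peak, v)
    return peak


v_rest, w_rest = rest_state()
small, large = peak_after_kick(0.2), peak_after_kick(0.8)
print(f"rest state: v={v_rest:.3f}, w={w_rest:.3f}")
print(f"peak v after kick 0.2: {small:.3f}; after kick 0.8: {large:.3f}")
```

The all-or-none response to the two kicks is the excitability that networks of such units must turn into sustained collective oscillation.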

The second ingredient is the modeling of interactions among units. At the particle level, the forces among quarks, elementary particles, atoms, and molecules are quite different, and exploring these interactions is an important task for physicists. Outside physics, interactions are also system dependent. For example, the relations between individuals in a society are complicated and depend strongly on the type of information exchanged between them.

When a system contains a very large number of individuals, something remarkable happens: the specific forms of the unit dynamics and coupling functions sometimes matter, but in many cases they are not essential. A typical situation is one in which the coupling topology is more important than the specific form of the coupling function. Emergence occurs when macroscopic behaviors appear in a complex system that do not occur at the level of the individual units. Recent studies of complex networks, stimulated by the milestone works on small-world [22] and scale-free networks [23], provide a powerful platform for studying complex systems. For example, an important topic is to explore the network properties of biological systems such as gene, DNA, metabolic, and neural networks, of social and ecological networks, and of the WWW and the Internet. Another important topic is the study of dynamical processes on networks, such as synchronization, propagation processes, and network growth. Interested readers may refer to related monographs and reviews for more on network science [24,25,26,27,28,29,30].

4.3 Emergence of Rhythms

4.3.1 Biological Rhythms: An Introduction

Biological rhythms are a long-standing question and are found ubiquitously in living systems [31]. The 2017 Nobel Prize in Physiology or Medicine was awarded to J. C. Hall, M. Rosbash, and M. W. Young for their discoveries of molecular mechanisms controlling the circadian rhythm [32, 33]. Circadian rhythms are driven by an internal biological clock that anticipates day-night cycles to optimize the physiology and behavior of organisms. The exploration of how circadian rhythms emerge from the microscopic (e.g. molecular or genetic) level opened a new era of studies on biological oscillations [34] (Fig. 4.2).

Fig. 4.2 De Mairan's observation of the rhythmic daily opening and closing of mimosa leaves. a Under the normal sunlight-dark cycle. b In constant darkness. The environment-independent opening and closing implies an endogenous origin of the daily rhythm. (Adapted from [33])

Observations that organisms adapt their physiology and behavior to the time of day in a circadian fashion have been recorded for a long time. Very early observations of leaf and flower movements in plants, for example, the leaves of mimosa plants closing at night and opening during the day, pointed to interesting biological clocks. In 1729, the French scientist de Mairan observed that the leaves of a mimosa plant kept in the dark still opened and closed rhythmically at the appropriate times of day, implying an endogenous origin of the daily rhythm rather than a response to an external stimulus [35].

The genetic mechanism responsible for the emergence of circadian rhythms was first explored by S. Benzer and R. Konopka starting in the 1960s. In 1971, their pioneering work identified mutants of the fruit fly Drosophila that displayed alterations in the normal 24-h cycle of pupal eclosion and locomotor activity; the affected gene was named period (PER) [36]. Later, Hall and Rosbash at Brandeis University [37] and Young at Rockefeller University [38] isolated and molecularly characterized the period gene (Fig. 4.3).

Fig. 4.3 a A simplified illustration of the biochemical process of the circadian clock. b The transcription-translation feedback loop (TTFL): the transcription of period and its partner gene timeless (TIM) is repressed by the PER and TIM proteins, generating a self-sustained oscillation. (Adapted from [33])

Further studies by Young, Hardin, Hall, Rosbash, and Takahashi revealed that the molecular mechanism of the circadian clock relies not on a single gene but on the so-called transcription-translation feedback loop (TTFL): the transcription of period and its partner gene timeless (TIM) is repressed by the PER and TIM proteins, generating a self-sustained oscillation [39,40,41,42]. These explorations led to numerous further studies that revealed a series of interlocked TTFLs together with a complex network of reactions. These involve regulated protein phosphorylation and degradation of TTFL components, protein complex assembly, nuclear translocation, and other post-translational modifications, generating oscillations with a period of approximately 24 h. Circadian oscillators within individual cells respond differently to entraining signals and control various physiological outputs, such as sleep patterns, body temperature, hormone release, blood pressure, and metabolism. The seminal discoveries by Hall, Rosbash, and Young revealed a crucial physiological mechanism explaining circadian adaptation, with important implications for human health and disease.

Essentially, the biological rhythms that exist ubiquitously in biological systems are, physically, oscillatory behaviors, i.e. manifestations of temporally periodic dynamics of nonlinear systems. Moreover, rhythmic phenomena are widely observed in many other situations, ranging from physics and chemistry to biology [6, 43]. Therefore, these oscillatory behaviors, despite their completely different backgrounds, can be studied universally in the framework of nonlinear dynamics.

4.3.1.1 Self-sustained Oscillation in Simple Nonlinear Systems

Self-sustained oscillation, also called limit-cycle oscillation, is a typical time-periodic nonlinear behavior and has received much attention throughout the past century [44]. The essential mechanism of sustained oscillation is feedback in nonlinear systems, but the source of the feedback differs strongly from one situation to another.

First, a balance between competing positive and negative feedback can maintain a stable oscillation. In oscillators such as the classical Van der Pol oscillator, an amplitude-dependent damping provides the key mechanism of energy compensation that sustains a stable limit cycle: the damping is positive and dissipates energy when the amplitude is large, and becomes negative and pumps energy into the system when the amplitude is small. Stability analysis clearly reveals the dynamical mechanism of the limit-cycle motion, and there are many historical examples and models describing such oscillatory dynamics.
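The effect of amplitude-dependent damping can be probed numerically with the Van der Pol equation \(\ddot x - \mu(1 - x^{2})\dot x + x = 0\). In the sketch below (our own illustration; \(\mu = 1\), the initial conditions, and the step size are arbitrary choices), trajectories started well inside and well outside the limit cycle settle onto the same oscillation, whose amplitude is close to 2.

```python
def van_der_pol(state, mu=1.0):
    """First-order form of x'' - mu (1 - x^2) x' + x = 0."""
    x, y = state
    # Effective damping -mu (1 - x^2): negative (energy pumped in) for |x| < 1,
    # positive (energy dissipated) for |x| > 1.
    return [y, mu * (1.0 - x * x) * y - x]


def rk4_step(f, y, dt):
    k1 = f(y)
    k2 = f([a + 0.5 * dt * k for a, k in zip(y, k1)])
    k3 = f([a + 0.5 * dt * k for a, k in zip(y, k2)])
    k4 = f([a + dt * k for a, k in zip(y, k3)])
    return [a + dt / 6.0 * (p + 2 * q + 2 * r + s)
            for a, p, q, r, s in zip(y, k1, k2, k3, k4)]


def late_amplitude(x0, dt=0.01, t_end=100.0, t_transient=50.0):
    """Max |x| after the transient, starting from (x0, 0)."""
    y, amp = [x0, 0.0], 0.0
    for n in range(round(t_end / dt)):
        y = rk4_step(van_der_pol, y, dt)
        if (n + 1) * dt > t_transient:
            amp = max(amp, abs(y[0]))
    return amp


inside, outside = late_amplitude(0.1), late_amplitude(4.0)
print(f"amplitude from x0 = 0.1: {inside:.3f}; from x0 = 4.0: {outside:.3f}")
```

Small oscillations grow because of the negative damping near \(x = 0\), large ones shrink because of the positive damping for \(|x| > 1\), and both end on the same attracting cycle.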

Let us study a simple nonlinear system with a limit cycle oscillation by adopting the following complex dynamical equation:

where \(z\left(t\right)=x\left(t\right)+\text{i}y(t)\) is a complex order parameter. We use “i” to denote the imaginary index throughout this chapter. Physically the order parameter \(z\left(t\right)\) can be obtained via the reduction procedure by eliminating the fast modes. Equation (4.28) presents a two-dimensional dynamics in real space, and the possible time-dependent solution of this equation is the limit cycle.

To obtain an analytical solution, it is convenient to introduce the polar coordinates.

and Eq. (4.28) can be decomposed into the following two-dimensional equations of the phase and the amplitude that are uncoupled to each other:

The phase Eq. (4.29a) shows that the phase rotates uniformly with angular velocity ω. For \(\lambda >0\), the amplitude Eq. (4.29b) has two stationary solutions: the unstable solution \(r=0\) and the stable solution \({r}_{0}=\sqrt{\lambda /b}\). Combining the stable amplitude with the uniformly rotating phase gives the periodic solution of Eq. (4.28),

\[z\left(t\right)=\sqrt{\lambda /b}\,{e}^{\text{i}\left(\omega t+{\theta }_{0}\right)},\]

where \({\theta }_{0}\) is an arbitrary initial phase.

This is a stable, attracting limit cycle: any initial state other than \(z=0\) relaxes to this solution. The stability of this sustained oscillation results from the competition between the positive feedback term \(\lambda r\) and the negative feedback term \(-b{r}^{3}\).
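The relaxation onto the limit cycle can be checked numerically. The sketch below assumes that Eq. (4.28) has the standard Stuart-Landau form \(\dot{z}=(\lambda +\text{i}\omega )z-b{|z|}^{2}z\), which is consistent with Eqs. (4.29a, b), and uses illustrative values λ = 1, ω = 2, b = 0.5; the function names are hypothetical. Runs started near the origin and far outside should both reach the amplitude \(\sqrt{\lambda /b}=\sqrt{2}\) and rotate with phase velocity ω.

```python
import cmath
import math


def stuart_landau_rhs(z, lam, omega, b):
    # Assumed form of Eq. (4.28): dz/dt = (lambda + i*omega) z - b |z|^2 z
    return (lam + 1j * omega) * z - b * abs(z) ** 2 * z


def simulate(z0, lam=1.0, omega=2.0, b=0.5, dt=1e-3, t_end=30.0, t_measure=5.0):
    """RK4 integration; returns the final z and the mean phase velocity over the last t_measure."""
    z = complex(z0)
    n_steps = int(round(t_end / dt))
    n_measure = int(round(t_measure / dt))
    phase_gain = 0.0
    for n in range(n_steps):
        k1 = stuart_landau_rhs(z, lam, omega, b)
        k2 = stuart_landau_rhs(z + 0.5 * dt * k1, lam, omega, b)
        k3 = stuart_landau_rhs(z + 0.5 * dt * k2, lam, omega, b)
        k4 = stuart_landau_rhs(z + dt * k3, lam, omega, b)
        z_new = z + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
        if n >= n_steps - n_measure:
            # per-step phase increment; omega * dt is far below pi, so no wrapping issue
            phase_gain += cmath.phase(z_new / z)
        z = z_new
    return z, phase_gain / (n_measure * dt)


r0 = math.sqrt(1.0 / 0.5)  # predicted amplitude sqrt(lambda / b) for the default parameters
results = {z0: simulate(z0) for z0 in (0.01, 3.0)}
for z0, (z_final, omega_est) in results.items():
    print(f"start {z0}: |z| = {abs(z_final):.6f} (r0 = {r0:.6f}), phase velocity = {omega_est:.6f}")
```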

The second source of feedback is the collaboration of units in a complex system, which is our focus here. In the following, we study the collective oscillation of a population of interacting non-oscillatory units. Because no unit oscillates on its own, the feedback that sustains the oscillation must arise collectively from the interactions among units.

Self-sustained oscillation in a complex system of many units is a typical emergent phenomenon arising from more complicated competition and self-organization, and its mechanism has attracted great interest in recent years [45,46,47]. It is therefore interesting and important to explore the mechanism of oscillatory dynamics when these units interact, and to study how many non-oscillatory nodes organize themselves to produce a collective oscillation [48].

4.3.1.2 Self-sustained Oscillations in Complex Systems

Research on self-sustained oscillations in complex systems has been largely motivated by extensive studies in systems biology. Non-oscillatory units, such as gene segments and neurons, are ubiquitous in biological systems, and collective oscillations built from them have been extensively studied, e.g., in gene-regulatory networks [46, 49,50,51,52,53] and in neural networks and brains [54,55,56,57,58,59].

Identifying the key determinants of collective oscillations is a great challenge. It is not easy to apply the slaving principle of synergetics directly to extract the order parameters governing self-sustained oscillations in such complex systems. Recent progress has revealed that some fundamental topologies or sub-networks may dominate the emergence of sustained oscillations. Although the collective self-sustained oscillation emerges from the organization of all units, only a small number of key units form typical building blocks and play the dominant role in generating the collective dynamics. Thus, key topologies composed of a small proportion of units may give rise to a collective oscillation, while most other units act as slaves. We call these organizing centers the self-organization core or the oscillation source.

Theoretically, diverse self-sustained oscillatory activities and the mechanisms determining them have been reported in different kinds of excitable complex networks. One-dimensional Winfree loops were found to support self-sustained target-wave patterns in excitable networks [60, 61]. Center nodes and small skeletons that sustain target-wave-like patterns were also identified in excitable homogeneous random networks [62,63,64]. The mechanism of long-period rhythmic synchronous firing in excitable scale-free networks has been explored to explain temporal information processing in neural systems [65].

On the other hand, node dynamics on biological networks differ greatly from system to system. For example, gene dynamics is entirely different from excitable dynamics, and the network structures differ as well. Some fundamental building blocks in gene-regulatory networks were found to support sustained oscillations, and interesting chaotic dynamics and their mechanisms have also been studied [66,67,68].

The main task of this section is to reveal the key topology of the organizing center, namely the oscillation core, together with the propagation paths. We first introduce two useful methods, the dominant phase-advanced driving method and the functional-weight approach, and then apply them to extract the key topologies from the dynamics.

4.3.2 The Dominant Phase-Advanced Driving (DPAD) Method

An important step in revealing the coordination of units is to explore the core structure and dynamics in the self-organization of a large number of non-oscillatory units. DPAD is a dynamical method that identifies, for each target node, the neighbor that drives it most strongly when the system is in an oscillatory state. Here we briefly recall the DPAD method [60,61,62,63,64].

Consider a network of N nodes with non-oscillatory local dynamics described by well-defined coupled ordinary differential equations, with M (M > N) links among the nodes. We are interested in the situation in which the system displays a global self-sustained oscillation, so that all nodes, though individually non-oscillatory, become oscillatory. Our aim is to identify the mechanism supporting the oscillation from the network topology and the oscillation time series of each node.

Let us first clarify the significance of nodes in a network with sustained oscillations by comparing their phase dynamics. The oscillation of an individually non-oscillatory node must be driven by signals from one or more neighbors with advanced phases, provided a phase variable can be properly defined. We call such a signal a phase-advanced driving (PAD). Among all phase-advanced interactions of a node, the one giving the most significant contribution is defined as its dominant phase-advanced driving (DPAD). Based on this idea, the DPAD of each node can be identified. Through this network reduction, the original oscillatory high-dimensional network of N nodes and M links (interactions) is reduced to an effectively one-dimensional unidirectional network of the same N nodes with \({M}^{\prime}\) unidirectional dominant phase-advanced interactions.

Figure 4.4a illustrates the DPAD. The black/solid curve is the time series of the reference node. For a given network, all nodes linked directly to the reference node are examined. Suppose three such neighbors have the time series shown by the green/dashed, blue/dotted and red/dash-dotted curves, respectively. The green/dashed curve lags behind the reference curve and is therefore called a phase-lagged oscillation. The blue/dotted and red/dash-dotted curves lead the reference and can drive its excitation, so both are identified as PADs. The blue/dotted curve oscillates earliest and makes the most significant contribution; it is therefore the DPAD.
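The DPAD selection rule can be sketched in a few lines for excitable nodes that fire once per period. The rule used here is an assumption for illustration, not the exact criterion of Refs. [60,61,62,63,64]: neighbor j is counted as phase-advanced with respect to node i if it fires earlier by less than half a period, and the DPAD is the phase-advanced neighbor that fires earliest, as for the blue/dotted curve in Fig. 4.4a. The function name, the toy network and the firing times are hypothetical.

```python
def find_dpad(firing_times, neighbors, period):
    """Dominant phase-advanced driver of each node (sketch).

    firing_times: {node: firing time within one period}
    neighbors:    {node: iterable of linked nodes}
    Assumption: neighbor j is phase-advanced to i if it fired earlier by
    less than half a period; the DPAD is the phase-advanced neighbor that
    fired earliest, i.e. with the largest lead.
    """
    dpad = {}
    for i, t_i in firing_times.items():
        best_j, best_lead = None, 0.0
        for j in neighbors[i]:
            lead = (t_i - firing_times[j]) % period
            if 0.0 < lead < 0.5 * period and lead > best_lead:
                best_j, best_lead = j, lead
        if best_j is not None:
            dpad[i] = best_j
    return dpad


# Toy network: a ring 0-1-2-3-4-0 plus a branch 2-5-6 (undirected links).
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0), (2, 5), (5, 6)]
neighbors = {k: [] for k in range(7)}
for p, q in edges:
    neighbors[p].append(q)
    neighbors[q].append(p)

# Hypothetical firing times: a pulse circulates 0 -> 1 -> 2 -> 3 -> 4 (period 10)
# and leaks into the branch from node 2.
firing_times = {0: 0.0, 1: 2.0, 2: 4.0, 3: 6.0, 4: 8.0, 5: 5.0, 6: 6.0}
dpad = find_dpad(firing_times, neighbors, period=10.0)
print(dpad)
```

Each node keeps only one incoming link, so the undirected toy network with 7 links becomes a directed structure with one driver per node.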

(Adapted from Ref. [63])

a A schematic plot of the DPAD. For comparison, the reference oscillatory time series, an ordinary PAD and a phase-lagged node are also shown. b An example of a simplified (unidirectional) network obtained with the DPAD scheme.

Figure 4.4b illustrates a DPAD structure consisting of one loop, with the remaining nodes branching out from the loop. For excitable node dynamics, as discussed below, the red nodes form a unidirectional loop that acts as the oscillation source, and the yellow nodes outside the loop form the paths along which the oscillation propagates.

The DPAD structure reveals the dynamical relationships between nodes. From this functional structure, we can identify loops as oscillation sources and trace the wave propagation along the various branches. These ideas apply generally, across diverse fields, to self-sustained oscillations in complex networks of individually non-oscillatory nodes.
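Because each node has at most one dominant driver, following drivers backwards from any node in a DPAD structure either stops at a node without a driver or enters a loop, which makes loops and propagation branches easy to separate. The sketch below (function name hypothetical) takes a DPAD map such as the one for a 5-node ring with a 2-node branch, returns the loops in firing order, and gives the distance of each branch node from the loop that feeds it.

```python
def dpad_loops_and_trees(dpad):
    """Decompose a DPAD map {node: driver} into loops and tree branches.

    Returns (loops, depth): each loop is listed in firing order (each node
    drives the next, and the last drives the first); depth[n] is the number
    of DPAD links between tree node n and the loop that feeds it, or None
    if its chain of drivers never reaches a loop.
    """
    status = {}  # absent = unvisited, 1 = on the current walk, 2 = finished
    loops = []
    for start in dpad:
        walk, node = [], start
        while node in dpad and node not in status:
            status[node] = 1
            walk.append(node)
            node = dpad[node]
        if status.get(node) == 1:
            # the walk came back onto itself: a new loop (reversed into firing order)
            loops.append(walk[walk.index(node):][::-1])
        for n in walk:
            status[n] = 2
    in_loop = {n for loop in loops for n in loop}

    depth = {}
    for n in dpad:
        if n in in_loop:
            continue
        steps, m = 0, n
        while m in dpad and m not in in_loop:
            m = dpad[m]
            steps += 1
        depth[n] = steps if m in in_loop else None
    return loops, depth


# Toy DPAD map {node: driver}: ring 0 -> 1 -> 2 -> 3 -> 4 -> 0 plus branch 2 -> 5 -> 6
dpad = {0: 4, 1: 0, 2: 1, 3: 2, 4: 3, 5: 2, 6: 5}
loops, depth = dpad_loops_and_trees(dpad)
print(loops, depth)
```

The loop nodes are the candidate oscillation source, and the depth of a branch node is the number of propagation steps the wave needs to reach it.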

4.3.3 The Functional-Weight (FW) Approach

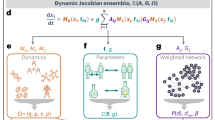

The DPAD approach above analyzes the phase relations embedded in dynamical data to unveil the functional connections between units. In some cases, however, the detailed dynamical equations of the coupled system are available. We start from the following dynamical system of N units, \({\varvec{x}}=({x}_{1},{x}_{2},\dots ,{x}_{N})\):

\[{\dot{x}}_{i}={\lambda }_{i}{x}_{i}+{f}_{i}\left({\varvec{x}}\right),\quad i=1,2,\dots ,N, \tag{4.30}\]

where \({x}_{i}\) is the state variable of the i-th node (for simplicity, each node is taken to be one-dimensional). The linear term is separated from the nonlinear coupling function \({f}_{i}\left({\varvec{x}}\right)\) on the right-hand side of Eq. (4.30). Since we aim to explore the topological mechanism of collective oscillation in networks of non-oscillatory units, the linear coefficients \({\lambda }_{i}\) are negative, and we set \({\lambda }_{i}=-1\) without loss of generality.

The functional-weight (FW) approach rests on a simple but solid idea: since no node in the network can oscillate on its own, the oscillation of any node i (the target node) is caused by the interactions from its neighbors, represented by \({f}_{i}\left({\varvec{x}}\right)\) in Eq. (4.30). However, the inputs from all neighbors are mixed together nonlinearly in \({f}_{i}\left({\varvec{x}}\right)\). To measure the importance of each neighbor for the oscillation of the target node, their contributions must be separated. The cross differential force between the i-th and the j-th nodes can be measured by

where the total driving force is expressed as a simple summation of the contributions of all its neighbors.

We emphasize that the derivative \(\partial {f}_{i}({\varvec{x}})/\partial {x}_{j}\), rather than \({f}_{i}({\varvec{x}})\) itself, plays the crucial role in generating the oscillation, because the amount of variation of the target node i caused by the variation of a given neighbor j determines the functional driving relationship from j to i. At time t, the weight of the contribution of the j-th node can be computed from Eq. (4.32) as

which is simply the Jacobian element \(\partial {f}_{i}/\partial {x}_{j}\) at time t, weighted by \({\dot{x}}_{j}\) and normalized over the neighbors of node i. The overall weight is obtained by integrating over a time interval T:

For periodic oscillatory dynamics,

where T is the period of the oscillation. The quantity \({w}_{ij}\) represents the weight of neighbor j in driving the target node i to oscillate and serves as a quantitative measure of the importance of the link from node j to node i. \({w}_{ij}\) is non-negative and normalized as \({\sum }_{j=1,j\ne i}^{N}{w}_{ij}=1\). A zero or small \({w}_{ij}\) indicates no or a weak functional interaction, while a large \({w}_{ij}\) (close to unity) denotes strong or dominant driving [66,67,68].
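The FW computation can be sketched with NumPy for a sampled trajectory. Since the equations for the instantaneous and integrated weights are not reproduced above, the form used here is an assumption consistent with the description: \({w}_{ij}\) is taken as the time integral of \(|\partial {f}_{i}/\partial {x}_{j}\,{\dot{x}}_{j}|\), normalized by the sum over all neighbors j ≠ i. The coupling \({f}_{i}=\tanh ({\sum }_{j}{W}_{ij}{x}_{j})\), the matrix W and the prescribed sinusoidal trajectory are hypothetical, chosen only to exercise the weighting step; the trajectory is not a solution of Eq. (4.30).

```python
import numpy as np


def functional_weights(traj, dt, jacobian):
    """Time-integrated functional weights w[i, j] of links j -> i (sketch).

    traj:     array of shape (n_samples, N), one period of x(t) sampled at step dt
    jacobian: callable returning the N x N matrix df_i/dx_j at a state x
    Assumed form: w_ij = int |J_ij xdot_j| dt / sum_k int |J_ik xdot_k| dt
    """
    xdot = np.gradient(traj, dt, axis=0)          # finite-difference velocities
    n = traj.shape[1]
    acc = np.zeros((n, n))
    for x, v in zip(traj, xdot):
        contrib = np.abs(jacobian(x) * v[np.newaxis, :])  # |J_ij * xdot_j|
        np.fill_diagonal(contrib, 0.0)                     # self-terms excluded
        acc += contrib * dt
    row_sums = acc.sum(axis=1, keepdims=True)
    # normalize each row; rows without any input stay zero
    return np.divide(acc, row_sums, out=np.zeros_like(acc), where=row_sums > 0)


# Hypothetical coupling f_i(x) = tanh(sum_j W_ij x_j), so df_i/dx_j = (1 - tanh^2) W_ij
W = np.array([[0.0, 2.0, 0.5],
              [1.0, 0.0, 0.0],
              [0.0, 3.0, 0.0]])


def jacobian(x):
    s = np.tanh(W @ x)
    return (1.0 - s**2)[:, np.newaxis] * W


# Prescribed periodic signal; nodes 1 and 2 share the same phase.
T, n_samples = 1.0, 1000
t = np.arange(n_samples) * (T / n_samples)
phases = np.array([np.pi / 3, 0.0, 0.0])
traj = np.sin(2 * np.pi * t[:, np.newaxis] / T + phases)
w = functional_weights(traj, T / n_samples, jacobian)
print(np.round(w, 3))
```

Nodes 1 and 2 each have a single input, so their only weight is 1. Node 0 receives two identical signals through couplings 2 and 0.5, so its weight splits 0.8 : 0.2.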

In the following, we focus on self-sustained oscillations in excitable networks and in gene regulatory networks. Both types of systems share a common feature: only a small number of units are essential for the global oscillation, and some fundamental structures play the dominant role in giving rise to the oscillatory behavior, although the organizing cores differ between the two types of networks.

4.3.4 Self-sustained Oscillation in Excitable Networks

4.3.4.1 Oscillation Sources and Wave Propagations

We first take excitable dynamics as a representative example to reveal the mechanism of global oscillations. Cooperation among units leads to an ordered dynamical topology that maintains the oscillatory process. In regular media, the oscillation core of a spiral wave is a self-organized topological defect. Loop topology has also been found to be significant in maintaining self-sustained oscillation. Jahnke and Winfree proposed the dispersion relation in the Oregonator model [68], and Courtemanche et al. studied the stability of pulse propagation in 1D chains [69]. A loop of excitable nodes that can produce self-sustained oscillations is called a Winfree loop.

It is not difficult to see why a loop topology is a basic structural ingredient of collective oscillation in a network of non-oscillatory units. An excitable node in a sustained oscillatory state must be driven by other nodes, and a simple way to maintain such driving is a closed loop of interacting nodes. A local excitation generates a pulse that propagates along the loop and drives the other nodes in turn; when the pulse returns, the starting node, having recovered, is excited again, which forms a feedback of repeated driving. The oscillation on the loop can further spread to nodes outside the loop and throughout the system, and this propagation gives rise to wave patterns.

Loop structures are ubiquitous in real networks and play an important role in network dynamics. Recurrent excitation has been proposed as the mechanism supporting self-sustained oscillations in neural networks. The DPAD method can reveal the oscillation source and the underlying dynamical structure of self-sustained waves in networks of excitable nodes. In complex networks, numerous local regular connections coexist with some long-range links; the former play an important role in target-wave propagation, while the latter are crucial for maintaining the self-sustained oscillations.

We use the Bär-Eiswirth model [60] to describe the excitable dynamics on an Erdős–Rényi (ER) random network [29]. The network dynamics is described by

Variables \({u}_{i}(t)\) and \({v}_{i}(t)\) describe the activator and the inhibitor dynamics of the i-th node, respectively. The function \(f(u)\) takes the following piecewise form:

The relaxation parameter \(\varepsilon \ll 1\) is the ratio of the time scales of the activator u and the inhibitor v. The dimensionless parameters a and b characterize the local activator kinetics, and the ratio \({u}_{T}= b/a\) effectively controls the excitation threshold. D is the coupling strength between linked nodes, and \(\mathbf{A}=\{{A}_{i,j}\}\) is the adjacency matrix. For a symmetric, bidirectional network, \({A}_{i,j}={A}_{j,i}=1\) if nodes i and j are connected, and \({A}_{i,j}={A}_{j,i}=0\) otherwise.
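Because the model equations are not reproduced above, the sketch below assumes the standard Bär-Eiswirth form with diffusive coupling in u: \({\dot{u}}_{i}=-{\varepsilon }^{-1}{u}_{i}({u}_{i}-1)({u}_{i}-({v}_{i}+b)/a)+D{\sum }_{j}{A}_{ij}({u}_{j}-{u}_{i})\) and \({\dot{v}}_{i}=f({u}_{i})-{v}_{i}\), with \(f(u)=0\) for \(u<1/3\), \(1-6.75u{(u-1)}^{2}\) for \(1/3\le u\le 1\), and 1 for \(u>1\). This form is consistent with the threshold \({u}_{T}=b/a\) and the rest state u = v = 0 described in the text, but it should be checked against Ref. [60]. Function names and parameter values are illustrative only.

```python
import numpy as np


def f_inhibitor(u):
    """Piecewise inhibitor production f(u) (assumed standard Bär-Eiswirth form)."""
    u = np.asarray(u, dtype=float)
    middle = 1.0 - 6.75 * u * (u - 1.0) ** 2
    return np.where(u < 1.0 / 3.0, 0.0, np.where(u <= 1.0, middle, 1.0))


def bar_eiswirth_rhs(u, v, A, a, b, eps, D):
    """Right-hand side of the networked model (sketch; diffusive coupling in u assumed).

    du_i/dt = -(1/eps) u_i (u_i - 1) (u_i - (v_i + b)/a) + D sum_j A_ij (u_j - u_i)
    dv_i/dt = f(u_i) - v_i
    """
    local = -u * (u - 1.0) * (u - (v + b) / a) / eps
    coupling = D * (A @ u - A.sum(axis=1) * u)
    return local + coupling, f_inhibitor(u) - v


# Illustrative parameter values only (not taken from the chapter).
params = dict(a=0.84, b=0.07, eps=0.04, D=0.3)
A = np.array([[0, 1, 1],
              [1, 0, 1],
              [1, 1, 0]])
rest = np.zeros(3)
du, dv = bar_eiswirth_rhs(rest, rest, A, **params)  # rest state u = v = 0

# Isolated node: a perturbation below the threshold u_T = b / a decays, one above it grows.
A0 = np.zeros((1, 1))
u_T = params["b"] / params["a"]
du_below, _ = bar_eiswirth_rhs(np.array([0.5 * u_T]), np.zeros(1), A0, **params)
du_above, _ = bar_eiswirth_rhs(np.array([0.5]), np.zeros(1), A0, **params)
print(du, dv, du_below, du_above)
```

The sign change of \({\dot{u}}\) across \({u}_{T}\) is what makes each node excitable but not oscillatory on its own.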

We study the random network shown in Fig. 4.5a as a typical example. Without coupling, each excitable node is non-oscillatory: it evolves asymptotically to the rest state u = v = 0 and stays there unless an external force drives it away. When a sufficiently large stimulus kicks a node out of its rest state, the node undergoes an excitation through its own internal excitable dynamics.

(Adapted from Ref. [63])

a An example random network with N = 100 nodes, in which every node has the same degree \(k=3\). b, c The spatiotemporal patterns of two different oscillatory states in the network of (a), obtained from different initial conditions. Both patterns show the evolution of the local variable u, with nodes arranged spatially by their indices i. d The DPAD structure of the oscillatory state in (b), where a loop and multiple chains are identified. e The DPAD structure of the oscillatory state in (c), consisting of two independent subgraphs, each containing a loop and numerous chains.

For the given network structure and parameters, we study the dynamics starting from different sets of random initial conditions. In many cases the system evolves asymptotically to the homogeneous rest state. However, a small fraction of runs eventually exhibit global self-sustained oscillations. The spatiotemporal patterns in Figs. 4.5b, c are two different examples of these periodic, self-sustained oscillatory states.

The DPAD approach unveils the mechanism supporting the oscillations and the excitation propagation paths. Figures 4.5d, e plot the reduced directed networks obtained by applying the DPAD method to the oscillatory dynamics. For the dynamics in Fig. 4.5b, a single dynamical loop plays the role of the oscillation source, with the nodes in the loop excited sequentially to maintain the self-sustained oscillation (Fig. 4.5d). Waves propagate downstream along several tree branches rooted at various nodes in the loop. If we plot the spatiotemporal dynamics along these paths, re-arranging the node indices according to the sequence in the loop, we obtain regular, perfect wave propagation patterns, indicating that the DPAD structure faithfully captures the wave propagation paths. For the dynamics in Fig. 4.5c, the DPAD structure in Fig. 4.5e is a superposition of two sub-DPAD structures, i.e., there are two organizing loop centers: two sustained oscillations are produced in two loops and propagate along their trees.

The DPAD structures in Figs. 4.5d, e clearly reveal the distinctive significance of some units in the oscillation, which cannot be seen in Figs. 4.5b, c, where units evolve on a homogeneous, randomly coupled network and no unit is topologically privileged. Because the unidirectional loop acts as the oscillation source, the units in the loop contribute more to the oscillation than the others.

4.3.4.2 Minimum Winfree Loop and Self-sustained Oscillations

Studies of excitable networks show that regular self-sustained oscillations can emerge. However, whether an intrinsic mechanism determines these oscillations remains unclear. For example, for Erdős–Rényi (ER) networks, whether the connection probability is related to sustained oscillations is an open question.

In this section, we study how the occurrence of sustained oscillation depends on the connection probability in excitable ER random networks, and find that the minimum Winfree loop (MWL) is the intrinsic mechanism determining the emergence of collective oscillations. Furthermore, sustained oscillation occurs most often at an optimal connection probability (OCP), which is in one-to-one correspondence with the MWL length. This relation can be understood because both the interval of connection probabilities that supports oscillations and the OCP are determined by the MWL. These quantities can be approximately predicted by network-structure analysis and have been verified in numerical simulations [70].

We adopt the Bär-Eiswirth model above on ER networks with N nodes. Each pair of nodes is connected with a given probability P, so the expected total number of connections is \(PN(N-1)/2\). For a given P, many random networks with different detailed topologies can be generated.
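Generating such an ensemble is straightforward. The sketch below (function name hypothetical) draws each pair i < j independently with probability P, symmetrizes the result, and checks over many realizations that the mean number of links approaches \(PN(N-1)/2\).

```python
import numpy as np


def er_adjacency(n, p, rng):
    """Symmetric 0/1 adjacency matrix of an Erdős–Rényi graph G(n, p)."""
    upper = np.triu(rng.random((n, n)) < p, k=1)  # each pair i < j kept with probability p
    return (upper | upper.T).astype(int)


rng = np.random.default_rng(seed=0)
n, p = 100, 0.05
edge_counts = [er_adjacency(n, p, rng).sum() // 2 for _ in range(200)]
mean_edges = float(np.mean(edge_counts))
print(f"mean edges: {mean_edges:.1f}, expected P N (N - 1) / 2 = {p * n * (n - 1) / 2}")
```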

We introduce the oscillation proportion

\[{P}_{os}=\frac{{N}_{os}}{{N}_{ALL}}\]

as the order parameter to quantify the influence of system parameters on self-sustained oscillations in random networks, where \({N}_{ALL}\) is the total number of runs from random initial conditions for each parameter set, and \({N}_{os}\) is the number of these \({N}_{ALL}\) runs that end in self-sustained oscillation.

Figures 4.6a–d show the dependence of the oscillation proportion \({P}_{os}\) on the connection probability P for different parameters a, b, ε and D on ER random networks with \(N=100\) nodes. All curves show that the system exhibits self-sustained oscillation only within a certain range of the connection probability, with no oscillations at very small or very large P. Moreover, there is an OCP \(P={P}_{OCP}\) that best supports self-sustained oscillations on ER random networks. The number of self-sustained oscillations increases with the parameter a (Fig. 4.6a), while \({P}_{os}\) decreases as b increases (Fig. 4.6b); the OCP itself is independent of a and b. Figure 4.6c shows that \({P}_{os}\) decreases markedly as the relaxation parameter ε increases, whereas increasing the coupling strength D enhances sustained oscillation (Fig. 4.6d).

(Adapted from Ref. [70])

The dependence of the oscillation proportion \({P}_{os}\) on the connection probability P at different system parameters in excitable ER random networks. The OCPs \({P}_{OCP}\) for supporting self-sustained oscillations in ER networks at different parameters are indicated.

The non-trivial dependence of collective oscillations on parameters such as the connection probability P is very interesting. As discussed above, an excitable wave propagating along an excitable loop forms a 1D Winfree loop, which serves as the oscillation source and maintains self-sustained oscillation in excitable complex networks. Figure 4.7a shows the dependence of the period T of the sustained oscillation of a 1D Winfree loop on the loop length: a shorter loop gives a shorter period and a longer loop a longer period. However, because of the refractory period of excitable dynamics, a 1D Winfree loop that is too short cannot support sustained oscillations, since the pulse returns to its starting node before that node has recovered. This implies a minimum Winfree loop (MWL) length \({L}_{min}\) for a given set of parameters, i.e., the shortest loop that can still sustain oscillation.

(Adapted from Ref. [70])

a The dependence of the oscillation period of a Winfree loop on the loop length; for \(L<{L}_{\mathrm{min}}\) the oscillation ceases. b The dependence of the OCP on \({L}_{\mathrm{min}}\). c The proportion of network structures satisfying \(L\ge {L}_{\mathrm{min}}\) versus the connection probability P. d The proportion of network structures whose APL satisfies \({d}_{APL}\ge {L}_{\mathrm{min}}-1\) versus P. e The joint probability (JP) versus P. The MWL length \({L}_{\mathrm{min}}=6\) is used as the example.

In Fig. 4.7b, \({P}_{OCP}\) is found to be in one-to-one correspondence with \({L}_{min}\), indicating that the emergence of collective oscillations is essentially determined by the MWL. This correspondence can be understood from the following two tendencies. First, as discussed above, a network must contain a topological loop whose length L is not shorter than the MWL, i.e.

\[L\ge {L}_{min}.\]

Second, the average path length (APL) \({d}_{APL}\) of a given network should be large enough that

\[{d}_{APL}\ge {L}_{min}-1.\]

These two tendencies give necessary conditions for the formation of a 1D Winfree loop that supports self-sustained oscillations. The first condition sets a lower critical connection probability, below which the network cannot support sustained oscillations. The second condition sets an upper critical connection probability, above which the APL is so short that the loops are too small to support oscillations. In Fig. 4.7c, the probability that an ER network contains a loop at least as long as the MWL is computed against the connection probability P; it clearly increases with P and exhibits a lower threshold. In Fig. 4.7d, the probability that an ER network has an APL satisfying \({d}_{APL}\ge {L}_{min}-1\) is plotted against P; it decreases with P and exhibits an upper threshold. A necessary condition for sustained oscillation is therefore that both \(L\ge {L}_{min}\) and \({d}_{APL}\ge {L}_{min}-1\) hold. The joint probability of these two conditions, obtained as the product of the curves in Figs. 4.7c, d, naturally leads to the humped dependence on P shown in Fig. 4.7e, with the OCP located at the maximum of the joint probability. This corresponds perfectly to the results in Fig. 4.7.
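The APL side of this argument can be estimated numerically. The sketch below (function names hypothetical) computes \({d}_{APL}\) by breadth-first search and estimates the probability that an ER network satisfies \({d}_{APL}\ge {L}_{min}-1\), i.e., a curve of the type shown in Fig. 4.7d. Two assumptions should be noted: the APL is averaged over mutually reachable pairs only, because the text does not state how disconnected networks are handled, and the loop condition \(L\ge {L}_{min}\) is not computed, because checking for long cycles is considerably harder. With both estimates available, the joint probability would be their product, as in Fig. 4.7e.

```python
from collections import deque

import numpy as np


def average_path_length(A):
    """Mean shortest-path length over ordered pairs of mutually reachable nodes (BFS)."""
    n = len(A)
    adj = [np.flatnonzero(A[i]) for i in range(n)]
    total, pairs = 0, 0
    for s in range(n):
        dist = [-1] * n
        dist[s] = 0
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if dist[v] < 0:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        reached = [d for d in dist if d > 0]
        total += sum(reached)
        pairs += len(reached)
    return total / pairs if pairs else 0.0  # assumption: no connected pair -> APL 0


def er_adjacency(n, p, rng):
    upper = np.triu(rng.random((n, n)) < p, k=1)
    return (upper | upper.T).astype(int)


def fraction_with_long_apl(n, p, l_min, trials, rng):
    """Monte Carlo estimate of Prob[d_APL >= l_min - 1] for ER graphs G(n, p)."""
    hits = sum(average_path_length(er_adjacency(n, p, rng)) >= l_min - 1
               for _ in range(trials))
    return hits / trials


rng = np.random.default_rng(seed=0)
for p in (0.05, 0.1, 0.3):
    frac = fraction_with_long_apl(60, p, l_min=4, trials=20, rng=rng)
    print(f"P = {p}: estimated Prob[d_APL >= L_min - 1] = {frac:.2f}")
```

Denser networks have shorter paths, so this estimated probability falls as P grows, which is the origin of the upper critical connection probability.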

The above discussion indicates that self-sustained oscillations are related to loop topology and dynamics and are essentially determined by the MWL, with a one-to-one correspondence between the optimal connection probability and the MWL length. The MWL is thus the key factor determining collective oscillations on ER networks [71,72,73].

4.3.5 Sustained Oscillation in Gene Regulatory Networks

4.3.5.1 Gene Regulatory Networks (GRNs)

Gene regulatory networks (GRNs), a kind of biochemical regulatory network in systems biology, can be described by coupled ordinary differential equations (ODEs) and have been extensively explored in recent years. The ODEs describing biochemical regulation processes are strongly nonlinear and often have many degrees of freedom. Here we are concerned with the common features and network structures of GRNs.

Unlike the excitable networks above, biochemical regulatory networks contain positive feedback loops (PFLs) and negative feedback loops (NFLs), which have been identified as important control modes in GRNs [46, 50,51,52,53]. Self-sustained oscillation, birhythmicity, bursting oscillation and even chaotic oscillation can occur in these systems. Moreover, oscillations may occur in GRNs with only a few units, and the study of sustained oscillations in small GRNs is important for understanding gene regulation in very large GRNs. Network motifs, i.e., recurring subgraphs, appear in some biological networks and are suggested to be elementary building blocks that carry out key functions. Our goal is to unveil the relation between network structure and the existence of oscillatory behavior in GRNs.

We consider the following GRN model:

where \({\varvec{p}}=({p}_{1},{p}_{2},\dots ,{p}_{N})\), and the function \({f}_{i}\left({\varvec{p}}\right)\) takes the following form:

The activating regulation function is

and the repressive regulation function is written as

where

and \({p}_{i}\) is the expression level of gene i, with \(0<{p}_{i}<1\). The adjacency matrices α and β determine the network structure: \({\alpha }_{ij}=1\) if gene j activates gene i, \({\beta }_{ij}=1\) if gene j represses gene i, and \({\alpha }_{ij}\cdot {\beta }_{ij}=0\), i.e., gene j never both activates and represses gene i. \({act}_{i}\) (\({rep}_{i}\)) is the sum of the activating (repressive) transcription factors acting on node i. Gene regulation is represented by Hill functions with cooperativity exponent h and activation coefficient K, characteristic of many real genetic systems [66].
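The regulation functions themselves are not reproduced above, so the sketch below assumes a common multiplicative Hill form, \({f}_{i}={A}_{i}({act}_{i})\,{R}_{i}({rep}_{i})\) with \({A}_{i}={act}_{i}^{h}/({K}^{h}+{act}_{i}^{h})\) (or 1 if gene i has no activator) and \({R}_{i}={K}^{h}/({K}^{h}+{rep}_{i}^{h})\), together with \({\dot{p}}_{i}={f}_{i}({\varvec{p}})-{p}_{i}\), which matches the linear coefficient −1 used in the FW approach. This is an assumption for illustration, not necessarily the exact model of Ref. [66]; the function name, the values of h and K, and the 3-gene example are placeholders.

```python
import numpy as np


def grn_rhs(p, alpha, beta, h=4.0, K=0.5):
    """dp_i/dt = f_i(p) - p_i with an assumed multiplicative Hill-type f_i (sketch).

    alpha[i, j] = 1 if gene j activates gene i; beta[i, j] = 1 if gene j represses gene i.
    """
    act = alpha @ p                        # act_i: summed activating inputs
    rep = beta @ p                         # rep_i: summed repressive inputs
    has_activator = alpha.sum(axis=1) > 0
    activation = np.where(has_activator, act**h / (K**h + act**h), 1.0)
    repression = K**h / (K**h + rep**h)    # equals 1 when gene i has no repressor
    return activation * repression - p


# Hypothetical 3-gene example: gene 2 activates gene 0, gene 0 represses gene 1,
# and gene 2 has no regulators at all.
alpha = np.zeros((3, 3))
alpha[0, 2] = 1.0
beta = np.zeros((3, 3))
beta[1, 0] = 1.0
p = np.array([0.2, 0.4, 0.6])
print(grn_rhs(p, alpha, beta))
```

Under this assumed form each production term stays between 0 and 1, which keeps the expression levels in the range \(0<{p}_{i}<1\) stated above.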

4.3.5.2 Skeletons and Cores

From the oscillation data of a GRN, we can explicitly compute the functional weights \({w}_{ij}\) between any two genes using Eqs. (4.33)–(4.35) and draw the FW map of the oscillatory network. Figure 4.8a shows a simple example: a 6-node GRN with dynamics given by Eq. (4.39), which, according to computer simulations, possesses an oscillatory attractor for the parameter set given in Ref. [66]. From the oscillation data we obtain the FW map in Fig. 4.8b. An interesting and valuable feature of Fig. 4.8b is that the distribution of \({w}_{ij}\) is strongly heterogeneous: some weights \({w}_{ij}\approx 1\) indicate significant control, while many others \({w}_{ij}\approx 0\) represent weak functional links. This heterogeneity allows us to extract the self-organized functional structures supporting the oscillatory dynamics from the strongly weighted links. In Fig. 4.8c, we remove all less important links with \({w}_{ij}\le {w}_{th}\), where the threshold is \({w}_{th}=0.30\). Here, removing an interaction or a node always means deleting its oscillatory part while keeping its average influence as a control parameter in Eq. (4.34). The reduced subnetwork in Fig. 4.8c, which retains only the strongly weighted interactions, is called the skeleton of the oscillatory GRN. We can further reduce the skeleton by removing nodes without output one by one, finally obtaining an irreducible subnetwork in which every node has both input and output; this subnetwork is defined as the core of the oscillation.

For the FW map in Fig. 4.8b, the corresponding core is shown in Fig. 4.8d. In Figs. 4.8e, f, we compare the oscillation orbits of the original GRN with those of the core and the skeleton in the 2D \(({p}_{1},{p}_{2})\) and \(({p}_{4},{p}_{6})\) phase planes, respectively. The dynamical orbits of the original network in Fig. 4.8a are well reproduced by the reduced structures in Figs. 4.8c, d, confirming that the skeleton and the core defined by the highly weighted links govern the essential dynamics of the original GRN. From Figs. 4.8d, e and Figs. 4.8c, f, it is clear that the core in Fig. 4.8d serves as the oscillation source of the GRN, while the skeleton in Fig. 4.8c provides the main signal propagation paths from the core throughout the network.

An example of the functional-weight (FW) map for an oscillatory GRN. a An oscillatory GRN with N = 6 genes and I = 15 links. Node colors represent the phases of the oscillatory nodes; green solid and red dotted arrows represent activating and repressive interactions, respectively. b The FW map of all interactions computed by Eqs. (4.34) and (4.35). c The reduced interaction skeleton obtained from (b) by deleting links with small weights. d The irreducible core obtained from (c) by retaining only nodes with both input and output; it serves as the oscillation source, whose oscillation propagates along the skeleton (c). e Comparison of a dynamical orbit of the original GRN (a) with that of the core (d) in a 2D phase plane. f Comparison of an orbit of the GRN (a) with that of the skeleton (c) in another 2D phase plane. The orbits of the full GRN agree well with those of the reduced subnetworks.

The analysis in Fig. 4.8 applies equally to more complicated GRNs with many more nodes and links, reducing network complexity for understanding and controlling network dynamics. Numerical studies of large networks show that the dynamics of the core and the skeleton agree well with those of the original network.
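The skeleton-and-core reduction can be sketched as follows (function name and toy weights hypothetical). Links with \({w}_{ij}\le {w}_{th}\) are dropped, and nodes are then pruned repeatedly until every remaining node has both an input and an output inside the remaining subnetwork. Pruning nodes without input as well as nodes without output is our reading of the requirement that each core node has both.

```python
import numpy as np


def skeleton_and_core(w, w_th=0.30):
    """w[i, j]: functional weight of link j -> i. Returns (skeleton mask, sorted core nodes)."""
    skeleton = np.asarray(w) > w_th   # keep only links with w_ij > w_th
    np.fill_diagonal(skeleton, False)
    core = set(range(len(skeleton)))
    while True:
        idx = sorted(core)
        sub = skeleton[np.ix_(idx, idx)]
        has_output = sub.any(axis=0)  # column k: does node k drive anyone in the core?
        has_input = sub.any(axis=1)   # row k: is node k driven by anyone in the core?
        drop = {k for k, out_ok, in_ok in zip(idx, has_output, has_input)
                if not (out_ok and in_ok)}
        if not drop:
            return skeleton, idx
        core -= drop


# Toy weights w[i, j] for links j -> i (each row sums to 1)
w = np.array([
    [0.0, 0.0, 0.8, 0.2],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.7, 0.0, 0.3],
    [0.0, 0.0, 1.0, 0.0],
])
skeleton, core = skeleton_and_core(w, w_th=0.30)
print(np.argwhere(skeleton))  # (i, j) pairs of surviving links j -> i
print(core)
```

In the toy example, node 3 is still driven by node 2 in the skeleton but drives nobody, so it belongs to a propagation path, while nodes 0, 1 and 2 form the feedback loop that makes up the core.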

Let us briefly summarize the above discussion of the emergence of sustained oscillations in networks of non-oscillatory units. Here, feedback topology is the fundamental mechanism. In a very large network, not every node or link contributes to the collective oscillation; only a small portion of nodes and their connections dominate, forming fundamental building blocks such as motifs, loops, or cores. Appropriate topology reduction is the key to uncovering these basic topologies, and it naturally leads to a dynamics reduction as well, revealing how self-organized oscillation emerges from the collaboration of non-oscillatory node dynamics. Topology reduction is thus an important route to reducing the dimensionality of a complex system and obtaining the essential structural ingredients of emergent behavior. In recent years, we have developed related techniques for revealing the embedded topologies [74, 75].

It should be stressed that there exist many fundamental topologies on a large network that may induce various possible sustained oscillations. Competitions and collaborations among these sub-networks and rhythms lead to complicated dynamics.

4.4 Synchronization: Cooperations of Rhythms

4.4.1 Synchrony: An Overview

Collective behavior of complex nonlinear systems takes a variety of specific forms, and synchronization is one of the most fundamental [76, 77]. Fundamental problems of synchronization span many fields of natural science and engineering, and many behaviors in social science are also closely related to synchronization. Many specific systems, such as pendula, musical instruments, electronic devices, lasers, neurons, the heart and other biological systems, exhibit very rich synchronous phenomena.

Let us first consider fireflies, a remarkable insect. A male firefly releases a special luminescent substance to produce periodic flashes that attract females, a typical example of a biological sustained oscillation. A surprising phenomenon occurs when a large number of male fireflies gather in the dark of night: they flash in synchrony. This phenomenon was first recorded in the log of the naturalist and traveler Engelbert Kaempfer in 1680, when he traveled in Thailand. Later, in 1935, Hugh Smith reported his observation of the synchronized flashing of fireflies [78]. Further investigations followed these initial observations. Interestingly, Buck elaborated on this phenomenon in two articles with the same title, “Synchronous rhythmic flashing of fireflies”, published in the same journal in 1938 and 1988, respectively [79].

The biological meaning of the synchronized flashing of male fireflies is a complex question. The physical mechanism behind it may be even more important and interesting, because it is a typical dynamical process. If each firefly is regarded as an oscillator, synchronous flashing is an emergent order produced by these oscillators. This collective behavior clearly originates from the interaction between individuals, and this interplay adjusts the rhythm of every firefly.

Interestingly, even before Kaempfer’s observation of firefly synchronization, Huygens described the synchronization of two coupled pendulum clocks in 1673 [80]. He discovered that a pair of pendulum clocks hanging from a common support had synchronized, i.e. their oscillations coincided perfectly and the pendula always moved in opposite directions. He attributed the phenomenon to the coupling provided by the beam from which the two pendula hung, and to the coupling-induced energy transfer between the two clocks.

Although synchronization had been observed in disciplines as different as physics, acoustics, biology, and electronics, a common understanding of these seemingly distinct phenomena was still lacking. A breakthrough came with the study of sustained oscillations and limit cycles in nonlinear systems in the early twentieth century [44]. On this basis, the synchronization of limit-cycle oscillators under external driving or mutual interaction became a theoretically important subject [76, 77].

A fruitful modelling of synchronization was pioneered by Winfree, who studied the nonlinear dynamics of a large population of weakly coupled limit-cycle oscillators with distributed intrinsic frequencies [81]. The oscillators can be characterized by their phases, and each oscillator is coupled to the collective rhythm generated by the whole population. Therefore, one may use the following equations of motion to describe the dynamical evolution of interacting oscillators:

where \(i=1, ..., N\). Here \({\theta }_{i}\) denotes the phase of the i-th oscillator and \({\omega }_{i}\) its natural frequency. Each oscillator j exerts a phase-dependent influence \(X({\theta }_{j})\) on all the other oscillators, and the corresponding response of oscillator i depends on its phase through the sensitivity function \(Z({\theta }_{i})\).

Using this mean-field scheme, Winfree discovered that such a population of non-identical oscillators can exhibit a remarkable cooperative phenomenon. When the spread of natural frequencies is large compared to the coupling, the system behaves incoherently, with each oscillator running at its natural frequency. As the spread is decreased, the incoherence persists until a certain threshold is crossed; then a small cluster of oscillators spontaneously freezes into synchrony.

Kuramoto developed Winfree’s intuition further by adopting the following universal form of the phase model [82]:

where the coupling function \(\Gamma\) depends on the phase difference and can be calculated as integrals involving certain terms from the original limit-cycle model. A tractable version of (4.43) was then proposed by adopting a mean-field sinusoidal coupling function:

Here \(K\ge 0\) is the coupling strength. The frequencies \({\omega }_{i}\) are randomly chosen from a given probability density \(g(\omega )\), which is usually assumed to be one-humped and symmetric about its mean \({\omega }_{0}\). This mean-field model has since been called the Kuramoto model.
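The model can be explored numerically. The sketch below is a minimal illustration under stated assumptions, not the authors’ code: it assumes the standard form \(\dot{\theta }_{i}={\omega }_{i}+\frac{K}{N}\sum_{j=1}^{N}\mathrm{sin}({\theta }_{j}-{\theta }_{i})\), draws the \({\omega }_{i}\) from a unit Gaussian \(g(\omega )\) centred at \({\omega }_{0}=0\), and uses simple Euler steps. Because \(\frac{K}{N}\sum_{j}\mathrm{sin}({\theta }_{j}-{\theta }_{i})=Kr\,\mathrm{sin}(\psi -{\theta }_{i})\) with \(r{e}^{i\psi }=\frac{1}{N}\sum_{j}{e}^{i{\theta }_{j}}\), each step costs \(O(N)\) rather than \(O({N}^{2})\). The function names and default parameters are hypothetical.

```python
import cmath
import math
import random


def order_parameter(theta):
    """Return (r, psi) with r * exp(i psi) = (1/N) * sum_j exp(i theta_j)."""
    z = sum(cmath.exp(1j * t) for t in theta) / len(theta)
    return abs(z), cmath.phase(z)


def simulate_kuramoto(K, n=300, dt=0.05, steps=1600, seed=1):
    """Euler integration of the mean-field Kuramoto model (illustrative sketch).

    Natural frequencies come from a standard Gaussian g(omega) (an assumption);
    returns the time-averaged r over the second half of the run.
    """
    rng = random.Random(seed)
    omega = [rng.gauss(0.0, 1.0) for _ in range(n)]
    theta = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n)]
    r_samples = []
    for step in range(steps):
        r, psi = order_parameter(theta)
        if step >= steps // 2:          # discard the first half as transient
            r_samples.append(r)
        # d(theta_i)/dt = omega_i + K r sin(psi - theta_i)
        theta = [t + dt * (w + K * r * math.sin(psi - t))
                 for t, w in zip(theta, omega)]
    return sum(r_samples) / len(r_samples)


if __name__ == "__main__":
    for K in (0.5, 1.0, 2.0, 4.0):
        print(f"K = {K:.1f}  <r> = {simulate_kuramoto(K):.3f}")
```

For this Gaussian, Kuramoto’s threshold \({K}_{c}=2/(\pi g(0))\) is about 1.6, so \(\langle r\rangle\) should stay near the finite-size noise level \(O({N}^{-1/2})\) for K = 0.5 and 1.0 and rise well above it for larger K; exact values depend on N, the seed, and the integration time.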

The Kuramoto mean-field model can be solved with the self-consistency approach of statistical physics. The solution reveals that a large number of coupled oscillators can overcome the disorder due to their different natural frequencies by interacting with each other, so that a synchronized state emerges in the system.
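For a symmetric, one-humped \(g(\omega )\) centred at zero (i.e. in the frame rotating at \({\omega }_{0}\)), the stationary self-consistency condition takes the well-known form \(r=Kr{\int }_{-\pi /2}^{\pi /2}{\mathrm{cos}}^{2}\theta \,g(Kr\,\mathrm{sin}\theta )\,d\theta\), in which only the phase-locked oscillators contribute; the drifting ones cancel by symmetry. A nonzero solution first appears at \({K}_{c}=2/(\pi g(0))\). The sketch below solves the nontrivial branch numerically by bisection; the helper names, the Simpson quadrature, and the Lorentzian test density are our illustrative choices.

```python
import math


def lorentzian(gamma):
    """Lorentzian density g(w) = gamma / (pi (w^2 + gamma^2)), centred at 0."""
    return lambda w: gamma / (math.pi * (w * w + gamma * gamma))


def simpson(f, a, b, n=2000):
    """Composite Simpson rule with an even number n of sub-intervals."""
    h = (b - a) / n
    s = f(a) + f(b)
    for k in range(1, n):
        s += (4 if k % 2 else 2) * f(a + k * h)
    return s * h / 3.0


def locked_term(r, K, g):
    """K * integral_{-pi/2}^{pi/2} cos^2(t) g(K r sin t) dt (locked oscillators only)."""
    return K * simpson(lambda t: math.cos(t) ** 2 * g(K * r * math.sin(t)),
                       -math.pi / 2, math.pi / 2)


def solve_self_consistency(K, g, tol=1e-10):
    """Stationary order parameter r >= 0 for coupling K.

    Solves 1 = locked_term(r) by bisection on (0, 1]; assumes g is even and
    unimodal, so locked_term decreases with r.
    """
    if locked_term(0.0, K, g) <= 1.0:    # K <= K_c: only the incoherent r = 0
        return 0.0
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if locked_term(mid, K, g) > 1.0:  # root lies above mid
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

For a Lorentzian of half-width γ the condition has the closed-form solution \(r=\sqrt{1-2\gamma /K}\) for \(K>{K}_{c}=2\gamma\), which gives a convenient check: `solve_self_consistency(2.0, lorentzian(0.5))` should agree with \(\sqrt{0.5}\approx 0.7071\).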

The success of Winfree’s and Kuramoto’s works stimulated extensive studies of synchronization in more general settings (interested readers may refer to the review papers and monographs [83,84,85]). Synchronization of coupled phase oscillators, in particular of the Kuramoto model on complex networks, has become a focus of research [86,87,88,89].

Apart from the self-consistency approach, Ott and Antonsen recently proposed an ansatz (the OA ansatz) for deriving the dynamical equations of the order parameters [90, 91]. Strogatz explained the physical meaning of the OA ansatz on the basis of the Watanabe-Strogatz transformation [92, 93].
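As an illustration of what the OA ansatz delivers, take the well-known Lorentzian case: for \(g(\omega )\) of half-width γ, in the frame rotating at \({\omega }_{0}\), the ansatz reduces the infinite-dimensional Kuramoto model to the single equation \(\dot{r}=-\gamma r+\frac{K}{2}r(1-{r}^{2})\). The sketch below simply integrates this reduced equation with fourth-order Runge-Kutta (function names and step sizes are hypothetical) so that its long-time value can be compared with the self-consistent result \(\sqrt{1-2\gamma /K}\).

```python
import math


def oa_rhs(r, K, gamma):
    """OA-reduced equation for a Lorentzian g: dr/dt = -gamma r + (K/2) r (1 - r^2)."""
    return -gamma * r + 0.5 * K * r * (1.0 - r * r)


def integrate_oa(K, gamma, r0=0.01, dt=0.01, t_end=200.0):
    """RK4 integration of the reduced order-parameter equation; returns r(t_end)."""
    r = r0
    for _ in range(int(round(t_end / dt))):
        k1 = oa_rhs(r, K, gamma)
        k2 = oa_rhs(r + 0.5 * dt * k1, K, gamma)
        k3 = oa_rhs(r + 0.5 * dt * k2, K, gamma)
        k4 = oa_rhs(r + dt * k3, K, gamma)
        r += dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return r


if __name__ == "__main__":
    gamma = 0.5                                  # K_c = 2 * gamma = 1
    for K in (0.5, 1.5, 2.0, 4.0):
        expected = math.sqrt(1.0 - 2.0 * gamma / K) if K > 2.0 * gamma else 0.0
        print(f"K = {K:.1f}  r(OA) = {integrate_oa(K, gamma):.4f}  expected = {expected:.4f}")
```

Below \({K}_{c}=2\gamma\) the incoherent state r = 0 is stable and r decays exponentially at rate \(\gamma -K/2\); above it, r relaxes to \(\sqrt{1-2\gamma /K}\), the same branch obtained from the self-consistency approach.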

In recent years, with widespread studies of chaotic oscillations, the notion of synchronization has been generalized to chaotic systems [76]. The study of synchronization of coupled chaotic oscillators has extended the scope of synchronization dynamics, and different types of chaos synchronization, such as complete (identical) synchronization, generalized synchronization, phase synchronization, and measure synchronization, have been revealed [88, 94, 95].

4.4.2 Microdynamics of Synchronization

Let us begin with the simplest scenario. Understanding the microscopic mechanism of synchronization helps us see how a large number of coupled oscillators form ordered behaviors through interaction and self-organization.

4.4.2.1 Phase-Locking of Two Limit-Cycle Oscillators

We consider two oscillators \({z}_{\mathrm{1,2}}(t)\), each described by Eq. (4.28) but with different natural frequencies, which are coupled to each other and obey the following equations of motion:

where \({\lambda }_{\mathrm{1,2}}>0\), \({K}_{\mathrm{1,2}}>0\), \({b}_{\mathrm{1,2}}\) are two real parameters, and \({\omega }_{1}\ne {\omega }_{2}\) are the natural frequencies of the two oscillators. The third term on the right-hand side of Eq. (4.45) represents the interaction between the two oscillators. By introducing the polar coordinates \({r}_{\mathrm{1,2}}(t)\) and \({\theta }_{\mathrm{1,2}}(t)\) as

the two complex equations (4.45) can be decomposed into four real equations for the amplitudes and phases:

It can be seen from (4.47b) that the coupling term can adjust the actual phase velocities \({\dot{\theta }}_{\mathrm{1,2}}(t)\) even though the two oscillators have different natural frequencies \({\omega }_{\mathrm{1,2}}\).

We now examine whether the coupling can draw the two oscillators together. Comparing the coupling terms in (4.47a) and (4.47b), one finds that the former is of order \({r}^{3}\), while the latter is of order \({r}^{2}\). The coupling term in (4.47a) can therefore be neglected, so that the two amplitude equations in (4.47a) decouple and can be solved. As \(t\to \infty\), \({r}_{\mathrm{1,2}}\to {r}_{\mathrm{10,20}}=\sqrt{{\lambda }_{\mathrm{1,2}}/{b}_{\mathrm{1,2}}}\). Substituting these amplitudes into (4.47b), one obtains the following coupled phase equations:

The above procedure reflects the separation of time scales between amplitude and phase, which is a consequence of the slaving principle: setting \({\dot{r}}_{\mathrm{1,2}}=0\) treats the amplitudes \({r}_{\mathrm{1,2}}(t)\) as fast variables, while the phases \({\theta }_{\mathrm{1,2}}(t)\) are neutrally stable slow variables. Let us continue working out the phase dynamics of the two coupled oscillators. By introducing the phase difference \(\Delta \theta (t)={\theta }_{2}(t)-{\theta }_{1}(t)\) and the natural-frequency difference \(\Omega ={\omega }_{2}-{\omega }_{1}\), Eq. (4.48) can be rewritten as

where the parameter

Equation (4.49) can be integrated directly by separating variables, which gives

When \(\left|\Omega \right|>|\mathrm{\alpha }|\), the integral (4.50) can be evaluated over one period of the phase difference, and the corresponding period is

i.e. the phase difference evolves periodically with the period T:

i.e. the actual frequency difference of the two coupled oscillators is determined by the integral (4.51). When \(|\mathrm{\alpha }|\ll |\Omega |\), one has

On the other hand, when \(\left|\Omega \right|<|\mathrm{\alpha }|\), the integral (4.51) diverges at \(\mathrm{sin}\Delta {\theta }_{0}=\Omega /\alpha\). This implies that as \(t\to \infty\), \(\Delta \theta\) tends to a fixed value \(\Delta {\theta }_{0}=\mathrm{arcsin}(\Omega /\alpha )\), and the period \(T\to \infty\) in (4.51). From (4.52) one has

i.e. the frequencies of the two oscillators are pulled toward each other and eventually locked. Because α is proportional to the coupling strength, the condition \(\left|\Omega \right|\le |\mathrm{\alpha }|\) means that the coupling strengths \({K}_{\mathrm{1,2}}\) must be large enough to overcome the natural-frequency difference \(\Omega\). The critical value is therefore \({\alpha }_{c}={\omega }_{2}-{\omega }_{1}=\Omega\). Near this critical point one has \(\langle \Delta \dot{\theta }\rangle \sim {({\alpha }_{c}-\alpha )}^{1/2}\), where \(\langle \cdot \rangle\) denotes a long-time average. This square-root scaling signals a saddle-node bifurcation at the onset of synchronization of two coupled oscillators.
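The phase-slip and locking behavior can be checked numerically. The sketch assumes that Eq. (4.49) has the Adler form \(\Delta \dot{\theta }=\Omega -\alpha \,\mathrm{sin}\Delta \theta\), the form consistent with the locking condition \(\left|\Omega \right|\le |\alpha |\) discussed above; it is an illustration, not a transcription of (4.49). For \(\left|\Omega \right|>|\alpha |\) the period of one \(2\pi\) slip is \(T=2\pi /\sqrt{{\Omega }^{2}-{\alpha }^{2}}\), so \(\langle \Delta \dot{\theta }\rangle =\sqrt{{\Omega }^{2}-{\alpha }^{2}}\) for \(\Omega >0\). Function names and integration times are hypothetical.

```python
import math


def adler_rhs(x, omega_diff, alpha):
    """Assumed form of (4.49): d(Delta theta)/dt = Omega - alpha * sin(Delta theta)."""
    return omega_diff - alpha * math.sin(x)


def integrate_adler(omega_diff, alpha, dt=0.01, t_transient=200.0, t_measure=1000.0):
    """RK4 integration starting from Delta theta = 0.

    Returns (mean frequency difference over the measuring window, final Delta theta).
    """
    def rk4(x):
        k1 = adler_rhs(x, omega_diff, alpha)
        k2 = adler_rhs(x + 0.5 * dt * k1, omega_diff, alpha)
        k3 = adler_rhs(x + 0.5 * dt * k2, omega_diff, alpha)
        k4 = adler_rhs(x + dt * k3, omega_diff, alpha)
        return x + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0

    x = 0.0
    for _ in range(int(round(t_transient / dt))):   # discard the transient
        x = rk4(x)
    x_start = x
    for _ in range(int(round(t_measure / dt))):
        x = rk4(x)
    return (x - x_start) / t_measure, x


def slip_period(omega_diff, alpha):
    """Closed-form period of one 2*pi phase slip, valid for |Omega| > |alpha|."""
    return 2.0 * math.pi / math.sqrt(omega_diff ** 2 - alpha ** 2)


if __name__ == "__main__":
    for alpha in (0.0, 0.6, 0.9, 0.99, 1.5):
        mean_freq, _ = integrate_adler(1.0, alpha)
        print(f"alpha = {alpha:.2f}  <d(Delta theta)/dt> = {mean_freq:.4f}")
```

With Ω = 1 and α = 0.6 the measured mean frequency difference should be close to 0.8, whereas for α = 1.5 it vanishes and Δθ settles at arcsin(Ω/α) ≈ 0.730. Letting α approach Ω from below reproduces the square-root law, since \(\sqrt{{\Omega }^{2}-{\alpha }^{2}}\approx \sqrt{2\Omega ({\alpha }_{c}-\alpha )}\) near \({\alpha }_{c}=\Omega\).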

The above study of synchronization between two interacting limit-cycle oscillators suggests that: (1) the phase, rather than the amplitude, is the dominant degree of freedom in the synchronization of coupled oscillators; (2) the coupling function between oscillators typically takes a sinusoidal form in the phase difference. These two points agree with the propositions of Winfree and Kuramoto in modelling synchronization, and they are a very important starting point for describing synchronization problems.

4.4.2.2 Synchronization Bifurcation Tree

For \(N>2\) coupled oscillators, it is difficult to analyze the microdynamics of synchronization analytically, and one usually performs numerical simulations and computes suitable quantities. Let us consider the following nearest-neighbor coupled oscillators:

where \(i=\mathrm{1,2},\dots ,N\), \(\{{\upomega }_{i}\}\) are the natural frequencies of the oscillators, and \(K\) is the coupling strength. Without loss of generality, we assume that \(\sum_{i}{\omega }_{i}=0\), which can always be achieved by moving to a frame rotating at the mean frequency.

Unlike the two-oscillator case, as the coupling strength K is increased from 0 the system shows complicated synchronization dynamics, arising from the competition between the ordering induced by the coupling and the disorder of the natural frequencies. For nearest-neighbor coupling there is an additional competition, between the coupling distance and the natural-frequency differences. As the coupling strength increases, the system gradually reaches global synchronization. There is a critical coupling \({K}_{c}\): for \(K>{K}_{c}\) the frequencies of all the oscillators are locked to each other, whereas for \(K<{K}_{c}\) only a portion of the oscillators are synchronized, which is called partial synchronization. To monitor the synchronization process, we define the average frequency of the i-th oscillator as

Synchronization between the i-th and the j-th oscillators is achieved when \({\stackrel{-}{\omega }}_{i}={\stackrel{-}{\omega }}_{j}\). As the coupling strength increases, the oscillators undergo a sequence of coordinated transitions toward global synchronization.

To observe the synchronization of multiple oscillators clearly, we introduce the so-called synchronization bifurcation tree (SBT), defined as the set \(\{{\stackrel{-}{\omega }}_{i}(K)\}\), i.e. the relation between the average frequencies of all oscillators and the coupling strength K. The SBT gives a tree-structured picture of the synchronization transitions and vividly shows how the oscillators become organized into synchrony as the coupling is varied [96,97,98,99].
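An SBT can be generated by sweeping K and recording \({\stackrel{-}{\omega }}_{i}(K)\). Since the chain equation is not reproduced here, the sketch assumes the common open-chain form \({\dot{\theta }}_{i}={\omega }_{i}+K[\mathrm{sin}({\theta }_{i+1}-{\theta }_{i})+\mathrm{sin}({\theta }_{i-1}-{\theta }_{i})]\) with free ends (the missing neighbour term is dropped for i = 1 and i = N), and estimates \({\stackrel{-}{\omega }}_{i}\) as \([{\theta }_{i}({t}_{0}+T)-{\theta }_{i}({t}_{0})]/T\) after a transient. For this assumed form, summing the locked-state equations for i = 1, ..., k gives \(K\,\mathrm{sin}({\theta }_{k+1}-{\theta }_{k})=-\sum_{i\le k}{\omega }_{i}\), so global locking requires \(K\ge {K}_{c}={\mathrm{max}}_{k}|\sum_{i\le k}{\omega }_{i}|\). The frequencies, time spans, and function names are hypothetical.

```python
import math


def chain_rhs(theta, omega, K):
    """Assumed open chain: dtheta_i/dt = omega_i + K[sin(theta_{i+1}-theta_i) + sin(theta_{i-1}-theta_i)].

    The end oscillators have only one neighbour (free boundaries).
    """
    d = list(omega)
    for i in range(len(theta) - 1):
        s = K * math.sin(theta[i + 1] - theta[i])
        d[i] += s       # pull of oscillator i+1 on oscillator i
        d[i + 1] -= s   # equal and opposite pull of oscillator i on i+1
    return d


def average_frequencies(omega, K, dt=0.05, t_transient=100.0, t_measure=400.0):
    """Estimate bar(omega)_i = [theta_i(t0 + T) - theta_i(t0)] / T using RK4."""
    def rk4(th):
        k1 = chain_rhs(th, omega, K)
        k2 = chain_rhs([t + 0.5 * dt * k for t, k in zip(th, k1)], omega, K)
        k3 = chain_rhs([t + 0.5 * dt * k for t, k in zip(th, k2)], omega, K)
        k4 = chain_rhs([t + dt * k for t, k in zip(th, k3)], omega, K)
        return [t + dt * (a + 2.0 * b + 2.0 * c + e) / 6.0
                for t, a, b, c, e in zip(th, k1, k2, k3, k4)]

    theta = [0.0] * len(omega)
    for _ in range(int(round(t_transient / dt))):
        theta = rk4(theta)
    start = list(theta)
    for _ in range(int(round(t_measure / dt))):
        theta = rk4(theta)
    return [(b - a) / t_measure for a, b in zip(start, theta)]


def open_chain_critical_coupling(omega):
    """K_c = max_k |omega_1 + ... + omega_k|, valid for the open chain with sum(omega) = 0."""
    partial, k_c = 0.0, 0.0
    for w in omega:
        partial += w
        k_c = max(k_c, abs(partial))
    return k_c


if __name__ == "__main__":
    omega = [-1.0, -0.2, 0.4, 0.1, 0.7]     # illustrative frequencies, sum = 0
    print("K_c =", open_chain_critical_coupling(omega))
    for K in [0.2 * j for j in range(8)]:    # one vertical slice of the tree per K
        bars = average_frequencies(omega, K)
        print(f"K = {K:.1f}", " ".join(f"{w:+.3f}" for w in bars))
```

Plotting the printed rows against K shows the branches merging step by step; for these illustrative frequencies all branches should coincide once K exceeds \({K}_{c}=1.2\).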

In Fig. 4.9a–b, we plot the average frequencies \(\{{\stackrel{-}{\omega }}_{i}\}\) defined in Eq. (4.56) against the coupling strength K for \(N=5\) and 15, respectively, varying K from \(K=0\) to \(K={K}_{c}\). Both figures show interesting transition trees of synchronization. When \(K=0\), all oscillators have different winding numbers, and increasing the coupling may lead to a merging of the \({\stackrel{-}{\omega }}_{i}\). Once two oscillators become synchronized at a critical coupling, their average frequencies coincide and remain identical (a single curve) as the coupling strength increases further.

Fig. 4.9 Transition trees of synchronization for the averaged frequencies of oscillators versus the coupling K. a \(N=5\); b \(N=15\). Note the existence of three kinds of transitions labeled A, B, and C. c An enlarged plot of the nonlocal phase synchronization for \(N=15\). (Adapted from Ref. [98])

An interesting feature of the SBT is the clustering of oscillators: as the coupling increases, several synchronous clusters can form, with different frequencies and different numbers of oscillators. These clusters in turn merge into larger clusters, so that the number of clusters decreases. For sufficiently strong coupling, only a few clusters (usually two) remain, and they eventually merge into a single synchronous cluster. The formation of a single cluster implies the global synchronization of all oscillators. Both SBTs in Fig. 4.9a, b display this interesting tree-like cascade of synchrony.
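In numerical work, clusters have to be read off from finite-time estimates of \({\stackrel{-}{\omega }}_{i}\), which agree only up to a small tolerance. A minimal sketch, assuming a hypothetical tolerance `tol`, groups oscillator indices by frequency value rather than by position, so that nonlocal clusters (equal frequencies on non-adjacent sites, as in Fig. 4.9c) are also detected.

```python
def frequency_clusters(avg_freq, tol=1e-3):
    """Group oscillator indices whose average frequencies agree within tol.

    Indices are sorted by frequency and consecutive values closer than tol
    are linked (single linkage), so clusters need not be spatially contiguous.
    """
    order = sorted(range(len(avg_freq)), key=lambda i: avg_freq[i])
    clusters = []
    for i in order:
        if clusters and abs(avg_freq[i] - avg_freq[clusters[-1][-1]]) <= tol:
            clusters[-1].append(i)
        else:
            clusters.append([i])
    return [sorted(c) for c in clusters]


if __name__ == "__main__":
    bars = [0.2, 0.2005, -0.5, 0.2002, -0.5]   # illustrative estimates of bar(omega)_i
    groups = frequency_clusters(bars)
    print(len(groups), "clusters:", groups)
```

Because consecutive frequencies are chained, `tol` should be chosen well below the smallest gap between distinct branches of the tree; counting `len(frequency_clusters(...))` at each K then traces the decreasing number of clusters described above.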