Abstract

Colorectal cancer is a significant health issue not only in industrialized and developed countries; its incidence is also increasing in developing countries, making it one of the most prevalent cancers worldwide. Given the limited effect of risk factor modification for primary prevention, secondary prevention, in the form of screening and early detection with colonoscopy, is currently the most effective approach to reducing deaths from colorectal cancer. Nonetheless, colonoscopy itself is time-consuming, labor-intensive, and not without risk, and the limited number of endoscopists makes it impractical as the primary screening tool in many countries. To enable better resource allocation, a two-stage approach is increasingly popular: a noninvasive screening test first, followed by a confirmatory examination such as colonoscopy. This approach has two advantages: a higher participation rate among a target population that is mostly asymptomatic, and better resource allocation when the screening test accurately identifies subjects with colorectal neoplasms. Several types of screening tests are available, including stool-based tests, such as the guaiac-based fecal occult blood test, the fecal immunochemical test, and the stool DNA test, and blood-based tests, such as the plasmic methylated septin-9 test. Image-based tests also include noninvasive options, such as computed tomographic colonography and colon capsule endoscopy, in addition to the invasive examinations of flexible sigmoidoscopy and colonoscopy. Here, we present a summary of the performance and clinical application of these tests for mass screening.

Keywords

- Colorectal cancer screening

- Guaiac-based fecal occult blood test

- Fecal immunochemical test

- Fecal hemoglobin concentration

- Stool DNA test

- Fecal microbiota

- Plasmic methylated septin-9

- Computed tomographic colonography

- Colon capsule endoscopy

- Flexible sigmoidoscopy

- Colonoscopy

3.1 Introduction

Given the rapid increase in its incidence due to the Westernization of lifestyle, colorectal cancer (CRC) poses a significant threat to global health as the second and third most common cause of cancer-related death in men and women, respectively (Siegel et al. 2017; Bishehsari et al. 2014). Although international guidelines and expert consensus recommend CRC screening for asymptomatic individuals aged 50 years or older (Benard et al. 2018), CRC risk has recently been increasing in younger generations (Lee et al. 2019a). Given the foreseeable increase in disease burden, an effective strategy to eliminate the threat of CRC is urgently needed (Inra and Syngal 2015; Chiu et al. 2015).

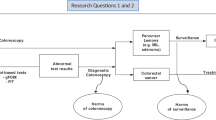

Cancer stage at diagnosis is the most crucial determinant of survival. To achieve early diagnosis, colonoscopy can identify superficial cancerous foci, reducing the rate of CRC-related death, and also offers an opportunity to remove precancerous lesions (adenomatous polyps), reducing the number of new cases. However, its use as a primary screening tool remains challenging because of the limited number of certified endoscopists in most countries (Rex and Lieberman 2001). Risk stratification of asymptomatic populations is therefore needed to better allocate endoscopy resources (Chiu et al. 2016). In the first stage, a noninvasive test with good sensitivity and specificity for CRC or advanced adenomas will increase the uptake of, and adherence to, screening in asymptomatic populations. In the second stage, restricting colonoscopy to those with positive results increases the colonoscopic yield of early-stage neoplasms and can potentially make the screening program more cost-effective. The noninvasive triage tests currently available commercially for screening can be categorized into stool-based tests and blood-based tests (Fig. 3.1). The former include the guaiac fecal occult blood test (gFOBT), the fecal immunochemical test (FIT), and the stool DNA test, while the latter include the plasmic methylated septin-9 test (SEPT9). We also introduce the direct visualization modalities used for screening or diagnosis, including computed tomographic colonography (CTC), colon capsule endoscopy (CCE), flexible sigmoidoscopy, and colonoscopy (Fig. 3.1). In this chapter, we address the performance of these tests and compare their merits and drawbacks in the context of mass screening. We also discuss the emerging role of measuring the hemoglobin concentration in stool samples for precise risk stratification, and the possibility of quantifying gut microbiota dysbiosis, for both the primary and the secondary prevention of CRC.

Fig. 3.1 Options for colorectal cancer screening. Stool-based tests include the guaiac fecal occult blood test, fecal immunochemical test, and stool DNA test. The blood-based test is the plasmic methylated septin-9 test. Direct visualization examinations include computed tomographic colonography, colon capsule endoscopy, flexible sigmoidoscopy, and colonoscopy.

3.2 Stool-Based Tests for Screening

3.2.1 The Fecal Occult Blood Test

When a colorectal tumor increases in size and invasiveness, it starts to shed measurable blood into the feces. The guaiac-based method of detecting occult blood in the feces is the most traditional approach to CRC screening. Stool samples are smeared on guaiac paper, and hemoglobin is detected through the peroxidase-like activity of heme, which oxidizes guaiac to a blue compound once the hydrogen peroxide developer is added. Using a gFOBT (for example, Hemoccult SENSA), previous researchers demonstrated a sensitivity of 79.4% (95% confidence interval [CI]: 64.3–94.5%) and a specificity of 86.7% (95% CI: 85.9–87.4%) for detecting CRC (Allison et al. 1996). One drawback of this approach is the need for dietary restriction to avoid false-positive results from iron supplements, red meat containing non-human hemoglobin, and certain vegetables containing chemicals with peroxidase activity (Rockey 1999). Another drawback is its limited ability to distinguish blood originating in the upper gastrointestinal tract from blood originating in the lower gastrointestinal tract (Chiang et al. 2011). Furthermore, the judgment of positivity is rather subjective, and the need for trained personnel to interpret the results visually also constrains its application in mass screening.
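Sensitivity and specificity figures such as those above come from a 2 × 2 table of test results against a reference standard. The sketch below, assuming a simple cross-sectional design and using the Wilson score interval (an assumption; the cited study may have used a different interval method), shows how both proportions and their 95% CIs can be computed. The counts are illustrative only and are not data from Allison et al. (1996).

```python
import math


def wilson_ci(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 gives ~95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


def diagnostic_accuracy(tp: int, fn: int, tn: int, fp: int) -> dict:
    """Sensitivity and specificity with Wilson CIs from a 2x2 table.

    tp/fn: diseased subjects (per the reference standard) testing positive/negative
    tn/fp: non-diseased subjects testing negative/positive
    """
    return {
        "sensitivity": (tp / (tp + fn), wilson_ci(tp, tp + fn)),
        "specificity": (tn / (tn + fp), wilson_ci(tn, tn + fp)),
    }


# Illustrative (hypothetical) counts: 34 cancers, 8075 subjects without cancer.
result = diagnostic_accuracy(tp=27, fn=7, tn=7000, fp=1075)
for name, (estimate, (low, high)) in result.items():
    print(f"{name}: {estimate:.1%} (95% CI {low:.1%} to {high:.1%})")
```

The much wider interval for sensitivity than for specificity reflects the small number of cancers relative to non-cancers, a pattern also visible in the published CIs quoted above.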

By contrast, FIT is specific for human globin (Carroll et al. 2014). A study of an asymptomatic cohort of 2796 subjects who underwent same-day upper and lower endoscopic examinations demonstrated that FIT was specific for predicting lesions in the lower gastrointestinal tract but unable to detect lesions in the upper gastrointestinal tract (Chiang et al. 2011). Moreover, the prevalence of upper gastrointestinal lesions did not differ significantly between subjects with positive and negative FIT results. Another significant advantage of FIT is that it is available in both qualitative and quantitative formats. Qualitative FIT uses lateral flow immunochromatography and, like the guaiac-based test, rapidly provides a visual result when the fecal hemoglobin concentration exceeds the cutoff value defined by the manufacturer (Hundt et al. 2009). Quantitative FIT uses immunoturbidimetry to measure the hemoglobin concentration in the stool sample. Although both formats rely on the same antibody–antigen reaction, quantitative FIT additionally provides a numerical result, so the cutoff value for a positive result can be adjusted according to the tradeoff between the number of colonoscopies needed and the colonoscopy yield of neoplasms.
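The cutoff tradeoff for quantitative FIT can be made concrete with a small sketch: for each candidate cutoff, count how many subjects would be referred for colonoscopy, what fraction of neoplasms would be caught, and the yield per colonoscopy (positive predictive value). The dataset, the "at or above the cutoff" positivity rule, and the function name are hypothetical illustrations, not a standard tool or any manufacturer's rule.

```python
from dataclasses import dataclass


@dataclass
class CutoffSummary:
    cutoff: float            # μg Hb/g feces
    positivity_rate: float   # fraction referred for colonoscopy
    sensitivity: float       # fraction of neoplasms that test positive
    ppv: float               # colonoscopy yield: neoplasms per colonoscopy


def summarize_cutoff(samples: list[tuple[float, bool]], cutoff: float) -> CutoffSummary:
    """samples: (fecal hemoglobin concentration, has_neoplasm) pairs."""
    positives = [has_neoplasm for fhbc, has_neoplasm in samples if fhbc >= cutoff]
    n_cases = sum(has_neoplasm for _, has_neoplasm in samples)
    true_positives = sum(positives)
    return CutoffSummary(
        cutoff=cutoff,
        positivity_rate=len(positives) / len(samples),
        sensitivity=true_positives / n_cases if n_cases else float("nan"),
        ppv=true_positives / len(positives) if positives else float("nan"),
    )


# Hypothetical screening results: (concentration, neoplasm found at colonoscopy).
samples = [(0, False), (2, False), (5, False), (8, False), (12, False),
           (15, True), (25, False), (40, True), (90, True), (150, True)]

for cutoff in (10, 20, 50):
    print(summarize_cutoff(samples, cutoff))
```

In this toy example, raising the cutoff reduces the number of colonoscopies and raises the yield per colonoscopy, at the cost of missing more neoplasms.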

In terms of test performance, a hospital-based study in which subjects underwent both colonoscopy and qualitative FIT (OC-Light (V-PC50 and V-PH80); Eiken Chemical Co. Ltd., Tokyo, Japan) showed a sensitivity of 78.6% (95% CI: 58.5–91.0%) and a specificity of 92.8% (95% CI: 92.5–93.2%) for detecting CRC at a cutoff value of 10 μg Hb/g feces (Chiu et al. 2013). Similarly, a pooled analysis of nine studies from different ethnic populations found a sensitivity of 89% (95% CI: 80–95%) and a specificity of 91% (95% CI: 89–93%) for detecting CRC at a cutoff value of 20 μg Hb/g feces (Lee et al. 2014). Although no randomized controlled trial has yet demonstrated that FIT is superior to gFOBT for the final endpoint of CRC mortality, a meta-analysis of 14 randomized controlled trials that compared the two tests found that FIT detected more than twice as many CRCs (2.28-fold; 95% CI: 1.68–3.10) and advanced adenomas as gFOBT (Hassan et al. 2012). In addition to its better test performance, FIT can be processed on rapid, high-throughput automated systems that cope with large numbers of returned samples, making it increasingly popular in clinical practice and especially widely used in large-scale population screening programs (Zhu et al. 2010; Tinmouth et al. 2015).

One potential problem of the quantitative FIT is the difficulty of comparing numerical test results between different products. Since the antibodies used to detect hemoglobin, the buffer, and the sampling device may vary, different brands of quantitative FIT, even those which claim the same cutoff value, can still differ in terms of test performance. In a nationwide study in Taiwan, two quantitative FITs (OC-Sensor and HM-Jack) with the same cutoff concentration of 20 μg Hb/g feces demonstrated different performance, especially in the ability to detect proximally located CRCs (Chiang et al. 2014). In a randomized trial from the Netherlands, two quantitative FITs (OC-Sensor and FOB-Gold) with the same cutoff concentration of 10 μg Hb/g feces also showed different positivity rates and led to different diagnostic yields (Grobbee et al. 2017).

Most quantitative FITs report the hemoglobin concentration, and the corresponding cutoff, in ng Hb/mL buffer. Because brands differ in the amount of feces collected by the sampling stick and in the volume of buffer, results expressed in ng Hb/mL buffer are difficult to compare across brands. To solve this problem, a standardized unit for reporting FIT results, μg Hb/g feces, has been proposed, which makes the results of different quantitative FITs more comparable (Chiang et al. 2014).
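The conversion from ng Hb/mL buffer to μg Hb/g feces depends only on the buffer volume and the mass of feces collected by the sampling device: ng/mL × mL gives nanograms of hemoglobin in the tube, and dividing by milligrams of feces gives ng/mg, which equals μg/g. The sketch below assumes these device parameters are known; the 10 mg in 2 mL figure is the one commonly quoted for the OC-Sensor device, and the second device is hypothetical, so manufacturer specifications should be checked before relying on either.

```python
def to_ug_per_g_feces(conc_ng_per_ml: float, buffer_ml: float, feces_mg: float) -> float:
    """Convert a FIT reading in ng Hb/mL buffer to μg Hb/g feces.

    ng/mL * mL = ng Hb in the tube; ng Hb / mg feces = μg Hb / g feces.
    """
    if feces_mg <= 0:
        raise ValueError("feces mass must be positive")
    return conc_ng_per_ml * buffer_ml / feces_mg


# Assumed device parameters (verify against the manufacturer's specifications).
device_a = {"buffer_ml": 2.0, "feces_mg": 10.0}   # commonly quoted for OC-Sensor
device_b = {"buffer_ml": 0.5, "feces_mg": 2.0}    # hypothetical device

print(to_ug_per_g_feces(100, **device_a))  # 20.0
print(to_ug_per_g_feces(100, **device_b))  # 25.0
```

The same reading of 100 ng Hb/mL buffer corresponds to different fecal concentrations on the two devices, which is why a common unit is needed before cutoffs can be compared.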

3.2.2 The Role of Fecal Hemoglobin Concentration

Recent research indicates that the quantitative fecal hemoglobin concentration (FHbC) is a useful indicator both for stratifying CRC risk and for prioritizing colonoscopy. One population-based study from Taiwan showed that a baseline FIT concentration below the positivity cutoff (20 μg Hb/g feces) was associated with a subsequent risk of colorectal neoplasia during longitudinal follow-up (Chen et al. 2011). Moreover, among those with a positive FIT result (above the cutoff of 20 μg Hb/g feces) who did not undergo diagnostic colonoscopy, a higher baseline FHbC was associated with an increased risk of death from CRC. A gradient relationship was seen: compared with similar subjects who underwent colonoscopic follow-up, the risk of death among those who did not was 1.31-fold (95% CI: 1.04–1.71), 2.21-fold (95% CI: 1.55–3.34), and 2.53-fold (95% CI: 1.95–3.43) for FHbC of 20–49, 50–99, and >100 μg Hb/g feces, respectively (Lee et al. 2017). Longer wait times for colonoscopy after a positive FIT result were also associated with increased risk. A significant gradient relationship was seen between the baseline quantitative FIT value and the subsequent risk of any CRC and of advanced-stage disease (Lee et al. 2019b). Using patients with an FHbC of 20–49 μg Hb/g feces as the reference group, each increase of 10 μg Hb/g feces was associated with a 9.9% greater risk of CRC (95% CI: 9.4–10.5%) and a 12.7% greater risk of advanced-stage disease (95% CI: 11.5–13.9%) (Lee et al. 2019b).
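One way to read a per-increment estimate such as "9.9% greater risk per 10 μg Hb/g feces" is as a multiplicative (log-linear) trend, so that the relative risk compounds over larger differences in concentration. The sketch below assumes exactly that, and additionally assumes 20 μg Hb/g feces as the reference concentration; neither assumption is stated in the source, so the output illustrates the arithmetic only and is not a validated risk model.

```python
def relative_risk(fhbc: float, reference: float = 20.0, rr_per_10: float = 1.099) -> float:
    """Relative risk at `fhbc` vs. `reference` (μg Hb/g feces), assuming a log-linear trend.

    rr_per_10 = 1.099 corresponds to a 9.9% higher risk per 10 μg Hb/g feces.
    """
    return rr_per_10 ** ((fhbc - reference) / 10.0)


for conc in (20, 50, 100, 200):
    print(conc, round(relative_risk(conc), 2))
```

Because the trend compounds, extrapolating it far beyond the concentrations observed in a study could misstate risk.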

3.2.3 Stool DNA Test

The development of CRC is associated with the progressive accumulation of genetic and epigenetic damage, resulting in the inactivation of tumor suppressor genes and the activation of oncogenes. The direct detection of abnormal DNA or epigenetic markers shed from colorectal neoplasms into the feces is therefore a valuable approach. Commercially available stool DNA tests mainly detect DNA mutations, microsatellite instability, impaired DNA mismatch repair, and abnormal DNA methylation. A pilot study of a panel covering 15 point mutations in K-RAS, p53, and APC, together with BAT-26 (a microsatellite instability marker), showed a sensitivity of 91% for CRC and 82% for adenomas ≥1 cm, with a specificity of 93% (Ahlquist et al. 2000). In a large-scale US study, 9899 asymptomatic individuals aged 50–84 years were tested with a multitarget stool DNA panel comprising K-RAS point mutations, aberrantly methylated NDRG4 and BMP3, the β-actin gene (as a control indicator of DNA quantity), and a fecal hemoglobin immunoassay, with colonoscopy as the reference standard and FIT as the comparator. The stool DNA panel had a higher sensitivity than FIT for CRC (92.3% vs. 73.8%) and for advanced precancerous lesions (advanced adenoma or sessile serrated polyp ≥1 cm; 42.4% vs. 23.8%), but a lower specificity, at 86.6% (Imperiale et al. 2014). By combining several detection technologies, the multitarget stool DNA panel detects CRC and early colorectal lesions with higher sensitivity; its main weaknesses for wide application, however, are its much higher cost and lower specificity.
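The tradeoff between sensitivity and specificity when markers are combined can be illustrated with a simple "positive if any marker is positive" rule, assuming the markers are conditionally independent given disease status. This is a deliberately simplified stand-in: the commercial multitarget panel combines its markers with its own scoring algorithm rather than this rule, and real markers are correlated. The per-marker values below are hypothetical.

```python
from math import prod


def any_positive_rule(sensitivities: list[float], specificities: list[float]) -> tuple[float, float]:
    """Combined (sensitivity, specificity) when a subject is positive if ANY marker is positive.

    Assumes the markers are conditionally independent given disease status.
    """
    combined_sensitivity = 1 - prod(1 - s for s in sensitivities)  # missed only if every marker misses
    combined_specificity = prod(specificities)                      # negative only if every marker is negative
    return combined_sensitivity, combined_specificity


# Two hypothetical markers.
sens, spec = any_positive_rule([0.70, 0.60], [0.95, 0.93])
print(f"sensitivity {sens:.3f}, specificity {spec:.3f}")
```

Under this rule, each added marker can only raise sensitivity and lower specificity, mirroring the direction of the differences reported for the multitarget panel relative to FIT.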

3.2.4 Fecal Microbiota as a Potential Biomarker for CRC Screening

Although the role of the gut microbiota in CRC is under active investigation, there is limited information on its real-world application to CRC screening. One study found that increased CRC risk was associated with decreased bacterial diversity in feces, depletion of Gram-positive, fiber-fermenting Clostridia, and increased presence of the Gram-negative, pro-inflammatory genera Fusobacterium and Porphyromonas (Ahn et al. 2013). A retrospective case-control study evaluating FIT combined with microbial markers for CRC and advanced adenoma showed that adding quantitative fecal Fusobacterium nucleatum to FIT significantly increased the detection rate for CRC, with a sensitivity of 92.3% and a specificity of 93.0%; for advanced adenoma, the corresponding values were 38.6% and 89.0%, providing additional information for FIT-based screening programs (Wong et al. 2017). Although longitudinal studies are required to further assess the predictive value of the microbiota as a biomarker, this is a novel and promising approach (Gagniere et al. 2016).

3.3 Blood-Based Tests for Screening

3.3.1 Plasmic Methylated Septin-9

Carcinoembryonic antigen (CEA), a serum glycoprotein, is the CRC marker commonly used to monitor disease recurrence or response to therapy and to predict prognosis; however, it is not recommended for CRC screening because of its low sensitivity and lack of specificity for CRC, especially early-stage CRC (Locker et al. 2006). In contrast, methylation of the SEPT9 gene, a tumor suppressor gene, was identified as a candidate marker by comparing multiple markers in normal colonic epithelium and CRC tissue samples (Lofton-Day et al. 2008). The blood-based SEPT9 methylation assay therefore aims to detect aberrant methylation in the promoter region of SEPT9 DNA released from CRC cells into the peripheral blood (Lofton-Day et al. 2008). Studies of the SEPT9 assay have used a 1/3, 2/3, 1/2, or 1/1 algorithm to define a positive test, where the denominator is the number of replicate PCR assays performed and the numerator is the number of positive PCR reactions required (Song et al. 2017). In a multicenter study that used colonoscopy as the reference standard to evaluate the test for detecting asymptomatic CRC in an average-risk population, the sensitivity was 48.2% and 63.9%, and the specificity 91.5% and 88.4%, with the 1/2 and 1/3 algorithms, respectively; however, the sensitivity for advanced adenoma was low, at 11.2% (Church et al. 2014). In pooled data from a meta-analysis, the SEPT9 assay had a higher sensitivity than FIT (75.6% vs. 67.1%) with similar specificity (90.4% vs. 92.0%) in symptomatic populations, whereas in asymptomatic populations it had both lower sensitivity (68.0% vs. 79.0%) and lower specificity (80.0% vs. 94.0%) than FIT (Song et al. 2017). These results may reflect differences in the ability to detect early-stage neoplasms, which requires further evaluation.
Although approved by the US FDA, the test has insufficient sensitivity for early-stage CRC and advanced adenoma; it is therefore recommended for screening only in individuals who decline the currently recommended screening tests, such as FIT, gFOBT, endoscopy (colonoscopy or flexible sigmoidoscopy), or CTC (Rex et al. 2017).
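The x/y positivity rules described above can be expressed directly. In addition, under the simplifying assumption that replicate PCRs are independent with a fixed per-replicate positivity probability, a binomial calculation shows why a 1/3 rule is more sensitive but less specific than a 2/3 rule. The per-replicate probabilities below are hypothetical, and real replicates are not fully independent, so this illustrates the direction of the effect rather than modeling the assay.

```python
from math import comb


def sept9_call(replicates: list[bool], required_positive: int) -> bool:
    """x/y rule: positive if at least `required_positive` replicate PCRs are positive."""
    return sum(replicates) >= required_positive


def prob_call_positive(p_replicate: float, n_replicates: int, required_positive: int) -> float:
    """P(at least k of n replicates positive), assuming independent replicates."""
    return sum(
        comb(n_replicates, k) * p_replicate**k * (1 - p_replicate) ** (n_replicates - k)
        for k in range(required_positive, n_replicates + 1)
    )


# Hypothetical per-replicate positivity: 0.5 with cancer, 0.05 without.
for k in (1, 2):
    sensitivity = prob_call_positive(0.5, 3, k)
    specificity = 1 - prob_call_positive(0.05, 3, k)
    print(f"{k}/3 rule: sensitivity {sensitivity:.3f}, specificity {specificity:.3f}")
```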

Although several blood biomarkers have been developed for detecting CRC, few have been evaluated in actual screening populations with respect to screening uptake, neoplasm detection, and effectiveness (Elshimali et al. 2013; Gezer et al. 2015). Further studies in screening settings are required before these biomarkers can be used as frontline CRC screening tests.

3.4 Estimation of CRC Risk Based on Screening Test Results

The performance of clinically available screening modalities, with colonoscopy as the reference standard, is summarized for CRC in Table 3.1 and for advanced colorectal neoplasms in Table 3.2. As demonstrated in Fig. 3.2, the posttest probability of disease can be estimated from an individual's baseline risk and the result of a screening test. Strictly, the likelihood ratio multiplies the pretest odds rather than the pretest probability, but when the baseline risk is low the two are nearly equal, so multiplying the probability directly gives a close approximation. For example, in subjects at average risk of CRC, one may expect a CRC prevalence of 0.1%; given a positive FIT result, the posttest probability increases to about 1% (0.1% × a positive likelihood ratio of 10), and colonoscopic follow-up is therefore recommended. By contrast, a negative stool DNA test lowers the posttest probability to about 0.01% (0.1% × a negative likelihood ratio of 0.1), suggesting that such subjects do not need a colonoscopy. In clinical practice, therefore, different tests may have different advantages in ruling subjects in or out according to CRC risk.

Fig. 3.2 Calculation of the posttest probability of an outcome by multiplying an individual's baseline risk by the likelihood ratio of a positive or negative screening test result. We assume that the positive/negative likelihood ratios of the guaiac fecal occult blood test (gFOBT), fecal immunochemical test (FIT), multitarget stool DNA test (stool DNA), and plasmic methylated septin-9 (SEPT9) are 6/0.2, 10/0.2, 5/0.1, and 5/0.5, respectively.
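The calculation behind Fig. 3.2 can be written as a short function. The sketch below applies the likelihood ratio to the pretest odds (the exact odds form of Bayes' theorem) and uses the likelihood ratios assumed in the figure caption; it also shows that the simpler probability-times-ratio shortcut is only safe when the baseline risk is low.

```python
def posttest_probability(pretest_prob: float, likelihood_ratio: float) -> float:
    """Bayes' theorem in odds form: posttest odds = pretest odds x likelihood ratio."""
    if not 0 <= pretest_prob < 1:
        raise ValueError("pretest probability must be in [0, 1)")
    pretest_odds = pretest_prob / (1 - pretest_prob)
    posttest_odds = pretest_odds * likelihood_ratio
    return posttest_odds / (1 + posttest_odds)


# Positive/negative likelihood ratios as assumed in the Fig. 3.2 caption.
LIKELIHOOD_RATIOS = {
    "gFOBT": (6, 0.2),
    "FIT": (10, 0.2),
    "stool DNA": (5, 0.1),
    "SEPT9": (5, 0.5),
}

baseline = 0.001  # 0.1% prevalence in an average-risk population
for test, (lr_pos, lr_neg) in LIKELIHOOD_RATIOS.items():
    pos = posttest_probability(baseline, lr_pos)
    neg = posttest_probability(baseline, lr_neg)
    print(f"{test}: positive -> {pos:.3%}, negative -> {neg:.4%}")

# At a high baseline risk the shortcut fails: 0.3 x 10 would exceed 1.
print(posttest_probability(0.3, 10))
```

At a 0.1% baseline risk, the exact values (about 0.99% after a positive FIT and about 0.01% after a negative stool DNA test) agree closely with the figure; at a 30% baseline risk the shortcut would give an impossible 300%, whereas the odds form gives about 81%.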

3.5 Direct Visualizing Examinations for CRC Screening

3.5.1 Double-Contrast Barium Enema

In double-contrast barium enema (DCBE), the colon is examined with X-rays obtained after the mucosa is coated with barium and the colon is distended with air introduced transrectally. In a comparison study in which DCBE was followed 7–14 days later by same-day CTC and colonoscopy, the sensitivity of DCBE for lesions ≥10 mm and 6–9 mm was 48% and 35%, respectively (Rockey et al. 2005). Because of its low sensitivity, DCBE is not recommended as a first-line option for CRC screening (Sung et al. 2008).

3.5.2 Computed Tomographic Colonography

CTC reconstructs computed tomography images of the cleansed, air-distended colon into two- or three-dimensional endoluminal views (Kay and Evangelou 1996). It has several potential advantages over other CRC screening tests, including a relatively noninvasive technique, rapid imaging of the entire colon, no need for sedation, a low risk of procedure-related complications, and the ability to review extracolonic organs in addition to the colonic mucosa (Pickhardt 2006). In one tandem study (in which each person was screened sequentially with same-day CTC and colonoscopy), the detection rates of advanced neoplasia (advanced adenoma or cancer) were similar for both methods; the sensitivity/specificity of CTC for detecting adenomas or cancers was 65%/89% for lesions ≥5 mm and 90%/86% for lesions ≥10 mm (Johnson et al. 2008). In a randomized trial, detection rates with CTC, as compared with colonoscopy, were similar for CRC (0.5% vs. 0.5%) but lower for all advanced adenomas (5.6% vs. 8.2%) and for advanced adenomas ≥10 mm (5.4% vs. 6.3%); moreover, participation in this population-based screening program was significantly higher with CTC than with colonoscopy (34% vs. 22%) (Stoop et al. 2012). Potential disadvantages of CTC include radiation exposure and the need for follow-up colonoscopy after positive results. In addition, the reported performance relies on specially trained and qualified radiologists, which may not reflect most practice settings, where few radiologists have access to similar training or technology; the generalizability of these findings to community settings is further limited because the participating centers were large academic institutions. Currently, there is limited evidence that a single CTC screening reduces CRC incidence or mortality.

3.5.3 Colon Capsule Endoscopy

The first-generation colon capsule endoscopy (CCE-1) for CRC screening was introduced in 2006; it involves swallowing a pill-shaped device that photographs the gastrointestinal tract as it passes through. However, CCE-1 showed low sensitivity, though high specificity, for detecting large polyps and advanced adenomas, and its accuracy for detecting CRC was limited (Van Gossum et al. 2009). With the introduction of the second-generation CCE (CCE-2) in 2009 and the implementation of more standardized bowel-cleansing protocols, diagnostic accuracy for colonic lesions has increased significantly (Eliakim et al. 2009). In a prospective study of asymptomatic subjects who underwent CCE-2 followed by colonoscopy, the sensitivity and specificity of CCE-2 were 88% (95% CI: 82–93%) and 82% (95% CI: 80–83%), respectively, for detecting adenomas ≥6 mm, and 92% (95% CI: 82–97%) and 95% (95% CI: 94–95%), respectively, for adenomas ≥10 mm (Rex et al. 2015). Although CCE has been shown to be a feasible and exceptionally safe way to visualize the entire colon, its overall accuracy depends largely on bowel cleanliness, and detected lesions still require referral to colonoscopy for confirmation. Its high cost, the larger amount of bowel-cleansing agent required before capsule ingestion, and the inability to perform polypectomy constrain its use as a primary screening modality.

3.5.4 Flexible Sigmoidoscopy

Flexible sigmoidoscopy uses a flexible, 60-cm endoscope to visualize the distal large bowel up to the splenic flexure. It requires only minimal bowel preparation and no sedation, and detected lesions can be excised or biopsied during the same procedure. In a prospective study of advanced adenoma detection in an average-risk screening population who underwent sigmoidoscopy with colonoscopy as the reference standard, the sensitivity and specificity were 73.7% (95% CI: 56.9–86.6%) and 89.3% (95% CI: 85.2–92.7%), respectively (Khalid-de Bakker et al. 2011). An updated meta-analysis of randomized controlled trials estimated relative risks for CRC incidence and mortality after flexible sigmoidoscopy screening of 0.82 (95% CI: 0.75–0.89) and 0.72 (95% CI: 0.65–0.80), respectively (Brenner et al. 2014). Its effectiveness, however, is confined to the distal colon and rectum, and detection of proximal neoplasms depends on colonoscopy referral (Niedermaier et al. 2018).

3.5.5 Colonoscopy

Colonoscopy uses a flexible endoscope 130 to 160 cm long to visualize the entire large bowel and the distal part of the small bowel. It is considered the “gold standard” examination for CRC screening, mainly because of its high sensitivity and specificity for detecting both cancerous and precancerous lesions, and detected lesions can be excised or biopsied during the same procedure. In the National Polyp Study, after 15 years of follow-up, the standardized incidence-based mortality ratio with colonoscopic polypectomy was 0.47 (95% CI: 0.26–0.80), suggesting a 53% reduction in mortality (Zauber et al. 2012). Although colonoscopy screening is recommended for CRC prevention in several European countries and the United States, no randomized trial has yet quantified its benefit. In one long-term observational study, compared with no colonoscopy, the hazard ratios for CRC were 0.57 (95% CI: 0.45–0.72) after polypectomy and 0.44 (95% CI: 0.38–0.52) after a negative colonoscopy (Nishihara et al. 2013). An updated meta-analysis of observational studies estimated relative risks for CRC incidence and mortality after screening colonoscopy of 0.31 (95% CI: 0.12–0.77) and 0.32 (95% CI: 0.23–0.43), respectively (Brenner et al. 2014). Although evidence shows that colonoscopy screening has the potential to prevent cancer throughout the large bowel, it is also associated with higher costs, higher complication rates, and greater demands on colonoscopist capacity.
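Ratios such as those quoted for sigmoidoscopy and colonoscopy are often restated as percentage reductions (for example, 0.47 as a 53% reduction). The small helper below, whose name is illustrative, makes the conversion explicit, including the detail that the confidence bounds swap places: the upper bound of the ratio becomes the lower bound of the reduction.

```python
def percent_reduction(ratio: float, ci_low: float, ci_high: float) -> tuple[float, tuple[float, float]]:
    """Convert a risk ratio and its CI into a percent reduction with its CI.

    Reduction = (1 - ratio) x 100; the CI bounds swap because the transform is decreasing.
    """
    reduction = (1 - ratio) * 100
    return reduction, ((1 - ci_high) * 100, (1 - ci_low) * 100)


# Ratios quoted in Sects. 3.5.4 and 3.5.5.
estimates = {
    "National Polyp Study, CRC mortality": (0.47, 0.26, 0.80),
    "Sigmoidoscopy RCTs, CRC incidence": (0.82, 0.75, 0.89),
    "Sigmoidoscopy RCTs, CRC mortality": (0.72, 0.65, 0.80),
    "Colonoscopy observational studies, CRC mortality": (0.32, 0.23, 0.43),
}
for label, (rr, low, high) in estimates.items():
    red, (red_low, red_high) = percent_reduction(rr, low, high)
    print(f"{label}: {red:.0f}% reduction (95% CI {red_low:.0f}% to {red_high:.0f}%)")
```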

3.6 Options for CRC Screening in Primary Care Setting

The advantages, disadvantages, and recommended intervals of clinically available CRC screening modalities are summarized in Table 3.3. Adherence rates and participant preferences for colonoscopy and FIT differ significantly according to education, marital status, household income, and self-perceived risk of CRC (Wong et al. 2012). An American study found that primary colonoscopic screening may result in a lower completion rate than fecal occult blood testing, and also noted racial/ethnic differences in the completion of fecal occult blood testing and colonoscopy (Inadomi et al. 2012). An Asian study showed that patients who were offered an informed choice (yearly FIT for up to 3 years or one-time colonoscopy) had higher adherence rates than patients who were not offered a choice, suggesting that offering a choice of screening tests is beneficial (Wong et al. 2014). Although no trials have reported long-term findings from direct comparisons of the various screening modalities, simulation studies provide a way to extrapolate the available evidence (Knudsen et al. 2016). In one simulation modeling study assuming 100% adherence, colonoscopy every 10 years, annual FIT, sigmoidoscopy every 10 years with annual FIT, and CTC every 5 years, performed from ages 50 through 75 years, yielded similar life-years gained, indicating that individuals may choose among different strategies while still maximizing benefit (Knudsen et al. 2016).

3.7 Summary

CRC, historically a cancer typical of industrialized countries, is now a very common cancer and cause of cancer death globally. Evidence suggests that its incidence is increasing significantly in most developing countries, heralding an even greater disease burden in the near future. Colonoscopy remains the gold standard for diagnosis, while noninvasive fecal- or blood-based tests, or less invasive imaging tests, may be more suitable for population screening and can guide individualized risk assessment before invasive colonoscopy. Most guidelines recommend screening average-risk individuals aged 50–75 years with a fecal occult blood test (mainly FIT, annually or biennially), with the quantitative FHbC from FIT serving as a population stratification tool for predicting CRC risk. The stool DNA panel test is more sensitive because it combines multiple markers detected in feces; however, its high cost and lower specificity need to be addressed before it can be widely used for population screening, particularly in developing countries. The molecular mechanisms mediating the effect of the environment on CRC pathogenesis provide a new platform for developing novel screening targets. Animal experiments and larger human studies are still needed to elucidate the interplay among the microbiota, the innate immune system, genetic factors, diet, and CRC before active intervention through manipulation of the gut microbiota becomes possible. Noninvasive blood tests, such as plasmic methylated septin-9, have potential as CRC screening tools because they may improve population compliance compared with stool sample collection, but their insufficient performance remains a concern. The high worldwide population risk of CRC ensures the need for continued development in this area to reduce the associated morbidity and mortality.

References

Ahlquist DA, Skoletsky JE, Boynton KA, et al. Colorectal cancer screening by detection of altered human DNA in stool: feasibility of a multitarget assay panel. Gastroenterology. 2000;119:1219–27.

Ahn J, Sinha R, Pei Z, et al. Human gut microbiome and risk for colorectal cancer. J Natl Cancer Inst. 2013;105:1907–11.

Allison JE, Tekawa IS, Ransom LJ, et al. A comparison of fecal occult-blood tests for colorectal-cancer screening. N Engl J Med. 1996;334:155–9.

Benard F, Barkun AN, Martel M, et al. Systematic review of colorectal cancer screening guidelines for average-risk adults: summarizing the current global recommendations. World J Gastroenterol. 2018;24:124–38.

Bishehsari F, Mahdavinia M, Vacca M, et al. Epidemiological transition of colorectal cancer in developing countries: environmental factors, molecular pathways, and opportunities for prevention. World J Gastroenterol. 2014;20:6055–72.

Brenner H, Stock C, Hoffmeister M. Effect of screening sigmoidoscopy and screening colonoscopy on colorectal cancer incidence and mortality: systematic review and meta-analysis of randomised controlled trials and observational studies. BMJ. 2014;348:g2467.

Carroll MR, Seaman HE, Halloran SP. Tests and investigations for colorectal cancer screening. Clin Biochem. 2014;47:921–39.

Chen LS, Yen AM, Chiu SY, et al. Baseline faecal occult blood concentration as a predictor of incident colorectal neoplasia: longitudinal follow-up of a Taiwanese population-based colorectal cancer screening cohort. Lancet Oncol. 2011;12:551–8.

Chiang TH, Lee YC, Tu CH, et al. Performance of the immunochemical fecal occult blood test in predicting lesions in the lower gastrointestinal tract. CMAJ. 2011;183:1474–81.

Chiang TH, Chuang SL, Chen SL, et al. Difference in performance of fecal immunochemical tests with the same hemoglobin cutoff concentration in a nationwide colorectal cancer screening program. Gastroenterology. 2014;147:1317–26.

Chiu HM, Lee YC, Tu CH, et al. Association between early stage colon neoplasms and false-negative results from the fecal immunochemical test. Clin Gastroenterol Hepatol. 2013;11:832–8 e1-2.

Chiu HM, Chen SL, Yen AM, et al. Effectiveness of fecal immunochemical testing in reducing colorectal cancer mortality from the one million Taiwanese screening program. Cancer. 2015;121:3221–9.

Chiu HM, Ching JY, Wu KC, et al. A risk-scoring system combined with a fecal immunochemical test is effective in screening high-risk subjects for early colonoscopy to detect advanced colorectal neoplasms. Gastroenterology. 2016;150:617–25 e3.

Church TR, Wandell M, Lofton-Day C, et al. Prospective evaluation of methylated SEPT9 in plasma for detection of asymptomatic colorectal cancer. Gut. 2014;63:317–25.

Eliakim R, Yassin K, Niv Y, et al. Prospective multicenter performance evaluation of the second-generation colon capsule compared with colonoscopy. Endoscopy. 2009;41:1026–31.

Elshimali YI, Khaddour H, Sarkissyan M, et al. The clinical utilization of circulating cell free DNA (CCFDNA) in blood of cancer patients. Int J Mol Sci. 2013;14:18925–58.

Gagniere J, Raisch J, Veziant J, et al. Gut microbiota imbalance and colorectal cancer. World J Gastroenterol. 2016;22:501–18.

Gezer U, Yoruker EE, Keskin M, et al. Histone methylation marks on circulating nucleosomes as novel blood-based biomarker in colorectal cancer. Int J Mol Sci. 2015;16:29654–62.

Grobbee EJ, van der Vlugt M, van Vuuren AJ, et al. A randomised comparison of two faecal immunochemical tests in population-based colorectal cancer screening. Gut. 2017;66:1975–82.

Hassan C, Giorgi Rossi P, Camilloni L, et al. Meta-analysis: adherence to colorectal cancer screening and the detection rate for advanced neoplasia, according to the type of screening test. Aliment Pharmacol Ther. 2012;36:929–40.

Hundt S, Haug U, Brenner H. Comparative evaluation of immunochemical fecal occult blood tests for colorectal adenoma detection. Ann Intern Med. 2009;150:162–9.

Imperiale TF, Ransohoff DF, Itzkowitz SH, et al. Multitarget stool DNA testing for colorectal-cancer screening. N Engl J Med. 2014;370:1287–97.

Inadomi JM, Vijan S, Janz NK, et al. Adherence to colorectal cancer screening: a randomized clinical trial of competing strategies. Arch Intern Med. 2012;172:575–82.

Inra JA, Syngal S. Colorectal cancer in young adults. Dig Dis Sci. 2015;60:722–33.

Johnson CD, Chen MH, Toledano AY, et al. Accuracy of CT colonography for detection of large adenomas and cancers. N Engl J Med. 2008;359:1207–17.

Kay CL, Evangelou HA. A review of the technical and clinical aspects of virtual endoscopy. Endoscopy. 1996;28:768–75.

Khalid-de Bakker CA, Jonkers DM, Sanduleanu S, et al. Test performance of immunologic fecal occult blood testing and sigmoidoscopy compared with primary colonoscopy screening for colorectal advanced adenomas. Cancer Prev Res (Phila). 2011;4:1563–71.

Knudsen AB, Zauber AG, Rutter CM, et al. Estimation of benefits, burden, and harms of colorectal cancer screening strategies: modeling study for the US preventive services task force. JAMA. 2016;315:2595–609.

Lee JK, Liles EG, Bent S, et al. Accuracy of fecal immunochemical tests for colorectal cancer: systematic review and meta-analysis. Ann Intern Med. 2014;160:171.

Lee YC, Li-Sheng Chen S, Ming-Fang Yen A, et al. Association between colorectal cancer mortality and gradient fecal hemoglobin concentration in colonoscopy noncompliers. J Natl Cancer Inst. 2017;109.

Lee YC, Hsu CY, Chen SL, et al. Effects of screening and universal healthcare on long-term colorectal cancer mortality. Int J Epidemiol. 2019a;48:538–48.

Lee YC, Fann JC, Chiang TH, et al. Time to colonoscopy and risk of colorectal cancer in patients with positive results from fecal immunochemical tests. Clin Gastroenterol Hepatol. 2019b;17:1332–40.

Locker GY, Hamilton S, Harris J, et al. ASCO 2006 update of recommendations for the use of tumor markers in gastrointestinal cancer. J Clin Oncol. 2006;24:5313–27.

Lofton-Day C, Model F, Devos T, et al. DNA methylation biomarkers for blood-based colorectal cancer screening. Clin Chem. 2008;54:414–23.

Niedermaier T, Weigl K, Hoffmeister M, et al. Flexible sigmoidoscopy in colorectal cancer screening: implications of different colonoscopy referral strategies. Eur J Epidemiol. 2018;33:473–84.

Nishihara R, Wu K, Lochhead P, et al. Long-term colorectal-cancer incidence and mortality after lower endoscopy. N Engl J Med. 2013;369:1095–105.

Pickhardt PJ. Incidence of colonic perforation at CT colonography: review of existing data and implications for screening of asymptomatic adults. Radiology. 2006;239:313–6.

Rex DK, Lieberman DA. Feasibility of colonoscopy screening: discussion of issues and recommendations regarding implementation. Gastrointest Endosc. 2001;54:662–7.

Rex DK, Adler SN, Aisenberg J, et al. Accuracy of capsule colonoscopy in detecting colorectal polyps in a screening population. Gastroenterology. 2015;148:948–57 e2.

Rex DK, Boland CR, Dominitz JA, et al. Colorectal cancer screening: recommendations for physicians and patients from the U.S. multi-society task force on colorectal cancer. Gastroenterology. 2017;153:307–23.

Rockey DC. Occult gastrointestinal bleeding. N Engl J Med. 1999;341:38–46.

Rockey DC, Paulson E, Niedzwiecki D, et al. Analysis of air contrast barium enema, computed tomographic colonography, and colonoscopy: prospective comparison. Lancet. 2005;365:305–11.

Siegel RL, Miller KD, Fedewa SA, et al. Colorectal cancer statistics, 2017. CA Cancer J Clin. 2017;67:177–93.

Song L, Jia J, Peng X, et al. The performance of the SEPT9 gene methylation assay and a comparison with other CRC screening tests: a meta-analysis. Sci Rep. 2017;7:3032.

Stoop EM, de Haan MC, de Wijkerslooth TR, et al. Participation and yield of colonoscopy versus non-cathartic CT colonography in population-based screening for colorectal cancer: a randomised controlled trial. Lancet Oncol. 2012;13:55–64.

Sung JJ, Lau JY, Young GP, et al. Asia Pacific consensus recommendations for colorectal cancer screening. Gut. 2008;57:1166–76.

Tinmouth J, Lansdorp-Vogelaar I, Allison JE. Faecal immunochemical tests versus guaiac faecal occult blood tests: what clinicians and colorectal cancer screening programme organisers need to know. Gut. 2015;64:1327–37.

Van Gossum A, Munoz-Navas M, Fernandez-Urien I, et al. Capsule endoscopy versus colonoscopy for the detection of polyps and cancer. N Engl J Med. 2009;361:264–70.

Wong MC, John GK, Hirai HW, et al. Changes in the choice of colorectal cancer screening tests in primary care settings from 7,845 prospectively collected surveys. Cancer Causes Control. 2012;23:1541–8.

Wong MC, Ching JY, Chan VC, et al. Informed choice vs. no choice in colorectal cancer screening tests: a prospective cohort study in real-life screening practice. Am J Gastroenterol. 2014;109:1072–9.

Wong SH, Kwong TNY, Chow TC, et al. Quantitation of faecal Fusobacterium improves faecal immunochemical test in detecting advanced colorectal neoplasia. Gut. 2017;66:1441–8.

Zauber AG, Winawer SJ, O’Brien MJ, et al. Colonoscopic polypectomy and long-term prevention of colorectal-cancer deaths. N Engl J Med. 2012;366:687–96.

Zhu MM, Xu XT, Nie F, et al. Comparison of immunochemical and guaiac-based fecal occult blood test in screening and surveillance for advanced colorectal neoplasms: a meta-analysis. J Dig Dis. 2010;11:148–60.

Copyright information

© 2021 Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter

Chiang, TH., Lee, YC. (2021). Options of Colorectal Cancer Screening: An Overview. In: Chiu, HM., Chen, HH. (eds) Colorectal Cancer Screening. Springer, Singapore. https://doi.org/10.1007/978-981-15-7482-5_3

DOI: https://doi.org/10.1007/978-981-15-7482-5_3

Publisher Name: Springer, Singapore

Print ISBN: 978-981-15-7481-8

Online ISBN: 978-981-15-7482-5

eBook Packages: Medicine, Medicine (R0)