Abstract

Work in a factory is physically demanding and requires workers to perform tasks in various awkward positions. Long work shifts may therefore have lasting effects on workers' physical health. To minimize these risks, we introduce an automatic worker pose estimation system that calculates a worker's body angles and indicates which angles are safe or unsafe for performing tasks in various workplaces. By combining CMU OpenPose with body assessment tools such as the Rapid Entire Body Assessment (REBA) and the Rapid Upper Limb Assessment (RULA), the proposed system automatically determines whether a worker's pose is risky. This method, intended to replace manual analysis of work posture, will help build safer environments for workers.




1 Introduction

In this paper, we argue that pose estimation in ergonomics should rely on digital camera observation rather than manual observation. As populations age worldwide, many countries are seeking suitable ways to increase work efficiency in factories. Many workers leave the industry early due to ill health and musculoskeletal disorders [1]. In Hong Kong, the Pilot Medical Examination Scheme (PMES) for construction workers revealed that 41% of registered workers had musculoskeletal pain [2]. In ergonomics, this problem is addressed by trained staff who analyze a worker's working poses and the types of risk created in their work environment. These manual methods can be inaccurate and inefficient because of subjective bias [3]. They are further limited by frequent changes in work environments, which make it difficult to record a worker's working poses accurately by hand.

Manual observation is currently the standard way to minimize accidents in workplaces, and we argue that this perspective needs to change. Here, we focus mainly on three manual observation techniques used for ergonomic accident minimization:

- OWAS (Ovako Working Analysis System)
- RULA (Rapid Upper Limb Assessment)
- REBA (Rapid Entire Body Assessment)

Although these are popular manual observation methods, they have some limitations. Certain angles of a worker's body pose are not clearly defined and are difficult to inspect manually. The OWAS method, for example, gives no information about posture duration, does not distinguish between the left and right arms, and gives no information about elbow position. Similarly, the other two methods do not provide valid angles for the upper limbs and for body bending during work. RULA is a survey method developed for ergonomic investigations of workplaces where work-related upper limb disorders are reported [4]. REBA is a postural analysis tool sensitive to musculoskeletal risks in a variety of tasks, developed for assessing the working postures found in health care and other service industries [5]. In this paper, we propose a method that uses digital technology to increase the efficiency of these methods.

Ergonomic efficiency is now a necessity in factories. Many companies do not want to lose experienced workers to illness or physical pain. The application of ergonomic principles can increase machine performance and productivity, but its main purpose is to keep human operators comfortable and safe [6]. This matters because a significant number of workers adopt bad work postures. Several studies suggest that such working positions call for new techniques to lower work-related risk. We therefore propose an automatic worker pose estimation system, together with a neck and wrist angle calculation study, as an efficient way to reduce accidents among factory workers. Our main focus is to minimize accidents by estimating a worker's pose and calculating body angles, in order to reduce pain and increase efficiency in work environments.

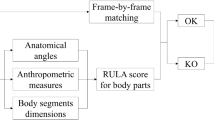

Figure 1 shows our proposed framework for reducing worker accidents. Video frames are passed to CMU OpenPose to locate key joints, from which RULA and REBA scores are calculated. Once the scores and angles are calculated, the system checks them against the risk angles for work posture and generates a warning sign if the posture is risky, so that the worker can stop and correct their posture.

2 Related Work

Recently, many ergonomics studies have aimed to reduce human fatalities. Research has focused on minimizing human workload through manual inspection, for example of work-related musculoskeletal disorders at construction sites [7]. Most of these problems were caused by prolonged work in awkward positions, overhead lifting, squatting, and stooping [8, 9]. Accordingly, many work environment assessment rules have been proposed for hazard monitoring and control. Representative awkward-posture assessment rules include RULA, an ergonomic assessment tool focusing on the upper limbs [4]; OWAS, for identifying and evaluating work postures [10]; ISO 11226:2000, for determining the acceptable angles and holding times of working postures [11]; and EN 1005-4, which provides guidance for assessing machine-related postures and body movements when designing machinery components, i.e. during assembly, installation, operation, maintenance, and repair [12].

With the fast development of data acquisition technology, manual observation is being replaced. A 3D model was recently proposed to imitate and animate manual construction tasks in virtual environments based on RULA [13]. That work also analyzes body joint angles based on traditional ergonomic assessment rules to identify postural ergonomic hazards. Others applied the same method to establish a virtual 3D workplace [14].

Besides manual work posture estimation, many studies have focused on estimating single-person and multi-person poses. Single-person pose estimation mainly focuses on finding joints and their adjacent joints. The traditional approach treats articulated human pose estimation as a combination of body parts [15,16,17]. These are spatial models for articulated poses based either on tree-structured graphical models, which parametrically encode the spatial relationship between adjacent parts following a kinematic chain, or on non-tree models [18,19,20,21]. Tompson et al. applied a deep architecture with a graphical model whose parameters are learned jointly with the network [22]. Pfister et al. used convolutional neural networks (CNNs) to implicitly capture global spatial dependencies by designing networks with large receptive fields, and Wei et al. proposed convolutional pose machines [23, 24]. In ergonomics, some work has improved the accuracy and generalization ability of vision-based ergonomic posture recognition by estimating 3D skeletons and joints from 2D video frames, using a multi-stage CNN architecture that combines a convolutional pose machine with a probabilistic 3D joint estimation model [31].

Similarly, for multi-person pose estimation, most approaches use a top-down strategy that first detects people and then independently estimates the pose within each detected region [25,26,27,28,29]. Pishchulin et al. [30] proposed a bottom-up model that jointly detects and labels candidate parts and associates them with individual people, with pairwise scores regressed from the spatial offsets of detected parts. Significant work has also been done on keypoint association in multi-person 2D pose estimation, encoding both the position and the orientation of human limbs [32]. For construction hazard prevention, ergonomic postures have also been recognized from 2D skeletons, using probability density features of angles and length ratios extracted from 2D skeleton motion data captured by a monocular RGB camera.

3 Worker Pose Estimation System

3.1 Architecture of the Proposed System

Figure 2 illustrates the overall pipeline of our method. The system takes a video as input and uses CMU OpenPose as the baseline algorithm for keypoint detection, on which the REBA and RULA scores are based. Once the keypoints are located, we combine them with REBA and RULA to calculate a risk score, computing all the angles required to detect a faulty or risky posture. We then apply a 2D plane coordinate method to calculate the elevation angle between the shoulder and head keypoints, which tells us how much the worker is bending while working. For this calculation, we divide the upper body, which we call the sagittal part, into the limbs and head; treating these as plane angles makes the angles easier to calculate. For the wrist angle, we also need to consider the weight a worker is lifting and the surface the worker is working on. This is the trickiest part, because different surface angles must be considered to evaluate possible risk angles. Finally, the risk angles are evaluated and warning signs are shown: if a worker's keypoints are not clearly located or the bending angles are abnormal, the system issues a warning.
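A minimal sketch of the shoulder-to-head elevation angle described above, assuming 2D image keypoints with y growing downwards (as in OpenPose image output) and measuring the head's tilt from the image vertical. The function name and the use of the vertical as reference are our assumptions, not the exact formulation of the system; REBA itself measures neck flexion relative to the trunk, so a trunk-based reference may be more appropriate in practice.

```python
import math


def neck_elevation_deg(l_shoulder, r_shoulder, head):
    """Hypothetical helper: angle in degrees between the image vertical and the
    line from the shoulder midpoint to a head keypoint (e.g. nose or ear).

    Points are (x, y) pixel coordinates with y growing downwards.
    0 means the head is directly above the shoulders, 90 means level with them.
    """
    mid_x = (l_shoulder[0] + r_shoulder[0]) / 2.0
    mid_y = (l_shoulder[1] + r_shoulder[1]) / 2.0
    dx = head[0] - mid_x
    dy = mid_y - head[1]  # flip the y axis so that "up" is positive
    # atan2(|dx|, dy) is 0 for an upright head and grows as the head bends away.
    return math.degrees(math.atan2(abs(dx), dy))


# Side view: head 50 px above and 50 px in front of the shoulders.
print(round(neck_elevation_deg((100, 200), (100, 200), (150, 150)), 1))  # 45.0
```

Taking the absolute horizontal offset makes forward and backward tilt give the same magnitude; distinguishing flexion from extension would need the sign of `dx` and the worker's facing direction.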

The goal of our system is to digitize the observation of worker posture. To address the problems of manual observation, we propose a smart method that combines CMU OpenPose with manual observation methods. We pass our video data to OpenPose to locate the body joints. Once the joints are located, we consider the sagittal part (upper half) of the body for angular observation and measure the angle between the shoulder and neck with a 2D angular evaluation vector. After the shoulder-head and wrist angles are calculated, we pass them to the risk checking stage, which compares them with ground truth data to decide whether the worker may proceed or should be notified of a possible risk. This reduces the time spent on manual observation and makes posture evaluation much easier and faster.
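The risk checking stage can be sketched as a comparison of each measured angle against a limit. Here we assume, hypothetically, that the limits are the upper bounds of the lowest-scoring REBA ranges (0 to 20° neck flexion, 0 to 15° wrist flexion/extension) and that a missing angle (keypoints not located) should also raise a warning; the ground truth data used by the actual system may differ.

```python
# Hypothetical limits in degrees: upper bounds of the lowest-scoring REBA
# ranges for the neck (0-20 flexion) and wrist (0-15 flexion/extension).
SAFE_LIMITS_DEG = {"neck": 20.0, "wrist": 15.0}


def check_posture(angles):
    """Return a list of warning strings for a single frame.

    `angles` maps a body part name to a measured angle in degrees, or to None
    when OpenPose could not locate the joints needed for that angle.
    A part is flagged when its angle is missing or |angle| exceeds its limit.
    """
    warnings = []
    for part, limit in SAFE_LIMITS_DEG.items():
        angle = angles.get(part)
        if angle is None:
            warnings.append(f"{part}: keypoints not located")
        elif abs(angle) > limit:
            warnings.append(f"{part}: {angle:.1f} deg exceeds {limit:.1f} deg")
    return warnings


print(check_posture({"neck": 32.0, "wrist": 8.0}))
# ['neck: 32.0 deg exceeds 20.0 deg']
```

An empty list corresponds to "proceed working"; any entry would trigger the warning sign.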

4 Neck and Wrist Angle Calculations

To minimize the risk of neck and wrist injuries, we need to calculate appropriate angles for a worker's neck position and wrist movements. For this purpose, we divide the body into two parts: (a) the upper body and (b) the lower body. The upper body includes the limbs, neck, and wrists; we call it the sagittal plane. In this paper, we use the shoulder and head keypoints to calculate the bending of the neck, as expressed by the vector equations below. For the wrist angle, we need to consider different hand gestures and positions, taking into account the weight workers are lifting and the surface they are working on.
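In REBA, the weight being handled enters as a separate load/force score that is added to the posture score, rather than changing the wrist angle itself. A minimal sketch of that standard REBA rule, which could cover the load-dependent part of the wrist assessment (the function name is hypothetical):

```python
def reba_load_score(load_kg, shock_or_rapid_force=False):
    """REBA load/force score: 0 below 5 kg, 1 for 5-10 kg, 2 above 10 kg,
    plus 1 if there is shock or a rapid build-up of force."""
    if load_kg < 5:
        score = 0
    elif load_kg <= 10:
        score = 1
    else:
        score = 2
    return score + (1 if shock_or_rapid_force else 0)


print(reba_load_score(7.5))  # 1
```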

This is essential because the feature points from OpenPose do not include information about the neck and wrist angles. Using OpenPose as a baseline tool for body pose estimation, we can locate the key body joints, including the left and right (L&R) shoulders, elbows, wrists, hips, knees, and ankles, as well as the spine. From the raw data, we locate seventeen joints that help us calculate, from 2D video data sets, the angles between the shoulder and head for neck movements and the wrist angles from elbow movement.
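As a sketch of how raw OpenPose data can be turned into named joints: when run with `--write_json`, OpenPose writes one JSON file per frame, in which each entry of `people` holds a flat `pose_keypoints_2d` list of (x, y, confidence) triplets. The code below assumes OpenPose's default BODY_25 keypoint order and a hypothetical confidence threshold of 0.1, below which a joint is treated as not located; the seventeen joints used in this study would be a subset of these names.

```python
import json

# Assumed keypoint order of OpenPose's BODY_25 model (the COCO model differs).
BODY_25 = [
    "Nose", "Neck", "RShoulder", "RElbow", "RWrist", "LShoulder", "LElbow",
    "LWrist", "MidHip", "RHip", "RKnee", "RAnkle", "LHip", "LKnee", "LAnkle",
    "REye", "LEye", "REar", "LEar", "LBigToe", "LSmallToe", "LHeel",
    "RBigToe", "RSmallToe", "RHeel",
]


def parse_openpose_frame(json_text, min_conf=0.1):
    """Convert one OpenPose JSON frame into a list of {joint: (x, y) or None}.

    `min_conf` is a hypothetical threshold; OpenPose reports undetected
    joints with confidence 0, so they map to None here.
    """
    frame = json.loads(json_text)
    people = []
    for person in frame.get("people", []):
        flat = person["pose_keypoints_2d"]  # [x0, y0, c0, x1, y1, c1, ...]
        joints = {}
        for i, name in enumerate(BODY_25):
            x, y, c = flat[3 * i:3 * i + 3]
            joints[name] = (x, y) if c >= min_conf else None
        people.append(joints)
    return people


flat = [0.0] * 75
flat[3:6] = [320.0, 180.0, 0.92]  # Neck detected, everything else missing
person = parse_openpose_frame(json.dumps({"people": [{"pose_keypoints_2d": flat}]}))[0]
print(person["Neck"], person["Nose"])  # (320.0, 180.0) None
```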

We used a webcam to record video and located joints as defined by OpenPose. We chose widely adopted poses that are representative of human posture, because the degrees of freedom of human body movement are mainly measured at the joints. The pose representation does not contain background information.

Consider two different sagittal surface planes whose surface normals are the vectors n1 and n2, as shown in Fig. 3. Within the sagittal plane of the body, a limb vector is represented by the difference of the joint position vectors:

where \( U_{m-n} \) represents the vector pointing from joint m to joint n, given in 3D coordinates. The projected vector is calculated as:

where \( U_{m-n}^{n_i} \) is the projection of \( U_{m-n} \) onto plane \( n_i \).

For the angle calculation, we consider the left half of the body; the right side can be calculated in a mirrored way. A reference vector is projected onto n2, and the angle \( \theta \) is given by the angle between the projected vectors:

With this method, we can calculate the scoring angles for the shoulders and wrists. Here n1 and n2 are the normals of the reference planes, and θ1 and θ2 are the angles of the left and right shoulders. For the neck bending angle, with θ taken relative to the surface plane, we can calculate the neck and wrist angles using the surface as reference. The method uses the REBA scores shown in Table 1 to calculate the proposed neck and wrist angles.
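As a sketch of the vector method above, assuming the standard definitions of the three quantities (the limb vector \( U_{m-n} = J_n - J_m \) for hypothetical joint positions \( J_m, J_n \); its projection \( U - \frac{U \cdot n_i}{n_i \cdot n_i} n_i \) onto the plane with normal \( n_i \); and \( \theta \) as the arccosine of the normalized dot product of two projected vectors), the calculation can be written with the Python standard library. The helper names, the axis convention, and the example coordinates are all hypothetical.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def limb_vector(joint_m, joint_n):
    """U_{m-n}: vector pointing from joint m to joint n (3D coordinates)."""
    return sub(joint_n, joint_m)


def project_onto_plane(u, normal):
    """Projection of u onto the plane with the given normal: u - (u.n / n.n) n."""
    k = dot(u, normal) / dot(normal, normal)
    return tuple(ui - k * ni for ui, ni in zip(u, normal))


def angle_deg(u, v):
    """Angle between two vectors in degrees (cosine clamped against rounding)."""
    cos_t = dot(u, v) / math.sqrt(dot(u, u) * dot(v, v))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_t))))


# Hypothetical example: x is lateral, so n2 = (1, 0, 0) is the normal of the
# sagittal plane; the reference vector points straight down the trunk.
n2 = (1.0, 0.0, 0.0)
reference = (0.0, -1.0, 0.0)
upper_arm = limb_vector((0.0, 1.4, 0.0), (0.05, 1.1, 0.3))  # shoulder -> elbow
theta = angle_deg(project_onto_plane(upper_arm, n2), project_onto_plane(reference, n2))
print(round(theta, 1))  # 45.0
```

Projecting both vectors onto the same plane before taking the angle removes the lateral component (here the 0.05 offset), so θ measures only forward elevation within that plane.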

5 Experimental Results

5.1 Discussion and Analysis

Here we show ground truth data for a worker's pose at different angles and explain our results. An (x) in Table 1 indicates that a joint was not clearly located. The starting position takes the body at 0° (or −180°) as reference. The angles of the different body parts are measured with reference to the REBA scores, using CMU OpenPose.
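To make the link between measured angles and REBA scores concrete, a minimal sketch of the standard REBA neck and wrist scoring rules follows. The function names are hypothetical, and positive angles are taken as flexion, negative angles as extension.

```python
def reba_neck_score(flexion_deg, twisted_or_side_bent=False):
    """REBA neck score: 1 for 0-20 deg flexion, 2 for more than 20 deg
    flexion or any extension (negative angle), plus 1 if twisted or side-bent."""
    score = 1 if 0 <= flexion_deg <= 20 else 2
    return score + (1 if twisted_or_side_bent else 0)


def reba_wrist_score(flex_ext_deg, deviated_or_twisted=False):
    """REBA wrist score: 1 for 0-15 deg flexion/extension, 2 beyond 15 deg,
    plus 1 if the wrist is deviated or twisted."""
    score = 1 if abs(flex_ext_deg) <= 15 else 2
    return score + (1 if deviated_or_twisted else 0)


print(reba_neck_score(25.0), reba_wrist_score(10.0))  # 2 1
```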

The angles measured from the REBA scores in Table 1 are the angles of the different body key joints, as shown in Fig. 5. We measure the angles of the left and right elbows, wrists, knees, and feet; the measured values are listed in Table 1. When the program cannot clearly identify some key joints, it shows an x, meaning that not all joint values can be calculated from a 2D USB webcam. As a remedy, a multi-angle camera setup could be used to measure all body joints clearly.
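The elbow and knee angles in Table 1 are interior angles at a joint, computed from three keypoints. A minimal 2D sketch, assuming keypoints are (x, y) tuples and that an undetected joint is passed as None, so that an x entry propagates into the result instead of producing a spurious angle (the function name is hypothetical):

```python
import math


def joint_angle_2d(a, b, c):
    """Interior angle ABC in degrees at joint b, e.g. shoulder-elbow-wrist.

    Returns None when any keypoint is missing (shown as x in a results table).
    The result is always in the range [0, 180].
    """
    if a is None or b is None or c is None:
        return None
    ang = math.degrees(
        math.atan2(c[1] - b[1], c[0] - b[0]) - math.atan2(a[1] - b[1], a[0] - b[0])
    )
    ang = abs(ang) % 360.0
    return 360.0 - ang if ang > 180.0 else ang


print(joint_angle_2d((0, 0), (1, 0), (1, 1)))  # 90.0
print(joint_angle_2d(None, (1, 0), (1, 1)))  # None
```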

Using the upper-limb scores evaluated with the CMU OpenPose estimation program, we can calculate the proposed angles to limit workplace hazards for workers. From these angles, we can distinguish high-risk from low-risk angles, and the system then indicates whether to stop or continue working. Bad posture is identified with a warning sign. We are optimistic that this method will be a milestone in identifying the bad work postures of factory workers.

Tables 2 and 3 show REBA scores of the body at different angle positions. They show how angle variation produces different scores, and, based on these scores, we categorize the risk level of the body and the action to be taken. With risk levels ranging from low to high, we can predict the risk of musculoskeletal disorders (MSDs). From Tables 1 and 2, we can easily determine which risk levels are high and which are low. Dividing the body into segments also lets us evaluate movements and score them by risk level. The approach can therefore be a user-friendly tool for calculating REBA scores with less effort and in less time.
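The categorization of final REBA scores into risk levels and actions follows the standard REBA action-level table from the original REBA publication [5]. A sketch of that mapping (the names are hypothetical):

```python
# Standard REBA action levels: (lowest score, highest score, risk, action).
REBA_LEVELS = [
    (1, 1, "negligible", "none necessary"),
    (2, 3, "low", "may be necessary"),
    (4, 7, "medium", "necessary"),
    (8, 10, "high", "necessary soon"),
    (11, 15, "very high", "necessary now"),
]


def reba_risk(score):
    """Map a final REBA score (1-15) to its (risk level, action) pair."""
    for lo, hi, level, action in REBA_LEVELS:
        if lo <= score <= hi:
            return level, action
    raise ValueError(f"REBA score out of range: {score}")


print(reba_risk(6))  # ('medium', 'necessary')
```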

The main focus of our research is to automate worker pose estimation. We use CMU OpenPose to make this easier, but it has limitations: it does not define some body angles. Its main feature is keypoint detection; it provides real-time multi-person 2D and 3D keypoint detection. Because keypoint detection is what our research needs, we use CMU OpenPose as a baseline tool to measure body bending angles and wrist angles.

In our research, we apply body pose estimation to real-time 2D video of a person. Our goal is to make REBA and RULA, the manual observation methods widely used in industry, easier to apply. These methods provide visual indications of risk levels and of where action is needed to lower risk. REBA provides an easy observational postural analysis tool for high-risk whole-body activities. RULA was developed mainly to measure the musculoskeletal risk of sedentary tasks with high upper-body demand. Both produce risk scores on a scale from negligible to very high risk. We use all of these methods to increase the accuracy of pose estimation and make observation smart. Such digital observation can help lower workers' risk in the workplace.
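For RULA, the grand score (1 to 7) maps onto four action levels, as defined in the original RULA publication [4]. A minimal sketch of that mapping (the function name is hypothetical):

```python
def rula_action_level(grand_score):
    """Map a RULA grand score (1-7) to its action level and recommendation."""
    if not 1 <= grand_score <= 7:
        raise ValueError(f"RULA grand score out of range: {grand_score}")
    if grand_score <= 2:
        return 1, "acceptable if not maintained or repeated for long periods"
    if grand_score <= 4:
        return 2, "further investigation needed, changes may be required"
    if grand_score <= 6:
        return 3, "investigation and changes required soon"
    return 4, "investigation and changes required immediately"


print(rula_action_level(5)[0])  # 3
```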

Video was recorded via webcam to simulate the proposed system. For evaluation, we used CMU OpenPose combined with the REBA and RULA tools to determine which poses trigger a warning sign and which positions are appropriate for workers. This evaluation is then used to calculate the movement angles of the neck and wrist, which helps identify hazardous working positions. We measure the movements of the left half of the body only, since the other half is mirrored. Tables 1 and 2 give some body angles as ground truth together with measured data, and Figs. 4 and 5 show snapshots of the experiment with a warning sign when a worker's pose is prone to future accidents.

In this study, we focus on making the manual observation of worker poses digital. As mentioned above, we can calculate the plane angle with reference to the shoulder and head joint locations. For wrist angles, we need to consider whether a load is being carried, since the conditions differ with and without weight in the hand. For this study, we recorded reference videos of poses that workers perform daily in their work environments. From that data set, we calculated the working body pose angles and located the joints to determine which body parts are clearly detected. As Tables 1 and 2 show, there are drawbacks we need to address to increase accuracy: because we collected our data with a 2D webcam, some body parts were not detected at some body angles. Based on CMU OpenPose and the REBA and RULA tools, we developed an automatic worker pose detection system. Figures 5 and 6 show how we combined all three methods in one frame to calculate the desired pose estimation: Fig. 6 shows image frames used for angle evaluation, and Fig. 5 shows frames after evaluation, with warning notations identifying workers' bad poses. However, we had difficulty finding keypoints that were not visible to the webcam; in that case, the output shows random angles for those keypoints. In future work, we will address this problem using a larger data set that includes all work-related poses. Remedies for posture during prolonged working hours will also be a main focus in the near future.

6 Limitations and Ongoing Work on the Proposed System

So far, we have been able to locate keypoints for joints, calculate RULA scores that can predict bending limits, and propose an angle calculation method for joints in pose estimation. Beyond this, there are limitations we are working on, such as how to find a focal point for the head so that it can be located when the neck is bending, since workers move their heads frequently. We need to improve the accuracy of angles, human detection rates, body bending risk angles, and REBA scores. So far, we are using our system on single images; work on groups of moving workers is ongoing. We are also working to ease the complications in wrist angle calculation that arise from the different weights lifted by different workers. At this stage, experimental results are presented for our own collected data, which is preliminary and only suitable for demonstrating an introductory system. We are therefore collecting real workers' data.

7 Conclusion

This paper has presented a smart method for detecting a worker's pose by combining a manual method, REBA, with CMU OpenPose. With CMU OpenPose as our reference, digital REBA score calculation makes observation smart. Because CMU OpenPose gives us clear locations of joints and keypoints, we present an angle calculation method based on those keypoints. Real-time 2D pose estimation helped us visually understand human joint locations and calculate REBA scores.

This study proposed a new smart method for calculating RULA and REBA scores from 2D poses, making it easier to measure work posture and prevent workplace hazards caused by bad work posture. We want to make manual observation smarter and more efficient, with high accuracy, so that workplace hazards can be reduced to lower levels. We therefore provide a smart framework in which hazardous poses can be detected by combining the three methods discussed above. Our results show how good and bad poses are differentiated: whenever there is a bad pose, our system generates a warning signal. This allows workplace hazards to be reduced immediately.

In future work, we will focus on collecting more work-related posture data to address these problems with deep neural networks. We will also update our system for different conditions, for example, when a bad pose is not detected by OpenPose, or when obstacles block the view of the camera installed to monitor a worker's posture.

References

Arndt, C., Robinson, S., Tarp, F.: Parameter estimation for a computable general equilibrium model: a maximum entropy approach. Econ. Model. 19(3), 375–398 (2002)

Straker, L., Campbell, A., Coleman, J., Ciccarelli, M., Dankaerts, W.: In vivo laboratory validation of the physiometer: a measurement system for long-term recording of posture and movements in the workplace. Ergonomics 53(5), 672–684 (2010). https://doi.org/10.1080/00140131003671975

McAtamney, L., et al.: RULA: a survey method for the investigation of work-related upper limb disorders. Appl. Ergon. 24, 91–99 (1993)

Hignett, S., McAtamney, L.: Rapid entire body assessment (REBA). Appl. Ergon. 31(2), 201–205 (2000)

Zaheer, A. et al.: Ergonomics: a work place realities in Pakistan. Int. Posture J. Sci. Technol. 2(1), (2012)

Dieën, J.H.V., Hoozemans, M.J.M., Toussaint, H.M.: Stoop or squat: a review of biomechanical studies on lifting technique. Clin. Biomech. 14(10), 685–696 (1999)

Umer, W., Li, H., Szeto, G.P.Y., Wong, A.Y.L.: Identification of biomechanical risk factors for the development of lower-back disorders during manual rebar tying. J. Constr. Eng. Manage. 143(1), 04016080 (2016)

Chen, J., Qiu, J., Ahn, C.: Construction worker’s awkward posture recognition through supervised motion tensor decomposition. Autom. Constr. 77, 67–81 (2017)

Karhu, O., Kansi, P., Kuorinka, I.: Correcting working postures in industry: a practical method for analysis. Appl. Ergon. 8(4), 199–201 (1977)

Delleman, N., Boocock, M., Kapitaniak, B., Schaefer, P., Schaub, K.: ISO/FDIS 11226: evaluation of static working postures. In: Proceedings of the Human Factors and Ergonomics Society Annual Meeting vol. 44, no. 35, pp. 442–443 (2000)

Delleman, N.J., Dul, J.: International standards on working postures and movements ISO 11226 and EN 1005-4. Ergonomics 50(11), 1809–1819 (2007)

Li, X., Han, S., Gül, M., Al-Hussein, M., El-Rich, M.: 3D visualization-based ergonomic risk assessment and work modification framework and its validation for a lifting task. J. Constr. Eng. Manag. 144(1), 04017093 (2017)

Golabchi, A., Han, S., Seo, J., Han, S., Lee, S., Al-Hussein, M.: An automated biomechanical simulation approach to ergonomic job analysis for workplace design. J. Constr. Eng. Manage. 141(8), 04015020 (2015)

Felzenszwalb, P.F., Huttenlocher, D.P.: Pictorial structures for object recognition. Int. J. Comput. Vis. 61, 55–79 (2005). https://doi.org/10.1023/B:VISI.0000042934.15159.49

Ramanan, D., Forsyth, D.A., Zisserman, A.: Strike a pose: tracking people by finding stylized poses. In: CVPR (2005)

Andriluka, M., Roth, S., Schiele, B.: Monocular 3D pose estimation and tracking by detection. In: CVPR (2010)

Wang, Y., Mori, G.: Multiple tree models for occlusion and spatial constraints in human pose estimation. In: Forsyth, D., Torr, P., Zisserman, A. (eds.) ECCV 2008. LNCS, vol. 5304, pp. 710–724. Springer, Heidelberg (2008). https://doi.org/10.1007/978-3-540-88690-7_53

Sigal, L., Black, M.J.: Measure locally, reason globally: occlusion-sensitive articulated pose estimation. In: CVPR (2006)

Lan, X., Huttenlocher, D.P.: Beyond trees: common-factor models for 2D human pose recovery. In: ICCV (2005)

Karlinsky, L., Ullman, S.: Using linking features in learning non-parametric part models. In: Fitzgibbon, A., Lazebnik, S., Perona, P., Sato, Y., Schmid, C. (eds.) ECCV 2012. LNCS, vol. 7574, pp. 326–339. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-33712-3_24

Tompson, J.J., Jain, A., LeCun, Y., Bregler, C.: Joint training of a convolutional network and a graphical model for human pose estimation. In: NIPS (2014)

Pfister, T., Charles, J., Zisserman, A.: Flowing convnets for human pose estimation in videos. In: ICCV (2015)

Wei, S.-E., Ramakrishna, V., Kanade, T., Sheikh, Y.: Convolutional pose machines. In: CVPR (2016)

He, K., Gkioxari, G., Dollár, P., Girshick, R.: Mask R-CNN. In: ICCV (2017)

Fang, H.-S., Xie, S., Tai, Y.-W., Lu, C.: RMPE: regional multiperson pose estimation. In: ICCV (2017)

Papandreou, G., et al.: Towards accurate multi-person pose estimation in the wild. In: CVPR (2017)

Chen, Y., Wang, Z., Peng, Y., Zhang, Z., Yu, G., Sun, J.: Cascaded pyramid network for multi-person pose estimation. In: CVPR (2018)

Xiao, B., Wu, H., Wei, Y.: Simple Baselines for Human Pose Estimation and Tracking. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11210, pp. 472–487. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01231-1_29

Pishchulin, L., et al.: DeepCut: joint subset partition and labeling for multi person pose estimation. In: CVPR (2016)

Zhang, H., Yan, X., Li, H.: Ergonomic posture recognition using 3D view-invariant features from single ordinary camera. Autom. Constr. 94, 1–10 (2018)

Cao, Z., et al.: Realtime multi-person 2D pose estimation using part affinity fields. In: CVPR (2017)

Yan, X., et al.: Development of ergonomic posture recognition technique based on 2D ordinary camera for construction hazard prevention through view-invariant features in 2D skeleton motion. Adv. Eng. Inf. 34, 152–163 (2017)

Acknowledgement

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF), funded by the Ministry of Education (2018R1D1A1B07047936).


Copyright information

© 2020 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Paudel, P., Choi, KH. (2020). A Deep-Learning Based Worker’s Pose Estimation. In: Ohyama, W., Jung, S. (eds) Frontiers of Computer Vision. IW-FCV 2020. Communications in Computer and Information Science, vol 1212. Springer, Singapore. https://doi.org/10.1007/978-981-15-4818-5_10


DOI: https://doi.org/10.1007/978-981-15-4818-5_10


Publisher Name: Springer, Singapore

Print ISBN: 978-981-15-4817-8

Online ISBN: 978-981-15-4818-5

eBook Packages: Computer Science, Computer Science (R0)