Abstract

SAR ship detection based on deep learning has wide application, however there exist the following three problems for SAR ship detection. Firstly, the ships in the port are seriously disturbed by the onshore buildings. The existing detection methods cannot effectively distinguish the target from the background. Secondly, the algorithm cannot accurately locate the closely arranged ship targets. Finally, the ships in SAR images have a variety of scales, and the existing algorithms have poor positioning effect on ship targets of different scales. To solve the above problems, this paper proposes an object detection network which combines attention mechanism to enhance the network’s ability to accurately locate targets in complex background. To deal with the diversity of ship target scales, we propose a loss function that incorporates Generalized Intersection over Union (GIoU) loss to reduce the sensitivity of the algorithm to scale. The proposed algorithm achieves good results for ship target detection in complex backgrounds based on the extended SAR Ship Detection Dataset (SSDD), while maintaining a fast detection speed.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

With the development of maritime trade, ships play an increasingly important role in the process of marine development and transportation. Monitoring and controlling ships can effectively improve the efficiency of marine transportation and reduce maritime traffic accidents. Synthetic Aperture Radar (SAR) is widely used in marine ship detection because of its advantages of all-day, strong anti-jamming ability and strong penetration [1]. In recent years, the rapid development of TerraSAR-X, RADARSAT-2 and Sentinel-1 has promoted the research of ship target detection in SAR images [2].

With the powerful feature extraction ability of convolutional neural network, deep learning has achieved great success in object detection tasks. Object detection methods based on deep learning are mainly divided into two categories: two-stage detection algorithms such as Faster R-CNN [3] and single-stage detection algorithms such as SSD [4], RFBnet [5]. Two-stage detection algorithm has high positioning accuracy, and in contrast, single-stage detection algorithm has absolute advantage in terms of speed. Both algorithms are widely used in automatic driving, intelligent security, remote sensing detection and other fields. In SAR image object detection task, compared with the traditional Constant False Alarm Rate (CFAR) algorithm, the ship detection algorithm based on deep learning does not require complex modeling process, thus it has attracted more attention and research from scholars. Li et al. applied the improved Faster R-CNN detection algorithm to ship detection in SAR images [6]. Kang et al. combined the traditional CAFR algorithm with Faster R-CNN [7]. Jiao et al. proposed a Fast R-CNN SAR image ship detection method with dense connections [8]. However, there are still some problems for ship detection in SAR images based on deep learning. Firstly, the background of the ship adjacent to the port is complex, and is seriously disturbed by the wharf and shore buildings. The algorithms above cannot effectively distinguish the target from the background and effectively segment the closely arranged ship targets. Secondly, the SAR ship target has the diversity of scale, and for the small-scale ship target, these algorithms cannot effectively detect and locate. Finally, the ship detection algorithm based on deep learning mostly adopts the two-stage detection framework based on Faster R-CNN, which pays more attention on the detection accuracy and ignores the detection speed, thus failing to detect the target in real time.

Visual attention model has been widely applied in object detection, object recognition, object tracking and other fields [9]. The core idea of attention mechanism is to make the model learn to focus on the key information and ignore the irrelevant information. Target detection method based on visual attention mechanism usually obtains saliency feature map through attention model, and then calibrates the target in the image by analyzing saliency map. In the task of ship detection, Song et al. combined the sparse saliency of the target through the attention model with the Local Binary Pattern (LBP), and proposed an automatic ship detection algorithm applied to optical satellite image. The algorithm has good robustness to the interference of cloud and light [10]. With the development of deep learning in the field of computer vision, it is becoming more and more important to build a neural network with attention mechanism. On the one hand, the neural network can learn the attention mechanism independently. On the other hand, the attention mechanism can contribute to us understanding the neural network in turn [11]. In terms of the combination of visual attention mechanism and neural network, Wang et al. proposed a residual network combining attention model, which achieved good results in image classification [12]. Zheng et al. proposed a component learning method of convolutional neural network based on multi-attention model, which enabled the network to obtain better fine-grained features of images [13].

When building SAR ship detection model based on convolution neural network, we need to fully consider the difference between optical image and SAR image, and design convolution neural network model pertinently. In this paper, we propose a single-stage object detection algorithm which combines attention mechanism to solve the existing problems of ship detection in SAR images. The contribution of this paper is threefold. Firstly, in view of the complex background and the difficulty of distinguishing the target from the background in SAR images, we integrate the attention mechanism into the feature pyramid to obtain salient feature maps of different depths, and fuse these features of different depths, which improves the accuracy of the network to detect and locate ship targets in complex background and closely arranged. Secondly, GIoU Loss [14] is introduced into the loss function to reduce the sensitivity of the network to scale. Finally, we apply the single-stage detection algorithm to the task of ship detection in SAR image, and realize the real-time detection of ship target in SAR image.

The rest of the paper is organized as follows. Section 2 illustrates our proposed method and network structure. Section 3 presents the experiments and the results. The paper is concluded in Sect. 4.

2 Method

This paper proposes a ship detection method based on attention mechanism in SAR image. The main flow of the algorithm is as follows. Firstly, feature extraction network is constructed by residual module to obtain multi-level target mapping features. Secondly, the saliency of mapping features is enhanced by attention mechanism to obtain saliency feature maps; then the features expressed in different depths are fused by feature fusion method; on the fused feature maps, the position and confidence scores of targets are predicted. Finally, the predicted values are filtered by non-maximum suppression NMS, and the final detection results are obtained.

2.1 Construction of Feature Extraction Network

To deal with the characteristics of ship target in SAR image, we use Inception-ResNet. [15] as the basic unit to construct feature network and acquire image feature pyramid. The network structure in the algorithm is shown in the Fig. 1. The residual part of the residual module is replaced by Inception module in the network. By the extension of Inception module, the transmission ability of the network to the upper information is enhanced. The introduced shortcut method solves the phenomenon of gradient disappearance and makes the network deeper. We superimpose two convolutions of 3 * 3 size in Inception branch, so that we can get the same size of Receptive Fields (RF) as 5 * 5 [16]. The larger Receptive Fields can get a wider range of information, which is conducive to distinguishing ship targets from complex background. The Inception module is introduced to form a multi-branch convolution structure. The convolution cores of different sizes in each branch increase the diversity of feature information obtained. In the network, 1 * 1 convolution channel is adopted to reduce the dimension, which reduces the number of parameters of each inception model. At the same time, linear convolution is used for dimension stitching to match the dimension of input and output.

2.2 Object Detection Network with Attention Mechanism

The innovation of this paper is to integrate the attention mechanism into the detection network and obtain salient features of ship targets through the attention mechanism, so as to obtain more accurate location information.

The attention model in this paper is mainly composed of two branches: convolution branch and mask branch. Among them, the network structure of the mask branch is an hourglass-like symmetrical structure, including two stages of convolution and deconvolution, as shown in the Fig. 2.

In the process of convolution and deconvolution, the input feature map is firstly Maxpooling to extract the representative activation values in the receptive field; then the high-dimensional features of the target image are obtained through the convolution layer; and finally the corresponding mask is learned through the deconvolution network. Continuous pooling operations results in the loss of location information, which is not conducive to the accurate location of the target in the detection task. Therefore, in the process of deconvolution network, Unpooling is introduced to reconstruct the size of the original feature map [17]. At the same time, we add a dense connection method to fuse the features of different layers, which further highlights the information characteristics of the mask map. Mask maps act on convolutional feature maps by Eq. (1). In this way, elements in mask maps are similar to the weight of feature maps, which enhances regions of interest and suppresses non-target regions.

where \( A \) is the output of attention model, \( M \) is the output of mask branch, \( C \) is the output of convolution branch, \( i \) is the location of points in space, \( n \) is the number of convolution channels.

The network structure of the attention model is shown in Fig. 3. The saliency features are obtained by multiplying the corresponding elements between the mask map and the feature map. In order to avoid the difference between different levels of feature maps caused by attention model, Sigmoid is used as activation function to normalize the pixel values in mask maps into [0, 1]. However, in the process of constructing the network, multiple attention models are stacked and multiplied, which makes the element values in the feature map smaller and smaller. This will destroy the original characteristics of the network. When the network layer becomes deeper, it is easier to fall into local optimum. Therefore, the idea of identical mapping in Resnet [18] is introduced into the attention model. In the convolution branch, an identical mapping branch is added to the original output. On the one hand, the idea of shortcut solves the gradient problem in the network, which can make the network deeper; on the other hand, through addition operation, the salient features of model output are more obvious, and the discrimination of target features is enhanced. The corresponding network can be described as:

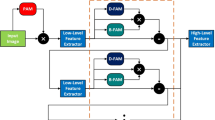

Most of the algorithms use the fusion of shallow location information and deep semantic information to solve the problem of missing target location information in the process of network downsampling [19, 20]. However, ships in SAR images are seriously disturbed by background, and it is difficult to obtain effective location features in shallow network. If effective location information cannot be obtained in shallow network, feature fusion of different depths will also be meaningless. Therefore, a new feature fusion method is proposed in this paper. Firstly, the attention model is fused into the detection network to enhance the saliency of the location information of the target in the shallow features through the attention mechanism. Then, the saliency features of different depths are fused using the structure mode of Feature Pyramid Networks (FPN), which not only retains more semantic information, but also ensures the accuracy of location information. The overall structure of the network is shown in Fig. 4. Firstly, the dimension of the input image is adjusted by 7 * 7 convolution layer, and then downsampling is done by Maxpooling layer. The feature extraction network consists of four stages. Each stage uses Inception-ResNet as the basic unit to construct the feature pyramid, which can enhance the ability to acquire upper information. In each stage, salient feature maps of different depths are obtained by concatenating several attention models in series, and the features of different depths are fused to highlight the advantages of location.

The output of the network is a feature map of three different scales. The algorithm divides the tensor of the feature map into several grids according to the scale. Each grid includes the location attributes of the bounding box and the confidence score of the object. The network output is filtered by confidence threshold and Non-Maximum Suppression (NMS), and the final prediction results are obtained.

2.3 Loss Function

In detection tasks, Mean Squared Error (MSE) Loss is used as a loss function in most detection algorithms to evaluate the effect of bounding box regression. For SAR ship target, the size of ship target varies greatly with different resolution, while MSE Loss is sensitive to scale. Using MSE Loss as loss function will affect the positioning effect of ship target. Therefore, we introduce generalized IoU (GIoU) [15] into loss function to reduce the sensitivity of loss function to scale. The definition of GIoU as follow

Where \( C \) is the smallest enclosed shape that completely contains \( A \) and \( B \). \( \left| {C\backslash \left( {A \cup B} \right)} \right| \) is the area in \( C \) that does not cover \( A \cup B \). GIoU losses can also be defined as \( L_{GIoU} \, = \,1\, - \,GIoU \). In our scheme, the following method is used to calculate \( L_{GIoU} \). Firstly, the coordinates of the predicted bounding box and the real bounding box are obtained by the location information \( x,y,w,h \) of the network prediction.

In Eq. (4), \( x_{1} ,y_{1} ,x_{2} ,y_{2} \) are the coordinate of the predicted bounding box, and the coordinate \( x_{1}^{*} ,y_{1}^{*} ,x_{2}^{*} ,y_{2}^{*} \) of the corresponding real bounding box can also be calculated according to the label. We can calculate the intersection area \( S_{I} \) of these two boxes.

Where \( x_{1}^{I} = \hbox{max} \left( {x_{1}^{{}} ,x_{1}^{*} } \right),x_{2}^{I} = \hbox{min} \left( {x_{2}^{{}} ,x_{2}^{*} } \right) \), \( y_{1}^{I} = \hbox{max} \left( {y_{1} ,x_{1}^{*} } \right),y_{2}^{I} = \hbox{min} \left( {x_{2} ,x_{2}^{*} } \right) \). At the same time, the coordinates of the boundary points of the minimal closed graph \( B_{C} \) can be determined and the corresponding area \( S_{C} \) can be formulated as:

Where \( x_{1}^{C} = \hbox{min} \left( {x_{1} ,x_{1}^{*} } \right),x_{2}^{C} = \hbox{max} \left( {x_{2} ,x_{2}^{*} } \right),y_{1}^{C} = \hbox{min} \left( {y_{1} ,y_{1}^{*} } \right),y_{2}^{C} = \hbox{max} \left( {y_{2} ,y_{2}^{*} } \right) \)

Through the above steps, \( GIoU \) and \( L_{GIoU} \) can be calculated as:

Ship detection belongs to single object detection. The loss function of network consists of two parts: location loss and confidence score loss. The loss function of fusion \( GIoU \) can be expressed as:

In Eq. (9), \( \alpha \) is the balance factor of location loss. \( S \) and \( B \) represent the number of grid partitions and the number of boundaries contained in each grid on the characteristic graph of network prediction. \( \beta \) is the penalty factor, which reduces the impact of bounding box without targets on the loss function. \( C \) and \( C^{*} \) are confidence score and corresponding label of the prediction. Because the output of the algorithm is normalized by the Sigmoid, the cross-entropy loss function as shown in Eq. (10) can get better convergence effect.

3 Experiments

In this section, we describe the experiments carried out in this paper, including network training and analysis of the experimental results.

3.1 Training

Experimental Platform and Dataset Introduction.

All the experiments are implemented on a workstation with the Intel(R) Xeon Silver 4114@2.20 Hz × 20 CPU, NVIDIA GTX TITAN-XP GPU, 128G memory and Pytorch framework. In order to evaluate the detection performance of the model, the open SAR ship object data set SSDD [12] is utilized for the experiments. The data set includes ship objects of different resolutions (1 m to 15 m) and sizes under different backgrounds (coastal, offshore). The scenes diversity of samples ensures that the trained model has stronger generalization ability. In addition, because the ship target is small in low resolution image, it is difficult to judge, so only ship targets with more than three pixels are labeled. Therefore, the data set contains 1160 images with multi-scale ship targets in different scenes. In order to make the trained model more robust, we extend the data set. The specific implementation is that the 12 TerraSAR-X images containing ship targets are cut into small slices and tagged according to the format of PASCAL VOC. Finally, the number of images in the data set is expanded to 1706. The details of extended SSDD are shown in Table 1. We divide the data into training set, verification set and test set according to the ratio of 7:1:2.

Hyper-parameters Selection.

In this paper, the hyper-parameters are selected through many experiments. On the basis of using pre-training weights, the feature extraction network is fine-tuned. The initial learning rate of the feature extraction network is set to 0.001, the initial learning rate of the detection layer is set to 0.01, the attenuation coefficient is 0.1, and the total number of training rounds is 210. The optimization algorithm uses SGD, momentum parameter is 0.9, and the attenuation coefficient is 0.00004, batch size is set to 6 * 3 (GPU parallel operation), the balance factor is 0.5, and the penalty factor is 0.1. The variation of loss function during training is shown in the figure. After 20000 steps of iteration, the network converges completely, and the total loss value is 0. 04483. The loss curve is shown in Fig. 5(a). At the same time, the training time is recorded. As shown in Fig. 5(b), the training time of single sample is about 42 s.

3.2 Object Detection Experiments

Evaluation Metrics.

In order to evaluate the detection effect of the model quantitatively, the performance of the detector is described by the following criteria.

Where \( N_{tp} \) is the correctly detected ship target, \( N_{fp} \) is the incorrectly detected target and \( N_{fn} \) is the missing ship target. We use F1 score to represent the comprehensive performance of the algorithm. We define the predicted bounding box is correct when it has IoU greater 0.5 with a single ground-truth.

Experimental Results.

In order to test the validity of the network model, the ship detection results under different environment conditions in the extended SSDD data set are analyzed, as shown in the Fig. 6. In the first line, we show the detection results of ship targets closely arranged, which is a difficult problem in ship target detection in SAR image. It can be seen that the algorithm in this paper can effectively distinguish closely spaced ships, and also can effectively segment ship targets close to the coast. The second line shows the ship target detection under the ambiguous background. This kind of ship target is characterized by unclear outline and unclear boundary between the target and the background. We can find that the algorithm can effectively distinguish the target from the background. The third line shows the results of ship detection in different sizes and directions in the same image. It can be found that the algorithm can accurately locate the target. The fourth line shows the detection results of small targets with sparse distribution. It can be seen that the algorithm has a better detection effect for small targets and a lower missed detection rate.

Contrast Experiments.

In this paper, through further experiments, the method is compared quantitatively with several mainstream single-stage target detection algorithms based on deep learning in precision, recall, F1 score and detection speed. The quantitative comparison results are shown in the Table 2.

It can be seen from the table that the proposed method achieves the highest precision and recall on the extended SSDD compared with the SSD [4] and RFBNet [5] of different backbone networks. Although it is not as fast as RFBNet, single image detection time of 24 ms can sufficiently achieve real-time detection [21]. It is noted that experiments on different platforms may have some impact on the detection time. In order to intuitively compare the detection results of different algorithms, we show the detection results in Fig. 7. Most of the samples have the same detection results under the three algorithms. In order to show the difference of the detection results more clearly, we select more complex samples for display. It can be seen that the three algorithms can effectively detect ship targets, but in terms of positioning accuracy, the method proposed in this paper has the best effect. At the same time, due to the introduction of attention mechanism, the network can learn more fine features. Compared with the other two algorithms, this algorithm can effectively distinguish closely arranged ships.

4 Conclusions

In this paper, a single-stage object detection algorithm based on attention mechanism is proposed, and the effectiveness of the algorithm is verified on the open data set SSDD. In this paper, we use the modified residual model as the basic unit of feature extraction network, which enhances the network’s ability to acquire upper target information. At the same time, the attention mechanism is integrated into the neural network, which improves the ability to detect and locate the target, and makes the algorithm can effectively distinguish the closely arranged ships. In view of the multi-scale characteristics of ship targets in SAR images, GIoU Loss is introduced into the loss function, which reduces the sensitivity of the network to scale. Another advantage of our proposed algorithm is that it is fast. The detection time of a single image on SSDD is only 24 ms, which can realize real-time ship detection. It should be noted that the algorithm cannot accurately locate ship targets with rotational characteristics, which is a problem we need to solve in the future. The continuous development of SAR will enable us to obtain more high-quality data, which will strongly promote the research of deep learning algorithm in SAR image processing field.

References

Yang, X., Sun, H., Fu, K.: Automatic ship detection in remote sensing images from google earth of complex scenes based on multiscale rotation dense feature pyramid networks. Remote Sens. 10(1), 132 (2018)

Leng, X., Ji, K., Zhou, S.: An adaptive ship detection scheme for spaceborne SAR imagery. Sensors 16(9), 1345 (2016)

Ren, S., He, K., Girshick, R.B.: Faster R-CNN: towards real-time object detection with region proposal networks. In: Neural Information Processing Systems, pp. 91–99. ACM, Montreal (2015)

Liu, W., et al.: SSD: single shot multibox detector. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9905, pp. 21–37. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46448-0_2

Liu, S., Huang, D., Wang, Y.: Receptive field block net for accurate and fast object detection. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11215, pp. 404–419. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01252-6_24

Li, J., Qu, C., Shao, J.: Ship detection in SAR images based on an improved faster R-CNN. In: SAR in Big Data Era: Models, Methods & Applications. IEEE Press, Beijing (2017)

Kang, M., Leng, X., Lin, Z.: A modified faster R-CNN based on CAFR algorithm for SAR ship detection. In: International Workshop on Remote Sensing with Intelligent Processing. IEEE Press, ShangHai (2017)

Jiao, J., Zhang, Y., Sun, H.: A densely connected end-to-end neural network for multiscale and multiscene SAR ship detection. IEEE Access 6(99), 20881–20892 (2018)

Li, W., Wang, P., Qiao, H.: A survey of visual attention based methods for object tracking. Acta Automatica Sinica 40(4), 561–576 (2014)

Song, Z., Sui, H., Wang, Y.: Automatic ship detection for optical satellite images based on visual attention model and LBP. In: IEEE Workshop on Electronics, Computer & Applications. IEEE Press, Ottawa (2014)

Zhang, Q., Nian Wu, Y., Zhu, S.-C.: Interpretable convolutional neural networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 8827–8836. IEEE Press, Salt Lake City (2018)

Wang, F., Jiang, M., Qian, C.: Residual attention network for image classification. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3156–3164. IEEE Press, Hawaii (2017)

Zheng, H., Fu, J., Tao, M.: Learning multi-attention convolutional neural network for fine-grained image recognition. In: IEEE International Conference on Computer Vision. IEEE Press, Hawaii (2017)

Rezatofighi, H., Tsoi, N., Gwak, J.: Generalized intersection over union: a metric and a loss for bounding box regression. arXiv preprint arXiv:1902.09630 (2019)

Szegedy, C., Ioffe, S., Vanhoucke, V.: Inception-v4, inception-ResNet and the impact of residual connections on learning. In: Thirty-First AAAI Conference on Artificial Intelligence, San Francisco (2017)

Szegedy, C., Vanhoucke, V., Ioffe, S.: Rethinking the inception architecture for computer vision. In: Computer Vision & Pattern Recognition. IEEE Press, Las Levas (2016)

Noh, H., Hong, S., Han, B.: Learning deconvolution network for semantic segmentation. In: IEEE International Conference on Computer Vision. IEEE Press, Liverpool (2015)

He, K., Zhang, X., Ren, S.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778. IEEE Press, Las Levas (2016)

Lin, T.-Y., Dollár, P., Girshick, R.: Feature pyramid networks for object detection. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2117–2125. IEEE Press, Hawaii (2017)

Luo, Z., Zhang, H., Zhang, Z., Yang, Y., Li, J.: Object detection based on multiscale merged feature map. In: Wang, Y., Jiang, Z., Peng, Y. (eds.) IGTA 2018. CCIS, vol. 875, pp. 80–87. Springer, Singapore (2018). https://doi.org/10.1007/978-981-13-1702-6_8

Wang, R., Zou, J., Che, M., Xiong, C.: Robust and real-time visual tracking based on single-layer convolutional features and accurate scale estimation. In: Wang, Y., Jiang, Z., Peng, Y. (eds.) IGTA 2018. CCIS, vol. 875, pp. 471–482. Springer, Singapore (2018). https://doi.org/10.1007/978-981-13-1702-6_47

Acknowledgments

This research was supported by the National Natural Science Foundation of China under grant No. 61773389, 61833016, 61573365.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Chen, C., Hu, C., He, C., Pei, H., Pang, Z., Zhao, T. (2019). SAR Ship Detection Under Complex Background Based on Attention Mechanism. In: Wang, Y., Huang, Q., Peng, Y. (eds) Image and Graphics Technologies and Applications. IGTA 2019. Communications in Computer and Information Science, vol 1043. Springer, Singapore. https://doi.org/10.1007/978-981-13-9917-6_54

Download citation

DOI: https://doi.org/10.1007/978-981-13-9917-6_54

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-13-9916-9

Online ISBN: 978-981-13-9917-6

eBook Packages: Computer ScienceComputer Science (R0)