Abstract

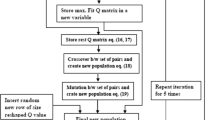

Evolutionary algorithms have taken centre stage in diverse application areas. Reinforcement learning is a novel paradigm that has recently emerged as a major control technique. This paper presents a concise review of reinforcement learning implemented with evolutionary algorithms, e.g. genetic algorithms (GA), particle swarm optimization (PSO) and ant colony optimization (ACO), on several benchmark control problems, e.g. the inverted pendulum, the cart–pole problem and mobile robots. Some techniques combine Q-learning with evolutionary approaches to improve performance. Others use knowledge acquisition to obtain an optimal fuzzy rule set, or genetic reinforcement learning (GRL) to design the consequent parts of fuzzy systems. We also propose a Q-value-based GRL for fuzzy controllers (QGRF), in which evolution is performed after each trial, in contrast to a GA, where many trials must be performed before evolution.

© 2019 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Goyal, P., Malik, H., Sharma, R. (2019). Application of Evolutionary Reinforcement Learning (ERL) Approach in Control Domain: A Review. In: Panigrahi, B., Trivedi, M., Mishra, K., Tiwari, S., Singh, P. (eds) Smart Innovations in Communication and Computational Sciences. Advances in Intelligent Systems and Computing, vol 670. Springer, Singapore. https://doi.org/10.1007/978-981-10-8971-8_25

DOI: https://doi.org/10.1007/978-981-10-8971-8_25

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-8970-1

Online ISBN: 978-981-10-8971-8

eBook Packages: Intelligent Technologies and Robotics (R0)