Abstract

In this paper, visible and infrared sensors are used to capture complementary images of a target scene. Image fusion aims to integrate the two images so that the fused image carries maximum information with as few artifacts as possible. This paper merges the two multisensor images using a combination of Anisotropic Diffusion (AD) and a max–min approach. Each registered source image is decomposed into approximation and detail layers using the AD filter. The max and min fusion rules are then applied to the detail and approximation layers, respectively, to preserve both spectral and structural information. The quality of the fused images is assessed using the structural similarity index (SSIM), fusion factor (FF), and entropy (E), which indicate the effectiveness of the proposed method.

1 Introduction

Image fusion synthesizes registered source images that carry complementary information, enhancing human visual perception. The aim is to produce a new image that carries both the complementary and the common features of the individual images. Multisensor fusion of visible and infrared images has applications in guidance and detection systems, navigation, surveillance, targeting, and remote sensing [1]. Visible images lie in the visible spectrum and are formed by scattering, whereas infrared images are formed by thermal radiation and are related to its brightness. In adverse weather conditions and at night, infrared sensors are preferred over visible sensors [2]. A composite image is therefore needed that loses no information and provides a compact representation of the target scene with improved interpretability. Over the last two decades, the literature has studied several multiresolution decomposition methods, such as the Discrete Wavelet Transform (DWT) [3] and the Laplacian Pyramid (LP) [4], as well as data-driven methods, such as Empirical Mode Decomposition (EMD) [5] and Independent Component Analysis (ICA) [6]. However, these methods lack directional information and anisotropy, and they introduce artifacts in the fused image. To overcome this problem, the AD filter [7] is used; over multiple iterations it separates the image into approximation and detail layers. Various fusion mechanisms have been widely used, such as Principal Component Analysis (PCA) [8] and pixel-based techniques, including Intensity Hue Saturation (IHS), maximum, minimum, averaging, and weighted averaging fusion.
In addition, several other edge-preserving smoothing schemes, such as the guided and bilateral filters [9], are widely used for fusion. These methods [10] produce gradient-reversal artifacts and halo effects [7]. Godse et al. [11] proposed a wavelet fusion technique with a maximum intensity rule, but the fused image suffered from contrast reduction and blurring. Sadhasivam et al. [12] performed fusion using PCA with maximum pixel intensity selection, but the fused image had low contrast and illumination. This discussion shows that artifacts and redundant information must be removed from the fused image so that it contains the complementary information of both source images. The AD-based approach performs intra-region smoothing, preserving edges and removing artifacts [6]. AD processing of the source images preserves both high-frequency information and contrast. The max- and min-based approach suppresses redundant information and enhances features in the fused image. Therefore, a combination of AD and the max–min rules is expected to be well suited to fusing visible and infrared images. In this paper, the source images are decomposed using AD; the min rule is then applied to the approximation layer and the max rule to the detail layer to yield the final fused image. The proposed fusion results are promising when evaluated using SSIM, FF, and E.

2 Proposed Visible-Infrared Fusion Method

The scope of this paper is to perform visible and infrared image fusion using a combination of AD and the max–min fusion rule. The max (min) fusion rule selects, at every pixel location, the maximum (minimum) value among the corresponding pixels of the input images; the selected value becomes the corresponding pixel of the fused image. Thus, every pixel of the fused image is the pixel with the maximum or minimum intensity among the corresponding pixels of the input images. This suppresses redundant information and highlights the components that carry the most information in the source images.
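The max and min rules described above reduce to element-wise array operations. The sketch below is a minimal illustration, assuming two registered grayscale images of equal shape stored as NumPy arrays; the names `max_rule` and `min_rule` are hypothetical and not taken from the paper.

```python
import numpy as np

def max_rule(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pixel-wise maximum: keep the brighter of the two co-located pixels."""
    return np.maximum(a, b)

def min_rule(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pixel-wise minimum: keep the darker of the two co-located pixels."""
    return np.minimum(a, b)

# Toy 2x2 "visible" and "infrared" images (assumed registered, same shape).
vis = np.array([[10.0, 200.0], [50.0, 90.0]])
ir = np.array([[120.0, 30.0], [60.0, 20.0]])

fused_max = max_rule(vis, ir)  # values [[120, 200], [60, 90]]
fused_min = min_rule(vis, ir)  # values [[10, 30], [50, 20]]
```

Because each output pixel depends only on the two co-located input pixels, the rules are cheap and need no parameters, but they rely entirely on accurate registration.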

2.1 Anisotropic Diffusion (AD) Filtering

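Perona and Malik [13] performed nonlinear filtering on source images to produce a base layer and named it the anisotropic diffusion filter. It is an inhomogeneous process that reduces the diffusivity at locations that are likely to be edges. AD uses a flux function to control the diffusion of an image \( I \), which is governed by Eq. (1):

\[ \frac{\partial I}{\partial t} = p(m,n,t)\,\Delta I + \nabla p \cdot \nabla I \tag{1} \]

where \( p(m,n,t) \) is the flux function, also known as the diffusion rate, \( \Delta \) and \( \nabla \) are the Laplacian and gradient operators, respectively, and \( t \) is the iteration. Equation (1) generalizes the heat equation, to which it reduces when \( p \) is constant. Its discrete solution is:

\[ I_{m,n}^{t+1} = I_{m,n}^{t} + \lambda \left[ p_N \cdot \overline{\nabla}_N I + p_S \cdot \overline{\nabla}_S I + p_E \cdot \overline{\nabla}_E I + p_W \cdot \overline{\nabla}_W I \right]_{m,n}^{t} \tag{2} \]

In Eq. (2), \( I_{m,n}^{t + 1} \) represents the coarser image, which depends on the previous coarser image scale, and \( p_N \), \( p_S \), \( p_E \), \( p_W \) are the flux function evaluated on the corresponding directional difference, e.g. \( p_N = p(|\overline{\nabla}_N I|) \). The value of \( \lambda \) lies in the range \( 0 \le \lambda \le 1/4 \). \( \overline{\nabla }_{N} \), \( \overline{\nabla }_{S} \), \( \overline{\nabla }_{E} \), and \( \overline{\nabla }_{W} \) represent the nearest-neighbor differences in the respective directions. They are defined as:

\[ \overline{\nabla}_N I_{m,n} = I_{m-1,n} - I_{m,n} \tag{3} \]
\[ \overline{\nabla}_S I_{m,n} = I_{m+1,n} - I_{m,n} \tag{4} \]
\[ \overline{\nabla}_E I_{m,n} = I_{m,n+1} - I_{m,n} \tag{5} \]
\[ \overline{\nabla}_W I_{m,n} = I_{m,n-1} - I_{m,n} \tag{6} \]

Perona and Malik [13] further proposed two flux functions, given as Eqs. (7) and (8):

\[ p(\nabla I) = \exp\left(-\left(\frac{\lVert \nabla I \rVert}{k}\right)^{2}\right) \tag{7} \]
\[ p(\nabla I) = \frac{1}{1 + \left(\frac{\lVert \nabla I \rVert}{k}\right)^{2}} \tag{8} \]

where \( k \) is a constant that controls the sensitivity to edges.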

Equations (7) and (8) regulate the trade-off between smoothing and edge preservation. Equation (7) is used when high-contrast edges should be favored over low-contrast edges, while Eq. (8) is effective when wide regions dominate over smaller regions in the image [7]. These properties of the conventional AD filter justify its use for multisensor images.
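The discrete AD update of Eq. (2), driven by the four nearest-neighbor differences and either flux function of Eqs. (7) and (8), can be sketched in NumPy as follows. This is an illustrative sketch and not the authors' code. It assumes the standard Perona–Malik exponential and rational flux forms, and the parameter values (`n_iter`, `k`, `lam`) and replicated-border handling are assumptions.

```python
import numpy as np

def flux_exp(grad: np.ndarray, k: float) -> np.ndarray:
    """Exponential flux (Eq. (7) form): favours high-contrast edges."""
    return np.exp(-(np.abs(grad) / k) ** 2)

def flux_rational(grad: np.ndarray, k: float) -> np.ndarray:
    """Rational flux (Eq. (8) form): favours wide regions over small ones."""
    return 1.0 / (1.0 + (np.abs(grad) / k) ** 2)

def anisotropic_diffusion(img, n_iter=10, k=30.0, lam=0.25, flux=flux_exp):
    """Perona-Malik diffusion using the explicit update of Eq. (2).

    n_iter, k and lam are illustrative defaults (assumed), not values from
    the paper. Borders are edge-replicated, so no flux leaves the image.
    """
    if not 0.0 <= lam <= 0.25:
        raise ValueError("lambda must lie in [0, 1/4] for a stable update")
    I = np.asarray(img, dtype=float).copy()
    for _ in range(n_iter):
        P = np.pad(I, 1, mode="edge")
        # Nearest-neighbour differences in the N, S, E and W directions.
        dN = P[:-2, 1:-1] - I   # I[m-1, n] - I[m, n]
        dS = P[2:, 1:-1] - I    # I[m+1, n] - I[m, n]
        dE = P[1:-1, 2:] - I    # I[m, n+1] - I[m, n]
        dW = P[1:-1, :-2] - I   # I[m, n-1] - I[m, n]
        # Eq. (2): flux-weighted sum of the four directional differences.
        I = I + lam * (flux(dN, k) * dN + flux(dS, k) * dS
                       + flux(dE, k) * dE + flux(dW, k) * dW)
    return I

# Usage: a noisy vertical step edge. Small noise differences get a flux
# near 1 and are smoothed; the 100-level step gets a flux near 0 and is kept.
rng = np.random.default_rng(0)
step = np.where(np.arange(32) < 16, 0.0, 100.0)[None, :].repeat(32, axis=0)
noisy_step = step + rng.normal(0.0, 2.0, step.shape)
base = anisotropic_diffusion(noisy_step, n_iter=15, k=15.0)
```

The explicit scheme is conservative: the flux leaving one pixel is exactly the flux entering its neighbor, so the image mean is unchanged while noise inside regions is reduced.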

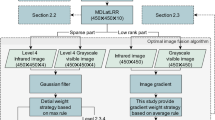

2.2 Image Fusion Algorithm Based on the Combination of AD and Max-Min Rule

The first preprocessing step converts the multisource images from RGB to grayscale. After preprocessing, the visible and infrared (source) images are processed using AD. Applying the AD filter to both registered source images yields their base layers. The procedural steps of the algorithm are detailed in Table 1.
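Table 1 is not reproduced in this text, so the following is only a hedged sketch of the pipeline as described in the prose. It covers RGB-to-grayscale conversion, AD base layers, detail layers, the min rule on the base (approximation) layers, and the max rule on the detail layers. Several details are assumptions, not confirmed by the paper: the BT.601 grayscale weights, the definition detail = source − base, the additive reconstruction, the compact AD (exponential flux only), and all function names (`to_gray`, `ad_base`, `fuse_ad_maxmin`).

```python
import numpy as np

def to_gray(rgb) -> np.ndarray:
    """RGB -> gray with ITU-R BT.601 luma weights (assumed choice)."""
    rgb = np.asarray(rgb, dtype=float)
    if rgb.ndim == 2:  # already single-channel
        return rgb
    return rgb[..., :3] @ np.array([0.299, 0.587, 0.114])

def ad_base(img: np.ndarray, n_iter=10, k=30.0, lam=0.25) -> np.ndarray:
    """Compact Perona-Malik smoothing (exponential flux) -> base layer."""
    I = img.astype(float).copy()
    for _ in range(n_iter):
        P = np.pad(I, 1, mode="edge")
        diffs = [P[:-2, 1:-1] - I, P[2:, 1:-1] - I,
                 P[1:-1, 2:] - I, P[1:-1, :-2] - I]
        I = I + lam * sum(np.exp(-(d / k) ** 2) * d for d in diffs)
    return I

def fuse_ad_maxmin(visible, infrared, **ad_kwargs) -> np.ndarray:
    """Hypothetical AD + max-min fusion sketch.

    Assumes detail = source - base and fused = fused_base + fused_detail;
    the exact steps in Table 1 may differ.
    """
    v, r = to_gray(visible), to_gray(infrared)
    if v.shape != r.shape:
        raise ValueError("source images must be registered and equally sized")
    base_v, base_r = ad_base(v, **ad_kwargs), ad_base(r, **ad_kwargs)
    detail_v, detail_r = v - base_v, r - base_r
    fused_base = np.minimum(base_v, base_r)        # min rule on base layers
    fused_detail = np.maximum(detail_v, detail_r)  # max rule on detail layers
    return fused_base + fused_detail

# Usage with synthetic data standing in for a registered image pair.
rng = np.random.default_rng(1)
vis_rgb = rng.uniform(0, 255, (32, 32, 3))
ir_gray = rng.uniform(0, 255, (32, 32))
fused = fuse_ad_maxmin(vis_rgb, ir_gray, n_iter=5)
print(fused.shape)  # (32, 32)
```

A quick sanity check for this structure is that fusing an image with itself returns the same image, because the min and max of identical layers are the layers themselves.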

After the final fused image is reconstructed using the proposed fusion algorithm, its performance parameters, namely SSIM, FF, and E [14,15,16], are calculated. Higher values of these parameters indicate better fusion quality and support the effectiveness of the proposed algorithm.
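The three quality measures can be computed along the following lines. This sketch makes several assumptions. It assumes 8-bit gray levels (0 to 255) and takes FF as the commonly used sum of mutual information terms MI(A,F) + MI(B,F). It also uses a single-window (global) SSIM instead of the usual sliding-window SSIM. The exact formulations in [14,15,16] may differ, and all function names are hypothetical.

```python
import numpy as np

def entropy(img, levels=256) -> float:
    """Shannon entropy E = -sum p*log2(p) of the gray-level histogram."""
    hist, _ = np.histogram(img, bins=levels, range=(0, levels))
    p = hist / hist.sum()
    p = p[p > 0]  # 0*log(0) is taken as 0
    return float(-(p * np.log2(p)).sum())

def mutual_information(a, f, levels=256) -> float:
    """MI between a source image and the fused image via the joint histogram."""
    joint, _, _ = np.histogram2d(np.ravel(a), np.ravel(f), bins=levels,
                                 range=[[0, levels], [0, levels]])
    p_af = joint / joint.sum()
    p_a = p_af.sum(axis=1, keepdims=True)  # marginal of a (column vector)
    p_f = p_af.sum(axis=0, keepdims=True)  # marginal of f (row vector)
    nz = p_af > 0
    return float((p_af[nz] * np.log2(p_af[nz] / (p_a * p_f)[nz])).sum())

def fusion_factor(a, b, f) -> float:
    """FF = MI(A, F) + MI(B, F) (assumed common definition)."""
    return mutual_information(a, f) + mutual_information(b, f)

def global_ssim(x, y, data_range=255.0) -> float:
    """Single-window SSIM over the whole image (simplified, assumed)."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return float(((2 * mx * my + c1) * (2 * cov + c2))
                 / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2)))
```

In use, E would be computed on the fused image F, FF on the triple (A, B, F), and SSIM between each source and F. How the two SSIM values are combined is not stated here, so that choice is left open.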

3 Results and Discussions

Each visible and infrared image pair is fused in three steps. First, each source image is decomposed into a base layer using AD. Next, the detail layer is obtained. Finally, the corresponding layers of the source images are combined using the max and min fusion rules. The proposed method is tested on four sets of visible and infrared images, IMG-1 (p1, q1, r1) and similarly IMG-2, IMG-3, and IMG-4, to confirm its effectiveness. In IMG-1, the house rooftop and greenery are clearly visible in the image captured by the visible sensor (Fig. 1 (p1)), whereas the roads are not. In the image captured by the infrared sensor (Fig. 1 (q1)), the roads and the person are clearly visible, but the house rooftop and greenery are not. Figure 1 (r1) shows the result of the proposed algorithm, in which the house rooftop, roads, greenery, and person are all visible. The high SSIM values in Table 2 indicate strong structural similarity between the source and fused images, showing that the fused image limits the loss of information. Similarly, in IMG-2, Fig. 1 (p2) clearly shows the letters on the roof and the street lights, whereas the cars and people are not clearly visible. In Fig. 1 (q2), the people and cars are clearly visible, but the street lights and the letters on the roof are not. The fused images show the complementary features of both sources, which indicates the effectiveness of the proposed fusion method. Figure 1 also shows the other test source images and the corresponding fusion results.

The results are evaluated for fusion quality using E, FF, and SSIM. The entropy (E) of the fused image is improved, indicating high information content in the fused image. This is further confirmed by the fusion factor (FF) values in Table 2. The large values of E, FF, and SSIM indicate good performance of the proposed approach across the various source images. Hence, the proposed method preserves both spectral and spatial features.

4 Conclusion

This paper proposes an AD-based fusion method for visible and infrared images that employs the max and min fusion rules. Images acquired with infrared radiation contain few details but high contrast, whereas visible imagery provides plenty of high-frequency information but low contrast. AD processing of the source images preserves both high-frequency information and contrast, which yields better results than other multiresolution decomposition techniques [1, 3, 14]. The AD and max–min-based fusion approach leads to minimal loss of information and few artifacts. This is evident from the results and is confirmed by the fusion metrics E, FF, and SSIM. AD can also be combined with other fusion algorithms, such as the Karhunen–Loeve (KL) transform [7] and the Non-Subsampled Contourlet Transform (NSCT) [17], to obtain better results.

References

[1] Srivastava, A., Bhateja, V., Moin, A.: Combination of PCA and Contourlets for Multispectral Image Fusion. In: International Conference on Data Engineering and Communication Technology (ICDECT-2016), Pune, India, Vol. 2, pp. 1–8, Springer (March 2016).

[2] Bulanon, D. M., Burks, T. F., Alchanatis, V.: Image fusion of visible and thermal images for fruit detection. In: Biosystems Engineering, Vol. 103, No. 1, pp. 12–22, Elsevier (May 2009).

[3] Krishn, A., Bhateja, V., Himanshi, Sahu, A.: Medical Image Fusion Using Combination of PCA and Wavelet Analysis. In: International Conference on Advances in Computing, Communication and Informatics (ICACCI-2014), Gr. Noida (U.P.), India, pp. 986–991, IEEE (September 2014).

[4] Sahu, A., Bhateja, V., Krishn, A., Himanshi: Medical Image Fusion with Laplacian Pyramid. In: International Conference on Medical Imaging, m-Health & Emerging Communication Systems (MEDCom-2014), Gr. Noida (U.P.), India, pp. 448–453, IEEE (November 2014).

[5] Hui, C. S., Hongbo, S., Renhua, S., Jing, T.: Fusing remote sensing images using à trous wavelet transform and empirical mode decomposition. In: Pattern Recognition Letters, Vol. 29, No. 3, pp. 330–342, Elsevier (1 February 2008).

[6] Mitianoudis, N., Stathaki, T.: Pixel-based and region-based image fusion schemes using ICA bases. In: Information Fusion, Vol. 8, No. 2, pp. 131–142, Elsevier (2007).

[7] Bavirisetti, D. P., Dhuli, R.: Fusion of Infrared and Visible Sensor Images Based on Anisotropic Diffusion and Karhunen-Loeve Transform. In: IEEE Sensors Journal, Vol. 16, No. 1, pp. 203–209, IEEE (14 September 2015).

[8] He, C., Liu, Q., Li, H., Wang, H.: Multimodal Medical Image Fusion Based on IHS and PCA. In: Symposium on Security Detection and Information Processing, Vol. 7, pp. 280–285, Elsevier (2010).

[9] He, K., Sun, J., Tang, X.: Guided Image Filtering. In: IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 35, No. 6, pp. 1397–1408, IEEE (June 2013).

[10] Li, S., Kang, X., Hu, J.: Image Fusion with Guided Filtering. In: IEEE Transactions on Image Processing, Vol. 22, No. 7, pp. 2864–2875, IEEE (July 2013).

[11] Godse, D. A., Bormane, D. S.: Wavelet based Image Fusion using Pixel based Maximum Selection rule. In: International Journal of Engineering Science and Technology, Vol. 3, No. 7, pp. 5572–5578 (2011).

[12] Sadhasivam, S. K., Keerthivasan, M. K., Muttan, S.: Implementation of Max Principle with PCA in Image Fusion for Surveillance and Navigation Application. In: Electronic Letters on Computer Vision and Image Analysis, Vol. 10, No. 1, pp. 1–10 (2011).

[13] Perona, P., Malik, J.: Scale-Space and Edge Detection Using Anisotropic Diffusion. In: IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 12, No. 7, pp. 629–639 (July 1990).

[14] Himanshi, Bhateja, V., Krishn, A., Sahu, A.: Medical Image Fusion in Curvelet Domain Employing PCA and Maximum Selection Rule. In: International Conference on Computers and Communication Technologies (IC3T-2015), Hyderabad, India, Vol. 1, pp. 1–9, IEEE (July 2015).

[15] Moin, A., Bhateja, V., Srivastava, A.: Multispectral Medical Image Fusion using PCA in Wavelet Domain. In: Proc. (ACM-ICPS) Second International Conference on Information and Communication Technology for Competitive Strategies (ICTCS-2016), Udaipur, India, pp. 1–6 (March 2016).

[16] Krishn, A., Bhateja, V., Himanshi, Sahu, A.: PCA based Medical Image Fusion in Ridgelet Domain. In: International Conference on Frontiers in Intelligent Computing Theory and Applications (FICTA-2014), Bhubaneswar, India, Vol. 328, pp. 475–482, Springer (November 2014).

[17] Xiao-bo, Q., Wen, Y. J., Zhi, X. H., Zi-Qian, Z.: Image Fusion Algorithm Based on Spatial Frequency-Motivated Pulse Coupled Neural Networks in Nonsubsampled Contourlet Transform Domain. In: Acta Automatica Sinica, Vol. 34, No. 12, pp. 1508–1514, Elsevier (2008).

Copyright information

© 2018 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Singhal, A., Bhateja, V., Singh, A., Satapathy, S.C. (2018). Visible-Infrared Image Fusion Method Using Anisotropic Diffusion. In: Bhalla, S., Bhateja, V., Chandavale, A., Hiwale, A., Satapathy, S. (eds) Intelligent Computing and Information and Communication. Advances in Intelligent Systems and Computing, vol 673. Springer, Singapore. https://doi.org/10.1007/978-981-10-7245-1_51

DOI: https://doi.org/10.1007/978-981-10-7245-1_51

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-7244-4

Online ISBN: 978-981-10-7245-1

eBook Packages: Engineering, Engineering (R0)