Abstract

This chapter illustrates the application of principal component analysis (PCA) plus statistical hypothesis testing to online damage detection in structures, and to fault detection of an advanced wind turbine benchmark under actuator (pitch and torque) and sensor (pitch angle measurement) faults. A baseline pattern or PCA model is created with the healthy state of the structure using data from sensors. Subsequently, when the structure is inspected or supervised, new measurements are obtained and projected into the baseline PCA model. When both sets of data are compared, both univariate and multivariate statistical hypothesis tests are used to make a decision. In this work, both experimental results (with a small aluminum plate) and numerical simulations (with a well-known benchmark wind turbine) show that the proposed technique is a valuable tool to detect structural changes or faults.

1 Introduction

Principal component analysis (PCA) is a statistical technique that transforms a number of possibly correlated variables into a smaller number of uncorrelated variables called principal components. The basic idea behind PCA is to reduce the dimension of the data while retaining as much as possible of the variation present in these data, see [1]. Applications of PCA can be found in a wide variety of fields, from neuroscience to image processing. This chapter reviews the application of PCA to the detection of structural changes (damage, structural health monitoring) or faults (in the sensors or in the actuators, condition monitoring). We first review how data from sensors are usually represented and show how they are represented differently in this work. We then review the group-scaling preprocessing of the data and, finally, show that PCA plus (univariate and multivariate) statistical hypothesis testing is a valuable tool to detect structural changes or faults.

In a standard application of the principal component analysis strategy in the field of structural health monitoring or condition monitoring, the projections onto the vectorial space spanned by the principal components (scores) allow a visual grouping or separation. In some other cases, two classical indices can be used for damage or fault detection, namely the Q index and Hotelling's \(T^2\) index, see [2]. However, when a visual grouping, clustering or separation cannot be performed with the scores, a more powerful and reliable tool is needed to detect damage or a fault. The approaches proposed in this chapter for damage or fault detection are based on a group scaling of the data and multiway principal component analysis (MPCA) combined with both univariate and multivariate statistical hypothesis testing [3,4,5].

On the one hand, the basic premise of vibration-based structural health monitoring feature selection is that damage will significantly alter the stiffness, mass or energy dissipation properties of a system, which, in turn, alter the measured dynamic response of that system. The healthy structure is first excited by a signal and its dynamic response is measured to create a baseline pattern. Subsequently, the structure to be diagnosed is excited by the same signal and its dynamic response is compared with the pattern, see [6]. In this chapter, these techniques will be applied to an experimental set-up with a smooth-raw aluminium plate.

On the other hand, in the fault detection case (condition monitoring), this chapter applies the techniques to an advanced wind turbine benchmark (numerical simulations). In this case, the only available excitation is the wind. Therefore, guided waves in wind turbines cannot be considered a realistic scenario. In spite of that, the new paradigm described is based on the fact that, even with a different wind field, the fault detection strategy based on PCA and statistical hypothesis testing will be able to detect faults. Offshore wind turbines are attracting growing interest because they have significant advantages over their onshore counterparts, including higher and steadier wind speeds and fewer restrictions thanks to their remoteness from urban areas, see [7]. The main disadvantages of offshore wind farms are high construction costs and high operation and maintenance (O&M) costs, because the turbines must withstand rough weather conditions. Wind turbine O&M is a growing research topic, as these costs are critical to profitability in the offshore wind turbine sector. We believe that PCA plus statistical hypothesis testing has considerable potential in this area. The work described in this chapter is a first step: future work will develop complete fault detection, isolation, and reconfigurable control strategies in response to faults, based on efficient fault feature extraction by means of PCA.

This chapter is divided into five main sections. Section 1 introduces the scope of the chapter. Section 2 presents the experimental set-up and the reference wind turbine where the techniques will be applied and tested. The methodology is stated in Sect. 3. The obtained results are discussed and analyzed in Sect. 4. Finally, Sect. 5 draws the conclusions.

2 Experimental Set-Up and Reference Wind Turbine

The damage and fault detection strategies reviewed in this chapter will be applied to both an experimental set-up and a simulated wind turbine, as described in Sects. 2.1 and 2.2. On the one hand, with respect to the experimental set-up, the analysis of changes in the vibrational properties of a small aluminum plate is used to explain, validate and test the damage detection strategies. As the aluminum plate is always excited by the same signal, this experiment corresponds to guided waves in structures for structural health monitoring. On the other hand, we will address the problem of online fault detection of an advanced wind turbine benchmark under actuator (pitch and torque) and sensor (pitch angle measurement) faults of different types. In this case, the excitation signal is never the same, as it is given by the wind. Even so, with a different wind signal, the fault detection strategy will be able to detect the faults. More precisely, the key idea behind the detection strategy is the assumption that a change in the behavior of the overall system, even with a different excitation, has to be detected.

2.1 Experimental Set-Up

The small aluminium plate (25 cm \(\times \) 25 cm \(\times \) 0.2 cm) in Fig. 1 (top) is used to experimentally validate the approach proposed in this work. The plate is suspended by two elastic ropes in a metallic frame in order to isolate it from environmental noise and remove boundary conditions (Fig. 2). Four piezoelectric transducer discs (PZTs) are attached to the surface, as can be seen in Fig. 1 (bottom). Each PZT is able to produce a mechanical vibration (Lamb waves in a thin plate) if some electrical excitation is applied (actuator mode). In addition, PZTs are able to detect time-varying mechanical response data (sensor mode). In every phase of the experimental stage, just one PZT is used as actuator (exciting the plate) and the rest are used as sensors (thus recording the dynamical response). A total of 100 experiments were performed using the healthy structure: 50 for the baseline (BL) and 50 for testing (Un, which stands for undamaged, is an abbreviation used throughout the chapter). Additionally, nine damages (D1, D2, ..., D9) were simulated by adding different masses at different locations, see Fig. 1 (bottom). For each damage, 50 experiments were performed, resulting in a total of 450 experiments. The excitation is a sinusoidal signal of 112 kHz modulated by a Hamming window, as illustrated in Fig. 3 (top). An example of the signal collected by PZT2 is shown in Fig. 3 (bottom).

2.2 Reference Wind Turbine

The National Renewable Energy Laboratory (NREL) offshore 5-MW baseline wind turbine [8] is used in the simulations of the fault detection strategy. This model is used as a reference by research teams throughout the world to standardize baseline offshore wind turbine specifications and to quantify the benefits of advanced land- and sea-based wind energy technologies. In this work, the wind turbine is operated in its onshore version and in the above-rated wind-speed range. The main properties of this turbine are listed in Table 1.

In this chapter, the proposed fault detection method is SCADA-data based, that is, it uses data already collected at the wind turbine controller. In particular, Table 2 presents assumed available data on a MW-scale commercial wind turbine that is used in this work by the fault detection method.

The reference wind turbine has a conventional variable-speed, variable blade-pitch-to-feather configuration. In such wind turbines, the conventional approach for controlling power-production operation relies on the design of two basic control systems: a generator-torque controller and a rotor-collective blade-pitch controller. In this work, the baseline torque and pitch controllers are utilized, but the generator-converter and the pitch actuators are modeled and implemented externally; i.e., apart from the embedded FAST code. This makes it easier to model different types of faults on the generator and the pitch actuator. The next subsections recall these models and also the wind model used to generate the wind data.

2.2.1 Wind Modeling

The TurbSim stochastic inflow turbulence tool (National Wind Technology Center, Boulder, Colorado, USA) [9] has been used. It provides the ability to drive design code (e.g., FAST) simulations of advanced turbine designs with simulated inflow turbulence environments that incorporate many of the important fluid dynamic features known to adversely affect turbine aeroelastic response and loading.

The generated wind data has the following characteristics: Kaimal turbulence model with intensity set to \(10\%\), logarithmic profile wind type, mean speed is set to 18.2 m/s and simulated at hub height, and the roughness factor is set to 0.01 m.

In this work, every simulation is run with a different wind data set.

2.2.2 Generator-Converter Actuator Model and Pitch Actuator Model

The generator-converter and the pitch actuators are modeled apart from the embedded FAST code, in order to ease the modeling of different types of faults on these parts of the wind turbine.

On the one hand, the generator-converter can be modeled by a first-order differential system [10]:

\[\dot{\tau}_r(t)+\alpha_{gc}\,\tau_r(t)=\alpha_{gc}\,\tau_c(t),\]

where \(\tau _r\) and \(\tau _{c}\) are the real generator torque and its reference (given by the controller), respectively, and we set \(\alpha _{gc}=50\) [8]. The power produced by the generator, \(P_e(t)\), can be modeled by [10]:

\[P_e(t)=\eta_g\,\omega_g(t)\,\tau_r(t),\]

where \(\eta _g\) is the efficiency of the generator and \(\omega _g\) is the generator speed. In the numerical experiments, \(\eta _g=0.98\) is used [10].
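As an illustration, the generator-converter model can be sketched numerically. The snippet below is a minimal sketch that assumes the usual first-order lag form \(\dot{\tau}_r+\alpha_{gc}\tau_r=\alpha_{gc}\tau_c\) and the power expression \(P_e=\eta_g\omega_g\tau_r\); the function names, the forward-Euler step, and the numerical values of the torque reference and generator speed are illustrative assumptions, not values taken from the benchmark.

```python
import numpy as np

def simulate_generator(tau_c, dt=1e-3, alpha_gc=50.0, tau_r0=0.0):
    """Forward-Euler integration of d(tau_r)/dt = alpha_gc * (tau_c - tau_r).

    tau_c is the sampled torque reference; the returned array has the same length.
    """
    tau_c = np.asarray(tau_c, dtype=float)
    tau_r = np.empty_like(tau_c)
    tau_r[0] = tau_r0
    for k in range(len(tau_c) - 1):
        tau_r[k + 1] = tau_r[k] + dt * alpha_gc * (tau_c[k] - tau_r[k])
    return tau_r

def electrical_power(tau_r, omega_g, eta_g=0.98):
    """Generator power P_e = eta_g * omega_g * tau_r."""
    return eta_g * omega_g * tau_r

# Illustrative (assumed) operating point: constant reference torque and speed.
tau_c = np.full(1000, 40000.0)      # N*m, hypothetical reference
tau_r = simulate_generator(tau_c)   # real torque converges to the reference
power = electrical_power(tau_r, omega_g=122.9)  # rad/s, hypothetical speed
```

With \(\alpha_{gc}=50\) the time constant is 0.02 s, so the real torque settles on its reference within a small fraction of a second in this sketch.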

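On the other hand, each of the three pitch actuators is modeled by a second-order linear differential equation relating the pitch angle \(\beta _i(t)\) to its reference u(t) (given by the collective-pitch controller) [10]:

\[\ddot{\beta}_i(t)+2\xi\omega_n\dot{\beta}_i(t)+\omega_n^2\beta_i(t)=\omega_n^2u(t),\quad i=1,2,3,\]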

where \(\omega _n\) and \(\xi \) are the natural frequency and the damping ratio, respectively. In the fault free case, these values are set to \(\omega _n=11.11\) rad/s, and \(\xi =0.6\).

2.2.3 Fault Description

In this chapter, the different faults proposed in the fault tolerant control benchmark [11] will be considered, as gathered in Table 3. These faults selected by the benchmark cover different parts of the wind turbine, different fault types and classes, and different levels of severity.

Usually, pitch systems use either an electric or a fluid power actuator. The fluid power subsystem has lower failure rates and better capability of handling extreme loads than electrical systems; therefore, fluid power pitch systems are preferred on multi-MW size and offshore turbines. However, general issues such as leakage, contamination, component malfunction and electrical faults make current systems work sub-optimally [12]. In this work, faults in the pitch actuator are considered in the hydraulic system, which result in changed dynamics due to either a high air content in the oil (fault 1), or a drop in pressure in the hydraulic supply system due to pump wear (fault 2) or hydraulic leakage (fault 3) [13]. Pitch position sensor faults (faults 5–7) are considered as well.

Pump wear (fault 2) is an irreversible slow process over the years that results in low pump pressure. As this wear is irreversible, the only way to fix it is to replace the pump, which will happen after pump wear reaches a certain level. Meanwhile, the pump will still be operating and the system dynamics will slowly change, while the turbine structure should be able to withstand the effects of this fault. Pump wear after approximately 20 years of operation might result in a pressure reduction to \(75\%\) of the rated pressure, which is reflected by the faulty natural frequency \(\omega _{n}=7.27\) rad/s and a faulty damping ratio of \(\xi =0.75\).

Hydraulic leakage (fault 3) is another irreversible incipient fault, but it is introduced considerably faster than pump wear. Leakage of pitch cylinders can be internal or external [12]. When this fault reaches a certain level, system repair is necessary. If the leakage is too fast (normally due to external leakage), it will lead to a pressure drop, and the preventive procedure is deployed to shut down the turbine before the blade is stuck in an undesired position: if the hydraulic pressure is too low, the hydraulic system will not be able to move the blades, which will cause the actuator to be stuck in its current position, resulting in blade seize. The fast pressure drop is easy to detect (even visually, as it is normally related to external leakage) and requires immediate reaction. The slow hydraulic leakage, however, reduces the dynamics of the pitch system: for a reduction of \(50\%\) of the nominal pressure, the natural frequency under this fault condition is reduced to \(\omega _{n}=3.42\) rad/s and the corresponding damping ratio is \(\xi =0.9\). In this work, the slow (internal) hydraulic leakage is studied.

In contrast to pump wear and hydraulic leakage, high air content in the oil (fault 1) is an incipient reversible process, which means that the air content in the oil may disappear without any repair to the system. The nominal value of the air content in the oil is \(7\%\), whereas the high air content in the oil corresponds to \(15\%\). The effect of such a fault is expressed by the new natural frequency \(\omega _{n}=5.73\) rad/s and the damping ratio of \(\xi =0.45\) (corresponding to the high air content in the oil).
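To make the effect of these parameter changes concrete, the following minimal sketch integrates the second-order pitch actuator model, assumed here in the standard form \(\ddot{\beta}+2\xi\omega_n\dot{\beta}+\omega_n^2\beta=\omega_n^2u\), for a unit step in the reference. The \((\omega_n,\xi)\) pairs are the ones quoted in the text; the function names, the integration scheme, the step size and the 90% rise-time measure are illustrative assumptions.

```python
import numpy as np

# (omega_n [rad/s], xi) pairs quoted in the text for each pitch actuator condition.
PITCH_PARAMS = {
    "fault-free": (11.11, 0.6),
    "high air content (fault 1)": (5.73, 0.45),
    "pump wear (fault 2)": (7.27, 0.75),
    "hydraulic leakage (fault 3)": (3.42, 0.9),
}

def pitch_step_response(omega_n, xi, t_end=5.0, dt=1e-3):
    """Semi-implicit Euler integration of the pitch model for a unit step u(t) = 1."""
    n_steps = int(round(t_end / dt))
    beta = np.zeros(n_steps + 1)
    rate = 0.0
    for k in range(n_steps):
        acc = omega_n**2 * (1.0 - beta[k]) - 2.0 * xi * omega_n * rate
        rate += dt * acc                  # update the pitch rate first
        beta[k + 1] = beta[k] + dt * rate  # then the pitch angle
    return beta

def rise_time(beta, dt=1e-3, level=0.9):
    """Time at which the response first reaches `level` of the unit reference."""
    return float(np.argmax(beta >= level) * dt)

for name, (omega_n, xi) in PITCH_PARAMS.items():
    beta = pitch_step_response(omega_n, xi)
    print(f"{name}: 90% rise time = {rise_time(beta):.3f} s")
```

In this sketch every faulty parameter set responds more slowly than the fault-free actuator, which is the kind of change in dynamics the detection strategy is meant to pick up.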

The generator speed measurement is done using encoders. The gain factor fault (fault 4) is introduced when the encoder reads more marks on the rotating part than actually present, which can happen as a result of dirt or other false markings on the rotating part.

Faults in the pitch position measurement (pitch position sensor fault) are also considered. This is one of the most important failure modes found on actual systems [12, 14]. The origin of these faults is either electrical or mechanical, and it can result in either a fixed value (faults 5 and 6) or a changed gain factor (fault 7) on the measurements. In particular, the fixed value fault should be easy to detect, and, therefore, it is important that a fault detection, isolation, and accommodation scheme be able to deal with this fault. If not handled correctly, these faults will influence the pitch reference position because the pitch controller is based on these pitch position measurements.

Finally, a converter torque offset fault is considered (fault 8). It is difficult to detect this fault internally (by the electronics of the converter controller). However, at the wind turbine level, it can be detected, isolated, and accommodated because it changes the torque balance in the wind turbine power train.

3 Fault Detection Strategy

The overall fault detection strategy is based on principal component analysis and statistical hypothesis testing. A baseline pattern or PCA model is created with the healthy state of the structure (plate or wind turbine) to study. When the current state has to be diagnosed, the collected data is projected using the PCA model. The final diagnosis is performed using statistical hypothesis testing.

The main paradigm of vibration-based structural health monitoring is based on the basic idea that a change in physical properties due to structural changes or damage will cause detectable changes in dynamical responses. This idea is illustrated in Fig. 4, where the healthy structure is excited by a signal to create a pattern. Subsequently, the structure to be diagnosed is excited by the same signal and the dynamic response is compared with the pattern. The scheme in Fig. 4 is also known as guided waves in structures for structural health monitoring [6].

Fig. 4 Guided waves in structures for structural health monitoring. The healthy structure is excited by a signal and the dynamic response is measured to create a baseline pattern. Then, the structure to diagnose is excited by the same signal and the dynamic response is also measured and compared with the baseline pattern. A significant difference in the pattern would imply the existence of a fault.

However, in the case of wind turbines, the only available excitation is the wind. Therefore, guided waves in wind turbines for SHM as in Fig. 4 cannot be considered as a realistic scenario. In spite of that, the new paradigm described in Fig. 5 is based on the fact that, even with a different wind field, the fault detection strategy based on PCA and statistical hypothesis testing will be able to detect some damage, fault or misbehavior. More precisely, the key idea behind the detection strategy is the assumption that a change in the behavior of the overall system, even with a different excitation, has to be detected. The results presented in Sects. 4.3 and 4.4 confirm this hypothesis.

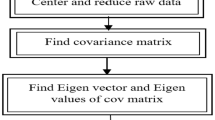

3.1 Data Driven Baseline Modeling Based on PCA

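Classical approaches to the application of principal component analysis can be summarized in the following example. Let us assume that we have N sensors or variables that are measuring during \((L-1)\varDelta \) seconds, where \(\varDelta \) is the sampling time and \(L\in \mathbb {N}\). The discretized measures of each sensor can be arranged as a column vector \(\mathbf {x}^i=(x_1^i,x_2^i,\ldots ,x_L^i)^T,\ i=1,\ldots ,N\), so we can build up an \(L\times N\) matrix as follows:

\[\mathbf{X}=\left(\mathbf{x}^1\,|\,\mathbf{x}^2\,|\cdots|\,\mathbf{x}^N\right)=\begin{pmatrix}x_1^1&x_1^2&\cdots&x_1^N\\ x_2^1&x_2^2&\cdots&x_2^N\\ \vdots&\vdots&\ddots&\vdots\\ x_L^1&x_L^2&\cdots&x_L^N\end{pmatrix}\in\mathscr{M}_{L\times N}(\mathbb{R}). \tag{2}\]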

It is worth noting that each column in matrix X in Eq. (2) represents the measures of a single sensor or variable.

However, when multiway principal component analysis is applied to data coming from N sensors at L discretization instants and n experimental trials, the information can be stored in an unfolded \(n\,{\times }\,(N\times L)\) matrix as follows:

In this case, a column in matrix \(\mathbf {X}\) in Eq. (3) no longer represents the values of a variable at different time instants but the measurements of a variable at one particular time instant in the whole set of experimental trials. The work by Mujica et al. [2] presents one of the first applications of multiway principal component analysis (MPCA) for damage assessment in structures using two different measures or distances (Q and T indices). One of the advantages of the classical approach of principal component analysis is that the largest components (in absolute value) of the unit eigenvector related to the largest eigenvalue give direct information on the most important sensors installed in the structure [15, 16]. This information is no longer available when multiway principal component analysis is applied to the collected data [16]. Another important difference between the classical approach and MPCA lies in normalization. On the one hand, to apply PCA in its classical version, each column vector is normalized to have zero mean and unit variance. On the other hand, in MPCA the normalization has to take into account that several columns contain the information of the same sensor. In this case, several strategies can be applied, such as autoscaling or group scaling. In this work we use the so-called group scaling, which is detailed in Sect. 3.1.3.

3.1.1 Guided Waves in Structures for Structural Health Monitoring: Data Collection

Let us address the PCA modeling by measuring, from a healthy structure, N sensors at L discretization instants and n experimental trials. In this case, since we consider guided waves, the structure is excited by the same signal at each experimental trial. This way, the collected data can be arranged in matrix form as follows:

In this way, each row vector represents, for a particular experimental trial, the measurements from all the sensors at every specific time instant. Similarly, each column vector represents measurements from one sensor at one specific time instant in the whole set of experimental trials. The number of rows of matrix \(\mathbf {X}_{\text {GW}}\) in Eq. (4), n, is defined by the number of experimental trials. The number of columns of matrix \(\mathbf {X}_{\text {GW}}\), \(N\cdot L\), is the number of sensors (N) times the number of discretization instants (L).
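The unfolding described above can be sketched with NumPy. This is a minimal sketch assuming the raw measurements are stored as a three-dimensional array of shape (n trials, N sensors, L samples) and that the columns of the unfolded matrix are grouped sensor by sensor; the function name and the storage layout are illustrative assumptions.

```python
import numpy as np

def unfold_trials(data):
    """Unfold an (n, N, L) array into an n x (N*L) matrix.

    Row i holds trial i: the L samples of sensor 1, then those of sensor 2, etc.
    """
    n, N, L = data.shape
    return data.reshape(n, N * L)

# Toy example: n = 2 trials, N = 3 sensors, L = 4 samples per sensor.
data = np.arange(2 * 3 * 4, dtype=float).reshape(2, 3, 4)
X_gw = unfold_trials(data)
# Columns L..2L-1 of the unfolded matrix are the samples of the second sensor.
second_sensor_block = X_gw[:, 4:8]
```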

3.1.2 Condition Monitoring of Wind Turbines: Data Collection

In the case of wind turbines, the excitation comes from different wind fields. Therefore, instead of considering different experimental trials as in Sect. 3.1.1, we will first measure, from a healthy wind turbine, a sensor during \((nL-1)\varDelta \) seconds, where \(\varDelta \) is the sampling time and \(n,L\in \mathbb {N}\). The discretized measures of the sensor are a real vector

\[\left(x_{11},x_{12},\ldots,x_{1L},x_{21},x_{22},\ldots,x_{2L},\ldots,x_{n1},x_{n2},\ldots,x_{nL}\right)^{T}\in\mathbb{R}^{nL},\]

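where the real number \(x_{ij},\ i=1,\ldots ,n,\ j=1,\ldots ,L\) corresponds to the measure of the sensor at time \(\left( (i-1)L+(j-1)\right) \varDelta \) seconds. This collected data can be arranged in matrix form as follows:

\[\begin{pmatrix}x_{11}&x_{12}&\cdots&x_{1L}\\ x_{21}&x_{22}&\cdots&x_{2L}\\ \vdots&\vdots&\ddots&\vdots\\ x_{n1}&x_{n2}&\cdots&x_{nL}\end{pmatrix}\in\mathscr{M}_{n\times L}(\mathbb{R}), \tag{6}\]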

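where \(\mathscr {M}_{n\,{\times }\, L}(\mathbb {R})\) is the vector space of \(n\,{\times }\, L\) matrices over \(\mathbb {R}\). When the measures are obtained from \(N\in \mathbb {N}\) sensors also during \((nL-1)\varDelta \) seconds, the collected data, for each sensor, can be arranged in a matrix as in Eq. (6). Finally, all the collected data coming from the N sensors are arranged in a matrix \(\mathbf {X}_{\text {WT}}\in \mathscr {M}_{n\,{\times }\, (N\cdot L)}\) as follows:

\[\mathbf{X}_{\text{WT}}=\begin{pmatrix}x_{11}^1&\cdots&x_{1L}^1&x_{11}^2&\cdots&x_{1L}^2&\cdots&x_{11}^N&\cdots&x_{1L}^N\\ \vdots&\ddots&\vdots&\vdots&\ddots&\vdots&\ddots&\vdots&\ddots&\vdots\\ x_{n1}^1&\cdots&x_{nL}^1&x_{n1}^2&\cdots&x_{nL}^2&\cdots&x_{n1}^N&\cdots&x_{nL}^N\end{pmatrix}=\left(\mathbf{X}^1\,|\,\mathbf{X}^2\,|\cdots|\,\mathbf{X}^N\right), \tag{7}\]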

where the superindex \(k=1,\ldots ,N\) of each element \(x_{ij}^k\) in the matrix denotes the sensor index.
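For the wind turbine case, the same arrangement can be sketched by cutting each long sensor record into n consecutive windows of L samples. This is a minimal sketch assuming the records are stored as an array of shape (N sensors, n·L samples); the function name and storage layout are illustrative assumptions.

```python
import numpy as np

def fold_signals(signals, L):
    """Arrange N records of n*L samples each into an n x (N*L) matrix.

    Entry (i, j) of sensor block k is the sample at time (i*L + j) * Delta
    (zero-based indices), matching x_ij^k in the text.
    """
    N, total = signals.shape
    if total % L != 0:
        raise ValueError("record length must be a multiple of L")
    n = total // L
    blocks = [signals[k].reshape(n, L) for k in range(N)]  # one n x L block per sensor
    return np.hstack(blocks)

# Toy example: N = 2 sensors, n = 2 rows, L = 3 samples per row.
signals = np.arange(12, dtype=float).reshape(2, 6)
X_wt = fold_signals(signals, L=3)
```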

It is worth noting that in both approaches (guided waves for structural health monitoring and condition monitoring of wind turbines) the structures of matrices \(\mathbf {X}_{\text {GW}}\) and \(\mathbf {X}_{\text {WT}}\) in Eqs. (4) and (7), respectively, are completely equivalent. Therefore, in the rest of the chapter, we will simply refer to these matrices as \(\mathbf {X}\).

The objective of principal component analysis, as a pattern recognition technique, is to find an orthogonal linear transformation matrix \(\mathbf {P}\in \mathscr {M}_{(N\cdot L)\,{\times }\, (N\cdot L)}(\mathbb {R})\) that will be used to transform or project the original data matrix \(\mathbf {X}\) according to the subsequent matrix product:

\[\mathbf{T}=\mathbf{X}\mathbf{P}\in\mathscr{M}_{n\times(N\cdot L)}(\mathbb{R}),\]

where \(\mathbf {T}\) is a matrix having a diagonal covariance matrix.

3.1.3 Group Scaling

Since the data in matrix \(\mathbf {X}\) come from several sensors and may have different scales and magnitudes, a preprocessing step is required to rescale the data. Each entry is centered with the mean of all measurements of the same sensor in the same column and divided by the standard deviation of all measurements of that sensor [17].

More precisely, for \(k=1,2,\ldots ,N\) we define

\[\mu_j^k=\frac{1}{n}\sum_{i=1}^{n}x_{ij}^k,\quad j=1,\ldots,L, \tag{9}\]

\[\mu^k=\frac{1}{nL}\sum_{i=1}^{n}\sum_{j=1}^{L}x_{ij}^k, \tag{10}\]

\[\sigma^k=\sqrt{\frac{1}{nL}\sum_{i=1}^{n}\sum_{j=1}^{L}\left(x_{ij}^k-\mu^k\right)^2}, \tag{11}\]

where \(\mu _j^k\) is the mean of the measures placed at the same column, that is, the mean of the n measures of sensor k in matrix \(\mathbf {X}^k\) (the n measures of sensor k at the j-th discretization instant for the whole set of experimental trials in the guided-wave case, or the measures of sensor k at time instants \(\left( (i-1)L+(j-1)\right) \varDelta \) seconds, \(i=1,\ldots ,n\), in the wind turbine case); \(\mu ^k\) is the mean of all the elements in matrix \(\mathbf {X}^k\), that is, the mean of all the measures of sensor k; and \(\sigma ^k\) is the standard deviation of all the measures of sensor k. Therefore, the elements \(x_{ij}^k\) of matrix \(\mathbf {X}\) are scaled to define a new matrix \(\check{\mathbf {X}}\) as

\[\check{x}_{ij}^k:=\frac{x_{ij}^k-\mu_j^k}{\sigma^k},\quad i=1,\ldots,n,\ j=1,\ldots,L,\ k=1,\ldots,N. \tag{12}\]

When the data are normalized using Eq. (12), the scaling procedure is called variable scaling or group scaling [18].
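Group scaling can be sketched in a few lines. The snippet is a minimal sketch assuming that the columns of the data matrix are grouped sensor by sensor (N blocks of L columns) and that the standard deviation of each sensor is the population one (divisor nL); the function name and its optional arguments, which allow baseline statistics to be reused on new data, are illustrative assumptions.

```python
import numpy as np

def group_scale(X, N, L, mu=None, sigma=None):
    """Group-scale an n x (N*L) matrix whose columns are grouped by sensor.

    Each entry is centered with its own column mean and divided by the standard
    deviation of all measurements of the corresponding sensor.
    Returns the scaled matrix, the column means (N, L) and the sensor stds (N,).
    """
    n = X.shape[0]
    blocks = X.reshape(n, N, L)
    if mu is None:
        mu = blocks.mean(axis=0)           # mu_j^k, one value per column
    if sigma is None:
        sigma = blocks.std(axis=(0, 2))    # sigma^k, one value per sensor
    scaled = (blocks - mu) / sigma[:, None]
    return scaled.reshape(n, N * L), mu, sigma

rng = np.random.default_rng(0)
X_raw = rng.normal(loc=5.0, scale=[1.0] * 3 + [100.0] * 3, size=(20, 6))  # 2 sensors
X_scaled, mu, sigma = group_scale(X_raw, N=2, L=3)
```

Unlike autoscaling, the columns of the scaled matrix have zero mean but not necessarily unit variance, because all columns of a sensor share one scale factor.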

For the sake of clarity, and throughout the rest of the chapter, the scaled matrix \(\check{\mathbf {X}}\) is renamed as simply \(\mathbf {X}\). The mean of each column vector in the scaled matrix \(\mathbf {X}\) can be computed, in terms of the scaled entries of Eq. (12), as

\[\frac{1}{n}\sum_{i=1}^{n}\check{x}_{ij}^k=\frac{1}{n\sigma^k}\sum_{i=1}^{n}\left(x_{ij}^k-\mu_j^k\right)=\frac{1}{\sigma^k}\left(\frac{1}{n}\sum_{i=1}^{n}x_{ij}^k-\mu_j^k\right)=0.\]

Since the scaled matrix \(\mathbf {X}\) is a mean-centered matrix, it is possible to calculate its covariance matrix as follows:

\[\mathbf{C}_{\mathbf{X}}=\frac{1}{n-1}\mathbf{X}^{\text{T}}\mathbf{X}.\]

The covariance matrix \(\mathbf C _{\mathbf {X}}\) is a \((N\cdot L)\,{\times }\, (N\cdot L)\) symmetric matrix that measures the degree of linear relationship within the data set between all possible pairs of columns. At this point it is worth noting that each column can be viewed as a virtual sensor and, therefore, each column vector \(\mathbf {X}(:,j)\in \mathbb {R}^n, \ j=1,\ldots ,N\cdot L,\) represents a set of measurements from one virtual sensor.

The subspaces in PCA are defined by the eigenvectors and eigenvalues of the covariance matrix as follows:

\[\mathbf{C}_{\mathbf{X}}\mathbf{P}=\mathbf{P}\varLambda,\]

where the columns of \(\mathbf {P}\in \mathscr {M}_{(N\cdot L)\,{\times }\, (N\cdot L)}(\mathbb {R})\) are the eigenvectors of \(\mathbf C _{\mathbf {X}}\). The diagonal terms of matrix \(\varLambda \in \mathscr {M}_{(N\cdot L)\,{\times }\, (N\cdot L)}(\mathbb {R})\) are the eigenvalues \(\lambda _i,\ i=1,\ldots ,N\cdot L,\) of \(\mathbf C _{\mathbf {X}}\) whereas the off-diagonal terms are zero, that is,

\[\varLambda=\operatorname{diag}\left(\lambda_1,\lambda_2,\ldots,\lambda_{N\cdot L}\right).\]

The eigenvectors \(p_j,\ j=1,\ldots ,N\cdot L\), which form the columns of the transformation matrix \(\mathbf {P}\), are sorted according to their eigenvalues in descending order and are called the principal components or the loading vectors of the data set. The eigenvector with the highest eigenvalue, called the first principal component, represents the most important pattern in the data with the largest quantity of information.
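The construction of the baseline PCA model can be sketched as follows. This is a minimal sketch assuming a mean-centered (group-scaled) data matrix and the covariance normalization \(1/(n-1)\); the function name is an illustrative assumption, and `numpy.linalg.eigh` is used because the covariance matrix is symmetric.

```python
import numpy as np

def pca_model(X):
    """Eigendecomposition of the covariance matrix of a mean-centered matrix X.

    Returns the loading matrix P (eigenvectors as columns) and the eigenvalues,
    both sorted by decreasing eigenvalue.
    """
    n = X.shape[0]
    C = X.T @ X / (n - 1)                 # covariance of the columns
    eigvals, eigvecs = np.linalg.eigh(C)  # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]     # reorder to descending
    return eigvecs[:, order], eigvals[order]

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 6))
X -= X.mean(axis=0)                       # stand-in for the scaled baseline matrix
P, eigvals = pca_model(X)
T = X @ P                                 # scores; their covariance is diagonal
```

For very wide matrices (N·L much larger than n), a singular value decomposition of the data matrix is a common alternative to forming the covariance matrix explicitly; the sketch above keeps to the eigenvalue formulation used in the text.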

Matrix \(\mathbf {P}\) is usually called the principal components of the data set or loading matrix, and matrix \(\mathbf {T}\) is the transformed or projected matrix to the principal component space, also called the score matrix. Using all the \(N\cdot L\) principal components, that is, in the full dimensional case, the orthogonality of \(\mathbf {P}\) implies \(\mathbf {PP}^{\text {T}}=\mathbf {I}\), where \(\mathbf {I}\) is the \((N\cdot L)\,{\times }\, (N\cdot L)\) identity matrix. Therefore, the projection can be inverted to recover the original data as

\[\mathbf{X}=\mathbf{T}\mathbf{P}^{\text{T}}.\]

However, the objective of PCA is, as said before, to reduce the dimensionality of the data set \(\mathbf {X}\) by selecting only a limited number \(\ell <N\cdot L\) of principal components, that is, only the eigenvectors related to the \(\ell \) highest eigenvalues. Thus, given the reduced matrix

\[\hat{\mathbf{P}}=\left(p_1\,|\,p_2\,|\cdots|\,p_\ell\right)\in\mathscr{M}_{(N\cdot L)\times\ell}(\mathbb{R}), \tag{21}\]

matrix \(\hat{\mathbf{T }}\) is defined as

\[\hat{\mathbf{T}}=\mathbf{X}\hat{\mathbf{P}}\in\mathscr{M}_{n\times\ell}(\mathbb{R}).\]

Note that, unlike the full projection \(\mathbf {T}\), the reduced projection \(\hat{\mathbf {T}}\) can no longer be inverted. Consequently, it is not possible to fully recover \(\mathbf {X}\), although \(\hat{\mathbf {T}}\) can be projected back onto the original \(N\cdot L\)-dimensional space to get a data matrix \(\hat{\mathbf{X }}\) as follows:

\[\hat{\mathbf{X}}=\hat{\mathbf{T}}\hat{\mathbf{P}}^{\text{T}}\in\mathscr{M}_{n\times(N\cdot L)}(\mathbb{R}).\]

The difference between the original data matrix \(\mathbf X \) and \(\hat{\mathbf{X }}\) is defined as the residual error matrix \(\mathbf {E}\) or \(\tilde{\mathbf {X}}\) as follows:

\[\mathbf{E}=\mathbf{X}-\hat{\mathbf{X}},\]

or, equivalently,

\[\mathbf{X}=\hat{\mathbf{X}}+\mathbf{E}.\]

The residual error matrix \(\mathbf {E}\) describes the variability not represented by the data matrix \(\hat{\mathbf{X }}\), and can also be expressed as

\[\mathbf{E}=\mathbf{X}\left(\mathbf{I}-\hat{\mathbf{P}}\hat{\mathbf{P}}^{\text{T}}\right).\]
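The reduced projection, the back-projection and the residual can be checked numerically. The snippet is a minimal sketch: the function name, the random stand-in data and the choice of two retained components are illustrative assumptions.

```python
import numpy as np

def reduce_and_reconstruct(X, P, ell):
    """Keep the first ell loading vectors and return (T_hat, X_hat, E)."""
    P_hat = P[:, :ell]        # reduced loading matrix, (N*L) x ell
    T_hat = X @ P_hat         # reduced scores, n x ell
    X_hat = T_hat @ P_hat.T   # back-projection onto the original space
    E = X - X_hat             # residual error matrix
    return T_hat, X_hat, E

# Stand-in baseline data and its loading matrix (eigenvalues sorted descending).
rng = np.random.default_rng(0)
X = rng.normal(size=(40, 6))
X -= X.mean(axis=0)
eigvals, eigvecs = np.linalg.eigh(X.T @ X / (X.shape[0] - 1))
P = eigvecs[:, np.argsort(eigvals)[::-1]]

T_hat, X_hat, E = reduce_and_reconstruct(X, P, ell=2)
# The residual agrees with the closed form X (I - P_hat P_hat^T).
closed_form = X @ (np.eye(6) - P[:, :2] @ P[:, :2].T)
```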

Even though the real measures obtained from the sensors as a function of time represent physical magnitudes, when these measures are projected and the scores are obtained, these scores no longer represent any physical magnitude [3]. The key aspect in this approach is that the scores from different experiments can be compared with the reference pattern to try to detect a different behavior.

3.2 Fault Detection Based on Univariate Hypothesis Testing

The current structure to diagnose is subjected to the same excitation (guided waves) or to a wind field (wind turbines), as described in Sects. 3.1.1 and 3.1.2. In Sects. 3.2 and 3.3, the term structure is used generically for the aluminium plate, the wind turbine, or more complex mechanical systems. When the measures are obtained from \(N\in \mathbb {N}\) sensors at L discretization instants and \(\nu \) experimental trials (guided waves) or during \((\nu L-1)\varDelta \) seconds (wind turbines), a new data matrix \(\mathbf {Y}\) is constructed as in Eqs. (4) and (7), respectively:

\[\mathbf{Y}=\left(\mathbf{Y}^1\,|\,\mathbf{Y}^2\,|\cdots|\,\mathbf{Y}^N\right)\in\mathscr{M}_{\nu\times(N\cdot L)}(\mathbb{R}),\quad \mathbf{Y}^k=\left(y_{ij}^k\right)\in\mathscr{M}_{\nu\times L}(\mathbb{R}). \tag{27}\]

It is worth remarking that the natural number \(\nu \) (the number of rows of matrix \(\mathbf {Y}\)) is not necessarily equal to n (the number of rows of \(\mathbf {X}\)), but the number of columns of \(\mathbf {Y}\) must agree with that of \(\mathbf {X}\); that is, in both cases the number N of sensors and the number of samples per row must be equal.

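Before the collected data arranged in matrix \(\mathbf {Y}\) are projected into the new space spanned by the eigenvectors in matrix \(\mathbf {P}\) in Eq. (17), the matrix has to be scaled to define a new matrix \(\check{\mathbf {Y}}\) as in Eq. (12):

\[\check{y}_{ij}^k:=\frac{y_{ij}^k-\mu_j^k}{\sigma^k},\quad i=1,\ldots,\nu,\ j=1,\ldots,L,\ k=1,\ldots,N,\]

where \(\mu _j^k\) and \(\sigma ^k\) are defined in Eqs. (9) and (11), respectively. Note that these are the statistics of the baseline data, not of the new data.

The projection of each row vector

\[r^i=\check{\mathbf{Y}}(i,:)\in\mathbb{R}^{N\cdot L},\quad i=1,\ldots,\nu,\]

of matrix \(\check{\mathbf {Y}}\) into the space spanned by the eigenvectors in \(\hat{\mathbf{P }}\) is performed through the following vector to matrix multiplication:

\[t^i=r^i\cdot\hat{\mathbf{P}}\in\mathbb{R}^{\ell}. \tag{30}\]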

For each row vector \(r^i, i=1,\ldots ,\nu \), the first component of vector \(t^i\) is called the first score or score 1; similarly, the second component of vector \(t^i\) is called the second score or score 2, and so on.
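The scaling and projection of new data can be sketched as below. This is a minimal sketch assuming that the baseline column means (an N × L array), the baseline sensor standard deviations (length N) and the reduced loading matrix are already available; the function name and the stand-in values are illustrative assumptions.

```python
import numpy as np

def project_new_data(Y, mu, sigma, P_hat):
    """Scale new data with the BASELINE statistics and project onto P_hat.

    Y is nu x (N*L) with columns grouped by sensor; mu has shape (N, L) and
    sigma has shape (N,). Returns the nu x ell matrix whose rows are the t^i.
    """
    nu = Y.shape[0]
    N, L = mu.shape
    Y_scaled = ((Y.reshape(nu, N, L) - mu) / sigma[:, None]).reshape(nu, N * L)
    return Y_scaled @ P_hat

# Stand-in baseline statistics and an orthonormal reduced loading matrix.
rng = np.random.default_rng(0)
N, L, ell = 2, 3, 2
mu = rng.normal(size=(N, L))
sigma = np.array([1.5, 0.5])
P_hat = np.linalg.qr(rng.normal(size=(N * L, N * L)))[0][:, :ell]

Y = rng.normal(size=(10, N * L))       # nu = 10 new rows
scores = project_new_data(Y, mu, sigma, P_hat)
score_1 = scores[:, 0]                  # first score of every row
```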

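In a standard application of the principal component analysis strategy in the field of structural health monitoring, the scores allow a visual grouping or separation [2]. In some other cases, as in [19], two classical indices can be used for damage detection, namely the Q index (also known as SPE, square prediction error) and Hotelling's \(T^2\) index. The Q index of the ith row \(y_i^T\) of matrix \(\check{\mathbf {Y}}\) is defined as follows:

\[Q_i=y_i^T\left(\mathbf{I}-\hat{\mathbf{P}}\hat{\mathbf{P}}^{\text{T}}\right)y_i. \tag{31}\]

The \(T^2\) index of the ith row \(y_i^T\) of matrix \(\check{\mathbf {Y}}\) is defined as follows:

\[T_i^2=y_i^T\left(\hat{\mathbf{P}}\hat{\varLambda}^{-1}\hat{\mathbf{P}}^{\text{T}}\right)y_i, \tag{32}\]

where \(\hat{\varLambda}=\operatorname{diag}(\lambda_1,\ldots,\lambda_\ell)\) contains the \(\ell \) eigenvalues associated with the retained principal components.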
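Both indices can be computed row by row. The snippet is a minimal sketch assuming the usual definitions, \(Q_i=y_i^T(\mathbf{I}-\hat{\mathbf{P}}\hat{\mathbf{P}}^{\text{T}})y_i\) and \(T_i^2=\sum_{j=1}^{\ell}t_{ij}^2/\lambda_j\), where the \(\lambda_j\) are the eigenvalues of the retained components; the function and variable names are illustrative assumptions.

```python
import numpy as np

def q_and_t2(Y_scaled, P_hat, eigvals_hat):
    """Q (squared prediction error) and Hotelling T^2 for each row of Y_scaled."""
    T = Y_scaled @ P_hat                      # scores, nu x ell
    residual = Y_scaled - T @ P_hat.T         # part not explained by the model
    Q = np.sum(residual**2, axis=1)
    T2 = np.sum(T**2 / eigvals_hat, axis=1)   # scores weighted by 1 / eigenvalue
    return Q, T2

# Stand-in baseline model built from random mean-centered data.
rng = np.random.default_rng(0)
X = rng.normal(size=(60, 6))
X -= X.mean(axis=0)
w, v = np.linalg.eigh(X.T @ X / (X.shape[0] - 1))
order = np.argsort(w)[::-1]
P_hat, eigvals_hat = v[:, order[:2]], w[order[:2]]

Y_scaled = rng.normal(size=(10, 6))           # already-scaled new rows
Q, T2 = q_and_t2(Y_scaled, P_hat, eigvals_hat)
log_Q, log_T2 = np.log(Q), np.log(T2)         # the natural logarithm is what is plotted
```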

In this case, however, Fig. 6 plots the projection onto the first two principal components of samples coming from the healthy and faulty wind turbines, and it can be observed that a visual grouping, clustering or separation cannot be performed. A similar conclusion is drawn from Fig. 7: the plot of the natural logarithm of indices Q and \(T^2\), defined in Eqs. (31) and (32), of samples coming from the healthy and faulty wind turbines does not allow any visual grouping. A visual separation is not possible from Fig. 8 either, where the first score for baseline experiments of the healthy aluminium plate is plotted together with testing experiments with several damages. Some strategies can be found in the literature to overcome these difficulties, for instance, principal component analysis together with self-organizing maps (SOM) [20], a robust version of principal component analysis (RPCA) in the presence of outliers [21], or even nonlinear PCA (NPCA) or hierarchical PCA (HPCA) [22]. Some of these approaches have a high computational cost that can lead to delays in the damage or fault diagnosis [16]. Therefore, the methodologies reviewed in this work can be seen as a powerful and reliable tool, with lower computational cost, for online damage and fault detection of structures using principal component analysis.

3.2.1 The Random Nature of the Scores

Since the dynamic response of a structure (guided waves) and the turbulent wind (wind turbine) can be considered as a random process, the dynamic response of the structure (aluminium plate and wind turbine) can be considered as a stochastic process and the measurements in \(r^i\) are also stochastic. Therefore, each component of \(t^i\) in Eq. (30) acquires this stochastic nature and it will be regarded as a random variable to construct the stochastic approach in this chapter.

3.2.2 Test for the Equality of Means

The objective of the present work is to examine whether the current structure to be diagnosed is healthy or subjected to a damage (aluminium plate) or to a fault as those described in Table 3 (wind turbine). To achieve this end, we have a PCA model (matrix \(\hat{\mathbf{P }}\) in Eq. (21)) built as in Sect. 3.1.3 with data coming from a structure or a wind turbine in a fully healthy state. For each principal component \(j=1,\ldots ,\ell \), the baseline sample is defined as the set of n real numbers computed as the \(j-\)th component of the vector to matrix multiplication \(\mathbf {X}(i,:)\cdot \hat{\mathbf{P }}\). Note that n is the number of rows of matrix \(\mathbf {X}\) in Eq. (7). That is, we define the baseline sample as the set of numbers \(\{\tau _j^i\}_{i=1,\ldots ,n}\) given by

where \(\mathbf {e}_j\) is the \(j-\)th vector of the canonical basis.

Similarly, and for each principal component \(j=1,\ldots ,\ell \), the sample of the current structure to diagnose is defined as the set of \(\nu \) real numbers computed as the \(j-\)th component of the vector \(t^i\) in Eq. (30). Note that \(\nu \) is the number of rows of matrix \(\mathbf {Y}\) in Eq. (27). That is, we define the sample to diagnose as the set of numbers \(\{t^i_j\}_{i=1,\ldots ,\nu }\) given by

As said before, the goal of this chapter is to obtain a damage and fault detection method such that when the distribution of the current sample is related to the distribution of the baseline sample a healthy state is predicted and otherwise a damage or fault is detected. To that end, a test for the equality of means will be performed. Let us consider that, for a given principal component, (a) the baseline sample is a random sample of a random variable having a normal distribution with unknown mean \(\mu _X\) and unknown standard deviation \(\sigma _X\); and (b) the random sample of the current structure is also normally distributed with unknown mean \(\mu _Y\) and unknown standard deviation \(\sigma _Y\). Let us finally consider that the variances of these two samples are not necessarily equal. As said previously, the problem that we will consider is to determine whether these means are equal, that is, \(\mu _X=\mu _Y\), or equivalently, \(\mu _X-\mu _Y=0\). This statement leads immediately to a test of the hypotheses

that is, the null hypothesis is “the sample of the structure to be diagnosed is distributed as the baseline sample” and the alternative hypothesis is “the sample of the structure to be diagnosed is not distributed as the baseline sample”. In other words, if the result of the test is that the null hypothesis is not rejected, the current structure is categorized as healthy. Otherwise, if the null hypothesis is rejected in favor of the alternative, this would indicate the presence of some damage or faults in the structure.

The test is based on the Welch-Satterthwaite method [23], which is outlined below. When random samples of size n and \(\nu \), respectively, are taken from two normal distributions \(N\left( \mu _X,\sigma _X\right) \) and \(N\left( \mu _Y,\sigma _Y\right) \) and the population variances are unknown, the random variable

can be approximated with a t-distribution with \(\rho \) degrees of freedom, that is

where

and where \(\bar{X},\bar{Y}\) are the sample means as random variables; \(S^2\) is the sample variance as a random variable; \(s^2\) is the variance of a sample; and \(\lfloor \cdot \rfloor \) is the floor function.
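For reference, the standard Welch-Satterthwaite expressions can be written in the notation of this section as follows. This is a reconstruction under the stated assumptions (two normal samples of sizes n and \(\nu \) with unknown and possibly different variances); the symbol \(\mathscr {W}\) for the random variable is chosen here for illustration only.

\[
\mathscr {W}=\frac{\left( \bar{X}-\bar{Y}\right) -\left( \mu _X-\mu _Y\right) }{\sqrt{\dfrac{S_X^2}{n}+\dfrac{S_Y^2}{\nu }}}\ \approx \ t_\rho ,
\qquad
\rho =\left\lfloor \frac{\left( \dfrac{s_X^2}{n}+\dfrac{s_Y^2}{\nu }\right) ^2}{\dfrac{\left( s_X^2/n\right) ^2}{n-1}+\dfrac{\left( s_Y^2/\nu \right) ^2}{\nu -1}}\right\rfloor
\]

Under the null hypothesis \(\mu _X-\mu _Y=0\), so the term \(\left( \mu _X-\mu _Y\right) \) drops out and only sample quantities remain.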

The value of the standardized test statistic using this method is defined as

where \(\bar{x},\bar{y}\) are the means of the particular samples. The quantity \(t_{\text {obs}}\) is the damage or fault indicator. We can then construct the following test:

where \(t^\star \) is such that

where \(\mathbb {P}\) is a probability measure and \(\alpha \) is the chosen risk (significance) level for the test. More precisely, the null hypothesis is rejected if \(\left| t_{\text {obs}}\right| >t^\star \) (this would indicate the existence of a damage or fault in the structure). Otherwise, if \(\left| t_{\text {obs}}\right| \le t^\star \) there is no statistical evidence to suggest that both samples are normally distributed but with different means, thus indicating that no damage or fault in the structure has been found. This idea is represented in Fig. 9.
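The univariate decision rule can be sketched in a few lines. The code below is a minimal illustration using only the Python standard library; it computes \(t_{\text {obs}}\) and the floored degrees of freedom \(\rho \) with the standard Welch-Satterthwaite formulas, and takes the critical value \(t^\star \) as an input, since the standard library has no t-distribution quantile function. The function names and the toy data are assumptions made for this example, not the chapter's implementation.

```python
from math import floor, sqrt
from statistics import mean, variance


def welch_indicator(baseline, current):
    """Return (t_obs, rho) for two samples of scores."""
    n, nu = len(baseline), len(current)
    vx = variance(baseline) / n   # s_X^2 / n  (sample variance, divisor n - 1)
    vy = variance(current) / nu   # s_Y^2 / nu
    t_obs = (mean(baseline) - mean(current)) / sqrt(vx + vy)
    # Welch-Satterthwaite degrees of freedom, floored as in the text
    rho = floor((vx + vy) ** 2 / (vx ** 2 / (n - 1) + vy ** 2 / (nu - 1)))
    return t_obs, rho


def is_damaged(t_obs, t_star):
    """Reject H0 (report damage or fault) when |t_obs| > t_star."""
    return abs(t_obs) > t_star


# Toy data (illustrative only, not taken from the experiments)
baseline = [1.0, 2.0, 3.0, 4.0, 5.0]
current = [2.0, 4.0, 6.0, 8.0, 10.0]
t_obs, rho = welch_indicator(baseline, current)

# t_star must satisfy P(t_rho < t_star) = 1 - alpha/2; for alpha = 0.30 and
# rho = 5 a standard t table gives approximately 1.156. With SciPy installed,
# scipy.stats.t.ppf(1 - alpha / 2, rho) is one way to obtain it.
decision = is_damaged(t_obs, t_star=1.156)
print(t_obs, rho, decision)
```

Passing \(t^\star \) explicitly keeps the sketch dependency-free and makes the role of the significance level visible: a larger \(\alpha \) lowers \(t^\star \) and therefore makes rejection more likely.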

3.3 Fault Detection Based on Multivariate Hypothesis Testing

In this section, the projections onto the first components —the so-called scores— are used for the construction of the multivariate random samples to be compared and consequently to obtain the structural damage or fault indicator, as it is illustrated in Figs. 10 (guided waves) and 11 (wind turbine).

3.3.1 Multivariate Random Variables and Multivariate Random Samples

As in Sect. 3.2, the current structure to diagnose is subjected to the same excitation (guided waves) or to a wind field (wind turbines). The time responses recorded by the sensors are arranged in a matrix \(\mathbf {Y}\in \mathscr {M}_{\nu \,{\times }\, (N\cdot L)}(\mathbb R)\) as in Eq. (27). The rows of matrix \(\mathbf {Y}\) are called \(r^i\in \mathbb R^{N\cdot L},\ i=1,\ldots ,\nu \), as in Eq. (29), where N is the number of sensors, L is the number of discretization instants and \(\nu \) is the number of experimental trials (guided waves) or the number of rows of matrix \(\mathbf {Y}\) in Eq. (27). Selecting the jth principal component, \(v_j,\ j=1,\ldots ,\ell \), the projection of the recorded data onto this principal component is the dot product

as in Eq. (34).

Since the dynamic behaviour of a structure depends on some indeterminacy, its dynamic response can be considered as a stochastic process and the measurements in \(r^i\) are also stochastic. On the one hand, \(t_j^i\) acquires this stochastic nature and it will be regarded as a random variable to construct the stochastic approach in this section. On the other hand, an s-dimensional random vector can be defined by considering the projections onto several principal components as follows

The set of s-dimensional vectors \(\left\{ \mathbf {t}^i_{j_1,\ldots ,j_s}\right\} _{i=1,\ldots ,\nu }\) can be seen as a realization of a multivariate random sample of the variable \(\mathbf {t}_{j_1,\ldots ,j_s}\). When the realization is performed on the healthy structure, the baseline sample is denoted as the set of s-dimensional vectors

where n is the number of rows of matrix \(\mathbf {X}\) in Eqs. (4) (guided waves) and (7) (wind turbine). As an example, in the case of the aluminium plate experimental set-up, two three-dimensional samples are represented in Fig. 12; one is the three-dimensional baseline sample (left) and the other refers to damages 1 to 3 (right). This illustrative example refers to actuator phase 1 and the first, second and third principal components. More precisely, Fig. 12 (right) depicts the values of the multivariate random variable \(\mathbf {t}_{1,2,3}\). The diagnosis sample is formed by 20 experiments and the baseline sample is made up of 100 experiments.
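In terms of data handling, building the s-dimensional sample amounts to keeping the selected columns of the score matrix. A minimal sketch follows, assuming the scores are stored row by row (one row per experiment, one column per principal component); the function name `joint_sample` and the numbers are illustrative only.

```python
def joint_sample(scores, components):
    """Select the scores of the chosen principal components.

    scores     : list of rows; scores[i][j-1] is the projection of
                 experiment i onto principal component j
    components : 1-based indices (j_1, ..., j_s) of the components kept
    """
    return [[row[j - 1] for j in components] for row in scores]


# Two experiments projected onto four components (made-up numbers)
scores = [
    [0.1, -0.4, 0.3, 0.9],
    [0.2, 0.5, -0.1, 0.0],
]
t_123 = joint_sample(scores, (1, 2, 3))  # realizations of t_{1,2,3}
print(t_123)
```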

3.3.2 Detection Phase and Testing for Multivariate Normality

In this work, the framework of multivariate statistical inference is used with the objective of classifying structures as healthy or damaged. With this goal, a test for multivariate normality is first performed. A test for the plausibility of a value for a normal population mean vector is then performed.

Many statistical tests and graphical approaches are available to check the multivariate normality assumption [24]. However, there is no single most powerful test, and it is recommended to perform several tests to assess the multivariate normality. Let us consider the three most widely used multivariate normality tests, that is: (i) Mardia’s; (ii) Henze-Zirkler’s; and (iii) Royston’s multivariate normality tests. We include a brief explanation of these methods for the sake of completeness.

Mardia’s test

Mardia’s test is based on multivariate extensions of skewness (\(\hat{\gamma }_{1,s}\)) and kurtosis (\(\hat{\gamma }_{2,s}\)) measures [24, 25]:

where \(m_{ij}=\left( x_i-\bar{x}\right) ^TS^{-1}\left( x_j-\bar{x}\right) ,\ i,j=1,\ldots ,\nu \) is the squared Mahalanobis distance, S is the variance-covariance matrix, s is the number of variables and \(\nu \) is the sample size. The test statistic for skewness, \(\left( \nu /6\right) \hat{\gamma }_{1,s}\), is approximately \(\chi ^2\) distributed with \(s\left( s+1\right) \left( s+2\right) /6\) degrees of freedom. Similarly, the test statistic for kurtosis, \(\hat{\gamma }_{2,s}\), is approximately normally distributed with mean \(s\left( s+2\right) \) and variance \(8s\left( s+2\right) /\nu \). For multivariate normality, both p-values of skewness and kurtosis statistics should be greater than 0.05.

For small samples, the nominal type I error rate may not be maintained and the power of the test may be reduced. Therefore, Mardia introduced a correction term into the skewness test statistic [26], usually applied when \(\nu <20\), in order to control type I errors. The corrected skewness statistic for small samples is \(\left( \nu k/6\right) \hat{\gamma }_{1,s}\), where

This statistic is also \(\chi ^2\) distributed with \(s\left( s+1\right) \left( s+2\right) /6\) degrees of freedom.
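The two Mardia measures can be computed directly from the Mahalanobis terms \(m_{ij}\). The following sketch uses only the standard library and makes two assumptions that are not stated in the text: the covariance matrix is estimated with divisor \(\nu \), and it is nonsingular. The helper `_inverse` and the function `mardia` are illustrative names. The sketch returns the skewness and kurtosis measures together with the standardized kurtosis statistic implied by the normal approximation quoted above.

```python
from math import sqrt


def _inverse(a):
    """Gauss-Jordan inverse of a small nonsingular square matrix."""
    n = len(a)
    aug = [list(row) + [float(i == j) for j in range(n)]
           for i, row in enumerate(a)]
    for col in range(n):
        # Partial pivoting: bring the largest entry of the column to the diagonal
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def mardia(sample):
    """Return (skewness, kurtosis, kurtosis_z) for a list of s-dim vectors."""
    nu, s = len(sample), len(sample[0])
    centre = [sum(x[k] for x in sample) / nu for k in range(s)]
    dev = [[x[k] - centre[k] for k in range(s)] for x in sample]
    # Covariance with divisor nu (assumption; other conventions use nu - 1)
    cov = [[sum(r[a] * r[b] for r in dev) / nu for b in range(s)]
           for a in range(s)]
    inv = _inverse(cov)

    def m(u, v):  # m_ij = (x_i - mean)^T S^{-1} (x_j - mean)
        return sum(u[a] * inv[a][b] * v[b] for a in range(s) for b in range(s))

    skewness = sum(m(u, v) ** 3 for u in dev for v in dev) / nu ** 2
    kurtosis = sum(m(u, u) ** 2 for u in dev) / nu
    # Kurtosis is approx. normal with mean s(s+2) and variance 8s(s+2)/nu
    kurtosis_z = (kurtosis - s * (s + 2)) / sqrt(8 * s * (s + 2) / nu)
    return skewness, kurtosis, kurtosis_z


# Symmetric toy sample: the four corners of a square
skewness, kurtosis, kurtosis_z = mardia(
    [[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]]
)
print(skewness, kurtosis, kurtosis_z)
```

The skewness statistic \(\left( \nu /6\right) \hat{\gamma }_{1,s}\) would then be compared with a \(\chi ^2\) quantile, which is left out here because the standard library does not provide one.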

Henze-Zirkler’s test

The Henze-Zirkler’s test is based on a non-negative functional distance that measures the distance between two distribution functions [25, 27]. If the data are multivariate normally distributed, the test statistic HZ in Eq. (46) is approximately lognormally distributed. The procedure computes the mean, the variance and the smoothness parameter; the mean and variance are then lognormalized and the p-value is estimated. The test statistic of Henze-Zirkler’s multivariate normality test is

where s is the number of variables,

\(D_i\) gives the squared Mahalanobis distance of the ith observation to the centroid and \(D_{ij}\) gives the Mahalanobis distance between the ith and the jth observations. If the data are multivariate normally distributed, the test statistic (HZ) is approximately lognormally distributed with mean \(\mu \) and variance \(\sigma ^2\) as given below:

where \(a=1+2\beta ^2\) and \(w_{\beta }=\left( 1+\beta ^2\right) \left( a+3\beta ^2\right) \). Hence, the lognormalized mean and variance of the HZ statistic can be defined as follows:

By using the lognormal distribution parameters, \(\mu _{\log }\) and \(\sigma ^2_{\log }\), we can test the significance of multivariate normality. The Wald test statistic for multivariate normality is given in the following equation:

Royston’s test

Royston’s test uses the Shapiro-Wilk/Shapiro-Francia statistic to test multivariate normality [25]. If kurtosis of the data is greater than 3, then it uses the Shapiro-Francia test for leptokurtic distributions. Otherwise, it uses the Shapiro-Wilk test for platykurtic distributions. The Shapiro-Wilk test statistic is:

where \(x_{(i)}\) is the ith order statistic, that is, the ith-smallest number in the sample, \(\mu \) is the mean, \(a=\frac{\mathbf {m}^TV^{-1}}{\sqrt{\mathbf {m}^TV^{-1}V^{-1}\mathbf {m}}}\), V is the covariance matrix of the order statistics of a sample of s standard normal random variables with expectation vector \(\mathbf {m}\). Let \(W_j\) be the Shapiro-Wilk/Shapiro-Francia test statistic for the jth variable, \(j=1,\ldots ,s\), and \(Z_j\) be the values obtained from the normality transformation proposed by [28]:

Then transformed values of each random variable can be obtained from the following equation:

where \(\gamma \), \(\mu \) and \(\sigma \) are derived from the polynomial approximations given in equations [28]:

The Royston’s test statistic for multivariate normality is then defined as follows:

where \(\varepsilon \) is the equivalent degrees of freedom (edf) and \(\varPhi (\cdot )\) is the cumulative distribution function for standard normal distribution such that,

Another extra term \(\bar{c}\) has to be calculated in order to continue with the statistical significance of Royston’s test statistic. Let R be the correlation matrix and \(r_{ij}\) be the correlation between ith and jth variables. Then, the extra term can be found by using equation:

where

with the boundaries of \(g(\cdot )\) as \(g(0,\nu )=0\) and \(g(1,\nu )=1\). The function \(g(\cdot )\) is defined as follows:

The unknown parameters \(\mu \), \(\lambda \) and \(\xi \) were estimated from a simulation study conducted by [28]. He found \(\mu =0.715\) and \(\lambda =5\) for sample size \(10\le \nu \le 2000\) and \(\xi \) is a cubic function which can be obtained as follows:

Quantile-quantile plot

Apart from the multivariate normality tests, some visual representations can also be used to test for multivariate normality. The quantile–quantile (Q–Q) plot is a widely used graphical approach to evaluate the agreement between two probability distributions [24, 25]. Each axis refers to the quantiles of probability distributions to be compared, where one of the axes indicates theoretical quantiles (hypothesized quantiles) and the other indicates the observed quantiles. If the observed data fit hypothesized distribution, the points in the Q–Q plot will approximately lie on the bisectrix \(y=x\). The sample quantiles for the Q–Q plot are obtained as follows. First we rank the observations \(y_1, y_2,\ldots ,y_\nu \) and denote the ordered values by \(y_{(1)},y_{(2)},\ldots ,y_{(\nu )}\); thus \(y_{(1)} \le y_{(2)} \le \cdots \le y_{(\nu )}\). Then the point \(y_{(i)}\) is the \(i/\nu \) sample quantile. The fraction \(i/\nu \) is often changed to \((i-0.5)/\nu \) as a continuity correction. With this convention, \(y_{(i)}\) is designated as the \((i-0.5)/\nu \) sample quantile. The population quantiles for the Q–Q plot are similarly defined corresponding to \((i-0.5)/\nu \). If we denote these by \(q_1,q_2,\ldots ,q_\nu \), then \(q_i\) is the value below which a proportion \(\left( i-0.5\right) /\nu \) of the observations in the population lie; that is, \(\left( i-0.5\right) /\nu \) is the probability of getting an observation less than or equal to \(q_i\). Formally, \(q_i\) can be found for the standard normal random variable Y with distribution N(0, 1) by solving

which would require numerical integration or tables of the cumulative standard normal distribution, \(\varPhi (x)\). Another benefit of using \(\left( i-0.5\right) /\nu \) instead of \(i/\nu \) is that \(\nu /\nu =1\) would make \(q_\nu =+\infty \). The population need not have the same mean and variance as the sample, since changes in mean and variance merely change the slope and intercept of the plotted line in the Q–Q plot. Therefore, we use the standard normal distribution, and the \(q_i\) values can easily be found from a table of cumulative standard normal probabilities. We then plot the pairs \((q_i, y_{(i)})\) and examine the resulting Q–Q plot for linearity.
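The construction of the plotted pairs can be sketched with the standard library, where `statistics.NormalDist().inv_cdf` plays the role of the table of cumulative standard normal probabilities. The function name `qq_pairs` and the three observations are illustrative only.

```python
from statistics import NormalDist


def qq_pairs(observations):
    """Return the pairs (q_i, y_(i)) of a normal Q-Q plot."""
    nu = len(observations)
    ordered = sorted(observations)   # y_(1) <= ... <= y_(nu)
    std_normal = NormalDist()        # N(0, 1)
    # q_i solves Phi(q_i) = (i - 0.5) / nu, with the continuity correction
    return [(std_normal.inv_cdf((i - 0.5) / nu), y)
            for i, y in enumerate(ordered, start=1)]


pairs = qq_pairs([2.0, -1.0, 0.5])
for q_i, y_i in pairs:
    print(q_i, y_i)
```

With three observations the probabilities are 1/6, 1/2 and 5/6, so the middle theoretical quantile is zero and the outer two are symmetric about it.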

Contour plot

In addition to Q–Q plots, perspective and contour plots can also be useful [24, 25]. The perspective plot is an extension of the univariate probability distribution curve into a three-dimensional probability distribution surface related to bivariate distributions. It also gives information about where data are gathered and how two variables are correlated with each other. It consists of three dimensions, where two dimensions refer to the values of the two variables and the third dimension, as in the univariate case, is the value of the multivariate normal probability density function. An alternative graph, called the contour plot, is the projection of the perspective plot onto a two-dimensional space and can be used for checking the multivariate normality assumption. Figure 13 shows the contour plot for a bivariate normal distribution with mean \(\left( \begin{array}{cc}0&0\end{array}\right) ^T\in \mathbb {R}^2\) and covariance matrix \(\left( \begin{array}{cc}0.250 &{} 0.375 \\ 0.375&{} 1.000\end{array}\right) \in \mathscr {M}_{2\,{\times }\, 2}(\mathbb {R})\). For bivariate normally distributed data, we expect to obtain a three-dimensional bell-shaped graph from the perspective plot. Correspondingly, the contour plot should show concentric elliptical contour lines.

3.3.3 Testing a Multivariate Mean Vector

The objective of this section is to determine whether the distribution of the multivariate random samples that are obtained from the structure to be diagnosed (undamaged or not, faulty or not) is connected to the distribution of the baseline. To this end, a test for the plausibility of a value for a normal population mean vector will be performed. Let \(s\in \mathbb {N}\) be the number of principal components that will be considered jointly. We will also consider that: (a) the baseline projection is a multivariate random sample of a multivariate random variable following a multivariate normal distribution with known population mean vector \(\varvec{\mu }_{\text {h}}\in \mathbb R^s\) and known variance-covariance matrix \(\varvec{\varSigma }\in \mathscr {M}_{s\,{\times }\, s}(\mathbb R)\); and (b) the multivariate random sample of the structure to be diagnosed also follows a multivariate normal distribution with unknown multivariate mean vector \(\varvec{\mu }_{\text {c}}\in \mathbb R^s\) and known variance-covariance matrix \(\varvec{\varSigma }\in \mathscr {M}_{s\,{\times }\, s}(\mathbb R)\).

As said previously, the problem that we will consider is to determine whether a given s-dimensional vector \(\varvec{\mu }_{\text {c}}\) is a plausible value for the mean of a multivariate normal distribution \(N_s(\varvec{\mu }_{\text {h}},\varvec{\varSigma })\). This statement leads immediately to a test of the hypothesis

that is, the null hypothesis is ‘the multivariate random sample of the structure to be diagnosed is distributed as the baseline projection’ and the alternative hypothesis is ‘the multivariate random sample of the structure to be diagnosed is not distributed as the baseline projection’. In other words, if the result of the test is that the null hypothesis is not rejected, the current structure is categorized as healthy. Otherwise, if the null hypothesis is rejected in favor of the alternative, this would indicate the presence of some structural changes or faults in the structure.

The test is based on the statistic \(T^2\)—also called Hotelling’s \(T^2\)—and it is summarized below. When a multivariate random sample of size \(\nu \in \mathbb {N}\) is taken from a multivariate normal distribution \(N_s(\varvec{\mu }_{\text {h}},\varvec{\varSigma })\), the random variable

is distributed as

where \(F_{s,\nu -s}\) denotes a random variable with an F-distribution with s and \(\nu -s\) degrees of freedom; \(\bar{\mathbf {X}}\) is the sample mean vector as a multivariate random variable; and \(\frac{1}{\nu }\mathbf {S}\in \mathscr {M}_{s\,{\times }\, s}(\mathbb R)\) is the estimated covariance matrix of \(\bar{\mathbf {X}}\).

At the \(\alpha \) level of significance, we reject \(H_0\) in favor of \(H_1\) if the observed

is greater than \(\frac{(\nu -1)s}{\nu -s}F_{s,\nu -s}(\alpha )\), where \(F_{s,\nu -s}(\alpha )\) is the upper \((100\alpha )\)th percentile of the \(F_{s,\nu -s}\) distribution. In other words, the quantity \(t^2_{\text {obs}}\) is the damage or fault indicator and the test is summarized as follows:

where \(F_{s,\nu -s}(\alpha )\) is such that

where \(\mathbb {P}\) is a probability measure. More precisely, we fail to reject the null hypothesis if \(t^2_{\text {obs}}\le \frac{(\nu -1)s}{\nu -s}F_{s,\nu -s}(\alpha )\), thus indicating that no structural changes or faults in the structure have been found. Otherwise, the null hypothesis is rejected in favor of the alternative hypothesis if \(t^2_{\text {obs}}> \frac{(\nu -1)s}{\nu -s}F_{s,\nu -s}(\alpha )\), thus indicating the existence of some structural changes or faults in the structure.
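The multivariate decision rule can be sketched as follows, using only the standard library. The sketch assumes the standard one-sample Hotelling form \(t^2_{\text {obs}}=\nu \left( \bar{\mathbf {x}}-\varvec{\mu }_{\text {h}}\right) ^T\mathbf {S}^{-1}\left( \bar{\mathbf {x}}-\varvec{\mu }_{\text {h}}\right) \), with \(\mathbf {S}\) the sample covariance matrix (divisor \(\nu -1\)) of the sample to diagnose, assumed nonsingular. The upper percentile \(F_{s,\nu -s}(\alpha )\) is passed in as a number because the standard library has no F quantile function. All function names and data are illustrative.

```python
def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination (small dense system)."""
    n = len(b)
    aug = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n] for row in aug]


def hotelling_t2(sample, mu_h):
    """Observed T^2 of a multivariate sample against the baseline mean mu_h."""
    nu, s = len(sample), len(mu_h)
    mean = [sum(x[k] for x in sample) / nu for k in range(s)]
    dev = [[x[k] - mean[k] for k in range(s)] for x in sample]
    cov = [[sum(r[a] * r[b] for r in dev) / (nu - 1) for b in range(s)]
           for a in range(s)]
    diff = [mean[k] - mu_h[k] for k in range(s)]
    z = solve(cov, diff)  # z = S^{-1} (mean - mu_h), without forming S^{-1}
    return nu * sum(dk * zk for dk, zk in zip(diff, z))


def threshold(nu, s, f_crit):
    """(nu - 1) s / (nu - s) * F_{s, nu-s}(alpha); f_crit is supplied by the
    user, e.g. from an F table or scipy.stats.f.ppf(1 - alpha, s, nu - s)."""
    return (nu - 1) * s / (nu - s) * f_crit


# Toy sample (illustrative only) tested against a baseline mean of (1, 0)
sample = [[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]]
t2_obs = hotelling_t2(sample, [1.0, 0.0])
print(t2_obs)
```

The structure is then classified as damaged or faulty when `t2_obs > threshold(nu, s, f_crit)` and as healthy otherwise.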

4 Results

In this section, the damage and fault detection strategies described in Sects. 3.2 and 3.3 are applied to both an aluminium plate and a simulated wind turbine. The experimental results of the damage detection strategy applied to the aluminium plate using the univariate and multivariate hypothesis testing are presented in Sects. 4.1 and 4.2, respectively. Similarly, the simulation results of the fault detection strategy applied to the wind turbine using the univariate and multivariate hypothesis testing are presented in Sects. 4.3 and 4.4, respectively.

4.1 Aluminum Plate and Univariate HT

Some experiments were performed in order to test the methods presented in Sect. 3.2. In these experiments, four piezoelectric transducer discs (PZTs) were attached to the surface of a thin aluminum plate, with dimensions 25 cm \(\times \) 25 cm \(\times \) 0.2 cm. Those PZTs formed a square with 144 mm per side. The plate was suspended by two elastic ropes to isolate it from environmental influences. Figures 1 (left) and 2 show the plate hanging on the elastic ropes.

The experiments are performed in 4 independent phases: (i) piezoelectric transducer 1 (PZT1) is configured as actuator and the rest of PZTs as sensors; (ii) PZT2 as actuator; (iii) PZT3 as actuator; and (iv) PZT4 as actuator. In order to analyze the influence of each projection onto the PCA model (score), the results of the first three scores have been considered. In this way, a total of 12 scenarios were examined. For each scenario, a total of 50 samples of 10 experiments each (5 for the undamaged structure and 5 for the damaged structure with respect to each of the 9 different types of damages) plus the baseline are used to test for the equality of means, with a level of significance \(\alpha =0.30\) (the choice of this level of significance is justified in Sect. 4.1.1). Each set of 50 testing samples is categorized as follows: (i) number of samples from the healthy structure (undamaged sample) which were classified by the hypothesis test as ‘healthy’ (fail to reject \(H_0\)); (ii) undamaged sample classified by the test as ‘damaged’ (reject \(H_0\)); (iii) samples from the damaged structure (damaged sample) classified as ‘healthy’; and (iv) damaged sample classified as ‘damaged’. The results for the 12 different scenarios presented in Table 5 are organized according to the scheme in Table 4. Note that, for each scenario in Table 5, the column sums are constant: 5 samples in the first column (undamaged structure) and 45 samples in the second column (damaged structure).

In this table, it is worth noting that two kinds of misclassification are presented which are denoted as follows:

1. Type I error (or false positive), when the structure is healthy but the null hypothesis is rejected and therefore classified as damaged. The probability of committing a type I error is \(\alpha \), the level of significance.

2. Type II error (or false negative), when the structure is damaged but the null hypothesis is not rejected and therefore classified as healthy. The probability of committing a type II error is called \(\beta \).

4.1.1 Sensitivity and Specificity

Two statistical measures can be employed here to study the performance of the test: the sensitivity and the specificity. The sensitivity, also called the power of the test, is defined, in the context of this work, as the proportion of samples from the damaged structure which are correctly identified as such. Thus, the sensitivity can be computed as \(1-\beta \). The specificity of the test is defined, also in this context, as the proportion of samples from the undamaged structure that are correctly identified and can be expressed as \(1-\alpha \).

The sensitivity and the specificity of the test with respect to the 50 samples in each scenario have been included in Table 7. For each scenario in this table, the results are organized as shown in Table 6.

It is worth noting that type I errors are frequently considered to be more serious than type II errors. However, in this application a type II error corresponds to a missed fault whereas a type I error corresponds to a false alarm. In consequence, type II errors should be minimized. A small level of significance of \(1\%\), \(5\%\) or even \(10\%\) would therefore lead to a reduced number of false alarms but to a higher rate of missed faults. That is the reason for choosing a level of significance of \(30\%\) in the hypothesis test.

The results show that the sensitivity of the test \(1-\beta \) is high, as desired, with an average value of \(86.58\%\). The sensitivity with respect to the projection onto the second and third components (second and third scores) increases, on average, to \(91.50\%\). The average value of the specificity is \(68.33\%\), which is very close to the expected value of \(1-\alpha =70\%\).

4.1.2 Reliability of the Results

The results in Table 9 are computed using the scheme in Table 8. This table is based on Bayes’ theorem [29], where \(P(H_1 \mid \text {accept } H_0)\) is the proportion of samples classified as healthy that actually come from the damaged structure (true rate of false negatives) and \(P(H_0 \mid \text {accept } H_1)\) is the proportion of samples classified as damaged that actually come from the undamaged structure (true rate of false positives).

Since these two true rates are not a function of the accuracy of the test alone, but also a function of the actual rate or frequency of occurrence within the test population, some of the results are not as good as desired. The results in Table 9 can be improved without affecting the results in Table 7 by considering an equal number of samples from the healthy structure and from the damaged structure.
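The dependence on the class proportions is easy to see with a small computation. The sketch below derives the four rates from the counts of a single scenario. The counts used are not read from Table 5; they are inferred from the figures quoted for the scenario with PZT1 as actuator and the first score (5 undamaged and 45 damaged samples, a false positive rate of 20% and a false negative rate of 33%), so they should be taken as an assumption. The function name `performance_rates` is illustrative.

```python
def performance_rates(tp, fn, tn, fp):
    """Rates derived from the four classification counts.

    tp: damaged samples classified as damaged
    fn: damaged samples classified as healthy (missed faults)
    tn: undamaged samples classified as healthy
    fp: undamaged samples classified as damaged (false alarms)
    """
    return {
        "sensitivity": tp / (tp + fn),                # 1 - beta
        "specificity": tn / (tn + fp),                # 1 - alpha (empirical)
        "true_rate_false_negatives": fn / (fn + tn),  # P(H1 | accept H0)
        "true_rate_false_positives": fp / (fp + tp),  # P(H0 | accept H1)
    }


# Inferred counts: 1 of 5 undamaged rejected, 15 of 45 damaged not rejected
rates = performance_rates(tp=30, fn=15, tn=4, fp=1)
for name, value in rates.items():
    print(name, round(value, 4))
```

With these counts the true rate of false negatives is 15/19, about 79%, in agreement with the value reported for this scenario in Sect. 4.1.4. It is large mainly because only 4 of the 19 samples classified as healthy can come from the 5 undamaged samples, which illustrates why balancing the two classes would improve Table 9 without changing the sensitivity and specificity.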

4.1.3 The Receiver Operating Characteristic (ROC) Curves

An additional study has been developed based on the ROC curves to determine the overall accuracy of the proposed method. These curves represent the trade-off between the false positive rate and the sensitivity in Table 6 for different values of the level of significance that is used in the statistical hypothesis testing. Note that the false positive rate is defined as the complement of the specificity, and therefore these curves can also be used to visualize the close relationship between specificity and sensitivity. It can also be remarked that the sensitivity is also called true positive rate or probability of detection [30]. More precisely, for each scenario and for a given level of significance the pair of numbers

is plotted. We have considered 49 levels of significance within the range [0.02, 0.98] and with a difference of 0.02. Therefore, for each scenario 49 connected points are depicted, as can be seen in Fig. 14.

The placement of these points can be interpreted as follows. Since we are interested in minimizing the number of false positives while we maximize the number of true positives, these points should be placed as close to the upper-left corner as possible. However, reaching the corner is impossible because there is also a relationship between the level of significance and the false positive rate. Therefore, a method can be considered acceptable if those points lie above the diagonal, that is, within the upper-left half of the plot.

As said before, the ROC curves for all possible scenarios are depicted in Fig. 14. On one hand, in phase 1 (PZT1 as actuator) and phase 4 (PZT4 as actuator), the first score (diamonds) presents the worst performance because some points are very close to the diagonal or even below it. However, in the same phases, second and third scores present better results. It may be surprising that the results related to the first score are not as good as those related to the rest of scores, but in Sect. 4.1.4 this will be justified. On the other hand, all scores in phases 2 and 3 present a very good performance to detect damages.

The curves are similar to stepped functions because we have considered 5 samples from the undamaged structure and therefore the possible values for the false positive rate (the values in the x-axis) are 0, 0.2, 0.4, 0.6, 0.8 and 1. Finally, we can say that the ROC curves provide a statistical assessment of the efficacy of a method and can be used to visualize and compare the performance of multiple scenarios.
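The grid of significance levels and the stepped appearance of the curves can be reproduced with a short sketch. It assumes that the grid starts at 0.02, which is what 49 levels with a spacing of 0.02 ending at 0.98 imply (a grid starting at 0.2 would contain only 40 levels). The function name `roc_point` and the counts in the example are illustrative.

```python
def roc_point(tp, fn, tn, fp):
    """Return (false positive rate, sensitivity) for one significance level."""
    false_positive_rate = fp / (fp + tn)   # complement of the specificity
    sensitivity = tp / (tp + fn)           # true positive rate
    return false_positive_rate, sensitivity


# 49 significance levels: 0.02, 0.04, ..., 0.98
levels = [round(0.02 * k, 2) for k in range(1, 50)]

# With 5 undamaged samples, the false positive rate can only be k/5
fpr_grid = [k / 5 for k in range(6)]

# One point of a curve, for illustrative counts (5 undamaged, 45 damaged)
point = roc_point(tp=30, fn=15, tn=4, fp=1)
print(len(levels), fpr_grid, point)
```

Since the 49 points of a scenario can only take six distinct abscissae, several levels of significance share the same false positive rate, which produces the steps.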

4.1.4 Analysis and Discussion

Although the first score has the highest proportion of variance, it is not possible to visually separate the baseline from the test. Each of the subfigures in Fig. 8 shows the comparison between the first score of the baseline experiments and the test experiment for each damage. A similar comparison can be found in Fig. 15 where all the observation points (first score of each experiment) are depicted in a single chart. The rest of the scores do not allow a visual grouping either.

One of the scenarios with the worst results is the one that considers the PZT1 as actuator and the first score, because the false negative rate is 33%, the false positive rate is 20% and the true rate of false negatives is 79% (see Tables 7 and 9). These results, which are extracted from Table 5, are illustrated for each state of the structure separately in Fig. 15. Just one of the five samples of the healthy structure has been wrongly rejected (false alarm) whereas all the samples of the structure with damage D1 have been wrongly not rejected (missed fault). Only one of the five samples of the structure with damage D2 has been correctly rejected (correct decision). In this case, however, the poor result may be due to the lack of normality (Fig. 16). This lack of normality makes the results unreliable. In fact, these samples should not have been used for a hypothesis test. The samples of the structure with damage D7 are not normally distributed either, although in this case the results are right. This problem can be solved by repeating the test excluding experiments with those damages (D2 and D7) or eliminating the outliers.

Results of the chi-square goodness-of-fit test applied to the samples described in Sect. 4.1. ‘BL’ stands for baseline projection, ‘Un’ for the sample obtained from the undamaged structure and ‘Di’ for the damage number i, where \(i=1,2,\ldots ,9\). It can be seen from the upper-left bar plot that all the samples are normally distributed except those corresponding to damages D2 and D7

Contrary to what may seem reasonable, the projection on the first component (which represents the larger variance of the original data) is not always the best option to detect and distinguish damages. This fact can be explained because the PCA model is built using the data from the healthy structure and, therefore, the first component captures the maximal variance of these data. However, when new data are projected in this model, there is no longer guarantee of the existence of maximal variance in these new data.

4.2 Aluminum Plate and Multivariate HT

As in Sect. 4.1, some experiments were performed in order to test the method presented in Sect. 3.3.

In this case, 500 experiments were performed over the healthy structure, and another 500 experiments were performed over the damaged structure with 5 damage types (100 experiments per damage type). Figure 17 shows the position of damages 1 to 5 (D1 to D5). As excitation, a 50 kHz sinusoidal signal modulated by a Hamming window was used. Figure 18 shows the excitation signal and an example of the signal collected by PZT 1.

4.2.1 Multivariate Normality

As said in Sect. 4.1, the experiments are performed in 4 independent phases: (i) piezoelectric transducer 1 (PZT1) is configured as actuator and the rest of PZTs as sensors; (ii) PZT2 as actuator; (iii) PZT3 as actuator; and (iv) PZT4 as actuator. In order to analyze the influence of each set of projections to the PCA model (score), the results of scores 1 to 3 (jointly), scores 1 to 5 (jointly) and scores 1 to 10 (jointly) have been considered. In this way, a total of 12 scenarios were examined.

The multivariate normality tests described in Sect. 3.3.2 were performed for all the data. We summarize in Table 10 the results of the multivariate normality test when considering the first three principal components (PC1–PC3) for all the actuator phases.

Some examples of Q–Q plots for the data considered in this section are shown in Fig. 19. The points closely follow the bisector (the line \(y=x\)), thus indicating the multivariate normality of the data, as stated in Table 10.

Q–Q plots corresponding to: (i) fourth set of undamaged data to test, using the first three principal components (PC1–PC3) in the actuator phase 4 (left) and (ii) damage 2 data, using the first three principal components (PC1–PC3) in the actuator phase 1 (right). The points of these Q–Q plots are close to the line \(y=x\) thus indicating the multivariate normality of the data
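As an illustration of how such a Q–Q plot can be built, the following minimal sketch uses the standard chi-square construction: the ordered squared Mahalanobis distances of the scores are paired with chi-square quantiles. This is an assumption about the construction, since the test itself is defined in Sect. 3.3.2; the function name `chi2_qq_points`, the synthetic scores and the random seed are hypothetical.

```python
import numpy as np
from scipy import stats

def chi2_qq_points(scores):
    """Return (theoretical, empirical) quantile pairs for a chi-square Q-Q plot."""
    scores = np.asarray(scores, dtype=float)
    n, p = scores.shape
    centered = scores - scores.mean(axis=0)
    cov = np.cov(scores, rowvar=False)  # sample covariance, p x p
    # Squared Mahalanobis distance of every observation to the sample mean.
    d2 = np.einsum("ij,jk,ik->i", centered, np.linalg.inv(cov), centered)
    empirical = np.sort(d2)
    # Chi-square quantiles (p degrees of freedom) at positions (j - 0.5) / n.
    probs = (np.arange(1, n + 1) - 0.5) / n
    theoretical = stats.chi2.ppf(probs, df=p)
    return theoretical, empirical

# Synthetic stand-in for the first three scores (PC1-PC3); not the plate data.
rng = np.random.default_rng(0)
synthetic_scores = rng.multivariate_normal(np.zeros(3), np.eye(3), size=200)
theo, emp = chi2_qq_points(synthetic_scores)
qq_corr = np.corrcoef(theo, emp)[0, 1]
print(f"correlation between quantiles: {qq_corr:.3f}")
```

For multivariate normal data the pairs `(theo, emp)` should lie close to the line \(y=x\), which is the visual criterion used in Fig. 19.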

Moreover, some other examples of contour plots for the data we consider in this Section are given in Figs. 20 and 21. These plots are similar to the contour plot of the bivariate normal distribution in Fig. 13.

Contour plot for undamaged case (fourth set to test), PZT4 act., PC1–PC2. The contour lines resemble the ellipses of the bivariate normal distribution in Fig. 13, which suggests that the distribution in this case is normal

Contour plot for case D3, PZT1 act., PC1–PC2. The contour lines resemble the ellipses of the bivariate normal distribution in Fig. 13, which suggests that the distribution in this case is normal

Finally, the univariate normality of each principal component in each actuator phase is also tested. The results are presented in Table 11. As can be observed, the univariate data are normally distributed in most of the cases. However, this does not imply multivariate normality.

4.2.2 Type I and Type II Errors

For each scenario, a total of 50 samples of 20 experiments each (25 for the undamaged structure and 5 for each of the 5 different types of damage), plus the baseline, are used to test for the plausibility of a value for a normal population mean vector, with a level of significance \(\alpha =0.60\). Each set of 50 testing samples is categorized as follows: (i) number of samples from the healthy structure (undamaged sample) which were classified by the hypothesis test as ‘healthy’ (fail to reject \(H_0\)); (ii) undamaged sample classified by the test as ‘damaged’ (reject \(H_0\)); (iii) samples from the damaged structure (damaged sample) classified as ‘healthy’; and (iv) damaged sample classified as ‘damaged’. The results for the 12 different scenarios presented in Table 12 are organized according to the scheme in Table 4. Note that, for each scenario in Table 12, the sum of each column is constant: 25 samples in the first column (undamaged structure) and 25 samples in the second column (damaged structure).
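A test for the plausibility of a value for a normal population mean vector can be sketched with the classical one-sample Hotelling \(T^2\) statistic. The sketch below is only illustrative and assumes this is the form of the test; Sect. 3.3 remains the authoritative description. The function name `hotelling_mean_test`, the synthetic samples and the seed are hypothetical.

```python
import numpy as np
from scipy import stats

def hotelling_mean_test(sample, mu0, alpha=0.60):
    """One-sample Hotelling T^2 test of H0: population mean vector equals mu0."""
    sample = np.asarray(sample, dtype=float)
    n, p = sample.shape
    diff = sample.mean(axis=0) - np.asarray(mu0, dtype=float)
    cov = np.cov(sample, rowvar=False)
    # T^2 = n (xbar - mu0)^T S^{-1} (xbar - mu0)
    t2 = float(n * diff @ np.linalg.solve(cov, diff))
    # Under H0, (n - p) / ((n - 1) p) * T^2 follows an F(p, n - p) distribution.
    f_stat = (n - p) / ((n - 1) * p) * t2
    p_value = float(stats.f.sf(f_stat, p, n - p))
    return t2, p_value, p_value < alpha  # True means "reject H0" (damaged)

rng = np.random.default_rng(1)
baseline_mean = np.zeros(3)  # assumed baseline mean of scores 1 to 3

# Synthetic sample of 20 experiments whose mean is far from the baseline.
shifted_sample = rng.normal(loc=3.0, scale=1.0, size=(20, 3))
t2_shifted, p_shifted, reject_shifted = hotelling_mean_test(shifted_sample, baseline_mean)

# Same sample recentred so that its mean coincides with the baseline.
centered_sample = shifted_sample - shifted_sample.mean(axis=0)
t2_zero, p_zero, reject_zero = hotelling_mean_test(centered_sample, baseline_mean)

print(reject_shifted, reject_zero)
```

Each of the 50 testing samples would be passed through such a function once per scenario, and the resulting decisions counted into the four categories listed above.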

As in Sect. 4.1, in Table 12 two kinds of misclassification are presented: (i) type I errors (or false positive), when the structure is healthy but the null hypothesis is rejected and therefore classified as damaged; and (ii) type II errors (or false negative), when the structure is damaged but the null hypothesis is not rejected and therefore classified as healthy.

It can be observed from Table 12 that Type I errors (false alarms) appear only when we consider scores 1 to 3 (jointly) and scores 1 to 5 (jointly), while in the last case (scores 1 to 10), all the decisions are correct.

4.2.3 Sensitivity and Specificity

The sensitivity and the specificity of the test with respect to the 50 samples in each scenario have been included in Table 13. For each scenario in this table, the results are organized as shown in Table 6.
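For reference, the two quantities can be computed from the four counts of a scenario as in the following minimal sketch. It uses the standard definitions, assumed here to match the scheme in Table 6; the counts are purely illustrative and are not taken from Table 12.

```python
def sensitivity_specificity(true_neg, false_pos, false_neg, true_pos):
    """Standard definitions from the four outcome counts of a detection test."""
    sensitivity = true_pos / (true_pos + false_neg)  # damaged samples detected
    specificity = true_neg / (true_neg + false_pos)  # healthy samples accepted
    return sensitivity, specificity

# Hypothetical scenario: 25 undamaged and 25 damaged samples.
sens, spec = sensitivity_specificity(true_neg=22, false_pos=3, false_neg=1, true_pos=24)
print(f"sensitivity = {sens:.2%}, specificity = {spec:.2%}")
```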

It is worth noting that type I errors are frequently considered to be more serious than type II errors. However, in this application a type II error corresponds to a missed fault, whereas a type I error corresponds to a false alarm. Consequently, type II errors should be minimized. A small level of significance of \(1\%\), \(5\%\) or even \(10\%\) would lead to a reduced number of false alarms but to a higher rate of missed faults. This is the reason for choosing a level of significance of \(60\%\) in the hypothesis test.

The results show that the sensitivity of the test \(1-\beta \) is close to \(100\%\), as desired, with an average value of \(78\%\). The sensitivity with respect to scores 1 to 5 and scores 1 to 10 increases, on average, to \(100\%\). The average value of the specificity is \(90\%\).

4.2.4 Reliability of the Results

The results in Table 14 are computed using the scheme in Table 8. As in Sect. 4.1.2, Table 14 is based on Bayes’ theorem [29], where P(\(H_1 | \text {accept } H_0\)) is the proportion of samples classified as healthy that actually come from the damaged structure (true rate of false negatives) and P(\(H_0 | \text {accept } H_1\)) is the proportion of samples classified as damaged that actually come from the undamaged structure (true rate of false positives).
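The two conditional probabilities follow from Bayes' theorem once the sensitivity, the specificity and the proportion of damaged samples are known. The following minimal sketch shows the computation; the function name `posterior_error_rates` and the numerical inputs are hypothetical and are not taken from Table 14.

```python
def posterior_error_rates(sensitivity, specificity, prior_damaged):
    """Return P(H1 | accept H0) and P(H0 | accept H1) via Bayes' theorem."""
    prior_healthy = 1.0 - prior_damaged
    miss_rate = 1.0 - sensitivity          # P(accept H0 | H1)
    false_alarm_rate = 1.0 - specificity   # P(accept H1 | H0)
    # Total probabilities of each decision.
    p_accept_h0 = miss_rate * prior_damaged + specificity * prior_healthy
    p_accept_h1 = sensitivity * prior_damaged + false_alarm_rate * prior_healthy
    p_h1_given_accept_h0 = miss_rate * prior_damaged / p_accept_h0
    p_h0_given_accept_h1 = false_alarm_rate * prior_healthy / p_accept_h1
    return p_h1_given_accept_h0, p_h0_given_accept_h1

# Hypothetical scenario with 25 healthy and 25 damaged samples (prior 0.5).
false_neg_rate, false_pos_rate = posterior_error_rates(0.96, 0.88, 0.5)
print(f"P(H1 | accept H0) = {false_neg_rate:.4f}")
print(f"P(H0 | accept H1) = {false_pos_rate:.4f}")
```

With these illustrative inputs the result coincides with the direct count ratios 1/23 and 3/27, which is a convenient sanity check.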

4.2.5 The Receiver Operating Characteristic (ROC) Curves

An additional study based on ROC curves has been carried out to determine the overall accuracy of the proposed method. More precisely, for each scenario and for a given level of significance, the pair of numbers

\[(\text{false positive rate},\ \text{sensitivity})\]

is plotted. We have considered 99 levels of significance in the range [0.01, 0.99], in steps of 0.01. Therefore, for each scenario 99 connected points are depicted, as can be seen in Figs. 22, 23 and 24 for scores 1 to 3 (jointly), scores 1 to 5 (jointly) and scores 1 to 10 (jointly), respectively.

The ROC curves for the 12 scenarios are depicted in Figs. 22, 23 and 24. Among the curves for scores 1 to 3 (Fig. 22), the best performance is achieved in phase 1, where all of the points lie in the upper-left corner. In phases 2–4, the points lie in the upper-left half-plane but not in the corner, which still represents a very good behavior of the proposed method. For scores 1 to 5 (jointly) in Fig. 23 and scores 1 to 10 (jointly) in Fig. 24, the area under the ROC curves is close to 1 in all of the actuator phases, thus representing an excellent test.
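The construction of one such curve can be sketched as follows: for each level of significance, a sample is classified as damaged when its p-value is below \(\alpha \), and the false positive rate and the sensitivity are recorded. The p-values below are hypothetical placeholders, not results from the plate experiments, and the helper names are illustrative.

```python
import numpy as np

def roc_points(p_healthy, p_damaged, alphas):
    """False positive rate and sensitivity for each level of significance."""
    p_healthy = np.asarray(p_healthy)
    p_damaged = np.asarray(p_damaged)
    # H0 is rejected (sample classified as damaged) when p-value < alpha.
    fpr = np.array([(p_healthy < a).mean() for a in alphas])
    tpr = np.array([(p_damaged < a).mean() for a in alphas])
    return fpr, tpr

def area_under_curve(fpr, tpr):
    """Trapezoidal area after adding the end points (0, 0) and (1, 1)."""
    x = np.concatenate(([0.0], fpr, [1.0]))
    y = np.concatenate(([0.0], tpr, [1.0]))
    return float(np.sum(np.diff(x) * (y[1:] + y[:-1]) / 2.0))

alphas = np.linspace(0.01, 0.99, 99)       # 99 levels in steps of 0.01
p_healthy = np.linspace(0.02, 0.98, 25)    # hypothetical p-values, healthy samples
p_damaged = np.linspace(0.001, 0.04, 25)   # hypothetical p-values, damaged samples
fpr, tpr = roc_points(p_healthy, p_damaged, alphas)
auc = area_under_curve(fpr, tpr)
print(f"area under the ROC curve: {auc:.3f}")
```

An area close to 1 corresponds to points concentrated near the upper-left corner of the plot.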

4.2.6 Analysis and Discussion

Multivariate tests yield better results in damage detection than univariate tests. This is illustrated in Fig. 25, where correct and wrong decisions are represented as a function of the level of significance \(\alpha \) used in the test. Four different regions can be clearly identified:

- \(0<\alpha \le 0.13\). In this region, all five univariate tests and the multivariate statistical inference pass (correct decision).

- \(0.13<\alpha \le 0.62\). In this region, some of the five univariate tests fail (wrong decision) while the multivariate statistical inference passes (correct decision).

- \(0.62<\alpha \le 0.71\). In this region, all five univariate tests fail (wrong decision) while the multivariate statistical inference passes (correct decision).

- \(0.71<\alpha <1\). In this region, all five univariate tests and the multivariate statistical inference fail (wrong decision).

It is worth noting that when the level of significance lies within the range (0.62, 0.71], the multivariate statistical inference using scores 1 to 5 (jointly) still offers a correct decision even though all of the univariate tests make a wrong decision.

The scenarios with the best results are those that consider scores 1 to 10 (jointly), because the false negative rate and the false positive rate are both \(0\%\) for all the actuator phases. The results for scores 1 to 5 (jointly) are also quite good, because the false negative rate is \(0\%\) for all actuator phases and the false positive rate is 7–17%.

4.3 Wind Turbine and Univariate HT

To validate the fault detection strategy presented in Sect. 3.2 using a simulated wind turbine, we first consider a total of 24 samples of \(\nu =50\) elements each, according to the following distribution:

- 16 samples of a healthy wind turbine; and

- 8 samples of a faulty wind turbine, one for each of the eight different fault scenarios described in Table 3.

In the numerical simulations in this section, each sample of \(\nu =50\) elements is composed of the measurements obtained from the \(N=13\) sensors detailed in Table 2 during \((\nu \cdot L-1)\varDelta =312.4875\) s, where \(L=500\) and the sampling time is \(\varDelta =0.0125\) s. The measurements of each sample are then arranged in a \(\nu \,{\times }\, (N\cdot L)\) matrix as in Eq. (27).
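The arrangement of the measurements can be sketched as a reshaping of the raw time series. The column ordering used below (each row holds, sensor after sensor, \(L\) consecutive time instants) is an assumption, since Eq. (27) is the authoritative definition; the random data merely stand in for the simulated sensor records.

```python
import numpy as np

nu, L, N = 50, 500, 13  # elements per sample, instants per row per sensor, sensors

# Synthetic stand-in: one row per time instant, one column per sensor.
rng = np.random.default_rng(2)
raw = rng.normal(size=(nu * L, N))

# Split time into nu blocks of L instants, then place the N sensor blocks
# of each time window side by side to obtain a nu x (N * L) matrix.
X = raw.reshape(nu, L, N).transpose(0, 2, 1).reshape(nu, N * L)

print(X.shape)
```

With this layout, the entry `X[i, k * L + t]` holds the measurement of sensor `k` at time instant `i * L + t`.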

4.3.1 Type I and Type II Errors

For the first four principal components (score 1 to score 4), these 24 samples plus the baseline sample of \(n=50\) elements are used to test for the equality of means, with a level of significance \(\alpha =0.36\) (the choice of this level of significance will be justified in Sect. 4.3.2). Each sample of \(\nu =50\) elements is categorized as follows: (i) number of samples from the healthy wind turbine (healthy sample) which were classified by the hypothesis test as ‘healthy’ (fail to reject \(H_0\)); (ii) healthy sample classified by the test as ‘faulty’ (reject \(H_0\)); (iii) samples from the faulty wind turbine (faulty sample) classified as ‘healthy’; and (iv) faulty sample classified as ‘faulty’. The results for the first four principal components presented in Table 15 are organized according to the scheme in Table 4. Note that, for each principal component in Table 15, the sum of each column is constant: 16 samples in the first column (healthy wind turbine) and 8 samples in the second column (faulty wind turbine).
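A univariate test for the equality of means between the baseline scores and the scores of a sample to diagnose can be sketched with a two-sample t-test. The sketch assumes unequal variances (Welch's test); whether this matches the statistic of Sect. 3.2 is an assumption. The function name `equality_of_means_test` and the synthetic scores are hypothetical.

```python
import numpy as np
from scipy import stats

def equality_of_means_test(baseline_scores, sample_scores, alpha=0.36):
    """Two-sample t-test (unequal variances) of H0: both means are equal."""
    result = stats.ttest_ind(baseline_scores, sample_scores, equal_var=False)
    p_value = float(result.pvalue)
    return p_value, p_value < alpha  # True means "reject H0" (faulty)

rng = np.random.default_rng(3)
baseline = rng.normal(size=50)  # synthetic baseline scores, n = 50

# A sample identical to the baseline and one with a clearly shifted mean.
p_same, reject_same = equality_of_means_test(baseline, baseline.copy())
p_shift, reject_shift = equality_of_means_test(baseline, baseline + 5.0)
print(reject_same, reject_shift)
```

The test would be run once per principal component and per sample, and the decisions counted into the four categories listed above.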

It can be observed from Table 15 that, in the numerical simulations, Type I errors (false alarms) and Type II errors (missed faults) appear only when scores 2, 3 or 4 are considered, while when the first score is used all the decisions are correct. The better performance of the first score is an expected result in the sense that the first principal component is the component that accounts for the largest possible variance.

4.3.2 Sensitivity and Specificity

The sensitivity and the specificity of the test with respect to the 24 samples and for each of the first four principal components have been included in Table 16. For each principal component in this table, the results are organized as shown in Table 6.

The results in Table 16 show that the sensitivity of the test \(1-\gamma \) is close to \(100\%\), as desired, with an average value of \(78.00\%\). The sensitivity with respect to the first, second and fourth principal components rises, on average, to \(91.33\%\). The average value of the specificity is \(75.00\%\), which is reasonably close to (and above) the expected value of \(1-\alpha =64\%\).

4.3.3 Reliability of the Results

The results in Table 17 are computed using the scheme in Table 8. This table is based on Bayes’ theorem [29], where P(\(H_1 | \text {accept } H_0\)) is the proportion of samples classified as healthy that actually come from the faulty wind turbine (true rate of false negatives) and P(\(H_0 | \text {accept } H_1\)) is the proportion of samples classified as faulty that actually come from the healthy wind turbine (true rate of false positives).

4.3.4 The Receiver Operating Characteristic (ROC) Curves

The ROC curves are also used in this section to determine the overall accuracy of the proposed method for the fault detection in wind turbines. We have considered 49 levels of significance within the range [0.02, 0.98] and with a difference of 0.02. Therefore, for each of the first four principal components, 49 connected points are depicted, as can be seen in Fig. 26.

The results presented in Fig. 26, particularly with respect to score 1, are quite remarkable. The overall behavior of scores 2 and 4 is also acceptable, while the results of score 3 cannot be considered, in this case, as satisfactory.

In Figs. 27 and 28 a further study is performed. While in Fig. 26 we present the ROCs when the size of the samples to diagnose is \(\nu =50\), in Fig. 27 the reliability of the method is analyzed in terms of 48 samples of \(\nu =25\) elements each and in Fig. 28 the reliability of the method is analyzed in terms of 120 samples of \(\nu =10\) elements each. The effect of reducing the number of elements in each sample is the reduction in the total time needed for a diagnostic. More precisely, if we keep \(L=500\), when the size of the samples is reduced from \(\nu =50\) to \(\nu =25\) and \(\nu =10\), the total time needed for a diagnostic is reduced from about 312 s to 156 and 62 s, respectively. Another effect of the reduction in the number of elements in each sample is a slight deterioration of the overall accuracy of the detection method. However, the results of scores 1 and 2 in Figs. 27 and 28 are perfectly acceptable.

An interesting alternative that maintains a very good performance, with almost no degradation in accuracy, is to reduce L (the number of time instants per row per sensor) instead of reducing the number of elements per sample \(\nu \). In this way, if we keep \(\nu =50\) and reduce L from 500 to 50, the total time needed for a diagnostic is reduced from about 312 s to 31 s. Finally, to reduce the computational effort of the fault detection method, a sensor selection algorithm can be applied [16].
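The diagnostic times quoted in this section follow from the expression \((\nu \cdot L-1)\varDelta \) with \(\varDelta =0.0125\) s. The short sketch below reproduces the arithmetic for the four configurations discussed; the function name `diagnostic_time` is illustrative.

```python
def diagnostic_time(nu, L, delta=0.0125):
    """Seconds of data needed for one sample of nu elements with L instants each."""
    return (nu * L - 1) * delta

# (nu, L) pairs discussed in the text.
configs = [(50, 500), (25, 500), (10, 500), (50, 50)]
times = {cfg: diagnostic_time(*cfg) for cfg in configs}
for (nu, L), seconds in times.items():
    print(f"nu={nu:3d}, L={L:3d}: {seconds:.4f} s")
```

The values are approximately 312.49 s, 156.24 s, 62.49 s and 31.24 s, in agreement with the rounded figures given above.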

4.4 Wind Turbine and Multivariate HT

To validate the fault detection strategy presented in Sect. 3.3 using a simulated wind turbine, we consider, as in Sect. 4.3, a total of 24 samples of \(\nu =50\) elements each, according to the following distribution:

- 16 samples of a healthy wind turbine; and

- 8 samples of a faulty wind turbine, one for each of the eight different fault scenarios described in Table 3.