Abstract

At present, there were two classical approaches commonly used for line simplification. One was Douglas-Peucker (D-P) algorithm and the other was Li-Openshaw (L-O) algorithm. Although the former was able to preferably reserve characteristic bending points of the curve and compress other non-feature points, the simplified result was excessively inflexible and sharp corners were also generated on feature points. As for the latter, not only can the corner of a line be smoothed, instead of becoming over inflexible, but feature point smoothing was also carried out by it for the line. Therefore, based on analyzing characteristics of such two algorithms, an improved algorithm was presented in this first place by means of combining the both together. To be specific, feature points of curves for generalized simplification were figured out by the D-P algorithm, while the L-O algorithm was used to perform curve processing by adjusting radius R of a circle SVO. In the end, real data were applied to carry out experimental verification contrasts for the modified algorithm and the existing two additional algorithms. Experimental results indicated that such a modified algorithm that combined them together exhibited their advantages and had the capability to reserve feature points and simplify other parts simultaneously. Moreover, it could be effectively applied in automated mapping.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Line element that occupies a rather large proportion in map features is mostly used to express extremely important geographic elements such as roads, rivers and contour lines, etc. Hence, simplification related to line element is not only studied in most cases, it has the maximum number of algorithms and the best effects during cartographic generalization. As for the cartographic generalization, the basic thought of simple line element simplification algorithm is to reduce storage of line factors provided that shape features of the line are remained to the greatest extent, such as the common Douglas-Peucker algorithm [1], the Li-Openshaw algorithm, the modified Li-Openshaw algorithm [2] and the arc to chord algorithm [3] etc. Among them, the Douglas-Peucker algorithm is the most well-known line element simplification approach that is able to reserve characteristic bending points of the curve in a better manner and compress its other non-feature points despite that the simplification results are over inflexible and sharp corners are caused at feature points. In comparison, the Li-Openshaw algorithm can smooth corners of the line without being excessively inflexible. Besides, feature points of the line are also smoothed by it. Currently, none of them can be completely up to generalization requirements in the process of simplification.

Specific to diversity of the line element and complexity of local shape features related to bending points, a simple curve simplification method was presented in this paper by combining Douglas-Peucker and Li-Openshaw approaches together to guarantee that feature points of the curve can be reserved and other parts of it can also be smoothed and simplified at the time of linear feature generalization.

2 Combination of Douglas-Peucker and Li-Openshaw

2.1 Douglas-Peucker (D-P) Algorithm

As an algorithm emerging at an earlier stage, the D-P algorithm has extensive applications in compression of lines and is also referred to as a very classical algorithm. Compression carried out based on this algorithm is mainly targeted at points on the curve on the premise of reserving main features of the line. In addition to high efficiency, no redundant points can be generated.

Principle of the D-P algorithm is shown in Fig. 1(a). Both ends A and G of a broken line ABCDEFG are connected into a straight line; then, the maximum vertical dimension d from point C on this broken line to segment AG is figured out. If the vertical dimension dc from C to AG is less than the given integrated threshold of distance, the segment AG is seen as an approximate curve of this broken line. However, in the case of dc > threshold, point B is utilized to divide such a broken line into CA and CG. Subsequently, the above procedures are repeated. In the end, all break points are successively connected together into a broken line serving as the approximation of the original broken line. For example, in Fig. 1, ACEF is the broken line after simplification of the original broken line. It can be found that employing the D-P algorithm to simplify segment can remain feature points of the original segment and generalize such an original segment at the same time without smoothing and generalizing those other than feature points. Consequently, it becomes rather difficult for such an algorithm to be widely applied into simplification of geographical line elements.

2.2 Douglas-Peucker (D-P) Algorithm

The Li-Openshaw algorithm is a self-adaptive line synthesis algorithm based on natural laws. Basic thought of this algorithm is that under the circumstance of fixed resolution, a rather large object may turn into a small one with the reduction in measuring scale; and, after it is up to a certain limit, the object can become a point or disappear completely. Algorithm simplification process is as follows:

-

The dimension R of the smallest visual object (SVO) of a circle can be estimated in line with the target and the original scales. Where, \( S_{t} \) refers to the simplified target scale required and \( S_{f} \) to the original scale. As for D, it is a parameter of the simplified SVO on the map. In the opinion of Muller [7], the value of D on the map should be taken as 0.4 mm to ensure the minimum visual resolution.

$$ R = S_{t} \times D \times \left({1 - \frac{{S_{f}}}{{S_{t}}}} \right) $$(1) -

Determine the initial position of a circular SVO. Generally, the starting point of a curve for synthesis serves as the first center of the circle, as presented in Fig. 1(b); then, point A and dimension R of the circular SVO are used as the center of a circle and its diameter respectively to draw a circle additionally, and curves intersect at point Q. thus, midpoint of AQ can be selected as the selection point after synthesis.

-

Start from point Q to repeat Step 2. Besides, the processing only arrives at a bending point R1 that serves as the center of a circle. Together with a radius denoted by R, another circle is drawn and it intersects with the original broken line at R2. The midpoint F of R1 and R2 is chosen to be the selection point after synthesis.

-

Start from point R2 to repeat steps 2 and 3 up until an endpoint D is not terminated within the circle SVO.

-

In Fig. 1(b), a broken line ABCD is the original segment without simplification and AEFGHID is a broken line after generalized simplification. It is evident in this figure that L-O algorithm can be adopted to smooth and generalize inflection points of the broken line and make its local features artistic. However, the relevant feature points are also generalized, which cause such an algorithm to be not applicable to line element simplifications during which feature points related should be reserved.

2.3 An Algorithm Combining Douglas-Peucker and Li-Openshaw

Based on the above analysis, it can be found that the individual application of D-P algorithm gives rise to rough and inflexible line element simplification results while the individual employment of the L-O algorithm can lead to circumstances of reserved points in chaos and overall curve shape deformation, etc. Considering that line simplification is aimed at maintaining feature points of line element, guaranteeing shape features of line element and obtaining smooth and artistic line element simplification results, a simple curve simplification method combining D-P and L-O algorithms together is put forward in this paper. The corresponding modifications to line simplification algorithms are as follows.

-

As for a curve for generalized simplification, D-P algorithm is adopted to figure out an inflection point with a vertical dimension larger than the distance threshold on the broken line, as shown in Fig. 3. B, C, D and F are all inflection points meeting the above condition.

-

Dependent on target and original scales, Eq. (1) is used to estimate the dimension R of SVO.

-

Starting from a circular SCO with a center of the circle of A and a radius of R, L-O algorithm is utilized to simplify the broken line. At a gathering point of inflection points (e.g., the inflection point B given in Fig. 3), it can be processed as follows. Point B is used as the center of a circle to draw a circle O with a radius of R1 (R1 = R). If inflection points (C & D) before/after the point B are inside the circle O, C and D are noted down for processing as the circumstances may require. In the case that both of them should be processed, the radius of SVO should be changed (e.g., 1/2 R) by regarding point B as the center of another circle that should be drawn again, followed by smoothing and generalization of C and B. If not required, smoothing can be ignored. At a point where inflection points are sparsely distributed (e.g., inflection point F in Fig. 3), it should be processed as follows. Point F and radius R1 (R1 = R) are used as the center of a circle and its radius respectively to draw a circle O that should be smoothed subsequently.

-

Relevant processing is carried out in order up until I reaching the terminal ends. In Fig. 2, ABCEFI is the original curve while ABCDEGHI is the curve simplified.

3 Analysis and Evaluation of Algorithm

3.1 Simplification Results of the Modified Algorithm

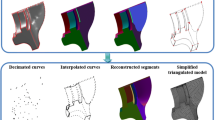

Data generalization simplification of map roads and river networks for a city in South China is adopted as the research case in this paper. Moreover, dependent on WJ-III map workstation on the NewMap platform developed by the Chinese Academy of Surveying and Mapping (CASM), the modified line simplification method proposed here is inserted to make a comprehensive choice for a map whose scale is changed into 1:100000 from 1:10000 initially. At the same time, comparative analysis is also conducted between this algorithm and Douglas-Peucker and Li-Openshaw algorithms. In Figs. 3 and 4, data related to roads and rivers are intercepted, so are results of their simplifications based on such 3 algorithms on the premise of identical parameters.

In line with the generalized simplification thoughts of this algorithm, its simplification results become more similar to the original curve if compared to the additional two algorithms. Visually, the results conform to those that can be obtained according to the initial thoughts. In Fig. 3(a), original feature points are reserved by the D-P algorithm at the time of road simplification and the relevant line elements are also compressed in a better manner, despite that it fails to be strongly applicable to cartographic generalization as smoothing processing is required by geographical line elements in most cases. As for Fig. 3(b), road lines before and after simplification based on the L-O algorithm are compared. In this figure, it is clear that this algorithm succeeds in smoothing filtering in the process of road line generalization simplification. However, during practical cartographic generalization, not only smoothing is required, it should also be guaranteed that no processing or minor processing is conducted for the original feature bending. In Fig. 3(c), the modified algorithm that combines D-P and L-O algorithms together is used to draw a comparative graph for road lines before and after generalization. According to this figure, their structures after generalized simplification are superior to simplification results of the above two figures. In detail, not only is smoothing performed for road line element simplification, but the original feature bending is also reserved together with local maximum value points precisely. Hence, the applicability of such a modified algorithm in cartographic generation is substantially improved.

With regard to Fig. 4, they are data simplification results for 1:10000 river networks in a city of South China. By comparing such results achieved based on three algorithms, it is verified again that the modified algorithm presented here combines advantages of two classical simplification algorithms and reserves macro-bending features.

3.2 Result Evaluation

In terms of cartographic generalization for line elements, influences of algorithm simplification on line element precision are mainly embodied in overall and local displacements of curves. Therefore, evaluation indexes such as vector displacement, area displacement, displacement standard deviation and position error, etc. are given to compare and assess the modified algorithm and another two algorithms of Douglas-Peucker and Li-Openshaw.

Both vector displacement and area displacement are two evaluation indexes presented by White for geographical line element simplification [4]. The former refers to the position deviation of corresponding points on the simplified and the original curves; while, the latter is the area of a part enclosed by the simplified and the original curves [5, 6]. In this paper, the average offset value and the area deformation value are respectively taken for the vector displacement and the area displacement to carry out the relevant evaluations, as shown in Fig. 5. Moreover, both values are quantitative indexes.

Three algorithms are adopted to perform generalized simplification for road lines and river networks separately. After simple comparisons based on simplification results (see Table 1), it is found that the modified algorithm is not only better than another two algorithms in terms of visual effects, but far less than and superior to the them as far as the average offset values and area deformation values of their vectors are concerned. Such a result indicates that the modified algorithm is more appropriate to maintain shape features of the line element.

Muller puts forward a concept of Standardized Measure of Displacement (SMD) to calculate displacement [7]. The corresponding computing formula is the Eq. (2) below.

Where, S refers to the distance from a point with the maximum displacement on the original curve to the connecting line of its start and end points after the algorithm simplification, and D is the displacement value of this point before/after simplification. Evaluations based on SMD are primarily targeted at the local maximum value. Therefore, displacement position error is further employed in this paper to evaluate the global displacement before and after simplification. The displacement position is the ratio between the original curve length and the area enclosed by intersections of curves before and after simplification. It can be calculated according to Eq. (3) below [8,9,10].

Where, \( \Delta s \) is the area enclosed by intersections of curves before and after simplification; and L is the length of the original curve.

Table 2 shows displacement standard deviation and position error evaluation results of data related to roads and river networks based on the modified algorithm as well as D-P and L-O algorithms. From Table 2, it can be seen that the modified algorithm is provided with a preferable performance and occupies an optimal position in terms of displacement standard deviation and position error if compared with another two algorithms. Especially for the index of position error, it is particularly excellent considering that its value is the least one. Such phenomena indicate that the modified algorithm is able to validly maintain the overall shape features of road and river network data.

4 Conclusions and Expectations

Considering that line simplification is aimed at maintaining feature points of line element, guaranteeing shape features of line element and obtaining smooth and artistic line element simplification results, a simple curve simplification method combining D-P and L-O algorithms together is put forward in this paper. On one hand, such 3 algorithms are used to simplify 2 types of line element objects; on the other hand, 4 quantitative indexes are adopted to evaluate and analyze algorithm simplification effects. Experimental results signify that the modified algorithm presented in this paper is able to maintain local maximum value points of the curve in the process of 1:10000 road and river network data generalized into a 1:100000 scale. In addition, synthetic data are also smoothed so that the overall shape of the curve can be reserved preferably and a synthesis result better than those obtained based on the original algorithm is also achieved.

At present, only generalized simplification of simple linear elements is realized in this paper regardless of adjacent intersections among dense line groups and complex line elements. Moreover, impacts of diverse scales before and after the corresponding generalization on the modified algorithm are also not taken into account. These problems need to be further studied.

References

Douglas, D.H., Peucker, T.K.: Algorithms for the reduction of the number of points required to represent a digitized line or caricature. Can. Cartogr. 10(2), 112–122 (1973)

Li, Z.L., Openshaw, S.: Automatic synthesis algorithm for line elements based on objective laws of nature. Transl. Wuhan Univ. (1) 49–58 (1994)

Nako, B., Mitropoulos, V.: Local length ratio as a measure of critical point detection for line simplification. In: The Symposium of the 5th ICA Workshop on Progress in Automated Map Generalization, pp. 28–30 (2003)

White, E.: Assessment of line generalization algorithms using characteristics points. Am. Cartogr. 12(1), 17–27 (1985)

Liu, H., Fan, Z., Xu, Z., Deng, M.: Arc to chord algorithm modification and evaluation for curve simplification. Geogr. Geo-Inf. Sci. 27(1), 45–48 (2011)

Deng, M., Chen, J., Li, Z., et al.: An improved local measure method for the importance of vertices in curve simplification. Geogr. Geo-Inf. Sci. 25(1), 40–43 (2009)

Muller, J.C.: Fractal and automated line generalization. Cartogr. J. 24(1), 27–34 (1987)

Joao, E.M.: Causes and Consequences of Map Generalization. Taylor and Francis, London (1998)

Zhu, K., Wu, F., Wang, H., et al.: Li-Openshaw algorithm modification and evaluations. Acta Geodaet. Cartogr. Sin. 36(4), 450–456 (2007)

Wu, F., Zhu, K.: Evaluation on geometric accuracy of line element simplification algorithm. Geomat. Inf. Sci. Wuhan Univ. 33(6), 600–603 (2008)

Acknowledgment

Sponsor acknowledgments: Special Scientific Research Fund of Surveying and Mapping Geographic Information Public Welfare Profession (201512027); National SciTech Support Plan (2015BAJ06B01); Basic Scientific Research Business Expense Project of the Chinese Academy of Surveying & Mapping (7771606).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Li, C., Wu, P., Gu, T., Liu, X. (2017). A Study on Curve Simplification Method Combining Douglas-Peucker with Li-Openshaw. In: Yuan, H., Geng, J., Bian, F. (eds) Geo-Spatial Knowledge and Intelligence. GRMSE 2016. Communications in Computer and Information Science, vol 699. Springer, Singapore. https://doi.org/10.1007/978-981-10-3969-0_33

Download citation

DOI: https://doi.org/10.1007/978-981-10-3969-0_33

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-3968-3

Online ISBN: 978-981-10-3969-0

eBook Packages: Computer ScienceComputer Science (R0)