Abstract

Images captured in foggy weather often suffer from poor visibility. In this paper, we propose an efficient and automatic method to remove haze from a single input image. Our method benefits greatly from exploiting a priori image geometry of the transmission function. This prior, combined with an edge-preserving texture-smoothing filter, is used to estimate the unknown transmission map. The haze-free image is then obtained by substituting the estimated transmission map and the atmospheric light into the image degradation model to recover the scene radiance. The proposed method is controlled by only a few parameters and can restore a high-quality haze-free image with fine details. Experimental results demonstrate that our method performs competitively against other methods.



1 Introduction

Images captured in hazy or foggy weather often suffer from poor visibility. Distant objects in the fog lose contrast and blend into their surroundings, as illustrated in Fig. 1(a). This is because the light reflected from these objects is attenuated in the air before it reaches the camera, and it is further blended with atmospheric light scattered by aerosols. For the same reason, the colors of these objects fade and become similar to that of the fog, to a degree that depends on their distance from the camera. As can be seen in Fig. 1(a), the image contrast is reduced and the surface colors become faint. Effective dehazing algorithms are needed in many circumstances. In computer vision, most automatic systems for surveillance, intelligent vehicles, object recognition, etc., assume that the input images have clear visibility. This is not always true in bad weather.

Previous methods for haze removal mainly rely on additional depth information or multiple observations of the same scene; representative works include [1–4]. For example, Schechner et al. [1] reduce haze using two images taken through a polarizer at different angles. Narasimhan et al. [2, 3] propose a physics-based scattering model, with which the scene structure can be recovered from two or more images taken under different weather conditions. Kopf et al. [5] remove haze from an image using scene depth information that is directly available from geo-referenced digital terrain or city models. However, the multiple images or user interaction that these methods require limit their applications.

Recently, significant progress has also been made on the more challenging problem of single image dehazing [6–11]. This progress owes much to insightful explorations of new image models and priors. For example, Fattal's work [6] is based on the assumption that the transmission and the surface shading are locally uncorrelated. Under this assumption, Fattal estimates the albedo of the scene and then infers the medium transmission. This approach is physically sound and can produce impressive results, but it fails on images with heavy haze. Tan's work [7] is based on the observation that haze-free images have higher contrast than hazy ones, and it removes haze by maximizing the local contrast of the restored image. The results are visually compelling but may not be physically valid. He et al. [8] proposed the dark channel prior, which is based on the observation that most local patches in haze-free outdoor images contain some pixels with very low intensity in at least one color channel. Using this prior, the transmission map and the atmospheric light can be estimated, and the transmission map is then refined by soft matting. Combined with the haze image model, this approach recovers a good haze-free image. Tarel et al. [9] used a fast median filter to infer the atmospheric veil and then estimated the transmission map. However, their method cannot remove the fog between small objects, and the colors of scene objects are unnatural in some situations. Kratz et al. [10] proposed a probabilistic model that fully leverages the natural statistics of both the albedo and the depth of the scene. The key idea is to model the image with a factorial Markov random field in which the scene albedo and depth are two statistically independent latent layers. Gibson et al. [11] investigated the effects of dehazing on image and video coding for surveillance systems. They first proposed a single image dehazing method and then examined how dehazing affects compression.

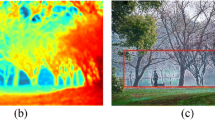

The above algorithms suggest that single image dehazing is essentially an under-constrained problem, so the general principle for solving it is to exploit additional priors. Following this idea, we begin by deriving an inherent a priori image geometry for estimating the initial scene transmission map, as shown in Fig. 1(c). This constraint, combined with an edge-preserving texture-smoothing filter, is then used to recover the final transmission map, as shown in Fig. 1(d). Experimental results show that our method can restore a high-quality haze-free image with fine edge details. Figure 1(b) shows an example of our dehazing result.

Our method mainly uses two techniques to remove haze. The first is an a priori image geometry constraint on the scene transmission. This prior has a clear geometric interpretation and proves effective for estimating the initial transmission map. The second is an edge-preserving filter that yields the final transmission map. The filter helps attenuate image noise and enhance useful image structures, such as jump edges and corners. Experimental results on a variety of real hazy images show that the proposed algorithm removes haze more thoroughly with fewer halo artifacts, and that the colors of the restored images appear natural in most cases.

2 Background

2.1 Atmospheric Scattering Model

The haze image model (also called the image degradation model) consists of a direct attenuation term and an airlight term. The direct attenuation describes the scene radiance and its decay in the medium, while the airlight results from previously scattered light. The model is formulated as follows [8, 12]:

\[ I(x) = J(x)\,t(x) + A\bigl(1 - t(x)\bigr) \tag{1} \]

where I(x) is the input foggy image, J(x) is the scene radiance to be recovered, A is the global atmospheric light, and t(x) (\( 0 \le t(x) \le 1 \)) is the transmission map, which is correlated with the scene depth. Assuming that the haze is homogeneous, t(x) can be written as:

\[ t(x) = e^{-\beta d(x)} \tag{2} \]

In (2), d(x) is the scene depth and β is the medium extinction coefficient. The goal of image dehazing is therefore to recover the scene radiance J(x) from the input image I(x) according to Eq. (1). This requires estimating the transmission function t(x) and the global atmospheric light A. As Eq. (1) shows, the problem is ill-posed because the number of unknowns is much greater than the number of available equations, so additional assumptions or priors are needed to solve it. In this paper, the a priori image geometry and edge-preserving filtering are applied to obtain the final transmission map t(x).
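To make Eqs. (1) and (2) concrete, here is a minimal Python sketch (ours, not the authors' MATLAB code). It synthesizes a hazy pixel from an assumed haze-free radiance J, depth d, extinction coefficient β, and atmospheric light A. The function names and numeric values are illustrative assumptions.

```python
import math


def transmission(depth, beta):
    """Eq. (2): t(x) = exp(-beta * d(x)) for homogeneous haze."""
    return math.exp(-beta * depth)


def add_haze(J, A, t):
    """Eq. (1): I = J * t + A * (1 - t), applied to each color channel."""
    return tuple(j * t + a * (1.0 - t) for j, a in zip(J, A))


# Illustrative values (assumptions): one RGB radiance and a gray airlight.
J = (40.0, 90.0, 160.0)
A = (220.0, 220.0, 220.0)
for d in (0.0, 1.0, 5.0, 20.0):
    t = transmission(d, beta=0.5)
    I = add_haze(J, A, t)
    print(f"d = {d:5.1f}  t = {t:.4f}  I = {tuple(round(c, 1) for c in I)}")
```

As d grows, t falls towards 0 and every channel of I approaches A. This is why distant objects take on the color of the fog.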

2.2 Priori Image Geometry and Edge-Preserving Filtering

For the a priori image geometry, note that according to Eq. (1), a pixel I(x) contaminated by fog is “pushed” towards the global atmospheric light A. We can therefore reverse this process and recover the clean pixel J(x) by linear extrapolation from A through I(x). The appropriate amount of extrapolation is given by [13]:

\[ t(x)^{-1} = \frac{\lVert J(x) - A \rVert}{\lVert I(x) - A \rVert} \tag{3} \]

The scene radiance of a given image is always bounded, that is,

\[ C_0 \le J(x) \le C_1 \tag{4} \]

where \( C_0 \) and \( C_1 \) are two constant vectors that depend on the given image. For any x, a natural requirement is that the extrapolated J(x) must lie in the radiance cube bounded by \( C_0 \) and \( C_1 \). This requirement on J(x) imposes a boundary constraint on t(x). Suppose that the global atmospheric light A is given. Then, for each x, we can compute the corresponding boundary constraint point \( J_b(x) \). A lower bound of t(x) can then be determined from Eqs. (3) and (4), leading to the following boundary constraint on t(x):

\[ 0 \le t_b(x) \le t(x) \le 1 \tag{5} \]

where \( t_b(x) \) is the lower bound of t(x), given by

\[ t_b(x) = \min\left\{ \max_{c \in \{r,g,b\}} \left( \frac{A^c - I^c(x)}{A^c - C_0^c},\ \frac{A^c - I^c(x)}{A^c - C_1^c} \right),\ 1 \right\} \tag{6} \]

where \( I^c \), \( A^c \), \( C_0^c \) and \( C_1^c \) are the c-th color channels of I, A, \( C_0 \) and \( C_1 \), respectively. In our experiments, the boundary constraint map used to obtain our initial transmission map is computed from Eq. (6) with the radiance bounds \( C_0 = (20, 20, 20)^T \) and \( C_1 = (300, 300, 300)^T \).
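Because Eq. (6) is evaluated independently at each pixel, it is easy to sketch. The following Python sketch assumes RGB pixels and A on a common 0 to 255 scale, together with the radiance bounds \( C_0 = (20, 20, 20)^T \) and \( C_1 = (300, 300, 300)^T \) stated above. `boundary_transmission` and `boundary_map` are hypothetical helper names, not part of any library.

```python
def boundary_transmission(I, A, C0=(20.0, 20.0, 20.0), C1=(300.0, 300.0, 300.0)):
    """Lower bound t_b(x) of Eq. (6) for a single RGB pixel.

    I, A, C0, C1 are 3-tuples on the same intensity scale (0..255 here).
    Assumes C0^c < A^c < C1^c in every channel, so no denominator is zero.
    """
    per_channel = []
    for i, a, c0, c1 in zip(I, A, C0, C1):
        # Extrapolating from A through I hits the C0 face if I^c < A^c
        # (first ratio positive) or the C1 face if I^c > A^c (second
        # ratio positive); the other ratio is then non-positive.
        per_channel.append(max((a - i) / (a - c0), (a - i) / (a - c1)))
    # The most restrictive channel sets the bound, which never exceeds 1.
    return min(max(per_channel), 1.0)


def boundary_map(image, A, **bounds):
    """Apply Eq. (6) to every pixel of an image given as rows of RGB tuples."""
    return [[boundary_transmission(px, A, **bounds) for px in row] for row in image]


A = (200.0, 200.0, 200.0)
image = [[(110.0, 110.0, 110.0), (250.0, 200.0, 200.0)],
         [(200.0, 200.0, 200.0), (10.0, 10.0, 10.0)]]
for row in boundary_map(image, A):
    print([round(v, 3) for v in row])
```

A pixel equal to A gets \( t_b = 0 \), meaning it gives no constraint. A pixel darker than \( C_0 \) would need \( t > 1 \), so its bound is clipped to 1.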

In the proposed method, the above a priori image geometry is used to estimate the initial transmission map. An edge-preserving filter is then applied to this map to obtain a refined one. The filter must preserve edges because incorrect object edges in the transmission map cause halo artifacts in the final restoration. Typical filters that can be used to remove redundant detail from the transmission map include the Gaussian, median, and bilateral filters [14]. The Gaussian smoothing operator is a 2-D convolution operator that is used to ‘blur’ images and remove detail and noise. Its kernel has the shape of a Gaussian (‘bell-shaped’) hump. Median filtering runs through all pixels and replaces each pixel value with the median of the neighboring pixel values. Bilateral filtering [15] replaces the intensity of a pixel with an average of the values of other pixels, weighted by their spatial distance and intensity similarity to the original pixel. Specifically, the bilateral filter is defined as a normalized convolution in which the weight of each pixel q is determined by its spatial distance from the center pixel p, as well as by its relative difference in intensity. Let \( I_p \) be the intensity at pixel p and \( I_p^F \) its filtered value; then [14]:

\[ I_p^F = \frac{1}{W_p} \sum_{q \in \Omega_p} G_{\sigma_{S'}}\bigl(\lVert p - q \rVert\bigr)\, G_{\sigma_R}\bigl(\lvert I_p - I_q \rvert\bigr)\, I_q, \qquad W_p = \sum_{q \in \Omega_p} G_{\sigma_{S'}}\bigl(\lVert p - q \rVert\bigr)\, G_{\sigma_R}\bigl(\lvert I_p - I_q \rvert\bigr) \tag{7} \]

where \( \Omega_p \) is the neighborhood of p, \( W_p \) is the normalization factor, the spatial and range weighting functions \( G_{\sigma_{S'}} \) and \( G_{\sigma_R} \) are often Gaussian, and \( \sigma_{S'} \) and \( \sigma_R \) are the spatial and range variances, respectively. The median filter smooths strongly but can blur edges. A bilateral filter with a small range variance cannot smooth textured regions sufficiently for haze removal, and one with a large range variance also blurs edges. Therefore, in this paper we adopt an edge-preserving texture-smoothing filter, which refines the transmission map better than these existing filters. For this filter, the image intensities are normalized such that \( I_p \in [0, 1] \), and \( \sigma_R \) is normally chosen in [0, 1]. Letting the image width and height be w and h, we choose \( \sigma_{S'} = \sigma_S \cdot \min(h, w) \), so that \( \sigma_S \) is also normally chosen in [0, 1]. In this paper, we also use \( \sigma_{S'} \) to denote the spatial variance of the Gaussian filter and the radius of the median filter.
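For reference, here is a brute-force Python sketch of the bilateral filter of Eq. (7). It operates on a small grayscale image normalized to [0, 1] and uses the \( \sigma_{S'} = \sigma_S \cdot \min(h, w) \) convention above. We assume Gaussian weights of the form exp(−d²/(2σ²)) and truncate the window at a radius of ceil(2σ_S'). Both are our choices, and `bilateral_filter` is a hypothetical name.

```python
import math


def bilateral_filter(img, sigma_s, sigma_r):
    """Brute-force bilateral filter, Eq. (7), for a grayscale image in [0, 1].

    img     : list of rows of floats in [0, 1]
    sigma_s : normalized spatial parameter (> 0); the pixel-scale value is
              sigma_s' = sigma_s * min(h, w), as described in the text
    sigma_r : range parameter (> 0)
    """
    h, w = len(img), len(img[0])
    sigma_sp = sigma_s * min(h, w)
    radius = max(1, math.ceil(2 * sigma_sp))  # window truncation (our choice)
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            p = img[y][x]
            num = den = 0.0
            for qy in range(max(0, y - radius), min(h, y + radius + 1)):
                for qx in range(max(0, x - radius), min(w, x + radius + 1)):
                    q = img[qy][qx]
                    g_s = math.exp(-((qy - y) ** 2 + (qx - x) ** 2) / (2 * sigma_sp ** 2))
                    g_r = math.exp(-((p - q) ** 2) / (2 * sigma_r ** 2))
                    num += g_s * g_r * q
                    den += g_s * g_r
            out[y][x] = num / den  # den >= 1 because q = p contributes weight 1
    return out


step_img = [[0.1] * 4 + [0.9] * 4 for _ in range(8)]
sharp = bilateral_filter(step_img, sigma_s=0.1, sigma_r=0.1)     # edge preserved
blurred = bilateral_filter(step_img, sigma_s=0.1, sigma_r=10.0)  # close to a Gaussian blur
print(round(sharp[4][3], 3), round(blurred[4][3], 3))
```

With a small \( \sigma_R \), the weight across the step is about exp(−32), so the edge survives. With a very large \( \sigma_R \), the range term is nearly constant and the filter degenerates to a Gaussian blur. This illustrates the trade-off described above.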

3 The Proposed Algorithm

3.1 The Algorithm Flowchart

The flowchart of the proposed method is depicted in Fig. 2. The algorithm removes haze from a single image in three steps. The first step computes the atmospheric light from three distinctive features of the sky region. The second step estimates the transmission map. An initial map is obtained from the boundary constraint of the radiance cube (the a priori image geometry). The edge-preserving filter then smooths textured regions while preserving large scene-depth discontinuities. Finally, the scene radiance is recovered from the estimated atmospheric light and the refined transmission map according to the image degradation model.

3.2 Atmospheric Light Estimation

Estimating the atmospheric light A is the first step in restoring a hazy image. To estimate A, we consider three distinctive features of the sky region, which is more robust than the “brightest pixel” method. The features are: (i) a bright minimal dark channel, (ii) flat intensity, and (iii) an upper position in the image. For the first feature, sky pixels should satisfy \( I_{\min}(x) > T_v \), where \( I_{\min}(x) \) is the dark channel and \( T_v \) is 95 % of the maximum value of \( I_{\min}(x) \). For the second feature, sky pixels should satisfy \( N_{\mathrm{edge}}(x) < T_p \), where \( N_{\mathrm{edge}}(x) \) is the edge ratio map and \( T_p \) is the flatness threshold. Owing to the third feature, the sky region is determined by searching from top to bottom for the first connected component that satisfies both conditions. The atmospheric light A is then estimated as the maximum value of the foggy image I(x) within this region.
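A minimal Python sketch of this three-feature search follows. The text does not define the edge ratio map \( N_{\mathrm{edge}}(x) \). As a stand-in, the sketch treats a pixel as flat when its dark-channel value differs from each of its 4-neighbours by less than a hypothetical threshold `flat_thresh`. It also assumes a pixel-wise dark channel (the minimum over color channels), 4-connectivity, and a per-channel maximum over the sky region. All names are illustrative.

```python
def estimate_atmospheric_light(img, flat_thresh=10.0):
    """Estimate A from sky features (i)-(iii); img is a list of rows of RGB tuples."""
    h, w = len(img), len(img[0])
    # (i) bright minimal dark channel: pixel-wise minimum over color channels.
    dark = [[min(px) for px in row] for row in img]
    t_v = 0.95 * max(max(row) for row in dark)
    neighbours = ((-1, 0), (1, 0), (0, -1), (0, 1))

    def inside(y, x):
        return 0 <= y < h and 0 <= x < w

    # (ii) flat intensity: stand-in for N_edge(x) < T_p (see the lead-in).
    def is_flat(y, x):
        return all(abs(dark[y + dy][x + dx] - dark[y][x]) < flat_thresh
                   for dy, dx in neighbours if inside(y + dy, x + dx))

    cand = [[dark[y][x] > t_v and is_flat(y, x) for x in range(w)] for y in range(h)]

    # (iii) upper position: the first candidate met scanning top to bottom
    # seeds the sky region, which is grown as a 4-connected component.
    seed = next(((y, x) for y in range(h) for x in range(w) if cand[y][x]), None)
    if seed is None:
        # Fallback (our assumption): the brightest dark-channel pixel.
        y, x = max(((y, x) for y in range(h) for x in range(w)),
                   key=lambda p: dark[p[0]][p[1]])
        return tuple(img[y][x])
    region, stack, seen = [], [seed], {seed}
    while stack:
        y, x = stack.pop()
        region.append((y, x))
        for dy, dx in neighbours:
            ny, nx = y + dy, x + dx
            if inside(ny, nx) and cand[ny][nx] and (ny, nx) not in seen:
                seen.add((ny, nx))
                stack.append((ny, nx))
    # A: per-channel maximum of I(x) over the sky region.
    return tuple(max(img[y][x][c] for y, x in region) for c in range(3))


sky, ground, lamp = (240, 245, 250), (60, 80, 50), (250, 250, 250)
img = [[sky] * 6 for _ in range(2)] + [[ground] * 6 for _ in range(4)]
img[5][5] = lamp  # an isolated light source brighter than the sky
print(estimate_atmospheric_light(img))
```

A "brightest pixel" rule would pick the lamp in this toy image. The flatness and connectivity tests keep the estimate on the sky instead.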

3.3 Initial Transmission Map Estimation

The boundary constraint on t(x) provides a new geometric perspective on the well-known dark channel prior [8]. Let \( C_0 = 0 \) and suppose that the global atmospheric light A is brighter than any pixel in the hazy image. Then one can directly compute \( t_b(x) \) from Eq. (1) by assuming the pixel-wise dark channel of J(x) to be zero. If \( C_1 \) is also brighter than A, Eq. (6) gives the same result: its second term becomes non-positive, and it reduces to \( t_b(x) = 1 - \min_c I^c(x)/A^c \). Similarly, assuming that the transmission in a local image patch is constant, one can quickly derive the patch-wise transmission \( \tilde{t}(x) \) of He et al.'s method [8] by applying a maximum filter to \( t_b(x) \), i.e.,

\[ \tilde{t}(x) = \max_{y \in \omega_x} t_b(y) \tag{8} \]

where \( \omega_x \) is a local patch centered at x. It is worth noting that the boundary constraint is more fundamental. In most cases, the optimal global atmospheric light is a little darker than the brightest pixels in the image. Those brighter pixels often come from light sources in the scene, e.g., the bright sky or car headlights. In these cases, the dark channel prior fails for those pixels, while the proposed boundary constraint still holds. Note also that the commonly used assumption of constant transmission within a local image patch is somewhat demanding. For this reason, the patch-wise transmission \( \tilde{t}(x) \) based on this assumption in [8] is often underestimated. The proposed method therefore adopts a more accurate patch-wise transmission. It relaxes the above assumption and allows the transmission within a local patch to vary slightly:

\[ \hat{t}(x) = \min_{y \in \omega_x} \max_{z \in \omega_y} t_b(z) \tag{9} \]

The patch-wise transmission \( \hat{t}(x) \) can be conveniently computed by applying a morphological closing to \( t_b(x) \). Figure 3 compares the dehazing results obtained by directly using the transmission maps derived from the dark channel prior and from the boundary constraint map. The transmission map estimated with the dark channel prior performs poorly: the dehazing result contains some halo artifacts, as shown in Fig. 3(b). In comparison, the patch-wise transmission map derived from the boundary constraint map produces fewer halo artifacts, as shown in Fig. 3(c).
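The difference between Eqs. (8) and (9) can be seen on a toy \( t_b \) map. The minimal Python sketch below implements the max filter of Eq. (8) and the morphological closing of Eq. (9). We assume square windows of radius r, clipped at the image border. The helper names are hypothetical.

```python
def window_filter(img, r, reduce_fn):
    """Apply reduce_fn (min or max) over a (2r+1)x(2r+1) window clipped at borders."""
    h, w = len(img), len(img[0])
    return [[reduce_fn(img[yy][xx]
                       for yy in range(max(0, y - r), min(h, y + r + 1))
                       for xx in range(max(0, x - r), min(w, x + r + 1)))
             for x in range(w)]
            for y in range(h)]


def max_filter_transmission(t_b, r):
    """Eq. (8): patch-wise transmission of the dark channel prior."""
    return window_filter(t_b, r, max)


def closing_transmission(t_b, r):
    """Eq. (9): dilation (max) followed by erosion (min), i.e. a closing."""
    return window_filter(window_filter(t_b, r, max), r, min)


# Toy t_b map with a depth edge: far (low t) on the left, near (high t) on the right.
t_b = [[0.2] * 5 + [0.8] * 5 for _ in range(3)]
print(max_filter_transmission(t_b, 1)[1])
print(closing_transmission(t_b, 1)[1])
```

The max filter shifts the edge r pixels into the far region: column 4 becomes 0.8, so haze there would be under-removed and a halo would appear. The closing keeps the edge in place. It is still at least \( t_b \) and at most the max-filtered map everywhere, because each window \( \omega_x \) contains x.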

Fig. 3. Image dehazing by directly using the patch-wise transmission maps from the dark channel prior and the boundary constraint map, respectively. (a) Foggy image. (b) Dehazing result by dark channel prior. (c) Dehazing result by boundary constraint. (d) Estimated transmission map from dark channel. (e) Estimated transmission map from boundary constraint map.

3.4 Refined Transmission Map Estimation

After the initial transmission map is obtained, an edge-preserving filter is used to refine it.

Specifically, given a grayscale image I (a multi-channel image is processed channel by channel), we first determine the range of its intensity values, say \( [I_{\min}, I_{\max}] \). Next, we sweep a family of planes at intensity levels \( I_k \in [I_{\min}, I_{\max}] \), \( k \in \{0, 1, \ldots, N-1\} \), across the intensity range. The distance between neighboring planes is set to \( (I_{\max} - I_{\min})/(N - 1) \), where N is a constant. A smaller N results in a larger quantization error, while a larger N increases the running time. When \( N \to +\infty \), or N = 256 for 8-bit grayscale images, the quantization error is zero. Stronger smoothing usually requires a smaller N. We define the distance function of a voxel [I(x, y), x, y] on the plane with intensity level \( I_k \) as the truncated Euclidean distance between the voxel and the plane:

\[ D(I_k, x, y) = \min\bigl( \lvert I_k - I(x, y) \rvert,\ \eta\, \lvert I_{\max} - I_{\min} \rvert \bigr) \tag{10} \]

where \( \eta \in (0, 1] \) is a constant used to reject outliers. When η = 1, the distance function reduces to the plain Euclidean distance. At each intensity plane, we obtain a 2D distance map \( D(I_k) \). A low-pass filter F is then used to smooth this distance map and suppress noise:

\[ D^F(I_k) = F\bigl(D(I_k)\bigr) \tag{11} \]

Then, the plane with the minimum distance value at each pixel location [x, y] is located by calculating

\[ K(x, y) = \arg\min_{k \in \{0, \ldots, N-1\}} D^F(I_k, x, y) \tag{12} \]

Let the intensity levels be \( i_0 = I_{K(x,y)} \), \( i_+ = I_{K(x,y)+1} \) and \( i_- = I_{K(x,y)-1} \). Assume that the smoothed distance function \( D^F(I_k, x, y) \) at each pixel location [x, y] is a quadratic polynomial in the intensity value \( I_k \). The intensity value at the minimum of the distance function can then be approximated by quadratic polynomial interpolation (curve fitting):

\[ I^F(x, y) = i_0 - \frac{(i_+ - i_0)\,\bigl[D^F(i_+, x, y) - D^F(i_-, x, y)\bigr]}{2\,\bigl[D^F(i_+, x, y) + D^F(i_-, x, y) - 2\,D^F(i_0, x, y)\bigr]} \tag{13} \]

In (13), \( I^F(x, y) \) is the final filtered result of our framework at each pixel location [x, y]. Any filter F can be integrated into this framework by using it to smooth the distance map \( D(I_k) \) as in Eq. (11). Figure 4 presents the filtered results of our framework on a synthetic noisy image. In this experiment, the number of intensity planes N is set to 16 and η = 0.1. The low-pass filters use \( \sigma_S = 0.05 \), and the bilateral filter additionally uses \( \sigma_R = 0.2 \). As can be seen in Fig. 4(f), compared with the other filtering methods, our result maintains sharp intensity edges and greatly suppresses the noise inside each region.
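The steps of Eqs. (10) to (13) can be sketched in a few dozen lines of Python. This is a minimal, unoptimized illustration under our own assumptions:

- F is a box (mean) filter of hypothetical radius `radius`, clipped at the borders.
- The defaults N = 16 and η = 0.1 follow the experiment above.
- The quadratic refinement of Eq. (13) is skipped when the minimum falls on the first or last plane, where \( i_- \) or \( i_+ \) does not exist.

`box_filter` and `plane_sweep_filter` are hypothetical names.

```python
def box_filter(img, r):
    """Mean over a (2r+1)x(2r+1) window clipped at the borders; our choice of F."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [img[yy][xx]
                    for yy in range(max(0, y - r), min(h, y + r + 1))
                    for xx in range(max(0, x - r), min(w, x + r + 1))]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


def plane_sweep_filter(img, n_planes=16, eta=0.1, radius=2):
    """Edge-preserving filtering of a grayscale image via Eqs. (10)-(13)."""
    h, w = len(img), len(img[0])
    i_min = min(min(row) for row in img)
    i_max = max(max(row) for row in img)
    if i_max == i_min:  # constant image: nothing to filter
        return [row[:] for row in img]
    step = (i_max - i_min) / (n_planes - 1)
    levels = [i_min + k * step for k in range(n_planes)]
    trunc = eta * (i_max - i_min)
    # Eqs. (10)-(11): truncated distance to every plane, smoothed by F.
    dist = [box_filter([[min(abs(level - v), trunc) for v in row] for row in img], radius)
            for level in levels]
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            costs = [dist[k][y][x] for k in range(n_planes)]
            k = min(range(n_planes), key=costs.__getitem__)  # Eq. (12)
            value = levels[k]
            if 0 < k < n_planes - 1:  # Eq. (13) needs both neighbouring planes
                d_minus, d_zero, d_plus = costs[k - 1], costs[k], costs[k + 1]
                denom = d_plus + d_minus - 2.0 * d_zero
                if denom > 0:
                    value -= step * (d_plus - d_minus) / (2.0 * denom)
            out[y][x] = value
    return out


edge_img = [[0.2] * 4 + [0.8] * 4 for _ in range(8)]
print([round(v, 3) for v in plane_sweep_filter(edge_img)[4]])
print([round(v, 3) for v in box_filter(edge_img, 2)[4]])
```

On this noise-free step, the plane-sweep output keeps the edge exactly. The box filter alone (the same F applied directly to the image) smears it over several pixels. An isolated impulse is also rejected, because its truncated distance adds at most \( \eta(I_{\max} - I_{\min}) \) to each window.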

3.5 Scene Radiance Recovery

With the input hazy image I(x), the refined transmission map t(x), and the global atmospheric light A known, the final dehazing result J(x) is recovered by inverting the image degradation model of Eq. (1):

\[ J(x) = \frac{I(x) - A}{\max\bigl(t(x), t_0\bigr)} + A \tag{14} \]

where \( t_0 \) is an application-dependent lower bound on the transmission that controls how much haze remains in the farthest regions of the image. If \( t_0 \) is too large, the haze is only slightly removed. If it is too small, the colors of the result appear oversaturated. Experiments show that setting \( t_0 \) between 0.1 and 0.5 gives visually pleasing results in most cases. An illustrative example is shown in Fig. 5. Fig. 5(a) shows the input hazy image, Fig. 5(b) shows the refined transmission map estimated by the proposed method, and Fig. 5(c) shows our final haze removal result obtained with the refined transmission map.
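A minimal per-pixel Python sketch of Eq. (14) follows. The function name and test values are illustrative assumptions. In practice the result is often clipped to the valid intensity range, but that step is not part of Eq. (14) and is omitted here.

```python
def recover_radiance(I, A, t, t0=0.1):
    """Eq. (14): J = (I - A) / max(t, t0) + A, applied to each color channel."""
    t_eff = max(t, t0)  # t0 bounds the transmission from below
    return tuple((i - a) / t_eff + a for i, a in zip(I, A))


A = (220.0, 220.0, 220.0)
J_true = (40.0, 90.0, 160.0)
for t in (0.5, 0.2, 0.05):
    I = tuple(j * t + a * (1.0 - t) for j, a in zip(J_true, A))  # Eq. (1)
    for t0 in (0.1, 0.5):
        J = recover_radiance(I, A, t, t0)
        print(f"t = {t:.2f}, t0 = {t0:.1f}: J = {tuple(round(c, 1) for c in J)}")
```

Whenever t(x) ≥ t0, the clear radiance is recovered exactly. Where t(x) < t0 (the farthest regions), the result lies between I(x) and the true J(x), so some haze is deliberately retained; a larger t0 retains more of it.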

4 Experimental Results

To verify the effectiveness of the proposed dehazing method, we consider two criteria: (i) qualitative comparison and (ii) quantitative evaluation. All dehazing results are obtained with MATLAB R2008a on a PC with a 3.10-GHz Intel® Core™ i5-2400 CPU.

4.1 Qualitative Comparison

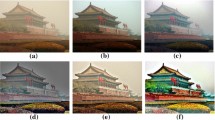

Figure 6 shows some examples of our dehazing results and the recovered scene transmission maps. Our method recovers rich image details with vivid colors in the hazy regions. The image contrast and detail are greatly improved compared with the original foggy images, especially in distant regions with dense fog. The transmission map also reflects the density of the haze in the captured scene. As Fig. 6 shows, the transmissions estimated by our method agree well with intuition.

We also compare our method with several existing state-of-the-art methods. Figure 7 compares our method with Tan's work [7]. Tan's method can enhance image details and greatly improve the visual effect. However, the colors in its recovered images are often oversaturated, since the method is not physically based and may therefore underestimate the transmission. For example, the traffic sign in Fig. 7 turns orange after dehazing with Tan's method, while our result has no such problem. Moreover, significant halo artifacts usually appear around recovered sharp edges (e.g., trees). The proposed method improves the visibility of image structures in very dense haze regions while restoring natural colors, and the halo artifacts in our results are also less pronounced, as shown in Fig. 7.

In Fig. 8, we compare our approach with Fattal's method [6]. Fattal's method can produce visually pleasing results. However, it is based on statistics and requires sufficient color information and variance. When the haze is dense, the color information is insufficient for the method to reliably estimate the transmission. For example, Fattal's result in Fig. 8 still contains some fog in distant regions. In comparison, our results are more visually pleasing, and the haze in the farthest regions of the image is largely removed.

Figure 9 compares our approach with Tarel et al.'s method [9]. Theirs is a filtering-based approach that estimates the atmospheric veil by applying a fast median filter to the minimum components of the observed image. The main advantage of the method is its speed. Its weakness is that the haze removal results always contain some halo artifacts and the colors appear somewhat unnatural. As shown in Fig. 9, the sky and the mountain appear too dark. Compared with Tarel's method, the colors of our result appear much more natural.

We also compare our method with He et al.'s work [8] in Fig. 10. Both methods produce comparable results in regions with heavy haze (e.g., the distant buildings). However, in regions with many depth jumps (e.g., trees and grass at close range), our method performs better. The colors in our result also appear more vivid, and our method tends to produce clearer image details, as illustrated in Fig. 10. These benefits come from incorporating a filter bank into image dehazing. The filters help exploit and enhance interesting image structures, e.g., jump edges and corners.

Figures 11 and 12 compare our results with four state-of-the-art visibility restoration algorithms: Tan [7], Fattal [6], Tarel [9] and He [8]. All the dehazing methods can effectively remove the haze in both near and far regions of the test images. Our results appear visually close to those of He, with better color fidelity and fewer halo artifacts than those of Tan. However, depending on the image, each algorithm makes a trade-off between color fidelity and contrast enhancement. Results on a variety of hazy and foggy images show that the proposed method achieves a better enhancement effect in terms of both image color and the outlines of scene objects.

4.2 Quantitative Evaluation

To measure the dehazing effect, we use the assessment method dedicated to visibility restoration proposed in [16]. We first convert the color images to grayscale. We then compare two grayscale images, the input image and the dehazed image, using three indicators. The visible edges in the images before and after restoration are selected with a 5 % contrast threshold. This threshold follows the definition of meteorological visibility distance proposed by the International Commission on Illumination (CIE). To implement this definition of contrast between two adjacent regions, we use the visible-edge segmentation method proposed in [17].

Once the map of visible edges is obtained, we compute the rate e of edges newly visible after restoration. Next, we compute \( \bar{r} \), the mean over these edges of the ratio of the gradient norms after and before restoration. This indicator estimates the average visibility enhancement achieved by the restoration algorithm. Finally, we compute the percentage σ of pixels that become completely black or completely white after restoration. Since the assessment method is based on the definition of visibility distance, its conclusions are consistent with the characteristics of human vision.
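Given the visible-edge maps, the three indicators reduce to simple counts. The Python sketch below makes the following assumptions:

- The edge segmentation of [17] and the gradient-norm maps have already been computed; they are inputs here.
- e = (n_r − n_o)/n_o, where n_o and n_r are the numbers of visible edges before and after restoration.
- \( \bar{r} \) is the geometric mean of the gradient ratios over the edges visible after restoration, which we believe is the form used in [16].
- σ is returned as a fraction; multiply by 100 for a percentage.

The function name is hypothetical.

```python
import math


def visibility_indicators(edges_before, edges_after, grad_before, grad_after, restored):
    """Return (e, r_bar, sigma) for one image pair.

    edges_before/edges_after: boolean maps of visible edges (assumed given).
    grad_before/grad_after:   gradient-norm maps of the input and restored images.
    restored:                 restored grayscale image with values in 0..255.
    """
    n_o = sum(v for row in edges_before for v in row)
    n_r = sum(v for row in edges_after for v in row)
    if n_o == 0:
        raise ValueError("no visible edges in the input image")
    e = (n_r - n_o) / n_o  # rate of newly visible edges

    # Geometric mean of gradient ratios over edges visible after restoration,
    # skipping pixels where a gradient is zero (ratio or log undefined).
    logs = [math.log(ga / gb)
            for e_row, ga_row, gb_row in zip(edges_after, grad_after, grad_before)
            for is_edge, ga, gb in zip(e_row, ga_row, gb_row)
            if is_edge and gb > 0 and ga > 0]
    r_bar = math.exp(sum(logs) / len(logs)) if logs else float("nan")

    # Fraction of pixels saturated to pure black or pure white.
    h, w = len(restored), len(restored[0])
    n_s = sum(1 for row in restored for v in row if v <= 0 or v >= 255)
    sigma = n_s / (h * w)
    return e, r_bar, sigma


e, r_bar, sigma = visibility_indicators(
    edges_before=[[True, False], [True, False]],
    edges_after=[[True, True], [True, False]],
    grad_before=[[1.0, 2.0], [1.0, 1.0]],
    grad_after=[[2.0, 8.0], [8.0, 1.0]],
    restored=[[0, 128], [255, 64]],
)
print(e, round(r_bar, 6), sigma)
```

In this toy example, one new edge appears (e = 0.5), and the edge gradients grow by a factor of 4 on geometric average (ratios 2, 4, and 8). Two of the four pixels are saturated (σ = 0.5).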

These indicators e, \( \bar{r} \) and σ are evaluated for Tan [7], Fattal [6], Tarel [9], He [8] and our method on six images (see Table 1). For each method, the aim is to increase the contrast without losing visual information. Good results are therefore characterized by high values of e and \( \bar{r} \) and a low value of σ. Table 1 shows that, depending on the image, Tan's algorithm generally yields more visible edges than our method and Tarel's, Fattal's and He's algorithms. With respect to the average increase of contrast on visible edges, the five algorithms can generally be ranked in decreasing order as Tan, our method, Fattal, Tarel and He. This confirms our observations on Figs. 7, 8, 9, 10, 11 and 12. Table 1 also gives the percentages of pixels that become completely black or white after restoration. Compared with the other methods, the proposed algorithm generally gives the smallest percentage.

Computation time is also an important criterion for evaluating algorithm performance. For an image of size \( s_x \times s_y \), the fastest algorithm is Tarel's method, whose complexity is \( O(s_x s_y) \), i.e., linear in the number of input pixels. Only 2 s are needed to process a 600 × 400 image. He's method has a relatively high time complexity because its matting Laplacian matrix L is huge: for an image of size \( s_x \times s_y \), L is of size \( s_x s_y \times s_x s_y \). As a result, 20 s is needed to process a 600 × 400 image. Fattal's and Tan's methods take even longer than He's: about 40 s and 5 to 7 min, respectively, for a 600 × 400 image. Our method is comparatively fast, requiring 17 s for an image of the same size. This could be further improved with a GPU-based parallel implementation.
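As a quick worked check of the sizes quoted above (simple arithmetic, not additional measurements), for a 600 × 400 image:

\[ s_x s_y = 600 \times 400 = 2.4 \times 10^{5}, \qquad (s_x s_y)^2 = \bigl(2.4 \times 10^{5}\bigr)^2 = 5.76 \times 10^{10} \]

Tarel's \( O(s_x s_y) \) cost therefore scales with the \( 2.4 \times 10^{5} \) pixels. He's matting Laplacian would have \( 5.76 \times 10^{10} \) entries if stored densely. In practice L is sparse, because each pixel is linked only to pixels in the small windows around it. Even so, the dimension of the linear system to be solved helps explain the gap between the two running times.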

5 Conclusion and Future Work

In this paper, we have proposed an efficient method to remove haze from a single image. Our method benefits greatly from exploiting a priori image geometry of the transmission function. This prior, together with an edge-preserving filter, is applied to recover the unknown transmission. Experimental results show that, compared with state-of-the-art methods, our method generates visually pleasing results with finer image details and structures.

However, single image dehazing is not an easy task, since it often suffers from an ambiguity between image color and depth. Because of the haze, a clean pixel may have the same color as a fog-contaminated pixel. Without sufficient priors, it is therefore difficult to reliably determine whether such pixels are fog-contaminated. This ambiguity reflects the under-constrained nature of single image dehazing and often leads to excessive or inadequate enhancement of scene objects. In this paper, a priori image geometry is therefore adopted to estimate the transmission map from the radiance cube of an image. It imposes only a weak constraint on the dehazing process. Even so, when combined with the edge-preserving filter, it proves effective at removing fog from most natural images. Another way to address the ambiguity problem is to adopt stronger constraints or develop new image priors. In the future, we therefore intend to use scene geometry or directly incorporate available depth information into the estimation of scene transmission.

References

Schechner, Y.Y., Narasimhan, S.G., Nayar, S.K.: Instant dehazing of images using polarization. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), pp. 325–332 (2001)

Narasimhan, S.G., Nayar, S.K.: Vision and the atmosphere. Int. J. Comput. Vision 48(3), 233–254 (2002)

Narasimhan, S.G., Nayar, S.K.: Contrast restoration of weather degraded images. IEEE Trans. Pattern Anal. Mach. Intell. 25(6), 713–724 (2003)

Shwartz, S., Namer, E., Schechner, Y.Y.: Blind haze separation. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1984–1991 (2006)

Kopf, J., Neubert, B., Chen, B., Cohen, M., Cohen-Or, D., Deussen, O., Uyttendaele, M., Lischinski, D.: Deep photo: model-based photograph enhancement and viewing. In: ACM SIGGRAPH Asia, pp. 116:1–116:10 (2008)

Fattal, R.: Single image dehazing. In: ACM SIGGRAPH, pp. 72:1–72:9 (2008)

Tan, R.T.: Visibility in bad weather from a single image. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1–8 (2008)

He, K., Sun, J., Tang, X.: Single image haze removal using dark channel prior. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1956–1963 (2009)

Tarel, J.P., Hautiere, N.: Fast visibility restoration from a single color or gray level image. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2201–2208 (2009)

Kratz, L., Nishino, K.: Factorizing scene albedo and depth from a single foggy image. In: IEEE International Conference on Computer Vision (ICCV), pp. 1701–1708 (2009)

Gibson, K.B., Vo, D.T., Nguyen, T.Q.: An investigation of dehazing effects on image and video coding. IEEE Trans. Image Process. 21(2), 662–673 (2012)

Middleton, W.: Vision through the atmosphere. University of Toronto Press, Toronto (1952)

Meng, G.F., Wang, Y., Duan, J.Y., Xiang, S.M., Pan, G.H.: Efficient image dehazing with boundary constraint and contextual regularization. In: IEEE International Conference on Computer Vision (ICCV), pp. 617–624 (2013)

Bao, L.C., Song, Y.B., Yang, Q.X., Ahuja, N.: An edge-preserving filtering framework for visibility restoration. In: 21st International Conference on Pattern Recognition (ICPR), pp. 384–387 (2012)

Tomasi, C., Manduchi, R.: Bilateral filtering for gray and color images. In: IEEE International Conference on Computer Vision (ICCV), pp. 839–846 (1998)

Hautiere, N., Tarel, J.P., Aubert, D., Dumont, E.: Blind contrast enhancement assessment by gradient ratioing at visible edges. Image Anal. Stereol. J. 27(2), 87–95 (2008)

Kohler, R.: A segmentation system based on thresholding. Comput. Graph. Image Process. 15(3), 319–338 (1981)

Acknowledgements

This work was supported by the National Natural Science Foundation of China (61502537), and the Postdoctoral Science Foundation of Central South University (No. 126648).


Copyright information

© 2016 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Guo, F., Zhu, C. (2016). Single Image Haze Removal Based on Priori Image Geometry and Edge-Preserving Filtering. In: Tan, T., Li, X., Chen, X., Zhou, J., Yang, J., Cheng, H. (eds) Pattern Recognition. CCPR 2016. Communications in Computer and Information Science, vol 663. Springer, Singapore. https://doi.org/10.1007/978-981-10-3005-5_3


DOI: https://doi.org/10.1007/978-981-10-3005-5_3


Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-3004-8

Online ISBN: 978-981-10-3005-5

eBook Packages: Computer Science, Computer Science (R0)