Abstract

Starting from a definition of risk governance, the paper examines a number of environmental contingencies and crises where traditional risk assessments based on expert calculations and computer models proved inadequate. While the growing inclusion of uncertainty in scientific descriptions and forecasts is recognized, the tendency to express it in probabilistic terms is criticized because it fails to account for the complexity of natural phenomena and their interactions with human actions. The paper argues that contributions from different disciplines and other types of knowledge need to be integrated to improve disaster prevention and response. This implies a redefinition of the role of science in society as well as a general change of attitude, revisiting Descartes’ idea of humans as “masters and possessors of nature”.

1 Defining and Contextualizing Risk Governance

The term “governance”, which was once used in restricted disciplinary or professional circles to denote areas of common theoretical or practical interest (e.g. corporate governance), has gradually been extended in scope, becoming common in use but, I suspect, not in understanding. A trendy term does not necessarily denote a new concept generating innovative practices. Some new users adopt the word as an unnecessary and inappropriate substitute for government, others as a declaration of intent, a statement of principles. For example, in its White Paper, the European Commission (CEC 2001) refers to “European governance” as the rules, processes and behavior that affect the way in which powers are exercised at European level, particularly as regards openness, participation, accountability, effectiveness and coherence. It also adds that these five “principles of good governance” reinforce those of subsidiarity and proportionality. In this case the term acquires a positive connotation (good governance), indicating a path away from obsolete or unsatisfactory practices, but its explanation seems to me rather contorted and somewhat circular.

Here I will adopt the 1995 definition of the Commission on Global Governance, whose main merit is that it is stated in comprehensible and unambiguous terms, thus facilitating either agreement or disagreement with its content. “Governance is the sum of the many ways individuals and institutions, public and private, manage their common affairs. It is a continuing process through which conflicting or diverse interests may be accommodated and cooperative action may be taken. It includes formal institutions and regimes empowered to enforce compliance, as well as informal arrangements that people and institutions either have agreed to or perceive to be in their interest.” (Commission on Global Governance 1995, p. 2).

This is a very comprehensive characterization, which includes multiple social actors and diverse social practices and applies to different levels of aggregation, ranging from small interest groups to the international arena. It also acknowledges the presence of both conflict and cooperation in human endeavours and incorporates the idea of ongoing change in the design and implementation of strategies for managing “common affairs” in a democratic setting. The definition also has the advantage of being perfectly applicable, with virtually no change, to a wide range of fields and topics, including risk. Thus “risk governance” can be described as the various ways in which all interested parties manage their common “risk affairs”, more specifically, for the purposes of this book, those related to the environment.

2 From Expert Calculations to Integrated Approaches

Risk is conceived technically as something that can be calculated and expressed quantitatively, most commonly in probabilistic terms. Risk assessment styles differ according to the issues at hand and the disciplinary fields involved, but they are all based on calculations that are expected to produce scientifically sound results, ideally applicable to policy, management and communication. The distinction between risk assessment and risk management was traditionally based on the supposedly purely scientific nature of the former versus the political and value-laden character of the latter. Risk communication, the last phase of a linear process, was customarily devoted to correcting the distorted perceptions of lay people, unable or unwilling to accept the verdict of the experts.

It is now a long time since a number of scientists engaged in risk assessment recognized the uncertainties involved in the endeavour, thus entering a debate about the relation between facts and values that in previous decades had been restricted to philosophers of science (e.g. Rudner 1953). This new awareness is well represented by the nuclear physicist Alvin Weinberg, who coined the term trans-science (Weinberg 1972) to describe problems that can be expressed scientifically but cannot be solved scientifically. In the same year Harvey Brooks (1972), then dean of Harvard’s school of Engineering and Applied Sciences, argued that trans-science applied not only to normal scientific practices (laboratory and field experiments) but also to the then new techniques of computer simulation modelling. Some years later, the notion of trans-science was widely popularized by William Ruckelshaus (1984), who had served as administrator of the Environmental Protection Agency (EPA) when it was created in 1970 and again in the early 1980s. He claimed that most of the problems he had to face during his tenure shared these characteristics.

Although positions remained distant and often incompatible (Jasanoff 1987), an open discussion of the role of scientific inputs in policy decisions progressively came to be perceived as both legitimate and urgent. Moreover, it was not limited to risk issues but moved across disciplinary fields and policy issues to embrace the overall relation between science and society. By now a very rich literature exists on themes such as the past and present co-evolution of science and the Modern State, the rational-actor paradigm in decision-making and its limitations, and the use of science and expertise in legislative, judicial and administrative contexts (Jasanoff 2004; Tallacchini 2005; Cranor 2006; Wynne et al. 2007). Much of it points to the problematic nature of expert advice and highlights the differences between curiosity-driven research and mandated (Salter 1988) or issue-driven science (Funtowicz and Ravetz 2008), where the criterion of quality shifts from truth or Popperian falsification to robustness (Nowotny et al. 2001).

The relatively new expression “risk governance” seems to reflect this novel state of affairs, in which experts must justify their theoretical assumptions and technical procedures when they present risk assessment results and illustrate their implications for policy. Moreover, they must defend their advice or testimony in public, facing questions about both the results and the methods of their work. In other words, science no longer “speaks truth to power” (Wildavsky 1979); rather, it is one among many legitimate perspectives and inputs in policy processes. This of course doesn’t mean that anyone can come to the forum advancing all kinds of ideas. It simply means that expert contributions, usually expressed quantitatively, are not readily accepted as objective facts but are carefully scrutinized, starting from the criteria that were considered in the very framing of the problem (health, economy, ecology, ethics, etc.) and the weights assigned to them.

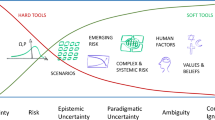

Appraisal, analysis, management, communication, and education remain separate activities, but none of them can be performed in isolation by an expert group without being exposed to public scrutiny and deliberation. Moreover, none of them can be performed based only on objective facts, which are now seen as inevitably conditioned by value judgments. As Funtowicz and Ravetz put it in their characterization of Post Normal Science, “In the sorts of issue-driven science relating to the protection of health and the environment, typically facts are uncertain, values in dispute, stakes high, and decisions urgent. The traditional distinction between ‘hard’, objective scientific facts and ‘soft’, subjective value-judgments is now inverted. All too often, we must make hard policy decisions where our only scientific inputs are irremediably soft.” (Funtowicz and Ravetz 2008).

If this claim is correct, as I will try to illustrate, the idea of risk governance cannot be reduced to calculable quantitative risk but must be interpreted broadly, as referring to situations characterized by uncertainty, even ignorance, and complexity, implying a plurality of irreducible perspectives.

3 Complex Systems and Risk Surprises

A quarter of a century ago, the German sociologist Ulrich Beck coined the term Risikogesellschaft in the book of the same title, which became very popular when its English translation, Risk Society, was published (Beck 1986/1992). Since then, ideas that were previously aired only in restricted circles have become broadly discussed, first and foremost those concerning the unforeseen negative effects of technologies intended to improve our quality of life and/or to respond to environmental challenges.

The existence of risks generated by technologies that were not purposely designed to be aggressive (as in the military field) had already been experienced through a long list of accidents, Seveso in 1976 and Bhopal in 1984 being just two of those that captured wide public attention and also influenced the regulation of “major-accident hazards” in Europe from the early 1980s onwards. The debate on “manufactured risks” (Giddens 1999) became progressively more reflexive, questioning not only experts’ capacity to control supposedly well-known technological systems, but the very possibility of understanding their overall functioning, including their interactions with the natural world.

Coming from organizational studies, the sociologist Charles Perrow contributed greatly to promoting the idea that complex systems cannot be addressed with the analytical and practical tools used for simple ones. In his influential book Normal Accidents (Perrow 1984/1999) he claimed that with “high risk technologies”, i.e. systems characterized by high complexity and tight coupling, accidents are inevitable, though not necessarily frequent. Discrete failures can interact in unexpected and unrecognized ways and spread from one part of the system to another, possibly leading to its breakdown before those in charge are even able to detect the origin of the problem and the ways in which it escalated. Redundancy, which is built into well-designed systems so that a single fault doesn’t prevent their functioning, also increases their complexity and consequently their vulnerability. To illustrate his thesis Perrow discusses a number of accidents in many sectors, including chemical, petrochemical and nuclear plants, air and marine traffic, dams, mines, etc.

In the early 1990s the term “natech” was coined to denote natural events that trigger technological emergencies, and it has since entered the vocabulary of analysts and practitioners (Showalter and Fran Myers 1992; Steinberg et al. 2008; Menoni and Margottini 2011). The list of “natechs” is endless, as virtually any severe natural event that impacts on a human system has the potential to disrupt its technological devices, including domestic appliances, industrial equipment, lifelines, etc. And the more technologically advanced the system, the greater the damage potential. The failure of the Fukushima Dai-ichi nuclear plant following the earthquake and tsunami that struck Japan on 11 March 2011 is one of the most recent dramatic examples of what can happen in a highly industrialized and technologically advanced society. Interestingly enough, in a book published four years before that catastrophe, Perrow provided a very detailed description of a similar possible failure, based also on chronicles of poor maintenance, lack of foresight and culpable negligence in the US, where accidents were sometimes avoided by pure luck (Perrow 2007).

Another very instructive example dates from spring 2010, when the eruption of the Icelandic volcano Eyjafjallajökull disrupted air traffic over several weeks. Besides confirming the disquieting power of nature, that episode showed how tightly interconnected the world we live in is, and how vulnerable we all are, regardless of where a crisis starts. The technological transport system that daily moves millions of people and enormous quantities of goods from one part of the globe to another was brought to a halt by something happening on an island at a remote latitude on the atlas, possibly absent from the mental maps of a large majority of people. Finding everyday consumer products on supermarket shelves could no longer be taken for granted: consumers in Europe, for example, started to realize that coffee is not produced at their latitudes, while Ecuadorian growers of the long-stemmed roses favored by Russian buyers worried about being unable to ship them to their destination. Those travelling for work or leisure suddenly found it pointless to calculate distance in hours of flight. Reverting to a metric of miles meant experiencing one’s destination as not only more remote, but also no longer assured.

Taking inspiration from Perrow (1984) and reflecting on episodes such as those just mentioned, it seems advisable to start conceptualizing the world as a complex, tightly coupled system, where unexpected or unforeseen interactions between apparently separate units may generate high-risk surprises. Natural systems are in themselves extremely complex, and our capacity to understand them (to say nothing of controlling them) is limited rather than increased by our ever more pervasive interference (accidental or carefully designed) with their functioning. Indeed, such interventions cannot but increase system complexity and consequently amplify our ignorance.

4 Recognizing Uncertainty and Ignorance

Climate change is a paradigmatic example of the pitfalls involved both in problem framing and in the collection of indisputable evidence. Originally framed in terms of atmospheric chemistry, the issue was gradually redefined as a multidimensional one, with anthropic pressure as a key feature. It followed that the contributions of a number of disciplines and a multiplicity of expertise were required for its understanding and effective management. In a process perceived as urgent, controversy between the parties grew ever harsher, with a polarization of positions that has been simplistically described (sometimes by the contenders themselves) as a confrontation between two opposing factions: believers vs. skeptics. Many of the stakeholders have characterized the issue as one of Post-Normal Science, as there is no way to solve the problem technically and controversy revolves mainly around conflicting values and the treatment of the “uncertainty monster” (Van der Sluijs 2005), i.e. around how to deal with “the confusion and ambiguity associated with knowledge versus ignorance, objectivity versus subjectivity, facts versus values, prediction versus speculation, and science versus policy.” (Curry and Webster 2011).

Recently, the IPCC addressed the issue of extreme events and disasters in relation to climate change. In the summary for policy makers (IPCC 2011) two metrics are used to communicate the degree of certainty in key findings, the first expressed in qualitative terms, the second in quantitative ones. Literally: (a) “Confidence in the validity of a finding based on the type, amount, quality, and consistency of evidence (e.g., mechanistic understanding, theory, data, models, expert judgment) and the degree of agreement.” and (b) “Quantified measures of uncertainty in a finding expressed probabilistically (based on statistical analysis of observations or model results, or expert judgment)” (IPCC 2011, p. 16). Degrees of confidence are illustrated by different shades of grey in a two-way table with evidence on the x axis and agreement on the y axis. Levels of certainty, instead, are summarized in a template in which seven verbal expressions are coded into seven probability intervals. Thus, for example, the top extreme, “virtually certain”, indicates that an outcome has a 99–100 % probability; the lowest, “exceptionally unlikely”, that it has a 0–1 % probability. In the middle of the continuum, the expression “about as likely as not” signals a 33–66 % probability. These equivalences bring uncertainty back into the domain of risk, ignoring Knight’s distinction between the two, which rests precisely on the possibility of quantifying the latter but not the former (Knight 1921). Moreover, as Risbey and O’Kane have already remarked, ignorance is left out of the picture. “Ignorance”, they write, “is an inevitable component of climate change research, and yet it has not been specifically catered for in standard uncertainty guidance documents for climate assessments” (Risbey and O’Kane 2011, p. 755).
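
The logic of such a template can be made concrete with a small sketch in Python. It is a hypothetical illustration, not an IPCC tool: the names `LIKELIHOOD_SCALE` and `to_probability_interval` are invented here, and only the three verbal terms quoted above are encoded, the remaining four being left out rather than guessed. What the sketch shows is structural: every recognized term collapses into a numerical interval, and anything outside the list has no place at all.

```python
from typing import Dict, Optional, Tuple

# A probability interval as (lower bound, upper bound), both in [0, 1].
Interval = Tuple[float, float]

# Hypothetical, partial encoding of the IPCC (2011) likelihood template.
# Only the three terms quoted in the text are included; the other four
# are deliberately omitted rather than reconstructed.
LIKELIHOOD_SCALE: Dict[str, Interval] = {
    "virtually certain": (0.99, 1.00),
    "about as likely as not": (0.33, 0.66),
    "exceptionally unlikely": (0.00, 0.01),
}


def to_probability_interval(term: str) -> Optional[Interval]:
    """Map a calibrated verbal expression to its probability interval.

    Matching ignores case and surrounding whitespace. Any term outside
    the template yields None: the scheme codes quantified uncertainty,
    but has no value for ignorance.
    """
    return LIKELIHOOD_SCALE.get(term.strip().lower())


if __name__ == "__main__":
    for phrase in ("Virtually certain", "about as likely as not", "unforeseen interaction"):
        print(phrase, "->", to_probability_interval(phrase))
```

Asked about “virtually certain”, the lookup returns `(0.99, 1.0)`; asked about an “unforeseen interaction”, it returns `None`. Ignorance simply has no slot in a scheme of this kind.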

The quantification of uncertainty in terms of probability remains a rather arbitrary operation, whereas the main contribution of the report lies in its attempt to integrate “perspectives from several historically distinct research communities studying climate science, climate impacts, adaptation to climate change, and disaster risk management.” (IPCC 2011, p. 1). For example, many contributions from the social sciences are derived from individual case studies conducted with qualitative techniques of investigation, which neither produce data amenable to statistical treatment nor allow for extensive generalizations. Nonetheless, such contributions often provide descriptions and insights as important as those derived from data amenable to numerical treatment. Precisely because “Each community brings different viewpoints, vocabularies, approaches, and goals” (Ibidem), collapsing their findings into the language of probabilities conceals the irreducible nature of uncertainty, which is intrinsic to the phenomena under consideration, dynamic and subject to constant change.

More generally and from a pragmatic point of view, if action in risk matters is justifiable only in terms of predictions of the future, “legitimate doubt about the predictions may remain until they have been empirically verified, that is, when it is too late” (Funtowicz and Strand 2011, p. 2). The authors thus call for a different principle of legitimating public action, “decoupling the concept of responsibility from the aspirations of control over Nature and the future” (Funtowicz and Strand 2011, p. 1).

Since the early days of their involvement in disaster research, social scientists have addressed the reciprocal influences between humans and their environment. For most pioneers, the main concern was not the precise quantification of such interactions but the detection of clear signs of their existence, at a time when research on natural hazards was almost exclusively the domain of the physical sciences.

As early as 1942 the geographer Gilbert White, who later founded the Natural Hazards Center at the University of Colorado, wrote in his Ph.D. dissertation that “Floods are acts of God, but flood losses are largely acts of man”. Progress in theoretical speculation and empirical research prompted the idea that humans are to blame not only for the consequences, but sometimes also for the causes of disasters previously conceived as “natural”. Within the tradition of the sociology of disasters, scholars have always looked at natural and human phenomena as connected and conceived of them as a combination of hazard and vulnerability, both of which must be considered in promoting effective preparation and response (Quarantelli 1998). Nowadays, as the geographer James Mitchell has effectively put it, “Urban hazards and disasters are becoming an interactive mix of natural, technological, and social events” (Mitchell 1999, p. 484). This definition can be extended beyond the urban setting to which the author specifically refers, especially if one takes into account that the geographical and temporal space in which the perverse consequences of such combined events appear need not coincide with the one in which the triggering events occurred.

Many more examples could be added to those already mentioned, an instructive one being the Katrina disaster. Over several decades and possibly centuries the territory of New Orleans and its surroundings was misused and abused, so that its vulnerability to floods and hurricanes greatly increased (Colten 2005). Over the years the engineering system devised to protect the city became increasingly complex, owing to successive and often uncoordinated changes and additions, and increasingly obsolete, owing to lack of maintenance and scarce resources. A “normal accident” was inevitable in such a poorly understood, poorly managed, highly complex and tightly coupled system. The levee failures were the sudden manifestation of events long waiting to happen, so that the Katrina disaster was a “highly anticipated surprise” (Colten and De Marchi 2009).

Although scientific uncertainty about phenomena such as hurricanes has been considerably reduced and we have achieved good knowledge and an increased capacity for forecasting and monitoring, a great deal of ignorance still remains about the vulnerability of the system under threat, the myriad of its physical and human components and their multiple interactions. In spite of the massive resources invested in their prevention, disasters continue to cause extensive economic losses, disruption of ecological systems and huge suffering for individuals, communities and societies. We are still largely unprepared to face events that we have seen occur repeatedly for decades, and in some cases for centuries, such as hurricanes, earthquakes, volcanic eruptions, oil spills, chemical and nuclear accidents, etc. And yet we nourish the hope (or the illusion) that we can anticipate all possible occurrences through sophisticated models and precise calculations, and that we can prevent and repair damage with ever more advanced technologies.

5 Different Types of Knowledge

The considerations set out so far are not to be taken as statements against scientific research and technological development. On the contrary, they are an invitation to reconsider the context in which research and development presently take place, at a time when the most urgent problems seem to be ones of remediation: facing the perverse effects, the unwanted consequences of technological progress and economic development. As stated before, the present state of affairs seems incompatible with a framing of hazardous contingencies only in terms of calculable risks. If progress in knowledge has shed light on many previously obscure phenomena, it has also continually revealed new areas of ignorance, which are likely to be increased rather than reduced by our growing power to manipulate nature. The question is then how to act in the face of the irreducible uncertainty embedded in many present-day threats, that is, uncertainty that cannot be reduced by progress in research. If taming the “uncertainty monster” proves impossible, we must learn to live with it and recognize that it must be given an explicit place in tackling present risks (Van der Sluijs 2005). This change requires a deep revision, though definitely not the abandonment, of the beliefs that have accompanied the success of Western civilization for over three centuries. Whilst we can still subscribe to Descartes’ plea for acquiring knowledge which is useful for life (parvenir à des connaissances qui soient fort utiles à la vie), the time has come to revisit his idea of the purpose of such knowledge and at the same time to ask ourselves which kind of knowledge is presently useful for life. Can we still be sure, in the twenty-first century, that it is possible and desirable for us to become “masters and possessors of nature” (maîtres et possesseurs de la nature), as the French philosopher asserted in his 1637 Discours de la méthode (Descartes 1637)? Or should we reflect with humility on both our successes and failures and extract lessons for moving towards a desirable and sustainable future?

I maintain that some lessons might also be learned from a reconsideration of traditional, local knowledge, which is ignored and discredited when progress is equated with scientific discovery and technology-driven control. It would be a mistake to assume that local knowledge is necessarily contrary or alternative to scientific knowledge or, to put it the other way around, that the latter is contrary or alternative to the former. Although they are achieved by different means and may be grounded in different types of evidence, both provide clues to be taken into consideration in decisions about risk issues. They cannot always be reconciled, but the a priori dismissal of popular beliefs on the assumption that they have no scientific grounding is definitely to be avoided.

The very names of certain localities, for instance, recall that they proved either dangerous or safe places during past events such as floods or landslides (De Marchi and Scolobig 2012). A telling and tragic example is that of Monte Toc in the Italian Alps, the site of “the most deadly landslide in Europe in recorded history” (Petley 2008). In the local dialect the name of the mountain hinted at loose terrain and embodied knowledge gained through centuries of experience and oral transmission. Such knowledge was not dissimilar to that derived from the observations and calculations of the (few) geologists who advised against the construction of a huge dam in that location. Both went unheard, and on 9 October 1963 an enormous mass of material from the mountain slope slid into the Vajont reservoir, generating a wave of about 30 million cubic metres of water, which destroyed several villages, causing 2,500 deaths, immense economic and environmental damage, and everlasting grief. A mixture of technological hubris and selfish interests was thus at the origin of what might at first sight appear to be a “natech” disaster but on further reflection must be considered of a “techna” type. It was indeed because of the dam construction works that a landslide of gigantic proportions occurred, destroying everything … except the dam, which still stands, idle and useless, in a moonlike landscape.

There are many examples of the tendency of experts to ignore so-called lay knowledge (which is in fact a different kind of specialized knowledge), and I will mention just a few. In his now classic article about sheep farming in Cumbria after the Chernobyl nuclear accident, Brian Wynne (1996) shows how the experts sent by the government to assess the radioactive fallout failed to consider the shepherds’ knowledge of the composition of the local terrain and the grazing habits of the sheep. This resulted in inaccurate evaluations with negative repercussions on the local farming economy.

I learned of a similar case of lack of humility on the part of the experts when I was invited to a workshop at Værøy, in the Lofoten islands, a few years ago. After the sessions, I used to walk with fellow participants along a deserted runway to a trendy bar for a beer. The bar occupied the former check-in hall of the now disused local airport, whose story was summarised in a text hanging on a wall. It read approximately as follows: “The Værøy airport was built at Nordland despite strong warnings from local residents about the area being exposed to stormy weather, in particular strong gusts of wind along the mountainside. It was inaugurated in 1986, with great publicity. After 4 years, in 1990, a plane crashed, five people died and the Aviation Authority recommended the airport be closed. And it has remained closed since. Already during an Episcopal visitation in 1750, the bishop had noticed that braces had been placed on Værøy church’s northern wall in order to support it against the gusts from a terribly high mountain”. I saw the same text in other locations on the island, and the memory of both the accident and the warnings seemed vivid and widely shared.

Another example of the importance of local knowledge, in this case for preventing damage, was brought to public attention after the tsunami that hit Japan in March 2011 by a journalist who reported the existence of a number of stone tablets on the hillsides along the coast. “Carved on their face”, he writes, “are stark warnings such as: ‘Do not build your homes below this point’, or ‘seek higher ground after a strong earthquake’. All such tablets are over a century old and most were erected after a tsunami that killed 22,000 people in 1896” (Fackler 2011).

Present lifestyles, including increased mobility, tend to make traditional knowledge less and less important, not so much with regard to its content (as the examples above show) but because of the ways it is usually transmitted, i.e. orally from one generation to the next or through written documents of limited and informal circulation. Yet, even when the original witnesses of past occurrences are no longer there and their heirs have moved away, there is room for the social sciences and the humanities to use their tools of investigation not only for exploring present attitudes, perceptions and behaviors, but also for digging into the past, interrogating ancient chronicles and testimonies.

6 Implications for Risk Communication

Attention to different types of knowledge and the inclusion of multiple perspectives in the management of “common risk affairs” will confirm the multi-faceted nature of the issues at hand and reveal the impossibility of doing away with uncertainty and ignorance. Different needs, interests and understandings are very unlikely to be easily reconciled, and even less likely to be reconciled on the basis of quantitative assessments and numerical calculations. We have to live with the awareness that there are no simple solutions to complex problems. Indeed, we have to become suspicious of simple solutions, as they might be the right answers to the wrong questions.

A change of attitude towards the “uncertainty monster” will have significant implications for risk governance, including risk communication. As to the latter, approaches framed in terms of calculable risks have implied a progressive opposition between a superior and an inferior form of knowledge (or perhaps between knowledge and superstition), as well as between competent experts and ignorant lay citizens. As Mary Douglas observed, in risk analysis a great effort was put into “trying to turn uncertainty into probabilities” (Douglas 1985, p. 42). And the idea of calculable risks goes hand in hand with that of expert systems (Giddens 1990), which can regulate and control them.

Ian Hacking has asked what circumstances made it possible for probability, “discovered”, studied and partially formalized centuries before, to become so widely applied in the nineteenth century. The systematic collection of statistical data, he answered, made it possible to find regularities in a world where the deterministic vision had been progressively eroding, opening up the frightening possibility of a lawless world dominated by chance. Statistics and probabilities were applied extensively to both natural and human phenomena, which were thus brought “under the control of natural or social law” (Hacking 1990, p. 10).

Frightening as it might have been, the thought of having to deal with chance prompted observation of and attention to environmental signals, and promoted collaboration for alerting and protecting those exposed to it. Until not so long ago, even at our latitudes, different tones of the church bells informed people of impending dangers and of the need to take previously arranged actions. Alert systems are nowadays much more sophisticated, often to the point of being transformed into expert systems not directly accessible to the general public. Consequently, their usefulness depends on the capacity of specialized disaster management organizations to translate digital warnings instantly into practical information and advice, and to transmit them to those at risk. Whereas successful transmission depends largely on technical factors, effective translation requires the sharing of linguistic and cultural codes, which frame reciprocal expectations about personal engagement, agency, trust, and responsibility.

Recent research has shown that people’s personal involvement in risk prevention activities is related to their perception of expert systems, broadly defined. Among residents exposed to flood risk, a strong tendency was observed to overlook personal protection measures and to delegate responsibility for safety to appointed emergency services (Kuhlicke et al. 2011; De Marchi and Scolobig 2012). Thus, paradoxically, the more efficient professional organizations are, the more people conceive of risk prevention as someone else’s business, consequently becoming more vulnerable and dependent on external aid. Similarly, the existence of structural devices (dams, levees, barriers, etc.) tends to discourage compliance with non-structural measures, such as regulations and restrictions on land use. By their very presence, engineering works convey the message that a technological fix is possible, that danger can be eliminated once translated into calculable and manageable risk. Once this passage is internalised, it is difficult to accept, or even to understand, the existence of what the experts call “residual risk”, an expression which prompts associations with concepts and experiences that people were encouraged to forget, such as danger, fear, uncertainty and lack of control. Engineering artifacts perform not only a material function (e.g. containing a river) but also a communicative one, symbolizing the control of humans over nature.

Of course the point is not to do away with dedicated agencies or engineering solutions, but to combine them with citizens’ awareness and preparedness. In this endeavor, traditional knowledge about local dangers, and the caution associated with it, would prove precious, had they not been largely dispelled by the language of risk, as well as by changes in demography, mobility and lifestyles.

When notions of danger, uncertainty, and ignorance are exorcised, as has long been the case, the collapse of levees and dams and the accidental releases of dangerous substances from laboratories, chemical or nuclear sites not only cause physical harm, but also undermine confidence in expert systems and diminish trust in designers, regulators and managers. As Nobel laureate Joseph Stiglitz recently put it, “Experts in both the nuclear and finance industries assured us that new technology had all but eliminated the risk of catastrophe. Events proved them wrong: not only did the risks exist, but their consequences were so enormous that they easily erased all the supposed benefits of the systems that industry leaders promoted.” (Stiglitz 2011).

7 Which Tasks? Whose Responsibility?

Recent major disasters, such as the Deepwater Horizon oil spill in 2010 and the Fukushima Dai-ichi nuclear accident in 2011, clearly showed that high-technology systems can fail due to inappropriate design coupled with a “lack of imagination” in foreseeing likely future contingencies. They also dramatically revealed that when something goes wrong nobody knows what to do, except to reassure people that things are actually not that bad after all, as denounced by, among others, journalists Tom Diemer (2010) and Geoffrey Lean (2011).

On June 8th, 2010, seven weeks after the April 20 rig explosion that caused the still uncontained Gulf of Mexico oil spill, an irritated President Obama denounced on the Today show both BP’s attempts to minimize its consequences and the incompetence of the experts. As to the latter, he used a colorful expression which reveals his conception of their role and responsibilities: “We talk to these folks [experts] because they potentially have the best answers, so I know whose ass to kick” (Diemer 2010). President Obama’s expectation was that those who (should) know better are capable, if not of avoiding accidents, at least of understanding their dynamics and implementing effective containment measures.

In situations like these, the relation between scientific expertise and policy seems to shift from advice to blame, highlighting the key importance of the science-society co-evolution (Jasanoff 2004), as hinted in Sect. 9.2 of this chapter.

The contested characterization of this relation came up for public scrutiny even more dramatically in connection with a 2009 earthquake in Italy. The story is worth telling for its many and varied implications. On April 6th, an earthquake of moment magnitude (Mw) 6.3 devastated the city of L’Aquila, capital of the Abruzzo region, and some neighboring municipalities. More than 300 people were killed, about 1,600 injured and tens of thousands left homeless, with an estimated damage of some 10 billion euros.

The main shock had been preceded over the previous months by a large number of sporadic low-magnitude tremors (technically a seismic swarm), which had understandably alarmed the residents. In such a tense atmosphere, an unofficial warning by a technician formerly working in a laboratory of the National Research Council captured wide media attention. On the basis of radon measurements he had performed, he insisted that a major earthquake was soon going to occur. This outraged the then head of the Civil Protection Department, Guido Bertolaso, who reported him to the authorities for spreading alarming news and subsequently convened a meeting of the Commissione Grandi Rischi (Major Risks Commission), a consultative body of the National Service for Civil Protection composed of experts in seismic, volcanic, hydrological and other risks (see Note 1). The meeting took place on March 31st in L’Aquila and was followed by a press conference at which no specific protective measures were suggested to citizens, while it was reaffirmed that no scientifically sound method exists to predict earthquakes. Journalists and residents were repeatedly reassured that the seismic situation in L’Aquila was normal, and actually favorable because of the continuous discharge of energy due to the seismic swarm (Hall 2011).

Following the major earthquake, which occurred just a week later, some relatives of the victims brought five members of the Commission and two government officers to court on charges of multiple manslaughter (omicidio colposo plurimo) and injuries, for failing to provide complete and precise information which might have saved many people’s lives. In particular, the defendants were accused of not having taken into account (or duly communicated) the elements of risk derived, for example, from the state of vulnerable buildings, including public ones, which could and should have been closely monitored and possibly evacuated. The trial began in late September 2011 and about a year later the seven defendants were sentenced to six years in prison and to pay substantial compensation to the victims. The verdict has not been applied, pending the outcome of the appeal presented by the defendants.

This affair has at least two points of major relevance to the topics discussed in this paper, one very specific and one more general. The first concerns the question that was posed (explicitly or implicitly) to the Commissione Grandi Rischi. Certainly it was not about the possibility of a short-term prediction, as the negative answer was already known and it would not have been necessary to consult the Commission in that respect. Was it, then, about the measures to be taken to minimize the potential damage to local residents? Or was it, as some circumstances seem to indicate, about ways of reducing their psychological stress after months of minor tremors? In other words, was the problem framed in terms of public safety or of public control? Some relatives of the victims now claim that, because they believed the official information (science speaking truth to power), they and their loved ones failed to apply the protective measures that had been transmitted from one generation to the next as part of the local culture of a seismic area.

The question arises whether, for saving lives, fear of an impending, unpredictable danger may be more effective than belief in scientific probabilistic assessments. And indeed fear is a mechanism that keeps animals (including humans) alert in case of danger, as opposed to panic, which triggers life-threatening behaviours. Unfortunately, avoiding panic is such a major concern for public authorities that, as sociologists discovered long ago, they often fail to make the appropriate distinctions and direct their attention the wrong way (Quarantelli and Dynes 1972).

The second point worth exploring concerns the tasks and responsibilities of scientists when appointed as advisers in matters of risk and safety. Their expertise is requested not simply to report on the present state of knowledge in their disciplinary field, which is moreover often incomplete or controversial, as was the case with seismic swarms in the example just discussed (Grandori and Guagenti 2009). Often they are expected to provide an informed opinion taking into account not only the evidence derived from their specific discipline, but also the contextual factors which favour or constrain different courses of action. This is not a one-person endeavor, and permanent communication needs to be established between various disciplines, forms of expertise and types of knowledge, including local knowledge. Certainly the ultimate responsibility for what is to be done does not rest with the scientists alone, but the quality of a decision cannot be evaluated separately from the process which led to it, and that process requires transparency and accountability.

In the case of the Deepwater Horizon mentioned above, we saw how Obama shifted register, from advice to blame. It is not surprising, then, that Willy Aspinall, professor in natural hazards and risk science at Bristol University, commenting on the L’Aquila case, recommends that seismologists (and other scientists as well) check their legal position before providing advice (Aspinall 2011).

Nowadays scientific inputs are key in virtually every area of policy, and scientists themselves claim not only that they must be heard, but also that their research must be publicly funded (be it in seismology, genetics or anything else). Their requests are advanced not on the grounds that research is interesting for the investigators themselves, but that it is useful for society at large. This implies that the demarcation between value-free scientific assessments and politically constrained decisions is often very fuzzy, and moreover suggests that “the scientist should consider a broadened professional role in which he or she is obliged to take on board the wider uncertainties in the professional’s decision-making role” (Faulkner et al. 2007, p. 696).

8 Conclusion

The governance of present-day risks requires the recognition of their complex nature and the awareness that they can be neither fully understood nor managed with traditional risk assessment tools. Like past civilizations, our advanced technological one must also come to grips with uncertainty and ignorance. The claim that “Integration of local knowledge with additional scientific and technical knowledge can improve disaster risk reduction and climate change adaptation” (IPCC 2011, p. 14) is now part of official documents and policy orientations.

The awareness of living in a dangerous world calls for personal and collective memory of past experiences and transfer mechanisms from one generation to the next, through oral transmission, written chronicles, symbols and signs enshrined in languages and dialects.

While revisiting our past to learn useful lessons, we must also realize that previous events and actions constrain our choices and ways forward. As a species, we have colonized the planet down to its most inhospitable areas and have grown exponentially in numbers, partly thanks to our ability to manipulate our environment. Land use has changed significantly worldwide (e.g. with the progressive abandonment of agriculture), redrawing the maps of vulnerability and inequality and exposing more and more people, especially the poor, to old and new risks.

As stated before, with increased social and geographical mobility, local knowledge often becomes obsolete and is no longer transmitted from one generation to the next. However, new “knowledge communities” have been emerging, made up of people who, despite physical distance, share similar concerns about risk issues. Any new disaster offers inputs for reflection on both past and future trends, as in the words of Iceland’s President Ólafur Grímsson after the Eyjafjallajökull volcano eruption. In an interview with The Sunday Telegraph he said: “In modern societies like Britain and Europe, there has been disengagement between people and nature. There has been a belief that the forces of nature can’t impact the functioning of technologically advanced societies … But, in Iceland, we learn from childhood that forces of nature are stronger than ourselves, and they remind us who are the masters of the universe.” (Sherwell and Sawer 2010).

Some three and a half centuries after Descartes’ death, we need to reconsider with some humility his (and our) conception of humans as the alleged “masters and possessors of nature”.

Notes

- 1.

On the official website of the Italian Civil Protection Agency, the Commissione Grandi Rischi (Major Risks Commission), short for Commissione Nazionale per la Previsione e Prevenzione dei Grandi Rischi (National Commission for Forecast and Prevention of Major Risks), is defined as the formal liaison structure (struttura di collegamento) between the National Service of Civil Protection and the scientific community. Its activities are of a techno-scientific and advisory type and include providing guidance in connection with the forecast and prevention of the different risk situations (attività consultiva, tecnico-scientifica e propositiva in materia di previsione e prevenzione delle varie situazioni di rischio).

http://www.protezionecivile.gov.it/jcms/it/commissione_grandi_rischi.wp

References

Aspinall, W. 2011. Check your legal position before advising others. Nature 477: 251. doi:10.1038/477251a. (Published online 14 September).

Beck, U. 1986. Risikogesellschaft. Frankfurt: Suhrkamp. (Risk society, towards a new modernity. London: Sage, 1992).

Brooks, H. 1972. Letter to the editor (Science and trans-science). Minerva 10: 484–486.

CEC (Commission of the European Communities). 2001. European governance. A white paper. COM (2001) 428 final. Brussels. 25 July 2001. http://eurlex.europa.eu/LexUriServ/site/en/com/2001/com2001_0428en01.pdf

Colten, C. 2005. An unnatural metropolis: Wresting New Orleans from nature. Baton Rouge: LSU Press.

Colten, C.C., and B. De Marchi. 2009. Hurricane Katrina: The highly anticipated surprise. In Città salute e sicurezza. Strumenti di governo e casi di studio, ed. M.C. Treu, 638–667. Sant’Arcangelo di Romagna: Politecnica Maggioli.

Commission on Global Governance. 1995. Our global neighbourhood. Oxford: Oxford University Press.

Cranor, C. 2006. Toxic torts: Science, law and the possibility of justice. Cambridge: Cambridge University Press.

Curry, J.A., and P. Webster. 2011. Climate science and the uncertainty monster. Bulletin of the American Meteorological Society 92(12): 1667–1682. doi:10.1175/2011BAMS3139.1.

De Marchi, B., and A. Scolobig. 2012. Experts’ and residents’ views on social vulnerability to flash floods in an Alpine region. Disasters 36(2): 316–337. doi:10.1111/j.1467-7717.2011.01252.x.

Descartes, R. 1637. Discours de la méthode. Sixième partie. http://www.webphilo.com/textes/voir.php?numero=453061240.

Diemer, T. 2010. Obama sought to know ‘whose ass to kick’ on oil spill. Politics Daily, June 8. http://www.politicsdaily.com/2010/06/08/obama-sought-to-know-whose-ass-to-kick-on-oil-spill/.

Douglas, M. 1985. Risk acceptability according to the social sciences. London: Routledge and Kegan Paul.

Fackler, M. 2011. Tsunami warnings, written in stone. New York Times online, April 20.

Faulkner, H., D. Parker, C. Green, and K. Beven. 2007. Developing a translational discourse to communicate uncertainty in flood risk between science and the practitioner. Ambio 36(7): 692–703.

Funtowicz, S., and J. Ravetz. 2008. Post-normal science. The encyclopedia of earth. http://www.eoearth.org/article/Post-Normal_Science.

Funtowicz, S., and R. Strand. 2011. Change and commitment: Beyond risk and responsibility. Journal of Risk Research 14(8): 995–1003. doi:10.1080/13669877.2011.571784.

Giddens, A. 1999. Risk and responsibility. Modern Law Review 62(1): 1–10.

Grandori, G., and E. Guagenti. 2009. Prevedere i terremoti: la lezione dell’Abruzzo. Ingegneria Sismica XXVI(3): 56–61. (With an extended English abstract, Earthquake Prediction. Lesson from l’Aquila Earthquake).

Hacking, I. 1990. The taming of chance. Cambridge: Cambridge University Press.

Hall, S.S. 2011. Scientists on trial: At fault? Nature 477: 264–269. doi:10.1038/477264a. (Published online 14 September).

IPCC. 2011. Summary for policymakers of the special report on managing the risks of extreme events and disasters to advance climate change adaptation (SREX). http://ipcc-wg2.gov/SREX and www.ipcc.ch

Jasanoff, S. 1987. Contested boundaries in policy-relevant science. Social Studies of Science 17(2): 195–230.

Jasanoff, S. (ed.). 2004. States of knowledge. The co-production of science and the social order. London/New York: Routledge.

Kuhlicke, C., A. Scolobig, S. Tapsell, A. Steinführer, and B. De Marchi. 2011. Contextualizing social vulnerability: Findings from case studies across Europe. Natural Hazards 58: 789–810.

Lean, G. 2011. The nuclear industry must understand that the unexpected can happen – Even in Britain. The Telegraph, April 5.

Menoni, S., and C. Margottini (eds.). 2011. Inside risk: A strategy for sustainable risk mitigation. Milan: Springer.

Mitchell, J.K. (ed.). 1999. Crucibles of hazard: Megacities and disasters in transition. Tokyo: United Nations University Press.

Nowotny, H., P. Scott, and M. Gibbons. 2001. Re-thinking science. Knowledge and the public in an age of uncertainty. Cambridge: Polity.

Perrow, Ch. 1984/1999. Normal accidents: Living with high-risk technologies. New York: Basic Books. Republished in 1999 with a new afterword and a postscript on the Y2K problem. Princeton: Princeton University Press.

Perrow, Ch. 2007. The next catastrophe: Reducing our vulnerabilities to natural, industrial, and terrorist disasters. Princeton: Princeton University Press.

Petley, D. 2008. The Vaiont (Vajont) landslide of 1963. The Landslide blog. www.landslideblog.org/2008/12/vaiont-vajont-landslide-of-1963.html. Last accessed July 2012.

Quarantelli, E.L. (ed.). 1998. What is a disaster? A dozen perspectives on the question. New York: Routledge.

Quarantelli, E.L., and R.R. Dynes. 1972. When disaster strikes (It isn’t much like what you’ve heard or read about). Psychology Today, 67–70.

Risbey, J.S., and T.J. O’Kane. 2011. Sources of knowledge and ignorance in climate research. Climatic Change 108: 755–773. doi:10.1007/s10584-011-0186-6.

Ruckelshaus, W. 1984. Risk in a free society. Risk Analysis 4: 157–162.

Rudner, R. 1953. The scientist qua scientist makes value judgments. Philosophy of Science 20(1): 1–6.

Salter, L. 1988. Mandated science: Science and scientists in the making of standards. Dordrecht: Kluwer Academic.

Sherwell, P., and P. Sawer. 2010. Volcano crisis: Why did they land us in a mess like this? Interview with Iceland President Ólafur Grímsson. The Sunday Telegraph, April 24 (published online).

Showalter, P., and M.F. Myers. 1992. Natural disasters as the cause of technological emergencies: A review of the decade 1980–1989. Boulder: Natural Hazard Research and Applications Center, University of Colorado.

Steinberg, L.J., H. Sengul, and A.M. Cruz. 2008. Natech risk and management: An assessment of the state of the art. Natural Hazards 46(2): 143–152.

Stiglitz, J.E. 2011. Gambling with the planet. Project Syndicate, April 6. http://www.project-syndicate.org/commentary/stiglitz137/English.

Tallacchini, M. 2005. Legalising science. Health Care Analysis 10(3): 329–337.

Van der Sluijs, J. 2005. Uncertainty as a monster in the science-policy interface: Four coping strategies. Water Science and Technology 52: 87–92.

Weinberg, A. 1972. Science and trans-science. Minerva 10: 209–222.

Wildavsky, A. 1979. Speaking truth to power: The art and craft of policy analysis. Boston: Little Brown & Co.

Wynne, B. 1996. May the sheep safely graze? A reflexive view of the expert-lay knowledge divide. In Risk, environment and modernity: Towards a new ecology, ed. S. Lash, B. Szerszynski, and B. Wynne, 44–83. London/Beverly-Hills: Sage.

Wynne, B., U. Felt, M. Callon, M.E. Gonçalves, S. Jasanoff, M. Jepsen, P.-B. Joly, Z. Konopasek, S. May, C. Neubauer, A. Rip, K. Siune, A. Stirling, and M. Tallacchini. 2007. Science and governance. Taking European knowledge society seriously. European Commission Directorate-General for Research, EUR 22700, Office for Official Publications of the European Communities.

Copyright information

© 2015 Springer Science+Business Media Dordrecht

About this chapter

Cite this chapter

De Marchi, B. (2015). Risk Governance and the Integration of Different Types of Knowledge. In: Fra.Paleo, U. (eds) Risk Governance. Springer, Dordrecht. https://doi.org/10.1007/978-94-017-9328-5_9

DOI: https://doi.org/10.1007/978-94-017-9328-5_9

Publisher Name: Springer, Dordrecht

Print ISBN: 978-94-017-9327-8

Online ISBN: 978-94-017-9328-5

eBook Packages: Earth and Environmental Science (R0)