Abstract

Three different approaches to the concept of probability dominate the teaching of stochastics: the classical, the frequentistic and the subjectivistic approach. They offer considerably different ways of interpreting situations that involve randomness. For teaching probability, it is useful to clarify the interrelations and differences between these three approaches. This allows students’ probabilistic reasoning in specific random situations to be characterized, classified and, finally, understood in more detail. In this chapter, we propose examples that potentially illustrate both the interrelations and the differences between the three approaches to probability mentioned above. In doing so, we focus strictly on an educational perspective.

First, we briefly outline a proposal for the content knowledge that teachers need about the construct of probability. In this short overview, we focus on three approaches to probability, namely the classical, the frequentistic and the subjectivistic approach. Afterwards, we briefly discuss existing research on teachers’ knowledge and beliefs about these approaches to probability. Further, we outline our normative focus on teachers’ potential pedagogical content knowledge concerning the construct of probability. For this, we discuss the construct of probability within a modelling perspective, with regard to theory on the one hand and to classroom activities on the other. We further emphasize considerations about situations that are potentially meaningful for the different approaches to probability. Finally, we focus on technological pedagogical content knowledge. In the context of teaching probability, this kind of knowledge concerns the question of how technology, and especially simulation, supports students’ understanding of probabilities.



Keywords

- Probability

- Classical probability

- Frequentistic probability

- Subjectivistic probability

- Randomness

- Modeling

- Teachers’ stochastical knowledge

- Teachers’ knowledge

- Data driven probability

- Problem situation

- Virtual problem situation

- Real world problem situation

- Virtual real world problem situation

- Simulation

1 Introduction

The success of any probability curriculum for developing students’ probabilistic reasoning depends greatly on teachers’ understanding of probability as well as much deeper understanding of issues such as students’ misconceptions […]. (Stohl 2005, p. 345)

With these words, Stohl (2005) begins her review of teachers’ understanding of probability. The first part of this quote seems self-evident. However, probability is known as a difficult mathematical concept yielding “counterintuitive results […] even at very elementary levels” (Batanero and Sanchez 2005, p. 241). Even grasping the construct of probability itself took centuries, from the elaboration of the classical approach (Pascal 1654, cited in Schneider 1988) and the frequentistic approach (von Mises 1952) to the axiomatic definition by Kolmogoroff (1933). Moreover, the subjectivistic approach, which fits Kolmogoroff’s axioms but in some sense negates the existence of objective probabilities (de Finetti 1974; Wickmann 1990; Batanero et al. 2005a), complements the set of very different approaches to the construct of probability. Particularly when the different approaches to probability that dominate stochastics curricula (Jones et al. 2007) are analysed, a crucial question arises (Stohl 2005): What must teachers know about the construct of probability?

In this chapter, we derive an answer to this question from a purely educational perspective. For this, we use the currently widely accepted model of Ball et al. (2008). Following this model, teachers should have knowledge of the content (CK: content knowledge; Fig. 1) and, as part of CK, knowledge that is “not typically needed for purposes other than teaching” (SCK: specialized content knowledge; Ball et al. 2008, p. 400). Teachers should further have pedagogical content knowledge, which comprises knowledge of content and students (KCS), knowledge of content and curriculum, and knowledge of content and teaching (KCT), the latter of which “combines knowing about teaching and knowing about mathematics” (Ball et al. 2008, p. 401).

Fig. 1 Different kinds of knowledge which teachers should have according to Ball et al. (2008)

Some of the relevant domains of stochastical knowledge (cf. Ball et al. 2008), e.g. horizon content knowledge represented by philosophical or mathematical considerations, are discussed in this volume. In addition, what we know about students’ knowledge (KCS) has been reviewed extensively elsewhere (e.g. Jones et al. 2007). For this reason, we focus on aspects of teachers’ content knowledge (CK), with a specific perspective on teachers’ specialized content knowledge (SCK). Within teachers’ pedagogical content knowledge, we concentrate on knowledge of content and teaching (KCT) from a normative perspective. We partly ground this normative perspective in results of empirical research on students’ knowledge (Jones et al. 2007) and teachers’ knowledge (Stohl 2005).

2 Three Different Approaches to Probability (Content Knowledge)

We restrict the discussion of the different approaches to probability to a brief overview of the knowledge that teachers should have, since deeper analyses already exist (e.g. Borovcnik 1992 or Batanero et al. 2005a). Highlighting the central ideas of each approach from its underlying philosophical point of view (including the historical context in which it emerged) helps to set out the crucial aspects that teachers have to consider when they think about the conditions of teaching probability. This provides a basis for argumentation when outlining pedagogical ideas for teaching probability by means of specific random situations.

2.1 The Classical Approach

Although many other well-known historical figures thought about probability, the classical approach is mainly associated with Pierre Simon Laplace and his groundbreaking work “Essai Philosophique sur les Probabilités” from 1814. One essential point of this work is the definition of probability that we know today as “Laplace probability”: “Probability is thus simply a fraction whose numerator is the number of favorable cases and whose denominator is the number of all cases possible.” (Laplace 1814/1995, p. ix, cited by Batanero et al. 2005a, p. 22) The underlying theoretical precondition of this definition is that all possible outcomes of the random process under consideration are equiprobable. This modelling principle (there is no reason to doubt equiprobability) can mostly be maintained by using artificial, symmetrical random generators, such as a fair die, an urn containing balls that cannot be distinguished from each other when drawn by chance, or a symmetrical spinning wheel, and by treating them as theoretically perfect. Because of these preconditions, the term “a priori” is connected to the classical approach to probability measurement (e.g. Chernoff 2008; Chaput et al. 2011). “A priori” means that, once the theoretical hypothesis of equiprobability has been adopted, there is no need to conduct the experiment with the random generator: probabilities referring to a random generator can be calculated deductively without throwing a die or spinning a wheel. From a mathematical point of view, Laplace probability is only applicable to a finite set of possible outcomes of a random process; for an infinite set of outcomes, this approach cannot be adopted.
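A minimal sketch in Python (our choice of language; the chapter itself contains no code) shows how Laplace’s definition reduces to counting: favourable cases divided by possible cases over an explicitly enumerated, finite set of outcomes that is assumed to be equiprobable. The helper name `laplace_probability` and the two-dice event are illustrative assumptions, not taken from the source.

```python
from fractions import Fraction
from itertools import product

def laplace_probability(outcomes, event):
    """Favourable cases / possible cases, assuming all outcomes are equiprobable."""
    outcomes = list(outcomes)  # a finite, explicitly enumerated set of outcomes
    favourable = sum(1 for outcome in outcomes if event(outcome))
    return Fraction(favourable, len(outcomes))

die = range(1, 7)
p_six = laplace_probability(die, lambda face: face == 6)
# Two dice: 36 equiprobable ordered pairs, 6 of which sum to 7.
p_sum_seven = laplace_probability(product(die, repeat=2), lambda pair: sum(pair) == 7)
print(p_six, p_sum_seven)  # 1/6 1/6
```

Because the function has to enumerate every outcome, it only works for finite outcome sets, mirroring the restriction of the classical approach.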

2.2 The Frequentistic Approach

With its strong preconditions, the classical approach to probability measurement is rooted in a theoretical world that allows for predictions within the empirical world. What is its advantage on the one hand is its disadvantage on the other: many random phenomena of the empirical world (more precisely, phenomena explained as being caused by randomness) cannot reasonably be regarded, on purely theoretical grounds, as a set of equiprobable outcomes of a random generator. Consider, for example, throwing a pushpin: before the pushpin is thrown for the first time, no theoretical argument allows the probability of its landing on the needle to be predicted exactly. But already in Laplace’s time, it was well known empirically that the relative frequencies of a random experiment’s outcomes stabilize increasingly the more identical repetitions of the experiment are taken into account. This empirical fact, called the “empirical law of large numbers”, served as a basis for estimating an unknown probability as the number around which the relative frequency of the considered event fluctuates (Renyi 1992). Since this stabilization can be observed independently by different subjects, the frequentist approach was postulated as an objective approach to probability. It was Bernoulli who justified the frequentist approach by formulating a corresponding theorem: if a random experiment is repeated sufficiently often, the probability that the distance between the observed relative frequency of an event and its probability is smaller than a given value can be brought as close to 1 as desired. In modern notation, \(\lim_{n \to \infty} P(|h_n - p| < \varepsilon) = 1\) for every \(\varepsilon > 0\), where \(h_n\) is the relative frequency of the event in n repetitions and p its probability. Von Mises (1952) tried to take a further step and defined probability as the hypothetical number towards which the relative frequency tends during the stabilization process when disorderly (irregular) sequences are regarded.
This definition was not without criticism: it was argued that, from a theoretical and mathematical point of view, the kind of limit involved and the conditions under which it is formed are not sufficiently precise.
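Bernoulli’s theorem can be checked numerically without any simulation. The following sketch assumes a fair die (p = 1/6 for a six) and an illustrative tolerance ε = 0.05, and sums binomial probabilities to compute \(P(|h_n - p| < \varepsilon)\) exactly for growing n, where \(h_n\) denotes the relative frequency of sixes in n throws. The function names are hypothetical; the binomial probabilities are computed in log space only to avoid numerical overflow for large n.

```python
import math

def binomial_pmf(k, n, p):
    """P(X = k) for X ~ Binomial(n, p), computed in log space to avoid overflow."""
    log_pmf = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
               + k * math.log(p) + (n - k) * math.log1p(-p))
    return math.exp(log_pmf)

def prob_close(n, p, eps):
    """P(|X/n - p| < eps): chance that the relative frequency lies within eps of p."""
    return sum(binomial_pmf(k, n, p) for k in range(n + 1) if abs(k / n - p) < eps)

p, eps = 1 / 6, 0.05  # probability of a six; tolerance chosen only for illustration
for n in (10, 100, 1000, 10000):
    print(n, round(prob_close(n, p, eps), 4))
```

The printed probabilities increase towards 1 as n grows, which is what the theorem states; note that the theorem itself does not say how large n must be in a particular situation.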

From a practical point of view, it was criticized that this empirically oriented approach is only useful for events whose underlying experiment can be repeated as often as needed, at least in theory. Within phenomena of the real world, however, this is often neither possible nor practicable. Apart from that, no finite number of repetitions can in principle be fixed that guarantees an optimal estimate of the probability of interest. Nevertheless, the frequentistic approach enriched the discussion about probability because of its empirical rootedness, and for this reason the term “a posteriori” is connoted with the frequentistic approach to probability (e.g. Chernoff 2008). According to the frequentistic approach, it is necessary to gather data (or rather relative frequencies) on the outcomes of a random generator in order to estimate the corresponding probabilities. Within this experimental approach, probability can be interpreted as a physical magnitude that can, at least in principle, be measured as exactly as needed.

2.3 The Subjectivistic Approach

Within the subjectivist approach, probability is described as a degree of belief based on personal judgement and on information about a situation, which may even be a situation that objectively involves no randomness. One example illustrating this concerns HIV infection (cf. Eichler and Vogel 2009). For a specific person, the objective probability of being infected with HIV is either 0 or 1; thus, the infection status of a specific person involves no randomness. However, that person could hold an individual degree of belief about his (or her) infection status (perhaps oriented to the base rate of infection). This degree of belief can change with new information, e.g. the result of a diagnostic test. Thus, subjectivistic probabilities depend on several factors, e.g. the knowledge of the subject, the conditions of this person’s observation, the kind of event whose uncertainty is being considered, and the available data about the random phenomenon.
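To make the revision of a degree of belief concrete, here is a sketch of Bayes’ formula applied to a single positive test result. All numbers (a base rate of 0.001, a sensitivity and a specificity of 0.99) are hypothetical and chosen only for illustration; they are not data from the chapter or from Eichler and Vogel (2009), and the function name is our own.

```python
def posterior_positive(prior, sensitivity, specificity):
    """Bayes' formula: degree of belief in 'infected' after a positive test result."""
    # Total probability of a positive result: true positives plus false positives.
    p_positive = sensitivity * prior + (1 - specificity) * (1 - prior)
    return sensitivity * prior / p_positive

# Hypothetical numbers, chosen only for illustration:
belief_before = 0.001  # degree of belief oriented to an assumed base rate
belief_after = posterior_positive(belief_before, sensitivity=0.99, specificity=0.99)
print(round(belief_after, 3))  # 0.09
```

Under these assumed numbers, a positive result raises the degree of belief from 0.1 % to roughly 9 %, which shows how strongly the a priori belief (here oriented to the base rate) shapes the a posteriori belief.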

The subjectivist view of probability is closely connected with Bayes’ formula, which goes back to Thomas Bayes’ essay published posthumously in 1763. Bayes’ formula allows an “a priori” estimate of probability to be revised by processing new information, yielding a new “a posteriori” probability. In general, the subjectivistic approach calls into question the objective character postulated by the frequentist and the classical approaches. In this regard, Batanero et al. (2005a, p. 24) state: “Therefore, we cannot say that probability exists in reality without confusing this reality with the theoretical model chosen to describe it.” Thus, following the subjectivistic approach, it is not possible to treat probability as a physical magnitude and to measure it accordingly in an objective way. Beyond this fundamental difficulty, Wickmann (1990) criticizes the frequentistic approach as being very limited in its applicability to real-world problems, because its theoretical precondition of an unlimited number of repetitions of an experiment (\(n \to \infty\)) cannot be met in the finite real world. In reality, the number of repetitions is mostly comparatively small and not sufficiently large for an objective estimation of probability.

3 Teachers’ Knowledge About Probability

In the last section, we did not differentiate between common content knowledge (CCK) and teachers’ specialized content knowledge (SCK). Nevertheless, we regard knowledge of the distinctions and connections between “a priori” probability (classical approach) and “a posteriori” probability (frequentist approach), as well as of the combination of “a priori” and “a posteriori” probability (subjectivist approach), as knowledge that teachers must have. In particular, knowledge of the connections between these three approaches to probability has often been called for (e.g. Steinbring 1991; Riemer 1991; Stohl 2005; Jones et al. 2007).

However, research has yielded only scarce results on what teachers actually know about probability and about how to teach probability in the classroom (Shaughnessy 1992; Jones et al. 2007). Begg and Edwards (1999) reported weak mathematical knowledge of the construct of probability (classical and frequentistic approach) among 34 practising and pre-service elementary school teachers. Further, Jones et al. (2007), citing research by Carnell (1997), reported difficulties that 13 secondary school teachers had when they were asked to interpret conditional probabilities, which are a prerequisite for teaching the subjectivistic approach to probability. Beyond these studies, Stohl (2005) and Jones et al. (2007) do not mention further research documenting the difficulties teachers seem to have when dealing with probabilities.

Similarly, research in stochastics education has provided scarce results about how teachers teach the three approaches to probability and, thus, about teachers’ pedagogical content knowledge. A quantitative study in Germany of 107 upper secondary teachers showed that all of them teach the classical approach (Eichler 2008b), whereas 72 % of these teachers indicated that they teach the frequentist approach and 27 % that they teach the subjectivist approach. This research yielded isolated evidence that, although European classroom practice shows a strong emphasis on probability (Broers 2006), the challenge of connecting all three approaches to probability has still not been met (Chaput et al. 2011). Further, a study by Eichler (2008a, 2011) showed that even when teachers include the frequentistic approach in their classroom practice, the way they teach this content can differ considerably. While one teacher emphasizes the frequentist approach through experiments with artificial random generators (urns, dice, cards), another teacher uses real data sets. In doing so, the latter teacher conforms to the paradigm of teaching the frequentist approach described by Burrill and Biehler (2011). Finally, Stohl (2005) reported that teachers’ weak knowledge about connecting the different approaches to probability may be due to limited content knowledge. However, Dugdale (2001) showed that pre-service teachers benefit from computer simulation in realizing connections between the classical and the frequentist approach to probability.

Although empirical knowledge about teachers’ content knowledge and pedagogical content knowledge concerning the three approaches to probability is limited, in the following we use existing research to structure a normative proposal for teaching these three approaches. Of course, such a proposal needs to be developed along a coherent stochastics curriculum across several years, including the development of the underlying mathematical competencies. Referring to this proposal, we first outline three situations of throwing dice (an artificial random generator) that represent the three approaches to probability. We connect these three situations using a modelling perspective. Afterwards, we focus on situations that are not based on artificial random generators, in order to emphasize the modelling perspective on the three approaches to probability. Finally, we discuss computer simulations that could potentially facilitate students’ understanding of the three approaches to probability.

4 Probability Measurement Within the Modelling Perspective

An important competency for thinking about and teaching probability is modelling (e.g. Wild and Pfannkuch 1999). Instead of differentiating distinct phases of modelling (e.g. Blum 2002), we restrict ourselves to the core of mathematical modelling, i.e. the transfer between the empirical world (of data) and the theoretical world (of probabilities). The discussion of the different types of probability measurement shows that each approach to probability touches both the theoretical and the empirical world, as well as the interrelationship between these worlds.

One essential characteristic of the concept of probability is its orientation towards the future: probabilities are used to quantify the chance that an event which has not yet happened will occur. The situational circumstances and the available information (including the knowledge of the person estimating the probability) decisively determine how the process of quantifying probability can proceed. In principle, three different scenarios of mutual processes of looking ahead and looking back can be distinguished when the modelling process is considered as a transfer between the empirical and the theoretical world (cf. Vogel and Eichler 2011). We illustrate each kind of modelling by means of possible classroom activities, using dice throws to validate these modelling processes quantitatively. For these classroom activities, we restrict the discussion to secondary schools, although teaching probability is, in some sense, also possible in primary schools.

4.1 Modelling Structure with Regard to the Classical Approach

With regard to the classical approach, the modelling structure principally includes the following four steps (Fig. 2):

1. Problem: Defining and structuring the problem
2. Looking ahead: Building a theoretical model based on available information and making a prediction on the basis of this theoretical model (theoretical world)
3. Looking back: Validating this prediction by producing data (for example, by doing simulations) based on this theoretical model and analysing these data in a step of reviewing
4. Findings: Drawing conclusions and formulating findings

A classroom activity illustrating these four steps is as follows:

Looking ahead: Most students who have reached secondary school presumably do not doubt that the chance of each side is “a priori” 1/6 (for example, after analysing the symmetrical shape of the cube). Given that the students have experienced the variability of statistical data (Wild and Pfannkuch 1999), they might predict that the relative frequency of a six will be about 1/6, qualitatively accepting small differences between the observed frequency and the predicted frequency of 1/6 (which corresponds to the “a priori” estimated probability).

Looking back: To decide whether the predictions are appropriate, the students have to throw the die. This can be done by hand or by computer simulation, following the law of large numbers: “the more the merrier.” The empirical data illustrate the \(\frac{1}{\sqrt{n}}\)-law, though only qualitatively (Fig. 3): the spread of the relative frequencies of a six across series of n throws shrinks roughly in proportion to \(\frac{1}{\sqrt{n}}\).
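The \(\frac{1}{\sqrt{n}}\)-law can be reproduced with a small simulation. The sketch below assumes a fair die simulated with Python’s `random` module and 100 series per series length (both our own choices). It measures the spread (standard deviation) of the relative frequency of a six across series of n throws and multiplies it by \(\sqrt{n}\); if the law holds, this product stays close to \(\sqrt{p(1-p)} = \sqrt{5/36} \approx 0.373\).

```python
import math
import random
import statistics

def relative_frequencies_of_six(n, repetitions, rng):
    """Relative frequency of a six in each of `repetitions` series of n throws."""
    return [sum(rng.randint(1, 6) == 6 for _ in range(n)) / n
            for _ in range(repetitions)]

rng = random.Random(2011)  # fixed seed: the run is reproducible
spread = {}
for n in (10, 100, 1000, 10000):
    freqs = relative_frequencies_of_six(n, repetitions=100, rng=rng)
    spread[n] = statistics.pstdev(freqs)
    # 1/sqrt(n)-law: spread * sqrt(n) should stay near sqrt(5/36), about 0.373
    print(n, round(spread[n], 4), round(spread[n] * math.sqrt(n), 3))
```

The spread drops by a factor of about \(\sqrt{10} \approx 3.2\) each time the series length is multiplied by 10, while the product in the last column stays roughly constant.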

Findings: The intervals in which the relative frequencies of a six lie get smaller as the series of throws get longer. Thus, the relative frequencies of a six fit the model of 1/6 better the more throws are performed. In a similar experiment, these findings lead to the empirical law of large numbers when the relative frequencies of a series of throws are cumulated (Fig. 4).
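Cumulating the relative frequency throw by throw gives the kind of curve typically used to present the empirical law of large numbers. This sketch uses a simulated fair die with a fixed seed (an assumption made only for reproducibility) and prints the running relative frequency of a six at a few checkpoints; the function name is hypothetical.

```python
import random

def cumulative_relative_frequency(throws, target=6):
    """Running relative frequency of `target` after each throw."""
    hits, path = 0, []
    for i, face in enumerate(throws, start=1):
        hits += face == target  # True counts as 1, False as 0
        path.append(hits / i)
    return path

rng = random.Random(1654)  # fixed seed for reproducibility
throws = [rng.randint(1, 6) for _ in range(10000)]
path = cumulative_relative_frequency(throws)
for n in (10, 100, 1000, 10000):
    print(n, round(path[n - 1], 4))
```

Early values typically scatter widely, whereas later values settle close to 1/6 (about 0.1667).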

All the findings mentioned above are based on the theoretical “a priori” model of the probability of a six. Thus, coping with the given problem starts with considerations in the theoretical world of probabilities, which then have to be validated in the empirical world of data.

4.2 Modelling Structure with Regard to the Frequentistic Approach

With regard to problems following the frequentistic approach, the modelling structure includes the following five steps (Fig. 5):

1. Problem: Defining and structuring the problem
2. Looking back: Analysing available empirical data on the phenomena of interest to detect patterns of variability that might be characteristic
3. Looking ahead: Building a theoretical model based on available information and extrapolating these patterns for the purpose of prediction or generalization of these phenomena
4. Looking back: Validating this prediction by producing new data (for example, by doing simulations) based on this theoretical model and analysing these data in a further step of reviewing
5. Findings: Drawing conclusions and formulating findings

A classroom activity illustrating these five steps is as follows:

Looking back: Although it is possible to hypothesize about a model for the cuboid die, i.e. about its probability distribution, in the end nothing can be done except throwing the cuboid die. Beforehand, some students tend to estimate the probabilities according to the ratio between the area of one side of the cuboid and its whole surface area (there might also be other reasonable possibilities). However, such a model will not survive validation. Thus, the students obtain a model by throwing the cuboid die as many times as possible to get reliable information about the relative frequencies of the different outcomes, using the empirical law of large numbers. The students might be confident about this approach: when they follow the same procedure with the ordinary die (see above), they obtain empirical confirmation, because they know the probability distribution of the ordinary die.
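The area-based model some students propose can be written down in a few lines. The edge lengths used below are hypothetical, because the cuboid’s dimensions are not given; the sketch only shows how such a model assigns probabilities, not that it is appropriate (as argued above, it does not survive validation).

```python
def area_model(a, b, c):
    """Probability of a single face, proportional to its share of the total surface."""
    face_area = {"a x b": a * b, "a x c": a * c, "b x c": b * c}  # each face occurs twice
    total = 2 * sum(face_area.values())
    return {face: area / total for face, area in face_area.items()}

# Hypothetical edge lengths: the chapter does not state the cuboid's dimensions.
model = area_model(1, 2, 3)
print({face: round(p, 3) for face, p in model.items()})
```

Comparing such model probabilities with observed relative frequencies is precisely the validation step in which the area model fails.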

Looking ahead: The empirical results are the basis for estimating probabilities for each of the cuboid’s sides. With regard to the modelling process, it is crucial to note that the relative frequencies cannot simply be adopted as probabilities. In the given problem, the geometry of the cuboid die gives a reason for assigning the same probability to both sides of each pair of opposite sides. For example, if the relative frequencies of the cuboid die are h(1)=0.05; h(2)=0.09; h(3)=0.34; h(4)=0.36; h(5)=0.11; h(6)=0.05, then the students should think about what to do with these asymmetrical empirical results. One obviously appropriate consideration is to take the mean of the two frequencies of opposite sides and to base the probability estimate on both of them (rather than adopting one of the frequencies). Accordingly, for the example above, a reasonable estimate of the probability distribution could be: P(1):=P(6):=0.05; P(2):=P(5):=0.10; P(3):=P(4):=0.35. In this way, the students deduce patterns of probabilities from the available data and readjust them according to theoretical considerations within the modelling process.
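The pooling of opposite faces described above is a small computation. This sketch uses the relative frequencies given in the text and assumes that 1 and 6, 2 and 5, and 3 and 4 are opposite faces, as implied by the estimates P(1)=P(6), P(2)=P(5), P(3)=P(4). The names `h`, `OPPOSITE_PAIRS` and `pool_opposite_faces` are our own.

```python
# Relative frequencies of the cuboid die as given in the text.
h = {1: 0.05, 2: 0.09, 3: 0.34, 4: 0.36, 5: 0.11, 6: 0.05}

# Opposite faces are geometrically identical, so each pair receives
# the mean of its two relative frequencies instead of either single value.
OPPOSITE_PAIRS = [(1, 6), (2, 5), (3, 4)]

def pool_opposite_faces(freqs, pairs=OPPOSITE_PAIRS):
    estimate = {}
    for i, j in pairs:
        mean = (freqs[i] + freqs[j]) / 2
        estimate[i] = estimate[j] = mean
    return estimate

P = pool_opposite_faces(h)
print({face: round(p, 2) for face, p in sorted(P.items())})
# {1: 0.05, 2: 0.1, 3: 0.35, 4: 0.35, 5: 0.1, 6: 0.05}
```

The pooled estimate still sums to 1 and now respects the symmetry of the cuboid, which the raw frequencies do not.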

Looking back: The validation process for the cuboid die is the same as that for the ordinary die.

Findings: Since long series of throws of the ordinary die can build confidence in the stabilization of relative frequencies, the relative frequencies obtained by throwing the cuboid die can serve as an appropriate basis for estimating its probability distribution. Probabilities are not estimated by simply adopting the relative frequencies. This essential finding is provoked by a partly symmetrical random generator like the cuboid die. Thus, the cuboid die seems to be more appropriate than a random generator like the pushpin, for which the probability estimate may simply equal the relative frequency.

4.3 Modelling Structure with Regard to the Subjectivistic Approach

With regard to problems following the subjectivist approach, the modelling structure is not fixed. It can potentially include any number of steps, since the number of alternations between the theoretical world and the empirical world is not predetermined (Fig. 6).

1. Problem: Defining and structuring the problem
2. Looking back: Subjective estimation based on given information (theoretically or empirically based; in general, the two cannot be exactly distinguished)
3. Looking ahead: Coming to a provisional decision and making a subjective but theoretically based prediction
4. Looking back: Inspecting the first, provisional decision by generating and processing new empirical data

…

(Now the modelling process alternates between steps 3 and 4 until an appropriate basis for deciding between two or more hypotheses is reached. Whether this basis is appropriate is assessed subjectively; the more information is processed, the more objective the prediction becomes.)

…

n. Findings: Drawing conclusions and formulating findings

A classroom activity illustrating these steps is as follows:

Looking ahead: Because there are two dice and the students have no information about the referee’s preference, the first (“a priori”) estimate could be P(R)=0.5 for the cuboid die and P(O)=0.5 for the ordinary die.

Looking back: The referee informs the students that a 1 has been thrown.

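Looking ahead: Qualitatively, this outcome gives evidence for the ordinary die, since this die should produce a 1 more often than the cuboid die (P(1|O)>P(1|R)). Using Bayes’ formula with P(1|O)=1/6 and the estimate P(1|R)=0.05 from Sect. 4.2, this information yields a new estimate of the probability of the referee’s initial choice of die:

\[
P(O \mid 1) = \frac{P(1 \mid O)\,P(O)}{P(1 \mid O)\,P(O) + P(1 \mid R)\,P(R)} = \frac{\frac{1}{6} \cdot 0.5}{\frac{1}{6} \cdot 0.5 + 0.05 \cdot 0.5} \approx 0.769
\]

Correspondingly, there is a new a posteriori estimate with P(O):=0.769 and P(R):=0.231.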

Looking back: The referee informs the students that a 2 has been thrown. The a posteriori estimate of the first round of the game is at the same time the a priori estimate for the second round.

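Looking ahead: Qualitatively, this outcome again gives evidence for the ordinary die, since this die should produce a 2 more often than the cuboid die (P(2|O)>P(2|R)). Using Bayes’ formula again, now with the updated priors P(O)=0.769 and P(R)=0.231 and with P(2|R)=0.10, results in:

\[
P(O \mid 2) = \frac{P(2 \mid O)\,P(O)}{P(2 \mid O)\,P(O) + P(2 \mid R)\,P(R)} = \frac{\frac{1}{6} \cdot 0.769}{\frac{1}{6} \cdot 0.769 + 0.10 \cdot 0.231} \approx 0.847
\]

Correspondingly, there is a new a posteriori estimate with P(O)=0.847 and P(R)=0.153.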

Looking alternately back and ahead: A series of further throws is shown in Fig. 7.

Fig. 7 clearly shows that the step-by-step processing eventually yields a good basis for the decision.
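The step-by-step updating can be sketched as a loop in which each posterior becomes the next prior. The sketch assumes that the ordinary die is fair and uses the cuboid probabilities estimated in Sect. 4.2 as the likelihoods for the cuboid die; with the two throws reported above (a 1, then a 2), it reproduces the values 0.769 and 0.847. The further throws of Fig. 7 are not listed in the text, so they are not included, and the names `LIKELIHOOD` and `bayes_update` are our own.

```python
# Likelihoods P(face | die): the ordinary die (O) is assumed fair; the cuboid
# die (R) uses the probabilities estimated from the relative frequencies.
LIKELIHOOD = {
    "O": {face: 1 / 6 for face in range(1, 7)},
    "R": {1: 0.05, 2: 0.10, 3: 0.35, 4: 0.35, 5: 0.10, 6: 0.05},
}

def bayes_update(prior, face):
    """One application of Bayes' formula: returns P(die | face) for each die."""
    joint = {die: prior[die] * LIKELIHOOD[die][face] for die in prior}
    evidence = sum(joint.values())
    return {die: joint[die] / evidence for die in joint}

belief = {"O": 0.5, "R": 0.5}  # "a priori": no information about the referee
for face in (1, 2):            # the two throws reported in the text
    belief = bayes_update(belief, face)  # this posterior is the next prior
    print(face, {die: round(p, 3) for die, p in belief.items()})
# 1 {'O': 0.769, 'R': 0.231}
# 2 {'O': 0.847, 'R': 0.153}
```

Further throws can simply be appended to the tuple in the loop; the order of the throws does not affect the final estimate.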

Findings: Processing information according to Bayes’ formula can yield an empirically based decision between different alternative hypotheses. The decision is not necessarily correct. Given the results above, and given that the referee covertly used a third die (a cuboid die on which the 1 and 6 are on the biggest sides, and the 3 and 4 on the smallest sides), the decision for the ordinary die would actually be false in an objective sense. But from the subjective perspective, it is the optimal decision based on the available information.
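The situation with the third die can be explored by simulation. In this sketch, the probabilities of the hypothetical third die are our own assumption (the cuboid die’s probabilities with faces 1, 6 and 3, 4 swapped), and the throws are simulated with a fixed seed. Because only the ordinary die and the cuboid die are considered as hypotheses, frequent 1s and 6s push the posterior towards the ordinary die, although neither hypothesis is true.

```python
import random

FAIR = {face: 1 / 6 for face in range(1, 7)}
CUBOID = {1: 0.05, 2: 0.10, 3: 0.35, 4: 0.35, 5: 0.10, 6: 0.05}
# Hypothetical third die: 1 and 6 on the biggest sides, 3 and 4 on the smallest.
# The exact values are an assumption, not given in the text.
THIRD = {1: 0.35, 2: 0.10, 3: 0.05, 4: 0.05, 5: 0.10, 6: 0.35}

def posterior_ordinary(throws, prior_o=0.5):
    """P(O | throws) when only O (fair) and R (cuboid) are considered possible."""
    p_o, p_r = prior_o, 1 - prior_o
    for face in throws:
        p_o, p_r = p_o * FAIR[face], p_r * CUBOID[face]
        total = p_o + p_r
        p_o, p_r = p_o / total, p_r / total  # renormalize after every throw
    return p_o

rng = random.Random(42)  # fixed seed for reproducibility
faces, weights = zip(*THIRD.items())
throws = rng.choices(faces, weights=weights, k=100)  # referee covertly uses THIRD
print(round(posterior_ordinary(throws), 4))
```

The posterior for the ordinary die approaches 1: the decision is optimal relative to the hypotheses considered, yet objectively false, which is exactly the point made in the findings.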

4.4 Some Principles of Modelling Concerning Probability

With regard to the three situations above, it should be noted that, in general, there is no fixed rule for how to model a problem; it depends on the kind and circumstances of the problem on the one hand, and on the problem solver’s abilities and knowledge on the other (cf. Riemer 1991). The basic idea of modelling is to abstract from the complexity of the original question and to concentrate on aspects that are considered useful for getting an answer. This is the core of modelling: a model is useful for someone within a specific situation to meet specific demands (cf. Riemer 1991). Thus, models cannot be judged in categories of right or wrong; they have to be judged by their utility for solving a specific problem (cf. Eichler and Vogel 2009).

However, concerning modelling, there is a fundamental difference between using a model of objective probabilities (classical and frequentistic approach) and using subjectivistic probabilities. While in the former approaches a model can be validated by collecting new data, in the latter approach validation is integrated into every step of readjusting the probabilities on which a decision for one of several (potentially incorrect) models is based.

In cases where data can be interpreted as empirical realizations of random processes, the probability modelling process can be considered within the paradigm of Borovcnik’s (2005) structural equation for modelling data (cf. Schupp 1973): an unknown probability is assumed to be immanent in a random process (cf. Box and Draper 1988) and to be appropriate for quantifying a certain outcome of this process. Regardless of the different approaches mentioned above, the modelling process in general aims to obtain a reliable estimate of this unknown probability. This estimate is a modelling pattern approximating the unknown probability; that is, it results from patterns which the modelling person detects by analysing the random process and its different outcomes. According to Stachowiak (1987), there is always a difference between a model and the modelled original. In the light of Borovcnik’s (2005) structural equation, this difference and the modelling pattern mentioned above add up to the unknown probability. Thus, it should be emphasized that the modeller does not capture the unknown probability itself, but rather what he (or she) perceives, what he (or she) already knows about different types of random processes, and accordingly reads into this process.

5 Problem Situations Concerning Three Approaches of Probability

Following Greer and Mukhopadhyay (Eichler and Vogel), a well-known prejudice against teaching stochastics has for many years been that stochastics consists only of formula-based calculation within the world of simple random generators. This world is represented by random processes such as throwing dice, as discussed above, as well as spinning wheels of fortune, tossing coins, etc. However, the world of games of chance connects the theoretical world of probability with the empirical world only if real data, which represent the statistical part of teaching stochastics, are taken into account. With reference to Steinbring and Strässer (2009) Schupp (Sill) states: “Statistics without probability is blind […], probability without statistics is empty” (translated by the authors). Burrill and Biehler (1993, p. 61) claimed that “probability should not be taught data-free, but with a view towards its role in statistics.”

At first glance, our “dice world” considerations in Sect. 4 seem to contradict these statements. Nevertheless, our concern of emphasizing the three approaches to probability by means of throwing dice should be interpreted from a slightly different perspective. From a pedagogical point of view, the “dice world” need not be avoided altogether: this world also contains data (e.g. empirical realizations of random processes like throwing dice) and provides situations that are reduced from the complex aspects of real-world contexts. Thus, such situations might facilitate students’ understanding of the different approaches to probability. However, remaining in such a “dice world” must be avoided: although this artificial world fulfils a crucial role in students’ mathematical comprehension of probability, teaching probability must be enriched by situations outside the pure world of games of chance. In this way, students can deepen their understanding of probability by reflecting on its role in applying statistics, and probability can become more meaningful to them. So, slightly modifying the quotation from Schupp (1973), it can be said that probability without realistic situations and real data stays empty for students.

Our statement is supported by psychological theories of situated learning and anchored instruction. “Situations might be said to co-produce knowledge through activity. Learning and cognition, it is now possible to argue, are fundamentally situated.” (Brown et al. 1989, p. 32) Essential characteristics of this situated approach include, among others, interesting and adequately complex problems to be mastered as well as realistic (not necessarily real) and authentic learning environments that offer multiple perspectives on the problem (cf. 1981 1982). Aiming to prevent so-called inert knowledge, the Cognition and Technology Group at Vanderbilt (1990) developed the theory of anchored instruction and applied it in practice-oriented research. The central characteristic of this approach is its narrative anchor, which is meant to arouse the learners’ interest and to embed the problem in a context so that situated learning can take place. From this perspective, we suggest that emphasizing only the “dice world” would not meet the demands of situated learning or anchored instruction outlined above.

Considering the different situations that can be analysed with the various approaches to measuring probability, and the different roles data play in the modelling process, we distinguish three kinds of problem situations (1982 Brown et al.):

- Problem situations which contain all necessary information and represent a stochastic concept, such as an approach to probability, in a strongly reduced context (one that is usually not given within the complex circumstances of real world problems) we call virtual problem situations.

- Problem situations which demand analysing a more “authentic” context and provide a “narrative anchor” (see above), but whose demands still do not aim to reconstruct real world problems, we call virtual real world problem situations.

- Problem situations that aim to reproduce real problems of a society we call real world problem situations.

In the following, we illustrate each of these three kinds of problem situations with one example, each representing one of the three approaches to probability outside the world of gambling.

5.1 A Virtual Problem Situation Concerning the Classical Approach

A computer applet for the so-called Cliffhanger Problem serves as an example (source: http://mste.illinois.edu/activity/cliff/, accessed 12.2.2012).

Mathematically, this problem situation is a variant of the so-called random walk problems. Since it asks for an unknown probability that can be derived theoretically, it is comparable to the task of determining the probabilities of a fair die or, rather, a fair coin.

A normative solution for this situation represents the classical approach to probability. Let the positions be measured in steps from the edge (0 denotes the edge, 1 one step away, etc.), and let \(p_1, p_2, \ldots\) denote the probabilities of eventually falling down when starting at the respective positions. From position 1, the next step leads over the edge with probability 1/3 and to position 2 with probability 2/3, so the probability of falling down when starting at position 1 is \(p_1 = \frac{1}{3} + \frac{2}{3}p_2\). Further, the probability of ever moving from position 2 to position 1 is the same as that of moving from position 1 to position 0 (i.e. falling down), namely \(p_1\). Thus, the probability of falling down when starting at position 2 is \(p_2 = p_1^2\). Combining these two equations leads to the quadratic equation \(p_1 = \frac{1}{3} + \frac{2}{3}p_1^2\), which is solved by \(p_1 = 0.5\). The second solution, \(p_1 = 1\), does not fit the situation: since steps away from the edge are twice as likely as steps toward it, there is a positive probability of never falling down.
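The algebra above can be checked with exact rational arithmetic. The following Python sketch is our own illustration, not part of the applet; the function name `cliffhanger_roots` and the parameter `q` (the probability of a step toward the edge, 1/3 in the text) are introduced here for illustration. It relies on the factorization \((1-q)p^2 - p + q = (p-1)\big((1-q)p - q\big)\), which shows that 1 is a root for every q.

```python
from fractions import Fraction


def cliffhanger_roots(q):
    """Return both solutions of p1 = q + (1 - q) * p1**2 for 0 < q < 1.

    q is the probability of a step toward the edge (1/3 in the text).
    Rearranged, (1 - q) * p**2 - p + q = (p - 1) * ((1 - q) * p - q) = 0,
    so the roots are 1 and q / (1 - q).
    """
    q = Fraction(q)
    if not 0 < q < 1:
        raise ValueError("q must lie strictly between 0 and 1")
    return sorted({Fraction(1), q / (1 - q)})


q = Fraction(1, 3)
roots = cliffhanger_roots(q)
for p1 in roots:
    # Both roots satisfy the first-step equation with p2 = p1**2.
    assert p1 == q + (1 - q) * p1**2
print(roots)  # [Fraction(1, 2), Fraction(1, 1)]
```

For q = 1/3 the relevant root is q/(1 - q) = 1/2; for q ≥ 1/2 this root is at least 1, so under this model falling down becomes certain.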

Students who are able to find a theoretical solution can afterwards check their considerations with the simulation built into the computer application. Thus, (virtual) empirical data can help to validate the theoretical considerations.
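A simulation of this kind can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not the applet’s implementation: each walk starts at position 1, moves toward the edge with probability 1/3, and is cut off once it reaches a hypothetical escape distance (`escape_at = 30`), because a walk drifting away from the edge would otherwise run indefinitely. For q = 1/3 the probability of still returning to the edge from that distance is \((1/2)^{30}\), about one in a billion, so the cut-off has no practical effect.

```python
import random


def walk_falls(rng, q=1/3, start=1, escape_at=30):
    """Simulate one walk; return True if the walker reaches the edge (position 0).

    Each step moves toward the edge with probability q, otherwise one step away.
    The walk is cut off once it is `escape_at` steps from the edge; with q = 1/3
    the chance of still returning to the edge from there is (1/2)**escape_at.
    """
    pos = start
    while 0 < pos < escape_at:
        pos += -1 if rng.random() < q else 1
    return pos == 0


def estimate_fall_probability(n_walks=10_000, seed=2012):
    """Relative frequency of falls among n_walks simulated walks."""
    rng = random.Random(seed)
    falls = sum(walk_falls(rng) for _ in range(n_walks))
    return falls / n_walks


print(estimate_fall_probability())  # a relative frequency near 0.5
```

With 10 000 walks the relative frequency typically lies within about ±0.01 of 0.5, which is the kind of empirically founded conjecture students can then try to explain theoretically.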

However, the situation need not be analysed with the classical approach first. Students who have difficulties with the classical approach can first use the computer application to run simulations and thus gain a concrete image of the problem situation, of how it works, and of what the solution might be. In this way, their search for a theoretical explanation is guided by their empirically founded conjecture that the solution is about 0.5.

As mentioned above, there is no fixed rule for solving such problem situations. Students may start with theoretical considerations followed by data-based validation, start with data-based conjectures followed by theoretical explanations, or even switch several times between theoretical considerations and data-based experiences. In each case, it is a question of modelling, aimed at making decisions and predictions within the given computer-based problem situation.

The Cliffhanger Problem is structurally equivalent to the problem of throwing an ordinary die. It also involves artificial simplifications within a given virtual situation, but it addresses the approaches to probability in a situation beyond the “dice world” mentioned above. Students have the opportunity to apply their stochastic knowledge and mathematical techniques within a situated learning arrangement prepared by the teacher. Thus, students learn not only about the situation’s stochastic content but also about techniques of problem solving. In this sense, working on such virtual problem situations can be described as learning stochastic modelling in a mathematically protected area under laboratory conditions.

5.2 A Virtual Real World Problem Situation Concerning the Frequentistic Approach

The candy bags contain candies of six different colors. By focusing on only one color, the complexity can be reduced to a situation which, in the teacher’s preliminary analysis, allows modelling with a binomial distribution (assuming that students have the mathematical prerequisites). If no further information is available (e.g. about the production process of the candy bags), a purely theory-based approach to the problem does not seem possible. The empirical character of the problem situation indicates that data are needed (1981 Mandl et al.) before reliable hypotheses about the colors’ distribution can be formed.

Opening 100 candy bags produced the data summarized in Fig. 8.

To base a theoretical model on these descriptive analyses, it is necessary to state the underlying assumptions. For example, with regard to color, each candy bag is modelled as being filled independently of the others. Further, the probability of drawing a candy of a specific color is assumed not to change. A further modelling assumption is that all candy bags contain the same number n of candies. Accepting these modelling assumptions and, based on the data, assuming an equal distribution of all six colors as the underlying theoretical model leads to a data-based estimate of the probabilities, e.g. P(red) = 1/6 for drawing a red candy and P(not red) = 5/6 for drawing a candy of another color. Based on these assumptions, the situation can be modelled by a binomially distributed random variable X that counts the number r of red candies in a bag: \(P(X = r) = \binom{n}{r}\left(\frac{1}{6}\right)^{r}\left(\frac{5}{6}\right)^{n-r}\), \(r = 0, 1, \ldots, n\).

Using this model, students can calculate the probabilities of different numbers of red candies as well as the expected absolute numbers of fictive candy bags containing r red candies. Figure 9 (left) contains the probability distribution and the distribution of absolute frequencies calculated for 10 000 fictive opened candy bags. If students do not know the binomial distribution, they can use a computer simulation prepared by the teacher. With it, they can simulate 10 000 fictive candy bags and determine the absolute as well as the relative frequencies of different numbers of red candies (Fig. 9, right).
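Both sides of such a comparison can be reproduced with a short Python sketch. It rests on the modelling assumptions named above (independent bags, P(red) = 1/6, equal bag size) plus one assumption of our own: the bag size `N_CANDIES = 18` is hypothetical, since the number of candies per bag is not stated in the text above. `binomial_pmf` computes the model probabilities, and `simulate_bags` draws 10 000 fictive bags candy by candy.

```python
import random
from math import comb

P_RED = 1 / 6        # modelling assumption: six equally likely colors
N_BAGS = 10_000      # fictive candy bags, as in the text
N_CANDIES = 18       # HYPOTHETICAL bag size; not stated in the text


def binomial_pmf(n, p=P_RED):
    """P(X = r) for r = 0..n, where X ~ Bin(n, p) counts red candies."""
    return [comb(n, r) * p**r * (1 - p) ** (n - r) for r in range(n + 1)]


def simulate_bags(n, bags=N_BAGS, p=P_RED, seed=1):
    """Absolute frequencies of r red candies in `bags` simulated bags of n candies."""
    rng = random.Random(seed)
    counts = [0] * (n + 1)
    for _ in range(bags):
        reds = sum(rng.random() < p for _ in range(n))
        counts[reds] += 1
    return counts


pmf = binomial_pmf(N_CANDIES)
simulated = simulate_bags(N_CANDIES)
print(" r  P(X=r)  expected  simulated")
for r, (prob, observed) in enumerate(zip(pmf, simulated)):
    print(f"{r:2d}  {prob:.4f}  {N_BAGS * prob:8.1f}  {observed:9d}")
```

Replacing `N_CANDIES` with the real bag size gives the corresponding model; the simulated column fluctuates around the expected one, and the exact values depend on the seed.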

The distributions in Fig. 9 can be used to derive criteria for validating the underlying theoretical model of equal color distribution against reality. For example, students could decide to reject the model if a newly opened real candy bag contains 0 or 8 (or more) red candies. With this rule, they would wrongly reject the underlying theoretical model, even when it is true, in about 5 % of cases.
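How large the error of this decision rule is depends on the bag size n, which is not stated in the text above. The Python sketch below computes P(X = 0) + P(X ≥ 8) under the Bin(n, 1/6) model for a few hypothetical values of n; it illustrates the reasoning and is not a reproduction of the figure’s numbers.

```python
from math import comb


def rejection_probability(n, p=1/6, low=0, high=8):
    """Chance of wrongly rejecting a true Bin(n, p) model with the rule
    'reject if X <= low or X >= high' (here: 0 red candies, or 8 or more)."""
    pmf = [comb(n, r) * p**r * (1 - p) ** (n - r) for r in range(n + 1)]
    return sum(pmf[: low + 1]) + sum(pmf[high:])


for n in (15, 18, 20):  # hypothetical bag sizes
    print(n, round(rejection_probability(n), 3))
```

Most of the error comes from bags without any red candy, so the rule’s error rate is quite sensitive to n.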

This color distribution problem is structurally equivalent to the situation of throwing the cuboid die. Thus, modelling the problem situation of the candy bags seems to require the frequentistic approach to probability: a theoretical model cannot be found convincingly without a sufficiently large sample of real data, and data are needed again to validate the theoretical model and its implications.

Compared with the Cliffhanger Problem, the situation of the candy bags is much less artificial. Although this problem involves modelling real data, it nevertheless poses a virtual problem of the real world: in fact, the color distribution in candy bags is not a real problem for students’ lives or for society. Nevertheless, from a pedagogical point of view, such data-based problem situations represent situated learning (1997 1990). They are also worthwhile because they are anchored in the real world of students’ daily lives and provoke students’ activity. Activity-based methods have been recognized as a pedagogically extremely valuable approach to teaching statistical concepts (e.g. Eichler and Vogel 2009; Wild and Pfannkuch 1999). In this way, such a data-based approach counters learning scenarios for probability which Greer and Mukhopadhyay (2005, p. 314) criticized as consisting of “exposition and routine application of a set of formulas to stereotyped problems”.

5.3 A Real World Problem Situation Concerning the Subjectivist Approach

The characteristics of the rapid test are objective frequentistic probabilities that should be based on empirical studies. However, modelling the given situation requires a subjective perspective. From this perspective, the relevant question concerns the probability of being infected with HIV given a positive test result. This situation involves no randomness, since a specific person is either infected or not infected. If this person has no information about his or her infection status, he or she might hold an individual degree of belief, i.e. a subjective probability, of being infected or not. This subjective probability might be based on an official statement of the prevalence in a specific country; e.g. the prevalence in Western European countries is about 0.1 %. However, this subjective probability could be higher (or lower) if the person takes his or her individual environment into account. The test characteristics are processed with Bayes’ theorem, using the base rate of 0.1 % as the “a priori” probability \(P(I) = 0.001\): \(P(I\mid +) = \frac{P(+\mid I)\,P(I)}{P(+\mid I)\,P(I) + P(+\mid \bar{I})\,P(\bar{I})}\).

Although a positive test result raises the individual degree of belief in being infected (the “a posteriori” probability exceeds the “a priori” probability), the probability of actually being infected given a positive test result remains very low. This is not because the test is bad, but because it is applied with a tiny base rate. A higher base rate, i.e. a higher subjective “a priori” probability, yields fundamentally different results. For example, a base rate of 25 %, which is an existing estimate of prevalence in some countries (www.unaids.com), results in \(P(I\mid +) \approx 0.943\).
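The Bayesian update can be written as a small Python function. The sensitivity and specificity below are hypothetical placeholders, since the rapid test’s actual characteristics are not reproduced in this text; the sketch only illustrates how strongly the “a posteriori” probability depends on the “a priori” base rate.

```python
def posterior_infected(prior, sensitivity, specificity):
    """P(I | +) by Bayes' theorem.

    prior       -- "a priori" probability P(I), e.g. a base rate
    sensitivity -- P(+ | I)
    specificity -- P(- | not I), so P(+ | not I) = 1 - specificity
    """
    true_positive = sensitivity * prior
    false_positive = (1 - specificity) * (1 - prior)
    return true_positive / (true_positive + false_positive)


# HYPOTHETICAL test characteristics; the rapid test's actual values are not given here.
SENSITIVITY, SPECIFICITY = 0.99, 0.98

for base_rate in (0.001, 0.25):
    p = posterior_infected(base_rate, SENSITIVITY, SPECIFICITY)
    print(f"a priori {base_rate:.3f} -> a posteriori {p:.3f}")
```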

The structure of this real world problem is similar to the die game discussed in Sect. 4.3: a model “a priori” (looking ahead) is revised by information (looking back), resulting in a model “a posteriori” (looking ahead). The difference between the rapid test and the die game is that the die can produce further information that is independent of the information obtained earlier. By contrast, repeating the rapid test is not an appropriate way to gain more than a single piece of information, since the results of repeated tests cannot reasonably be modelled as independent.

In contrast to the two previous problem situations, the HIV task comprises a real world problem that is relevant to society (equivalent problem situations are breast cancer and mammography, BSE, or even doping). The problem situation shows the impact of the base rate on decision making using the subjectivistic approach to probability. On the one hand, such a problem situation illustrates that elementary stochastics provides methods to reconstruct problems that are relevant to life or society. On the other hand, it meets the demands of situated learning as well as anchored instruction (see above). Thus, the HIV infection problem provides an authentic and interesting real world problem situation aimed at students’ comprehension of the subjectivistic approach to probability.

6 The Role of Simulations

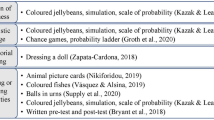

Reflecting on the different kinds of randomness in the problem situations described above, we see that simulations can play different roles within the underlying modelling activities. In line with, e.g., Girard (1994); Coutinho (Scheaffer et al.); Zimmermann (1997); Engel (Rossman); Biehler (1997); Batanero et al. (2005, 1997); Pfannkuch (2001); Eichler and Vogel (2002, 2002); Biehler and Prömmel (2003), we see possible advantages in a simulation-based approach to modelling situations with randomness, although possible disadvantages must also be kept in mind (e.g. 2005a 2005b). In the problem situations discussed above, we identify three different roles, i.e. simulation as a tool to:

1. Explore a model that already exists,
2. Develop an unknown model approximately, and
3. Represent data generation.

We briefly discuss these three roles of simulation, including aspects of what Mishra and Koehler (2006) call technological pedagogical content knowledge (TPCK) (with regard to probabilities, see also 2009 2011; 2011 Countinho; 2001 2006).

6.1 Simulation as a Tool to Explore an Existing Model

In all the problem situations we have discussed, simulation can yield relevant insights into the process of data generation within a model (Heitele 1975). We emphasized this aspect when discussing the ordinary die: simulation can illustrate the relative frequencies that may result under the underlying model. In particular, simulation can yield (qualitative or quantitative) insight into the \(\frac{1}{\sqrt{n}}\)-law as well as into the empirical law of large numbers and, thus, foster “a deeper understandings of how theoretical probability […] can be used to make inferences” (Stohl and Tarr 2002, p. 322).
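The \(\frac{1}{\sqrt{n}}\)-law can be made visible with a short simulation of the ordinary die. In the Python sketch below (function names, number of repetitions and seed are our own choices), many series of n throws are simulated, and the spread of the relative frequency of sixes is compared with \(\sqrt{p(1-p)/n}\) for p = 1/6. Quadrupling n should roughly halve the spread.

```python
import random
import statistics


def relative_frequency_of_six(n, rng):
    """Relative frequency of sixes in n throws of a fair die."""
    return sum(rng.randint(1, 6) == 6 for _ in range(n)) / n


def spread_of_relative_frequencies(n, repetitions=500, seed=3):
    """Standard deviation of the relative frequency across repeated series of n throws."""
    rng = random.Random(seed)
    freqs = [relative_frequency_of_six(n, rng) for _ in range(repetitions)]
    return statistics.pstdev(freqs)


for n in (25, 100, 400):
    observed = spread_of_relative_frequencies(n)
    theoretical = (1 / 6 * 5 / 6 / n) ** 0.5  # sqrt(p(1-p)/n), shrinks like 1/sqrt(n)
    print(f"n={n:4d}  observed spread={observed:.4f}  sqrt(p(1-p)/n)={theoretical:.4f}")
```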

Simulations based on a well-known model (the ordinary die) can support students when they have to make the transfer from estimating future frequencies based on such a well-known model to estimating a frequentistic probability from the relative frequencies in a (large) data sample. In this way, the theoretical world of probability and the empirical world of data can be convincingly connected. In our opinion, this is one of the central pedagogical benefits of simulations: they allow students to bridge these two worlds (cf. Biehler 1991; Pfannkuch 2005; Stohl and Tarr 2002).

6.2 Simulation as a Tool to Explore an Unknown Model

In the virtual problem situation of the Cliffhanger, computer-based simulations producing virtual empirical data can support students by confirming their theoretical considerations before, during or after the modelling process. Further, simulation can be used to explore the distribution of red candies even if the (theoretical) binomial distribution is unknown. In general, simulations can help build a model in which irrelevant features are disregarded and the mathematical phenomenon is condensed in time and made available for students to work on (Eichler and Vogel 2011). Thus, formal mathematics can be reduced to a minimum, allowing students to explore underlying concepts and to experiment with varying set-ups. In this regard, the didactical role of simulations, which in the sense of Coutinho (2001) can be considered pseudo-concrete, is to introduce a theoretical model to the students implicitly, even when mathematical formalization is not possible (cf. Vogel and Eichler 2011). Simulations are at the same time physical and algorithmic models of reality, since they allow intuitive work with the relevant model that facilitates later mathematical formalization (cf. Batanero et al. 2005b). Moreover, understanding of methods of inferential statistics can be facilitated by simulating a virtual but empirical world of data (cf. Engel and Vogel 2004).

6.3 Simulation as a Tool for Visualizing Data Generation

In the two previous sections, we described simulation as a tool to facilitate and enhance students’ learning of objective probabilities. For the subjectivistic approach to probability, however, the benefit of simulation seems to be smaller than for objective probabilities. For instance, for the HIV infection problem, a simulation conducted with software such as Excel, Fathom, GeoGebra or Nspire CAS, or even with professional software such as R, yields frequencies for an arbitrary number of tested persons. However, these simulation results seem to offer no advantage over graphical visualizations such as the tree with absolute frequencies (Henry 1997). By contrast, visualizing the process of data generation by simulation might facilitate comprehension of a problem’s structure concerning the subjectivistic approach (e.g. the HIV infection problem).
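For comparison, the tree with absolute frequencies can be computed directly, without any simulation. The Python sketch below uses a hypothetical population of 100 000 tested persons, the base rate of 0.1 % from the HIV example, and hypothetical test characteristics (sensitivity 0.99, specificity 0.98), since the rapid test’s actual values are not given here.

```python
def natural_frequency_tree(population, base_rate, sensitivity, specificity):
    """Expected absolute frequencies in a tree: population -> status -> test result."""
    infected = population * base_rate
    healthy = population - infected
    return {
        "infected, positive": infected * sensitivity,
        "infected, negative": infected * (1 - sensitivity),
        "healthy, positive": healthy * (1 - specificity),
        "healthy, negative": healthy * specificity,
    }


# HYPOTHETICAL values: 100 000 tested persons, base rate 0.1 %, assumed test characteristics
tree = natural_frequency_tree(100_000, 0.001, 0.99, 0.98)
for branch, count in tree.items():
    print(f"{branch:20s} {count:10.1f}")
positives = tree["infected, positive"] + tree["healthy, positive"]
print("P(I | +) =", round(tree["infected, positive"] / positives, 3))
```

Reading off the two “positive” branches makes it visible why most positive results come from persons who are not infected when the base rate is tiny.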

Educational software such as TinkerPlots is suitable for visualizing the process of data generation. In the box on the left side, the population according to the base rate is represented. In the middle, the characteristics of a diagnostic test are shown. On the right side, the results are represented in a two-by-two table (the test characteristics concern mammography and breast cancer; Batanero et al. 2005b). The advantage of such a simulation lies in running the process of data generation before the learner’s eyes. With regard to recent and current research on multimedia-supported learning environments, there is considerable empirical evidence (e.g. Pfannkuch 2005; Wassner and Martignon 2002; Hoffrage 2003; Salomon 1993) that such environments can successfully support learners in building adequate mental models of the problem domain, here in learning about conditional probabilities.
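The three stages described above (population according to the base rate, diagnostic test, two-by-two table) can be imitated in plain Python as a rough stand-in for such a TinkerPlots model; it does not reproduce TinkerPlots itself. All parameters below (10 000 persons, base rate 1 %, sensitivity and specificity 0.9) are hypothetical and chosen only for illustration.

```python
import random
from collections import Counter


def simulate_screening(n_persons, base_rate, sensitivity, specificity, seed=7):
    """Generate one (status, test result) pair per person and tally a 2x2 table."""
    rng = random.Random(seed)
    table = Counter()
    for _ in range(n_persons):
        ill = rng.random() < base_rate  # left box: population according to the base rate
        positive = rng.random() < (sensitivity if ill else 1 - specificity)  # middle: test
        table[(ill, positive)] += 1  # right: two-by-two table
    return table


# HYPOTHETICAL parameters, chosen only for illustration
table = simulate_screening(10_000, base_rate=0.01, sensitivity=0.9, specificity=0.9)
print("            test +   test -")
for ill in (True, False):
    label = "ill" if ill else "not ill"
    print(f"{label:10s} {table[(ill, True)]:7d} {table[(ill, False)]:8d}")
```

Generating the records one person at a time mirrors the idea of watching data generation happen, whereas the natural-frequency tree gives only the expected counts.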

7 Conclusion

There are three approaches to modelling situations with randomness: the classical approach, the frequentistic approach and the subjectivistic approach. Taking a purely educational perspective, we asked what teachers must know about the underlying concept of probability. To answer this question, we first briefly proposed the essentials of the basic content knowledge teachers should have and contrasted this proposal with empirical findings of existing research on teachers’ knowledge and beliefs about approaches to probability. On this basis, we developed a normative proposal for teaching the three approaches to probability: first, we outlined our normative focus on teachers’ potential pedagogical content knowledge (PCK) concerning the construct of probability, and second, we elaborated the underlying ideas by means of three different classroom activities involving throwing dice that illustrate the three approaches mentioned above. In doing so, we adopted a modelling perspective which allows the different approaches to probability to be interpreted as different ways of modelling a problem situation involving uncertainty.

This modelling perspective also makes it possible to leave the world of gambling and to apply different approaches to probability in problem situations that can be located on a scale between a virtual world on the one side and the real world on the other. Finally, we focused on technological pedagogical content knowledge (TPCK). By discussing how technology, and simulation in particular, can enhance students’ understanding of probabilities, we distinguished three different roles that simulations can play. Computer-based simulations in particular are considered worthwhile because of their power to produce new data which can be interpreted as empirical realizations of a random process. However, using digital tools to model situations with randomness raises new research questions.

References

Ball, D. L., Thames, M. H., & Phelps, G. (2008). Content knowledge for teaching. Journal of Teacher Education, 59(5), 389–407.

Batanero, C., & Sanchez, E. (2005). What is the nature of high school students’ conceptions and misconceptions about probability? In G. A. Jones (Ed.), Exploring probability in school: challenges for teaching and learning (pp. 241–266). New York: Springer.

Batanero, C., Henry, M., & Parzysz, B. (2005a). The nature of chance and probability. In G. A. Jones (Ed.), Exploring probability in school: challenges for teaching and learning (pp. 15–37). New York: Springer.

Batanero, C., Biehler, R., Maxara, C., Engel, J., & Vogel, M. (2005b). Using simulation to bridge teachers’ content and pedagogical knowledge in probability. Paper presented at the 15th ICMI study conference: the professional education and development of teachers of mathematics. Aguas de Lindoia, Brazil.

Begg, A., & Edwards, R. (1999). Teachers’ ideas about teaching statistics. In Proceedings of the 1999 combined conference of the Australian association for research in education and the New Zealand association for research in education. Melbourne: AARE & NZARE. Online: www.aare.edu.au/99pap/.

Biehler, R. (1991). Computers in probability education. In R. Kapadia & M. Borovcnik (Eds.), Chance encounters. Probability in education (pp. 169–211). Amsterdam: Kluwer Academic.

Biehler, R. (2003). Simulation als systematischer Strang im Stochastikcurriculum [Simulation as systematic strand in stochastics teaching]. In Beiträge zum Mathematikunterricht (pp. 109–112).

Biehler, R., & Prömmel, A. (2011). Mit Simulationen zum Wahrscheinlichkeitsbegriff [With simulations towards the concept of probability]. PM. Praxis der Mathematik in der Schule, 53(39), 14–18.

Blum, W. (2002). ICMI-study 14: applications and modelling in mathematics education—discussion document. Educational Studies in Mathematics, 51, 149–171.

Borovcnik, M. (1992). Stochastik im Wechselspiel von Intuitionen und Mathematik [Stochastics in the interplay between intuitions and mathematics]. Mannheim: BI-Wissenschaftsverlag.

Borovcnik, M. (2005). Probabilistic and statistical thinking. In Proceedings of the fourth congress of the European society for research in mathematics education, Sant Feliu de Guíxols, Spain (pp. 485–506).

Box, G., & Draper, N. (1987). Empirical model-building and response surfaces. New York: Wiley.

Brown, J. S., Collins, A., & Duguid, P. (1989). Situated cognition and the culture of learning. Educational Researcher, 18(1), 32–42.

Burrill, G., & Biehler, R. (2011). Fundamental statistical ideas in the school curriculum and in teacher training. In C. Batanero, G. Burrill, & C. Reading (Eds.), Teaching statistics in school mathematics—challenges for teaching and teacher education (pp. 57–70). New York: Springer.

Broers, N. J. (2006). Learning goals: the primacy of statistical knowledge. In A. Rossman & B. Chance (Eds.), Proceedings of the seventh international conference on teaching statistics, Salvador: International Statistical Institute and International Association for Statistical Education. Online: www.stat.auckland.ac.nz/~iase/publications.

Carnell, L. J. (1997). Characteristics of reasoning about conditional probability (preservice teachers). Unpublished doctoral dissertation, University of North Carolina-Greensboro.

Chaput, B., Girard, J.-C., & Henry, M. (2011). Frequentistic approach: modelling and simulation in statistics and probability teaching. In C. Batanero, G. Burrill, & C. Reading (Eds.), Teaching statistics in school mathematics—challenges for teaching and teacher education (pp. 85–96). New York: Springer.

Chernoff, E. J. (2008). The state of probability measurement in mathematics education: a first approximation. Philosophy of Mathematics Education Journal, 23.

Cognition and Technology Group at Vanderbilt (1990). Anchored instruction and its relationship to situated cognition. Educational Researcher, 19(6), 2–10.

Coutinho, C. (2001). Introduction aux situations aléatoires dès le Collège: de la modélisation à la simulation d’expériences de Bernoulli dans l’environnement informatique Cabri-géomètre-II [Introduction to random situations from lower secondary school onwards: from modelling to simulation of Bernoulli experiments in the computer environment Cabri-géomètre-II]. Unpublished doctoral dissertation, University of Grenoble, France.

De Finetti, B. (1974). Theory of probability. London: Wiley.

Dugdale, S. (2001). Pre-service teachers’ use of computer simulation to explore probability. Computers in the Schools, 17(1/2), 173–182.

Eichler, A. (2008a). Teachers’ classroom practice and students’ learning. In C. Batanero, G. Burrill, C. Reading, & A. Rossman (Eds.), Joint ICMI/IASE study: teaching statistics in school mathematics. Challenges for teaching and teacher education. Proceedings of the ICMI study 18 and 2008 IASE round table conference. Monterrey: ICMI and IASE. Online: www.stat.auckland.ac.nz/~iase/publications.

Eichler, A. (2008b). Statistics teaching in German secondary high schools. In C. Batanero, G. Burrill, C. Reading, & A. Rossman (Eds.), Joint ICMI/IASE study: teaching statistics in school mathematics. Challenges for teaching and teacher education. Proceedings of the ICMI study 18 and 2008 IASE round table conference. Monterrey: ICMI and IASE. Online: www.stat.auckland.ac.nz/~iase/publications.

Eichler, A. (2011). Statistics teachers and classroom practices. In C. Batanero, G. Burrill, & C. Reading (Eds.), Teaching statistics in school mathematics—challenges for teaching and teacher education (pp. 175–186). New York: Springer.

Eichler, A., & Vogel, M. (2009). Leitidee Daten und Zufall [Key idea of data and chance]. Wiesbaden: Vieweg + Teubner.

Eichler, A., & Vogel, M. (2011). Leitfaden Daten und Zufall [Compendium of data and chance]. Wiesbaden: Vieweg + Teubner.

Engel, J. (2002). Activity-based statistics, computer simulation and formal mathematics. In B. Phillips (Ed.), Developing a statistically literate society. Proceedings of the sixth international conference on teaching statistics (ICOTS6, July, 2002), Cape Town, South Africa.

Engel, J., & Vogel, M. (2004). Mathematical problem solving as modeling process. In W. Blum, P. L. Galbraith, H.-W. Henn, & M. Niss (Eds.), ICMI-study 14: application and modeling in mathematics education (pp. 83–88). New York: Springer.

Girard, J. C. (1997). Modélisation, simulation et expérience aléatoire [Modeling, simulation and random experience]. In Enseigner les probabilités au lycée (pp. 73–76). Reims: Commission Inter-IREM Statistique et Probabilités.

Greer, B., & Mukhopadhyay, S. (2005). Teaching and learning the mathematization of uncertainty: historical, cultural, social and political contexts. In G. A. Jones (Ed.), Exploring probability in school: challenges for teaching and learning (pp. 297–324). New York: Springer.

Heitele, D. (1975). An epistemological view on fundamental stochastic ideas. Educational Studies in Mathematics, 6(2), 187–205.

Henry, M. (1997). Notion de modèle et modélisation en l’enseignement [Notion of model and modelling in teaching]. In Enseigner les probabilités au lycée (pp. 77–84). Reims: Commission Inter-IREM.

Hoffrage, U. (2003). Risikokommunikation bei Brustkrebsfrüherkennung und Hormonersatztherapie [Risk communication in breast cancer screening and hormone replacement therapy]. Zeitschrift für Gesundheitspsychologie, 11(3), 76–86.

Jones, G. A., Langrall, C. W., & Mooney, E. S. (2007). Research in probability. In F. K. Lester (Ed.), Second handbook of research on mathematics teaching and learning (pp. 909–956). Charlotte: Information Age Publishing.

Kolmogoroff, A. (1933). Grundbegriffe der Wahrscheinlichkeitsrechnung [Fundamental terms of probability]. Berlin: Springer. (Reprint 1973).

Mandl, H., Gruber, H., & Renkl, A. (1997). Situiertes Lernen in multimedialen Lernumgebungen [Situated learning in multimedia learning environments]. In L. J. Issing & P. Klimsa (Eds.), Information und Lernen mit Multimedia (pp. 166–178). Weinheim: Psychologie Verlags Union.

Mishra, P., & Koehler, M. J. (2006). Technological pedagogical content knowledge: a framework for teacher knowledge. Teachers College Record, 108(6), 1017–1054.

Pfannkuch, M. (2005). Probability and statistical inference: how can teachers enable students to make the connection? In G. A. Jones (Ed.), Exploring probability in school: challenges for teaching and learning (pp. 267–294). New York: Springer.

Rényi, A. (1992). Calcul des probabilités [Probability calculus]. Paris: Jacques Gabay. (Trans: L. Félix. Original work published 1966).

Riemer, W. (1991). Stochastische Probleme aus elementarer Sicht [Stochastic problems from an elementary perspective]. Mannheim: BI-Wissenschaftsverlag.

Rossman, A. (1997). Workshop statistics. New York: Springer.

Salomon, G. (1993). Distributed cognitions: psychological and educational considerations. New York: Cambridge University Press.

Scheaffer, R., Gnanadesikan, M., Watkins, A., & Witmer, J. (1997). Activity-based statistics. New York: Springer.

Schneider, I. (1988). Die Entwicklung der Wahrscheinlichkeitstheorie von den Anfängen bis 1933 [Development of probability from beginnings to 1933]. Darmstadt: Wissenschaftliche Buchgesellschaft.

Schnotz, W. (2002). Enabling, facilitating, and inhibiting effects in learning from animated pictures. In R. Ploetzner (Ed.), Online-proceedings of the international workshop on dynamic visualizations and learning (pp. 1–9). Tübingen: Knowledge Media Research Center.

Schupp, H. (1982). Zum Verhältnis statistischer und wahrscheinlichkeitstheoretischer Komponenten im Stochastik-Unterricht der Sekundarstufe I [Concerning the relation between statistical and probabilistic components of middle school stochastics education]. Journal für Mathematik-Didaktik, 3(3/4), 207–226.

Schupp, H. (1988). Anwendungsorientierter Mathematikunterricht in der Sekundarstufe I zwischen Tradition und neuen Impulsen [Application-oriented mathematics lessons in the lower secondary between tradition and new impulses]. Der Mathematikunterricht, 34(6), 5–16.

Shaughnessy, M. (1992). Research in probability and statistics. In D. A. Grouws (Ed.), Handbook of research in mathematics teaching and learning (pp. 465–494). New York: Macmillan.

Sill, H.-D. (1993). Zum Zufallsbegriff in der stochastischen Allgemeinbildung [About the concepts of chance and randomness in statistics education]. ZDM. Zentralblatt für Didaktik der Mathematik, 2, 84–88.

Stachowiak, H. (1973). Allgemeine Modelltheorie [General model theory]. Berlin: Springer.

Steinbring, H. (1991). The theoretical nature of probability in the classroom. In R. Kapadia & M. Borovcnik (Eds.), Chance encounters. Probability in education (pp. 135–168). Amsterdam: Kluwer Academic.

Steinbring, H., & Strässer, R. (Eds.) (1981). Rezensionen von Stochastik-Lehrbüchern [Reviews of stochastic textbooks]. ZDM. Zentralblatt für Didaktik der Mathematik, 13, 236–286.

Stohl, H. (2005). Probability in teacher education and development. In G. A. Jones (Ed.), Exploring probability in school: challenges for teaching and learning (pp. 345–366). New York: Springer.

Stohl, H., & Tarr, J. E. (2002). Developing notions of inference using probability simulation tools. The Journal of Mathematical Behavior, 21, 319–337.

Vogel, M. (2006). Mathematisieren funktionaler Zusammenhänge mit multimediabasierter Supplantation [Mathematization of functional relations using multimedia supplantation]. Hildesheim: Franzbecker.

Vogel, M., & Eichler, A. (2011). Das kann doch kein Zufall sein! Wahrscheinlichkeitsmuster in Daten finden [It cannot be chance! Finding patterns of probability in data]. PM. Praxis der Mathematik in der Schule, 53(39), 2–8.

von Mises, R. (1952). Wahrscheinlichkeit, Statistik und Wahrheit [Probability, statistics and truth]. Wien: Springer.

Vosniadou, S. (1994). From cognitive theory to educational technology. In S. Vosniadou, E. De Corte, & H. Mandl (Eds.), Technology-based learning environments (pp. 11–18). Berlin: Springer.

Wassner, C., & Martignon, L. (2002). Teaching decision making and statistical thinking with natural frequencies. In B. Phillips (Ed.), Developing a statistically literate society. Proceedings of the sixth international conference on teaching statistics (ICOTS6, July, 2002). Cape Town, South Africa.

Wickmann, D. (1990). Bayes-Statistik [Bayesian statistics]. Mannheim: BI-Wissenschaftsverlag.

Wild, C., & Pfannkuch, M. (1999). Statistical thinking in empirical enquiry. International Statistical Review, 67(3), 223–248.

Zhang, J. (1997). The nature of external representations in problem solving. Cognitive Science, 21(2), 179–217.

Zimmermann, G. M. (2002). Students' reasoning about probability simulation during instruction. Unpublished doctoral dissertation, Illinois State University, Normal.

Copyright information

© 2014 Springer Science+Business Media Dordrecht

About this chapter

Cite this chapter

Eichler, A., Vogel, M. (2014). Three Approaches for Modelling Situations with Randomness. In: Chernoff, E., Sriraman, B. (eds) Probabilistic Thinking. Advances in Mathematics Education. Springer, Dordrecht. https://doi.org/10.1007/978-94-007-7155-0_4

DOI: https://doi.org/10.1007/978-94-007-7155-0_4

Publisher Name: Springer, Dordrecht

Print ISBN: 978-94-007-7154-3

Online ISBN: 978-94-007-7155-0

eBook Packages: Humanities, Social Sciences and Law; Education (R0)