Abstract

The concept of ‘information’ in five different realms – technological, physical, biological, social and philosophical – is briefly examined. The ‘gaps’ between these conceptions are discussed, and unifying frameworks of diverse nature, including those of Shannon/Wiener, Landauer, Stonier, Bates and Floridi, are examined. The value of attempting to bridge the gaps, while avoiding shallow analogies, is explained. With information physics gaining general acceptance, and biology gaining the status of an information science, it seems rational to look for links, relationships, analogies and even helpful metaphors between them and the library/information sciences. Prospects for doing so, involving concepts of complexity and emergence, are suggested.

It is hardly to be expected that a single concept of information would satisfactorily account for the numerous possible applications of this general field.

(Claude Shannon)

Information is information, not matter or energy.

(Norbert Wiener)

Shannon and Wiener and I

Have found it confusing to try

To measure sagacity

And channel capacity

By ∑ pᵢ log pᵢ.

(Anonymous, Behavioural Science, 1962, 7(July issue), p. 395)

Life, language, human beings, society, culture – all owe their existence to the intrinsic ability of matter and energy to process information.

(Seth Lloyd)

6.1 Introduction

‘Information’ is a notoriously slippery and multifaceted concept. Not only has the word had many different meanings over the years – its entry in the full Oxford English Dictionary of 2010, which shows its usage over time, runs to nearly 10,000 words – but it is used with different connotations in various domains. For overviews of the mutability and diversity of the information concept, see Belkin (1978), Machlup and Mansfield (1983), Qvortrup (1993), Bawden (2001), Capurro and Hjørland (2003), Gleick (2011), Ma (2012), and Bawden and Robinson (2012).

In this chapter, we will focus on usage in different domains and disciplines. As Capurro and Hjørland (2003: 356, 396) say: “almost every scientific discipline uses the concept of information within its own context and with regard to specific phenomena …. There are many concepts of information, and they are embedded in more or less explicit theoretical structures”. Our concern will be to examine these different concepts of information, and in particular the ‘gaps’ between them. By ‘gap’, we mean the discontinuities in understanding which make it difficult to understand whether the ‘information’ being spoken of in different contexts is in any way ‘the same thing’, or at least ‘the same sort of thing’; and if not, in what way – if any – the different meanings of information relate to one another. Given the current enthusiasm for ‘information physics’, exemplified by writings of Zurek, Vedral, Lloyd and others cited in Sect. 6.2.2, we place particular stress on the information concept in the physical sciences. We have also tried to emphasise the historical perspective of these ideas.

We will focus particularly on the implications of these considerations for the idea of information in the field of library/information science. Perhaps because information is at its centre, there has been particular debate about the issue in this discipline; see Belkin and Robertson (1976) for an early account and Cornelius (2002), Bates (2005), and the reviews cited above, for overviews of the on-going debate. A Delphi study carried out by Zins (2007) presents many definitions of information for information science, typically relating information to data and/or knowledge.

Indeed, it is the relationship between these concepts that is a constant concern, perhaps even an obsession, within the information sciences. This has led to two main classes of model (Bawden and Robinson 2012; Ma 2012). The first, based on Karl Popper’s ‘objective epistemology’, uses ‘knowledge’ to denote Popper’s ‘World 2’, the subjective knowledge within an individual person’s mind. ‘Information’ is used to denote communicable knowledge, recorded or directly exchanged between people; this is Popper’s ‘World 3’ of objective knowledge, necessarily encoded in a ‘World 1’ document, or physical communication. Information, in this model, is ‘knowledge in transit’. The second regards information and knowledge as the same kind of entity, with knowledge viewed as ‘refined’ information, set into some form of larger structure. This is typically presented as a linear progression, or a pyramid, from ‘data’, or ‘capta’ – data in which we are interested – through ‘information’ to ‘knowledge’, perhaps with ‘wisdom’ or ‘action’ at the far end of the spectrum or the apex of the pyramid; see, for example, Checkland and Holwell (1998), Frické (2009), Rowley (2011), and Ma (2012).

The debate on the nature of information within the information sciences, somewhat limited in scope, has been broadened by some wider visions, such as those of Buckland and of Bates, which will be discussed below. The purpose of this chapter is to attempt to widen perspectives still further; to attempt, in effect, to begin to answer John Wheeler’s question ‘What makes meaning?’, by considering conceptions of meaning-free and meaningful information, and the relations between them.

We begin with a brief consideration of the way in which information is viewed in several diverse domains.

6.2 Information in Various Domains

We will examine the concept of information in five domains, in each of which information has come to be regarded, at least by some, as a central concept: technological, physical, biological, social and philosophical. For reasons of space, the discussion must be cursory, and the reader is referred for more extensive treatments (at an accessible level in the case of the scientific perspective) to Gleick (2011), Greene (2011), Deutsch (2011), Floridi (2010a), Davies and Gregersen (2010), Vedral (2010, 2012), Lloyd (2006, 2010), von Baeyer (2004), Smolin (2000) and Leff and Rex (1990, 2002).

6.2.1 Information and Communication Technology

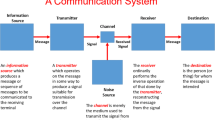

We begin with technology rather than the sciences, since the closest approach yet available to a universal formal account of information is ‘information theory’, originated by Claude Shannon and properly referred to as the Shannon-Weaver-Hartley theory in recognition of those who added to it and gave it its current form. Gleick (2011) gives a detailed account of these developments, which all took place at Bell Laboratories, and which focused on communication network engineering issues.

The initial steps were taken by Harry Nyquist (1924), who showed how to estimate the amount of information that could be transmitted in a channel of given bandwidth – in his case, the telegraph. His ideas were developed by Ralph Hartley (1928), who established a quantitative measure of information, so as to compare the transmission capacities of different systems. Hartley (1928: 535) emphasised that this measure was “based on physical as contrasted with psychological considerations”. The meaning of the messages was not to be considered; information was regarded as being communicated successfully when the receiver could distinguish between sets of symbols sent by the originator. His measure of information, understood in this way, was the logarithm of the number of possible symbol sequences. For a single selection, the associated information, H, is the logarithm of the number of symbols, s:

\( \mathrm{H}\ {=} \log \mathrm{s} \)
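As a minimal illustration, not drawn from Hartley’s paper, the measure can be computed directly. A sequence of n selections from s symbols admits sⁿ possibilities, so the logarithm of the number of sequences equals n log s. The function name `hartley_information` and the example of a 10-letter message over a 26-letter alphabet are hypothetical choices; base 2 gives bits, and base 10 gives the unit now called the hartley.

```python
import math

def hartley_information(n_symbols: int, n_selections: int = 1, base: float = 2) -> float:
    """Hartley's measure: the log of the number of possible sequences, s**n.
    Computed as n * log(s), which is equal and avoids forming s**n."""
    return n_selections * math.log(n_symbols, base)

# Hypothetical message: 10 letters drawn from a 26-letter alphabet.
print(hartley_information(26, 10))           # in bits (base 2)
print(hartley_information(26, 10, base=10))  # in hartleys (base 10)
```

Note that the measure depends only on how many distinguishable sequences there are, never on which sequence was sent or what it means.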

This in turn was generalised by Claude Shannon (1948) into a fuller theory of communication, which was later republished in book form (Shannon and Weaver 1949). This volume included a contribution by Warren Weaver that expounded the ideas in a non-mathematical and more wide-ranging manner. Weaver’s presentation arguably had greater influence in promoting information theory than any of its originators’ writings.

Following Nyquist and Hartley, Shannon defined the fundamental problem of communication as the accurate reproduction at one point of a message selected at another point. Meaning was to be ignored: as Weaver noted, “these semantic aspects of communication are irrelevant to the engineering problem” (Shannon and Weaver 1949: 3). The message in each case is one selected from the set of possible messages, and the system must cope with any selection. If the number of possible messages is finite, then the information associated with any message is a function of the number of possible messages.

Shannon derived his well-known formula for H, the measure of information:

\( \mathrm{H}\ {=} -\mathrm{K}\sum {{\mathrm{p}}_{\mathrm{i}}}\log {{\mathrm{p}}_{\mathrm{i}}} \)

where \( {{\mathrm{p}}_{\mathrm{i}}} \) is the probability of each symbol, and K is a constant defining the units. The minus sign is included to make the quantity of information, H, positive; this is necessary because a probability is a number no greater than 1, and the logarithm of a number less than 1 is negative.
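A short sketch may help to show how Shannon’s formula behaves. It assumes K = 1/ln 2, so that H is expressed in bits, and adopts the usual convention that symbols of zero probability contribute nothing. When all s symbols are equally likely, H reduces to Hartley’s log s; any bias lowers it, and a certain outcome gives zero. The function name and the example distributions are illustrative only.

```python
import math

def shannon_entropy(probabilities, K=1 / math.log(2)):
    """H = -K * sum(p_i * ln p_i). K sets the units; K = 1/ln 2 gives bits.
    Zero-probability terms are skipped (the convention 0 log 0 = 0)."""
    # -p ln p is written as p ln(1/p): mathematically identical, and it avoids
    # returning -0.0 for a distribution with a single certain outcome.
    return K * sum(p * math.log(1 / p) for p in probabilities if p > 0)

print(shannon_entropy([0.25] * 4))  # four equally likely symbols: Hartley's log2 4
print(shannon_entropy([0.9, 0.1]))  # a biased two-symbol source: lower
print(shannon_entropy([1.0]))       # a certain outcome: zero
```

The uniform case makes concrete the sense in which Shannon’s measure generalises Hartley’s: the two agree exactly when every symbol is equally probable.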

Shannon pointed out that formulae of the general form \( \mathrm{H}\ {=} -\sum {{\mathrm{p}}_{\mathrm{i}}}\log {{\mathrm{p}}_{\mathrm{i}}} \) appear very often in information theory as measures of information, choice, and uncertainty; the three concepts seem almost synonymous for his purposes. Shannon then gave the name ‘entropy’ to his quantity H, since the form of its equation was that of entropy as defined in thermodynamics. It is usually said that the idea of using this name was suggested to him by John von Neumann. The original source for this story seems to be Myron Tribus who, citing a private discussion between himself and Shannon in Cambridge, Massachusetts, on March 30th 1961, gives the following account:

When Shannon discovered this function he was faced with the need to name it, for it occurred quite often in the theory of communication he was developing. He considered naming it ‘information’ but felt that this word had unfortunate popular interpretations that would interfere with his intended uses of it in his new theory. He was inclined towards naming it ‘uncertainty’, and discussed the matter with John Von Neumann. Von Neumann suggested that the function ought to be called ‘entropy’ since it was already in use in some treatises on statistical thermodynamics. Von Neumann, Shannon reports, suggested that there were two good reasons for calling the function ‘entropy’. ‘It is already in use under that name’, he is reported to have said, ‘and besides, it will give you a great edge in debates because nobody really knows what entropy is anyway’. Shannon called his function ‘entropy’ and used it as a measure of ‘uncertainty’, interchanging between the two words in his writings without discrimination (Tribus 1964: 354).

Whatever the truth of this, Shannon’s equating of information to entropy was controversial from the first. Specialists in thermodynamics, in particular, suggested that ‘uncertainty’, ‘spread’, or ‘dispersion’ were better terms, without the implications of ‘entropy’ (see, for example, Denbigh 1981). A particularly caustic view is expressed by Müller (2007: 124, 126): “No doubt Shannon and von Neumann thought that this was funny joke, but it is not – it merely exposes Shannon and von Neumann as intellectual snobs…. If von Neumann had a problem with entropy, he had no right to compound that problem for others … by suggesting that entropy has anything to do with information … [Entropy] is nothing by itself. It has to be seen and discussed in conjunction with temperature and heat, and energy and work. And, if there is to be an extrapolation of entropy to a foreign field, it must be accompanied by the appropriate extrapolations of temperature and heat and work”. This reminds us that, when we see later that there have been criticisms of the use of objective measures of information in the library/information sciences, these have been matched by criticisms regarding the arguably uncritical use of information concepts in the sciences.

Shannon’s was not the only attempt to derive a mathematical theory of information, based on ideas of probability and uncertainty. The British statistician R.A. Fisher derived such a measure, as did the American mathematician Norbert Wiener, the originator of cybernetics. The latter seems to have been irritated that the credit for the development was given mainly to Shannon; less than 10 years later, he was referring to “the Shannon-Wiener definition of quantity of information” and insisting that “it belongs to the two of us equally” (Wiener 1956: 63). His mathematical formalism was the same as Shannon’s but, significantly, he treated information as the negative of physical entropy, associating it with structure and order, the opposite of Shannon’s equating of information with entropy and disorder:

The notion of the amount of information attaches itself very naturally to a classical notion in statistical mechanics: that of entropy. Just as the amount of information in a system is a measure of its degree of organization, so the entropy of a system is a measure of its degree of disorganization; and the one is simply the negative of the other (Wiener 1948: 18).

Shannon’s information is, in effect, the opposite of Wiener’s, which has caused confusion ever since for those who seek to understand the meaning of the mathematics, as Qvortrup (1993) makes plain.

In Shannon’s sense, information, like physical entropy, is associated with lack of order. A set of index cards, ordered alphabetically, has low entropy, and little information; if we know the order of the alphabet, we know all there is to know about the ordering of the cards, and we can explain it to someone very briefly. If they are disordered, however, they contain, in Shannon’s sense, much more information, since we would need a much more lengthy statement to describe their arrangement.
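The index-card example can be given a rough numerical form, under the simplifying assumption that every arrangement of the cards is equally likely. Picking out one of n! orderings then requires log2(n!) bits, whereas if the cards are known to be in alphabetical order only one arrangement is possible, and the figure is zero. The drawer of 52 cards and the function name are hypothetical.

```python
import math

def bits_to_specify_ordering(n_cards: int) -> float:
    """log2(n!): bits needed to pick out one arrangement of n_cards when all
    n! orderings are assumed equally likely (Shannon's H with p_i = 1/n!)."""
    # math.lgamma(n + 1) equals ln(n!), so the huge integer n! is never built
    return math.lgamma(n_cards + 1) / math.log(2)

disordered = bits_to_specify_ordering(52)  # hypothetical drawer of 52 shuffled cards
ordered = math.log2(1)                     # known alphabetical order: one possibility
print(f"{disordered:.1f} bits versus {ordered:.1f} bits")
```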

By contrast, there is a long-standing idea that information should be associated with order and pattern, rather than its opposite; in essence, this view follows Wiener’s conception. Even Warren Weaver, arguing in support of Shannon, wrote that “the concept of information developed in this theory at first seems disappointing and bizarre – disappointing because it has nothing to do with meaning, and bizarre …. in these statistical terms the two words information and uncertainty find themselves to be partners” (Shannon and Weaver 1949: 116). Leon Brillouin, who pioneered the introduction of Shannon’s ideas into the sciences, in effect took Wiener’s stance, renaming Shannon’s entropy formulation as ‘negentropy’ (Brillouin 1962). As we shall see later, Tom Stonier took the same approach, proposing a framework for a unified understanding of information in various domains.

Marcia Bates (2005) noted that the idea of ‘information as pattern/organisation’ was ‘endemic’ during the 1970s, and identified Parker (1974: 10) as the first to state explicitly in a library/information context that “information is the pattern or organization of matter and energy”. While this concept has gained some popularity, it is by no means universally accepted: Birger Hjørland (2008) speaks for those who doubt it, saying that such patterns are nothing more than patterns until they inform somebody about something. Reading (2011) exemplifies those who take a middle course, positing that such patterns are information, but ‘meaningless information’, in contrast to the ‘meaningful information’ encountered in social, and, arguably, in biological, systems.

We now consider how these ideas were applied to bring information as an entity into the physical sciences.

6.2.2 Information Physics

The idea of information as a feature of the physical world arose through studies of the thermodynamic property known as entropy. Usually understood as a measure of the disorder of a physical system, entropy has also come to be associated with the extent of our knowledge of it; the more disordered a system, the less detailed knowledge we have of where its components are or what they are doing. This idea was formalised by Zurek (1989), building on earlier insights of scientists such as Ludwig Boltzmann and Leo Szilard, who introduced information as a fundamental concept in science, although they did not name it as such.

Boltzmann related the entropy of gases to their degree of disorder, measured in probability terms, showing that entropy was related to the probability of collisions between gas particles with different velocities. Hence the entropy, S, could be expressed in terms of the probability distribution of the states of a system, by the formula

\( \mathrm{S}\ {=}\ \mathrm{k}\log \mathrm{W} \)

where k is Boltzmann’s constant, and W is a measure of the number of states of a system; i.e., the ways that molecules can be arranged, given a known total energy. This equation is certainly reminiscent of later information theory formalisms, but – although it is carved on his tombstone in the Vienna cemetery (as S = k log W; modern texts often write Ω in place of W) – Boltzmann never wrote it in this form, which is due to Max Planck (Atkins 2007). To suggest, as does von Baeyer (2003: 98), that “by identifying entropy with missing information, Boltzmann hurled the concept of information into the realm of physics” seems to be anachronistic, as well as over-dramatic.
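A toy calculation, assuming a hypothetical system of 100 two-state particles, shows how S = k log W grows with the number of arrangements W consistent with a macrostate (natural logarithm, SI value of k). The last printed column re-expresses log W in bits; that conversion is a later, information-theoretic reading of the formula, of the kind the text cautions against attributing to Boltzmann himself.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact value in the 2019 SI)

def microstates(n_particles: int, n_up: int) -> int:
    """W for a hypothetical toy macrostate: n_up of n_particles two-state
    particles are 'up'; W counts the arrangements consistent with that."""
    return math.comb(n_particles, n_up)

def boltzmann_entropy(w: int) -> float:
    """S = k log W, using the natural logarithm; result in J/K."""
    return K_B * math.log(w)

for n_up in (0, 25, 50):
    w = microstates(100, n_up)
    s = boltzmann_entropy(w)
    bits = math.log2(w)  # log W re-expressed in bits, i.e. S / (k ln 2)
    print(f"n_up={n_up:3d}  W={w:.3e}  S={s:.3e} J/K  log2 W={bits:.1f} bits")
```

The perfectly ordered macrostate (all particles ‘down’) has a single arrangement and zero entropy; the evenly mixed one has the most arrangements and the highest entropy.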

Szilard (1929) analysed the well-worked thermodynamic problem of ‘Maxwell’s Demon’ (Leff and Rex 1990, 2002), in what was subsequently assessed as “the earliest known paper in the field of information theory” (Hargittai 2006: 46), though information is again not specifically mentioned. As Szilard himself later recalled:

… I wrote a little paper which was on a rather closely related subject [to a paper on the second law of thermodynamics]. It dealt with the problem of what is essential in the operations of the so-called Maxwell’s Demon, who guesses right and then does something, and by guessing right and doing something he can violate the second law of thermodynamics. This paper was a radical departure in thinking, because I said that the essential thing here is that the demon utilizes information – to be precise, information which is not really in his possession until he guesses it. I said that there is a relationship between information and entropy, and I computed what that relationship was. No one paid any attention to this paper until, after the war, information theory became fashionable. Then the paper was rediscovered. Now this old paper, to which for over 35 years nobody paid any attention, is a cornerstone of modern information theory (Weart and Szilard 1978: 11).

True information physics began decades later when the ideas of information theory were introduced into science, by pioneers such as Leon Brillouin (1962). In essence, this amounted to recognising a formal mathematical link between entropy and information, when information is defined in the way required by Shannon’s theory (although it should be noted that it was Wiener’s interpretation that was generally adopted) or, indeed, by other formalisms for defining information in objective and quantitative terms, such as Fisher information (Frieden 1999), a quantitative measure of information used most often in statistical analysis.

Subsequent analysis of the relation between information and physical entropy led Landauer (1991) to propose his well-known aphorism ‘information is physical’. Information must always be instantiated in some physical system; that is to say, in some kind of document, in the broadest sense. Information is subject to physical laws, and these laws can, in turn, be cast in information terms. The physical nature of information, and, in particular, its relation to entropy, continues to arouse debate; for early discussions, see Avramescu (1980) and Shaw and Davis (1983), and for recent contributions, see Duncan and Semura (2007) and Karnani, Pääkkönen, and Annila (2009).
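One standard quantitative expression of Landauer’s position, given here as background rather than taken from the sources cited, is his principle that erasing one bit of information must dissipate at least kT ln 2 of heat, where T is the temperature. The same factor, k ln 2 of entropy per bit, is the relationship Szilard computed for the demon. The sketch simply evaluates the bound; the function name and the choice of 300 K are illustrative assumptions.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K

def landauer_limit(temperature_k: float) -> float:
    """Minimum heat, in joules, dissipated when one bit is erased at
    temperature_k kelvin: k * T * ln 2 (Landauer's principle)."""
    return K_B * temperature_k * math.log(2)

e_bit = landauer_limit(300.0)  # assumed: roughly room temperature
print(f"{e_bit:.3e} J per erased bit")
```

The bound scales linearly with temperature, which is one concrete sense in which information processing is subject to physical law.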

The idea of information as a fundamental physical entity has received increasing attention in recent decades, inspired particularly by an association of information with complexity; see Zurek (1990) for papers from a seminal meeting which effectively launched this approach. Information has been proposed as a fundamental aspect of the physical universe, on a par with – or even more fundamental than – matter and energy. The American physicist John Wheeler is generally recognised as the originator of this approach, stemming from his focus on the foundations of physics, leading him to formulate what he termed his ‘Really Big Questions’, such as ‘How come existence?’ and ‘Why the quantum?’. Two of his questions involved information and meaning. In asking ‘It from bit?’, Wheeler queried whether information was a concept playing a significant role at the foundations of physics; whether it was a fundamental physical entity akin to, say, energy. Indeed, he divided his own intellectual career into three phases: from a starting belief that ‘Everything is particles’, he moved through a view that ‘Everything is fields’, to finally conclude that ‘Everything is information’, focusing on the idea that logic and information form the bedrock of physical theory (MacPherson 2008). In asking ‘What makes meaning?’, he invoked the idea of a ‘participatory universe’, in which conscious beings may play an active role in determining the nature of the physical universe. Wheeler’s views are surveyed, critiqued, and extended in papers in Barrow et al. (2004).

Other well-known contributors to the information physics approach are: Lee Smolin (2000), who has suggested that the idea of space itself may be replaceable by a ‘network of relations’ or a ‘web of information’; Seth Lloyd (2006, 2010), who argues that ‘the universe computes’ (specifically in the form of a quantum computer); and David Deutsch, who proposes that information flow determines the nature of everything that is. “The physical world is a multiverse”, writes Deutsch (2011: 304), “and its structure is determined by how information flows in it. In many regions of the multiverse, information flows in quasi-autonomous streams called histories, one of which we call our universe”. ‘Information flow’, in this account, may be (simplistically) regarded as what changes occur in what order. Finally, having mentioned the multiverse, we should note that the increasingly influential ‘many worlds’ interpretation of quantum mechanics is inextricably linked with information concepts (Byrne 2010; Saunders et al. 2010; Wallace 2012).

‘Information’ in the physical realm is invariably defined in an objective, meaning-free way. However, it has come to be realised that information content, as assessed by any of these formalisms, is not an intuitively sensible measure: by Shannon’s measure, for example, randomness gives the highest information content. Interest has focused on ideas of complexity, and on the idea that it is from an interaction of order and randomness that complex systems, embodying ‘interesting’ information, emerge. This has led to alternative measures of complexity and order (Gell-Mann and Lloyd 1998; Lloyd 2001, 2006). Examples, with very informal explanations, are: algorithmic information content (related to the length of the shortest algorithm which recreates the state; ordered systems need only short algorithms); logical depth (related to the running time of the simplest algorithm which recreates the state); and thermodynamic depth (related to the number of possible ways that a system may arrive at its present state; ‘deep’ systems are hard to create). These offer the promise of quantifying physical information in ways which, by contrast with the Shannon formalism, account for emergent properties, and lead to ‘interesting’ informational structures of potential relevance to biological and social domains, as well as providing powerful tools for explaining the physical world; for popular accounts see Gell-Mann (1995) and Barrow (2007).
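Algorithmic information content cannot be computed exactly, but the length of a losslessly compressed string gives a practical upper bound on it. The sketch below substitutes zlib compression for the true measure; this is an assumption for illustration, not one of the formalisms cited, and the test strings are hypothetical. A regular pattern shrinks to a tiny fraction of its length, while pseudo-random bytes barely compress at all.

```python
import random
import zlib

def compressed_size(data: bytes) -> int:
    """Bytes needed for the zlib-compressed form of data: a crude, computable
    upper-bound proxy for algorithmic information content."""
    return len(zlib.compress(data, 9))

n = 10_000
ordered = b"ab" * (n // 2)                           # a short rule regenerates this
rng = random.Random(42)                              # fixed seed for repeatability
noisy = bytes(rng.getrandbits(8) for _ in range(n))  # no pattern zlib can exploit

print(len(ordered), compressed_size(ordered))
print(len(noisy), compressed_size(noisy))
```

Two caveats apply. Strictly, a seeded pseudo-random sequence has a short generating program, so its true algorithmic information content is small; compression length only bounds the measure from above and misses such hidden order. And neither extreme is ‘interesting’ in the sense used above: measures such as logical depth are intended to pick out structures lying between the two, which compression length alone cannot do.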

At about the same time, in the 1940s, as the groundwork for an information perspective on the physical sciences was being developed, the same was happening in biology, and it is to that domain we now turn.

6.2.3 Information Biology

In biology, the discovery of the genetic code and the statement of the so-called ‘central dogma’ of molecular biology – that information flows from DNA to proteins – have led to the ideas that information is a fundamental biological property, and that the ability to process information may be a characteristic of living things as fundamental as, or more fundamental than, metabolism, reproduction, and other signifiers of life. Dartnell (2007) describes this as the Darwinian definition: life as information transmission. For this reason, it is sometimes stated that biology is now an information science; see, for example, Baltimore (2002), Maynard Smith (2010), and Terzis and Arp (2011).

Concepts of information in the biology domain are varied, and we make no attempt to summarise a complex area. Information may manifest in many contexts: the transmission of genetic information through the DNA code, the transmission of neural information, and the many and varied forms of communication and signalling between living things are just three examples. One vexed, and undecided, question is at what stage ‘meaning’ can be said to appear; some authors argue that it is sensible to speak of the meaning of a segment of DNA, while others allege that meaning is an accompaniment of consciousness. And there are those who suggest that consciousness itself is explicable in information terms; see, for instance, Tononi’s (2008) ideas of consciousness as integrated information.

The analysis of living systems in information terms has been typically associated with a reductionist approach, with enthusiastic adoption of Shannon’s ‘meaning-free’ formulae to assess the information content of living things; see, for example, Gatlin (1972). An idea similar to Wiener’s conception of information as an opposite of entropy had been proposed at an early stage by the German physicist Erwin Schrödinger (1944), one of the pioneers of quantum mechanics, who had suggested that living organisms fed upon such negative entropy. Later, the idea of information as the opposite of entropy was popularised, under the name of ‘negentropy’, by Brillouin (1962), and was adopted by researchers in several areas of biology, including ecology; for examples, see Patten (1961), Kier (1980), and Jaffe (1984).
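In the spirit of such analyses, though not reproducing any particular author’s method, the sketch below computes the Shannon entropy of base frequencies in a short, purely illustrative DNA string; the function name and the sequence are assumptions. With four bases the maximum is 2 bits per base. Shuffling the sequence, which would destroy any biological function, leaves the value unchanged: a direct illustration of the ‘meaning-free’ character of the measure.

```python
import math
import random
from collections import Counter

def per_base_entropy(seq: str) -> float:
    """Shannon entropy of the base frequencies in seq, in bits per base.
    With an alphabet of four bases the maximum possible value is log2(4) = 2."""
    n = len(seq)
    # Sort the counts so the sum is taken in the same order for any permutation of seq.
    counts = sorted(Counter(seq).values())
    return -sum((c / n) * math.log2(c / n) for c in counts)

# Illustrative sequence only; any string over A, C, G, T would do.
seq = "ATGGCCATTGTAATGGGCCGCTGAAAGGGTGCCCGATAG"
shuffled = "".join(random.Random(1).sample(seq, len(seq)))

print(per_base_entropy(seq))       # depends only on how often each base occurs...
print(per_base_entropy(shuffled))  # ...so the shuffled string gives the same value
```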

However, such approaches, with their generally reductionist overtones, have not been particularly fruitful, leading some biologists to favour an approach focusing more on the emergence of complexity and, in various senses, meaning; see, for example, Hazen, Griffin, Carothers and Szostak (2007). Several authors have considered the ways in which information may both influence and be influenced by evolutionary processes, relating this to the evolution of exosomatic meaningful information in the human realm; see, for example, Goonatilake (1994), Madden (2004), Auletta (2011), and Reading (2011).

Meaningful information, though not yet accepted as a central concept in biology, is certainly so in the realm of human, social, communicable information, to which we now turn.

6.2.4 Social Information

The social, or human, conception of information is, of course, prominent in library/information science. As such, it is likely to be most familiar to this book’s readers, and, accordingly, this section is relatively short. But information is also a significant concept in other human-centred disciplines, including psychology, semiotics, communication studies, and sociology. While the exact conceptions and, to a degree, the terminology differ, all take a subjective and context-dependent view of information; one which is associated with knowledge and meaning. Information is regarded as something which is always and inevitably associated with human beings being informed about, and therefore knowing, something, and that information having a meaning to them. There are, of course, a variety of ways in which human-centred information may be conceptualised; some of these are discussed later in this chapter.

There have been attempts to bridge the gap between this conception of information and the scientific and technical perspective. A variety of means have been adopted to try to extend the kind of information theory pioneered by Shannon and by Wiener to deal with meaningful semantic information, and to develop mathematical models for information flow: see Dretske (1981) and Barwise and Seligman (1997) as examples, and see Cornelius (2002) and Floridi (2011a) for reviews. Some authors, such as Qvortrup (1993), have argued that the information theory formalisms in themselves are not as objective, external, and impersonal as suggested, but this view has not been generally accepted.

The ‘negentropy’ concept has been applied, some would argue unwisely, to such areas as economics, sociology, psychology, and theology. Müller (2007: 73), a scientist in the field of chemical thermodynamics, warns against “a lack of intellectual thoroughness in such extrapolations. Each one ought to be examined properly for mere shallow analogies”. The same is surely true for applications in the library/information sciences.

Finally, in this brief survey of information concepts in different domains, we consider philosophy. Although the sub-discipline of epistemology has studied the nature of knowledge for many centuries, information per se has not until recently been of major concern to philosophers.

6.2.5 Philosophy of Information

Before Luciano Floridi proposed his ‘philosophy of information’ in the late 1990s (as he recounts in Floridi 2010b), relatively few philosophers took any interest in information, at least in a way likely to be of value for library/information science; see Furner (2010) for an insightful overview. Knowledge, of course, is another matter; that has been studied for many centuries, as the subject matter of epistemology. The usual view in that context is that knowledge is to be understood as ‘justified, true belief’; that is to say, for something to count as knowledge, it must be believed by someone, for rational reasons, and it must be true. Information fits into epistemology in the form of testimony. This is a kind of evidence in which philosophers are becoming increasingly interested; see, for example, Audi (1997) and Adler (2010).

Apart from this, there have been a number of developments in philosophical thought which provide ways of viewing the relations between information and knowledge, and which offer insights different from those of the Popperian Three Worlds ‘objective knowledge’ model and the data-information-knowledge hierarchy, both of which were mentioned in Sect. 6.1, above. One is the work of philosophers such as Dretske (1981), who have attempted to extend Shannon theory into the area of semantic information. Another, and certainly the most ambitious to date, is that within Floridi’s ‘philosophy of information’, which will be discussed in detail later. We may also mention three other interesting ideas: David Deutsch’s (2011) concept of ‘explanatory knowledge’, which comprises our best rational explanations for the way the world is, with the understanding that such knowledge is inevitably fallible and imperfect, and our task is to improve it, not to justify it; Jonathan Kvanvig’s (2003) idea of knowledge as ‘understanding’, which allows for contradictions and inconsistencies; and Michael Polanyi’s (1962) ideas of ‘personal knowledge’ (somewhat similar to Popper’s World 2), which have been further developed within the context of library/information science; see, for example, Day (2005).

This concludes our cursory examination of information in different domains, and we now move to look specifically at the gaps between them.

6.3 Identifying the Gaps

We have noted the various ways in which the information concept can be used in five domains, and some of the attempts made to transfer concepts and formalisms between domains. We could add others, not least library/information science, but five is more than sufficient.

In principle, we could seek to describe the gap between the information concepts of each pair of domains, but a simpler and more sensible alternative is to hand. Consideration of the ways in which information is understood in the various domains leads us to two alternatives, both of which have been espoused in the literature.

The first is to consider a binary divide between those domains in which information is treated as something objective, quantitative, and mainly associated with data, and those in which it is treated as subjective, qualitative, and mainly associated with knowledge, meaning, and understanding. The former include physics and technology; the latter include the social realm. The biological treatment of information is ambiguous, lying somewhere between the two, though tending to the former the more information-centred the biological approach is, especially in the more reductive areas of genetics, genomics, and bioinformatics. The philosophical treatment depends on the philosopher; as we have seen, different philosophers and schools of philosophy take radically different views of the concept of information.

The second alternative is slightly more complex, and envisages a three-way demarcation, with the biological treatment of information occupying a distinct position between the other two extremes, physical and social.

Whichever of these alternatives is preferred, the basic question is the same: to what extent, if at all, are objective, quantitative, and ‘meaning-free’ notions of information ‘the same as’, emergent into, or at least in some way related to, subjective, qualitative, and ‘meaningful’ notions? This, we suggest, is in essence the same question as Wheeler framed when he asked ‘What makes meaning?’.

6.4 Bridging the Gaps

There have been a number of contributions to the literature suggesting, in general terms, that ‘gap bridging’ may be feasible and desirable, without giving any very definite suggestions as to how this may be done. One of the authors of this chapter has put forward a proposal of this vague nature, suggesting that information in human, biological, and physical realms is related through emergent properties in complex systems (Bawden 2007a, b). In this view, physical information is associated with pattern, biological information with meaning, and social information with understanding.

In an influential paper, Buckland (1991) distinguished three uses of the term ‘information’:

- Information-as-thing, where the information is associated with a document;
- Information-as-process, where the information is that which changes a person’s knowledge state;
- Information-as-knowledge, where the information is equated with the knowledge which it imparts.

From the information-as-thing viewpoint, information is regarded as physical and objective, or at least as being ‘contained within’ physical documents and essentially equivalent to them. The other two meanings treat information as abstract and intangible. Buckland gives arguments in favour of the information-as-thing approach, as being very directly relevant to information science, since it deals primarily with information in the form of documents. Information-as-process underlies theories of information behaviour which have a focus on the experience of individuals, such as those of Dervin and Kuhlthau (Bawden and Robinson 2012). Information-as-knowledge invokes the idea, well-trodden in the library/information area, as noted above, that information and knowledge are closely related. The exact relation, however, is not an obvious one. How is knowledge to be understood here? As a ‘refined’, summarised, and evaluated form of information? As a structured and contextualised form of information? Or as information embedded within an individual’s knowledge structure? These, and other, ideas all have their supporters.

We will now look at three approaches to this kind of gap bridging which offer more concrete proposals: those of Tom Stonier, Marcia Bates, and Luciano Floridi.

Stonier, in a series of three books, advanced a model of information as an abstract force promoting organisation in systems of all kinds: physical, biological, mental, and social, including recorded information (Stonier 1990, 1992, 1997). This is a model envisaging the bridging of two distinct gaps, in the terms discussed above. Stonier regards information, in its most fundamental form, as a physical entity analogous to energy; whereas energy, in his view, is defined as the capacity to perform work, information is the capacity to organise a system, or to maintain it in a state of organisation. He regards a high-information state as one that is organised and of low physical entropy. This, he points out, is the opposite of Shannon’s relation between information and entropy, which Stonier regards as an unfortunate metaphor. He links this concept of information to biological and human information (or, as he prefers, intelligence), and to meaning, through an evolutionary process. Salthe (2011) presents a somewhat similar viewpoint, linking thermodynamic entropy and Shannon information through to meaning and semiotics.
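To see why Stonier regards Shannon’s usage as inverted, it helps to compute Shannon’s measure, \( \mathrm{H}=-\sum \mathrm{p}\log_2 \mathrm{p} \) bits per symbol, for two short messages. The sketch below assumes that symbol frequencies within a single string can stand in for the probabilities of a source; the function name is illustrative only. A perfectly ordered string scores zero, and a string of eight distinct, equally frequent symbols scores the maximum of three bits, whereas Stonier would attribute more information to the ordered, low-entropy state.

```python
import math
from collections import Counter


def shannon_entropy(symbols):
    """Shannon entropy H = -sum(p * log2 p), in bits per symbol,
    estimated from the relative frequencies of symbols in the string."""
    counts = Counter(symbols)
    n = len(symbols)
    return sum(-(c / n) * math.log2(c / n) for c in counts.values())


ordered = "AAAAAAAA"  # highly organised: a single symbol repeated
mixed = "ABCDEFGH"    # maximally varied: eight equiprobable symbols

print(shannon_entropy(ordered))  # 0.0
print(shannon_entropy(mixed))    # 3.0
```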

Bates (2005, 2006) has advanced a similar all-encompassing model, which she characterises as ‘evolutionary’. It relies on identifying and interrelating a number of ‘information-like’ entities:

- Information 1 – the pattern of organization of matter and energy
- Information 2 – some pattern of organization of matter and energy given meaning by a living being
- Data 1 – that portion of the entire information environment available to a sensing organism that is taken in, or processed, by that organism
- Data 2 – information selected or generated by human beings for social purposes
- Knowledge – information given meaning and integrated with other contents of understanding

This model, while all-encompassing and one of the more ambitious attempts at integrating information in all its contexts, remains at a conceptual and qualitative level, and introduces a potentially confusing multiplicity of forms of information and similar entities. In particular, the distinction between Information 1 and Information 2, without any clear indication of their relation, seems to perpetuate a gap, rather than bridge one. Bates describes her approach as evolutionary, and relates it to the approaches of Goonatilake (1991) and Madden (2004), mentioned earlier, though these latter start with information in the biological realm, rather than the (arguably more basic) physical world. She argues that the different forms of information are emergent, as animals – not just humans – can recognise patterns of physical information in their environment. Animals can assign meaning to such recognition, though not in a conscious act of labelling; this is reserved for the human realm. In contrast to Stonier, she argues that information is the order in the system, rather than its capacity to create order (both of which, we may remind ourselves, are the opposite of the Shannon conception). For Bates, knowing the degree of order of a system tells us how much information it contains; for Stonier, knowing how much information is in it tells us how it may be ordered.

Floridi (2010a, 2011b) has presented a General Definition of Information (GDI) as part of his Philosophy of Information, analysing the ways in which information may be understood, and opting to regard it from the semantic viewpoint, as “well-formed, meaningful and truthful data”. Data is understood here as simply a lack of uniformity: a noticeable difference or distinction in something. To count as information, individual data elements must be compiled into a collection which must be well-formed (put together correctly according to relevant syntax), meaningful (complying with relevant semantics), and truthful; the latter requires a detailed analysis of the nature of true information, as distinct from misinformation, pseudo-information and false information. Although Floridi takes account of Shannon’s formalism in the development of his conception of information, and argues that it “provides the necessary ground to understand other kinds of information” (Floridi 2010a, 78), he moves beyond it in discussing human, semantic information. His analysis also includes biological information in detail; noting that it is complex and multifaceted, he treats, for example, genetic and neural information separately. Meaningful information and knowledge are part of the same conceptual family. Information is converted to knowledge by being inter-related, a process that may be expressed through network theory. Informally, “what [knowledge] enjoys and [information] lacks … is the web of mutual relations that allow one part of it to account for another. Shatter that, and you are left with a pile of truths or a random list of bits of information that cannot help to make sense of the reality that they seek to address” (Floridi 2011b, 288). Furthermore, information that is meaningful must also be relevant in order to qualify as knowledge, and this aspect may be formally modelled, as may the distinction between ‘knowing’, ‘believing’, and ‘being informed’.

This is therefore a formalism – the only one of its kind thus far – which begins with a treatment of information in Shannon’s objective sense, and goes on, apparently seamlessly, to include subjectivity, meaning, and relevance. It provides a formal framework for understanding a variety of forms of information, and, while in itself an exercise in philosophical analysis, it may serve as a basis for other forms of consideration of information in various domains. It also, happily, includes and systematises library/information science’s pragmatic approaches to the information-knowledge relation, discussed earlier.
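The three conditions of the GDI can be caricatured as successive filters applied to a candidate datum. The sketch below is a deliberately crude toy, not Floridi’s formalism: the triple format, the `VOCABULARY` and `WORLD` tables, and the `classify` function are all hypothetical, ‘meaningful’ is reduced to membership of a small vocabulary, and ‘truthful’ to a lookup against an assumed model of reality. Floridi’s finer distinctions among kinds of false or pseudo-information are collapsed into a single ‘false content’ label.

```python
# Toy illustration only: hypothetical vocabulary and 'world' tables.
VOCABULARY = {
    "water": {"boils_at_celsius"},
    "paris": {"capital_of"},
}
WORLD = {
    ("water", "boils_at_celsius"): "100",
    ("paris", "capital_of"): "france",
}


def classify(datum):
    """Apply well-formedness, meaningfulness and truthfulness checks in turn."""
    # Well-formed (toy syntax): a (subject, attribute, value) triple of strings.
    if not (isinstance(datum, tuple) and len(datum) == 3
            and all(isinstance(part, str) for part in datum)):
        return "not well-formed"
    subject, attribute, value = datum
    # Meaningful (toy semantics): the attribute must make sense for the subject.
    if attribute not in VOCABULARY.get(subject, set()):
        return "meaningless"
    # Truthful: the value must agree with the toy model of the world.
    if WORLD.get((subject, attribute)) != value:
        return "false content"
    return "information"


print(classify(("water", "boils_at_celsius", "100")))  # information
print(classify(("water", "boils_at_celsius", "50")))   # false content
print(classify(("water", "capital_of", "france")))     # meaningless
print(classify(("water", "100")))                      # not well-formed
```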

While undoubtedly valuable as a framework for understanding, Floridi’s conceptualisation does not of itself answer our basic question: which, if any, conceptions, and laws and principles, of information in one domain can be meaningfully applied in another? We will go on to consider this, but first we must ask: why bother?

6.5 Why Attempt to Bridge the Gaps?

The question then inevitably arises as to whether these various ideas of information have any relevance for the library/information sciences, whether it just happens that the English word ‘information’ is used to mean quite different things in different contexts, or whether any connections which there may be are so vague and limited as to be of little interest or value.

We believe that this is a question well worth investigating, and not just for the sake of having a neat and all-encompassing framework. If the gaps between different understandings of information can be bridged in some way, then there is a possibility for helpful interactions and synergies between the different conceptualisations. In particular, if it is correct that the principles of physics and of biology can be, to a significant extent, cast in information terms, then it should at least be possible to identify analogies helpful to human-centred disciplines, including library/information science. This need not be in any sense a reductionist enterprise, attempting to ‘explain away’ social and human factors in physical and biological terms. Nor need it be just one way. If it is true, as some authors suggest, that there are some general principles, involving information, complexity, meaning, and similar entities and concepts, which operate very widely, beyond the scope of individual disciplines, then it is not beyond the bounds of possibility that insights from the library/information sciences could ‘feed back’ to inform physical and biological conceptions. No such examples have yet been reported, though one might envisage them coming from areas such as informetrics, information behaviour, and information organisation. This kind of feedback is, of course, in the opposite direction to the common reductive approach, by which physics informs chemistry, which informs biology, which in turn informs the social sciences. If it ever proved fruitful, it would have the potential to change the standing of the library/information sciences within the academic spectrum, giving them a place among the more fundamental disciplines.

Let us, at the risk of seriously annoying those readers who will think this approach too naïve to be worth dignifying in print, give some examples of physical laws which could have ‘information analogies’; for a popular account of these laws, see Pickover (2008).

To begin with perhaps the simplest possible example, Ohm’s law states that the strength of an electric current, I, is proportional to the applied voltage, V, and inversely proportional to the resistance, R, of the material carrying the current; in appropriate units, \( \mathrm{I}=\mathrm{V}/\mathrm{R} \). We can easily envisage an information analogy, with information flow equating to current, the strength of the need for information equating to voltage, and a measure of difficulty of obtaining the necessary information equating to resistance. So, if we consider the situation of a doctor treating a seriously ill patient, and needing to know the appropriate drug treatment, we have a high value of V. If the doctor has in their pocket a mobile device giving immediate access to well-structured drug information, then we might say that R is low, and so the flow of information, I, is high.
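Purely to make the analogy concrete, Ohm’s law can be written as a one-line function and fed either physical quantities or the information-side quantities just described. The function name is illustrative, and the numbers on the information side are hypothetical values in arbitrary units: nothing in the analogy says how ‘need’ or ‘difficulty’ would actually be measured.

```python
def flow(driving_force, resistance):
    """Ohm's law, I = V / R, for any consistent set of units."""
    if resistance <= 0:
        raise ValueError("resistance must be positive")
    return driving_force / resistance


# Physical case: 12 V across a 4-ohm resistor gives a current of 3 A.
print(flow(12.0, 4.0))  # 3.0

# Information analogy (hypothetical, arbitrary units): the same strong need, V = 10,
# met through a well-structured mobile drug reference (low R) or a slow search (high R).
print(flow(10.0, 0.5))  # 20.0
print(flow(10.0, 5.0))  # 2.0
```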

Too simple? How about Poiseuille’s law, which governs the rate of flow, Q, of a fluid with viscosity μ through a pipe of length L and internal radius r, when there is a pressure difference ΔP between its ends? The formula, assuming that the flow is smooth, without any turbulence, and that the density of the fluid never changes, is \( \mathrm{Q}=\pi \mathrm{r}^4\Delta \mathrm{P}/(8\mu \mathrm{L}) \). Again, we may amuse ourselves looking for information equivalents: the length of the pipe equates to the number of steps in a communication chain; its internal radius equates to the amount of information which can be transferred; the viscosity equates to the difficulty in understanding the information; and so on. This is not such an odd idea: Qvortrup (1993) reminds us that Shannon’s theories are firmly based on the metaphor of information as water flowing through a pipe.
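The fourth-power dependence on radius is the feature of Poiseuille’s law that would carry most weight in any information reading of it. The sketch below uses SI units and illustrative, roughly water-like values chosen for the example; the function name is hypothetical. It shows that doubling the radius multiplies the flow sixteen-fold, while doubling the length merely halves it.

```python
import math


def poiseuille_flow(radius, pressure_drop, viscosity, length):
    """Volumetric flow Q = pi * r**4 * dP / (8 * mu * L) for steady, laminar
    flow of an incompressible fluid through a straight circular pipe."""
    return math.pi * radius**4 * pressure_drop / (8 * viscosity * length)


# Illustrative values: 1 mm radius, 1000 Pa pressure drop, 0.001 Pa s viscosity, 1 m pipe.
base = poiseuille_flow(radius=0.001, pressure_drop=1000.0, viscosity=0.001, length=1.0)
wider = poiseuille_flow(radius=0.002, pressure_drop=1000.0, viscosity=0.001, length=1.0)
longer = poiseuille_flow(radius=0.001, pressure_drop=1000.0, viscosity=0.001, length=2.0)

print(round(wider / base, 6))   # 16.0: doubling the radius multiplies flow by 2**4
print(round(longer / base, 6))  # 0.5: doubling the length halves it
```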

Another example is the use of the various scientific diffusion laws, which offer clear analogies with information dissemination. Avramescu (1980) gave an early example of this, using laws for the diffusion of heat in solids, equating temperature to the extent of interest in the information; Liu and Rousseau (2012) review this and other examples. Le Coadic (1987) mentions this, and similar attempts to use diffusion and transfer models drawn from both the physical and biological sciences, while cautioning against the uncritical use of such analogies. However, provided they are treated with due caution, such analogies with physical laws, even if it be accepted that there is no underlying common ‘meta-law’, may be of value as aids to teaching and learning, and to the early stages of the planning of research.
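Avramescu’s analogy rests on the diffusion (heat) equation, \( \partial u/\partial t = D\,\partial^2 u/\partial x^2 \). The sketch below is a generic explicit finite-difference solver for that equation, not Avramescu’s own model: the ‘interest’ profile, the number of steps, and the coefficient \( d = D\Delta t/\Delta x^2 \) (kept at or below 0.5 so that the explicit scheme stays stable) are illustrative assumptions. The ends of the domain are treated as insulated, so the total amount of ‘interest’ is conserved while it spreads out from where it started.

```python
def diffuse(profile, d=0.25, steps=1):
    """Explicit finite-difference steps for the 1-D diffusion equation
    u_t = D u_xx, with d = D*dt/dx**2 (stable for d <= 0.5).
    Ends are insulated: each end cell mirrors itself as its missing neighbour."""
    u = list(profile)
    n = len(u)
    for _ in range(steps):
        new = u[:]
        for i in range(n):
            left = u[i - 1] if i > 0 else u[i]
            right = u[i + 1] if i < n - 1 else u[i]
            new[i] = u[i] + d * (left - 2 * u[i] + right)
        u = new
    return u


# Hypothetical 'interest' concentrated in one community at the start.
interest = [0.0, 0.0, 10.0, 0.0, 0.0]
after = diffuse(interest, d=0.25, steps=20)

print([round(x, 2) for x in after])  # spread out, tending towards 2.0 everywhere
print(round(sum(after), 6))          # 10.0: total is conserved
```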

We must also mention quantum mechanics, the most fundamental scientific advance of the last century, of which both the mathematical formalism (directly) and concepts (by analogy) have been applied in a library/information science context; see, for example, Piwowarski et al. (2010, 2012) and Budd (2013).
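One way the quantum formalism enters information retrieval is through vector spaces and projections: an information need is represented as a unit vector, a document or topic as a subspace, and the squared length of the projection of the one onto the other plays the role of a probability, as in the Born rule. The sketch below illustrates only that general idea; it is not the specific model of Piwowarski et al., and the three-term space, vectors and function names are hypothetical.

```python
import math


def normalise(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def projection_probability(state, basis):
    """Born-rule style probability ||P psi||**2, where P projects onto the
    subspace spanned by the given orthonormal basis vectors."""
    psi = normalise(state)
    return sum(dot(psi, e) ** 2 for e in basis)


# Hypothetical 3-term space with axes ('quantum', 'retrieval', 'cooking').
query = [1.0, 1.0, 0.0]
both_topics = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # a document covering both query terms
one_topic = [[1.0, 0.0, 0.0]]                      # a document about 'quantum' only

print(round(projection_probability(query, both_topics), 6))  # 1.0
print(round(projection_probability(query, one_topic), 6))    # 0.5
```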

It may be objected that this is too simplistic an approach. Physical laws are physical laws, and are too specific to their context to be adapted for human information, and do not take account of its dynamic nature, nor of the ability of humans to be more than passive recipients.

What, then, about a more general principle? In the physical sciences, the principle of least action occupies a central place, as does Zipf’s principle of least effort in the social, including library/information, sciences. Is it unreasonable to ask if there may be a reason for this, which would involve some common aspects of information in the two realms?

Or perhaps we should look rather at statistical regularities, whether these be called laws or not, and consider whether there may be some underlying reasons, if similar regularities are found in different realms. One example may be the fractal, or self-similar, nature of many physical systems, which, it is hypothesised, may also be found in technical and social information; see, for example, Ottaviani (1994) and Berners-Lee and Kagal (2008). Similarly, the power-law relationships underlying the main bibliometric laws (Egghe 2005) have their equivalents in power laws in the physical and biological sciences.
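The power laws in question have the form \( y = C x^{-a} \), which appears as a straight line of slope \( -a \) on log-log axes. The sketch below fits that slope by ordinary least squares to idealised Lotka-type data (numbers of authors producing n papers, with a = 2); the data and function name are illustrative. For real, noisy bibliometric or physical data, least squares on log-log axes is known to give biased exponents, and maximum-likelihood estimation is generally preferred.

```python
import math


def loglog_slope(xs, ys):
    """Ordinary least-squares slope of log(y) against log(x)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    mx = sum(lx) / len(lx)
    my = sum(ly) / len(ly)
    cov = sum((a - mx) * (b - my) for a, b in zip(lx, ly))
    var = sum((a - mx) ** 2 for a in lx)
    return cov / var


# Idealised Lotka-type data: authors producing n papers, f(n) = C / n**2 with C = 600.
n_papers = [1, 2, 3, 4, 5, 6, 7, 8]
authors = [600 / n**2 for n in n_papers]

print(round(loglog_slope(n_papers, authors), 6))  # -2.0
```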

The important question is not which of these ideas or approaches is ‘right’. It is simply whether it is rational and appropriate to look at ideas of information in different domains, seeking causal links, emergent properties, analogies, or perhaps just helpful metaphors. It is by no means certain that this is so. We have seen that some scientists, such as Müller, object to the use of information concepts in thermodynamics. And, conversely, many in the library/information sciences are concerned about the application of the term ‘information’ to objective, meaningless patterns. Le Coadic (1987), Cole (1994), Hjørland (2007, 2008), and Ma (2012), for example, argue in various ways against any equating of the idea of information as an objective and measurable ‘thing’ to the kind of information of interest in library and information science; this kind of information, such commentators argue, is subjective in nature, having meaning for a person in a particular context, and cannot be reduced to a single objective, still less quantifiable, definition. However, this perhaps overlooks some recent trends in the physical and biological sciences themselves: not merely the increased focus on information noted above, but a tendency towards conceptualisations involving non-linearity, systems thinking, complexity, and reflexivity. All these tend to make current scientific thinking a more amenable source of analogy for the library/information sciences than heretofore.

It may also be objected that the physical, and to a degree the biological, sciences are necessarily mathematical in nature, whereas the library/information sciences are largely qualitative. While qualitative analysis is certainly necessary, and indeed arguably the best way of achieving understanding in this field (Bawden 2012), this is no reason not to seek mathematical formalisms to increase and deepen such understanding. Over 30 years ago, Brookes (1980) argued that information science needed a different kind of mathematics; perhaps the library/information sciences still do.

Our view is that the questions are so intriguing that it is worth the attempt to bridge these gaps. And we believe that the valuable insights already gained from the kinds of approaches discussed above justify this position. Wheeler’s Big Questions have not been answered yet, and it may be that studies of the relation between information as understood in the library/information sciences and as understood in other domains may contribute to their solution.

6.6 Conclusions

We are faced with two kinds of gaps: the gaps between the concepts of information in different domains; and the gap between those who believe that it is worth trying to bridge such gaps and those who believe that such attempts are, for the most part at least, doomed to fail.

The authors of this chapter count themselves among those who believe the attempt worthwhile. But we wish to be realistic about what can be attempted: as Jonathan Furner (2010, 174) puts it, “the outlook for those who would hold out for a ‘one size fits all’ transdisciplinary definition of information is not promising”. We should not look for, nor expect to find, direct and simplistic equivalences; rather we can hope to uncover more subtle linkages, perhaps to be found through the use of concepts such as complexity and emergence.

We would also do well to note Bates’ (2005) reminder that there are swings of fashion in this area, as in many other academic areas. The recent favouring of subjective and qualitative conceptions of information is perhaps a reaction to the strong objectivity of information science in preceding decades, which was itself a reaction to the perceived limitations of traditional subjectivist methods of library/information science (Bates 2005). Perhaps the time has come for something of a swing back, to allow a merging of views, and a place for different viewpoints in a holistic framework. A bridging of gaps, in fact. A number of authors have advocated this, though so far it has not happened.

At a time when other disciplines, particularly in the physical and biological sciences, are embracing information as a vital concept, it seems unwise for the library/information sciences to ignore potentially valuable insights, though we certainly wish to avoid the shallow analogies mentioned above.

Mind the gaps, certainly, but be aware of the insights that may be found within them.

References

Adler, J. 2010. Epistemological problems of testimony. In Stanford encyclopedia of philosophy, ed. E.N. Zalta (Winter 2010 edition) [online]. http://plato.stanford.edu/archives/win2010/entries/testimony-episprob. Accessed 6 Aug 2012.

Atkins, P. 2007. Four laws that drive the universe. Oxford: Oxford University Press.

Audi, R. 1997. The place of testimony in the fabric of knowledge and justification. American Philosophical Quarterly 34(4): 405–422.

Auletta, G. 2011. Cognitive biology: Dealing with information from bacteria to minds. Oxford: Oxford University Press.

Avramescu, A. 1980. Coherent information energy and entropy. Journal of Documentation 36(4): 293–312.

Baltimore, D. 2002. How biology became an information science. In The invisible future, ed. P.J. Denning, 43–55. New York: McGraw Hill.

Barrow, J.D. 2007. New theories of everything. Oxford: Oxford University Press.

Barrow, J.D., P.C.W. Davies, and C.L. Harper. 2004. Science and ultimate reality. Cambridge: Cambridge University Press.

Barwise, J., and J. Seligman. 1997. Information flow: The logic of distributed systems. Cambridge: Cambridge University Press.

Bates, M.J. 2005. Information and knowledge: An evolutionary framework for information. Information Research 10(4): paper 239 [online]. http://informationr.net/ir/10-4/paper239.html. Accessed 9 Sept 2012.

Bates, M.J. 2006. Fundamental forms of information. Journal of the American Society for Information Science and Technology 57(8): 1033–1045.

Bawden, D. 2001. The shifting terminologies of information. ASLIB Proceedings 53(3): 93–98.

Bawden, D. 2007a. Information as self-organised complexity: A unifying viewpoint. Information Research 12(4): paper colis31 [online]. http://informationr.net/ir/12-4/colis/colis31.html. Accessed 9 Sept 2012.

Bawden, D. 2007b. Organised complexity, meaning and understanding: An approach to a unified view of information for information science. ASLIB Proceedings 59(4/5): 307–327.

Bawden, D. 2012. On the gaining of understanding; syntheses, themes and information analysis. Library and Information Research 36(112): 147–162.

Bawden, D., and L. Robinson. 2012. Introduction to information science. London: Facet Publishing.

Belkin, N.J. 1978. Information concepts for information science. Journal of Documentation 34(1): 55–85.

Belkin, N.J., and S.E. Robertson. 1976. Information science and the phenomenon of information. Journal of the American Society for Information Science 27(4): 197–204.

Berners-Lee, T., and L. Kagal. 2008. The fractal nature of the semantic web. AI Magazine 29(3): 29–34.

Brillouin, L. 1962. Science and information theory, 2nd ed. New York: Academic.

Brookes, B.C. 1980. The foundations of information science. Part III. Quantitative aspects: Objective maps and subjective landscapes. Journal of Information Science 2(6): 269–275.

Buckland, M. 1991. Information as thing. Journal of the American Society for Information Science 42(5): 351–360.

Budd, J. 2013. Re-conceiving information studies: A quantum approach. Journal of Documentation 69(4), in press.

Byrne, P. 2010. The many worlds of Hugh Everett III. Oxford: Oxford University Press.

Capurro, R., and B. Hjørland. 2003. The concept of information. Annual Review of Information Science and Technology 37: 343–411.

Checkland, P., and S. Holwell. 1998. Information, systems and information systems: Making sense of the field. Chichester: Wiley.

Cole, C. 1994. Operationalizing the notion of information as a subjective construct. Journal of the American Society for Information Science 45(7): 465–476.

Cornelius, I. 2002. Theorising information for information science. Annual Review of Information Science and Technology 36: 393–425.

Dartnell, L. 2007. Life in the universe: A beginner’s guide. Oxford: Oneworld.

Davies, P., and N.H. Gregersen. 2010. Information and the nature of reality: From physics to metaphysics. Cambridge: Cambridge University Press.

Day, R.E. 2005. Clearing up “implicit knowledge”: Implications for knowledge management, information science, psychology, and social epistemology. Journal of the American Society for Information Science and Technology 56(6): 630–635.

Denbigh, K. 1981. How subjective is entropy? Chemistry in Britain 17(4): 168–185.

Deutsch, D. 2011. The beginning of infinity: Explanations that transform the world. London: Allen Lane.

Dretske, F.I. 1981. Knowledge and the flow of information. Oxford: Basil Blackwell.

Duncan, T.L., and J.S. Semura. 2007. Information loss as a foundational principle for the second law of thermodynamics. Foundations of Physics 37(12): 1767–1773.

Egghe, L. 2005. Power laws in the information production process: Lotkaian informetrics. Amsterdam: Elsevier.

Floridi, L. 2010a. Information: A very short introduction. Oxford: Oxford University Press.

Floridi, L. 2010b. The philosophy of information: Ten years later. Metaphilosophy 41(3): 402–419.

Floridi, L. 2011a. Semantic conceptions of information. In Stanford encyclopedia of philosophy, ed. E.N. Zalta (Spring 2011 edition) [online]. http://plato.stanford.edu/archives/spr2011/entries/information-semantic. Accessed 6 Aug 2012.

Floridi, L. 2011b. The philosophy of information. Oxford: Oxford University Press.

Frické, M. 2009. The knowledge pyramid: A critique of the DIKW hierarchy. Journal of Information Science 35(2): 131–142.

Frieden, B.R. 1999. Physics from Fisher information: A unification. Cambridge: Cambridge University Press.

Furner, J. 2010. Philosophy and information studies. Annual Review of Information Science and Technology 44: 161–200.

Gatlin, L.L. 1972. Information theory and the living system. New York: Columbia University Press.

Gell-Mann, M. 1995. The quark and the jaguar, revised ed. London: Abacus.

Gell-Mann, M., and S. Lloyd. 1998. Information measures, effective complexity and total information. Complexity 2(1): 44–52.

Gleick, J. 2011. The information: A history, a theory, a flood. London: Fourth Estate.

Goonatilake, S. 1991. The evolution of information: Lineages in gene, culture and artefact. London: Pinter Publishers.

Greene, B. 2011. The hidden reality: Parallel universes and the deep laws of the cosmos. New York: Knopf.

Hargittai, I. 2006. The Martians of science: Five physicists who changed the twentieth century. Oxford: Oxford University Press.

Hartley, R.V.L. 1928. Transmission of information. Bell System Technical Journal 7(3): 535–563.

Hazen, R.M., P.L. Griffin, J.M. Carothers, and J.W. Szostak. 2007. Functional information and the emergence of biocomplexity. Proceedings of the National Academy of Sciences 104(suppl. 1): 8574–8581.

Hjørland, B. 2007. Information: Objective or subjective/situational? Journal of the American Society for Information Science and Technology 58(10): 1448–1456.

Hjørland, B. 2008. The controversy over the concept of “information”: A rejoinder to Professor Bates. Journal of the American Society for Information Science and Technology 60(3): 643.

Jaffe, K. 1984. Negentropy and the evolution of chemical recruitment in ants (Hymenoptera: Formicidae). Journal of Theoretical Biology 106(4): 587–604.

Karnani, M., K. Pääkkönen, and A. Annila. 2009. The physical character of information. Proceedings of the Royal Society A 465(2107): 2155–2175.

Kier, L.B. 1980. Use of molecular negentropy to encode structure governing biological activity. Journal of Pharmaceutical Sciences 69(7): 807–810.

Kvanvig, J.L. 2003. The value of knowledge and the pursuit of understanding. Cambridge: Cambridge University Press.

Landauer, R. 1991. Information is physical. Physics Today 44(5): 23–29.

Le Coadic, Y.F. 1987. Modelling the communication, distribution, transmission or transfer of scientific information. Journal of Information Science 13(3): 143–148.

Leff, H.S., and A.F. Rex. 1990. Maxwell’s Demon: Entropy, information, computing. Bristol: IOP Publishing.

Leff, H.S., and A.F. Rex. 2002. Maxwell’s Demon 2: Entropy, classical and quantum information, computing. Bristol: IOP Publishing.

Liu, Y., and R. Rousseau. 2012. Towards a representation of diffusion and interaction of scientific ideas: The case of fiber optics communication. Information Processing and Management 48(4): 791–801.

Lloyd, S. 2001. Measures of complexity: A nonexhaustive list. IEEE Control Systems Magazine 21(4): 7–8.

Lloyd, S. 2006. Programming the universe. London: Jonathan Cape.

Lloyd, S. 2010. The computational universe. In Information and the nature of reality: From physics to metaphysics, ed. P. Davies and N.H. Gregersen, 92–103. Cambridge: Cambridge University Press.

Ma, L. 2012. Meanings of information: The assumptions and research consequences of three foundational LIS theories. Journal of the American Society for Information Science and Technology 63(4): 716–723.

Machlup, F., and U. Mansfield. 1983. The study of information: Interdisciplinary messages. New York: Wiley.

MacPherson, K. 2008. Leading physicist John Wheeler dies at age 96 [obituary]. Princeton University News Archive. http://www.princeton.edu/main/news/archive/S20/82/08G77. Accessed 15 May 2012.

Madden, D. 2004. Evolution and information. Journal of Documentation 60(1): 9–23.

Maynard Smith, J. 2010. The concept of information in biology. In Information and the nature of reality: From physics to metaphysics, ed. P. Davies and N.H. Gregersen, 123–145. Cambridge: Cambridge University Press.

Müller, I. 2007. A history of thermodynamics: The doctrine of energy and entropy. Berlin: Springer.

Nyquist, H. 1924. Certain factors affecting telegraph speed. Bell System Technical Journal 3(2): 324–346.

Ottaviani, J.S. 1994. The fractal nature of relevance: A hypothesis. Journal of the American Society for Information Science 45(4): 263–272.

Parker, E.B. 1974. Information and society. In Library and information service needs of the nation: Proceedings of a conference on the needs of occupational, ethnic and other groups in the United States, ed. C.A. Cuadra and M.J. Bates, 9–50. Washington, DC: U.S.G.P.O.

Patten, B.C. 1961. Negentropy flow in communities of plankton. Limnology and Oceanography 6(1): 26–30.

Pickover, C.A. 2008. Archimedes to Hawking: Laws of science and the great minds behind them. Oxford: Oxford University Press.

Piwowarski, B., I. Frommholz, M. Lalmas, and K. van Rijsbergen. 2010. What can quantum theory bring to information retrieval? In Proceedings of the 19th ACM conference on information and knowledge management, 59–68. New York: ACM Press.

Piwowarski, B., M.R. Amini, and M. Lalmas. 2012. On using a quantum physics formalism for multidocument summarization. Journal of the American Society for Information Science and Technology 63(5): 865–888.

Polanyi, M. 1962. Personal knowledge. Chicago: University of Chicago Press.

Qvortrup, L. 1993. The controversy over the concept of information. An overview and a selected and annotated bibliography. Cybernetics and Human Knowing 1(4): 3–24.

Reading, A. 2011. Meaningful information: The bridge between biology, brain and behavior. Berlin: Springer.

Rowley, J. 2007. The wisdom hierarchy: Representations of the DIKW hierarchy. Journal of Information Science 33(2): 163–180.

Salthe, S.N. 2011. Naturalizing information. Information 2(3): 417–425 [online]. http://www.mdpi.com/2078-2489/2/3/417. Accessed 9 Sept 2012.

Saunders, S., J. Barrett, A. Kent, and D. Wallace (eds.). 2010. Many worlds? Everett, quantum theory and reality. Oxford: Oxford University Press.

Schrödinger, E. 1944. What is life? The physical aspect of the living cell. Cambridge: Cambridge University Press.

Shannon, C.E. 1948. A mathematical theory of communication. Bell System Technical Journal 27(3): 379–423.

Shannon, C.E., and W. Weaver. 1949. The mathematical theory of communication. Urbana: University of Illinois Press.

Shaw, D., and C.H. Davis. 1983. Entropy and information: A multidisciplinary overview. Journal of the American Society for Information Science 34(1): 67–74.

Smolin, L. 2000. Three roads to quantum gravity: A new understanding of space, time and the universe. London: Weidenfeld and Nicolson.

Stonier, T. 1990. Information and the internal structure of the universe. Berlin: Springer.

Stonier, T. 1992. Beyond information: The natural history of intelligence. Berlin: Springer.

Stonier, T. 1997. Information and meaning: An evolutionary perspective. Berlin: Springer.

Szilard, L. 1929. Über die Entropieverminderung in einem thermodynamischen System bei Eingriffen intelligenter Wesen. [On the decrease of entropy in a thermodynamic system by the intervention of intelligent beings.] Zeitschrift für Physik 53(6): 840–856. [Translated into English by A. Rapoport and M. Knoller, and reproduced in Leff and Rex (1990), pp. 124–133.]

Terzis, G., and R. Arp (eds.). 2011. Information and living systems: Philosophical and scientific perspectives. Cambridge: MIT Press.

Tononi, G. 2008. Consciousness as integrated information: A provisional manifesto. The Biological Bulletin 215(3): 216–242.

Tribus, M. 1964. Information theory and thermodynamics. In Heat transfer, thermodynamics and education: Boelter anniversary volume, ed. H.A. Johnson, 348–368. New York: McGraw Hill.

Vedral, V. 2010. Decoding reality: The universe as quantum information. Oxford: Oxford University Press.

Vedral, V. 2012. Information and physics. Information 3(2): 219–223.

Von Baeyer, H.C. 2004. Information: The new language of science. Cambridge: Harvard University Press.

Wallace, D. 2012. The emergent multiverse: Quantum theory according to the Everett interpretation. Oxford: Oxford University Press.

Weart, S.R., and G.W. Szilard (eds.). 1978. Leo Szilard: His version of the facts. Selected recollections and correspondence. Cambridge: MIT Press.

Wiener, N. 1948. Cybernetics, or control and communication in the animal and the machine. New York: Wiley.

Wiener, N. 1956. I am a mathematician. London: Victor Gollancz.

Zins, C. 2007. Conceptual approaches for defining data, information and knowledge. Journal of the American Society for Information Science and Technology 58(4): 479–493.

Zurek, W.H. 1989. Algorithmic randomness and physical entropy. Physical Review A 40(8): 4731–4751.

Zurek, W.H. (ed.). 1990. Complexity, entropy and the physics of information. Santa Fe Institute series. Boulder: Westview Press.


Copyright information

© 2014 Springer Science+Business Media B.V.

About this chapter

Cite this chapter

Robinson, L., Bawden, D. (2014). Mind the Gap: Transitions Between Concepts of Information in Varied Domains. In: Ibekwe-SanJuan, F., Dousa, T. (eds) Theories of Information, Communication and Knowledge. Studies in History and Philosophy of Science, vol 34. Springer, Dordrecht. https://doi.org/10.1007/978-94-007-6973-1_6


DOI: https://doi.org/10.1007/978-94-007-6973-1_6


Publisher Name: Springer, Dordrecht

Print ISBN: 978-94-007-6972-4

Online ISBN: 978-94-007-6973-1

eBook Packages: Humanities, Social Sciences and Law; Philosophy and Religion (R0)