Abstract

I examine the ungulate remains from late Middle Paleolithic (MP) Kebara Cave (Israel) and offer evidence pointing to overhunting by Levantine Neanderthals toward the close of the MP: (1) the frequency of red deer and aurochs declined over the course of the sequence, largely independent of major fluctuations in the Levantine speleothem climate record; (2) the proportion of juvenile gazelles and fallow deer increased in the younger levels, as did the proportion of young adults; (3) upward in the sequence, hunters brought back fewer gazelle and fallow deer heads, suggesting either that they had to travel farther to hunt or that they took many more animals per trip, perhaps in cooperative kills. Taken together, these observations, in conjunction with evidence from other sites in the region, suggest that the resource intensification characteristic of the “Broad Spectrum Revolution” (BSR) may already have begun in the latter part of the MP.


Introduction

A lot has been written about the “Broad Spectrum Revolution” (BSR) or, as it is sometimes called, the “Broadening Food Spectrum,” since the idea was first introduced over four decades ago by Kent Flannery (1969). As originally envisioned, the BSR denotes a fairly rapid shift in resource exploitation strategies toward the end of the Pleistocene stemming from an imbalance between available resources and the number of mouths to be fed. This imbalance was the result of an ongoing influx into marginal habitats of “daughter” groups that had budded off from growing “donor” populations in richer “core” areas. The immigration of surplus population into the less productive habitats gave rise to resource stresses to which the “recipient” populations responded by intensifying their reliance on lower-ranked plant and animal foods, and on more labor-intensive or costly methods of procuring and processing these foods. In the Near East these changes in foodways are generally seen as critical initial steps that ultimately led to the domestication of plants and animals and the emergence of village-based farming economies (e.g., Stiner et al. 2000; Stiner 2001; Piperno et al. 2004; Weiss et al. 2004a, b; Munro 2003, 2004, 2009a, b; Stutz et al. 2009; Speth 2010a).

Archaeologists often see the BSR, a stress-related response by foragers to an imbalance between available resources and population, as roughly synonymous with increasing diet breadth, that is, with an expansion of the diet under increasing levels of stress to include a wider range of food resources with lower net return rates, such as small mammals, birds, marine mollusks, terrestrial snails, and a host of comparatively labor-intensive wild plant foods, including the wild progenitors of wheat and barley (Flannery 1969; Winterhalder 1981). Going beyond most traditional diet-breadth models in behavioral ecology, however, archaeologists also recognize that the BSR entailed an increase in the intensity of food processing, incorporating techniques like parching, grinding, winnowing, roasting, baking, and boiling, and undoubtedly a host of other methods as well (many of them currently invisible to archaeology) designed to enhance the nutritional yield of these lower-order, sometimes poorly digestible or even toxic food resources.

In his original paper, Flannery (1969: 74, 77) suggested that the BSR got underway roughly midway through the Upper Paleolithic, sometime prior to about 22 kyr. Many subsequent studies tended to shift the focus of attention upward in time, placing the onset of the BSR closer to the end of the Pleistocene, which, in the Southern Levant, would be during the Epipaleolithic, and especially toward the end of this slice of the Near Eastern archaeological record, the Natufian, now dated between ~17 and ~12 cal kBP (Hayden 1981; Blockley and Pinhasi 2011: 99).

During the past decade or so archaeologists have presented new evidence, both faunal and botanical, which suggests that the BSR may already have been underway before the Natufian, probably beginning during the early stages of the Epipaleolithic, or even further back into the preceding UP (e.g., Stiner 2009a; Stutz et al. 2009)—in other words, more or less in the timeframe Flannery had originally suggested (but see Bar-Oz et al. 1999, and Bar-Oz and Dayan 2003 for recent taphonomic studies of faunal remains from Israeli Epipaleolithic sites which do not seem to show the expected dietary trend). Perhaps the most compelling evidence in the Southern Levant for significant Late Pleistocene broadening of the subsistence base comes from the ~23 kyr Israeli site of Ohalo II, with its abundant remains of wild cereals, fish, birds, and other low-ranking or “second-order” resources (Richards et al. 2001; Piperno et al. 2004; Weiss et al. 2004a, b; see also Stiner 2003 and Aranguren et al. 2007 for comparably early evidence from Europe).

Much of the faunal work that has been conducted since the concept of the BSR was first introduced has been concerned primarily with changes in the proportion of large versus small taxa. Zooarchaeologists have also looked at the frequency of juvenile versus adult individuals (e.g., Davis 1983; Bar-Oz et al. 1999; Bar-Oz 2004; Atici 2009; Munro 2009a, b). More recently, Mary Stiner, Natalie Munro, and colleagues have pointed out that the BSR involved more than simply incorporating more small-game animals into the diet; it involved a switch toward more difficult-to-catch small game, such as hares and birds, and in some areas toward greater use of freshwater and marine resources (Richards et al. 2001; Stiner et al. 2000; Stiner 2001, 2009a; Munro 2003, 2004, 2009a, b; see also Stiner and Munro 2011 for an interesting recent study of this same process in Greece).

There is now limited but intriguing evidence that the BSR in the Southern Levant may actually have gotten underway even earlier than the early- to mid-UP date that is now commonly accepted. In 2004 and 2006 Jamie L. Clark and I (Speth 2004a, 2010a; Speth and Clark 2006) suggested, largely on the basis of data from Kebara Cave (Israel), that in the Southern Levant this process of resource intensification, reflected in particular by the overhunting of the largest available ungulates and the targeting of adults and juveniles of smaller ungulate taxa, may have begun as early as 50–55 kyr, during the latter part of the MP. In other words, the BSR, in this region at least, may have been initiated by Neanderthals (and by their quasi-contemporary Anatomically Modern Human neighbors, who appear to have been hunting the same basic suite of ungulates) well in advance of the timeframe usually envisioned by archaeologists, a possibility already anticipated nearly a quarter of a century ago by Simon Davis and colleagues (1988). The suggestion that MP hominins in the Near East might have overhunted their big-game resources certainly seemed to be far out on the proverbial limb when Davis first raised the possibility in 1988, and it remained so as recently as 2006, when Jamie Clark and I published a more detailed look at the same issue using data from the same site. In an academic environment that saw Neanderthals as a different species from our own, and a decidedly inferior one at that (an “archaic” hominin who opportunistically scavenged already-dead carcasses rather than a strategizing hunter of big game), the idea that the BSR, a process which ultimately led to the origins of agriculture, could have been initiated by a less-than-human ancestor seemed unlikely, if not downright preposterous. But in the last few years the intellectual environment has undergone a radical transformation.
Almost no one today questions whether Neanderthals and their contemporaries across Asia could hunt. We now see them as formidable hunters, capable of killing the biggest and most dangerous animals on the Pleistocene landscape (Bratlund 2000; Gaudzinski 1998, 2000, 2006; Speth and Tchernov 2001, 2007; Adler et al. 2006; Adler and Bar-Oz 2009; Gaudzinski-Windheuser and Niven 2009; Stiner 2009b; Zhang et al. 2009). In fact, largely on the basis of nitrogen isotope data, it has become fashionable nowadays to see Neanderthals as “top predators,” right up there with cave lions and hyenas (Bocherens 2009, 2011). Even more startling is the new genetic evidence that challenges the very foundations of the view that placed Neanderthals into a separate species of hominin. What may have gone extinct were populations of humans, not an entire species, and their disappearance left more than caves filled with artifacts and animal bones; Eurasians carry their genes as well (Garrigan and Kingan 2007; Hawks et al. 2008; Wall et al. 2009; Green et al. 2010; Yotova et al. 2011). So the idea that these “top predators” might have set the stage for the BSR by overexploiting their large-mammal resources seems less far-fetched today than it did only a few short years ago.

The major obstacle now to entertaining this sort of scenario is the common assumption that Neanderthal populations, as well as quasi-contemporary populations of Anatomically Modern Humans who likely occupied the same region throughout much of the 200–250 kyr span of the MP, were too small, too mobile, and too widely scattered to have had any detectable impact on their prey populations (Kuhn and Stiner 2006; Shea 2008). But how small is small? Smaller than Clovis (early North American Paleoindian) populations that many have argued were directly or indirectly responsible for the extinction of mammoths and other megafauna in late Pleistocene North America (e.g., Alroy 2001; Haynes 2002; Johnson 2002; Barnosky et al. 2004; Brook and Bowman 2004; Lyons et al. 2004; Martin 2005; Surovell et al. 2005)? Smaller than Aboriginal populations in the arid central and western deserts of Australia during the Late Pleistocene who, not long after their entry into Sahul, may have driven their own megafauna to extinction armed only with wooden-tipped spears and perhaps aided by fire (White 1977: 26; Roberts et al. 2001; Turney et al. 2001; Kershaw et al. 2002)? While we lack solid estimates of just how big or small Neanderthal populations might have been, and their numbers undoubtedly varied considerably across both space and time, there is evidence, albeit limited and indirect, that in Europe, at least, Middle Paleolithic populations did grow toward the end of the MP and that this growth did not simply track the paleoclimatic record (e.g., Richter 2000; Lahr and Foley 2003; Zilhão 2007; Bocquet-Appel and Tuffreau 2009).

After about 90–100 kyr, Levantine Neanderthal populations (and perhaps those of Anatomically Modern Humans) may have grown as well. Not only does the number of late MP sites increase, but many cave sites also show a marked increase in the depth of their culture-bearing deposits, reflecting recurrent visits by their human occupants to the same locality. Many of these sites also display significantly greater densities of artifacts and faunal remains, as well as hearths stacked one upon another, many showing clear evidence of rebuilding and reuse, raked-out ash lenses and ash dumps, and actual trash middens along the perimeter of the living areas (e.g., Hovers 2006; Meignen et al. 2006; Speth 2006; Shea 2008: 2264; Speth et al. 2012). And finally, burials of both Neanderthals and Anatomically Modern Humans, including multiple interments, are unusually common in the Levant by comparison to other regions inhabited by MP hominins, and may well point to larger populations, greater residential stability, emerging corporateness, and increasing delineation of territorial space:

Aquitaine and the Levant contain relatively large numbers of burials as well as places of multiple burial, which might suggest that burial was practised more widely in these areas, and that, by contrast, Neanderthals in other regions either did not bury their dead, or did not practise it frequently. These are regions where Mousterian archaeology suggests that Neanderthals were particularly numerous, and it is tempting to suggest that the practice of burial may have been connected to population size, and perhaps to a sense of territoriality (Pettitt 2010: 130).

It is intriguing to see how our preconceived notions color the way we interpret data of this sort. In eastern North America, archaeologists who deal with the Early and Middle Archaic, periods which fall squarely within the Holocene and are therefore unquestionably the product of fully modern humans like ourselves, almost universally see this sort of trend as compelling evidence for increasing populations, tighter packing of territories within a region, and declining overall levels of residential mobility, reflected most tellingly by limited use of non-local lithic raw materials, but also, at least initially, by the scarcity of other material evidence for intergroup interaction and exchange (Ford 1974; Speth 2004b). An analogous pattern is nicely documented in Australia by Pardoe (1988, 1994, 1995) using skeletal morphology and other data. So why is a scenario of this nature not even on the table for discussion when we think about late MP hominins? And if Neanderthals, as so many would argue, were “top predators,” killing aurochs, bison, mammoth, reindeer, horses, rhinos, cave bears, wild boar, and other large, very dangerous animals, and at times using communal methods to do so (Gaudzinski-Windheuser and Niven 2009; Rendu et al. 2012), what is so implausible about their having been effective enough at what they did to overexploit these animals, especially the largest ones with very slow reproductive rates?

And what if MP hominins used fire to manipulate or manage their landscape and resources, much as virtually all historically and ethnographically documented hunters and gatherers did, and in some places still do (e.g., Lewis 1982)? Based on both palynological data and the frequency of microscopic charcoal particles in ocean cores, as early as 50 kyr, landscape burning as a form of resource management may have been employed by small hunting and gathering populations as they colonized Southeast Asia, New Guinea, and Australia (Fairbairn et al. 2006; Barker et al. 2007: 256, 257), and anthropogenic fire may even have played a role in resource management and megafaunal extinctions during the Paleoindian colonization of the Americas (Pinter et al. 2011). If Neanderthals did, in fact, use fire in this manner, their impact on wildlife populations could have been substantial even if their own populations were very small. All of this is quite speculative, of course, but not beyond the realm of plausibility. Daniau et al. (2010: 7), in fact, explored this issue in Western Europe using long-term charcoal records. While they found no compelling evidence that either Neanderthals or their modern human successors made intensive use of fire to manage their resources, they do not rule it out either:

At a macro level at least, the colonisation of Western Europe by Anatomically Modern Humans did not have a detectable impact on fire regimes. This, however, does not mean that Neanderthals and/or Modern Humans did not use fire for ecosystem management but rather that, if this were indeed the case, the impact on the environment of fire use is not detectable in our records, and was certainly not as pronounced as it was in the biomass burning history of Southeast Asia.

In the Levant there is fairly convincing evidence of humanly manipulated landscape burning extending back at least into the Epipaleolithic (Emery-Barbier and Thiébault 2005; Turner et al. 2010), but whether humans were using fire as a tool to manage their local resources prior to this remains an open question, one well worth exploring further.

Of course, even if it can be shown with some degree of certainty that the large-game resources exploited by Kebara’s Neanderthal inhabitants declined over time, this does not demonstrate that the change was the result of human overexploitation relative to what was available to them on the landscape. Rather than overhunting, the decline could reflect climate-driven environmental changes that reduced local game populations. However, as I will attempt to show momentarily, paleoclimatic data, particularly isotopic studies of Israeli speleothems, reveal no obvious correlation between major climatic fluctuations and the proportional representation of the larger taxa in the Kebara assemblage.

A more difficult problem to resolve is whether the late MP decline in the larger taxa is strictly a local phenomenon, confined to Kebara and its immediate environs, or one that affected the region more generally; further work elsewhere in the Levant could, in fact, show that it did not occur farther afield. Fortunately, this is a potentially tractable matter that can be addressed through more regionally focused comparative studies of MP faunas. This will not be an easy or straightforward task, however, as only a few other MP assemblages have been published in detail as yet, and these are far from sufficient to cover the entire region or the more than 200-kyr span of the MP.

Moreover, there are many other factors that can intervene to complicate matters. For example, even if overhunting were, in fact, widespread in the region toward the close of the MP, the impact of such intensified hunting practices may not become evident everywhere at the same time, or at the same rate, or to the same extent (some of the more important reasons for variability of this sort are discussed at the end of the paper). In addition, depletion of large-game resources at the regional scale may be difficult to see if the sites that are being compared were occupied at different times of the year, and especially if the occupations represent different functional roles within their respective settlement systems (e.g., basecamps vs. short-term hunting stations or transitory camps). Differences in site function may become particularly problematic if comparisons are made between open-air hunting locations situated close to fixed watering points where large game could be ambushed, such as Biq’at Quneitra (Davis et al. 1988; Goren-Inbar 1990) or Far’ah II (Gilead and Grigson 1984), and basecamp occupations in caves such as Kebara or Amud (Hovers et al. 1991; Rabinovich and Hovers 2004) to which game had to be transported. Ethnographic studies among the Hadza (Tanzania) and the Kalahari San or Bushmen (Botswana/Namibia) show that remains of large game are likely to be over-represented in open-air ambush localities, while easily transportable small game will be more evident in cave sites (O’Connell et al. 1988, 1990; Brooks 1996). The contrast between caves and open-air kill localities is likely to be even more pronounced in sites predating the UP, the earliest time period for which we have reasonably convincing evidence in the form of fire-cracked rock that hunters began to transport large-mammal vertebrae and other bones of low meat or marrow utility back to camp expressly for the purpose of grease-rendering (Stiner 2003; Speth 2010a).

So my goal in this paper is quite modest. What I hope to show is that Kebara provides reasonably clear evidence for a steady decline after about 55–60 kyr in the proportional representation of large game, particularly red deer (Cervus elaphus) and aurochs (Bos primigenius), and a concomitant increase in the importance of gazelles (Gazella gazella) and fallow deer (Dama mesopotamica), including juveniles of these taxa; and that these changes are very likely unrelated to, or at best only loosely driven by, climatic fluctuations during the Late Pleistocene. We must await future zooarchaeological work elsewhere in the Southern Levant and beyond to decide whether Kebara’s sequence is an isolated occurrence, or instead part of a broader trend brought about either by (1) growing human populations (via increases in fertility and/or survivorship of autochthonous residents, or the influx of MP hominins from more northerly regions of Eurasia where MIS 4 climatic conditions were deteriorating, as suggested many years ago by Bar-Yosef 1995: 516); or (2) a significant change in the way Levantine hominins hunted large animals (e.g., the introduction of new hunting weapons, or a shift from reliance on solitary to communal methods of procurement, or possibly even the increased use of fire to procure or manage their plant and animal resources); or (3) some combination of these.

Kebara and Its Fauna

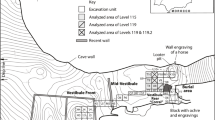

Kebara is a large cave on the western face of Mt. Carmel (Israel), about 30 km south of Haifa and 2.5 km east of the present-day Mediterranean shoreline (Fig. 3.1). Two major excavations at the site, the first conducted by Moshe Stekelis between 1951 and 1965 (Schick and Stekelis 1977), the second by a French-Israeli team co-directed by Ofer Bar-Yosef and Bernard Vandermeersch between 1982 and 1990 (Bar-Yosef 1991; Bar-Yosef et al. 1992), yielded many thousands of animal bones and stone tools from a 4-m deep sequence of MP deposits dating between approximately 60 and 48 kyr (Valladas et al. 1987).

Stekelis’s excavations were conducted within 2 × 2-m grid squares using arbitrary horizontal levels or “spits,” typically 10 or 20 cm in thickness. Almost all of the excavated deposits were screened and all faunal material, including unidentifiable bone fragments, was kept. Depths for levels were recorded in cm below a fixed datum. In the more recent work at the site, directed by Bar-Yosef and Vandermeersch, the excavators employed 1-m grid units (often divided into smaller subunits), many items (including fauna) were piece-plotted, and wherever possible they followed the natural stratigraphy of the deposits, using levels that seldom exceeded 5 cm in thickness. Depths were again recorded in cm below datum, using the same reference point that Stekelis had used. The newer excavations recognized nine natural stratigraphic levels (couches) within the Mousterian sequence: level XIII (bottom) to level V (top). The Early Upper Paleolithic (EUP) sequence begins with level IV.

One of the problems that has plagued the analysis of the Kebara fauna from the outset is how to make effective use of all of the faunal materials recovered by both Stekelis and Bar-Yosef-Vandermeersch. The problem stems from their differing strategies for handling the site’s complex stratigraphy. As already noted, Stekelis, like many of his contemporaries in the 1950s, excavated the site using relatively thick, arbitrary, horizontal spits, whereas the more recent work used much thinner levels that for the most part tracked the natural, sometimes irregular or discontinuous, sometimes sloping, stratigraphy of the deposits. It is clear therefore that Stekelis’s recovery methods pooled bones that in reality derived from stratigraphically different layers within the deposits. In an ideal world, we would be best off ignoring these materials altogether and working exclusively with the collections from the more recent excavations. Unfortunately, were we to do so, many of our analyses would be impossible because the Bar-Yosef-Vandermeersch assemblage, by itself, is simply too small.

Fortunately, both excavations used the same datum point to record the depth or z-coordinate of the artifacts and bones. So, for a number of our analyses we simply divide the depths into arbitrary half-meter increments. While admittedly crude, these arbitrarily pooled levels should suffice to reveal temporal patterns that are robust, of large magnitude, and unfold over long periods of time.
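The half-meter pooling amounts to a simple binning of specimens by depth below the shared datum, followed by a percentage per bin. The sketch below is a minimal illustration under stated assumptions, not the procedure actually used to build the figures: the specimen records are invented, the helper names (`bin_top`, `percent_large_by_bin`) are hypothetical, and "large" is taken here to mean aurochs and red deer only.

```python
from collections import Counter

# Hypothetical specimen records: (depth in cm below the common datum, taxon).
# Invented for illustration only; these are not Kebara data.
SPECIMENS = [
    (612, "Bos"), (640, "Gazella"), (655, "Cervus"), (690, "Dama"),
    (520, "Gazella"), (535, "Dama"), (548, "Gazella"),
    (470, "Gazella"), (455, "Bos"), (430, "Gazella"),
]

LARGE_TAXA = {"Bos", "Cervus"}  # aurochs and red deer (assumed definition)
BIN_CM = 50                     # half-meter increments


def bin_top(depth_cm, width=BIN_CM):
    """Shallowest depth of the arbitrary bin containing depth_cm,
    e.g. 612 cm falls in the 600-649 cm bin and returns 600."""
    return (depth_cm // width) * width


def percent_large_by_bin(records, width=BIN_CM):
    """Percentage of ungulate specimens (NISP) in each bin that are large taxa."""
    totals, large = Counter(), Counter()
    for depth, taxon in records:
        b = bin_top(depth, width)
        totals[b] += 1
        if taxon in LARGE_TAXA:
            large[b] += 1
    # Keys run from shallow (younger) to deep (older); empty bins are absent.
    return {b: 100.0 * large[b] / totals[b] for b in sorted(totals)}


print(percent_large_by_bin(SPECIMENS))
```

Bins that hold only a handful of specimens yield unstable percentages, which is why coarser pooling is sometimes unavoidable.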

But for certain questions, especially those that seek to track change over time in the procurement and handling of the largest ungulates, or of juveniles alone, small sample sizes sometimes rule out even half-meter increments. For these questions, we need to split the total MP assemblage into just two stratigraphic subsets or groups, a “lower” one and an “upper” one. In order to do this, however, we need a basis for deciding at what depth below datum to place the boundary between the two groups. As documented in previous publications (Speth 2006; Speth and Tchernov 2007), the Kebara MP sequence can be divided into a “midden period” (levels XI-IX), with evidence of intensive hunting that was most probably concentrated in the cooler months of the year, and the latest MP levels (levels VII-V), with little or no evidence of midden accumulation and less evidence of hunting activity (Speth and Clark 2006). This leaves us with the problem of where to place level VIII, which produced a small faunal assemblage that in many respects is transitional between the midden period and the later occupations. Although the decision is somewhat arbitrary, and I have vacillated over the years on this issue, my inclination is to group the bones from this level with the preceding midden period because it is not until level VII that midden accumulation dwindled to the point that it is no longer easily detectable in the composition or spatial patterning of the site’s faunal remains. Thus, in temporal comparisons where I need to maximize the size of the samples, I dichotomize the MP material into two stratigraphic groups, the “lower” one consisting of levels XIII-VIII, the “upper” one consisting of levels VII-V.

That still leaves the question of how to link the Stekelis materials with the faunal remains recovered by the Bar-Yosef/Vandermeersch excavations. In other words, what should we use as an approximate depth below datum for the boundary between the “lower” and “upper” MP groups, knowing that the MP deposits are not entirely horizontal throughout the site? My solution to this problem is again somewhat crude, though relatively straightforward (see Speth and Clark 2006). I generated two histograms, one showing the frequency distribution of depths below datum for the MP bones in Bar-Yosef and Vandermeersch’s “lower” levels (XIII-VIII), the other showing the spread of depths for the material in their “upper” levels (VII-V). Fortunately, there is remarkably little overlap between the two histograms, with the boundary (at least in those parts of the site that Bar-Yosef and Vandermeersch were able to sample) lying at a depth of about 550 cm below datum. Thus, crude as it might be, the 550-cm figure will serve as the dividing point between the “lower” and “upper” MP assemblages in those temporal analyses where it is necessary to combine the Stekelis and Bar-Yosef/Vandermeersch assemblages.
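The two pooling rules can be written out explicitly. In the sketch below the level-to-group mapping follows the text (levels XIII-VIII "lower", VII-V "upper") and the 550-cm boundary is the figure given above; everything else is an assumption. In particular, `best_cutoff` is a hypothetical formalization of the histogram comparison: it picks the candidate depth that misassigns the fewest bones of known level, whereas the boundary in the text was read from the histograms themselves. The depth lists are invented.

```python
# Mousterian levels, bottom (oldest) to top (youngest), as listed in the text.
LEVELS = ["XIII", "XII", "XI", "X", "IX", "VIII", "VII", "VI", "V"]
LOWER_LEVELS = set(LEVELS[:6])  # XIII-VIII (midden period plus VIII)
UPPER_LEVELS = set(LEVELS[6:])  # VII-V
BOUNDARY_CM = 550               # depth below datum separating the groups


def group_from_level(level):
    """Group for a bone with natural-level provenience (Bar-Yosef/Vandermeersch)."""
    if level in LOWER_LEVELS:
        return "lower"
    if level in UPPER_LEVELS:
        return "upper"
    raise ValueError(f"not an MP level: {level}")


def group_from_depth(depth_cm, boundary=BOUNDARY_CM):
    """Group for a bone known only by depth (e.g., a Stekelis spit).
    Depth increases downward; a bone exactly at the boundary is
    assigned to "upper" (an arbitrary convention)."""
    return "lower" if depth_cm > boundary else "upper"


def best_cutoff(lower_depths, upper_depths, candidates):
    """Hypothetical rule: the candidate depth that misassigns the fewest
    bones of known level; ties go to the first candidate listed."""
    def misassigned(cut):
        return (sum(1 for d in lower_depths if d <= cut)
                + sum(1 for d in upper_depths if d > cut))
    return min(candidates, key=misassigned)


# Invented depths (cm below datum) for bones of known level.
lower = [556, 575, 590, 600, 640]
upper = [480, 510, 530, 545, 548]
print(best_cutoff(lower, upper, range(500, 651, 10)))  # 550
```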

I evaluate statistical significance using three methods: for the difference between two percentages, I use the arcsine transformation (ts), as defined by Sokal and Rohlf (1969: 607–610); to evaluate differences between means I use standard unpaired t-tests (t); and to assess correlation I use the non-parametric Spearman’s rho (rs).
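The first of these tests can be sketched in plain Python. As I understand it, Sokal and Rohlf's test for the equality of two percentages works in degrees, with a variance of roughly 820.8/n for each transformed percentage; the version below works in radians, where the same variance is 1/(4n), which yields essentially the same statistic. The function names and the example numbers are hypothetical and do not reproduce any Kebara result; large samples are assumed, so ts is referred to the normal distribution (t with infinite degrees of freedom).

```python
import math


def arcsine_ts(p1, n1, p2, n2):
    """Compare two proportions with the angular (arcsine square-root)
    transformation.

    p1, p2: proportions between 0 and 1; n1, n2: sample sizes (e.g., NISP).
    Angles are in radians, where arcsin(sqrt(p)) has approximate variance
    1/(4n).
    """
    theta1 = math.asin(math.sqrt(p1))
    theta2 = math.asin(math.sqrt(p2))
    se = math.sqrt(1.0 / (4 * n1) + 1.0 / (4 * n2))
    return (theta1 - theta2) / se


def two_tailed_p(ts):
    """Two-tailed p-value, treating ts as a standard normal deviate."""
    return math.erfc(abs(ts) / math.sqrt(2))


# Hypothetical example: 25 % of 100 specimens versus 75 % of 100 specimens.
ts = arcsine_ts(0.25, 100, 0.75, 100)
print(round(ts, 2), two_tailed_p(ts))
```

The transformation is used because the variance of a raw proportion depends on the proportion itself; after the arcsine square-root transform it depends, approximately, only on sample size.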

Most of the larger mammal remains in Kebara’s MP deposits, expressed as percentages of total ungulate NISP values, derive from just two taxa: mountain gazelle (G. gazella, 46.2 %) and Persian fallow deer (D. mesopotamica, 28.3 %). Other animals, represented by smaller numbers of specimens (also using NISP), include aurochs (Bos primigenius, 12.2 %), red deer (Cervus elaphus, 6.8 %), wild boar (Sus scrofa, 3.2 %), small numbers of equid remains (1.9 %), very likely from more than one species, wild goat (Capra cf. aegagrus, 0.8 %), roe deer (Capreolus capreolus, 0.4 %), hartebeest (Alcelaphus buselaphus, 0.2 %), and rhinoceros (Dicerorhinus hemitoechus) (<0.1 %) (see Fig. 3.2; Davis 1977; Eisenmann 1992; Speth and Tchernov 1998, 2001; Tchernov 1998).
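Because the figures above are percentages of total ungulate NISP, they derive from raw counts by a single division and can be combined by simple addition. The sketch below uses only the published percentages (the raw counts are not given here, so `percent_nisp` is shown only as the general conversion, and the helper names are hypothetical). It confirms that the listed values sum to 100 % and that aurochs and red deer together account for 19.0 % of the MP ungulate NISP.

```python
# Percentages of total ungulate NISP in Kebara's MP deposits, as listed above.
# Rhinoceros (<0.1 %) is left out.
MP_PERCENT_NISP = {
    "gazelle": 46.2,
    "fallow deer": 28.3,
    "aurochs": 12.2,
    "red deer": 6.8,
    "wild boar": 3.2,
    "equids": 1.9,
    "wild goat": 0.8,
    "roe deer": 0.4,
    "hartebeest": 0.2,
}


def percent_nisp(counts):
    """Convert raw NISP counts per taxon into percentages of the total."""
    total = sum(counts.values())
    return {taxon: 100.0 * n / total for taxon, n in counts.items()}


def combined_share(percentages, taxa):
    """Summed percentage for a group of taxa."""
    return sum(percentages[t] for t in taxa)


print(round(sum(MP_PERCENT_NISP.values()), 1))                            # 100.0
print(round(combined_share(MP_PERCENT_NISP, ["aurochs", "red deer"]), 1))  # 19.0
```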

During the MP, nearly half of Kebara’s ungulate remains derive from an extremely dense concentration of bones which accumulated within a roughly 2- to 4-m-wide zone close to the cave’s north wall (the “north-wall midden” or just “midden”), and particularly from levels IX-XI (the “midden period”). In the central floor area of the cave, separated by a gap of several meters from the north-wall midden, and most clearly evident in level X (the décapage), bones were encountered in small, discrete concentrations or patches, separated from each other by zones with few or no bones (Meignen et al. 1998: 230, 231; Speth et al. 2012). Studies of the sediments on the cave floor, using on-site Fourier transform infrared spectrometry, indicate that these localized bone concentrations reflect the original burial distribution, not the end-product of selective dissolution following burial (Weiner et al. 1993). While the origin of these curious circular bone concentrations still eludes us, their form and content are not unlike the ubiquitous trash-filled pits characteristic of the Holocene archaeological record in both the Old and New World (Speth et al. 2012).

While there is clear evidence throughout the cave’s MP sequence for the intermittent presence of carnivores, most notably spotted hyenas (Crocuta crocuta), the modest numbers of gnawed and punctured bones, the scarcity of gnaw-marks on midshaft fragments (Marean and Kim 1998: S84, S85), and the hundreds of cutmarked and burned bones, as well as hearths, ash lenses, and large numbers of lithic artifacts, clearly testify to the central role played by humans in the formation of the bone accumulations (see Speth and Tchernov 1998, 2001, 2007 for more detailed discussions of the taphonomy).

Evidence for Overhunting

Since several of the more obvious lines of evidence that are suggestive of overhunting at Kebara have been presented elsewhere (Speth 2004a; Speth and Clark 2006), here I will just briefly summarize them and introduce other data that I think further support this idea. I should hasten to point out, however, that the specter of equifinality is close at hand and will follow my arguments from beginning to end (Munro and Bar-Oz 2004). Since many of the changes that I view as likely evidence of overhunting, particularly the rapid fall off in the proportion of aurochs and red deer, occur at more or less the same time that midden accumulation declined or ceased in the cave, it is entirely conceivable that we are seeing a change in Kebara’s function as a settlement, and/or the time of year when Neanderthals occupied the cave, and/or a shift in favored hunting areas, and/or the use of new or different hunting technologies or strategies, such as more communal hunting of gazelles, and perhaps fallow deer, rather than solitary ambush hunting of aurochs and red deer. And of course the decline could reflect a reduction in the availability of large game within range of the site brought about by paleoenvironmental fluctuations. Some of these possibilities are easier to deal with than others. Thus, as I will show momentarily, the fall-off in the proportions of large taxa in Kebara’s ungulate assemblage is unlikely to be the result of major fluctuations in paleoclimate, since the decline in the frequency of these taxa continues unabated across major up and down oscillations in regional temperature and precipitation. Some of the other potential sources of equifinality, however, are not so easy to address and, as a consequence, cannot be convincingly dismissed.

One of the most striking features of Kebara’s faunal record, and perhaps the most obvious sign that local populations some 55–60 kyr may have begun to overhunt their preferred large-game resources, is the sharp decline over the 4-m-long late MP sequence in the frequency of the two principal large-bodied animals—aurochs (Bos primigenius) and red deer (Cervus elaphus) (Fig. 3.3). In this figure, I use the combined ungulate assemblages from the Stekelis and Bar-Yosef/Vandermeersch excavations in order to achieve the largest possible samples. As already discussed, this lumping procedure obviously comes with considerable loss of stratigraphic precision. Nevertheless, the pattern is very striking. Aurochs and red deer fall off sharply above about 600–650 cm below datum, which roughly corresponds to the end of midden accumulation in Kebara. The next figure (Fig. 3.4), which uses only the stratigraphically controlled materials for the MP fauna from the more recent Bar-Yosef/Vandermeersch excavations, but which as a consequence has to lump all of the large-bodied taxa into a single group in order to achieve adequate sample sizes, shows this pattern more clearly. Large prey decline sharply above level VIII, or after the period of midden formation.
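Spearman's rho, the correlation measure named in the methods, offers one way to check whether a depth trend like the one in Fig. 3.3 is monotonic. The sketch below is a plain-Python version that computes rho as the Pearson correlation of average ranks, so ties are handled. The bin depths and percentages in the example are invented for illustration and are not the values plotted in the figure; a positive rho here would mean that deeper (older) bins hold proportionally more large game.

```python
def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j over the run of values tied with values[order[i]].
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho as the Pearson correlation of the average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


depth_mid = [425, 475, 525, 575, 625, 675]  # hypothetical bin midpoints, cm
pct_large = [10, 12, 9, 25, 31, 28]         # hypothetical % large ungulates
print(round(spearman_rho(depth_mid, pct_large), 3))  # 0.771
```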

When the MP assemblage is divided, as discussed earlier, into just two stratigraphic groups, “lower” (bones below 550 cm) and “upper” (bones above 550 cm), the decline in the proportion of large ungulates is substantial and statistically significant (“lower” MP, 30.8 %; “upper” MP, 11.9 %; ts = 20.37, p < 0.0001). Although the samples are very small, the proportion continues to drop slightly in the EUP (10.5 %), and the difference nearly attains statistical significance (ts = 1.71, p = 0.09).
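The ts values above compare two percentages, but the chapter does not give the formula used to calculate them. One standard biometric approach tests the equality of two percentages after an arcsine-square-root (angular) transformation. The minimal sketch below assumes that form and treats ts as a standard normal deviate, which is reasonable for large samples. It may therefore not reproduce the published values exactly. The function names and the counts in the example are hypothetical, chosen for illustration only, and are not Kebara's NISP values.

```python
import math


def ts_two_percentages(k1, n1, k2, n2):
    """Approximate t_s for the difference between two percentages.

    Assumption: arcsine-square-root (angular) transformation; the chapter
    does not state which formula produced its t_s values.
    k = NISP of the category of interest (e.g., large ungulates)
    n = total NISP of the sample
    """
    p1, p2 = k1 / n1, k2 / n2
    # Angular transform (radians) stabilizes the variance of a proportion.
    a1 = math.asin(math.sqrt(p1))
    a2 = math.asin(math.sqrt(p2))
    # The sampling variance of asin(sqrt(p)) is approximately 1 / (4n).
    se = math.sqrt(1 / (4 * n1) + 1 / (4 * n2))
    return (a1 - a2) / se


def two_sided_p(ts):
    """Two-sided p-value, treating t_s as a standard normal deviate."""
    return math.erfc(abs(ts) / math.sqrt(2))


# Hypothetical counts for illustration only (not the Kebara NISP values).
ts = ts_two_percentages(308, 1000, 119, 1000)
print(f"ts = {ts:.2f}, p = {two_sided_p(ts):.2g}")
```

A positive ts means the first sample has the higher percentage. With small samples, such as those from the EUP, the normal approximation becomes less reliable.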

As already noted, large-scale paleoenvironmental changes are unlikely to be the cause of this decline in large ungulates; the trend continues unabated across several major swings in regional paleoclimate that are clearly evident in the speleothem-based oxygen-isotope record from Soreq Cave in central Israel (Fig. 3.5; data from Bar-Matthews et al. 1998, 1999). Very similar patterns are seen in the speleothem records from two other caves, Peqiin Cave in northern Israel and Ma’ale Efrayim Cave in the rain shadow to the east of Israel’s central mountain ridge (Bar-Matthews et al. 2003; Vaks et al. 2003). The isotope data shown in this figure have been “smoothed” using a cubic spline interpolation procedure, which estimates a value for y from four values of x at a time (i.e., a fixed window width). The program uses “…a series of cubic (third-order) polynomials to fit a moving window of data, four points at a time” (SAS Institute 1998: 227). This smoothing eliminates many of the minor oscillations in the data, making the major trends easier to perceive. According to the Soreq record, δ¹⁸O values were generally lower between roughly 48 and 54 kyr, denoting a shift toward somewhat warmer, moister conditions. Chronologically, this interval corresponds approximately to Kebara’s levels VI and VII (Valladas et al. 1987). It is bracketed on either side by periods of generally higher δ¹⁸O values, indicative of colder, drier conditions, corresponding at least approximately to levels XI–IX and to the terminal MP (V) and EUP (IV) levels.

Smoothed oxygen-isotope record (δ¹⁸O ‰ PDB), derived from speleothems in Soreq Cave (Israel), for the period 45–60 kyr. Original data provided by M. Bar-Matthews (see Bar-Matthews et al. 1999: 88, their Fig. 1A, for the unsmoothed record)

Other evidence also suggests that climatic fluctuations during MIS 3 and 4 were not sufficient to have significantly altered the resources that would have been available to MP hominins in the area, and therefore may not have been the principal cause of the declining frequencies of the larger ungulates that we see at Kebara, Amud, and elsewhere. For example, the rodent faunas from Amud show little evidence of dramatic change in the nature and composition of the habitats surrounding the cave during the late MP.

The results of this study suggest that changes in relative abundance of micromammal species throughout the Amud Cave sequence are likely the result of taphonomic biases. Once such biases are addressed, there is no shift in the presence-absence; rank abundance and diversity measures of these communities in the time span 70-55 ka. The persistence of the micromammal community is consistent with low amplitude climate change. There is no indication for a decrease in productivity and aridification throughout the sequence of the cave, specifically toward the end of the sequence at 55 ka. The species present are suggestive of a mesic humid Oak woodland environment in Amud Cave and most of the contemporaneous Middle Paleolithic sites in northern Israel. Consequently, climate change may not have had a cause-and-effect relationship with the disappearance of the local Neanderthal populations from the southern Levant (Belmaker and Hovers 2011: 3207).

Carbon and oxygen isotope studies of goat (Capra aegagrus) and gazelle (G. gazella) tooth enamel from Amud Cave by Hallin et al. (2012: 71) reach very similar conclusions:

…the disappearance of Neandertals after MIS 3 does not appear to be due to climate forcing. Our isotope data from Amud Cave and the species composition of its micromammals (Belmaker and Hovers 2011) provide no support for major climate change during MIS 3….

Interestingly, in discussions of the BSR only a handful of studies consider increases in the hunting of immature animals as part and parcel of the intensification process (see, for example, Davis 1983; Koike and Ohtaishi 1985, 1987; Broughton 1994, 1997; Munro 2004; Stiner 2006; Lupo 2007; Wolverton 2008). One of the most explicit recent uses of juvenile frequencies as evidence for overhunting during the Levantine Epipaleolithic is by Stutz et al. (2009: 300). They report a major increase in the proportion of unfused versus fused gazelle first phalanges, from values below 10 % in Kebaran and Geometric Kebaran assemblages to values in excess of 35 % in Natufian assemblages.

Usually, however, the frequency of immature animals in an archaeological faunal assemblage is discussed either in terms of taphonomy (immature bones are under-represented because they do not preserve as well as the bones of adults) or in terms of seasonality (many juveniles implying that hunting focused on the fawning or calving season) (e.g., Monks 1981; Klein 1982; Lyman 1994; Pike-Tay and Cosgrove 2002; Munson and Garniewicz 2003; Munson and Marean 2003). But the frequency of immature animals can reflect factors other than these two customary ones. For example, in communal hunting the scarcity or absence of juveniles can also be an inadvertent consequence of the behavior of the animals as they are maneuvered toward and into a kill (Speth 1997). American bison (Bison bison) provide a case in point. When they are “gathered” and moved (but not yet stampeded) from a collecting area toward a trap or cliff, the animals often become strung out into a line or column, somewhat as dairy cows do when they return to the barn at the end of the day. Spatially, the column is not a random mix of all ages and both sexes. The leaders are generally adults, typically females, with the calves lagging behind and some of the adult bulls guarding the rear. Once the animals reach the trap or cliff, the hunters stampede the line from the rear. This effectively blocks the animals at the front of the column from turning and escaping, and pushes them instead into the trap or over the precipice. The animals farther back in the line or column, which may include many of the calves, are far more likely to escape. As a result, juveniles may end up under-represented or missing altogether in a communal kill for reasons unrelated to either season or taphonomy.

As far as gazelles are concerned, modern wildlife management studies show that they can be gathered into modest-sized herds. They will also move along low walls and even white plastic strips lying on the ground rather than stepping or jumping over them (Speth and Clark 2006; Holzer et al. 2010), a behavior very similar to what has been observed in caribou, reindeer, and many other ungulates (e.g., Stefansson 1921: 400–402; Wolfe et al. 2000; Benedict 2005; Brink 2005). Unfortunately, we know very little (at least judging by the published literature) about how gazelles distribute themselves spatially by age and sex as they are gathered and maneuvered toward and into a corral or trap. Hence, we do not know whether juveniles would end up under-represented in the take from a communal hunt, and of course we have no idea whether MP hunters in the Southern Levant exploited gazelles in this manner. We do know that Eurasian Neanderthals used communal tactics to hunt reindeer, bison, and other large animals. There is therefore no justification for assuming a priori that Levantine Neanderthals or Anatomically Modern Humans lacked the wherewithal to hunt gazelles communally (Gaudzinski 2006; Rendu et al. 2012).

Under most circumstances, however, I suspect that the targeting of juveniles is a deliberate choice made by hunters. Viewed from the perspective of diet breadth models, the hunters are faced with a decision: should they invest time and effort to pursue, kill, transport, and process a small-bodied juvenile with limited subcutaneous and marrow fat deposits, or forego that opportunity and go after a prime adult that provides larger quantities of meat and is much more likely to be endowed with substantial deposits of fat? Seen in this way, elevated numbers of immature animals in an assemblage provide a pretty good indication of stress-related resource intensification (see also Stutz et al. 2009).
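The chapter invokes diet breadth models without specifying one. The minimal sketch below uses the classic prey-choice model from optimal foraging theory, which is assumed here and not drawn from the chapter. It ranks prey types by profitability, meaning energy per unit handling time (e/h). Prey types are then added in rank order for as long as each type's profitability exceeds the return rate of the diet already chosen. The class, the function names, and all parameter values are hypothetical and in arbitrary units.

```python
from dataclasses import dataclass


@dataclass
class PreyType:
    name: str
    encounter_rate: float  # encounters per hour of search (lambda)
    energy: float          # net energy gained per item taken (e)
    handling_time: float   # hours to pursue, kill, transport, and process (h)

    @property
    def profitability(self) -> float:
        return self.energy / self.handling_time


def optimal_diet(prey_types):
    """Classic prey-choice (diet breadth) model.

    Rank prey by e/h, then add them in rank order while a type's
    profitability exceeds the return rate of the higher-ranked types alone.
    """
    ranked = sorted(prey_types, key=lambda p: p.profitability, reverse=True)
    diet = []
    for prey in ranked:
        # Return rate per unit search time: sum(lambda*e) / (1 + sum(lambda*h)).
        gain = sum(p.encounter_rate * p.energy for p in diet)
        time = 1 + sum(p.encounter_rate * p.handling_time for p in diet)
        if prey.profitability <= gain / time:
            break  # this and all lower-ranked types are ignored on encounter
        diet.append(prey)
    return diet


# Hypothetical values, for illustration only.
juvenile = PreyType("juvenile gazelle", 0.2, 10, 2)       # e/h = 5
for adult_rate in (0.5, 0.05):
    adult = PreyType("adult gazelle", adult_rate, 60, 5)  # e/h = 12
    names = [p.name for p in optimal_diet([adult, juvenile])]
    print(adult_rate, names)
```

In this model, whether juveniles enter the diet depends on the encounter rate with the higher-ranked adults, not on how common juveniles are. When adults are encountered often, juveniles are passed over. When adult encounter rates fall, for example through overhunting, juveniles are taken.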

If broad spectrum resources world-wide are generally expensive to collect and process relative to other foods, then, contrary to common argument, changes in their own abundance, however great, are unlikely to account for their adoption, not only in arid Australia, but anywhere (Edwards and O’Connell 1995: 775).

In a very dimorphic species, immature males grow much faster, and put on body fat sooner, than immature females, and hence hunters may treat them more like adults. This is testable in principle, but, unfortunately, in most cases archaeologists are hard put to sex the bones of juveniles, especially when the bones are in fragmentary condition (for an example of a bison kill where hunters did in fact selectively hunt juvenile males, see Speth and Clark 2006: 16, their Figs. 11, 12).

Viewed from a rather different vantage point, if one sees big-game hunting as an enterprise driven to any significant extent by social or political goals, such as prestige or costly signaling, one would come to more or less the same conclusion—hunters generally would be expected to focus their efforts on prime adults, not juveniles (e.g., Speth 2010b).

Of course there are some circumstances in which hunters will deliberately go after very young animals, even fetuses, primarily as delicacies because their meat is more tender than that of older adults. Soft skins may also be an important attraction (Binford 1978: 85, 86). It is doubtful, however, that hunting for either of these purposes in the MP or UP would have occurred often enough to produce recognizable signals in the archaeological record.

In sum, where preservation can be shown not to be a major factor, and setting aside the possibility that MP hominins hunted gazelles communally, some combination of seasonality and the degree of stress-related intensification will likely account for much if not most of the variability in the frequency of juvenile remains.

The frequency of juveniles at Kebara may well present just such a case. The proportion of immature remains among the smaller ungulates (gazelle, roe deer, fallow deer, and wild goat) increases over the course of the MP sequence. Age in this case is based on the fusion state of postcranial elements and on the teeth of immature animals. This trend can be seen in Figs. 3.6 and 3.7. In the first of these figures, I use just the bones recovered in the Bar-Yosef/Vandermeersch excavations. These materials were excavated following the natural stratigraphy of the deposits and so provide the most reliable data set, but using them alone forces me to exclude the wealth of material from the Stekelis excavations. When the materials from both excavations are combined, as shown in the second figure (Fig. 3.7), the substantially enlarged assemblage reveals the increase in juveniles far more clearly. When the aggregated “lower” and “upper” MP assemblages and the EUP are compared, the increase is significant, or nearly so, across all three samples (“lower” MP 8.7 % vs. “upper” MP 11.9 %, ts = 2.92, p < 0.01; “upper” MP vs. EUP 14.2 %, ts = 1.73, p = 0.08). Finally, Fig. 3.8 shows the increasing proportion of juveniles in the younger levels of Kebara’s MP sequence, with gazelles and fallow deer plotted separately. Both species show similar trends, although the increase is more pronounced in fallow deer.

Despite very small sample sizes, a similar pattern of increasing juvenile representation, based again on the fusion state of postcranial bones, also seems to occur in the larger ungulates (“lower” MP 4.3 %, “upper” MP 7.4 %, EUP 8.8 %). The only comparison that attains statistical significance, however, is between the “lower” MP and the EUP (ts = 2.56, p = 0.01). This result is weakly echoed by the proportion of aurochs and red deer teeth (both taxa combined) from immature animals. Both eruption and crown height were used to assign specimens to immature and adult age classes, as described in Stiner (1994: 289–291; see also Speth and Tchernov 2007). The proportion of juveniles is 14.9 % in the “lower” MP group, 27.3 % in the “upper” MP group, and 25.6 % in the EUP. None of these differences is statistically significant, however, given the minuscule samples of these taxa in the younger levels. If the “upper” MP and EUP samples are combined, the difference approaches significance (ts = 1.84, p = 0.07).

The targeting of juveniles changes, at least in gazelle, in another interesting and admittedly unexpected way as well. To see this, I compare the average crown height of gazelle lower deciduous fourth premolars (dP4) in the “lower” and “upper” MP groups and the EUP. Average crown height provides a crude index of the age of immature animals. A smaller value indicates more heavily worn teeth and hence older animals, and a larger value indicates less worn teeth and hence younger animals. In the Kebara assemblage, there is no significant difference in average crown height between the “lower” (5.0 mm) and “upper” (5.1 mm) MP stratigraphic groups (t = 0.31, p = 0.76). Both groups, however, differ significantly, or nearly so, from the larger crown height value seen in the EUP (5.8 mm; “lower” MP vs. EUP, t = 2.72, p = 0.008; “upper” MP vs. EUP, t = 1.79, p = 0.08). These data suggest that, by the end of the MP, Kebara’s hunters had not only begun to target more juvenile gazelles but perhaps also younger individuals within the juvenile age classes. This assumes, of course, that the increasing number of younger juveniles is not the result of a shift in the seasonal timing of gazelle hunting, one of those ever-present problems of equifinality. No comparable trend is evident in the dP4s of fallow deer.
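The t values for the crown-height comparisons are reported without stating which two-sample test was used. The minimal sketch below assumes the classic Student's t-test with a pooled variance; Welch's unequal-variance test would be a reasonable alternative. The function name and the crown heights in the example are hypothetical and do not reproduce Kebara's measurements.

```python
import math
from statistics import mean, variance


def pooled_t(x, y):
    """Student's two-sample t with pooled variance; returns (t, df).

    Assumption: the chapter reports t and p but not the test variant.
    """
    n1, n2 = len(x), len(y)
    df = n1 + n2 - 2
    # Pooled estimate of the common variance (statistics.variance uses n - 1).
    sp2 = ((n1 - 1) * variance(x) + (n2 - 1) * variance(y)) / df
    t = (mean(x) - mean(y)) / math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return t, df


# Hypothetical gazelle dP4 crown heights (mm), for illustration only.
mp = [4.6, 5.2, 4.9, 5.3, 5.0, 4.8]
eup = [5.9, 5.5, 6.1, 5.7]
t, df = pooled_t(mp, eup)
print(f"t = {t:.2f}, df = {df}")
```

A negative t here means the MP sample has the lower mean crown height, that is, more heavily worn teeth. A two-sided p-value requires the t distribution, for example `2 * scipy.stats.t.sf(abs(t), df)` if SciPy is available. `scipy.stats.ttest_ind` performs the whole test directly.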

Unfortunately, yet another issue of equifinality enters the picture here. The greater average crown height seen in the EUP dP4s could also reflect climatic or environmental shifts that, following the MP, reduced the abrasiveness of the forage available to the gazelles. I see no obvious way at present to examine this possibility, but it is a factor that should be kept in mind.

Finally, the larger crown height value might also indicate that the gazelles themselves had become larger in the EUP. Measurements on over 400 of Kebara’s gazelle astragali (tali) suggest the opposite, however. The greatest lateral length (GLl in von den Driesch’s 1976 terminology), for example, declines from a mean of 2.95 cm (N = 293) in the MP (all levels combined) to 2.86 cm (N = 115) in the EUP, a change that is highly significant (t = 5.95, p < 0.0001). Similarly, the maximum distal breadth (Bd in von den Driesch’s terminology) declines from 1.76 cm (N = 329) in the MP to 1.72 cm (N = 124) in the EUP, again a significant change (t = 3.79, p = 0.0002). Whether the decline in the size of the astragali is due to an overall reduction in the average body size of the gazelles, or a higher proportion of females among the kills, or the increased representation of juveniles, or some combination of these, it seems clear that the increase in average crown height of the juvenile gazelles in the EUP is not likely to be due to an overall increase in the body size of the animals.

The gazelles reveal another interesting temporal pattern. Not only does the proportion of juveniles increase toward the end of the MP, as does the proportion of younger individuals among the juveniles, but the average age of the adult gazelles appears to decline as well. In Fig. 3.9, mean crown heights of the lower (mandibular) third molar (M3) of adult gazelles are plotted by arbitrary 1-m-thick levels; such coarse stratigraphic lumping was necessary to obtain minimally adequate sample sizes. Comparable data for fallow deer are also included. The figure suggests that mean crown heights in adult gazelles increase from the beginning of the sequence into the EUP. The graph shows what appears to be a reasonably clear trend, but the sample sizes are small. Only one of the pairwise comparisons, between the 400–500 cm MP level and the EUP, is significant, or nearly so (t = 1.75, p = 0.08). The data can also be pooled further into just the two MP stratigraphic groups. The mean crown heights of adult gazelle M3s in the “lower” and “upper” MP groups then do not differ significantly from each other (t = 0.48, p = 0.63), but both differ from the EUP value (“lower” MP vs. EUP, t = 4.76, p < 0.0001; “upper” MP vs. EUP, t = 3.64, p < 0.001). Finally, the individual crown heights of adult gazelle M3s can be correlated with their depths below datum. The resulting coefficient, while not particularly strong, is negative, as expected, and statistically significant (rs = −0.23, p < 0.0001).
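The rs value above is a rank correlation between crown height and depth below datum. The sketch below computes Spearman's rank correlation as Pearson's r on average ranks, which handles tied depths or crown heights correctly. It assumes that this is the statistic reported and omits the significance test. The function names and the example measurements are hypothetical.

```python
import math


def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rs(x, y):
    """Spearman's rank correlation: Pearson's r computed on average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


# Hypothetical adult gazelle M3 crown heights (mm) and depths below datum (cm).
depth = [420, 455, 510, 560, 600, 640, 700, 760]
crown = [6.1, 5.4, 5.9, 5.2, 5.5, 4.8, 5.0, 4.6]
print(f"rs = {spearman_rs(depth, crown):.2f}")
```

A negative rs means deeper (older) specimens tend to have lower crowns, and hence come from older adults. If SciPy is available, `scipy.stats.spearmanr` returns the coefficient together with a p-value.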

Thus, although these data are not as clear-cut as one might like, Fig. 3.9 suggests that toward the close of the MP Kebara’s Neanderthal hunters focused increasingly not only on juvenile gazelles but on younger adult gazelles as well, yet another likely sign of subsistence intensification. Interestingly, the hunters also increased their reliance on juvenile fallow deer, as already shown, but the crown height data provide no evidence that their use of young adult deer increased toward the end of the sequence. Unfortunately, the sample of measurable red deer and aurochs M3s is much too small to see whether similar targeting of younger adults occurred in these taxa as well.

The Kebara faunal record shows another interesting trend that also appears to cross-cut the speleothem paleoclimate record, and could therefore be another sign of resource intensification. Among the ungulates being hunted by Neanderthals at Kebara, gazelles are by far the smallest (the only other comparably small ungulate, roe deer, is exceptionally rare throughout the sequence). Modern male gazelles, on average, weigh only about 25.2 kg; females weigh about 18.3 kg (Baharav 1974; Mendelssohn and Yom-Tov 1999: 250; Martin 2000). Persian fallow deer, though larger than gazelles, are still relatively small animals. Unfortunately, while body-weight data for European fallow deer (Dama dama) are widely available for both farmed and wild animals, reliable information for the Persian form (D. mesopotamica) is virtually non-existent. Nevertheless, it is clear that the Near Eastern cervid is larger than its European cousin, with adult males often exceeding 100 kg and females falling somewhere around 60 or 70 kg (e.g., Haltenorth 1959; Chapman and Chapman 1975; Mendelssohn and Yom-Tov 1987; Nugent et al. 2001). There is fairly abundant evidence in the ethnographic literature that hunters commonly transport the entire carcass back to camp when they are dealing with prey in the size range of gazelles and fallow deer, but become increasingly selective in what body parts of the bigger, heavier animals they jettison and what they transport home. In prey the size of red deer and aurochs, heads are one of the parts most often discarded at the kill (O’Connell et al. 1988, 1992: 332; Domínguez-Rodrigo 1999: 21). Kebara’s data echo the ethnographic cases quite closely—there are proportionately many fewer heads of aurochs and red deer than there are of gazelle and fallow deer (gazelle, 17.1 %; fallow deer, 11.0 %; wild boar, 12.2 %; red deer, 8.4 %; aurochs, 9.4 %). 
Isolated teeth have been excluded from this comparison because they introduce their own bias. The proportion of teeth isolated from their bony sockets increases dramatically in the largest taxa, greatly inflating the NISP counts for the heads of these animals (see Speth and Clark 2006).

Distance also enters into the hunters’ calculus of what to carry home and what to abandon at the kill. Thus, within a given body-size class, the farther the carcass has to be transported, the more likely the heads, which are bulky low-utility elements, will be jettisoned, quite likely after on-site processing to remove the tongue, brain, and other edible tissues. Figure 3.10 is interesting in this regard; it shows that, upward in the sequence, the proportional representation of gazelle heads (both mandibles and crania) falls off almost monotonically, suggesting that Kebara’s hunters may have had to travel increasingly greater distances to acquire these diminutive ungulates, increasing numbers of which were juveniles. Figure 3.11 shows that fallow deer heads also declined, although the pattern is much “noisier” than the trend for gazelles. Again, the implication is that the hunters toward the close of the MP had to travel farther to acquire deer, and, as in gazelles, despite the increasing transport costs they nonetheless brought back greater numbers of immature animals.

As usual, of course, issues of equifinality are never far away. If Neanderthal hunters toward the close of the MP began capturing larger numbers of gazelles, and possibly fallow deer, in communal drives, one might expect them to have abandoned more heads and other bulky, low-utility parts at the kill, since communal hunts would have necessitated more stringent culling decisions. Unfortunately, at the moment I see no reliable way to distinguish between these alternatives.

Discussion

As noted early on in this paper, until fairly recently it has been common in the Near East to place the onset of the BSR toward the end of the Epipaleolithic (i.e., within the Natufian), just a few millennia prior to the emergence of village-based farming economies. Exciting new work by both zooarchaeologists and paleoethnobotanists, however, is pushing the beginnings of resource intensification further back in time, back to at least 20–25 kyr, at the onset of the Kebaran, the period that marks the beginning of the Epipaleolithic (e.g., Piperno et al. 2004; Weiss et al. 2004a, b; Stiner 2009a; Stutz et al. 2009). The faunal data from Kebara Cave may push the beginnings of the process even further back in time, providing the first hint that significant resource intensification, at least in the Southern Levant, may in fact have already begun as much as 50–55 kyr, and reflect the impact of the region’s growing MP populations on their large-game resources.

Stutz et al. (2009) assembled faunal data from a number of Levantine Epipaleolithic sites. They expressed these data as a series of indices showing the abundance of small, medium, and large “big game” relative to each other and to “small game” (hares, tortoises, birds). Small “big game” comprises gazelle, roe deer, and wild goat; medium “big game” comprises fallow deer, wild boar, and hartebeest; large “big game” comprises aurochs, red deer, equids, and rhino. These indices make the decline in the abundance of both large and medium “big game” across the span of the Epipaleolithic readily apparent. I applied two of the same indices to the Kebara data: the large “big-game” index (LbgI) and the medium “big-game” index (MbgI). They show that the fall-off in the largest taxa (aurochs and red deer) very likely began well before the Epipaleolithic, perhaps as much as 50–55 kyr during the final stages of the MP (see Fig. 3.12).

Decline in the large “big-game” index (LbgI) and medium “big-game” index (MbgI) at Kebara and at a series of Levantine Epipaleolithic sites, using procedures and index values from Stutz et al. (2009). Chronological placement of the three Kebara assemblages is approximate

Incidentally, while I calculated the indices in the same way that Stutz and colleagues did, I have changed their labels in order to make them a little easier to recognize and remember (see Stutz et al. 2009, their Tables 3 and 4). I should also point out that in calculating the indices I included fragments that were identifiable only to skeletal element and approximate body-size class (e.g., gazelle-sized, fallow deer-sized, red deer-sized, aurochs-sized, etc.). One reason for doing this, aside from increasing overall sample sizes, was to reduce the bias against the largest taxa. At Kebara, very few bones of the biggest animals, most notably aurochs, could be identified to species with any degree of certainty. These bovids are represented mostly by large, unidentifiable pieces of cortical bone from limb shafts that, judging by the thickness of the shaft and its radius of curvature, clearly derived from an aurochs-sized animal. Had these fragments been excluded, red deer and especially aurochs would be severely under-represented in these analyses. Nor would reliance on teeth have eliminated the bias, because many fewer crania of the largest animals were transported back to the cave, a pattern widely documented among modern hunters and gatherers (Speth and Clark 2006: 19).
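The exact definitions of LbgI and MbgI are given in Stutz et al. (2009, their Tables 3 and 4), not in this chapter. The minimal sketch below therefore assumes the common zooarchaeological abundance-index form NISP(target) / (NISP(target) + NISP(reference groups)). The reference groups are a parameter, so they can be matched to those tables. The mapping includes fragments identified only to body-size class, as the text describes. The label spellings, the dictionary name, the function name, and the example counts are all hypothetical.

```python
from collections import Counter

# Size groups follow the chapter's summary of Stutz et al. (2009); the
# body-size-class labels for unidentified fragments are hypothetical spellings.
SIZE_GROUP = {
    "gazelle": "small", "roe deer": "small", "wild goat": "small",
    "fallow deer": "medium", "wild boar": "medium", "hartebeest": "medium",
    "aurochs": "large", "red deer": "large", "equid": "large", "rhino": "large",
    "gazelle-sized": "small", "fallow deer-sized": "medium",
    "red deer-sized": "large", "aurochs-sized": "large",
}


def abundance_index(nisp_by_taxon, target, reference):
    """NISP(target) / (NISP(target) + NISP of the reference groups).

    Assumption: generic abundance-index form; check Stutz et al. (2009,
    Tables 3 and 4) for the reference groups behind LbgI and MbgI.
    """
    totals = Counter()
    for taxon, n in nisp_by_taxon.items():
        totals[SIZE_GROUP[taxon]] += n
    num = totals[target]
    denom = num + sum(totals[g] for g in reference)
    return num / denom if denom else float("nan")


# Hypothetical NISP counts, for illustration only.
nisp = {"aurochs": 10, "aurochs-sized": 20, "fallow deer": 30, "gazelle": 60}
print(abundance_index(nisp, "large", ["medium", "small"]))  # 0.25
```

Pooling the "aurochs-sized" fragments with the aurochs identified to species is what keeps the largest taxa from being under-counted, as the text explains.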

Returning now to our discussion of Fig. 3.12, the rapid decline at Kebara in the largest taxa is to some extent compensated for by a gradual increase in the medium-sized species, especially fallow deer, which, judging from Stutz et al.’s (2009) data, do not begin their own precipitous decline until later, during the Epipaleolithic, probably sometime after about 20 kyr. Gazelles, of course, are the most abundant ungulate species throughout the sequence at Kebara, varying between about 35 and 55 % of the total ungulate assemblage (based on NISP). Over this same period fallow deer make up some 25–35 % of the assemblage, while all of the other ungulate taxa together add up to only about a quarter of the total. The ascendancy of gazelles in Levantine faunal assemblages, in fact, seems to begin much earlier in the MP, perhaps as early as 200 kyr or more (e.g., ~38 % at Misliya Cave, Yeshurun et al. 2007; see also Stiner et al. 2011: 218 and Yeshurun 2013), and persists across major region-wide fluctuations in paleoclimate, suggesting that this animal’s abundance in archaeological sites may be more a reflection of human subsistence choices than paleoenvironmental factors (Rowland 2006; Marder et al. 2011). Given the small size of the gazelles, and given the widely accepted view that MP hominins were “top predators,” living at the apex of the food chain (Bocherens 2009, 2011), the fact that MP hunters in the Southern Levant already by ~200 kyr heavily focused their efforts on this diminutive ungulate could be an indication that the roots of the BSR pre-date the late MP, perhaps by a sizeable margin.

Despite their small size, gazelles may, of course, have become a favored target of MP hunters for largely pragmatic reasons. Although the behavior of G. gazella is not well understood, these animals may have been easier than other taxa to hunt successfully. Perhaps they could be stalked, snared, or trapped more readily than the larger ungulates, or they were more easily taken in groups by parties of cooperating hunters (see also Yeshurun 2013 on the possible use of long-distance projectile weapons for hunting gazelles).

But obviously people hunt, and at times overhunt, for many reasons other than, or in addition to, the procurement of food. Some of the more obvious of these non-food motivations for hunting include: (1) procuring hides or other animal parts for clothing, footwear, shelter, containers, weapons, tools, shields, hunting disguises, ceremonial costumes, rattles, and glues (e.g., Wissler 1910; Ewers 1958: 14, 15; Gramly 1977; Klokkernes 2007); (2) gaining prestige or attaining other social or political goals (Sackett 1979; Wiessner 2002; Bliege Bird et al. 2009; Speth 2010b); (3) underwriting periodic communal aggregations, fulfilling needs and requirements of male initiation rites, vision quests, and various other ritual and ceremonial performances and observances (Ewers 1958; Sackett 1979; Potter 2000; Zedeño 2008; Bliege Bird et al. 2009); (4) controlling or eliminating dangerous predators or pests (Headland and Greene 2011); and (5) procuring meat, hides, hair, antlers, ivory, oils, scent glands, hooves, and other commodities for use as gifts or items of exchange (Lourandos 1997). Some of these motivations are unlikely to favor a small ungulate like the gazelle, and others may not be relevant to the remote time period we are considering here. However, hides for clothing, as well as footwear, shelter, and containers, may have been important to Near Eastern MP hominins and, historically at least, gazelle skins are noted as having been particularly valued for such purposes (see Bar-Oz et al. 2011). The non-pastoral nomadic Solubba provide an interesting case in point:

Several sources mention the distinctive Solubba dress…a garment made from 15 to 20 gazelle skins, the hair to the outside, open at the neck, with a hood, and long sleeves gathered at the wrist, extending down to cover the hands up to the fingers…. The men used to wear such garments either as their only clothing, or as shirts underneath the traditional bedouin [sic] costume. They reputedly were the only group to wear clothing made of skins (Betts 1989: 63).

I am not suggesting that MP hominins went around bedecked in fancy tailored garments fashioned from exquisitely tanned gazelle skins, each garment requiring in excess of a dozen animals for its manufacture! But if MP hunters and their families wore any clothing at all, and that seems increasingly plausible based on recent studies of the evolution of head and body (“clothing”) lice in humans (Kittler et al. 2003; Reed et al. 2004; Leo and Barker 2005; Toups et al. 2011), gazelles may have been important for precisely this reason, their diminutive size notwithstanding. Estimates vary considerably as to when human head and clothing lice diverged, with dates ranging from as recently as ca. 70–80 kyr to in excess of 1 Myr. These studies seem to be in somewhat greater agreement, however, when it comes to identifying where clothing first came into use, pointing not toward the arctic or subarctic, but toward sub-Saharan Africa as the most probable source. Thus, the presence of clothing during the MP in the Near East seems quite likely. If this is true, then the extent to which MP hominins might have placed pressure on gazelles (and other large game) for reasons above and beyond their use as a food resource would become a question of the number of hides needed to make the clothing, the number of individuals requiring such clothing, and the length of time the items lasted before they became unwearable and had to be replaced. And of course these hominins may have used gazelle skins for many other purposes as well, including footwear, bedding, carrying containers, shelter, and so forth. Gramly (1977) provides an intriguing study of deer hunting by the historic Huron, a North American “First Nation” (Indian) tribe living in Ontario, Canada—he shows that their need for deer hides to make clothing and moccasins often outstripped their need for meat.
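The paragraph above frames hide demand as a product of three quantities: hides per item, the number of people, and how long the items last. The minimal sketch below turns that framing into arithmetic. It takes the low end of the Solubba figure quoted in the text (15 skins per garment) as one input. The garment lifespan, the bedding item, the group size, and the function name are purely hypothetical.

```python
def annual_hide_demand(items_per_person, people):
    """Hides needed per year = people x sum(hides per item / lifespan in years).

    items_per_person: iterable of (hides_per_item, lifespan_years) pairs.
    """
    return people * sum(hides / years for hides, years in items_per_person)


# Hypothetical inputs: one 15-skin garment lasting 2 years, plus 2 hides of
# bedding replaced yearly, for a group of 25 people.
print(annual_hide_demand([(15, 2.0), (2, 1.0)], people=25))  # 237.5
```

Even under these arbitrary assumptions, the result shows how quickly a need for hides could add to the pressure that hunting for food alone placed on gazelle populations.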

Returning our focus once again to the Near Eastern MP faunal record, ungulate data from other sites in the region yield medium and large “big-game” indices that are roughly compatible with the overhunting scenario suggested for Kebara. For example, consider the MP faunal remains from layers B1 and B2 of Amud (Rabinovich and Hovers 2004), a cave close to the Sea of Galilee dated by thermoluminescence to about 55 kyr (Valladas et al. 1999). They yield very low LbgI values (0.01 and 0.02, respectively). The MbgI values are also low (0.14 and 0.13, respectively), and well below the values for medium big game at Kebara. Moving back in time, Qafzeh Cave, dated to between 90 and 100 kyr (Valladas and Valladas 1991), produced an LbgI value (0.62) higher than Kebara’s (Rabinovich and Tchernov 1995). Qafzeh’s MbgI is also high (0.45) but more or less in line with Kebara’s values. Moving back further still, to ~200 kyr, Hayonim Cave’s MP levels yielded an LbgI of 0.28, a value not all that different from the LbgI for the earlier part of Kebara’s MP sequence (Mercier et al. 2007; Stiner 2005: her Appendix 11). Hayonim’s MbgI of 0.51 is somewhat higher than Kebara’s. Taken together, the large and medium “big-game” indices from these sites support the view that the largest prey species declined in the Southern Levant in the latter part of the MP, sometime between about 60 and 50 kyr. Hunting thereafter focused increasingly on the medium- and small-sized ungulates, particularly fallow deer and gazelles; fallow deer did not decline significantly until sometime after about 30 kyr. Gazelles then became the principal “big-game” target of hunters in the region until the Pre-Pottery Neolithic, when their importance was rapidly eclipsed by domestic livestock (Davis 1982, 1983, 1989; Munro 2009a, b; Sapir-Hen et al. 2009).

Summary and Conclusions

The various patterns and relationships that over the years we have been able to tease out of the Kebara faunal data provide interesting insights into the hunting behavior of Southern Levantine Neanderthals during the last ten or so millennia before the UP (e.g., Speth and Tchernov 2001, 2007; Speth 2006; Speth and Clark 2006; Speth et al. 2012). The results of these studies shed light on how these rather enigmatic “pre-modern” humans went about earning their living, an interesting issue in its own right, and they bear on our understanding of the MP-UP transition, the period when supposedly “archaic” foraging lifeways gave way to more or less “modern” hunters and gatherers. Here I briefly summarize what I consider the most interesting findings of the present study, which has looked specifically at the possibility that Neanderthals (and, by implication, perhaps other quasi-contemporary MP hominins in the Near East) had already begun, by some 50–60 kyr ago if not before, to overhunt their large-game resources. They may have done so for food, very likely for hides, and possibly even for prestige or other social and political reasons, thereby initiating or augmenting a process of subsistence intensification (the BSR) that continued through the UP and into the early Holocene, ultimately setting the stage for the origins of agriculture.

(1) The two most common large taxa at Kebara—red deer and aurochs—decline steadily in numbers over the course of the sequence such that by the EUP they constitute a very small percentage of the total assemblage. This decline seems to be largely independent of the broad climatic swings that have been documented for the region in the speleothem isotope record. Overhunting, at least on a local scale, is strongly implicated by this pattern.

(2) The proportions of immature gazelles and fallow deer increase steadily over the course of the sequence. Because of their small size and limited body fat deposits, juveniles of both taxa were probably low-ranked resources compared to their adult counterparts, and MP hunters may therefore often have excluded them from their “optimal diet.” Thus, while the presence of juveniles may indicate the approximate time of year when hunting took place, fluctuations in their numbers probably say more about shifts in encounter rates with higher-ranked adult prey than about seasonality (alternatively, such fluctuations could reflect a shift from intercept or ambush hunting to a greater reliance on communal methods of procurement). However, in a relatively dimorphic species such as fallow deer, sub-adult males put on muscle mass and body fat much faster than their female counterparts, and therefore may have been targeted more often by hunters than sub-adult males of a less dimorphic species like gazelle. Thus, all other things being equal, sub-adult males of the more dimorphic species should be better represented in the faunal assemblage than juveniles of either sex of the less dimorphic taxon. While I lack sufficient data at present to sex the juvenile fallow deer remains at Kebara, it is interesting that there are more juvenile fallow deer than juvenile gazelles, and that their number increases hand-in-hand with the number of adult fallow deer taken in the upper part of the MP sequence. In other words, as red deer and aurochs declined, Kebara’s hunters increasingly focused their attention on fallow deer, taking both juveniles and adults. If reliable methods become available to sex the immature fallow deer remains, I would expect to see a distinct bias toward older juvenile males.

(3) If one accepts the view that juveniles are low-ranked resources, regardless of their abundance on the landscape, then their increase in the younger MP horizons at Kebara points to a decline in encounter rates for higher-ranked adults.

(4) Average crown heights of gazelle lower deciduous fourth premolars (dP4) indicate that the Kebara hunters not only targeted increasing numbers of juveniles, but also younger ones. Similarly, average crown heights of permanent lower third molars (M3) indicate that the hunters also took greater numbers of young adult gazelles.

(5) In the younger levels of the MP and continuing into the EUP, Kebara’s hunters brought back progressively fewer heads of both fallow deer and gazelles. Given the comparatively small body size of these animals, I had expected that the hunters would generally have transported complete or nearly complete carcasses back to the cave. The decline in heads toward the very end of the sequence may therefore suggest that the hunters had to travel greater distances to acquire these animals, forcing them to be more selective about which body parts they abandoned and which they transported home. Alternatively, of course, one could argue that during the latter part of the MP sequence many more of these animals were taken in mass drives, which might also necessitate greater selectivity on the part of the hunters. The implication might be similar, however, since communal hunting is commonly a response to resource depletion (Speth and Scott 1989).

It seems that communal hunting is most practical when obtaining a given amount of meat per day is more crucial than the increased work effort associated with communal hunting (i.e., under resource-poor or commercial hunting conditions) (Hayden 1981: 371).

(6) While published faunal assemblages from other MP sites in the region, particularly cave sites, are few and far between, the available data fit reasonably well with the pattern seen at Kebara. Earlier assemblages, for example Hayonim’s at about 200 kyr and Qafzeh’s at between 90 and 100 kyr, both have fairly high Large “Big-Game” Indices (LbgI), values more or less in line with Kebara’s “lower” MP value. Amud Cave, whose faunal assemblages from Layers B1 and B2 are roughly the same age as Kebara’s “upper” MP group (i.e., around 55 kyr), has exceedingly low LbgI values, implying that Amud’s MP occupants were already taking the largest mammals at a rate little different from what Stutz et al. (2009) observed during the Epipaleolithic.
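Points (2) and (3) lean on the logic of the classic prey-choice (diet-breadth) model from optimal foraging theory: prey types are ranked by profitability (energy gained per unit handling time), and a lower-ranked type enters the diet only when its profitability exceeds the overall return rate obtainable from the higher-ranked types alone. A corollary is that whether a low-ranked type is taken depends on encounter rates with higher-ranked prey, not on its own abundance. The sketch below is a minimal textbook version of that model; the class, the function names, and all energy, handling-time, and encounter-rate values are hypothetical and are not estimates for gazelles or any real prey.

```python
from dataclasses import dataclass


@dataclass
class PreyType:
    name: str
    energy: float     # e_i: net energy gained per item (hypothetical units)
    handling: float   # h_i: handling time per item, hours (hypothetical)
    encounter: float  # lambda_i: encounters per hour of search (hypothetical)

    @property
    def profitability(self) -> float:
        return self.energy / self.handling


def optimal_diet(prey: list[PreyType]) -> list[str]:
    """Textbook prey-choice model: add prey types in order of profitability
    while each one's profitability exceeds the return rate of the diet so far."""
    ranked = sorted(prey, key=lambda p: p.profitability, reverse=True)
    diet: list[str] = []
    energy_rate = 0.0  # sum of lambda_i * e_i over prey types already in the diet
    time_cost = 1.0    # 1 (unit search time) + sum of lambda_i * h_i
    for p in ranked:
        current_return = energy_rate / time_cost
        if p.profitability <= current_return:
            break  # zero-one rule: this type and all lower-ranked ones are ignored
        diet.append(p.name)
        energy_rate += p.encounter * p.energy
        time_cost += p.encounter * p.handling
    return diet


def scenario(adult_encounter: float) -> list[str]:
    """Hypothetical adults vs. juveniles; only the adult encounter rate varies."""
    adults = PreyType("adult gazelle", energy=100.0, handling=2.0, encounter=adult_encounter)
    juveniles = PreyType("juvenile gazelle", energy=30.0, handling=1.5, encounter=0.2)
    return optimal_diet([adults, juveniles])


print(scenario(0.5))  # adults encountered often
print(scenario(0.1))  # adults depleted
```

With these invented values, juveniles (profitability 20) stay out of the diet while adults are met 0.5 times per hour (diet return rate 25), but enter it once the adult encounter rate falls to 0.1 per hour (return rate about 8.3), even though the juveniles' own encounter rate never changes.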

Suggestive as these results may be, none of this is as yet sufficient to prove that the BSR had its roots in the MP or that Neanderthals played a significant role in the early stages of the process. But the idea is intriguing, and even more so now that we are finally admitting Neanderthals into the human family (e.g., Green et al. 2010). To see whether this view has real substance, however, we obviously need a lot more faunal data from many more sites over a much broader region of the eastern Mediterranean. We also need to explore other lines of evidence in much greater detail. Prime among these is information on the plant-food component of Neanderthal and Anatomically Modern Human diets. This is obviously a much more difficult domain of research than the fauna, since plants are notoriously vulnerable to decay and, even where they are preserved, it is often difficult to tell food plants from background “noise” (Abbo et al. 2008). Kebara is again interesting in this regard. The MP deposits, especially in close proximity to the many hearths and associated ash lenses, produced thousands of charred seeds (>3,300), the vast majority of which were from legumes (family Papilionaceae). Was this normal Neanderthal fare, or do these plant foods also reflect late MP intensification, much as Ohalo II’s wild cereal grains and grinding slab with starch granules likely do for the early Epipaleolithic (Piperno et al. 2004)?

Our findings indicate that broad spectrum foraging was thus a long-established human behavior pattern and included wild legumes as well as wild grasses and other fruits and seeds. This concept calls for a reevaluation or a more detailed definition of the notion of ‘broad spectrum revolution’ as a precursor phase in human subsistence strategies prior to agricultural origins (Lev et al. 2005: 482).

Phytoliths from the mature spikelets of grasses have been recovered from late MP deposits at Amud Cave and provide surprisingly early evidence that Neanderthals harvested the ripened seeds of these plants:

Coupled with the presence of legumes in Kebara Cave, the Amud phytolith data constitute evidence that two families of plants, which would subsequently provide some of the earliest domesticates…, were already exploited in the Levant in the Late Middle Palaeolithic. The faunal and botanical records thus concur that exploitation of a broad spectrum of food resources was part of the Palaeolithic lifeways long before it became the foundation of, and a pre-requisite for, an economic revolution (Madella et al. 2002: 715).

Miller Rosen (2003), on the basis of phytoliths, starch grains, and spores, suggests that the late MP occupants of Tor Faraj in Jordan may have utilized wild dates (Phoenix dactylifera), horse-tail rush (Equisetum sp.), possibly pistachio nuts (Pistacia sp.), and probably other food plants as well, including roots and tubers.

Starch grains retrieved directly from Neanderthal dental calculus likewise point to widespread use of plant foods in MP hominin diet:

The timing of two major hominin dietary adaptations, cooking of plant foods and an expansion in dietary breadth or ‘broad spectrum revolution,’ which led to the incorporation of a diversity of plant foods such as grass and other seeds that are nutritionally rich but relatively costly to exploit, has been of central interest in anthropology…. Our evidence indicates that both adaptations had already taken place by the Late Middle Paleolithic, and thus the exploitation of this range of plant species was not a new strategy developed by early modern humans during the Upper Paleolithic or by later modern human groups that subsequently became the first farmers (Henry et al. 2011: 489, 490).

Likewise, recent macro- and microwear studies of the teeth of Near Eastern MP hominins point to eclectic diets that very likely included a substantial plant food component (e.g., El Zaatari 2007; Fiorenza et al. 2011). Even the nitrogen isotope data extracted from the collagen of European Neanderthals, the data that archaeologists and paleoanthropologists routinely cite nowadays as proof that these “archaic” humans were “top predators,” could mask a substantial dietary contribution of plant foods.

A small percent of meat already increases very significantly the δ¹⁵N value, and contributions of plant food as high as 50% do not yield δ¹⁵N values lower than 1 standard-deviation of the average hyena collagen δ¹⁵N value…. This example clearly illustrates that the collagen isotopic values of Neanderthal collagen provide data on the relative contribution of different protein resources, but it does not preclude a significant amount of plant food with low nitrogen content, as high as half the dry weight dietary intake (Bocherens 2009: 244; emphasis added).
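Bocherens' point follows from concentration-weighted mixing: protein-rich meat contains far more nitrogen per unit of dry weight than most plant foods, so it contributes disproportionately to the nitrogen, and hence the δ¹⁵N, of the diet. The sketch below is a simple two-source, concentration-dependent mixing calculation of the general kind used in isotope ecology, not Bocherens' own model. The nitrogen contents and δ¹⁵N values are hypothetical round numbers, the function names are illustrative, and the diet-to-collagen trophic offset is assumed constant and therefore omitted, since it would shift every scenario by the same amount.

```python
# Hypothetical source values (illustrative only, not measured data).
PLANT_N, PLANT_D15N = 0.02, 2.0  # 2% nitrogen by dry weight, 2 permil
MEAT_N, MEAT_D15N = 0.14, 6.0    # 14% nitrogen by dry weight, 6 permil


def dietary_d15n(sources: list[tuple[float, float, float]]) -> float:
    """Concentration-weighted mixing. Each source is (dry-weight fraction,
    nitrogen concentration, d15N); its weight is fraction * concentration."""
    weighted = sum(f * n * d for f, n, d in sources)
    nitrogen = sum(f * n for f, n, _ in sources)
    if nitrogen <= 0:
        raise ValueError("diet contains no nitrogen")
    return weighted / nitrogen


def mixed_diet(meat_fraction: float) -> float:
    """d15N of a plant + meat diet; meat_fraction is the dry-weight share of meat."""
    return dietary_d15n([
        (1.0 - meat_fraction, PLANT_N, PLANT_D15N),
        (meat_fraction, MEAT_N, MEAT_D15N),
    ])


for meat in (0.0, 0.1, 0.5, 1.0):
    print(f"{meat:.0%} meat: {mixed_diet(meat):.2f} ‰")
```

Under these assumptions, 10% meat by dry weight raises dietary δ¹⁵N from 2.0‰ to 3.75‰, while a diet that is half plants sits only 0.5‰ below an all-meat diet (5.5‰ versus 6.0‰), which is the asymmetry the quotation describes.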

Future Directions

Tantalizing as these data are, we still have a long way to go to fully grasp the nature, timing, and cause(s) of the “broad spectrum revolution.” Most archaeologists today seem to agree that the BSR was a largely stress-driven response of foragers, late in the Pleistocene, to an increasing imbalance between available food resources and the number of mouths to be fed. More specifically, what most scholars see as being “broadened” was the number of different food types (i.e., taxa) in the larder, usually through the incorporation of plant and animal resources with lower return rates, such as grass seeds, tubers, molluscs, snails, reptiles, birds, and small mammals, all resources that had been available before but largely or entirely ignored.

Addressing the animal component of the BSR, Mary Stiner and colleagues (e.g., Stiner et al. 1999; Stiner 2001: 6995; Munro 2003) sharpened our perspective by pointing out that a shift toward greater reliance on smaller, lower-ranked animal resources becomes evident “…only when small animals [are] classified according to development rates and predator escape strategies, rather than by counting species or genera or organizing prey taxa along a body-size gradient.” Similar arguments have been applied to the plant food component as well (Weiss et al. 2004a, b).