Abstract

Many of the findings of the Charney Report on CO2-induced climate change published in 1979 are still valid, even after 30 additional years of climate research and observations. This paper considers the reasons why the report was so prescient, and assesses the progress achieved since its publication. We suggest that emphasis on the importance of physical understanding gained through the use of theory and simple models, both in isolation and as an aid in the interpretation of the results of General Circulation Models, provided much of the authors’ insight at the time. Increased emphasis on these aspects of research is likely to continue to be productive in the future, and even to constitute one of the most efficient routes towards improved climate change assessments.

Keywords

- Climate change assessment

- Charney report

- Hierarchical climate modeling

- Physical understanding

- Climate projections

- Climate processes, forcings and feedbacks

- General circulation models

1 Introduction

In 1896 Svante Arrhenius first suggested that increased CO2 in the atmosphere might affect climate. Observational evidence that CO2 concentrations were actually increasing in the atmosphere became available in the 1960s, thanks to the continuous measurement begun by Charles D. Keeling in 1958. In 1979 the US National Academy of Sciences asked a small working group of scientists led by Jule Charney to undertake a scientific assessment of the possible effects of CO2 on climate (Charney et al. 1979: “Carbon Dioxide and Climate: A Scientific Assessment”). Owing to the striking consistency of most of its conclusions with those of current assessments on climate change, the report (which became known as the “Charney Report”) arouses admiration but also inevitably makes us wonder: what progress have we made in assessing the effects of CO2 on climate in the last 30 years? Where are the gaps? What are the implications for community efforts to improve assessments of future long-term climate change? This paper addresses these issues based on the personal reflections of a small group of scientists from a range of backgrounds and specialities.

After a brief presentation of the Charney report (Sect. 2), we discuss the scientific progress (or lack of progress) addressed in the key disciplines identified by this report (Sect. 3). In Sect. 4, we highlight lessons drawn from climate research over the last decades, and make some suggestions for further progress.

2 The Charney Report

In the foreword to the Charney report, Vern Suomi noted that scientists had known for more than a century that changing atmospheric composition could affect climate, that they now had “incontrovertible evidence” that atmospheric composition was indeed changing and that this had prompted a number of recent investigations of the implications of increasing CO2. Thus the Charney Report was written at an auspicious moment: 20 years of measurements at Mauna Loa had established beyond doubt that CO2 concentrations were rising, and general circulation models were just beginning to be applied to understanding the consequences.

The relatively high impact of the Charney Report might be partially attributable to its succinctness. The whole report is 16½ small pages long, and the main conclusions are summarized in an introductory section only 2¼ pages in length. The authors begin by estimating that CO2 concentrations would double by some time in the first half of the twenty-first century, and proceed to estimate the resultant change in equilibrium global mean surface temperature to be near 3°C with larger increases at higher latitudes. After discussing the uncertainties inherent in such an estimate, they state that it is significant, however, that none of the model calculations predicts negligible warming. While the report focuses on changes in global mean temperature, the authors note that

The evidence is that the variations in these anomalies with latitude, longitude, and season will be at least as great as the globally averaged changes themselves, and it would be misleading to predict regional climatic changes on the basis of global or zonal averages alone.

While the authors make it clear that their conclusions are based primarily on the results of three-dimensional general circulation models, they state that

Our confidence in our conclusion that a doubling of CO2 will eventually result in significant temperature increases and other climate changes is based on the fact that the results of the radiative-convective and heat-balance model studies can be understood in purely physical terms and are verified by the more complex GCM’s. [General Circulation Models]

The authors’ philosophy in using GCMs is emphasized again, later in the report:

In order to assess the climatic effects of increased atmospheric concentrations of CO2, we consider first the primary physical processes that influence the climatic system as a whole. These processes are best studied in simple models whose physical characteristics may readily be comprehended. The understanding derived from these studies enables one better to assess the performance of the three-dimensional circulation models on which accurate estimates must be based.

The authors discussed what they considered to be the primary obstacles to better projections of climate change, including the rates at which heat and CO2 are mixed into the deep ocean and the feedback effect of changing clouds. They also discussed their inability to say much about the regional patterns of climate change, given the large uncertainties associated with regional climate projections from GCMs. Such issues remain very much alive today.

What made the Charney Report so prescient? The emphasis on the importance of physical understanding gained through theory and simple models, both for its own sake, to facilitate the distillation of scientific knowledge, and to help interpret and check the results of GCMs, proved highly productive and led to a projection of the global mean temperature increase that is virtually identical to current projections, even though the authors did not have the benefit of a clear signal of warming in the observations at their disposal. For instance, the authors used a variety of approaches to estimate climate feedbacks, starting with simple physical principles and assumptions, working through one-dimensional models to make an initial quantification of feedbacks, and using full general circulation models to refine or extend that assessment. This meant that they had a good understanding of the main processes governing climate sensitivity, and could defend their range of answers without having to rely on complex models. This may be why their findings were accepted and have stood the test of time.

3 Key Areas of Progress (or Lack of Progress) Since the Charney Report

The importance of non-CO2 forcings such as methane or other long-lived greenhouse gases, ozone and aerosols, has been emphasized since the publication of the Charney Report, especially for interpreting the evolution of the twentieth century climate. However, we expect the increase in CO2 concentration to dominate the acceleration of the anthropogenic forcing over the next decades. Therefore, anticipating the effects of CO2 on climate remains a key issue. The progress achieved on that issue over the last three decades is discussed here by considering the different components of the CO2-induced climate change problem considered by the Charney report: the evolution of carbon in the atmosphere (Sect. 3.1), the CO2 radiative forcing (Sect. 3.2), climate sensitivity (Sect. 3.3), the physical processes important for climate feedbacks (Sect. 3.4), the role of the ocean (Sect. 3.5), and the credibility of GCM projections (Sect. 3.6).

3.1 Carbon in the Atmosphere

The Charney Report presented little new information on the global carbon cycle, only briefly summarizing its key features, based on a SCOPE review book published in the same year (Bolin et al. 1979). This includes comments that the “proper role of the deep sea as a potential sink for fossil-fuel CO2 has not been accurately assessed” and “whether some increase of carbon in the remaining world forests has occurred is not known”. Nevertheless, the report concluded that “Considering the uncertainties, it would appear that a doubling of atmospheric carbon dioxide will occur by about 2030 if the use of fossil fuels continues to grow at a rate of about 4 percent per year, as was the case until a few years ago. If the growth rate were 2 percent, the time for doubling would be delayed by 15 to 20 years, while a constant use of fossil fuels at today’s levels shifts the time for doubling well into the twenty-second century.” Although the authors do not say so explicitly, their main assumption appears to be that the ocean acts as the sole sink of anthropogenic carbon, and that the terrestrial biosphere remains neutral. They also report that “it has been customary to assume to begin with that about 50 percent of the emissions will stay in the atmosphere”.
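The arithmetic behind such doubling dates can be illustrated with a minimal sketch. It is not the report's own calculation. The starting concentration (337 ppm in 1979), the doubling threshold (560 ppm, twice an assumed pre-industrial 280 ppm), the initial fossil-fuel emissions (5 GtC per year) and the conversion factor (about 2.13 GtC per ppm) are all assumptions introduced here for illustration; only the growth rates and the 50 percent airborne fraction come from the text.

```python
# Illustrative sketch only: every default below except the airborne
# fraction is an assumption, not a value taken from the Charney Report.
GTC_PER_PPM = 2.13  # assumed mass of carbon per ppm of atmospheric CO2


def doubling_year(growth_rate, start_year=1979, c0_ppm=337.0,
                  target_ppm=560.0, e0_gtc=5.0, airborne_fraction=0.5,
                  max_years=1000):
    """Return the first year in which CO2 reaches target_ppm.

    Emissions start at e0_gtc (GtC per year) and grow by growth_rate
    each year; a fixed airborne_fraction of them stays in the air.
    """
    concentration = c0_ppm
    emissions = e0_gtc
    for elapsed in range(max_years):
        if concentration >= target_ppm:
            return start_year + elapsed
        concentration += airborne_fraction * emissions / GTC_PER_PPM
        emissions *= 1.0 + growth_rate
    return None  # threshold not reached within max_years


for rate in (0.04, 0.02, 0.0):
    print(f"growth {rate:.0%}: doubling around {doubling_year(rate)}")
```

Under these assumptions the ordering of the three cases matches the report (earliest doubling for 4 percent growth, latest for constant emissions), but the exact dates shift with the assumed starting values, so the sketch should not be read as a reconstruction of the report's figures. Raising `airborne_fraction` brings every doubling date forward.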

We now have a clearer and much more quantitative picture of the global carbon cycle. Although deforestation is still recognized as a source of CO2 (LeQuéré et al. 2009; Friedlingstein et al. 2010), terrestrial ecosystems overall are now understood to be net sinks of anthropogenic CO2, absorbing about the same amount of CO2 as the global oceans. This is now well known from observations of combined changes in atmospheric CO2 and O2, top-down inversions of atmospheric CO2, and bottom-up modeling of ocean and terrestrial biogeochemistry (see Denman et al. 2007 for a review of these different methods).

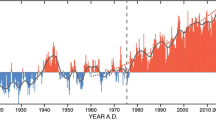

Over the last decade, work has also shown how climate change might affect the ability of both oceans and land ecosystems to absorb atmospheric CO2. Modeling studies performed during this period have suggested a positive feedback between climate change and the global carbon cycle (Cox et al. 2000; Dufresne et al. 2002). Increased stratification of the upper ocean due to warming at the surface reduces the export of carbon from the surface to the deep ocean, and hence limits the air-sea exchange of CO2. Declining productivity in tropical forests and a general increase in the rate of soil carbon decomposition (heterotrophic respiration) partially offset the land carbon uptake due to the CO2 fertilization effect. Despite the large uncertainty in the magnitude of the climate-carbon cycle feedback (Friedlingstein et al. 2006), analysis of proxy-based temperature and CO2 from ice cores indicates that it is likely to be positive (Frank et al. 2010). The airborne fraction is expected to increase in the future as a result of sinks saturating with increasing CO2 and declining in a warmer world. Coupled climate-carbon cycle models suggest the airborne fraction could rise from the current value of 45 % to 62 % (median estimate). Analysis of the past 50 years seems to indicate that the airborne fraction has already increased (LeQuéré et al. 2009). In the context of the Charney report, this finding would not alter the estimate of the climate sensitivity, as it is based on the climate response to a prescribed doubling of the CO2 concentration. It would, however, bring forward the timing of the CO2 doubling (Fig. 1).

Fig. 1 Future projections of atmospheric CO2 concentration assuming, as in the Charney report, that future anthropogenic emissions increase at a rate of 4 % per year (blue), 2 % per year (red) or remain constant (green). Also shown (dotted lines) are the projected concentrations for these three cases accounting for a positive climate-carbon feedback, absent from the Charney report’s calculations. The observed CO2 concentrations, and the twenty-first century CO2 concentrations projected for the four Representative Concentration Pathways (RCPs) used in CMIP5, are shown as black symbols.

Perhaps the most important development has simply been the ice core CO2 records, which began to appear shortly after the Charney report (Delmas et al. 1980). The remarkable glacial-interglacial fluctuations of the CO2 provide constraints on climate sensitivity and pose a challenge to our understanding of the controls on the background carbon cycle that is being perturbed by anthropogenic emissions.

3.2 Radiative Forcing

The concepts of radiative forcing and equilibrium climate sensitivity were well established at the time of the Charney report. The major issues in estimating the radiative forcing for an atmosphere with fixed clouds and water vapor had already been addressed in the literature on which the Charney report is based (e.g. Ramanathan et al. 1979; Manabe and Wetherald 1967, 1975). The importance of using radiative fluxes at the tropopause rather than the surface, the stratospheric adjustment, the dependence of CO2 absorption on CO2 concentration, and the overlap between the H2O and CO2 absorbing bands were all discussed. The radiative forcing for a doubling of CO2 concentration was estimated in the report to be about 4 W m−2 within an uncertainty of ±25 %. The authors anticipated some of the difficulty of computing this forcing, and rejected much larger values in the available literature (e.g., MacDonald et al. (1979) estimated a radiative forcing of 6–8 W m−2) on methodological grounds.

Since the report, the radiative calculations underlying this computation have been regularly improved, with the number of absorption lines used in radiative transfer calculations increasing by a factor of several tens and a larger number of gas species taken into account, while the water vapor absorption continuum is better, if still incompletely, understood. For standard atmospheric profiles, the values of the CO2 radiative forcing estimated with different line-by-line radiation codes vary with a standard deviation of only about 2 %, while estimates from GCM codes exhibit a larger standard deviation of about 10 % (Collins et al. 2006). These differences increase if one takes into account uncertainties in the specified cloud distribution and the fuzziness in the definition of the tropopause. Yet the current best estimate for this “classic” radiative forcing, 3.7 ± 0.3 W m−2 (Myhre et al. 2001; Gregory and Webb 2008), is fully consistent with the estimate in the Charney report, while the uncertainty has been considerably reduced.
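The dependence of the forcing on concentration can be illustrated with a short sketch. It relies on a commonly used simplified logarithmic fit, which is brought in here as an assumption and is not taken from the Charney report or from the text above; the coefficient of 5.35 W m−2 and the 280 ppm reference concentration are likewise assumptions of this example.

```python
import math

# Assumed coefficient of a widely used simplified logarithmic fit (W m-2).
# It is not a value quoted in the surrounding text.
LOG_FIT_COEFFICIENT = 5.35


def co2_forcing(concentration_ppm, reference_ppm=280.0):
    """Approximate CO2 radiative forcing (W m-2) relative to reference_ppm."""
    return LOG_FIT_COEFFICIENT * math.log(concentration_ppm / reference_ppm)


# Forcing for a doubling, to compare with the 3.7 +/- 0.3 W m-2 quoted above
# and with the report's estimate of about 4 W m-2 within +/- 25 %.
doubling = co2_forcing(560.0)
print(f"Forcing for doubled CO2: {doubling:.2f} W m-2")
print(f"Within the report's 3-5 W m-2 range: {3.0 <= doubling <= 5.0}")
```

Because the fit is logarithmic, each successive doubling adds the same forcing, which is why the forcing "for a doubling" can be quoted without specifying the starting concentration.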

However, the concept of radiative forcing continues to evolve, particularly owing to the recognition that the fast responses to a change in CO2 (responses that occur before the oceans and troposphere warm significantly) include not only the stratospheric adjustment but also tropospheric changes, particularly in cloud. This alters the definitions of both forcing and feedback (e.g., Hansen et al. 2002; Shine et al. 2003; Gregory et al. 2004; Andrews and Forster 2008). These new concepts are proving valuable in sharpening our understanding of the spread of model responses (Gregory and Webb 2008; Williams et al. 2008), but in the process one loses the clean distinction between a “forcing” that can be computed from radiative processes alone and “feedbacks” that are model dependent.

3.3 Climate Sensitivity

The Charney report produced a range in equilibrium climate sensitivity of 1.5–4.5 °C, with a best guess of 3 °C. As is well known, the large range has proven difficult to reduce. IPCC AR4 (Meehl et al. 2007) states that the equilibrium climate sensitivity is “likely to be in the range 2–4.5 °C, with a best estimate of 3 °C”.

Since the Charney report, it has been emphasized how the definition of “equilibrium” depends on which relatively slow processes are considered, including the evolution of the Greenland and Antarctic ice sheets as well as the carbon and other biogeochemical cycles. It has been argued, in particular, that albedo feedback from the ice sheets can increase climate sensitivity substantially above that estimated from the relatively fast feedbacks considered in the Charney report (e.g., Hansen and Sato 2011).

A number of issues that dominate many current discussions of climate sensitivity do not appear in the Charney report. There is no discussion of transient climate sensitivity or appreciation of the multi-century time scales required to approach these equilibrium responses (see Sect. 3.4). There is also little discussion of observational constraints on climate sensitivity – such as the response to volcanic aerosol in the stratosphere, the response to the 11-year solar cycle, the glacial-interglacial responses to orbital parameter variations (and many other paleoclimate observations), and, most obviously, the warming trends over the past century itself – or of the role of models in interpreting these observations, for example, by determining how a response to the Pinatubo eruption relates to responses to more slowly evolving greenhouse gas forcings. The report also reads very differently from recent assessments in that there is no discussion of detection and attribution and, consistently, no discussion of non-CO2 anthropogenic forcings (greenhouse gases other than CO2, aerosols, land-use changes). Nevertheless, the power of the climate sensitivity concept highlighted by the report is likely to have influenced the current thinking about the effect of non-CO2 forcing agents on climate.

Finally, there is little or no attempt to discuss the hydrological cycle or regional climate changes or climate extremes. Was this a flaw in the report? Why should we care about global mean climate sensitivity? We return to this question in Sect. 4 below.

3.4 Principal Feedbacks

The Charney Report clearly outlined the main feedback mechanisms within the physical climate system and endeavored to estimate the climate sensitivity through their quantification. The report’s focus was on the water vapor and surface albedo changes, as these were the best known feedback mechanisms (e.g. Manabe and Wetherald 1967), and the nature or sign of each could be inferred based on simple physical arguments: one expects the absolute humidity to increase as the atmosphere warms while maintaining an approximately constant relative humidity, and the surface albedo to decrease as snow and ice retreat with surface warming. Based on model studies that incorporated this reasoning, the Charney Report estimated the magnitude of the water-vapor feedback to be 2.0 W m−2 K−1 and gave 0.3 W m−2 K−1 as the most likely value for the surface albedo feedback. For reference, the water vapor and surface albedo feedbacks as most recently assessed by the IPCC are 1.8 ± 0.18 and 0.26 ± 0.08 W m−2 K−1 respectively (Randall et al. 2007). Thus while our best estimate of the magnitude of these important feedbacks has changed little since the Charney Report, considerable effort has been invested and progress made in establishing the robustness of the physical reasoning that underpinned their assessment, and in assessing it using observations (e.g. Soden et al. 2005).

The Charney Report also recognized possible changes in cloudiness, relative humidity, and temperature lapse rates as the leading sources of uncertainty in their estimate of climate sensitivity, associating a feedback strength of 0 ± 0.5 W m−2 K−1 with the combined effects of such processes. The report is not at all clear as to how its authors arrived at this number, although it seems likely that the magnitude of the water vapor feedback, which was and is generally believed to be “the most important and obvious of the feedback effects”, and a desire to maintain consistency with the general circulation model studies, may have played a role in their thinking. For reference, the IPCC most recently assessed the combined effect of the lapse rate and cloud feedbacks, each of which is estimated as somewhat stronger than 0.5 W m−2 K−1 but of opposing sign, as 0.15 ± 0.46 W m−2 K−1.
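How such feedback strengths translate into an equilibrium warming can be sketched with the standard linear feedback relation, in which the warming equals the forcing divided by the net radiative damping. The sketch below is only an illustration: the forcing of 4 W m−2 and the feedback values of 2.0, 0.3 and 0 ± 0.5 W m−2 K−1 are those quoted in the text, whereas the no-feedback (Planck) response of 4 W m−2 K−1 is an assumption introduced here and is not stated above.

```python
def equilibrium_warming(forcing, planck_response, feedbacks):
    """Equilibrium warming (K) from a linear feedback model.

    forcing         -- radiative forcing, W m-2
    planck_response -- no-feedback radiative damping, W m-2 K-1
    feedbacks       -- feedback strengths, W m-2 K-1 (positive amplifies)
    """
    net_damping = planck_response - sum(feedbacks)
    if net_damping <= 0:
        raise ValueError("feedbacks exceed damping: no stable equilibrium")
    return forcing / net_damping


FORCING_2XCO2 = 4.0    # W m-2, value quoted from the report
PLANCK_RESPONSE = 4.0  # W m-2 K-1, ASSUMED here, not given in the text
WATER_VAPOR = 2.0      # W m-2 K-1, value quoted from the report
SURFACE_ALBEDO = 0.3   # W m-2 K-1, value quoted from the report

# Sweep the "0 +/- 0.5" term for clouds, relative humidity and lapse rate.
for other in (-0.5, 0.0, 0.5):
    warming = equilibrium_warming(
        FORCING_2XCO2, PLANCK_RESPONSE, [WATER_VAPOR, SURFACE_ALBEDO, other]
    )
    print(f"other feedbacks {other:+.1f} W m-2 K-1 -> {warming:.2f} K")
```

The point of the sketch is the asymmetry: a symmetric ± 0.5 W m−2 K−1 uncertainty in the feedbacks widens the warming range more on the high side than on the low side. It is not expected to reproduce the report's headline range, which also drew on general circulation model results.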

Admittedly, little progress has been made in narrowing the uncertainty the Charney Report ascribed to the net effects of these climate feedbacks. Discussions about the potential role of cloud-aerosol interactions in these feedbacks have even complicated the issue. But this does not imply that progress in our understanding and estimation of climate feedbacks is out of reach (Bony et al. 2006; see also Hannart et al. 2009 for a response to the argument of Roe and Baker (2007) that reducing this uncertainty will be very difficult for fundamental statistical reasons). In fact, important strides have been made towards developing a better understanding of the physical mechanisms associated with climate feedbacks. At the time of the Charney Report there seems to have been little more than a vague idea as to why cloudiness should change with either increasing concentrations of greenhouse gases or surface temperatures. The intervening decades have seen an articulation of a wide variety of mechanisms, ranging from the tendency for clouds to shift upward as the climate warms (e.g. Hansen et al. 1984; Wetherald and Manabe 1988; Mitchell and Ingram 1992) to hypotheses that link cloud liquid water to the lapse rate of liquid water (Somerville and Remer 1984) and cloud amounts in the subtropics to the tropical temperature lapse rates (Klein and Hartmann 1993), these lapse rates themselves having been linked to the behavior of deep convection (Zhang and Bretherton 2008). Ideas have also emerged as to why the storm tracks can be expected to migrate poleward in a warmer climate, and how this effect may redistribute clouds relative to the distribution of solar radiation, or how the increased surface fluxes and changing profiles of moist static energy demanded by an atmosphere that maintains a constant relative humidity might be expected to produce more precipitation, but fewer clouds (Held and Soden 2006; Brient and Bony 2013; Rieck et al. 2012).

3.5 Role of the Ocean

The Charney Report considered the primary role of the ocean in climate change as setting the timescale over which heat and carbon are sequestered into the ocean interior, and there was little appreciation for the role of the ocean in climate dynamics at decadal to centennial time scales. From a modern perspective, its treatment of the oceans is likely its weakest aspect.

While the report correctly anticipated the role of ocean intermediate and mode waters in controlling the rate at which the ocean takes up heat, there was little understanding of the physical mechanisms involved in this control (Fig. 2). Ocean heat content may change through passive ventilation, whereby a water parcel interacting with the atmosphere carries heat into the interior largely through isopycnal transport (e.g., Church et al. 1991). Additionally, ocean heat may be modified as stratification increases and overturning circulation decreases, so that interior ocean properties accumulate (Banks and Gregory 2006).

Fig. 2 Understanding and quantifying the ocean’s role in climate change involves a variety of questions related to how physical processes impact the movement of tracers (e.g., heat, salt, carbon, nutrients) across the upper ocean interface and within the ocean interior. In general, processes move tracers across density surfaces (dianeutrally) or along neutral surfaces (epineutrally), with epineutral processes dominant in the interior, yet dianeutral processes directly impacting vertical stratification. This figure provides a schematic of such processes, including turbulent air-sea exchanges and upper ocean wave breaking and Langmuir circulations; gyre-scale, mesoscale, and submesoscale transport; high latitude convective and downslope shelf ventilation; and mixing induced by breaking internal gravity waves energized by winds and tides. Nearly all such processes are subgrid scale for present day global ocean climate simulations. The formulation of sensible parameterizations, including schemes that remain relevant under a changing climate (e.g., modifications to stratification), remains a key focus of oceanographic research efforts.

Ocean observations and modeling capabilities were very rudimentary 30 years ago. The observational network, which formerly consisted of measurements by ship-based platforms, has been revolutionized by satellite measurements and profiling floats (Freeland et al. 2010). The density of the measurements in the upper 700 m of the ocean, while not covering the mode waters that ventilate at high latitudes, has nonetheless begun to make it possible to track changes in ocean heat content on decadal scales (Lyman et al. 2010). However, large uncertainties remain in current observational estimates of the ocean heat content. It is likely that difficulties in closing the Earth’s global heat budget (Trenberth and Fasullo 2010) partly result from these uncertainties, although Meehl et al. (2011) suggest that deep-ocean heat uptake may explain the apparent ‘missing heat’.

The oceanic component of climate models, though still possessing errors and limitations, has advanced greatly over the last decades. A new generation of models is now able to represent important processes such as mesoscale eddies (e.g., Farneti et al. 2010) and high latitude shelf and overflow processes (e.g., Legg et al. 2009) that regulate how the ocean transports heat and mass from the surface to its interior.

The incorporation of the new generation of measurements into both process and realistic ocean climate models now facilitates mechanistic interpretations of observations and physically based evaluation of more complex models (see, e.g., Griffies et al. 2010), thereby developing the type of robust understanding that must underlie our confidence in estimates of the ocean’s role in climate change.

Through this process, the role of stratification has emerged as a particularly important one. In addition to its role in the carbon cycle and net ocean heat uptake (mentioned in the Charney report), the stratification of the ocean may also modify much shorter time-scale processes ranging from decadal climate fluctuations, to ENSO, to the life-cycle of tropical cyclones which depend crucially on their ability to extract heat from the upper ocean. This contributes to our increasing appreciation of the importance of characterizing climate variability on the decadal to century time scales, and the potential for internal variability to complicate the attribution of observed climate changes to specific anthropogenic forcing agents.

3.6 Credibility of GCM Projections

The Charney Report considered only five models, and examined the key physical features of each to assess the most realistic and robust outcome. For example, in a model simulation with excessive sea-ice extents, it was assumed that the ice-albedo effect would be exaggerated, and this bias was accounted for in the final assessment. Over time, models have increased in number (model intercomparisons can now involve more than 20 modeling groups and 40 models) and complexity, advancing opportunities to identify the robust features of complex model simulations, but linking individual model biases to a particular model process or feature has become more difficult. In view of this, intercomparisons increasingly make use of metrics to assess models rather than direct physical interpretation. Since there are so many potential metrics, and since different metrics often tell different stories as to which models are better or worse, a key problem for the field is to tailor metrics to particular predictions. An instructive example is Hall and Qu (2006), who show a clear relationship across models between the snow surface albedo/temperature feedback estimated from the current seasonal cycle and that estimated from climate change simulations. The climate feedback can then be calibrated using the observed seasonal cycle feedback. Research on the climatic response to the ozone hole has likewise isolated the persistence time of the Southern Annular Mode as a key metric for predictions of the poleward movement of the westerlies and midlatitude storm track (Son et al. 2010).
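The calibration idea behind the Hall and Qu (2006) example can be sketched as a simple regression across models. The sketch below is an illustration under stated assumptions, not their method or data: all numbers are hypothetical values invented for this example, and the helper `fit_line` is introduced here only to keep the code self-contained.

```python
def fit_line(x, y):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# HYPOTHETICAL feedback strengths for five models (% albedo change per K).
# These are made-up numbers for illustration, not real model output.
seasonal_cycle_feedback = [-0.4, -0.6, -0.8, -1.0, -1.2]
climate_change_feedback = [-0.45, -0.58, -0.83, -0.98, -1.22]

# HYPOTHETICAL observed seasonal-cycle feedback, also invented.
observed_seasonal_feedback = -0.9

# Step 1: establish the across-model relationship.
slope, intercept = fit_line(seasonal_cycle_feedback, climate_change_feedback)

# Step 2: use the observed seasonal-cycle value to calibrate the
# climate-change feedback that models cannot be tested on directly.
calibrated = slope * observed_seasonal_feedback + intercept
print(f"slope={slope:.2f}, intercept={intercept:.3f}, calibrated={calibrated:.3f}")
```

The approach is only as good as the across-model relationship: if the fit is weak, or if all models share a common error, the observed value constrains the climate-change feedback much less than the regression suggests.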

The report did not consider changes in regional climate. It noted that, due to lack of resolution and differences in parameterizations, two models could give very different changes in regional circulations such as the monsoon and related rainfall patterns, and that such projections were therefore unreliable. The use of regional models may improve regional detail, but it depends on the driving model providing the correct change in large-scale circulation, and with a few notable exceptions little progress has been made in identifying robust changes in regional circulations.

Higher resolution is invaluable in distinguishing between errors that are dependent on resolution and those that are not, sharpening focus on key physically based errors. The use of ensemble simulations, sampling the structural uncertainties among the world’s climate models and also the physical uncertainties obtained by systematically perturbing individual models, has helped identify some robust features of climate change (for example, in changes in precipitation), and prompted further research to explain the robustness in physical terms. These multi-model studies are indispensable for improving the quantification of some sources of uncertainty. However they do not necessarily produce insights into how to reduce uncertainty, unless they help in interpreting and understanding model errors or inter-model differences.

4 Lessons from Past Experience and Recommendations to WCRP

Looking back at the Charney report and at the progress (or lack of progress) in climate research and modeling achieved over the last few decades, several key lessons for the future can be drawn. A selection of them are highlighted below.

4.1 Several Key Fundamental Questions Raised by the Charney Report Remain Burning Issues

If the scope of current climate change assessments has broadened since the Charney report, some of the key questions recognized in 1979 as critical for assessing the effect of CO2 on climate remain with us. At least two striking examples are worth emphasizing:

(1) Climate sensitivity:

Should global climate sensitivity continue to be a focal point for climate research since impacts of climate change are dependent on regional scale transient responses in hydrology and extreme weather, rather than the globally averaged equilibrium response? We argue that it should and that this emphasis continues to be justified. The estimate of climate sensitivity matters for the evaluation of the economic cost of climate change and the design of climate stabilization scenarios (Caldeira et al. 2003; Yohe et al. 2004). It also conditions many other aspects of climate change.

Imagine that we aggregate our estimates of the impacts of climate change on societies and ecosystems into a globally aggregated cost function, C(R). Given an ensemble of model outputs R, it is reasonable to assume that C(R) will increase with increasing climate sensitivity, as climates are pushed farther into regimes to which societies and ecosystems would adjust with greater and greater difficulty. C(R) will of course also depend on regional changes of the climate system and their specific impacts on societies and ecosystems, but these will certainly scale with climate sensitivity. We do not have to trust detailed regional projections to make this argument, but only to assume that response magnitudes typically increase alongside the global mean temperature response, and that limits in our understanding of processes that control the equilibrium response of the system also influence its transient response (as justified by the analysis of Dufresne and Bony 2008).

There is, in fact, considerable coherence across models in the spatial and seasonal patterns of the temperature response, understandable in part due to the land/ocean configuration, sea ice and snow cover retreat, and (in transient responses) spatial structure in the strength of coupling of shallow to deeper ocean layers. Regional hydrological changes in models are less coherent, but common features still emerge that are understandable in part as responses to the pattern of warming and the accompanying increases in total atmospheric water content, and in part as responses to the CO2 radiative forcing itself (Bony et al. 2013). Although much research is needed, we can hope to understand changes in weather extremes, in turn, as reactions to these changes in the larger scale temperature and water vapor environment and to changes in surface energy balances. We conclude that climate sensitivity continues to be a centrally important measure of the size, and significance, of climate response to CO2. The aggregated impacts of climate change can be expected to scale superlinearly with climate sensitivity.

(2) “Inaccuracies of general circulation models are revealed much more in their regional climates owing to shortcomings in the representation of physical processes and the lack of resolution. The modelling of clouds remains one of the weakest links in the general circulation modelling efforts”.

As reaffirmed by a recent survey on “climate and weather models development and evaluation” organized across the World Climate and Weather Research Programmes (Pirani, Bony, Jakob, and van den Hurk, personal communication, 2011), model errors and biases remain a key limitation of the skill of model predictions over a wide range of time (weather to decadal) and space (regional to planetary) scales. It is not a new story, and the increase of model complexity has not solved the problem; on the contrary, shortcomings in the representation of basic fundamental processes such as convection, clouds and precipitation or ocean mixing often amplify the uncertainty associated with more complex processes added to make models more comprehensive. For example, inaccurate representations of clouds and moist processes lead to precipitation errors which may result in inaccurate atmospheric loadings of aerosols or chemical species, inaccurate climate-carbon feedbacks over land, the wrong regional impacts of climate change, and so on.

There is ample evidence that the increase in resolution (horizontal and vertical) is beneficial for some aspects of climate modeling (e.g., the latitudinal position of jets and storm tracks or the magnitude of extreme events) that matter for regional climate projections. However, many model biases turn out to be fairly insensitive to resolution and seem rather rooted in the physical content of models, although separating the role of dynamical errors from physical errors through use of high resolution models or short initialized forecasts (e.g., Boyle and Klein 2010) has helped to elucidate this. Promoting improvements in the representation of basic physical processes in GCMs thus remains a crucial necessity.

Relatively little was known at the time of the Charney report about how clouds and convection couple to the climate system, let alone why or how this picture might change. However, coming as it did at the dawn of the satellite era, and in the early days of cloud-resolving modeling studies, it is interesting that the report did not emphasize the importance of these emerging technologies for our understanding of the susceptibility of the climate system to cloud changes (e.g., Hartmann and Short 1980; Held et al. 1993). Indeed the report’s oversight in this respect is matched only by its prescience in recognizing the extent to which the modeling of clouds would remain one of the “weakest links in the general circulation modelling efforts”. To narrow the uncertainty in estimates of the response of the climate system to increasing concentration of greenhouse gases will require a determined effort to address this “weak link.” Our best hope of doing so is to connect the revolution the Charney report missed with the crisis it anticipated.

4.2 Improvements of Long-Term Climate Change Assessments Disproportionately Depend on the Development of Physical Understanding

The pressure put on the scientific community to provide improved assessments of how climate will change in the future, including at the regional scale, has never been as high as it is today. Climate models play a key role in these assessments, and conventional wisdom often suggests that models of highest realism (higher resolution, more complexity) are likely to have wider and better predictive capabilities. Consequently, Earth System Models increasingly contribute to climate change assessments, especially in the 5th round of the Coupled Model Intercomparison Project (CMIP5). However, past experience shows that the spread of GCM projections did not decrease as they became more complex; instead this complexity (e.g. climate-carbon cycle feedbacks) introduces new uncertainties often by amplifying existing uncertainty.
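How an added feedback can amplify existing uncertainty may be sketched with the standard feedback-gain relation, in which the equilibrium response is the no-feedback response divided by one minus the total gain. The sketch below is only illustrative: the gain values, the no-feedback response, and the treatment of the carbon-cycle feedback as a simple additive gain are all assumptions, not quantities taken from the models discussed here.

```python
def warming(no_feedback_response, gains):
    """Equilibrium warming for a set of feedback gains: dT = dT0 / (1 - sum(gains))."""
    total_gain = sum(gains)
    if total_gain >= 1.0:
        raise ValueError("total gain must be below 1 for a stable response")
    return no_feedback_response / (1.0 - total_gain)


DT0 = 1.2  # assumed no-feedback response (K); illustrative
PHYSICAL_GAINS = [0.5, 0.6, 0.7]  # assumed inter-model spread in physical feedback gain
CARBON_GAIN = 0.05  # assumed extra gain from a carbon-cycle feedback, identical in all models

# Warming with physical feedbacks only, then with the extra feedback added.
physical_only = [warming(DT0, [g]) for g in PHYSICAL_GAINS]
with_carbon = [warming(DT0, [g, CARBON_GAIN]) for g in PHYSICAL_GAINS]

spread_physical = max(physical_only) - min(physical_only)
spread_with_carbon = max(with_carbon) - min(with_carbon)

print(f"spread, physical feedbacks only: {spread_physical:.2f} K")
print(f"spread, with carbon feedback:    {spread_with_carbon:.2f} K")
```

Even though the added gain is the same for every hypothetical model, the spread widens, because the response is a nonlinear function of the total gain.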

About the large uncertainties associated with regional climate projections from GCMs, the Charney report stated its authors’ optimistic belief that “this situation may be expected to improve gradually as greater scientific understanding is acquired and faster computers are built”. Previous discussion (Sect. 3.6) suggests that increased computing resources (necessary to increase resolution, complexity and the number of ensemble simulations) have helped to confirm inferences from simple models or back-of-the-envelope estimates (e.g. the “dry get drier, wet get wetter” behavior of large-scale precipitation changes or the poleward shift of the storm tracks in a warmer climate), and thus have increased our confidence in the credibility of some robust aspects of the climate change signal. However, the current difficulty of identifying robust changes in regional circulations (e.g. monsoons) or phenomena (e.g. El Niño) suggests that improved assessments of many aspects of regional climate change will depend more on our ability to develop greater scientific understanding than to acquire faster computers.

Looking into the future, many hold out hope for global non-hydrostatic atmospheric modeling in which the energy-containing eddies or dominating deep moist convection begin to be resolved explicitly, and for global ocean models with more explicit representations of the mesoscale eddy spectrum. These efforts do need to be pushed vigorously, but what we already know of the importance of turbulence within clouds, cloud microphysical assumptions, small-scale ocean mixing, and the biological complexity of land carbon cycling indicates that increasing resolution alone will not be a panacea.

Progress should be measured not by the complexity of our models, but rather by the clarity of the concepts they are used to help develop. This inevitably requires the development and sophisticated use of a spectrum of models and experimental frameworks, designed to adumbrate the basic processes governing the dynamics of the climate system (Fig. 4). This point of view gains weight when it is realized that unlike in numerical weather prediction (for which fairly direct evaluations of the predictive abilities of models are possible), observational tests applied to climate models are not adequate for constraining the long-term climate response to anthropogenic forcings. Indeed observations are generally not fully discriminating of long-term climate projections (Fig. 3). How well a model encapsulates the present state of the climate system, a question to which more ‘realistic’ models lend themselves, provides an insufficient measure of how well such models can represent hypothesized changes in the climate system. Paleoclimatic studies, while invaluable in providing additional constraints, also do not provide close enough analogues to fully discriminate between alternative futures. The outcome of humanity’s ongoing and inadvertent experiment on the Earth’s climate may come too late to help us usefully discriminate among models. Hence the reliability of our models will remain difficult to establish and the confidence in our predictions will remain disproportionately dependent on the development of understanding.

Unlike in weather prediction, there are limited opportunities to evaluate long-term projections (or climate sensitivity, as an example) using observations. Multi-model analyses show that many of the observational tests applied to climate models are not discriminating of long-term projections and may not be adequate for constraining them. Short-term climate variations may not be considered as an analog of the long-term response to anthropogenic forcings, as the processes that primarily control the short-term climate variations may differ from those that dominate the long-term response. By improving our physical understanding of how the climate system works using observations, theory and modeling, we will better identify the processes which are likely to be key players in the long-term climate response. This will help to determine how to use observations for constraining the long-term response.

The formulation of clear hypotheses about mechanisms or processes thought to be critical for climate feedbacks or climate dynamics helps make complex problems more tractable and encourages the development of targeted observational tests. Moreover, it helps define how the wealth of available observations may be used to address key climate questions and evaluate models through relevant observational tests (Fig. 3). For instance, Hartmann and Larson (2002) formulated the Fixed Anvil Temperature hypothesis to explain and predict the response of upper-level clouds and associated radiative feedbacks in climate change. The support of this hypothesis by several observational (e.g. Eitzen et al. 2009) and numerical investigations with idealized high-resolution process models (Kuang and Hartmann 2007) together with its connection to basic physical principles gives us confidence in at least one component of the positive cloud feedback in models under global warming (Zelinka and Hartmann 2010). Similarly, the recent recognition of the fast response of clouds to CO2 radiative forcing (Gregory and Webb 2008; Colman and McAvaney 2010) promises progress in our understanding of the cloud response to climate change and our interpretation of inter-model differences in climate sensitivity. Thus we see many reasons for confidence that progress will be made on pieces of the “cloud problem” – as for numerous other problems – seasoned by a realization of many remaining difficulties.
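The fast response to CO2 forcing mentioned above is commonly diagnosed with the regression approach of Gregory et al. (2004): after an abrupt CO2 increase, the net top-of-atmosphere radiative imbalance is regressed against the surface temperature change. The intercept then estimates the forcing including fast adjustments, and the slope estimates the feedback parameter. The following is a minimal sketch on synthetic, noise-free data; the forcing and feedback values are assumptions chosen for illustration, not results from any model.

```python
def linear_fit(x, y):
    """Ordinary least-squares fit y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Synthetic data (assumed values): N = F - lam * dT,
# with F = 3.5 W m-2 and lam = 1.0 W m-2 K-1.
FORCING = 3.5
FEEDBACK = 1.0
delta_t = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0]  # surface warming (K)
net_flux = [FORCING - FEEDBACK * t for t in delta_t]  # TOA imbalance (W m-2)

intercept, slope = linear_fit(delta_t, net_flux)
effective_forcing = intercept  # forcing including any fast adjustment
feedback_parameter = -slope  # W m-2 K-1
equilibrium_warming = effective_forcing / feedback_parameter  # dT where N reaches 0

print(effective_forcing, feedback_parameter, equilibrium_warming)
```

In real model output the points scatter around the line, and a difference between the regression intercept and the conventionally computed forcing is one way of seeing the adjustment component.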

The long-term robustness of the Charney report’s conclusions actually demonstrates the power of physical understanding combined with judicious use of simple and complex models in making high-quality assessments of future climate change several decades in advance.

4.3 The Balance Between Prediction and Understanding Should Be Improved in Climate Modeling

With the growing use of numerical modeling in meteorology, a vigorous debate emerged in the 1950s and 1960s (between J. Charney, A. Eliassen and E. Lorenz among others) around the question of whether atmospheric models were to be used mainly for prediction or for understanding (see Dahan-Dalmedico 2001 for an analysis of this debate). A similar debate remains very much alive today with regard to climate change research. As discussed by Held (2005), one witnesses a growing gap between simulation and understanding.

Communication with scientists, stakeholders and society about the reasons for our confidence (or lack of confidence) in different aspects of climate change modeling remains a very difficult task. This level of confidence is based on an elaborate assessment combining physical arguments and a complex appreciation of the various strengths and limits of model capabilities. Improving our physical interpretation of climate change and of the different model results would greatly facilitate this communication. In particular it would help in conveying the idea that the evolution of climate change assessments resembles more the construction of a puzzle in which a number of key pieces are already in place than a house of cards in which a new piece of data can easily destroy the entire edifice.

Consistent with previous discussions recognizing the crucial importance of physical understanding in the elaboration of climate change assessments, our research community should strive to fill this gap. For instance, graduate education in climate science should promote the use of a spectrum of models and theories to address scientific issues and interpret the results from complex models. Besides the basic need to promote fundamental research, filling the gap between simulation and understanding also implies a number of adjustments or practical recommendations to the climate modeling community.

4.4 Recommendations

The lessons discussed above lead us to the following recommendations:

1. Recognize the necessity of better understanding how the Earth system works in terms of basic physical principles as elucidated through the use of a spectrum of models, theories and concepts of different complexities. So doing requires the community to avoid the illusion that progress in climate change assessments necessitates the growth in complexity of the models upon which they are based. Thirty years of experience in climate change research suggests that a lack of understanding continues to be the greatest obstacle to our progress, and that often what is left out of a model is a better indication of our understanding than what is put into it. In striving to connect our climate projections to our understanding (what we call the Platonosphere in Fig. 4), the promotion and inclusion of highly idealized or simplified experiments in model intercomparison projects must play a vital role. Very comprehensive and complex modeling plays a vital role in this spectrum of modeling activity, but it should not be thought of as an end in itself, subsuming all other climate modeling studies.

Fig. 4 Distribution of models in a space defined by increasing model simplicity (relative to the system it aims to represent) on the vertical axis and system complexity on the horizontal axis. Our attempt to realistically represent the earth system is both computationally and conceptually limited, and conceptual problems that arise in less realistic models are compounded as we move to complex models, with the result being that adding more complexity to models does not necessarily make them more realistic, or bring them closer to the earth system. Understanding is developed by working outward from a particular starting point, through a spectrum of models, toward the Platonosphere, which is the realm of the Laws. Reliability is measured by empirical adequacy of our models, which is manifest in the fidelity of their predictions to the world as we know it. To accelerate progress we should work to close conceptual gaps at their source, and try to advance understanding by developing a conceptual framework that allows us to connect behavior among models with differing amounts of realism/simplification. As time and technical capacity evolve, models may move around in this abstraction-complexity space.

2. Promote research devoted to better understanding interactions between cloud and moist processes, the general circulation and radiative forcings. Research since the Charney report has shown us that such an understanding is key (i) to better assess how anthropogenic forcings will affect the hydrological cycle, large-scale patterns and regional changes in precipitation, and natural modes of climate variability; (ii) to interpret systematic biases of model simulations at regional and planetary scales; (iii) to understand teleconnection mechanisms and potential sources of climate predictability over a large range of time scales (intraseasonal to decadal); and (iv) to understand and predict biogeochemical feedbacks in the climate system.

3. Promote research that improves the physical content of comprehensive GCMs, especially in the representation of fundamental processes such as convection, clouds, ocean mixing and land hydrology. So doing is necessary to address the gaps in our understanding, as in many respects our models remain inadequate to address important questions raised in our first two recommendations. More generally, model failures to simulate observed climate features should be viewed as opportunities to improve our understanding of climate, and to improve our assessment of the reliability of model projections. WCRP should be pro-active in encouraging the community to tackle long-standing, difficult problems in addition to new uncharted problems. A strategy for doing so may include Climate Process Teams now in use in the USA.

4. Prioritize community efforts and experimental methodologies that help identify which processes are robust vs which lead to the greatest uncertainty in projections, and use this information to communicate with society, to guide future research and to identify needs for specific observations. When analyzing climate projections from multi-model ensembles, a greater emphasis should be placed on identifying robust behaviors and interpreting them based on physical principles. The analysis of inter-model differences should also be encouraged, particularly to the extent that such analyses advance a physical interpretation of the differences among models. For this purpose, fostering creativity and developing new approaches or analysis methods that connect the behavior of complex models to concepts, theories or the behavior of simpler model results should be strongly encouraged. This process of distillation is central to the scientific process, and thus vital for our discipline.

5 Conclusion

Societal demands for useful regional predictions are commensurate with the great scientific challenge that the climate research community has to address. Climate prediction is still very much a research topic. Unlike in weather prediction, there are limited opportunities to evaluate predictions against observed changes, and there is little evidence so far that increased resolution and complexity of climate models help to narrow uncertainties in climate projections. Hence, and as demonstrated by the impressive robustness of the Charney report’s conclusions, in the foreseeable future the credibility of model projections and our ability to anticipate future climate changes will depend primarily on our ability to improve basic physical understanding about how the climate system works.

Climate modeling, together with observations and theory, plays an essential role in this endeavor. In particular, our ability to better understand climate dynamics and physics will depend on efforts to improve the physical basis of general circulation models, to develop and use a spectrum of models of different complexities and resolutions, and to design simplified numerical experiments focused on specific scientific questions. Accelerating progress in climate science and in the quality of climate change assessments should benefit not only scientific knowledge but also climate services and all sectors of our society that need guidance about future climate changes. One aspect of basic research that is often overlooked is its role in providing a framework for answering questions that policy makers have yet to think of; in this respect the search for understanding is crucial to the general social development.

Finally, and more practically, to ensure that the frequency of assessments is consistent with the rate of scientific progress, which may vary from one topic to another, we suggest that in the future, the World Climate Research Programme play a larger role in organizing focused scientific assessments associated with specific aspects of climate change.

References

Andrews T, Forster PM (2008) CO2 forcing induces semi-direct effects with consequences for climate feedback interpretations. Geophys Res Lett 35, L04802. doi:10.1029/2007GL032273

Banks HT, Gregory JM (2006) Mechanisms of ocean heat uptake in a coupled climate model and the implications for tracer based predictions of ocean heat uptake. Geophys Res Lett 33(7), L07608. doi:10.1029/2005gl025352

Bolin B, Degens ET, Kempe S, Ketner P (eds) (1979) The global carbon cycle. SCOPE 13, Scientific Committee on Problems of the Environment, International Council of Scientific Unions, Wiley, New York, 491 pp

Bony S, Colman R, Kattsov VM, Allan RP, Bretherton CS, Dufresne JL, Hall A, Hallegatte S, Holland MM, Ingram W, Randall DA, Soden BJ, Tselioudis G, Webb MJ (2006) How well do we understand and evaluate climate change feedback processes? J Clim 19(15):3445–3482

Bony S, Bellon G, Klocke D, Sherwood S, Fermepin S, Denvil S (2013) Robust direct effect of carbon dioxide on tropical circulation and regional precipitation. Nat Geosci. doi:10.1038/ngeo1799

Boyle J, Klein SA (2010) Impact of horizontal resolution on climate model forecasts of tropical precipitation and diabatic heating for the TWP-ICE period. J Geophys Res 115, D23113

Brient F, Bony S (2013) Interpretation of the positive low-cloud feedback predicted by a climate model under global warming. Clim Dyn 40(9–10):2415–2431. doi:10.1007/s00382-011-1279-7. ISSN: 0930-7575

Caldeira K, Jain AK, Hoffert MI (2003) Climate sensitivity uncertainty and the need for energy without CO2 emission. Science 299:2052–2054

Charney JG, Coauthors (1979) Carbon dioxide and climate: a scientific assessment. National Academy of Science, Washington, DC, 22 pp

Church JA, Godfrey JS, Jackett DR, McDougall TJ (1991) A model of sea-level rise caused by ocean thermal expansion. J Clim 4(4):438–456

Collins WD et al (2006) Radiative forcing by well-mixed greenhouse gases: estimates from climate models in the Intergovernmental Panel on Climate Change (IPCC) fourth assessment report (AR4). J Geophys Res 111, D14317. doi:10.1029/2005JD006713

Colman RA, McAvaney BJ (2010) On tropospheric adjustment to forcing and climate feedbacks. Clim Dyn 36(9–10):1649–1658

Cox PM, Betts RA, Jones CD, Spall SA, Totterdell IJ (2000) Acceleration of global warming due to carbon-cycle feedbacks in a coupled climate model. Nature 408:184–187

Dahan-Dalmedico A (2001) History and epistemology of models: meteorology (1946–1963) as a case study. Arch Hist Exact Sci 55:395–422

Delmas RJ, Ascencio J-M, Legrand M (1980) Polar ice evidence that atmospheric CO2 20,000 yr BP was 50% of present. Nature 284:155–157

Denman KL, Brasseur G, Chidthaisong A, Ciais P, Cox PM, Dickinson RE, Hauglustaine D, Heinze C, Holland E, Jacob D et al (2007) Couplings between changes in the climate system and biogeochemistry. In: Solomon S, Qin D, Manning M, Chen Z, Marquis M, Averyt KB, Tignor M, Miller HL (eds) Climate change 2007: the physical science basis. Contribution of working group I to the fourth assessment report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge/New York

Dufresne J-L, Bony S (2008) An assessment of the primary sources of spread of global warming estimates from coupled ocean-atmosphere models. J Clim 21(19):5135–5144

Dufresne J-L, Friedlingstein P, Berthelot M, Bopp L, Ciais P, Fairhead L, Le Treut H, Monfray P (2002) On the magnitude of positive feedback between future climate change and the carbon cycle. Geophys Res Lett 29(10). doi:10.1029/2001GL013777A

Eitzen ZA, Xu KM, Wong T (2009) Cloud and radiative characteristics of tropical deep convective systems in extended cloud objects from CERES observations. J Clim 22:5983–6000

Farneti R, Delworth TL, Rosati AJ, Griffies SM, Zeng F (2010) The role of mesoscale eddies in the rectification of the Southern ocean response to climate change. J Phys Oceanogr 40:1539–1557. doi:10.1175/2010JPO4353.1

Frank DC, Esper J, Raible CC, Buntgen U, Trouet V, Stocker B, Joos F (2010) Ensemble reconstruction constraints on the global carbon cycle sensitivity to climate. Nature 463:527–530

Freeland H et al (2010) Argo – a decade of progress. In: Hall J, Harrison DE, Stammer D (eds) Proceedings of OceanObs’09: sustained ocean observations and information for society, vol 2, Venice, Italy, 21–25 Sept 2009. ESA Publication WPP-306, Roma. doi:10.5270/OceanObs09.cwp.32

Friedlingstein P et al (2006) Climate – carbon cycle feedback analysis, results from the C4MIP model intercomparison. J Clim 19:3337–3353

Friedlingstein P, Houghton RA, Marland G, Hackler J, Boden TA, Conway TJ, Canadell JG, Raupach MR, Ciais P, Le Quéré C (2010) Update on CO2 emissions. Nat Geosci 3:811–812

Gregory JM, Webb M (2008) Tropospheric adjustment induces a cloud component in CO2 forcing. J Clim 21:58–71

Gregory JM et al (2004) A new method for diagnosing radiative forcing and climate sensitivity. Geophys Res Lett 31, L03205. doi:10.1029/2003GL018747

Griffies S et al (2010) Problems and prospects in large-scale ocean circulation models. In: Hall J, Harrison D E, Stammer D (eds) Proceedings of OceanObs’09: sustained ocean observations and information for society, vol 2, Venice, Italy, 21–25 Sept 2009. ESA Publication WPP-306, Roma. doi:10.5270/OceanObs09.cwp.38

Hall A, Qu X (2006) Using the current seasonal cycle to constrain snow albedo feedback in future climate change. Geophys Res Lett 33, L03502. doi:10.1029/2005GL025127

Hannart A, Dufresne J-L, Naveau P (2009) Why climate sensitivity may not be so unpredictable. Geophys Res Lett 36, L16707. doi:10.1029/2009GL039640

Hansen JE, Sato M (2011) Paleoclimate implications for human-made climate change. In: Berger A, Mesinger F, Šijački D (eds) Climate change at the eve of the second decade of the century: inferences from paleoclimate and regional aspects: Proceedings of Milutin Milankovitch 130th anniversary symposium. Springer.

Hansen J, Lacis A, Rind D, Russell G, Stone P, Fung I, Ruedy R, Lerner J (1984) Climate sensitivity: analysis of feedback mechanisms. In: Climate processes and climate sensitivity, Geophysical Monograph. American Geophysical Union, Washington, DC, pp 130–163

Hansen J, Sato M, Nazarenko L, Ruedy R, Lacis A, Koch D, Tegen I, Hall T, Shindell D, Santer B, Stone P, Novakov T, Thomason T, Wang R, Wang Y, Jacob D, Hollandsworth S, Bishop L, Logan J, Thompson A, Stolarski R, Lean J, Willson R, Levitus S, Antonov J, Rayner N, Parker D, Christy J (2002) Climate forcings in Goddard Institute for space studies SI2000 simulations. J Geophys Res 107(D18):4347. doi:10.1029/2001JD001143

Hartmann DL, Larson K (2002) An important constraint on tropical cloud-climate feedback. Geophys Res Lett 29:1951–1954

Hartmann DL, Short DA (1980) On the use of earth radiation budget statistics for studies of clouds and climate. J Atmos Sci 37:1233–1250

Held IM (2005) The gap between simulation and understanding in climate modeling. Bull Am Meteorol Soc 86:1609–1614

Held IM, Soden BJ (2006) Robust responses of the hydrological cycle to global warming. J Climate 19:5686–5699. doi:10.1175/JCLI3990.1

Held IM, Hemler RS, Ramaswamy V (1993) Radiative-convective equilibrium with explicit two-dimensional moist convection. J Atmos Sci 50:3909–3927

Klein SA, Hartmann DL (1993) The seasonal cycle of low stratiform clouds. J Clim 6(8):1587–1606

Kuang Z, Hartmann DL (2007) Testing the fixed anvil temperature hypothesis in a cloud-resolving model. J Clim 20:2051–2057

Le Quéré C, Raupach MR, Canadell JG, Marland G, Bopp L, Ciais P, Conway TJ, Doney SC, Feely RA, Foster P, Friedlingstein P, Gurney K, Houghton RA, House J, Huntingford C, Levy PE, Lomas MR, Majkut J, Metzl N, Ometto JP, Peters GP, Prentice IC, Randerson JT, Running SW, Sarmiento JL, Schuster U, Sitch S, Takahashi T, Viovy N, Van Der Werf GR, Woodward FI (2009) Trends in the sources and sinks of carbon dioxide. Nat Geosci 2:831–836

Legg S et al (2009) Improving oceanic overflow representation in climate models: the gravity current entrainment climate process team. Bull Am Meteorol Soc 90:657–670

Lyman JM, Good SA, Gouretski VV, Ishii M, Johnson GC, Palmer MD, Smith DM, Willis JK (2010) Robust warming of the global upper ocean. Nature 465:334–337. doi:10.1038/nature09043

MacDonald GF, Abarbanel H, Carruthers P, Chamberlain J, Foley H, Munk W, Nierenberg W, Rothaus O, Ruderman M, Vesecky J, Zachariasen F (1979) The long term impact of atmospheric carbon dioxide on climate, JASON technical report JSR-78-07, SRI International, Arlington

Manabe S, Wetherald RT (1967) Thermal equilibrium of the atmosphere with a given distribution of relative humidity. J Atmos Sci 24:241–259

Manabe S, Wetherald RT (1975) The effects of doubling the CO2 concentration on the climate of a general circulation model. J Atmos Sci 32:3–15

Meehl GA et al (2007) Global climate projections. In: Solomon S, Qin D, Manning M, Chen Z, Marquis M, Averyt KB, Tignor M, Miller HL (eds) Climate change 2007: the physical science basis. Contribution of working group 1 to the fourth assessment report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, UK/New York

Meehl GA, Arblaster JM, Fasullo JT, Hu A, Trenberth KE (2011) Model-based evidence of deep-ocean heat uptake during surface-temperature hiatus periods. Nat Clim Change 1:360–364

Mitchell JFB, Ingram WJ (1992) Carbon dioxide and climate: mechanisms of changes in cloud. J Clim 5:5–21

Myhre G, Myhre A, Stordal F (2001) Historical time evolution of total radiative forcing. Atmos Environ 35:2361–2373

Ramanathan V, Lian MS, Cess RD (1979) Increased atmospheric CO2: zonal and seasonal estimates of the effect on radiative energy balance and surface temperature. J Geophys Res 84(C8):4949–4958

Randall DA et al (2007) Climate models and their evaluation. In: Solomon S, Qin D, Manning M, Chen Z, Marquis M, Averyt KB, Tignor M, Miller HL (eds) Climate change 2007: the physical science basis. Contribution of working group 1 to the fourth assessment report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, UK/New York

Rieck M, Nuijens L, Stevens B (2012) Marine boundary layer cloud feedbacks in a constant relative humidity atmosphere. J Atmos Sci 69:2538–2550. doi:10.1175/JAS-D-11-0203.1

Roe GH, Baker MB (2007) Why is climate sensitivity so unpredictable? Science 318(5850):629–632

Shine KP, Cook J, Highwood EJ, Joshi MM (2003) An alternative to radiative forcing for estimating the relative importance of climate change mechanisms. Geophys Res Lett 30(20):2047. doi:10.1029/2003GL018141

Soden BJ, Jackson DL, Ramaswamy V, Schwarzkopf MD, Huang X (2005) The radiative signature of upper tropospheric moistening. Science 310:841–844

Somerville RCJ, Remer LA (1984) Cloud optical thickness feedbacks in the CO2 climate problem. J Geophys Res 89(D6):9668–9672. doi:10.1029/JD089iD06p09668

Son S-W, Gerber EP, Perlwitz J, Polvani LM, Gillett N, Seo K-H, CCMVal Co-authors (2010) Impact of stratospheric ozone on the southern hemisphere circulation change: a multimodel assessment. J Geophys Res 115:D00M07. doi:10.1029/2010JD014271

Trenberth KE, Fasullo JT (2010) Tracking earth’s energy. Science 328:316–317

Wetherald R, Manabe S (1988) Cloud feedback processes in a general circulation model. J Atmos Sci 45:1397–1415

Williams KD, Ingram WJ, Gregory JM (2008) Time variation of effective climate sensitivity in GCMs. J Clim 21:5076–5090. doi:10.1175/2008JCLI2371.1

Yohe G, Andronova N, Schlesinger M (2004) To hedge or not against an uncertain climate future? Science 306:416–417

Zelinka MD, Hartmann DL (2010) Why is longwave cloud feedback positive? J Geophys Res 115, D16117. doi:10.1029/2010JD013817

Zhang M, Bretherton C (2008) Mechanisms of low cloud–climate feedback in idealized single-column simulations with the community atmospheric model, version 3 (CAM3). J Clim 21(18):4859–4878. doi:10.1175/2008JCLI2237.1

Acknowledgments

We thank Hervé Le Treut and Amy Dahan-Dalmedico for valuable comments and discussions, which helped us put the discussions of this opinion paper into an historical context. We are grateful to V. Ramaswamy, two anonymous reviewers and several participants of the WCRP Open Science Conference (Denver, CO, October 2011) for thorough comments and suggestions that helped improve the manuscript. This paper was supported by the French ANR project ClimaConf.

© 2013 Springer Science+Business Media Dordrecht

About this chapter

Cite this chapter

Bony, S. et al. (2013). Carbon Dioxide and Climate: Perspectives on a Scientific Assessment. In: Asrar, G., Hurrell, J. (eds) Climate Science for Serving Society. Springer, Dordrecht. https://doi.org/10.1007/978-94-007-6692-1_14

DOI: https://doi.org/10.1007/978-94-007-6692-1_14

Publisher Name: Springer, Dordrecht

Print ISBN: 978-94-007-6691-4

Online ISBN: 978-94-007-6692-1

eBook Packages: Earth and Environmental Science (R0)