Abstract

Policymakers are becoming increasingly adamant that the research they use to evaluate educational interventions, practices, and programs can support statements about cause and effect. An instrumental variables (IV) estimation approach, which has historically been employed by economists, can be utilized to make causal statements regarding a predictor and an outcome when randomized trials are not feasible. In this chapter, we provide an overview of the IV approach as we explore the causal relationship between taking Algebra II in high school and degree attainment in college. We discuss concepts and terminology related to conducting experimental and quasi-experimental work, present the assumptions that should be met by an IV before a researcher can use it to make causal inferences, and demonstrate several IV estimation strategies. Additionally, we provide the reader with annotated Stata code to facilitate the application of this underutilized methodological approach in higher education research.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

- Ordinary Little Square

- Degree Attainment

- College Completion

- Local Labor Market Condition

- Local Average Treatment Effect

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

Introduction

In response to falling high school graduation rates and concerns about college readiness and workforce development over the past decade, 20 states have increased high school graduation requirements. While these requirements vary across states, most mandate that students complete 4 years of math and English coursework in order to graduate (Achieve, 2011).

Michigan is one example of a state implementing curricular changes in favor of more demanding coursework for high school students. In 2006, legislators implemented a statewide college preparatory high school curriculum—the Michigan Merit Curriculum (MMC), one of the most comprehensive sets of high school graduation requirements in the nation. The new courses required in order to graduate are intensive and specific: Algebra II, Geometry, Biology, and Chemistry or Physics and at least 2 years of a foreign language (Michigan Department of Education, 2006).

The curriculum’s focus on math and science is based on historically low enrollments in the state in advanced courses in these areas. Prior to the implementation of the MMC, only one-third of Michigan’s school districts required students to take 4 years of math. As such, only 1 out of 8 students took Algebra II, instead favoring less intensive math courses, or no math courses at all (Michigan Department of Education, 2006).

There is an ample body of research that supports states’ decisions to make these curricular changes and to support an emphasis on math coursework to meet goals related to college and workforce readiness. Research demonstrates that students who take and succeed in intensive math courses have an increased likelihood of attending college and have improved long-term labor market outcomes (Adelman, 1999; Goodman, 2008; Levine & Zimmerman, 1995; Rose & Betts, 2004; Sadler & Tai, 2007; Sells, 1973; Simpkins, Davis-Kean, & Eccles, 2006).

One of the most powerful levers driving these changes to high school curricula in Michigan and throughout the nation is Answers in the Toolbox and The Toolbox Revisited, publications of the United States Department of Education that assert that students who take more intensive math courses, particularly those who take Algebra II or higher, are more likely than their peers who take less intensive math courses to attend a college or university and to attain a degree. The discussion surrounding these publications served as an inspiration for a number of states to begin adopting more intensive graduation requirements, particularly related to math preparation (Adelman, 2006).

It is important to note that the majority of the literature on which the curricular reforms in Michigan and around the nation were based is correlational in nature. The relationship between intensive math courses (e.g., Algebra II) and postsecondary access and completion maybe influenced by many other factors that are not accounted for in the studies touting the merits of students completing challenging math courses. We will provide an example of the influence of other factors in the case of Grace and Adam below.

Consider Grace and Adam, two high school students in Michigan. Prior to the implementation of the MMC, Grace chose to take Algebra II, whereas Adam did not. Grace, a straight A student, was recommended for the course by her guidance counselor, whereas Adam’s teachers suggested that he may be better suited for a lower-level math course. Grace and Adam have different abilities and motivations, and, as such, the highest level math course they choose to take differs.

The methodological issue in the case of Grace and Adam, as well as with some of the studies mentioned above, is one of self-selection. Students like Grace, who chose to take a more intensive math course, are quite likely different than students like Adam, who chose to take a less demanding course. Students like Grace may possess greater academic abilities or may be more motivated to take challenging courses than their peers like Adam. As such, studies that do not account for these differences in student characteristics are making comparisons between groups of students that are not comparable. It is problematic, therefore, when the findings of studies that do not consider these differences in student characteristics are used to drive education policymaking.

The highest level of math that students like Grace and Adam choose to take (Algebra II for the former, something less intensive, like Consumer Math, for the latter) may be related to whether or not they complete college following their graduation from high school. Or, stated differently, the factors that drive them to take a challenging or less challenging math course may also influence college outcomes. However, it is difficult to state, given the differences in Grace’s and Adam’s academic characteristics and motivation, that the highest math course they took caused them to complete college or not. To better determine if a causal relationship exists between a student’s highest math course in high school and college completion, education researchers can employ a number of statistical methods to investigate the variation in an outcome (college completion) that is caused by a particular program or policy—in this case, taking an intensive math course in high school. To be clear, we are interested in determining a causal effect (rather than a simple association) so that policies related to the outcome of interest are made appropriately and efficiently, such that resources are not wasted on a program or intervention that may not have the intended results.

To investigate the causal impacts of educational interventions and policies on student outcomes, many educational researchers have recently begun to employ experimental and quasi-experimental methodologies (Abdulkadiroglu, Angrist, Dynarski, Kane, & Pathak, 2011; Attewell & Domina, 2008; Bettinger & Baker, 2011; Dynarski, Hyman, & Schanzenbach, 2011). In the study presented in this chapter, we follow this lead by employing methods that can help us establish whether a causal relationship exists between taking Algebra II or higher in high school and college completion.

Experimental research is considered the “gold standard” of causal analysis (United States Department of Education, 2008). Performing a random experiment would, in theory, be the most effective way to determine the causal effect of taking Algebra II on academic outcomes (e.g., high school completion, postsecondary attendance and completion, life-course events). For example, students could be randomly assigned to take Algebra II or a less intense math course, and their postsecondary enrollment and completion patterns following graduation could be examined to determine the causal impact of Algebra II. Assuming that the randomization was done properly, the two groups would be, on average, identical on all observable and unobservable outcomes. If so, one could simply compare the rates of degree attainment between the treatment (Algebra II) and control (lower-level math) groups in order to determine the causal effect of high school course taking (McCall & Bielby, 2012).

Experimental research is, however, often difficult or impossible to do in educational settings because of logistical, cost, and ethical constraints. For example, often times educators cannot in good conscience randomly assign students to courses that will disadvantage some students. If an administrator suspects, for example, that enrolling a student in a small class with an outstanding teacher will dramatically improve his learning, how can this administrator support an experiment that will withhold this “treatment” from some students? Randomized trials can also be very costly to conduct or difficult to implement in educational settings. Given these difficulties, researchers have begun to rely on quasi-experimental methods, to be explored in greater depth below, to determine the impact of various education interventions, including those related to intensive math course taking in high school.

The objective of this chapter is to provide the reader with an introduction to the application of one such technique, instrumental variable (IV) estimation, designed to remedy the inferential problem discussed above. We provide the reader with a description of relevant literature and conceptual issues, the terminology used when discussing IV analyses, and how this method can be applied to educational issues. To inform the latter, throughout the chapter, we provide an example of the application of IV methods to study whether taking Algebra II in high school has a causal effect on college completion.

Conceptual Background

Education stakeholders have been concerned about student course taking at the secondary level and its potential impact on educational and labor market outcomes for decades. In A Nation at Risk (National Commission on Excellence in Education, 1983), the American high school was famously characterized as providing a “smorgasbord” of curricular options that were detrimental to the majority of students, as many oversampled the “desserts” (e.g., physical education courses) and left the “main course” (e.g., college prep courses) untouched. Since the 1980s, a widespread increase in the state- and district-mandated minimum number of core academic courses students must complete to graduate has increased the number of units they complete in math, science, English, and other nonvocational subjects (Planty, Provasnik, & Daniel, 2007). However, the intensity of the coursework that students complete within these domains varies considerably.Footnote 1

Researchers have documented disparities in the highest level of math coursework taken between racial/ethnic groups and social classes. Analysis of course taking trends in national data indicates that although Black and White students earn the same number of math credits in high school, White students are significantly more likely than Black students to have earned these credits in advanced courses such as Precalculus or Calculus (Dalton, Ingels, Downing, & Bozick, R, 2007). There are also disparities between students from low- and high-socioeconomic (SES) backgrounds in both the number and type of math credits earned. These statistics suggest that access to coursework is distributed through mechanisms that differentially impact students from various backgrounds.

Two mechanisms may determine student access to high school coursework: structural forces and individual choices (Lee, Chow-Hoy, Burkam, Geverdt, & Smerdon, 1998). Structural forces are factors outside the student’s control that serve to constrain his or her options. These include placement into curricular tracks by school personnel or the availability of coursework within their particular school. When schools have fewer structural constraints on course options, students are able to exercise greater individual choice by choosing their coursework from a menu of options that provide credits toward the high school diploma. The following sections discuss how structural and individual factors influence the coursework that high school students take.

Structural Forces

Student course taking patterns are strongly influenced by the options available to them. Schools may vary in their willingness and ability to offer a range of courses that are viewed as solid preparation for college. For instance, analysis of national transcript data indicates that Midwestern, small, rural, and predominately White high schools are the least likely to offer advanced placement (AP) coursework (Planty et al., 2007). The practice of “tracking” in K-12 education, or the grouping of students into curricular pathways based on their perceived academic ability, can also serve to constrain student course taking options (Gamoran, 1987). Research on how tracking decisions are made by high school staff indicates that placement decisions are largely a function of a student’s position in the distribution of standardized test scores, their perceived level of motivation, recommendations from middle school teachers, and the availability of school resources (Hallinan, 1994; Oakes & Guiton, 1995). Also, parent wishes may be accommodated when making track placements, although middle- and upper-class parents are likely to have an advantage in advocating for their preferences, as they more often possess the social capital needed to navigate bureaucratic educational environments (Useem, 1992).

Although formal tracking policies have been abolished in many schools, students may continue to experience barriers to unrestricted enrollment in coursework. This is often due to disparities in information about course options and uneven enforcement of course prerequisites across racial/ethnic, social class, and ability groups (Yonezawa, Wells, & Serna, 2002). Course prerequisites play a significant role in restricting access to math coursework because the courses are typically hierarchically arranged in a specific sequence (e.g., Algebra I is followed by Algebra II) beginning in middle school or even earlier (Schneider, Swanson, & Riegle-Crumb, 1998; Useem, 1992). Therefore, it should come as no surprise that middle school math achievement is one of the most significant predictors of taking advanced math courses in high school (Attewell & Domina, 2008).

Disparities in course placement practices and the availability of information about course options within schools may partially explain the finding that disadvantaged students who attend integrated schools take less intensive coursework than their peers who attend segregated schools (Crosnoe, 2009; Kelly, 2009). For instance, Crosnoe finds that low-income students who attend predominantly middle- or high-income schools take lower levels of coursework than low-income students who attend predominantly low-income schools. Similarly, Kelly finds that the greater the proportion of White students in a school, the lower the representation of Black students in the two highest math courses. These results demonstrate that in addition to the allocation of access to intensive courses across schools, the distribution of access within a school plays a key role in structuring student course taking patterns.

Individual Choices

Despite formal or de facto tracking, most students have the ability to choose some of their high school coursework. Schools are more likely to condone downward “track mobility” than upward, allowing students to choose lower-level coursework than originally assigned (Oakes & Guiton, 1995). Additionally, once minimum graduation requirements are met in each subject, students have the option to continue taking advanced coursework if they have demonstrated competency in previous courses. Researchers often examine the progress of students through the sequence of math courses (the mathematics “pipeline”) to determine the highest level of mathematics coursework students are able to take (Burkam & Lee, 2003; Lee et al., 1998). National data indicate that a large proportion of students—44%—choose to drop out of the pipeline at either Algebra I or Algebra II (Dalton et al., 2007).

Educational aspirations also play a key role in determining the coursework that students pursue. High school freshman and sophomores who report having college aspirations are more likely to take advanced math coursework during subsequent years than their peers with lower educational aspirations (Bozick & Ingels, 2008; Frank et al., 2008). Parent aspirations for their children are important as well. After controlling for confounding factors, parent educational expectations significantly predict whether students take advanced mathematics in the senior year of high school—a year when many students choose to stop taking advanced mathematics (Ma, 2001). Additionally, peers can influence course selection. Frank et al. find that females progress farther in the math pipeline when other females in their “local position” (a cluster of students who tend to take the same sets of courses) also advance in their math coursework. (Note: Peer effects on course taking may have implications for our empirical strategy. We will return to this point later in the chapter)

Factors that are beyond the control of students, parents, and educators may also influence the intensity of coursework that students choose to take. For instance, variations in labor market conditions may modify student postsecondary enrollment plans. Students could infer from a strong labor market that ample employment for the noncollege educated exists, which may tend to decrease their interest in courses that lead to college enrollment. The availability of plentiful and well-paying local jobs for young people may also encourage students to take less intensive courses that allow more time for working while in high school, thus ensuring higher immediate earnings. Economic research on the impact of increasing the minimum wage on high school enrollments indicates that a student’s education decision-making is indeed responsive to labor market conditions. For example, the commitment of lower-ability and lower-income students to completing a high school diploma declines in response to increases in the minimum wage (Ehrenberg & Marcus, 1982; Neumark & Wascher, 1995). Therefore, it is possible that college preparatory course taking and the strength of the local labor market are negatively related.

As the prior literature demonstrates, students’ course taking is conditional on many factors, including their educational aspirations, parental expectations, school resources, and local labor market conditions. In the next section, we present frameworks that offer competing explanations for how student course taking is related to their subsequent educational outcomes. We also examine research on the relationship between high school course taking and educational attainment and consider how research has attempted to isolate the effect of courses from related factors (e.g., student characteristics, school context) that may also influence postsecondary outcomes. The theoretical frameworks and course taking effects in the literature provide justification for our quasi-experimental approach when examining the impact of high school course taking on postsecondary success.

Potential Explanations for High School Course Taking Effects

Research demonstrates that students who take a more intensive secondary curriculum are more likely to persist through college and earn a degree than students who take a less intensive curriculum (Adelman, 1999, 2006; Choy, 2001; Horn & Kojaku, 2001). There are at least two potential explanations for this relationship. The first explanation is that high school courses develop a student’s human capital, providing him or her with skills and knowledge to be parlayed into future success (Rose & Betts, 2004). For instance, Algebra II may provide students with content knowledge that improves their performance in college-level quantitative coursework (Long, Iatarola, & Conger, 2009)—particularly general education math coursework that is required to earn a degree (Rech & Harrington, 2000). In turn, improved academic performance could lead students to integrate into college and commit to degree attainment (Bean, 1980; Tinto, 1975). Human capital development is related to the differential coursework hypothesis put forth by Karl Alexander and colleagues, which served as the basis for Adelman’s Toolbox studies (1999, 2006). Alexander and colleagues propose that a student’s academic preparation in high school is the most salient factor in his or her future educational attainment—much more salient than background characteristics such as race, class, and gender (Alexander, Riordan, Fennessey, & Pallas, 1982; Pallas & Alexander, 1983). When policymakers propose increased course taking requirements, they implicitly assume that higher-level courses lead to improved educational and labor market outcomes for students of all backgrounds by developing their human capital.

Another potential explanation for the relationship between curricular intensity and degree attainment is student self-selection. As we demonstrated in our discussion above, random assignment is not the typical mechanism determining student course placements or course choice. Students elect to take particular courses or are placed into courses according to a number of factors, including their prior achievement, scores on placement examinations, work ethic, parental involvement in the educational process, and the racial and social class composition of their schools. If these factors are also correlated with degree attainment, self-selection into courses during high school may positively bias our estimates of the causal effect of course taking on attainment (i.e., the results are upwardly biased).

It is important for researchers to determine if student self-selection or human capital development is largely responsible for any (hypothesized) positive relationship between curricular intensity and degree attainment because effective policymaking often requires a sound understanding of which practices improve educational outcomes. In studies that use observational data and analytical methods that do not strongly support causal inference, the greater the role of selection, the more the estimates of curricular intensity’s effects on degree attainment may be biased. If positive selection bias is present, the individuals who are the most likely to experience the outcome of interest (e.g., graduate from college) are the individuals who are also the most likely to receive the treatment (e.g., select into taking Algebra II). Practices such as K-12 tracking increase the likelihood that only the most able and motivated students take intensive courses. If, prior to enrolling in Algebra II, these students are more dedicated to earning a bachelor’s degree than their peers who take less intensive coursework, the observed positive association between course taking and educational attainment is attributable to the qualities of students who take intensive courses and not the courses per se. If positive selection bias is largely responsible for any observed relationship between curricular intensity and educational attainment, then state policies such as the Michigan Merit Curriculum that mandate a college preparatory curriculum for all students are unlikely to have the expected impact on college access and success.

However, positive selection is not the only potential reason for bias in studies of course taking effects. Negative selection occurs when the individuals who are the most likely to experience the outcome of interest (e.g., graduate from college) are the individuals who are the least likely to receive the treatment (e.g., select into taking Algebra II). For example, in states that offer merit-based financial aid programs that are distributed according to secondary (and postsecondary) GPAs, high school students who aspire to attend college and earn a degree may avoid challenging courses in high school to gain eligibility for financial aid. While we are unaware of a rigorous study that examines merit aid programs’ impact on high school students’ course taking behavior, Cornwell, Lee, and Mustard find evidence that the Georgia HOPE Scholarship causes some college students to take fewer general education courses in math and science (2006) and to reduce their course load and increase their rate of course withdrawals (2005). If negative selection biases the estimates in studies of course taking effects, policies like the Michigan Merit Curriculum may actually have a larger impact on college access and success than research that does not adjust for such selection would indicate.

High School Coursework and Postsecondary Educational Attainment

Many researchers have attempted to account for confounding factors in order to determine the causal impact of intensive coursework on the likelihood of completing a bachelor’s degree. Arguably the most well-known and influential studies that address this topic are Adelman’s Answers in the Toolbox (1999) and The Toolbox Revisited (2006). In these studies, Adelman uses High School and Beyond (HSB) and National Education Longitudinal Study (NELS:88) data to examine the effect of student effort and high school course taking on their likelihood of college completion. As part of his analyses, he examines the impact of the highest level of math coursework taken by a student on his or her odds of degree attainment, controlling only for socioeconomic status. The results from the 1999 study using HSB data suggest that taking Algebra II or higher has a positive impact on degree completion. However, when analyzing NELS:88 data in 2006, Adelman suggests that taking Trigonometry or above has a positive effect on degree attainment, while taking Algebra II or lower has a negative effect. In summarizing his two studies, Adelman concludes that “the academic intensity of a student’s high school curriculum still counts more than anything else in pre-collegiate history in providing momentum toward completing a bachelor’s degree” (Adelman, 2006, p. xviii). This is a strong claim, given that the studies’ regressions of highest level of math coursework do not account for precollegiate factors beyond socioeconomic status that are hypothesized to impact degree attainment, such as student educational aspirations or their high school contexts.

Like Adelman (1999, 2006), other researchers find that students who take higher-level courses in high school have more successful postsecondary outcomes than their counterparts who take lower-level courses (Bishop & Mane, 2005; Choy, 2001; Fletcher & Zirkle, 2009; Horn & Kojaku, 2001; Rose & Betts, 2001). The majority of these studies employ standard logistic/probit or multinomial regression techniques and control for several (possibly) confounding factors.Footnote 2 Like Adelman (1999), Rose and Betts (2001) employ High School and Beyond (HSB) survey data and find that math course taking influences students’ bachelor’s degree attainment, even after they control for observable factors such as student background, high school characteristics (including student-teacher ratio, high school size, and average per-pupil spending), and prior math course and standardized test performance. Their results suggest that an average student whose highest level of math is Algebra II is 12% more likely to earn a bachelor’s degree than a similar student who only completes Algebra and Geometry.

However, other studies find that accounting for an array of background, academic, and/or state characteristics negates the relationship between taking intensive courses in high school and postsecondary persistence (Bishop & Mane, 2004; Geiser & Santelices, 2004). Using University of California (UC) and College Board data, Geiser and Santelices examine if taking advanced placement (AP) and honors courses in high school affects second-year persistence in college. They find that when high school GPA, socioeconomic indicators, and standardized test scores are included in their models, honors and AP courses are not significantly related to whether UC students remain enrolled into their sophomore year. Similarly, after accounting for high school- and college-level factors—including the non-AP coursework taken by students—Klopfenstein and Thomas (2009) find a null effect of advanced placement coursework, including AP Calculus, on postsecondary persistence using Texas student unit record data.

Bishop and Mane (2004) examine the impact of high school curriculum policies on postsecondary outcomes using the same NELS:88 dataset that Adelman used in his 2006 study. They control for factors unaccounted for in other non-quasi-experimental studies, including Adelman’s, such as student locus of control and state unemployment rates. They find that, controlling for student- and state-level variables, increases in the number of academic courses required to graduate from high school is not associated with college degree attainment. This result suggests that requiring all secondary students to take additional years of academic coursework will not increase college graduation rates, a result congruent with the explanation that selection is largely responsible for the positive association between course intensity and educational attainment.

As the aforementioned studies indicate, research provides conflicting evidence about whether high school courses have a causal impact on postsecondary completion. This conflicting evidence may arise for several reasons. First, the researchers use different datasets to investigate course taking effects. The datasets range from nationally representative to state specific and the points in time in which the surveys were administered span decades. Additionally, among researchers that use the same dataset, their effective samples often differ. For example, Adelman (2006) restricts his analysis of NELS:88 to students who attended high school through the 12th grade. This restriction excludes many dropouts, early graduates, and GED completers who may experience different effects of math coursework than traditional high school graduates. Conversely, Bishop and Mane (2004) include all students who were in the 8th grade in 1988 in their analysis of NELS data. Therefore, Adelman’s and Bishop and Mane’s estimates are based on very different samples.

Second, there is no clearly defined and universally agreed-upon theoretical model of high school course taking and educational attainment. As a result, each researcher proposes a different analytical model with a different set of controls for confounding variables, which means that each study likely contains a different degree of omitted variable bias.Footnote 3 It is almost certain that these nonexperimental studies suffer from omitted variable bias because it is improbable that researchers are able to control for every covariate that is correlated with both high school course taking and degree attainment. However, some researchers may have been more effective than others in accounting for confounding factors in their models and therefore may provide less biased estimates of the causal effect of course taking on degree attainment. For instance, our review of the literature demonstrates that students who attend rural schools have less access to college preparatory courses than students who attend nonrural schools (Planty et al., 2007). Data indicate that rural residents also have lower levels of degree completion than nonrural residents (United States Department of Agriculture, 2004). Therefore, the urbanicity of students’ communities could be a confounding factor in studies of the effect of course taking on degree attainment. Yet only two of the studies reviewed above control for the impact of hailing from a rural community.Footnote 4

Although it is important to attend to the potential of omitted variable bias by inserting controls, such as urbanicity, we would like to caution readers against including controls that would not be theoretically expected to confound the effects of the treatment variable. The inclusion of such variables would have the potential to negatively impact the model in two ways. First, adding control variables to a regression that are correlated with other omitted predictors could introduce additional bias. If so, the coefficients of the newly added variables will not be accurate because they suffer from omitted variable bias also, due to their relation to other still excluded variables. Additionally, the inclusion of additional variables that are not significant predictors is likely to result in a loss of statistical efficiency and inflate standard errors. This will reduce the accuracy of all estimates in the model. Therefore, it is important to select control variables that are founded in the theoretical underpinnings of the model at hand. Absent knowledge of the true structural model of course taking and degree attainment in the population, it is impossible to know which of the course taking effects studies we reviewed provides the most accurate representation of the factors that predict college completion.

An additional issue with the aforementioned studies is that none employ strategies to eliminate the influence of unobservable factors on course taking and attainment. Some student characteristics may be difficult or impossible to obtain information about in observational datasets, but this does not change the fact that they are confounding factors (Cellini, 2008). Examples of potential unobservable factors in course taking effects research include a student’s enjoyment of the learning process and a student’s desire to undertake and persevere through challenges. It is likely that these unobservable factors contribute to student selection into high school courses and a student’s subsequent choice to attain a bachelor’s degree. However, none of the studies we examined that employ a standard regression approach accounted for a student’s intrinsic love of learning or ability to endure through difficulties; the failure to account for these unobserved factors may bias the estimates that result from these studies.

To minimize omitted variable and selection bias to make stronger causal claims, researchers have recently employed quasi-experimental methods to examine the link between high school course taking and educational attainment. Attewell and Domina (2008) use propensity score matching (PSM) to study the impact of high school curriculum on student outcomes (for an example of the use of PSM in education research, see Reynolds and DesJardins (2009)). PSM may be an improvement over standard regression techniques because it allows researchers to compare outcomes only among students who had similar characteristics before receiving a “treatment”—for example, a high school course or a series of courses—thereby potentially reducing the confounding effects of other observable factors. Attewell and Domina find that PSM estimates of course taking effects are generally smaller than those produced by previous studies, including Adelman’s, that are produced with standard regression. This suggests that a portion of the positive relationship observed between college preparatory courses and educational attainment in correlational studies may be due to the qualities of students who elect to take an intensive curriculum. However, as with all PSM studies, Attewell and Domina are unlikely to completely eliminate selection bias, as their propensity scores were based on a set of observable student background characteristics that may not adequately control for unobservable differences across students.

Altonji (1995) applied an instrumental variables approach in his study of high school curriculum effects on years of postsecondary education. Using data from the National Longitudinal Survey (NLS:72), he first estimates a standard OLS regression model, controlling for confounding student- and school-level factors. His results indicate that each additional year of high school math increases enrollment in postsecondary education by approximately one-quarter of a year. However, when he employs IV techniques using the average number of courses taken in a student’s high school as an instrument, his point estimates change: The effects of additional years of math coursework on degree attainment become minimal to nonexistent. Altonji’s results suggest that studies that fail to control for selection are upwardly biased. However, as Altonji notes, his IV is not optimal. The course taking behavior of students in specific high schools is likely related to unobserved characteristics of their communities, such as neighborhood or school district resources that in turn may influence the future educational outcomes of these students. Therefore, his IV estimates of course taking on years of postsecondary schooling may still be contaminated by selection bias. Including controls for community-level factors could help to mitigate this problem.

Many other researchers (mostly economists) have also employed instrumental variables to answer questions about postsecondary enrollment and attainment (Angrist and Krueger 1991; Card, 1995; Kane & Rouse, 1993; Lemieux & Card, 1998; Staiger and Stock 1997). To investigate the relationship between postsecondary attainment and earnings, for example, Card (1995) considers the distance from a student’s home to the nearest 2- or 4-year institution as an instrument for his or her likelihood of attending college. While his OLS estimates assert that those who attend college earn 7% more over a lifetime than those who do not, his IV model yields estimates closer to 13%—a difference of almost 50%.

Overview of the Empirical Example

Given the inconclusive results of prior studies, an important policy question remains unanswered: What is the causal effect of high school courses on college completion? To address this question, we focus our analysis on the effect of taking Algebra II on a student’s likelihood of earning a bachelor’s degree. We selected the Algebra II course taking margin because, in the hopes of better preparing students for college and career success, almost half of the states in the United States currently mandate that students complete Algebra II in order to earn a high school diploma (Achieve, 2011). Consequently, a large portion of the nation’s high school students stop taking math courses after taking Algebra II (Bozick and Ingels 2008; Dalton et al., 2007; Planty et al. 2007). Given the prominent role that Algebra II plays in educational policy, it is important to determine if this commonly mandated course improves student educational attainment. Previous research on the effects of specific math courses on degree attainment has been inconclusive, with earlier studies finding that taking Algebra II as the highest math course taken improves a student’s odds of degree attainment (Adelman, 1999; Rose & Betts, 2001) and a later study finding that it does not (Adelman, 2006). These inconclusive results may reflect changing standards for math preparation over time if courses higher than Algebra II have become necessary for college-level success (Adelman). Additionally, the inconclusive results could be caused by differences in the samples used and the degree of omitted variable and selection bias present in their estimates.

To determine the causal effect of taking Algebra II on degree attainment over time, we employ data from two nationally representative surveys conducted a decade apart by the National Center for Education Statistics (NCES): the National Education Longitudinal Study of 1988 (NELS:88) and the Education Longitudinal Study of 2002 (ELS:02). Both surveys contain detailed high school transcript information for survey respondents. NELS follows a cohort of students who were in the eighth grade in 1988 through their sophomore year in 1990, their senior year in high school in 1992, and into college and the labor market in 2000. This allows us to observe which students complete a bachelor’s degree in a reasonable time frame. ELS follows a cohort of students who were in the tenth grade in 2002. This cohort was issued follow-up surveys in their senior year of high school in 2004 and 2 years following high school graduation in 2006. Although ELS provides the most recent national data on high school student course taking, it does not contain information on bachelor’s degree attainment because NCES has not yet released the third follow-up survey data.Footnote 5 Therefore, we use persistence to the second year of college as a proxy for degree completion in the ELS data.

To address omitted variable and selection bias, we will conduct our analysis using an instrumental variables approach, to be discussed at length below. We will exploit the influence of local labor market conditions and youth labor laws early in a student’s high school career to account (instrument) for his or her willingness to attempt math courses at the Algebra II level. These local labor market conditions are unlikely to remain fixed as students persist through high school and college and are thus unlikely to impact a student’s ultimate educational attainment. In subsequent sections, we demonstrate how causal inferences can be made about course taking effects using an instrumental variables approach and local labor market conditions and youth labor laws as IVs. As a first step, we present a general introduction to concepts and terminology related to instrumental variable estimation approaches.

Instrumental Variables: Concepts and Terminology

Our goal is to determine whether taking Algebra II (or higher) has a causal effect on student postsecondary completion. However, a more utilitarian goal is to provide some guidance on the proper use of methods that will allow education researchers to make strong inferential statements about the effects of such “treatments” on student outcomes. If successful, we will provide higher education researchers with additional tools for their analytic “toolbox” so that their empirical work will be of the highest quality and able to inform policymakers about the likely effects of practices, interventions, and policies (e.g., high school curriculum standards) on student academic and labor market outcomes. The “wrench” we will add to the toolbox is known as instrumental variable estimation.

As noted earlier in the chapter, students take different levels of math courses while in high school and do so for a variety of reasons including differences in ability, motivation, and encouragement from others. The nonrandom assignment of students into courses presents the researcher with a challenge when attempting to determine the causal effect of a treatment (e.g., whether the student took Algebra II or higher or not) on an outcome (e.g., college completion) because observable (e.g., grades) and unobservable (e.g., motivation) factors may confound the typical multivariate analysis of the relationship between the outcome and the treatment. By employing an instrumental variable estimation strategy, we hope to mitigate this inferential problem.

Before diving into our investigation of the causal effect of taking Algebra II or higher on college completion, we will first discuss some important concepts and terminology related to making causal assertions using an instrumental variables approach. We will attempt to explain each of the concepts and terms using narrative, equations, and figures.

The Concept of a Counterfactual

Perhaps one of the most challenging issues in conducting causal research is determining the correct counterfactual—the group against which the outcomes of the treatment group (e.g., those who take Algebra II) are compared. Using a counterfactual allows researchers to think about the outcomes of those receiving treatment, had the treatment never occurred. In our case, the counterfactual helps researchers to explore the question, “what would the postsecondary outcomes of students who took Algebra II be had they not taken Algebra II?”

The concept of the counterfactual relies on the idea of potential outcomes. A potential outcome is defined as each of the possible outcomes of the dependent variable (e.g., whether or not a student completes college) in different states of the world—provided, of course, that observing different states of the world were possible. In our context, the “different states of the world” are whether or not the student takes Algebra II or higher or not.Footnote 6

Consider again the example of Grace and Adam. Grace, as you recall, takes Algebra II in high school, whereas Adam does not. The best counterfactual for these two students would be themselves: Grace takes Algebra II in high school, and her eventual college completion is measured. Assuming the invention of a time machine, the researcher turns back the clock to high school and Grace takes a lower-level math course instead of Algebra II, and the researcher measures whether she completes college or not. The same strategy could be used for Adam: He takes Consumer Math, the clock is turned back to high school, he takes Algebra II instead, and we measure whether he completes college or not. We would then be able to compare Grace’s and Adam’s outcomes (college completion) under both conditions: taking Algebra II and not taking Algebra II. Absent time travel, this scenario is impossible.

We can also discuss the concept of the counterfactual formally. Let the outcome for Grace be \( {Y}_{i}^{1}\)if the she is exposed to the treatment (e.g., Algebra II) and be \( {Y}_{i}^{0}\)if she is not (e.g., does not take Algebra II). Let T i be a dichotomous variable that equals 1 if Grace takes Algebra II:

or

The value \( ({Y}_{i}^{1}-{Y}_{i}^{0})\)is the causal effect of taking Algebra II. However, the fundamental problem of causal inference, as mentioned above, is that we cannot observe both of these values of Y (takes Algebra II and does not take Algebra II) for Grace or Adam (Angrist & Pischke, 2009; Holland, 1986; McCall & Bielby, 2012; Rubin, 1974). A student either takes Algebra II, allowing us to observe \( {Y}_{i}^{1}\)(which we would call the “factual”) but not \( {Y}_{i}^{0}\)(which we would call the “counterfactual”), or, if they do not take Algebra II, we are able to observe \( {Y}_{i}^{0}\)but not \( {Y}_{i}^{1}\).

Absent an experiment, a “naïve” solution to this problem is to compare the average value of Y for all of the students who take Algebra II to the average value of Y for those who do not:

However, it is demonstrable that

Each of the elements is defined as above. The first term in brackets on the right-hand side of Eq. 6.4 is the average causal effect of Algebra II on those who took Algebra II. The second bracketed term is the difference in what the average value of Y would have been had the treated remained untreated (e.g., those who took Algebra II had not taken it) and the average value of Y for the untreated. In other words, the second bracketed term shows the difference in outcomes between the treated and the untreated that is due to students’ background characteristics and other variables and not the treatment (Algebra II) itself. This second bracketed term represents selection bias, which will be discussed at greater length below (see McCall & Bielby, 2012, for additional details).

We can also think about the counterfactual as a missing data problem. That is, we have information about the effect of Algebra II on those who took it but are missing this information for those who did not. Conversely, we have information about the control condition for those who did not take Algebra II but are missing this information for those who did. This is depicted in Fig. 6.1.

The concept of the counterfactual as a missing data problem (Owing to Murnane & Willett, 2011)

Endogeneity (“The Selection Problem”) and Exogeneity

As noted above, high school students self-select into specific courses for a variety of reasons. Because the characteristics that lead to specific course selection are internal to the student, their selection into treatment (Algebra II) is endogenous. By this, we mean that a student’s choosing to take Algebra II is the result of his or her own action (or possibly the action of his or her teachers or parents) who exist within the system (in this case, the education system) being investigated (Murnane & Willett, 2011). Endogeneity (which, for our purposes, is a synonym for selection) hinders our ability to make causal assertions about the impact of a program or policy on a given outcome because it is unclear whether it is a student characteristic (observable or otherwise) that influences his or her outcome (college completion) or the treatment itself (Algebra II).

Consider our example in equation form:

where:

-

y = postsecondary completion (the outcome of interest)

-

x 1 = an exogenous control variable (e.g., parents’ education)

-

t i = whether a student takes Algebra II or not (the treatment)

-

e = error term

The betas \( ({\beta }_{0}\), \( {\beta }_{1}\), and \( {\beta }_{2})\) are parameters to be estimated—\( {\beta }_{0}\)represents the Y intercept, \( {\beta }_{1}\)is a coefficient for the relationship between our exogenous predictor and postsecondary completion, and \( {\beta }_{2}\)is a coefficient on whether a student takes Algebra II. In the absence of random assignment to math classes, it is likely that many student characteristics that are excluded from this regression (ability, motivation, encouragement from parents—which can be assumed to be included in the error term) are related to a student’s decision to take Algebra II. Therefore, ti is an endogenous variable, and its coefficient \(\left({\beta }_{2}\right)\) cannot be used to make causal claims about the relationship between Algebra II and college completion. Herein, we dub this the “naïve” statistical model because it does not account for the endogenous relationship between t i and y.

Endogeneity in the regressor of interest (whether a student takes Algebra II) can potentially bias the magnitude of its estimate (\( {\beta }_{2}\)). In Eq. 6.5 above, it is likely that \( {\beta }_{2}\)is too high—that the relationship between taking Algebra II and college completion is upwardly biased. Upward bias means that the relationship between Algebra II and college completion appears to be too strong. There are likely many factors other than taking Algebra II (ability, motivation, and encouragement) that may influence whether or not a student completes college. On the other hand, the estimate (\( {\beta }_{2}\)) will be biased downward if it underestimates the relationship that exists between taking Algebra II and college completion.

Exogeneity exists when assignment to treatment (taking Algebra II) happens through a mechanism that is outside the system being investigated: when a lottery, for example, or an otherwise random draw assigns students to a particular math class. Under this condition, assignment is unrelated to student characteristics, the opinions and/or encouragement of teachers and parents, and the characteristics of the math classes themselves. Exogenous variation, to continue with our example, would mean that students are assigned to take Algebra II or a lower-level math class in a way that has nothing to do with their ability, motivation, or how much encouragement they receive from their parents.

Consider Eq. (6.5) above, now assuming that students are assigned to treatment exogenously. Because students are randomly assigned to Algebra II or a lower-level math course, all of their observed and unobserved characteristics should, on average, be statistically identical. This means that we should have treatment and control groups that are identical on average. If so, any bias in the estimates of the effect of Algebra II on college completion will be eliminated—a stark difference from when assignment to treatment is endogenous—and should yield estimates of the relationship between the treatment (Algebra II) and the outcome (college completion) that are much more accurate.

Instrument

To address issues of endogeneity (selection) when attempting to make causal assertions about the relationship between taking Algebra II in high school and completing college, it may be useful to employ an instrumental variable (an “instrument” or an “IV”). An instrument is defined as a variable that is unrelated to the error term and related to the outcome only through the treatment variable. Again, consider Equation 6.5 above. An appropriate instrument must be unrelated to e (the error term) and related to y (postsecondary completion) only through ti (whether a student takes Algebra II). An instrument allows a researcher to minimize bias due to endogeneity by identifying a source of exogenous variation and uses this exogenous variation to determine the impact of a treatment, policy, or program (e.g., Algebra II) on an outcome (e.g., postsecondary completion).

Using our example, we consider both labor market conditions and youth labor laws during the student’s 10th grade year as instruments for their probability of taking Algebra II. While students are enrolled in high school, local labor market conditions may affect their college preparation decisions, and these decisions may subsequently alter their chances for college access/completion. For example, a strong local labor market when a student is in 10th grade may entice students to avoid a college preparatory curriculum, reasoning that many job opportunities will exist without a college education. On the other hand, an identical student facing a weaker labor market in 10th grade may be more likely to enroll in a college preparatory curriculum, as employment prospects will likely dim without a college education. Additionally, youth labor laws when a student is in high school may influence the amount of time he or she is able to work outside of school. These opportunities for work (or lack thereof) may also influence student decisions about taking (or not) college preparatory coursework, as they may choose to spend time working as opposed to focusing on more challenging coursework.

It is important to note that although the IV approach is an econometric method used by many researchers, there is considerable debate about the application of this methodology. We will discuss this debate below, as well as alternative approaches for making causal claims about the relationship between treatments and outcomes.

Historically, methodologists and researchers considered an instrument to be valid if it met the following two conditions:

-

1.

The exogeneity condition: The instrument must be correlated with Yi only through ti and must be uncorrelated with any omitted variables. The key assumption when using an IV is that the only way the instrument affects the outcome is through the treatment (Newhouse & McClellan, 1998).

-

2.

The relevance condition: The instrument must be correlated with ti, the treatment (Algebra II).

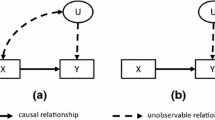

These relationships are depicted in the figure below (Fig. 6.2).

The relevance condition can be verified empirically by determining whether and, if so, how strongly the instrument is correlated with the policy variable of interest (in our case, whether students take Algebra II.) If a strong correlation exists, the relevance condition is met. The exogeneity condition, however, cannot be tested empirically because it is stated in terms of the relationship between the instrument and the population parameters. Population parameters cannot be observed, as researchers have access only to sample data. As such, it is impossible to investigate correlational relationships between the instrument and unobservable parameters. Therefore, this condition requires that researchers think about the potential relationships between the IV, the omitted variables, the treatment, and the outcome. In our running example, some questions to be asked might be the following: How do local labor markets impact college going among high school graduates? How do they impact the quality of the neighborhoods in which students live, a variable that may be omitted from the model? If a logical case can be made and defended, the exogeneity condition is considered to have been met as well. Absent random assignment, this assumption is more challenging to justify than the relevance assumption, and IV exogeneity is often contested among communities of scholars.

Methods to Employ the IV Framework

One can employ an instrumental variables approach using a variety of regression-based techniques, some of which will be discussed at length below. One very common method that researchers use is two-stage least squares (2SLS) regression. 2SLS is performed in two steps that happen sequentially: In the first stage, the main variable of interest (Algebra II) is regressed on the instrumental variable and any other variables that we think might help explain why students take Algebra II. The results of this regression yield a probability of taking Algebra II for all students in the sample. These predicted values are then used in place of the (in our case) dichotomous treatment variable (Algebra II) in a second stage. This and other methods for using IV will be discussed in much greater detail below, in light of the methods employed to explore our causal question of interest: What is the causal relationship between high school course taking and college going?Footnote 7

Data

Before entering into a discussion of the procedures for estimating IV models, we will briefly describe the data and computational methods we employed to apply and test different modeling approaches to IV analysis. The running example will employ data from two National Center for Education Statistics (NCES) datasets: the National Educational Longitudinal Survey of 1988 (NELS:88) and the Educational Longitudinal Survey of 2002 (ELS:02). These datasets provide nationally representative samples of students who are longitudinally tracked beginning in their eighth grade year. NELS:88 tracks students through high school and postsecondary education and into the workplace. ELS:02, the most currently available of the NCES longitudinal datasets, has collected and distributed its most recent survey 1.5 years after students were expected to complete high school. The data provides detailed information on students’ high school-level course taking in addition to a number of other academic preparation variables, demographic variables, and a range of postsecondary outcomes of interest. We leverage this detailed longitudinal data to construct a number of models testing the influence of high school-level course taking, specifically Algebra II, on student probabilities of obtaining a bachelor’s degree.

In our analyses, we focus on two particular outcomes. Our primary outcome of interest is bachelor’s degree attainment; however, this outcome is only available in the NELS:88 data because the most recently available wave of the ELS:02 data only interviewed students 1.5 years after their expected high school graduation date. Therefore, using the ELS:02 data, we use as a proxy for degree completion a variable indicating whether a student persisted from the first to second year of postsecondary education. We will reestimate this model when college completion data is available.

Our variable for bachelor’s degree attainment was constructed from the NELS Postsecondary Education Transcript Study (PETS) data file. The variable was coded as a dummy variable, “1” if a student attained a bachelor’s degree within 8 years of their expected high school graduation and “0” otherwise.

The first- to second-year persistence variable for ELS:02 was developed in two stages. First, a variable was created to indicate the first month, in 2004 or 2005, that a student was enrolled in a postsecondary institution. Then, a dummy variable was created to indicate if that student was enrolled in a postsecondary institution 12 months after their initial month of enrollment, coded “1” if the student was still enrolled 12 months later and “0” otherwise.

One concern with each of these dependent variables, as is a concern with any regression-based modeling technique, is that they are both dichotomous and therefore might not be appropriately estimated using techniques based on ordinary least squares (OLS; or “linear”) regression. While strong arguments have been made in favor of using OLS with dichotomous dependent variables (see Angrist & Pischke, 2009, p. 103) and we do so in this study, we also estimate some IV models using methods that deal with the nonlinearity when estimating dichotomous dependent variables.

The Endogenous Independent Variable

The independent variable of interest in our analysis is high school-level mathematics course taking, specifically whether or not a student took an Algebra II course (or higher) or not in high school. It is operationalized as a dummy variable, coded “1” if a student took more than 0.5 Carnegie units, equivalent to high school credits, in Algebra II while in high school and “0” otherwise.

As was discussed above, this variable is expected to be endogenously related to postsecondary persistence and degree attainment because students self-select into high school courses. In an attempt to account for this endogeneity, we employ instrumental variables in order to more accurately estimate the causal relationship between course taking and degree attainment. The selection of the instrumental variables employed is discussed in detail below.

Exogenous Independent Variables

A set of exogenous controls were incorporated in each model estimated. The inclusion of controls that are expected to significantly predict the outcome variable is an important aspect of reinforcing the exogeneity of the other variables in the model. If factors that are truly related to the dependent variable were excluded, the risk of omitted variable bias in the model estimates would be increased. Therefore, controls included in our models were selected based on their expected relationship with both the dependent variable of interest and the treatment variable. These controls include mathematical ability, measured as a student’s quartile ranking on the NCES-standardized high school mathematics exam (8th grade for NELS and 10th grade for ELS), race/ethnicity, mother’s level of education, and socioeconomic status quartile. State and birth year fixed effects were also included to account for impacts of policies that may differentially influence students’ decisions and/or outcomes based on age and/or state of residence. Each of these controls is included as a predictor in both the first and second stage equations, as will be discussed below. See Table 6.1 for descriptive statistics for variables included in our models.

Software and Syntax

To conduct this analysis, we chose to use the statistical program Stata. Stata is one of many statistical programs capable of performing the analyses conducted herein (e.g., SPSS, SAS, or R). However, Stata provides a number of preprogrammed instrumental variable modules that are readily accessible and accompanied by clearly written help files and interactive examples that provide a better gateway to IV modeling than might be available in other programs. Additionally, advanced programming options and Stata’s use of open source user-created routines allow for a great deal of flexibility in the number of approaches that can be applied.

Along with each of our analyses, we provide a set of annotated Stata syntax (see Appendix A) that provides step-by-step examples of the code that is necessary to estimate the IV models discussed below.

Assumptions of IV models

As is discussed above, the general objective of applying IV methods is to account for potential bias in traditional regression estimates that are due to the presence of endogeneity. Below, we provide a detailed discussion of a number of assumptions that IV models and instruments are required to meet in order to account for endogeneity and provide more accurate estimates. We begin by discussing tests for the presence of endogeneity in the model. We then move on to discuss the traditional two-assumption approach to IV modeling that dominated IV literature in econometrics for much of the twentieth century. Next, we introduce a relatively new five-assumption approach that acknowledges potential issues with relying only on the two-assumption approach and expands our thinking about the role of assumptions when estimating treatment effects. We then evaluate our empirical example using the five-assumption approach, thereby providing conceptual and empirical support (or not) about our ability to estimate the causal effect of Algebra II course taking on first- to second-year persistence and bachelor’s degree attainment.

Testing for Endogeneity

The application of IV modeling techniques is driven by the assumption that at least one of the independent variables in a model, here Algebra 2 course taking, is endogenous. When there is endogeneity present, naïve regression-based techniques (see Eq. (6.5)) produce inconsistent estimates of all coefficients (Wooldridge, 2002). However, employing IV techniques also results in a loss of statistical efficiency (i.e., inflation of standard errors) when compared to linear regression, so it is important to be certain that the variables that are thought to be endogenous are in fact so. If we knew the true population parameters were not endogenous, then the application of IV approaches would reduce efficiency without accounting for bias; thus, one would be better off to apply a simple (naïve) OLS regression.

There are a number of tests that can be applied to assess the endogeneity of explanatory variables. Many are made available through Stata’s estat endogenous postestimation command (see StataCorp, 2009, p. 757). Additionally, the estimation strategy of some IV approaches, namely, control function approaches (discussed in detail below), directly tests the endogeneity of the independent variable of interest (e.g., Algebra II). Conducting these tests is an essential step when applying the IV approach. If we find that Algebra II is in fact exogenous (in the population), then the use of an IV estimator would be inefficient, inflating our standard errors, without accounting for any potential bias from the more efficient OLS estimator. However, these endogeneity tests are sensitive to the strength of our instruments. If the instrumental variables are only weakly related to the treatment, there is a high potential to falsely reject the endogeneity assumption and assume that the treatment variable is exogenous. Therefore, it is always of primary importance to consider not only statistical tests but conceptual evidence when evaluating the endogeneity of a variable. While the statistical tests might not fully support the presence of endogeneity, this may be largely due to a lack of statistical power in the test, not a truly exogenous treatment variable.

The Two-Assumption Approach

Traditional conceptions of IV models (e.g., Cameron & Trivedi, 2005; Greene, 2011; Wooldridge, 2002) required that instrumental variables meet two assumptions in order to be considered valid. Assume the following simple linear regression:

If the researcher believes that t is endogenous, then the estimates of \( {\beta }_{0}\), \( {\beta }_{1}\), and \( {\beta }_{2}\)will be biased if standard OLS regression methods are employed. One way to remove this bias is to apply an IV method. To do so, we must find an instrument, z, that meets the following assumptions:

A1. Exclusion Restriction

This assumption requires that the instrumental variable is appropriately excluded from the estimation of the dependent variable of interest. When this assumption is satisfied, it guarantees that the instrument, z, only affects the dependent variable, y, through its effect on t.

More formally, there must be no correlation between z and e in Eq. (6.7):

This assumption is the basic requirement that all exogenous variables in Eq. (6.7) are required to meet. Additionally, the exclusion of z from Eq. (6.7) provides that z has zero effect on the dependent variable, y, when controlling for the effect of all other independent variables. Combining the lack of correlation between z and e and the exclusion of z from (6.7), assumption A1 guarantees that the only effect of z on y is through its effect on t.

A2. Nonzero Partial Correlation with Endogenous Variable

The second assumption requires that the instrument (z) has a measurable effect on the endogenous variable (t). To examine this relationship, the endogenous variable (t) is regressed on the instrument (z) and the other predictor variables (x 1) from Eq. (6.7) in what is referred to as the reduced form equation, below:

This assumption requires that θ 1 0. At the most basic level, this means that the instrument must be correlated with the endogenous variable, that is, that the coefficient on the IV (θ 1) in Equation (6.8) must be nonzero after controlling for all other exogenous variables (x 1) in the model. Meeting assumptions A1 and A2 is argued to ensure that the IV model is appropriately identified (see Wooldridge, 2002, p. 85) and the instrument is valid.

Although assumptions A1 and A2 have been used to judge whether an instrument is valid, advances in econometrics have driven an interest in applying IV models to estimate causal effects of endogenous variables (t) on dependent variables of interest (y). In order to accomplish this, the traditional IV model must be situated within a broader causal framework based on counterfactuals discussed above. This requires that IV models meet a set of five assumptions in order to estimate causal relationships.

The Five-Assumption Approach

An underlying assumption of the two-assumption model is that the effect of a treatment is the same for all individuals in the sample. No matter who the individual is that receives the treatment, the average influence of the treatment on their outcome of interest is expected to be the same. If this assumption holds, then we are able to estimate the average treatment effect (ATE) for all individuals in the sample. However, Angrist, Imbens and Rubin (1996) argue that treatment effects are likely to be heterogeneous, such that treatments will have differential effects on four different groups of individuals: always-takers, never-takers, defiers, and compliers. Always-takers and never-takers are unaffected by the instrument, such that they will always behave in the same way given a particular treatment. In our example, and using only the county-level employment (not the labor market laws IV) as an example of our instrument, always-taker students will always take Algebra II, whereas never-takers will never take Algebra II, regardless of local labor market conditions. Defiers behave in a manner that is opposite to expectations. Defiers would not take Algebra II when county-level unemployment rates were high but would take Algebra II when unemployment rates were low. Compliers behave according to expectations. When unemployment rates are high, they are more likely to take Algebra II, and when unemployment rates are low, they are less likely to take Algebra II, because they will be entering the labor market after high school instead of attending college.

Among these treatment groups, a causal IV model is only able to estimate the effect of the treatment on compliers, and this estimate is referred to as the local average treatment effect (LATE) (Angrist et al., 1996; Angrist & Pischke, 2009). To estimate the LATE, Angrist et al. argue that the traditional IV model must be embedded within a broader causal structural model referred to as the Rubin causal model (Holland, 1986). Our discussion earlier of causal effects and counterfactuals is a simplified version of the Rubin causal model. This model expands on the traditional two-assumption approach and employs a set of five assumptions that, when met, allow for the estimation of a causal LATE using an IV method. The five assumptions are:

A1b. Stable Unit Treatment Value Assumption (SUTVA)

This assumption requires that the influence of the treatment be the same for all individuals and that the treatment of one individual is not influenced by other individuals being treated. There are two primary concerns when evaluating SUTVA. First, Angrist et al. (1996) and Porter (2012) cite circumstances where groups of individuals are treated as a unit, as opposed to treatment to each individual independently, as possible violations of this assumption. For example, if we randomized students into treatment and control groups by classroom within a school, then we would expect that there might be interactions among teachers instructing the control and treatment group classes. These effects, which are often referred to as “spillovers,” alter the impact of the treatment and controls if the treatment or control teachers alter their administration of the treatment based on their contact with the other teachers.

The second concern deals with how the treatment itself is administered. The SUTVA requires that the implementation of the treatment must be consistent across all treatment groups. Using a clinical example, if the treatment is a drug administered in pill form, then each of the pills given to the treatment group must be exactly the same. If some pills had differing levels of chemicals than other pills, SUTVA would be violated. Therefore, we must consider how the administration of treatments may differ in order to evaluate our model with respect to A1b.

A2b. Random Assignment

This assumption requires that the distribution of the instrumental variable across individuals be comparable to what would be the case given random assignment. In the case of a dichotomous treatment, this can be described as each individual having an equal probability of being treated or untreated. More formally,

where Pr(t = 1) is the probability of being treated and Pr(t = 0) is the probability of not being treated. Any situation in which an individual would have an influence on their level of the instrument would violate this assumption. For example, a student’s college major (Pike, Hansen, & Lin, 2011) would not satisfy this assumption because the student plays a role in selecting the instrument.

A3b. Exclusion Restriction

This assumption parallels assumption A1 in the two-assumption approach from the previous section in that the instrument (z) needs to be uncorrelated with the error term (e) in the second stage equation (6.7). More plainly, assumption A3b requires that the instrument (z) is appropriately excluded from the second stage equation (6.7). As discussed above, this assumption ensures that the only effect that the instrument, z, has on the dependent variable, y, is through its effect on the endogenous independent variable, t, in the reduced form Eq. (6.8).

A4b. Nonzero Average Causal Effect of the Instrument on the Treatment

Also drawing from the two-assumption approach (the “relevance” condition), this assumption requires that there be a nonzero relationship, and preferably a strong relationship, between the instrumental variable and the endogenous independent (or treatment) variable, such that θ 1 = 0 in Eq. (6.8).

A5b. Monotonicity

Monotonicity assumes that the instrument, z, has a unidirectional effect on the endogenous variable, t. This requires that the relationship between the instrument, z, and the endogenous variable, t, meet one of the following criteria:

or

What is required for this to be the case is that the relationship between t and z, as represented by θ 2, must have only one sign, either positive or negative, for all individuals in the sample.

This assumption stems from our discussion of heterogeneous treatment effects from above. Angrist et al. (1996) describe four groups: always-takers, never-takers, compliers, and defiers. Always-takers and never-takers have predetermined patterns of behavior that are uninfluenced by the instrument. In our running example, always-takers will always take Algebra 2 and never-takers will never take it, and the instrument (local labor market conditions and/or labor laws) will have no influence on these students’ decision. Compliers’ and defiers’ behavior is, however, influenced by the instrument. Compliers will alter their behavior in the direction we would expect from the underlying theory. Using our running example, we would expect compliers’ probability of taking Algebra 2 to rise (fall) as the local unemployment rate increases (decreases). Defiers behave, however, in ways that do not conform to a priori expectations. Using our example, if defiers existed (and we do not believe they do in our case, to be explained in more detail below), we would expect that as the local unemployment rate increased (decreased), their probability of taking Algebra 2 would fall (rise). In order for the assumption of monotonicity to hold, defiers cannot exist because the influence of the instrument on the treatment would not be unidirectional.

In many cases, the assumption of no defiers is a reasonable one, because their behavior would be in contradiction to their own interests. Considering our empirical example, the behavior of a defier would decrease their expected wages and employment prospects. Students with more promising job prospects while in high school would not take advantage of them but instead invest more time in school, whereas students with worse employment prospects in high school would reduce their investment in schooling to increase work time at low-wage jobs or time looking for nonexistent jobs. In both cases, defiers reduce the potential utility they could obtain from the way they allocate their time.