Abstract

This paper reports an analysis of features of mathematics assessment items developed for the OECD’s Programme for International Student Assessment survey (PISA) in relation to a set of six mathematical competencies. These competencies have underpinned the PISA mathematics framework since the inception of the PISA survey; they have been used to drive mathematics curriculum and assessment review and reform in several countries; and the results of the study are therefore likely to be of interest to the broad mathematics education community.

We present a scheme used to describe this set of mathematical competencies, to quantify the extent to which solution of each assessment item calls for the activation of those competencies, and to investigate how the demand for activation of those competencies relates to the difficulty of the items. We find that the scheme can be used effectively, and that ratings of items according to their demand for activation of the competencies are highly predictive of the difficulty of the items.


1 Introduction

What are the factors that influence the difficulty of PISA mathematics survey items? The publication of data from the PISA 2003 survey (OECD, 2004), when mathematics was the major survey domain, has enabled a deep study of cognitive factors that influence the difficulty of mathematics items. The framework on which that survey was based (OECD, 2003) outlines a set of mathematical competencies originally described in the work of Mogens Niss and his Danish colleagues (see Niss, 2003; Niss & Hoejgaard, 2011). Such an understanding of item difficulty has the potential to guide the construction of new items to better assess the full range of the PISA mathematics scale, as well as to enhance the reporting of student performance associated with PISA assessments.

To the extent that these “Niss competencies” have resonance in various national curricula (e.g. in Denmark; see the official guidelines from the Ministry of Education: www.ug.dk/uddannelser/professionsbacheloruddannelse/enkeltfag), have been used to evaluate curriculum outcomes and even have acted as drivers of curriculum and assessment reform (e.g. in Germany; see Blum, Drueke-Noe, Hartung, & Köller, 2006, and in Catalonia, Spain; see Planas, 2010), an understanding of their influence on the difficulty of mathematics items will have far wider relevance than just within the PISA context, and will contribute more generally to an important area of knowledge in mathematics education.

The authors led an investigation that has extended over several years, beginning in October 2003. They built on earlier work aiming at understanding student achievement in mathematics developed by de Lange (1987), Niss (1999), and Neubrand et al. (2001). The investigation has focused on six mathematical competencies which are a re-configuration of the set of competencies which have been at the heart of the Mathematics Framework for PISA from the beginning (see OECD, 2003, 2006). These competencies describe the essential activities when solving mathematical problems and are regarded as necessary prerequisites for students to successfully engage in “making sense” of situations where mathematics might add to understanding and solutions. These six competencies were:

- Reasoning and argumentation
- Communication
- Modelling
- Representation
- Solving problems mathematically (referred to as Problem solving)
- Using symbolic, formal and technical language and operations (referred to as Symbols and formalism).

These competencies are not meant to be sharply disjoint. Rather, they overlap to a certain degree, and mostly they have to be activated jointly in the process of solving mathematical problems.

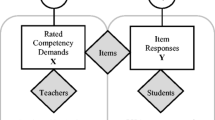

The initial investigation has consisted in developing operational definitions of these six competencies, and in describing four levels of demand for activation of each competency (see Sect. 2). PISA survey items have been analysed in relation to those definitions and level descriptions, by the application of a set of rating values to each item for each competency. The resulting ratings have then been analysed as predictor variables in a regression on the empirical difficulty of the items, derived from the PISA 2003 survey data. The item ratings have been found to be highly predictive of the difficulty of the items (see Sect. 3). In addition, statistical studies have been conducted examining other variables, such as the four PISA mathematical content strands (quantity, space and shape, change and relationships, and uncertainty), the PISA contexts in which the items are presented to students (personal, educational/occupational, public, scientific, and intra-mathematical), as well as the item formats themselves (various forms and combinations of multiple-choice, closed constructed-response, and open constructed-response items). None of these studies showed that these variables, acting singly or in combination with one another, explained significant proportions of the variation observed in item difficulty.

In this paper, we will present those competency definitions and level descriptions as well as the essential outcomes of the analysis conducted.

2 The Competency Related Variables

The material following in Table 2.1 contains the definitions and difficulty level descriptions of the six mathematical competencies used in this investigation so far. Each of the six competencies has an operational definition bounding what constitutes the competency as it might appear in PISA mathematics assessment items and then four described levels (labeled as levels 0, 1, 2, and 3) of each variable.

3 Analysis of the Application of the MEG Item Difficulty Framework

The following analyses provide an examination of the efficacy of the MEG Item Difficulty Framework in explaining the variability present in student performance on the 48 items common to the PISA 2003 and PISA 2006 mathematics assessments. We examine this efficacy from a number of perspectives: correlation of variable code average values, coder consistency, percentage of variance explained, consistency across assessments, and factor structure.

3.1 Psychometric Quality

3.1.1 Correlation of Variable Average Code Values

Table 2.2 contains the results of a correlation of the coding data associated with each of the six competency-based variables. Note that in this and subsequent tables, the competency labels are abbreviated as follows: REA for Reasoning and Argumentation; PS for Problem Solving; MOD for Modelling; COM for Communication; REP for Representation; SYM for Symbols and Formalism. The prefix ‘AVG’ indicates the average code value across the eight coders on the relevant competency.
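As an illustration of how such a table could be produced, the following minimal sketch averages the codes across coders for each item and then correlates the averaged variables pairwise. The function names, the data layout, and the tiny data set are assumptions made for this example; they are not the study's data or software.

```python
from math import sqrt


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def average_codes(codes):
    """codes[competency] is a list of items; each item is the list of codes
    (0-3) awarded by the coders. Returns the per-item average code (AVG...)."""
    return {
        competency: [sum(item) / len(item) for item in items]
        for competency, items in codes.items()
    }


def correlation_matrix(avg):
    """Pairwise correlations between the averaged competency variables."""
    return {(a, b): pearson(avg[a], avg[b]) for a in avg for b in avg}


# Hypothetical codes: 4 items, 3 coders, 2 competencies (illustration only).
codes = {
    "REA": [[0, 1, 1], [1, 1, 2], [2, 2, 3], [3, 3, 3]],
    "SYM": [[0, 0, 1], [2, 1, 1], [1, 2, 2], [3, 2, 3]],
}
avg = average_codes(codes)
matrix = correlation_matrix(avg)
print(round(matrix[("REA", "SYM")], 3))
```

In the study itself each competency would have 48 items and eight coders per item; the layout above scales to that case without change.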

3.1.2 Coder Consistency

Coder consistency can be approached from two perspectives. The first is the degree to which coders’ actual coding of the items correlated with the codes they had given the same items in an earlier coding 2 years previously. This is an analysis of intra-coder consistency. The second is the degree to which the eight coders tended to award the same code to a given item on each of the competencies. This is an analysis of inter-coder consistency.

Intra-coder consistency. Within-coder data only exist for four of the eight coders in our sample. In addition, there have been minor changes in the description of the competency-related codes for some of the variables that may have slightly altered the use of the codes during the intervening 2 years. These cautions notwithstanding, correlations were computed for the six competency-related variables for each of the coders for whom there were complete data for the two separate codings of the 48 items. The results are in Table 2.3. Note that all of the observed correlations were significantly different from 0 at the 0.05 level.
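A minimal sketch of an intra-coder check follows. It correlates one coder's two codings of the same items and converts the correlation into a t statistic with n − 2 degrees of freedom. The source does not say which significance test was used, so the t-test here is an assumption, and the eight data points are hypothetical. For the 48 items of the study the two-sided 0.05 critical value would be approximately 2.01 (46 degrees of freedom); the example uses the value for 6 degrees of freedom.

```python
from math import sqrt


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def t_statistic(r, n):
    """t statistic for testing H0: correlation = 0, with n - 2 degrees of freedom."""
    return r * sqrt((n - 2) / (1 - r * r))


# Hypothetical codes from one coder on one competency, two occasions.
first_coding = [0, 1, 1, 2, 3, 2, 1, 0]
second_coding = [0, 1, 2, 2, 3, 1, 1, 0]

T_CRITICAL_DF6 = 2.447  # two-sided 0.05 critical value for 6 degrees of freedom

r = pearson(first_coding, second_coding)
t = t_statistic(r, len(first_coding))
significant = abs(t) > T_CRITICAL_DF6
print(round(r, 3), round(t, 3), significant)
```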

There was an interesting pattern in the intra-coder correlations. The coders are numbered in ascending order from most consistent to least consistent. This ordering also matches the ordering of the amount of experience the four coders had in coding with the MEG Item Difficulty Framework. This suggests, perhaps, that coders become more consistent with increased familiarity with the framework and its use, and that training in the use of the framework will be an important issue for the future.

Inter-coder consistency. A second approach to coder consistency lies in examining the degree to which the eight coders actually give the same code to an item for a given competency. In essence, this is asking to what degree the eight individual MEG coders give the same numerical code to an item for any one of the six competency-related variables. This analysis can be approached from a variety of perspectives. Historically, most researchers have been satisfied with finding the Pearson product moment correlation of the coders over the set of items related to a given competency area. More recently, researchers dealing with content coding and curricular studies have shifted toward the use of Cronbach’s α along with more emphasis on individual item and coder patterns of behaviour (Cronbach, Gleser, Nanda, & Rajaratnam, 1972; Shrout & Fleiss, 1979; von Eye & Mun, 2005).

The data showing the codes each of the eight individual coders awarded for each item have been collected and analysed to determine the consistency of the eight coders for each of the six competency-based variables. Table 2.4 contains a variety of information points for each item. In addition to Cronbach’s α value for each competency-based variable, data are provided showing the distribution of ranges between minimum and maximum codes given to individual items for the competency variable in the coding. Note that a range of 3 for an individual item indicates that it was coded as being at both Level 0 and Level 3 by different coders.

The examination of the values of Cronbach’s α for the six competency-based variables shows considerable consistency with the exception of the Reasoning and argumentation variable. An examination of the individual item and response data in general did not immediately indicate a reason for the lower consistency value observed.
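For readers unfamiliar with the statistic, the following minimal sketch computes Cronbach’s α for one competency, treating the coders as the "parts" of the measurement and the assessment items as the cases. This layout is an assumption about how α was applied here, and the small data sets are hypothetical.

```python
def variance(values):
    """Sample variance (n - 1 denominator)."""
    n = len(values)
    mean = sum(values) / n
    return sum((v - mean) ** 2 for v in values) / (n - 1)


def cronbach_alpha(codes):
    """codes[i][j] is the code awarded to item i by coder j.

    alpha = k / (k - 1) * (1 - sum of coder variances / variance of item totals)
    where k is the number of coders.
    """
    k = len(codes[0])
    coder_variances = [variance([row[j] for row in codes]) for j in range(k)]
    total_variance = variance([sum(row) for row in codes])
    return k / (k - 1) * (1 - sum(coder_variances) / total_variance)


# Hypothetical data: 4 items coded by 3 coders.
perfect_agreement = [[0, 0, 0], [1, 1, 1], [3, 3, 3], [2, 2, 2]]
partial_agreement = [[0, 1, 0], [1, 1, 2], [3, 2, 3], [2, 2, 1]]

alpha_perfect = cronbach_alpha(perfect_agreement)
alpha_partial = cronbach_alpha(partial_agreement)
print(round(alpha_perfect, 3), round(alpha_partial, 3))
```

When all coders agree on every item, α equals 1; disagreement among coders lowers the value.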

The next row in the table indicates the average coding value given across each of the 48 items in each of the competency-based variables’ actual coding for this study.

The remaining rows of the table provide a great deal of information about how the 48 items were coded within each of the competency-based variables. The term “Code Range” in the left-hand column refers to the range of codes, that is, the value of the maximum code awarded minus the value of the minimum code. For example, a code range of 0 would indicate that all eight coders agreed on the code awarded to an item. A code range of 1 would indicate that all coders were awarding one of two adjacent codes. A code range of 3, however, would indicate that at least one coder had awarded a code of 0 while another coder had awarded a code of 3 to an item. Items for which this occurred were flagged for extra analysis. The coders were numbered C1–C8 and the final row in the table indicates which coders were “outliers” in the coding of the individual item receiving a code range of 3.
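The code-range tabulation described above can be sketched as follows. The rule used to name "outlier" coders (a code at least two levels away from the median code for the item) is purely an assumption for illustration, since the source does not define how outliers were identified; the data are hypothetical.

```python
from statistics import median


def code_range(item_codes):
    """Maximum code minus minimum code awarded to one item."""
    return max(item_codes) - min(item_codes)


def range_distribution(codes):
    """Count how many items have a code range of 0, 1, 2 and 3."""
    counts = {r: 0 for r in range(4)}
    for item_codes in codes:
        counts[code_range(item_codes)] += 1
    return counts


def outlier_coders(item_codes, threshold=2):
    """Hypothetical rule: coders whose code is at least `threshold` levels
    away from the median code for the item. Coders are labelled C1, C2, ..."""
    mid = median(item_codes)
    return [
        f"C{j + 1}"
        for j, code in enumerate(item_codes)
        if abs(code - mid) >= threshold
    ]


# Hypothetical codes for 4 items from 8 coders on one competency.
codes = [
    [1, 1, 1, 1, 1, 1, 1, 1],
    [0, 1, 1, 0, 1, 1, 0, 1],
    [2, 2, 1, 3, 2, 2, 2, 1],
    [0, 0, 0, 1, 0, 0, 3, 0],
]
distribution = range_distribution(codes)
flagged = {
    i: outlier_coders(row) for i, row in enumerate(codes) if code_range(row) == 3
}
print(distribution, flagged)
```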

3.2 Results of Difficulty Analyses

3.2.1 Predicting Variance Explained

The degree to which the six competency related variables add to the explanation of variance in the item difficulty scores associated with student performance for the PISA 2003 and PISA 2006 mathematics surveys was analysed using first the “best subsets” approach, and then through a separate multivariate regression analysis of the data.

Best subset regressions: For the PISA 2003 data, the implementation of the “best subset” regression approach, which is sometimes called the “all possible regressions” approach, resulted in the information shown in Table 2.5 (Chatterjee & Price, 1977; Draper & Smith, 1966).

The values in each row show data associated with various sets of predictor variables and the percentage of the variance in PISA 2003 item difficulty that they predict. This percentage is given by the value in the Adjusted R-squared column. The data show the best predictor variables as the number of variables used in the model goes from 1 to 6.
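The "all possible regressions" idea can be sketched in a few lines: fit an ordinary least squares model for every subset of predictors, compute the adjusted R-squared, and keep the best subset of each size. The sketch below uses only the standard library, and the predictor values and difficulty values are hypothetical; it does not reproduce the software or data used in the study.

```python
from itertools import combinations


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def r_squared(columns, y):
    """R-squared of an OLS fit of y on the predictor columns plus an intercept."""
    n = len(y)
    design = [[1.0] + [col[i] for col in columns] for i in range(n)]
    p = len(design[0])
    xtx = [[sum(row[a] * row[b] for row in design) for b in range(p)] for a in range(p)]
    xty = [sum(row[a] * y[i] for i, row in enumerate(design)) for a in range(p)]
    beta = solve(xtx, xty)
    fitted = [sum(bj * xj for bj, xj in zip(beta, row)) for row in design]
    mean_y = sum(y) / n
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n, p):
    """Adjust R-squared for the number of predictors p and sample size n."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)


def best_subsets(predictors, y):
    """Return {size: (predictor names, adjusted R-squared)} for the best subset of each size."""
    best = {}
    for size in range(1, len(predictors) + 1):
        for names in combinations(predictors, size):
            r2 = r_squared([predictors[k] for k in names], y)
            adj = adjusted_r_squared(r2, len(y), size)
            if size not in best or adj > best[size][1]:
                best[size] = (names, adj)
    return best


# Hypothetical average codes for 8 items and hypothetical difficulty values.
predictors = {
    "REA": [0, 1, 2, 3, 1, 2, 0, 3],
    "SYM": [1, 0, 2, 1, 3, 2, 0, 1],
    "COM": [2, 2, 0, 1, 1, 3, 0, 1],
}
difficulty = [0.6, 0.9, 3.05, 3.45, 2.6, 2.9, 0.05, 3.45]

best = best_subsets(predictors, difficulty)
for size, (names, adj) in best.items():
    print(size, names, round(adj, 3))
```

Because the adjustment penalises each additional predictor, the adjusted value can fall when a variable that adds little explanatory power enters the model.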

An examination of the table provides a number of observations. First, the percentage of variance predicted (Adjusted R-squared value) increases up to a four-variable model and then decreases slightly thereafter for the best models with five or six predictor variables. The size of the gain in predictive value at each step will be discussed later.

It is interesting that the single best competency predictor is the Reasoning and argumentation variable. As best models with more variables are built, the additional predictor variables enter in the order Symbols and formalism, Problem solving, Communication, Modelling, and Representation. The latter two variables do not appear to add to the explanatory power achieved using only the first four.

Table 2.6 shows the same analysis conducted using the PISA 2006 item difficulty estimates. The results are very similar in that the four-variable model appears the best in numerical value and the first four variables entering are the same: Reasoning and argumentation, Symbols and formalism, Problem solving, and Communication. However, there is a slight difference in the order of the entrance of the remaining two variables into the predictor models. Here the next is Representation, followed then by Modelling. However, the data suggest that the addition of these latter two variables does not improve the prediction based on the four variables common to both the PISA 2003 and PISA 2006 data.

Overall, these best subset regression analyses indicate that the four variables of Reasoning and argumentation, Symbols and formalism, Problem solving, and Communication provide the best structure for maximizing the prediction of item difficulty in PISA as defined by item logit values. Additional analysis of the relative contributions of each of these will appear in the next analyses.

Multiple regressions: Table 2.7 contains the results of a stepwise regression employing all possible competency-based variables for the prediction of the PISA 2003 item difficulty logit values. The algorithm was structured to select the best single predictor, and then add the next best single predictor that would add a significant amount of explanatory power. This process iterates, adding variables to the regression equation until the point when the addition of any other variable to the regression equation would no longer make a statistically significant increase in the amount of item difficulty variance explained.
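A stopping rule of this kind is commonly implemented as a partial F-test ("F-to-enter"). The source does not state which test was used, so the following formula is offered only as an assumption about the usual form. With $n$ items, a current model containing $p$ predictors plus an intercept, and residual sums of squares $\mathrm{RSS}_{p}$ and $\mathrm{RSS}_{p+1}$ before and after adding a candidate variable, the statistic is

$$
F = \frac{\mathrm{RSS}_{p} - \mathrm{RSS}_{p+1}}{\mathrm{RSS}_{p+1} / (n - p - 2)},
$$

which is compared with the critical value of an $F$ distribution with $1$ and $n - p - 2$ degrees of freedom. The candidate with the largest $F$ enters if that value is significant; otherwise the procedure stops.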

This regression equation indicates that the three competency-based variables, in order of explanatory power, are Reasoning and argumentation, Symbols and formalism, and Problem solving. This model predicts 70.5% of the variability in the PISA item difficulty data, when the R-squared value is adjusted. While the addition of the variable Communication would have pushed the R-squared value to 71.8%, the gain would not have been statistically significant over the variance explained by this three-variable model.

Carrying out the same stepwise regression approach using the PISA 2006 item difficulty logit data as the dependent variable, we obtain the results shown in Table 2.8. As in the case of the PISA 2003 data, the same three variables, Reasoning and argumentation, Symbols and formalism, and Problem solving enter in the same order. In this case, the three variables explain 71.4% of the variability in the item difficulty values when the adjusted R-squared value is computed.

A comparison of the coefficients shows that there is no difference between the models developed from the PISA 2003 and PISA 2006 data. In like manner, there is no difference in the order in which the three statistically significant predictor variables enter into the equations. In both cases, the Durbin-Watson statistic and other residual diagnostics indicate that these models are sound and free of common biasing factors sometimes found in regression model building.
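For reference, the Durbin-Watson statistic is the sum of squared differences between successive residuals divided by the sum of squared residuals. Values near 2 suggest no first-order autocorrelation, values towards 0 suggest positive autocorrelation, and values towards 4 suggest negative autocorrelation. The sketch below uses hypothetical residuals, not residuals from the study's models.

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic for a sequence of regression residuals."""
    numerator = sum(
        (residuals[i] - residuals[i - 1]) ** 2 for i in range(1, len(residuals))
    )
    denominator = sum(e * e for e in residuals)
    return numerator / denominator


# Hypothetical residual sequences illustrating the two extremes.
alternating = [1.0, -1.0, 1.0, -1.0]  # strong negative autocorrelation
constant = [1.0, 1.0, 1.0, 1.0]       # strong positive autocorrelation

dw_alternating = durbin_watson(alternating)
dw_constant = durbin_watson(constant)
print(dw_alternating, dw_constant)
```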

3.2.2 Factor Analysis

A factor analysis was conducted to examine the structure of the space spanned by the six competency-based variables. A principal components factor analysis of the correlation matrix of the competency-based variable scores for the 48 items revealed the findings shown in Table 2.9. An examination of the data indicates that there were two factors having eigenvalues greater than one. Given that each variable contributes a value of 1 to the total of the eigenvalues, only factors having eigenvalues greater than one are considered significant and retained for further study.
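The retention rule described above can be illustrated with a minimal sketch that extracts the eigenvalues of a correlation matrix using the cyclic Jacobi method and keeps those greater than one. The Jacobi routine is a stand-in chosen so that the example needs only the standard library; the source does not say what software was used, and the 3 × 3 correlation matrix is hypothetical.

```python
from math import atan, cos, pi, sin


def jacobi_eigenvalues(matrix, sweeps=50, tol=1e-18):
    """Eigenvalues of a symmetric matrix by cyclic Jacobi rotations, largest first."""
    a = [row[:] for row in matrix]
    n = len(a)
    for _ in range(sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                diff = a[q][q] - a[p][p]
                # Rotation angle that zeroes the (p, q) element.
                theta = pi / 4 if diff == 0 else 0.5 * atan(2 * a[p][q] / diff)
                c, s = cos(theta), sin(theta)
                for k in range(n):  # rotate columns p and q
                    akp, akq = a[k][p], a[k][q]
                    a[k][p] = c * akp - s * akq
                    a[k][q] = s * akp + c * akq
                for k in range(n):  # rotate rows p and q
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k] = c * apk - s * aqk
                    a[q][k] = s * apk + c * aqk
    return sorted((a[i][i] for i in range(n)), reverse=True)


# Hypothetical correlation matrix for three variables (illustration only).
correlations = [
    [1.0, 0.6, 0.3],
    [0.6, 1.0, 0.2],
    [0.3, 0.2, 1.0],
]
eigenvalues = jacobi_eigenvalues(correlations)
retained = [value for value in eigenvalues if value > 1.0]
# Each variable contributes 1 to the total, so the share of variance
# described by a component is its eigenvalue divided by the number of variables.
shares = [value / len(correlations) for value in eigenvalues]
print([round(v, 3) for v in eigenvalues], len(retained))
```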

An examination of the percentage of variance described by the first two factors shows that they account for a total of 64% of the variance in the codings. Factor 1’s strongest loadings are Reasoning and argumentation, Modelling, Problem solving, and Symbols and formalism. Given the values, this might be considered a balanced factor similar to a generalised academic demand factor. Factor 2’s strongest loadings are Symbols and formalism, decreased by Representation and Problem solving. This second factor might be considered as describing increased item demand related to the requirement to decode and deal with Symbols and formalism and Communication in the absence of Problem solving and the demand to interpret and manipulate Representations. One might liken this to adding demand for reading and symbol manipulation as it occurs without enacting problem solving strategies or multiple representations of mathematical concepts or operations.

An important remark: It might seem that a certain subset of those six competencies will already serve all purposes and that the others are unnecessary. However, a subset of competencies proved to be sufficient only for explaining item difficulty and only in the particular case of PISA tests. In other cases, other subsets might have more explanatory power. More importantly, the competencies serve a much broader purpose than only explaining item difficulty. For the most important purpose, that is describing proper mathematical activities and thus formulating the essential aims that students ought to achieve through school mathematics, all competencies are indispensable.

4 Present Status of the Study

The foregoing data provide sufficiently strong evidence of the role played by the mathematical competencies, as defined in Table 2.1, in influencing variability in item difficulty on the PISA mathematics survey items. At present, illustrations of the way the competencies play out to influence difficulty in particular items are being developed, along with an elaborated coding manual for researchers who have not been involved in the development of the MEG model. This coding manual will be central in the next stage of the study, as it will be used with researchers who are unfamiliar with it and with the coding of PISA items, but who are familiar with coding structures. They will be asked to code the 48 PISA items and their results will be compared with those of the MEG members.

The planned next steps are as follows. Based on this experience and revisions that may result from observing these coders and their work, a broader field test shall be conducted where new individuals, familiar with the PISA project, will be asked to use the coding instruction manual without any other assistance to code the 48 items. Their coding results and written comments shall again be used to further the development of the model and manual for either one more round of field testing or release as a PISA technical report.

Two further developments of this study might be to investigate the extent to which the scheme could be used to predict the difficulty of newly developed PISA mathematics items; and to investigate its applicability to other (non-PISA) mathematics items.

Curriculum statements in many countries reflect the importance of the competencies on which this study has focused. It can be expected that the relationship between cognitive demand for the activation of these competencies and the empirical difficulty of the mathematical tasks that call for such activation, whether in the PISA context or in other contexts, will be of deep interest to teachers, teacher educators and others involved in mathematics education around the world.

References

Blum, W., Drueke-Noe, C., Hartung, R., & Köller, O. (2006). Bildungsstandards Mathematik: konkret. Berlin: Cornelsen-Scriptor.

Chatterjee, S., & Price, B. (1977). Regression Analysis by Example. New York: Wiley.

Cronbach, L. J., Gleser, G. C., Nanda, H., & Rajaratnam, N. (1972). The dependability of behavioral measurements. New York: Wiley.

De Lange, J. (1987). Mathematics, Insight and Meaning. Utrecht: CD-Press.

Draper, N. R., & Smith, H. (1966). Applied Regression Analysis. New York: Wiley.

Neubrand, M., Biehler, R., Blum, W., Cohors-Fresenborg, E., Flade, L., Knoche, N., et al. (2001). Grundlagen der Ergänzung des internationalen OECD/PISA-Mathematik-Tests in der deutschen Zusatzerhebung. Zentralblatt für Didaktik der Mathematik, 33(2), 45–59.

Niss, M. (1999). Kompetencer og uddannelsesbeskrivelse. Uddannelse, 9, 21–29.

Niss, M. (2003). Mathematical Competencies and the Learning of Mathematics: The Danish KOM Project. In A. Gagatsis & S. Papastavridis (Eds.), 3rd Mediterranean Conference on Mathematical Education (pp. 115–124). Athens: The Hellenic Mathematical Society.

Niss, M., & Hoejgaard, T. (Eds.). (2011). Competencies and mathematical learning. Ideas and inspiration for the development of mathematics teaching and learning in Denmark. English edition, October 2011 (IMFUFAtekst no. 485). Roskilde: Roskilde University.

OECD. (2003). The PISA 2003 Assessment Framework: Mathematics, Reading, Science and Problem Solving Knowledge and Skills. Paris: Directorate for Education, OECD.

OECD. (2004). Learning for Tomorrow's World – First Results from PISA 2003. Paris: Directorate for Education, OECD.

OECD. (2006). Assessing Scientific, Reading and Mathematical Literacy: A Framework for PISA 2006. Paris: Directorate for Education, OECD.

Planas, N. (2010). Pensar i comunicar matemàtiques. Barcelona: Fundació Prepedagogic.

Shrout, P. E., & Fleiss, J. L. (1979). Intraclass Correlations: Uses in Assessing Rater Reliability. Psychological Bulletin, 86(2), 420–428.

Von Eye, A., & Mun, E. Y. (2005). Analyzing rater agreement: manifest variable methods. Mahwah: Lawrence Erlbaum.

www.ug.dk/uddannelser/professionsbacheloruddannelse/enkeltfag. Visited 19 May 2011.


© 2013 Springer Science+Business Media Dordrecht


Turner, R., Dossey, J., Blum, W., Niss, M. (2013). Using Mathematical Competencies to Predict Item Difficulty in PISA: A MEG Study. In: Prenzel, M., Kobarg, M., Schöps, K., Rönnebeck, S. (eds) Research on PISA. Springer, Dordrecht. https://doi.org/10.1007/978-94-007-4458-5_2


DOI: https://doi.org/10.1007/978-94-007-4458-5_2


Publisher Name: Springer, Dordrecht

Print ISBN: 978-94-007-4457-8

Online ISBN: 978-94-007-4458-5

eBook Packages: Humanities, Social Sciences and Law; Education (R0)