Abstract

Satellite-based remote sensing applications collect large volumes of image data, of which hyperspectral images are one particular type. Hyperspectral images are acquired over a very large number of wavelengths by high-resolution instruments on board a satellite or airborne vehicle and are then sent to a ground station for further processing. Compressing hyperspectral images reduces the on-board memory requirement, the communication channel capacity needed, and the download time. Compression algorithms can be either lossless or lossy. The purpose of this paper is to review a number of compression techniques employed for onsite processing of hyperspectral image data in order to reduce the transmission overhead. The theory of hyperspectral images and the compression techniques applied to them are reviewed, with emphasis on recent research developments. Recent research on video compression techniques for hyperspectral imaging (HSI) is also discussed.

1 Introduction

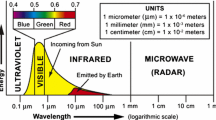

Spectral imaging is the study of partial or complete spectral information collected at every location in an image plane. This spectral information can be characterized by the range and resolution of the spectrum and by the number, width, and adjacency of bands. Based on these distinctions, we have different terms, namely multilayer imaging, multispectral imaging, super spectral imaging, and hyperspectral imaging (HSI). Spectral images are often represented as an image cube, a type of data cube. Spectral imaging enlarges the size of a pixel until it includes an area for which a spectral response can be obtained for the surface cover to be discriminated. The pixels need not be placed immediately adjacent to each other in order to facilitate identification of the pixel contents, as the identification of a pixel’s contents can be based on the spectral response of that pixel only. This greatly reduces the number of pixels needed to represent a given area.

A hyperspectral image can be regarded as a stack of individual images of the same spatial scene. Each such image represents the scene viewed in a narrow portion of the electromagnetic spectrum [1], as depicted in Fig. 1. These individual images are referred to as spectral bands. Hyperspectral images typically consist of more than 200 spectral bands; the voluminous amounts of data comprising hyperspectral images make them good candidates for data compression. In HSI, each pixel records the intensity of the light reflected by a specified area at different wavelengths, with the spectrum divided into a number of consecutive bands. Hyperspectral images are represented as a three-dimensional array, or image cube [2], as shown in Fig. 2, where each pixel is a vector having one component for each spectral band.

Hyperspectral images that provide high-resolution spectral information are useful for many remote sensing applications, but they generate very large image data sets. Hence, the capture and onward transport of large hyperspectral image data sets call for efficient processing, storage, and transmission capabilities. One way to address this problem is through image compression.
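To give a sense of the data volume involved, the following sketch computes the raw size of an uncompressed cube and extracts the spectral vector of one pixel. The scene dimensions (512 × 614 pixels, 224 bands, 16-bit samples), the band-by-band storage layout, and the function names are illustrative assumptions, not figures taken from the works reviewed here.

```python
def cube_size_bytes(rows, cols, bands, bits_per_sample):
    """Raw size in bytes of an uncompressed cube (rounded up to whole bytes)."""
    total_bits = rows * cols * bands * bits_per_sample
    return (total_bits + 7) // 8


def pixel_spectrum(cube, row, col):
    """Spectral vector of one pixel.

    `cube` is assumed to be stored band by band: cube[b][r][c] is the
    sample of band b at spatial position (r, c).
    """
    return [band[row][col] for band in cube]


# Assumed scene dimensions, for illustration only.
size = cube_size_bytes(rows=512, cols=614, bands=224, bits_per_sample=16)
print(f"{size / 2**20:.1f} MiB")  # 134.3 MiB

# A tiny 3-band, 2 x 2 cube: each pixel is a vector with one value per band.
tiny = [
    [[10, 11], [12, 13]],  # band 0
    [[20, 21], [22, 23]],  # band 1
    [[30, 31], [32, 33]],  # band 2
]
print(pixel_spectrum(tiny, row=1, col=0))  # [12, 22, 32]
```

Even under these modest assumptions a single scene exceeds 100 MiB, which is why on-board storage and downlink capacity become the limiting factors.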

2 Conventional Compression Algorithms

Compression algorithms take digital files as input; the input may be an image, a text, or a sound file. The algorithm constructs an efficient encoded representation using as few bits as possible, so that the data can be transmitted with minimum usage of bandwidth and reconstructed at the destination with minimum loss of information. Depending on the quality of reconstruction desired, compression algorithms can be classified as lossless or lossy.

In lossless compression schemes, the image reconstructed after decompression must be an exact replica of the original image. Lossless algorithms strive to transmit the image data without any loss of information. Due to this constraint, only a modest amount of compression is possible. In general, lossless compression is employed for character data, numerical data, icons, and executable components.

Lossy algorithms, on the other hand, are based on the presumption that it is not necessary to transmit the image file in its entirety, as not all parts of the image are significant. The extraneous or insignificant parts of the image can be removed without affecting its visual impact. In addition, redundant information need not be transmitted and can be discarded completely. Lossy compression algorithms therefore provide a higher degree of compression, albeit with some loss of information and degradation in image quality. For this reason, lossy compression has been extensively employed for multimedia files and has been a trigger for the immense popularity of multimedia. This is possible because the human visual and auditory systems are insensitive to certain kinds and levels of distortion. Thus, selective loss of information can be used to significantly enhance the compression performance of lossy algorithms.
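The distinction between the two classes can be illustrated with a minimal sketch. It uses Python's standard `zlib` module as a stand-in lossless coder and a uniform quantizer as the lossy step; the test signal, the step size, and the function names are assumptions made for illustration and do not come from any of the algorithms reviewed later.

```python
import zlib


def lossless_roundtrip(samples):
    """Compress 8-bit samples with zlib and restore them exactly."""
    packed = zlib.compress(bytes(samples), 9)
    restored = list(zlib.decompress(packed))
    return packed, restored


def lossy_roundtrip(samples, step):
    """Quantize 8-bit samples to multiples of `step` before compressing.

    Only the quantization index is stored, so the decoder can recover
    each sample only to within about half a step.
    """
    indices = bytes(s // step for s in samples)
    packed = zlib.compress(indices, 9)
    restored = [i * step + step // 2 for i in zlib.decompress(packed)]
    return packed, restored


# Assumed test signal: a slow ramp with a small periodic disturbance.
samples = [100 + i // 16 + (i * 7) % 3 for i in range(2048)]

packed, exact = lossless_roundtrip(samples)
print(len(samples), len(packed), exact == samples)

packed_q, approx = lossy_roundtrip(samples, step=8)
worst = max(abs(a - b) for a, b in zip(samples, approx))
print(len(packed_q), worst)
```

The lossless path reproduces every sample, whereas the lossy path bounds the error by half the quantization step and typically yields a smaller encoded size in exchange.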

3 Classification of Compression Algorithms

Some of the important classifications [3] of compression algorithms are as follows:

A. Statistical (SI)

The idea of statistical coding, or the statistical approach, is to use statistical information to replace a fixed-size code for symbols or patterns with a variable-size code that is, on average, shorter. Generally, the most common patterns are replaced with shorter codes and the least common ones with longer codes.

B. Dictionary (DI)

Dictionary approaches build a list of frequently occurring words and phrases and associate an index with each of them. During compression, the words and phrases are replaced with their respective indices.

C. Pattern finding (PF)

Pattern-finding approaches seek to find and extract regular patterns from the file and perform compression based on these patterns. The most common pattern-finding approach, run-length coding, takes consecutive symbols or patterns (runs) that recur in the source file and replaces them with an 8-bit ASCII code pair. This code pair can describe either the symbol that is being repeated together with the number of times it occurs, or a sequence of non-repeating symbols.

D. Approximation (AP)

The approximation approach employs quantization. Certain computations are applied to a given set of data to arrive at approximations, and a simpler form of the data is generated.

E. Programmable solutions (PR)

Programmable solutions entail the creation of an entirely new programming language for encoding the data, thereby reducing the file size.

F. Wavelet solutions (WA)

A wavelet-based compression solution creates an approximation of the data by adding together a number of basic patterns, known as wavelets, that are weighted by different coefficients. These coefficients replace the original patterns and make the input file compact. Cosine functions, as used in the discrete cosine transform, are the most prevalent basis patterns of this kind, although strictly speaking a cosine is not a wavelet because it is not localized.

G. Adaptive solutions (AD)

Adaptive solutions try to adapt to local conditions in an input file. Certain patterns may be found only at the beginning, others in the middle, and others only at the end. Adaptive solutions adjust accordingly while performing compression.
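As a concrete instance of the pattern-finding class, the following is a minimal run-length coding sketch. It implements only the (symbol, count) form of the code pair; the literal-sequence form mentioned above is omitted, and capping the count at 255 so that it fits in one byte is an assumption of this sketch.

```python
def rle_encode(data):
    """Encode bytes as a list of (symbol, count) pairs, with count <= 255."""
    runs = []
    for value in data:
        if runs and runs[-1][0] == value and runs[-1][1] < 255:
            # Extend the current run.
            runs[-1] = (value, runs[-1][1] + 1)
        else:
            # Start a new run (new symbol, or the count byte is full).
            runs.append((value, 1))
    return runs


def rle_decode(runs):
    """Expand (symbol, count) pairs back into the original bytes."""
    return bytes(value for value, count in runs for _ in range(count))


encoded = rle_encode(b"aaaabbbcc")
print(encoded)              # [(97, 4), (98, 3), (99, 2)]
print(rle_decode(encoded))  # b'aaaabbbcc'
```

Run-length coding pays off only when long runs are present; on data without repeats every symbol costs two values instead of one.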

In general, dictionary-based compression algorithms are better suited for text data, whereas statistical methods are better suited for image data. Some statistical algorithms, such as Huffman coding and arithmetic coding, are used for text compression too. However, whenever a file contains fairly long repeated words or phrases, dictionary-based algorithms outperform Huffman coding and arithmetic coding. Dictionary schemes fare worse when there are no long repeated words or phrases. Adaptive techniques are generally used when the amount of overhead in a file has to be reduced. Statistical methods usually ship a table summarizing the statistical information at the beginning of a file. Adaptive methods can reduce this overhead, in some cases, because they often do not ship any additional data. Most statistical approaches can be made adaptive through one simple idea: instead of building a statistical model for all of the characters or tags in a file, the model is constructed for a small group and updated frequently. The programmable approach entails compressing data with executable files, mostly in situations where the data are well structured. It is not suited for audio files.
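The statistical approach and the code table it has to ship can be sketched with Huffman coding, which is named above. This is a minimal illustration built on Python's `heapq` and `collections.Counter`; the function name and the tie-breaking order are choices made for this sketch.

```python
import heapq
from collections import Counter


def huffman_code(text):
    """Return a dict mapping each symbol in `text` to its Huffman bit string."""
    freq = Counter(text)
    if not freq:
        return {}
    if len(freq) == 1:
        # A single distinct symbol still needs one bit per occurrence.
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, unique tie-breaker, {symbol: code so far}).
    heap = [(w, i, {s: ""}) for i, (s, w) in enumerate(sorted(freq.items()))]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        w1, _, low = heapq.heappop(heap)
        w2, _, high = heapq.heappop(heap)
        # Merge the two lightest subtrees, prefixing their codes with 0 and 1.
        merged = {s: "0" + c for s, c in low.items()}
        merged.update({s: "1" + c for s, c in high.items()})
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]


table = huffman_code("aaaabbc")
encoded = "".join(table[s] for s in "aaaabbc")
print(table)
print(len(encoded), "bits instead of", 8 * len("aaaabbc"))
```

The `table` dictionary is the side information a non-adaptive statistical coder would have to transmit ahead of the data; an adaptive coder rebuilds an equivalent model on both sides as symbols arrive and so avoids shipping it.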

4 Compression Techniques for Hyperspectral Images

A typical compression algorithm utilizes statistical structure in the image data set. In hyperspectral images, two types of correlation are possible. One is spatial correlation that exists between adjacent pixels in the same band, and the other is spectral correlation that exists between pixels in adjacent bands. We have considered only spectral correlation-based studies for our review.
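The benefit of exploiting spectral correlation can be sketched by comparing the first-order entropy of a band with the entropy of its difference from the previous band. The two bands below are synthetic and deliberately idealized (the second band is the first plus a small, nearly constant offset); they are assumptions for illustration, not measured data.

```python
import math
from collections import Counter


def entropy_bits(values):
    """First-order (zeroth-context) entropy in bits per sample."""
    counts = Counter(values)
    n = len(values)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def interband_residual(prev_band, cur_band):
    """Predict each sample by the co-located sample of the previous band."""
    return [c - p for p, c in zip(prev_band, cur_band)]


# Synthetic, idealized bands stored as flat lists of samples.
prev_band = [(37 * i) % 251 for i in range(1000)]
cur_band = [p + 3 + (i % 2) for i, p in enumerate(prev_band)]

residual = interband_residual(prev_band, cur_band)
print(f"band entropy:     {entropy_bits(cur_band):.2f} bits/sample")
print(f"residual entropy: {entropy_bits(residual):.2f} bits/sample")
```

Real bands are far less perfectly correlated, but the same effect, a residual that is much cheaper to entropy-code than the band itself, is what the interband predictors reviewed below rely on.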

4.1 Lossless Compression Techniques for Hyperspectral Imaging

In HSI, considerable research has been done on lossless compression techniques. In this section, we describe the various techniques employed and the results obtained.

Keyan Wang et al. employ adaptive edge-based prediction in [4]. Their high-performance compression algorithm exploits the increased affinity between pixels that lie along the edges in an image. The algorithm uses three different types of predictors for different modes of prediction. Intraband prediction is done using an improved median predictor (IMP) that can detect diagonal edges. An adaptive edge-based predictor (AEP), which takes the spectral redundancy into account, is used for interband prediction. For a band that does not have any prediction mode, the pixels are entropy coded. This algorithm shows an improvement in the lossless compression ratio for both the standard airborne visible/infrared imaging spectrometer (AVIRIS) 1997 hyperspectral images and the newer Consultative Committee for Space Data Systems (CCSDS) test images.

Qiang Zhang et al. discuss a new double-random projection method in [5] that uses randomized dimensionality reduction techniques to capture global correlation structures efficiently, followed by residual encoding. The method extends randomized matrix decomposition methods to achieve rapid lossless compression and reconstruction of HSI data. The authors show empirically that the entropy of the residual image decreases significantly for HSI data, and they expect conventional entropy-based methods for integer coding to perform well on these low-entropy residuals. The paper concludes that integrating advanced residual coding algorithms with the randomized algorithm is an important topic for future research.

A transform-based lossless compression method for hyperspectral images, inspired by Shapiro’s (1993) embedded zerotree wavelet (EZW) algorithm, is presented by Kai-jen Cheng et al. in [6]. The proposed method employs a hybrid transform that combines an integer Karhunen–Loève transform (KLT) with an integer discrete wavelet transform (DWT). The method was applied to AVIRIS images and compared with other state-of-the-art image compression techniques. The proposed lossless compression algorithm is shown to have higher efficiency and a higher compression ratio than other similar algorithms.

Nor Rizuan Mat Noor et al. propose an algorithm in [7] that performs lossless hyperspectral image compression by employing the integer KLT, an integer approximation of the KLT. The paper explores the effect of single-bit errors on the performance of the integer KLT. The algorithm has low complexity and is capable of detecting and correcting a single-bit error.

Changguo Li et al. discuss in [8] how their algorithm exploits both interband and intraband statistical correlations and, in turn, gives superior compression performance compared with existing classical algorithms. In this paper, linear prediction is combined with gradient-adjusted prediction (GAP), and an interband version of GAP is proposed.

Jinwei Song et al. in [9] propose a lossless compression algorithm based on a recursive least squares (RLS) filter. They show that this algorithm achieves state-of-the-art performance with relatively low complexity. The paper also looks at a number of conventional lossless compression algorithms for red–green–blue images, such as JPEG-LS, Diff. JPEG, and clustered differential pulse code modulation.

Jarno Mielikainen in [10] makes use of lookup tables to propose an algorithm for hyperspectral image compression. By using interband correlation as a means of prediction, this low-complexity algorithm achieves a good compression ratio, which makes it highly suitable for onboard image compression.

Lin Bai et al. present in [11] a context prediction-based lossless compression algorithm for hyperspectral images. The algorithm performs linear prediction, followed by 3D context prediction and then arithmetic coding. It exhibits low complexity and a compression ratio of 3.01, which is better than that of many state-of-the-art algorithms.

Aaron B. Kiely et al. compare in [12] the compression performance obtained on standard AVIRIS raw hyperspectral images, which do not contain appreciable calibration-induced artifacts, with that obtained on images containing calibration-induced artifacts, and conclude that raw images achieve worse compression performance than processed images.
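The idea of using a lookup table for interband prediction can be sketched as follows. This is a simplified illustration written for this review, in the spirit of the approach in [10] rather than a reproduction of it: the table is indexed by the previous-band value and stores the current-band value most recently seen alongside it, and the fallback to the co-located previous-band sample when no entry exists is an assumption of this sketch.

```python
def lut_residuals(prev_band, cur_band):
    """Prediction residuals of cur_band using a lookup table keyed on prev_band."""
    lut = {}
    residuals = []
    for p, c in zip(prev_band, cur_band):
        # Predict with the current-band value last seen next to the same
        # previous-band value; fall back to p if there is no entry yet.
        prediction = lut.get(p, p)
        residuals.append(c - prediction)
        lut[p] = c
    return residuals


def lut_reconstruct(prev_band, residuals):
    """Invert lut_residuals: the decoder rebuilds the same table as it goes."""
    lut = {}
    cur_band = []
    for p, r in zip(prev_band, residuals):
        c = lut.get(p, p) + r
        cur_band.append(c)
        lut[p] = c
    return cur_band


prev_band = [5, 5, 7, 5, 7, 9]
cur_band = [50, 50, 71, 51, 71, 90]
res = lut_residuals(prev_band, cur_band)
print(res)                                           # [45, 0, 64, 1, 0, 81]
print(lut_reconstruct(prev_band, res) == cur_band)   # True
```

Because the decoder updates its table from already-decoded samples only, no side information is needed and the scheme remains lossless; the residuals would then be passed to an entropy coder.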

4.2 Lossy Compression Techniques for Hyperspectral Imaging

Lucana Santos et al. in [13] address the urgent need to define new hardware architectures for implementing hyperspectral image compression algorithms on board satellites. Their work demonstrates the use of graphics processing units (GPUs) to greatly increase computation speed for parallel tasks and data. The paper also describes the parallelization strategy used, discusses solutions to problems likely to be encountered when accelerating hyperspectral compression algorithms, and sheds light on the effects of various configuration parameters on the compression algorithms. In [14], Lucana Santos et al. propose an architecture that speeds up the compression task by implementing a parallel algorithm for onboard lossy hyperspectral image compression on a GPU. The algorithm uses block-based operation and provides an optimized GPU implementation that incurs negligible overhead in comparison with the original single-threaded version. Barbara Penna et al. in [15] propose a lossy compression algorithm that employs a model of anomalous pixels; it uses a hybrid of a spectral KLT and spatial JPEG 2000 and achieves better rate-distortion performance. It can be employed for both coding and decoding of images.

4.3 Lossless and Lossy Compression Techniques for Hyperspectral Imaging

Yongjian Nian et al. discuss distributed source coding (DSC) compression algorithms for both lossy and lossless compression in [16]. The approach uses a regression model to improve the performance of the distributed lossless compression algorithm and optimal scalar quantization for distributed lossy compression. The proposed algorithm offers compression performance competitive with that of other similar algorithms. The encoder has very low complexity and is hence well suited for onboard compression (Table 1).

5 Comparative Study of Hyperspectral Compression Algorithms

6 Video Compression Algorithms

Video compression algorithms have gained widespread popularity on account of their simplicity, cost, and interoperability. Efforts have been made to explore the feasibility of employing these algorithms for the compression of hyperspectral images. Table 2 summarizes some of these algorithms. Timothy S. Wilkinson et al. compare in [17] various video-based coding techniques that can be applied to HSI. Zixiang Xiong discusses the compression of video based on DSC principles in [18]. Lucana Santos et al. in [19] evaluate the performance of the H.264/AVC video coding standard for lossy compression of hyperspectral images and conclude that it can reduce the future design complexity of such encoders.

7 Conclusion

Although a wide variety of compression algorithms is available, there is no single algorithmic solution that is universally applicable. Non-adaptive algorithms do not modify their operations based on the characteristics of the input, whereas adaptive algorithms take the special characteristics of the data into consideration and modify their operations accordingly. Symmetric algorithms use the same procedure for both compression and decompression, applied in reverse order, whereas non-symmetric ones use different procedures for compression and decompression. Researchers mostly combine a number of the algorithms mentioned in Sect. 3 to arrive at a hybrid algorithm that is best suited to their imaging application. Commercially available software packages do the same. Most of the papers considered for this study employ statistical or wavelet-based compression algorithms, or a combination of both. In addition, the algorithms proposed in most of the studies are lossless and focus on encoding schemes only. Further, video compression algorithms can reduce the design complexity of hyperspectral image data compression algorithms.

References

1. Canada Centre for Remote Sensing, Fundamentals of Remote Sensing. Remote Sensing Tutorial (2013)

2. http://commons.wikimedia.org/wiki/File:HyperspectralCube.jpg

3. P. Wayner, Data Compression for Real Programmers (Morgan Kaufmann Inc., Los Altos, 1999)

4. K. Wang, L. Wang, H. Liao, J. Song, Y. Li, Lossless compression of hyperspectral images using adaptive edge-based prediction. Proceedings of SPIE 8871, Satellite Data Compression, Communications, and Processing IX, 887105 (2013). doi:10.1117/12.2022426

5. Q. Zhang, V.P. Pauca, R. Plemmons, Randomized methods in lossless compression of hyperspectral data. J. Appl. Remote Sens. 7(1), 074599–1 (2013)

6. K.-J. Cheng, J. Dill, Hyperspectral images lossless compression using the 3D binary EZW algorithm. in Proceedings of SPIE 8655, Image Processing: Algorithms and Systems XI, vol. 8655, ed. by K.O. Egiazarian, S.S. Agaian, A.P. Gotchev (Burlingame, California, 2013), p. 8655. doi:10.1117/12.2002820

7. N. Rizuan, M. Noor, T. Vladimirova, Parallelised fault-tolerant integer KLT implementation for lossless hyperspectral image compression on board satellites. 2013 NASA/ESA Conference on Adaptive Hardware and Systems (AHS 2013)

8. C. Li, K. Guo, Lossless compression of hyperspectral images using interband gradient adjusted prediction. 4th IEEE International Conference on Software Engineering and Service Science (ICSESS 2013), 23–25 May 2013. ISBN:978-1-4673-5000-6/13. doi:10.1109/ICSESS.2013.6615408

9. J. Song, Z. Zhang, X. Chen, Lossless compression of hyperspectral imagery via RLS filter. Electron. Lett. 49(16) (2013)

10. J. Mielikainen, Lossless compression of hyperspectral images using lookup tables. IEEE Signal Process. Lett. 13(3), 157–160 (2006)

11. L. Bai, M. He, Y. Dai, Lossless compression of hyperspectral images based on 3D context prediction. 3rd IEEE Conference on Industrial Electronics and Applications (2008), pp. 1845–1848. ISBN:978-1-4244-1717-9

12. A.B. Kiely, M.A. Klimesh, Exploiting calibration-induced artifacts in lossless compression of hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 47(8), 2672–2678 (2009)

13. L. Santos, E. Magli, R. Vitulli, A. Núñez, J.F. López, R. Sarmiento, Lossy hyperspectral image compression on a graphics processing unit: parallelization strategy and performance evaluation. J. Appl. Remote Sens. doi:10.1117/1.JRS.7.074599

14. L. Santos, E. Magli, R. Vitulli, J.F. López, R. Sarmiento, Highly-parallel GPU architecture for lossy hyperspectral image compression. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 6(2), 670–681 (2013)

15. B. Penna, T. Tillo, E. Magli, G. Olmo, Hyperspectral image compression employing a model of anomalous pixels. IEEE Geosci. Remote Sens. Lett. 4(4), 664 (2007)

16. Y. Nian, M. He, J. Wan, Low-complexity compression algorithm for hyperspectral images based on distributed source coding. Math. Probl. Eng., Article ID 825673 (2013)

17. T.S. Wilkinson, V.D. Vaughn, Application of video based coding to hyperspectral imagery. Proceedings of SPIE 2821, Hyperspectral Remote Sensing and Applications, vol. 44 (1996). doi:10.1117/12.257183

18. Z. Xiong, Video compression based on distributed source coding principles. Conference Record of the Forty-Third Asilomar Conference on Signals, Systems and Computers (2009)

19. L. Santos, G.M. Callicó, J.F. Lopez, R. Sarmiento, Performance evaluation of the H.264/AVC video coding standard for lossy hyperspectral image compression. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 5(2), 451–461 (2012). doi:10.1109/JSTARS.2011.2173906

© 2015 Springer India

Babu, K.S., Ramachandran, V., Thyagharajan, K.K., Santhosh, G. (2015). Hyperspectral Image Compression Algorithms—A Review. In: Suresh, L., Dash, S., Panigrahi, B. (eds) Artificial Intelligence and Evolutionary Algorithms in Engineering Systems. Advances in Intelligent Systems and Computing, vol 325. Springer, New Delhi. https://doi.org/10.1007/978-81-322-2135-7_15

DOI: https://doi.org/10.1007/978-81-322-2135-7_15

Publisher Name: Springer, New Delhi

Print ISBN: 978-81-322-2134-0

Online ISBN: 978-81-322-2135-7
