Abstract

Recently, a new lattice basis reduction notion, called diagonal reduction, was proposed for lattice-reduction-aided detection (LRAD) of multiinput multioutput (MIMO) systems. In this paper, we improve the efficiency of the diagonal reduction algorithm by using the fast Givens transformations. The technique of the fast Givens is applicable to a family of LLL-type lattice reduction methods to improve efficiency. Also, in this paper, we investigate dual diagonal reduction and derive an upper bound of the proximity factors for a family of dual reduction aided successive interference cancelation (SIC) decoding. Our upper bound not only extends an existing bound for dual LLL reduction to a family of dual reduction methods, but also improves the existing bound.

973 Program Project Under Grant 2010CB327900.

National Natural Science Foundation of China Under Grant 11271084.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Lattice basis reduction plays an important role in the detection of wireless multiple-input multiple-output (MIMO) systems. For detection problems of lattice type, the optimal maximum-likelihood (ML) decoding can be modeled as the closest vector problem (CVP) [1, 16], which has been proved to be NP-hard [2]. Although many algorithms, like the sphere decoding algorithm [5, 14], can solve CVP exactly, the complexity of these algorithms increases exponentially with the number of transmit antennas [1, 5, 6]. Thus, such optimal solvers are infeasible for real-time systems, where timing is critical. To satisfy the time constraint, many suboptimal solvers with polynomial complexity, like the successive interference cancelation (SIC) decoding, have been proposed [3, 12]. However, the suboptimal detectors may suffer from heavy performance loss at a low signal-to-noise ratio (SNR). It has been found that lattice reduction, used as an efficient preprocessor, has the potential to achieve high performance for suboptimal decoding algorithms. Recently, many reduction algorithms, such as the Lenstra-Lenstra-Lovász (LLL) algorithm [7], effective LLL algorithm [10], partial LLL algorithm [11, 17], and diagonal reduction algorithm [19], have been proposed for SIC decoding. It is proved in [9, 18] that SIC decoding aided by the above reduction notions can achieve the same receive diversity order as the infinite lattice decoding (ILD).

Of all the aforementioned lattice reduction algorithms, the diagonal reduction algorithm is the most efficient one. From our observation [19], the total computation of the diagonal reduction is dominated by the computation of the Givens rotations. Thus, in this paper, we propose to improve the efficiency of the diagonal reduction by replacing the Givens rotation with the more efficient and mathematically equivalent fast Givens transformation [4, p. 218]. The improvement is achieved by substantially reducing the number of multiplication operations required, because two entries of the 2-by-2 fast Givens matrix equal 1. Moreover, the fast Givens technique is general in that it can be incorporated into all the LLL-type lattice reduction methods to enhance performance.

Also, we investigate the basis reduction for dual lattices. In [9], the LLL and effective LLL algorithms for dual lattices are presented. In this paper, we investigate the diagonal reduction for dual lattices and prove that the dual basis of a diagonal reduced basis is also diagonal reduced. In addition, we derive an upper bound for the proximity factors of a family of dual LLL-type reduction aided SIC decoding. Our upper bound not only extends an existing bound for LLL reduction in [9] to a family of reduction methods, but also improves the existing one.

The rest of the paper is organized as follows. In Sect. 2, we briefly introduce the systems model and review the diagonal reduction algorithm. The new algorithm using the fast Givens is given in Sect. 3. Section 4 presents the diagonal reduction for dual lattices and our new upper bound for the proximity factors. In Sect. 5, we demonstrate our simulation results.

Notations: \(\mathbf {B}^\mathrm{T}\), \(\mathbf {B}^{\dag }\), and \(\det (\mathbf {B})\) denote the transpose, the Moore-Penrose inverse, and the determinant of a matrix \(\mathbf {B}\) respectively, \(\mathfrak {R}(z)\) and \(\mathfrak {I}(z)\) the real and imaginary parts of a complex number \(z\), \(\lfloor a \rceil \) the integer nearest to a real number \(a\).

2 Lattice Basis Reduction

2.1 System Model

Consider a MIMO system consisting of \(n_T\) transmit antennas and \(m_T\) receive antennas. The relationship between the \(n_T\,\times \,1\) transmitted signal vector \(\mathbf {x}\) and the \(m_T\,\times \,1\) received signal vector \(\mathbf {y}\) is given by

where \(\mathbf {H}\), \(\mathbf {y}, \mathbf {n}\) represent the channel matrix, the received and additive noise signals, respectively. In general, the entries of both \(\mathbf {H}\) and \(\mathbf {n}\) are assumed to be complex-valued independently and identically distributed (i.i.d.) Gaussian variables. Treating the real and imaginary parts of (1) separately, an equivalent real-valued system of doubled size can be obtained:

where

Given a MIMO system modeled as (2), the optimum ML decoding is equivalent to the following CVP:

where the constellation \(\fancyscript{A}\) is of lattice type. Unfortunately, CVP has been proved to be NP-hard [2], and all existing algorithms for solving (3) have an exponential complexity with the lattice dimension \(n\) [5, 6]. Recently, lattice-reduction-aided SIC decoding turned out to be extremely promising, since its bit-error-rate (BER) performance can effectively approximate the ML decoding with a complexity of only \(O(n^3)\) operations [9, 15].

2.2 Diagonal Reduction Algorithm

In this section, we first introduce some concepts of lattices and the SIC decoding, then we describe the diagonal reduction method [19].

Given a matrix \(\mathbf {B}\in \mathbb {R}^{m\,\times \,n}\) (\(n\le m\)) of full column rank, then a lattice generated by \(\mathbf {B}\) is defined by \( L(\mathbf {B}) = \{ \mathbf {B}\mathbf {z} : \mathbf {z} \in \mathbb {Z}^n \}\). The columns of \(\mathbf {B}\) form a basis for the lattice \(L(\mathbf {B})\). An integer matrix \(\mathbf {Z}\in \mathbb {Z}^{n\,\times \,n}\) is called unimodular if \(| \det (\mathbf {Z}) |=1\). The columns of a matrix \(\mathbf {B}'\) can form a basis for \(L(\mathbf {B})\) if and only if there exists a unimodular matrix \(\mathbf {Z}\) such that \(\mathbf {B}'=\mathbf {B}\mathbf {Z}\). The volume of \(L(\mathbf {B})\) is defined as \(vol (L(\mathbf {B}))=\sqrt{\det (\mathbf {B}^\mathrm{T}\mathbf {B})}\), which is independent of the choice of basis. Let \(\lambda (L)\) be the Euclidean length of the shortest nonzero vector in a lattice \(L\), then it is well known that \(\lambda (L)/ \hbox {vol}(L)^{1/n}\) is upper bounded over all \(n\)-dimension lattices \(L\), and the Hermite’s constant \(\gamma _n\) is defined as the supremum of \(\lambda (L)^2/ \hbox {vol}(L)^{2/n}\) over all \(n\)-dimension lattices. Finding the exact value of \(\gamma _n\) is very difficult. The exact value of \(\gamma _n\) is only known for \(1\le n \le 8\) and \(n=24\) [13, p. 33]. For an arbitrary dimension \(n\), an upper bound of the Hermite’s constant is given in [13, p. 35]:

A lattice reduction algorithm finds a unimodular matrix \(\mathbf {Z}\) for a given \(\mathbf {B}\) such that the columns of \(\mathbf {B}\mathbf {Z}\) are reasonably short. Lattice reduction has now become a powerful tool for enhancing the performance of suboptimal MIMO detectors, since it can significantly improve the orthogonality of the channel matrix.

Given a lattice generator matrix \(\mathbf {B}\in \mathbb {R}^{m\,\times \,n}\) and its QR decomposition \(\mathbf {B}=\mathbf {QR}\), where \(\mathbf {Q}\in \mathbb {R}^{m\,\times \,n}\) has orthonormal columns and \(\mathbf {R}\in \mathbb {R}^{n\,\times \,n}\) is upper triangular. From [8, 17], the efficiency of sphere decoding and the performance of SIC decoding is determined by the arrangement of the diagonal elements of \(\mathbf {R}\). Based on this fact, various reduction notions, such as the LLL reduction [7], effective LLL reduction [10], partial LLL reduction [11, 17], and diagonal reduction [19], have been proposed. Among all the aforementioned reduction notions, the diagonal reduction is the weakest, consequently, the least computationally demanding.

Definition 1

(Diagonal reduction [19]) A basis matrix \(\mathbf {B}\in \mathbb {R}^{m\,\times \,n}\) is said to be diagonal reduced with the parameter \(\omega \) (\(1/4<\omega <1\)), if the entries \(r_{i,j}\) of the upper triangular factor \(\mathbf {R}\) in its QR decomposition \(\mathbf {B}=\mathbf {QR}\) satisfy

for all \(1< k \le n\), where \(\mu _k = \lfloor r_{k-1,k}/r_{k-1,k-1} \rceil \).

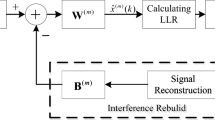

From the above definition, diagonal reduction only imposes one simple constraint on the diagonal entries of \(\mathbf {R}\). However, it is proved in [19] that diagonal-reduction-aided SIC decoding has identical performance as LLL-reduction-aided SIC decoding. A generic implementation of diagonal reduction can be found in Fig. 1.

Diagonal reduction algorithm (DR) [19]

3 Diagonal Reduction Using Fast Givens

From Fig. 1, the computational cost of the diagonal reduction algorithm includes two parts: the size-reduction (lines 7–8) and the Givens rotation (lines 11–13). The simulation results in [19] indicate that the overall complexity of the algorithm is dominated by the Givens rotations as the lattice dimension \(n\) increases. Thus, we propose the use of the fast Givens transformation in place of the Givens rotations to speed up the diagonal reduction algorithm.

Like the Givens rotation, the fast Given can be used to introduce zeros into selected positions. Specifically, given a lattice generator matrix \(\mathbf {B}\), the fast Givens transformation is based on the following decomposition:

where \(\mathbf {D}=\mathrm{diag}(d_i)\) is a positive diagonal matrix, \(\mathbf {F} \mathbf {D}^{-1/2}\) represents the orthogonal factor in the QR decomposition of \(\mathbf {B}\), and \(\mathbf {D}^{-1/2} \mathbf {R}\) represents the upper triangular factor.

How can the fast Givens introduce zeros? In the 2-by-2 case, given \(\mathbf {x} = [x_1 , x_2]^\mathrm{T}\) and the corresponding diagonal elements \(d_1, d_2 > 0\), we first compute

When \(\gamma \le 1\), we have the type 1 fast Givens:

and update \(d_1\) and \(d_2\):

When \(\gamma > 1\), setting

we have the type 2 fast Givens:

and update \(d_1\) and \(d_2\):

Then it can be verified that

and

is orthogonal.

In our fast Givens-based diagonal reduction algorithm, all the transformations are based on the decomposition (6). In the beginning, we compute the QR decomposition \(\mathbf {B}=\mathbf {QR}\) and set \(\mathbf {F}=\mathbf {Q}\) and \(\mathbf {D}=\mathbf {I}_n\). Thus, in this case, the size-reduction in each iteration is the same as lines 7–8 of Fig. 1. But the diagonal reduction condition (5) becomes

for \(1< k \le n\). The diagonal reduction algorithm using fast Givens (DRFG) is summarized in Fig. 2.

In comparison with the original diagonal reduction algorithm, DRFG saves a substantial number of multiplication operations, since two entries of the 2-by-2 fast Givens matrix are equal to 1. However, DRFG introduces overhead, such as the computations in line 14 and line 20. Our simulation results presented in Sect. 5 show that overall DRFG is more efficient than DR.

4 Dual Diagonal Reduction

In this section, after a brief introduction to dual basis, we first investigate diagonal reduction of dual basis and prove that if a primal basis is diagonal reduced, then its dual basis is also diagonal reduced. Then we derive an upper bound of proximity factor of SIC decoding, which not only improves an existing bound for the dual LLL reduction in [9], but also extends it to a family of dual LLL-type reductions.

4.1 Dual Lattice Reduction

Let \(L\) be an \(n\)-dimensional lattice in \(\mathbb {R}^{m}\), then the dual lattice \(L^*\) of \(L\) is defined as the set

where \(\langle \mathbf {u}, \mathbf {v}\rangle \) is the inner product of \(\mathbf {u}\) and \(\mathbf {v}\). Suppose that \(\mathbf {B}\) is a primal basis matrix of \(L\), then it is obvious that the columns of \(\mathbf {B}^{\dag \mathrm T}\) form a basis for its dual lattice \(L^*\). In this paper, we adopt the definition of the dual basis \(\mathbf {B}^*\triangleq \mathbf {B}^{\dag \mathrm T}\mathbf {J}\) [9], where

A dual lattice is closely related to its corresponding primal lattice. For instance, we have \(L^{**} = L\) and \(\det (L^*) = 1/ \det (L)\).

Given a primal basis matrix \(\mathbf {B}\), then the dual basis reduction is to perform a lattice reduction algorithm on its dual basis \(\mathbf {B}^*\). Like the primal basis reduction, dual basis reduction can also return a well reduced basis of the primal lattice. Suppose that \(\mathbf {Z}^*\) is the unimodular matrix that reduces the dual basis \(\mathbf {B}^*\), then the corresponding reduced primal basis is given by

where \(\mathbf {J}(\mathbf {Z}^*)^{\dag \mathrm T}\mathbf {J}\) is the unimodular matrix associated with the primal lattice.

To study the reduction properties of diagonal reduction on dual lattices, the following result is essential.

Lemma 1

Let \(\mathbf {B}=\mathbf {QR}\) and \(\mathbf {B}^*=\mathbf {Q}^*\mathbf {R}^*\) be the QR decompositions of the primal basis \(\mathbf {B}\) and its dual basis \(\mathbf {B}^*\), respectively. Then

Proof

It is easy to verify that \(\mathbf {B}^{\dag }=\mathbf {R}^{-1}\mathbf {Q}^\mathrm{T}\). Thus, we have

Obviously, \(\mathbf {QJ}\) has orthonormal columns and \(\mathbf {J}\mathbf {R}^{-\mathrm T}\mathbf {J}\) is an upper triangular matrix, thus the proof is completed.

Based on the above lemma, we can obtain the following result.

Proposition 1

If the lattice basis matrix \(\mathbf {B}\) is diagonal reduced, then its dual basis \(\mathbf {B}^{*}\) is also diagonal reduced.

Proof

Let \(\mathbf {R}=[r_{i,j}]\) and \(\mathbf {R}^{*}\) be the upper triangular factors of \(\mathbf {B}\) and \(\mathbf {B}^{*}\), respectively. Then from Lemma 1,

Since \(\mathbf {B}\) is diagonal reduced, we then have

for all \(1< k \le n\). Multiplying the both sides of (16) with \(\frac{1}{(r_{k-1,k-1}r_{k,k})^2}\), we obtain

which implies that \(\mathbf {R}^*\) is also diagonal reduced.

4.2 Proximity Factor

To characterize the performance gap between suboptimal decoding and ILD, a proximity factor was defined in [8] and further discussed in [9, 18]. Given a lattice generator matrix \(\mathbf {B}=[\mathbf {b}_1,...,\mathbf {b}_n]\), denote \(\phi _i\) the acute angle between \(\mathbf {b}_i\) and the linear space spanned by the previous \(i-1\) basis vectors, then the proximity factor of SIC decoding is defined as:

where the supremum is taken over the set \(\fancyscript{B}_{Red }\) of bases satisfying a certain reduction notion for any \(n\)-dim lattice \(L\). We further define \(\rho \triangleq \max _i \{\rho _{i}\}\). From [9], the average error probability of SIC decoding can be bounded by

for arbitrary SNR.

Denote \(\rho _{\text {LLL}}\), \(\rho _{\text {DLLL}}\), \(\rho _{\text {DR}}\), and \(\rho _{\text {DDR}}\) the proximity factors of SIC decoding aided by LLL reduction, dual LLL reduction, diagonal reduction, and dual diagonal reduction, respectively. An upper bound of \(\rho _{\text {LLL}}\) is given in [18]:

where \(\beta = 1/(\omega -1/4)\ge 4/3\). Following the argument in [19], it is easy to prove that

Thus,

For dual reduction, an upper bound of \(\rho _{\text {DLLL}}\) is given in [9]:

In the following, we improve the upper bound (21). From (19) and Proposition 1, we can obtain that

Thus,

Following the above argument, it is easy to prove that the proximity factors of SIC decoding aided by all dual LLL-type reduction notions, such as dual LLL reduction, dual effective LLL reduction, and dual partial LLL reduction, can be upper bounded by the right-hand side of (23). Comparing (23) with (20), SIC decoding aided by primal and dual diagonal reductions are expected to have the same performance. This shall be confirmed by the simulation results presented in Sect. 5.

5 Simulation Results

In this section, we present our simulation results on comparing the efficiency of the proposed algorithm DRFG with the original algorithm DR. All experiments were performed on matrices with random entries, drawn from an i.i.d. zero-mean, unit variance Gaussian distribution. Without loss of generality, all testing matrices were set to square matrices. For each size, we generated 1,000 random matrices and took an average. The parameter \(\omega \) in the reduction algorithms was set to \(0.99\).

Although the new algorithm DRFG is expected to be faster than the original algorithm DR, the computations in line 14 and line 20 of Fig. 2 introduce overhead. To compare the overall complexity of the algorithms, we experimented on the floating-point operations (flops)Footnote 1 carried out by the algorithms. Figure 3 depicts our results on the average numbers of flops performed by the reduction algorithms. The figure shows that in both cases of primal and dual lattice reduction, DRFG is more efficient than DR, and the performance gap between them widens quickly as the dimension increases. This indicates that the overhead introduced by the fast Givens is insignificant. Also note that the DR (DRFG) algorithm is slightly faster than its dual counter part dual DR (dual DRFG) algorithm. This is due to the additional computation, for instance, the calculation of \(\mathbf {B}^{\dag }\), required by the dual reduction.

We also investigated the reduction quality of different reduction algorithms measured by the BER performance of the SIC decoding. Specifically, using a 64-QAM constellation, Fig. 4 depicts the simulated BER curves of lattice-reduction-aided SIC over an \(8\,\times \,8\) uncoded MIMO fading channel. We have found that the SIC aided by the four diagonal reduction algorithms have identical BER performance to that aided by the LLL algorithm. This is consistent with the theoretical analysis presented in Sect. 4.2.

Notes

- 1.

Flop count: addition/multiplication/division/max/rounding, 1 flop.

References

Agrell, E., Eriksson, T., Vardy, A., Zeger, K.: Closest point search in lattices. IEEE Trans. Inf. Theory 48, 2201–2214 (2002)

Arora, S., Babai, L., Stern, J.: The hardness of approximate optima in lattices, codes, and systems of linear equations. J. Comput. Syst. Sci. 54, 317–331 (1997)

Babai, L.: On Lovász’s lattice reduction and the nearest lattice point problem. Combinatorica 6, 1–13 (1986)

Golub, G.H., Van Loan, C.F.: Matrix Computations. The Johns Hopkins University Press, Baltimore (1996)

Hassibi, B., Vikalo, H.: On the sphere-decoding algorithm I: Expected complexity. IEEE Trans. Signal Process. 53, 2806–2818 (2005)

Jaldén, J., Ottersen, B.: On the complexity of sphere decoding in digital communications. IEEE Trans. Signal Process. 53, 1474–1484 (2005)

Lenstra, A.K., Lenstra, H.W., Lovász, L.: Factorizing polynomials with rational coefficients. Math. Ann. 261, 515–534 (1982)

Ling, C.: Towards characterizing the performance of approximate lattice decoding. Proc. Int. Symp. Turbo Codes/Int. Conf. Source Channel Coding ’06. Munich, Germany (2006).

Ling, C.: On the proximity factors of lattice reduction-aided decoding. IEEE Trans. Signal Process. 59, 2795–2808 (2011)

Ling, C., Howgrave-Graham, N.: Effective LLL reduction for lattice decoding. In: Proceedings IEEE Int. Symp. Inf. Theory (ISIT). Nice, France (2007).

Ling, C., Mow, W.H., Gan, L.: Dual-lattice ordering and partial lattice reduction for SIC-based MIMO detection. IEEE J. Sel. Topics Signal Process. 3, 975–985 (2009)

Mow, W.H.: Universal lattice decoding: Principle and recent advances. Wireless Wirel. Commun. Mobile Comput. 3, 553–569 (2003). (Special Issue on Coding and Its Applications Wireless CDMA System)

Nguyen, P.Q., Vallée, B.: The LLL Algorithm: Survey and Applications. Springer, Berlin (2009)

Schnorr, C.P., Euchner, M.: Lattice basis reduction: Improved practical algorithms and solving subset sum problems. Math. Progr. 66, 181–199 (1994)

Taherzadeh, M., Mobasher, A., Khandani, A.K.: LLL reduction achieves the receive diversity in MIMO decoding. IEEE Trans. Inf. Theory 53, 4801–4805 (2007)

Wübben, D., Seethaler, D., Jaldén, J., Marz, G.: Lattice reduction: a survey with applications in wireless communications. IEEE Signal Process. Mag. 28, 70–91 (2011)

Xie, X., Chang, X.W., Borno, M.A.: Partial LLL reduction. In: Proceedings of IEEE GLOBECOM (2011)

Zhang, W., Qiao, S., Wei, Y.: HKZ and Minkowski reduction algorithms for lattice-reduction- aided MIMO detection. IEEE Trans. Signal Process. 60, 5963–5976 (2012)

Zhang, W., Qiao, S., Wei, Y.: A diagonal lattice reduction algorithm for MIMO detection. IEEE Signal Process. Lett. 19, 311–314 (2012)

Acknowledgments

We would like to thank Professor Lihong Zhi and referees for their useful comments.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2014 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Zhang, W., Qiao, S., Wei, Y. (2014). The Diagonal Reduction Algorithm Using Fast Givens. In: Feng, R., Lee, Ws., Sato, Y. (eds) Computer Mathematics. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-43799-5_30

Download citation

DOI: https://doi.org/10.1007/978-3-662-43799-5_30

Published:

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-662-43798-8

Online ISBN: 978-3-662-43799-5

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)