Abstract

Given a finite set of arbitrarily distributed points in affine space with multiplicity structures, we present an algorithm to compute the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis of the vanishing ideal under the lexicographic order. We split the problem into several smaller ones which can be solved by induction over variables and then use our new algorithm for intersection of ideals to compute the result of the original problem. The new algorithm for intersection of ideals is mainly based on the Extended Euclidean Algorithm. Our method discloses the essential geometric connection between the relative position of the points with multiplicity structures and the leading monomials of the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis of the vanishing ideal.

This work was supported by National Natural Science Foundation of China under Grant No. 11271156.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Vanishing ideal

- Points with multiplicity structures

- Reduced Gr\({\ddot{\mathrm{o}}}\)bner basis

- Intersection of ideals

1 Introduction

To describe the problem, first we give the definitions below.

Definition 1

\(D\subseteq \mathbb {N}_{0}^{n}\) is called a lower set in \(n\) dimensional affine space as long as \(\forall d\in D\) if \(d_{i}\ne 0\), \(d-e_{i}\) lies in \(D\) where \(e_{i}=(0, \ldots , 0, 1, 0, \ldots , 0)\) with the 1 situated at the \(i\)th position (\(1\le i\le n\)). For a lower set \(D\), we define its limiting set \(E(D)\) to be the set of all \(\beta \in \mathbb {N}_{0}^{n}-D\) such that whenever \(\beta _{i}\ne 0\), then \(\beta -e_{i}\in D\).

As showed in Fig. 1, there are three lower sets and their limiting sets. The elements of the lower sets are marked by solid circles and the elements of the limiting sets are marked by blank circles.

Let \(k\) be a field and \(p\) be a point in the affine space \(k^{n}\), i.e. \(p=(p_{1}, \ldots , p_{n})\in k^{n}\). Let \(k[X]\) be the polynomial ring over \(k\), where we write \(X=(X_{1},X_{2},\ldots ,X_{n})\) for brevity’s sake.

Definition 2

\(\langle p, D\rangle \) represents a point \(p\) with multiplicity structure \(D\), where \(p\) is a point in affine space \(k^{n}\) and \(D\subseteq \mathbb {N}_{0}^{n}\) is a lower set. \(\sharp D\) is called the multiplicity of point \(p\) (here we use the definition in [1]). For each \(d=(d_{1},\ldots ,d_{n})\in D\), we define a corresponding functional

Hence for any given finite set of points with multiplicity structures \(H=\{\langle p_{1}, D_{1}\rangle , \ldots , \langle p_{t}, D_{t}\rangle \}\), \(m\) functionals \(\{L_{i};i=1,\ldots ,m\}\) can be defined where \(m\triangleq \sharp D_{1} +\cdots +\sharp D_{t}\). We call

the vanishing ideal of the set of the points \(H\). The vanishing ideal problem we are focusing on is to compute the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis of the vanishing ideal for any given finite set of points \(H\), which arises in several applications, for example, see [2] for statistics, [3] for biology, and [4–6] for coding theory.

A polynomial time algorithm for this problem was first given by Buchberger and M\({\ddot{\mathrm{o}}}\)ller [7], then significantly improved by Marinari et al. [8], and Abbott et al. [9]. These algorithms perform Gauss elimination on a generalized Vandermonde matrix and have a polynomial time complexity in the number of points and in the number of variables. Jeffrey and Gao [10] presented a new algorithm that is essentially a generalization of Newton interpolation for univariate polynomial and has a good computational performance when the number of variables is small relative to the number of points.

In this paper the problem we consider is under the Lexicographical order with \(X_1\succ X_2\succ \cdots X_n\) and a more transparent algorithm will be given. The ideas are summed-up as follows:

-

Construct the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis of \(I(H)\) and get the quotient basis by induction over variables (define \(\{M;M\) is a monomial and it is not divisible by the leading monomial for any polynomial in \(I(H)\}\) as the quotient basis for the vanishing ideal \(I(H)\)).

-

Get the quotient basis of the vanishing ideal purely according to the geometric distribution of the points with multiplicity structures.

-

Split the original \(n\)-variable problem into smaller ones which can be solved by converting them into \((n-1)\)-variable problems.

-

Compute the intersection of the ideals of the smaller problems by using Extended Euclidean Algorithm.

Our algorithm can get a lower set by induction over variables for any given set of points with multiplicity structures, and by constructing the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis at the same time we can prove that the lower set is the quotient basis. There are several publications which have a strong connection to the our work although they are all only focusing on the quotient basis, ignoring the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis of the vanishing ideal. Paper [11] gives a computationally efficient algorithm to get the quotient basis of the vanishing ideal over a set of points with no multiplicity structures and the authors introduce the interesting lex game to describe the problem and the algorithm. Paper [12] offers a purely combinatorial algorithm to obtain the quotient basis and the algorithm can handle the set of points with multiplicity structures as well.

The advantage of our method is insight rather than efficient computation. The computation cost depends closely on the structure of the given set of points and a full complexity analysis would be infeasible. Our method may not be particularly efficient, but is geometrically intuitive and appealing. The clear geometric meaning of our method reveals the essential connection between the relative position of the points with multiplicity structures and the quotient basis of the vanishing ideal, providing us a new perspective of view to look into the vanishing ideal problem and helping study the structure of the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis of zero dimensional ideal under lexicographic order. What’s more, our method leads to the discovery of a new algorithm to compute the intersection of two zero dimensional ideals.

Since one important feature of our method is the clear geometric meaning, to demonstrate it we present an example in Sect. 2 together with some auxiliary pictures which can make the algorithms and conclusions in this paper easier to understand. In Sects. 3 and 4 some definitions and notions are given. Sections 5 and 6 are devoted to our main algorithms of computing the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis and the quotient basis together with the proofs. In Sect. 7 we demonstrate the algorithm to compute the intersection of two ideals and some applications.

2 Example

We will use two different forms to represent the set of points with multiplicity structures \(H\) in this paper.

For easier description, we introduce the matrix form which consists of two matrices \(\langle \fancyscript{P}=(p_{i, j})_{m\times n}, \fancyscript{D}=(d_{i, j})_{m\times n}\rangle \) with \(\fancyscript{P}_{i}, \fancyscript{D}_{i}\) denoting the \(i\)th row vectors of \(\fancyscript{P}\) and \(\fancyscript{D}\) respectively. Each pair \(\{\fancyscript{P}_{i}, \fancyscript{D}_{i}\}\) \((1\le i\le m)\) defines a functional in the following way.

And the functional set defined here is the same with that defined by the way in Sect. 1 with respect to \(H\).

For example, given a set of three points with their multiplicity structures \(\{\langle p_{1}, D_{1}\rangle , \langle p_{2}, D_{2}\rangle , \langle p_{3}, D_{3}\rangle \}\), where \(p_{1}=(1, 1), p_{2}=(2, 1), p_{3}=(0, 2), D_{1}=\{(0, 0), (0, 1), (1, 0)\},D_{2}=\{(0,0),(0,1),(1,0),(1,1)\}, D_{3}=\{(0, 0), (1, 0)\}\), the matrix form is like the follows.

For intuition’s sake, we also represent the points with multiplicity structures in a more intuitive way as showed in the left picture of Fig. 2 where each lower set that represents the multiplicity structure of the corresponding point \(p\) is also put in the affine space with the zero element (0,0) situated at \(p\). This intuitive representing form is the basis of the geometric interpretation of our algorithm.

We take the example above to show how our method works and what the geometric interpretation of our algorithm is like:

Step 1: Define mapping \(\pi :H\mapsto k\) such that \(\langle p=(p_1,\ldots ,p_n),D\rangle \in H\) is mapped to \(p_n\in k\). So \(H=\{\langle p_{1},D_{1}\rangle , \langle p_{2}, D_{2}\rangle , \langle p_{3},D_{3}\rangle \}\) consists of two \(\pi \)-fibres: \(H_{1}=\{\langle p_{1}, D_{1}\rangle , \langle p_{2}, D_{2}\rangle \}\) and \(H_{2}=\{\langle p_{3}, D_{3}\rangle \}\) as showed in the middle and the right pictures in Fig. 2. Each fibre defines a new problem, so we split the original problem defined by \(H\) into two small ones defined by \(H_1\) and \(H_2\) respectively.

Step 2: Solve the small problems. Take the problem defined by \(H_{1}\) for example.

First, it’s easy to write down one element of \(I(H_{1})\):

The geometry interpretation is: we draw two lines sharing the same equation of \(X_{2}-1=0\) to cover all the points as illustrated in the left picture in Fig. 3 and the corresponding polynomial is \(f_1\).

According to the middle and the right pictures in Fig. 3, we can write down another two polynomials in \(I(H_{1})\):

It can be checked that \(G_{1}=\{f_{1},f_{2},f_{3}\}\) is the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis of \(I(H_{1})\), and the quotient basis is \(\{ 1,X_{1},X_{2},X_{1}X_{2},X_{1}^{2},X_{2}X_{1}^{2},X_{1}^{3}\}\). In the following, we don’t distinguish explicitly an \(n\)-variable monomial \(X_1^{d_1}X_2^{d_2}\ldots X_n^{d_n}\) with the element \((d_1,d_2,\ldots , d_n)\) in \(\mathbb {N}_{0}^{n}\). Hence this quotient basis can be written as a subset of \(\mathbb {N}_{0}^{n}\): \(\{(0,0),(1,0),(0,1), (1,1),(2,0), (2,1), (3,0)\}\), i.e. a lower set, denoted by \(D^{\prime }\).

In fact we can get the lower set in a more direct way by pushing the points with multiplicity structures leftward which is illustrated in the picture below (lower set \(D^{\prime }\) is positioned in the right part of the picture with the (0,0) element situated at point (0,1)). The elements of the lower set \(D^{\prime }\) in the right picture in Fig. 4 are marked by solid circles. The blank circles constitute the limiting set \(E(D^{\prime })\) and they are the leading terms of the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis \(\{f_{1},f_{2},f_{3}\}\).

In the same way, we can get the Gr\({\ddot{\mathrm{o}}}\)bner basis \(G_{2}=\{h_{1},h_{2}\}\) and a lower set \(D^{\prime \prime }\) for the problem defined by \(H_{2}\), where \(h_{1}=(X_{2}-2),h_{2}=X_{1}^{2},D^{\prime \prime }=\{(0,0),(1,0)\}\).

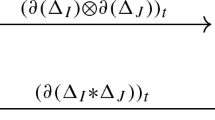

Step 3: Compute the intersection of the ideals \(I(H_{1})\) and \(I(H_{2})\) to get the result for the problem defined by \(H\).

First, we construct a new lower set \(D\) based on \(D^{\prime },D^{\prime \prime }\) in an intuitive way: let the solid circles fall down and the elements of \(D^{\prime \prime }\) rest on the elements of \(D^{\prime }\) to form a new lower set \(D\) which is showed in the right part of Fig. 5 and the blank circles represent the elements of the limiting set \(E(D)\).

Then we need to find \(\sharp E(D)\) polynomials vanishing on \(H\) with leading terms being the elements of \(E(D)\). Take \(X_{1}^{3}X_{2}\in E(D)\) for example to show the general way we do it.

We need two polynomials which vanish on \(H_{1}\) and \(H_{2}\) respectively, and their leading terms both have the same degrees of \(X_{1}\) with that of the desired monomial \(X_{1}^{3}X_{2}\) and both have the minimal degrees of \(X_{2}\). Notice that \(f_{2}\) and \(X_{1}\cdot h_{2}\) satisfy the requirement. And then we multiply \(f_{2}\) and \(X_{1}\cdot h_{2}\) with \(h_{1},f_{1}\) respectively which are all univariate polynomials in \(X_{2}\) to get two polynomials \(q_{1},q_{2}\) such that \(q_{1}\) and \(q_{2}\) both vanish on \(H\). Obviously \(q_{1}\) and \(q_{2}\) still have the same degrees of \(X_{1}\) with that of the desired monomial \(X_{1}^{3}X_{2}\).

Next try to find two univariate polynomials in \(X_{2}\): \(r_{1},r_{2}\) such that \(q_{1}\cdot r_{1}+q_{2}\cdot r_{2}\) vanishes on \(H\) (which is obviously true already) and has the desired leading term \(X_{1}^{3}X_{2}\).

To settle the leading term issue, write \(q_{1},q_{2}\) as univariate polynomials in \(X_{1}\) as above. Because \(X_{2}\prec X_{1}\) and the highest degrees of \(X_{1}\) of the leading terms of \(q_{1},q_{2}\) are both \(3\), we know that as long as the leading term of \((X_{2}-2)(X_{2}-1)X_{1}^{3}\cdot r_{1}+(X_{2}-1)^{2}X_{1}^{3}\cdot r_{2}\) is \(X_{1}^{3}X_{2}\), the leading term of \(q_{1}\cdot r_{1}+q_{2}\cdot r_{2}\) is also \(X_{1}^{3}X_{2}\).

Obviously if and only if \((X_{2}-2)\cdot r_{1}+(X_{2}-1)\cdot r_{2}=1\) we can keep the leading term of \(q_{1}\cdot r_{1}+q_{2}\cdot r_{2}\) to be \(X_{1}^{3}X_{2}\). In this case \(r_{1}=-1\) and \(r_{2}=1\) will be just perfect. In our algorithm we use Extended Euclidean Algorithm to compute \(r_{1},r_{2}\).

Finally, we obtain

which vanishes on \(H\) and has \(X_{1}^{3}X_{2}\) as its leading term.

In the same way, we can get \(g_{1}=(X_{2}-1)^{2}(X_{2}-2)\) for \(X_{2}^{3}\), \(g_{2}=(X_{2}-1)^{2}X_{1}^{2}\) for \(X_{1}^{2}X_{2}^{2}\) and \(g_{4}=X_{1}^{4}+6(X_{2}^{2}-2X_{2})X_{1}^{3}-13(X_{2}^{2}-2X_{2})X_{1}^{2}+12(X_{2}^{2}-2X_{2})X_{1}-4(X_{2}^{2}-2X_{2})\) for \(X_{1}^{4}\). In fact we need to compute \(g_{1},~g_{2},~g_{3}\) and \(g_{4}\) in turn according to the lexicographic order because we need reduce \(g_{2}\) by \(g_{1}\), reduce \(g_{3}\) by \(g_{2}\) and \(g_{1}\), and reduce \(g_4\) by \(g_1\), \(g_2\) and \(g_3\).

The reduced polynomial set can be proved in Sect. 6 to be the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis of the intersection of two ideals which is exactly the vanishing ideal over \(H\), and \(D\) is the quotient basis.

This example shows what the geometric interpretation of our method is like: for any given point with multiplicity structure \(\langle p_i, D_i\rangle \), we put the lower set \(D_i\) into the affine space with the (0,0) element situated at \(p_i\) to intuitively represent it, and it can be imagined as \(\sharp D_i\) small balls in the affine space; for bivariate problem, we first push the balls along the \(X_1\)-axis, then along the \(X_2\)-axis to get a lower set as we did in the example above; the lower set is exactly the quotient basis and the limiting set of the lower set is the set of the leading monomials of the reduced G\({\ddot{\mathrm{o}}}\)bner basis. This intuitive understanding can be applied to the \(n\)-variable problem and can help us understand the algorithm better in the following.

3 Notions

First, we define the following mappings:

\(~~~~\mathrm{proj}:\mathbb {N}_{0}^{n} \longrightarrow \mathbb {N}_{0}\)

\(~~~~~~~~~~(d_{1},\ldots ,d_{n})\longrightarrow d_{n}\).

\(~~~~\widehat{\mathrm{proj}}:\mathbb {N}_{0}^{n}\longrightarrow \mathbb {N}_{0}^{n-1}\)

\(~~~~~~~~~~~(d_{1},\ldots ,d_{n})\longrightarrow (d_{1},\ldots ,d_{n-1})\).

\(~~~~\mathrm{embed}_{c}:\mathbb {N}_{0}^{n-1}\longrightarrow \mathbb {N}_{0}^{n}\)

\(~~~~~~~~~~(d_{1},\ldots ,d_{n-1})\longrightarrow (d_{1},\ldots ,d_{n-1},c)\).

Let \(D\subset \mathbb {N}_0^{n}\), and naturally we define \(\widehat{\mathrm{proj}}(D)=\{\widehat{\mathrm{proj}}(d)|d\in D\}\), and \(\mathrm{embed}_{c}(D^{\prime }) =\{\mathrm{embed}_{c}(d)|d\in D^{\prime }\}\) where \(D^{\prime }\subset \mathbb {N}_0^{n-1}\). In fact we can apply these mappings to any set \(O\subset k^{n}\) or any matrix of \(n\) columns, because there is no danger of confusion. For example, let \(M\) be a matrix of \(n\) columns, and \(\widehat{\mathrm{proj}}(M)\) is a matrix of \(n-1\) columns with the first \(n-1\) columns of \(M\) reserved and the last one eliminated.

The \(\mathrm{embed}_c\) mapping embeds a lower set of the \(n-1\) dimensional space into the \(n\) dimensional space. When the \(\mathrm{embed}_c\) operation parameter \(c\) is zero, we can get a lower set of \(\mathbb {N}_0^{n}\) by mapping each element \(d=(d_{1},\ldots ,d_{n-1})\) to \(d=(d_{1},\ldots ,d_{n-1},0)\) as showed below.

Blank circles represent the elements of the limiting sets. Note that after the \(\mathrm{embed}_c\) mapping, there is one more blank circle. In this case, the limiting set is always increased by one element \((0,\ldots ,0,1)\).

In the case the \(\mathrm{embed}_c\) operation parameter \(c\) is not zero, it is obvious that what we got is not a lower set any more. But there is another intuitive fact we should realize (Fig. 6).

Theorem 1

Assume \(D_{0},D_{1},\ldots ,D_{\ell }\) are lower sets in \(n-1\) dimensional space, and \(D_{0}\supseteq D_{1}\supseteq \cdots \supseteq D_{\ell }\). Let \(\hat{D}_{i}=embed_{i}(D_{i}),i=0,\ldots ,\ell .\) Then \(D=\bigcup _{i=0}^{\ell }\hat{D}_{i}\) is a lower set in \(n\) dimensional space, and \(E(D)\subseteq C\) where \(C=\bigcup _{i=0}^{\ell }embed_{i}(E(D_{i}))\bigcup \{(0,\ldots ,0, \ell +1)\}\).

Proof

First to prove \(D\) is a lower set. \(\forall d\in D,\) let \(i=\mathrm{proj}(d)\), then \(d\in \hat{D}_{i}\) i.e. \(\widehat{\mathrm{proj}}(d)\in \widehat{\mathrm{proj}}(\hat{D}_i)=D_i\). Because \(D_{i}\) is a lower set, hence for \(j=1,\ldots ,n-1,\) if \(d_{j}\ne 0\), then \(\widehat{\mathrm{proj}}(d)-\widehat{\mathrm{proj}}(e_{j})\in D_{i}\) where \(e_{j}=(0, \ldots , 0, 1, 0, \ldots , 0)\) with the 1 situated at the \(j\)th position. So \(d-e_{j}\in \hat{D}_{i}\subseteq D\). For \(j=n\), if \(i=0\), we are finished. If \(i\ne 0\), there must be \(d-e_{n}\in \hat{D}_{i-1}\subseteq D\). Because if \(d-e_{n}\notin \hat{D}_{i-1}\), we have \(\widehat{\mathrm{proj}}(d)\notin D_{i-1}\). Since we already have \(\widehat{\mathrm{proj}}(d)\in D_{i}\), this is contradictory to \(D_{i}\subseteq D_{i-1}\).

Second, assume \(\forall d\in E(D)\), \(\widehat{\mathrm{proj}}(d)\notin D_{i},i=0,\ldots ,\ell \). If \(\widehat{\mathrm{proj}}(d)\) is a zero tuple, then \(d_{n}\) must be \(\ell +1\), that is \(d\in C.\) If \(\widehat{\mathrm{proj}}(d)\) is not a zero tuple, then we know \(d_{n}<\ell +1\). If \(d_{j}\ne 0,~j=1,\ldots ,n-1\) , then \(d-e_{j}\in \mathrm{embed}_{d_{n}}(D_{d_{n}})\). Then \(\widehat{\mathrm{proj}}(d) -\widehat{\mathrm{proj}}(e_{j})\in D_{d_{n}}\), that is \(\widehat{\mathrm{proj}}(d)\in E(D_{d_{n}})\). Finally with the \(\mathrm{embed}_{d_{n}}\) operation we have \(d\in \mathrm{embed}_{d_{n}}(E(D_{d_{n}}))\) where \(d_{n}<\ell +1\). So \(d\in C\). \(\square \)

4 Addition of Lower Sets

In this section, we define the addition of lower sets which is the same with that in [13], the following paragraph and Fig. 7 are basically excerpted from that paper with a little modification of expression.

To get a visual impression of what the addition of lower sets are, look at the example in Fig. 7. What is depicted there can be generalized to arbitrary lower sets \(D_{1}\), \(D_{2}\) and arbitrary dimension \(n\). The process can be described as follows. Draw a coordinate system of \(\mathbb {N}_{0}^{n}\) and insert \(D_{1}\). Place a translate of \(D_{2}\) somewhere on the \(X_2\)-axis. The translate has to be sufficiently far out, so that \(D_{1}\) and the translate \(D_{2}\) do not intersect. Then push the elements of the translate of \(D_{2}\) down along the \(X_2\)-axis until on room remains between them and the elements of \(D_{1}\). The resulting lower set is denoted by \(D_{1}+D_{2}\).

Intuitively, we define algorithm ALS (short for Addition of Lower Sets) to realize the addition of lower sets.

Algorithm ALS: Given two lower sets in \(n\) dimensional space \(D_{1},D_{2}\), determine another lower set as the addition of \(D_{1},D_{2}\), denoted by \(D:=D_{1}+D_{2}\).

- Step 1:

-

\(D:=D_{1}\);

- Step 2:

-

If \(\sharp D_{2}=0\) return \(D\). Else pick \(a\in D_{2},D_{2}:=D_{2}\setminus \{a\}.\)

- Step 2.1:

-

If \(a\in D\), add the last coordinate of \(a\) with \(1\). Go to Step 2.1.

Else

\(D:=D\bigcup \{a\}\), go to Step 2.

Given three lower sets \(D_{1},D_{2},D_{3}\), the addition we defined satisfies:

-

1.

\(D_{1}+D_{2}=D_{2}+D_{1},\)

-

2.

\((D_{1}+D_{2})+D_{3}=D_{1}+(D_{2}+D_{3}),\)

-

3.

\(D_{1}+D_{2}\) is a lower set,

-

4.

\(\sharp (D_{1}+D_{2})=\sharp D_{1}+\sharp D_{2}.\)

These are all the same with that in [13]. And the proof can be referred to it.

As implied in the example of Sect. 2, when we want to get a polynomial with leading term \(d_{3}\) showed in the right part of Fig. 8, we need two polynomials with the leading terms \(d_{1},d_{2}\) which are not the elements of the lower sets and have the same degrees of \(X_{1}\) as \(d_3\) and the minimal degrees of \(X_{2}\) as showed in the left part of Fig. 8. In other words, \(d_{1}\notin D_{1},~d_{2}\notin D_{2},~\widehat{\mathrm{proj}}(d_{1})=\widehat{\mathrm{proj}}(d_{2})=\widehat{\mathrm{proj}}(d_{3})\), \(\mathrm{proj}(d_{1})+\mathrm{proj}(d_{2})=\mathrm{proj}(d_{3})\). It’s easy to understand that these equations hold for the addition of three or even more lower sets.

We use algorithm CLT (short of Computing the Leading Term) to get the leading terms \(d_{1}\) and \(d_{2}\) from \(d_3\) respectively.

Algorithm CLT: Given \(a\in \mathbb {N}_{0}^{n}\), and a lower set \(D\) in \(n\) dimensional space satisfying \(a\notin D\). Determine another \(r=(r_1,\ldots ,r_n)\in \mathbb {N}_{0}^{n}\) which satisfies that \(r\notin D\), \(\widehat{\mathrm{proj}}(r)=\widehat{\mathrm{proj}}(a)\) and \((r_1,\ldots ,r_{n-1},r_n-1)\in D\), denoted by \(r:=\mathrm{CLT}(a,D)\).

- Step 1:

-

Initialize \(r\) such as \(\widehat{\mathrm{proj}}(r)=\widehat{\mathrm{proj}}(a)\) and \(\mathrm{proj}(r)=0\).

- Step 2:

-

if \(r\notin D\), return r, else \(r_n:=r_n+1\), go to Step 2.

Then \(d_{1}=\mathrm{CLT}(d_{3},D_{1}),~d_{2}=\mathrm{CLT}(d_{3},D_{2}).\)

Definition 3

For any \(f\in k[X]\), view it as an element in \(k(X_{n})[X_{1},\ldots ,X_{n-1}]\) and define \(\mathrm{LC}_n(f)\) to be the leading coefficient of \(f\) which is a univariate polynomial in \(X_{n}\).

Here is the algorithm CP (short for Computing the Polynomial) which can compute the polynomial with the leading term returned by algorithm CLT.

Algorithm CP: \(D\) is a lower set in \(n\) dimensional space, \(a\in \mathbb {N}_{0}^{n}\) and \(a\notin D\), \(G:=\{f_{ed}\in k[X];\)the leading term of \(f_{ed}\) is \(ed, ~ed\in E(D)\}\), algorithm CP returns a polynomial \(p\) whose leading term is \(\mathrm{CLT}(a,D).\) Denoted by \(p:=\mathrm{CP}(a,D,G).\)

- Step 1:

-

\(c:=\mathrm{CLT}(a,D)\).

- Step 2:

-

Select \(c^{\prime }\in E(D),s.t.~c^{\prime }\) is a factor of \(c.~~d:=\frac{c}{c^{\prime }}\). (\(d\) is well defined because \(c\notin D\)).

- Step 3:

-

\(p:=f_{c^{\prime }}\cdot d\) where \(f_{c^{\prime }}\) is an element of \(G\) whose leading term is \(c^{\prime }\in E(D)\).

(\(p\) is well defined because \(d\) is well defined).

Remark 1

\(\mathrm{LC}_n(f_{c^{\prime }})=\mathrm{LC}_n(p)\) in Step 3. Since \(c\) has the minimal degree of \(X_n\) according to algorithm CLT, there exists no element \(c^{\prime \prime }\in E(D)\) which is a factor of \(c\) satisfying \(\mathrm{proj}(c^{\prime \prime })<\mathrm{proj}(c)\). Hence monomial \(d\) in the algorithm does not involve the variable \(X_n\).

5 Associate a Lower set \(D(H)\) to a set of Points \(H\) with Multiplicity Structures

For any given set of points \(H\) with multiplicity structures in \(n\) dimensional space, we can construct a lower set \(D(H)\) in \(n\) dimensional space by induction.

Univariate case: Assume \(H=\{\langle p_{1},D_{1}\rangle ,\ldots ,\langle p_{t},D_{t}\rangle \}\) is a set of points with multiplicity structures in one dimensional space, then the lower set is \(D(H)=\{0,1,\ldots ,\sum _{i=1}^{t}\sharp D_{i}\}\).

Assume the \(n-1\) \((n>1)\) dimensional problem has been solved, now for the \(n\) dimensional situation, we first focus on the Special case.

Special case: Assume \(H=\{\langle p_{1},D_{1}\rangle ,\ldots ,\langle p_{t},D_{t}\rangle \}\) is a set of points with multiplicity structures in the \(n\) (\(n>1\)) dimensional space where all the points share the same \(X_{n}\) coordinate. Write \(H\) in matrix form as \(\langle \fancyscript{P},\fancyscript{D}\rangle \) and all the entries in the last column of matrix \(\fancyscript{P}\) have the same value. Classify the row vectors of \(\langle \fancyscript{P},\fancyscript{D}\rangle \) to get \(\{\langle \fancyscript{P}_{0},\fancyscript{D}_{0}\rangle ,\ldots ,\langle \fancyscript{P}_{w},\fancyscript{D}_{w}\rangle \}\) according to the values of the entries in the last column of matrix \(\fancyscript{D}\) and we guarantee the corresponding relationship between the row vectors of matrix \(\fancyscript{P}\) and matrix \(\fancyscript{D}\) holds in \(\langle \fancyscript{P}_{i},\fancyscript{D}_{i}\rangle \) (\(0\le i\le w\)). All the entries in the last column of \(\fancyscript{D}_{i}\) are the same \(i\) and the entries of the last column of \(\fancyscript{P}_{i}\) stay the same too. Then eliminate the last columns of \(\fancyscript{P}_{i}\) and \(\fancyscript{D}_{i}\) to get \(\langle \widehat{\mathrm{proj}}(\fancyscript{P}_{i}),\widehat{\mathrm{proj}}(\fancyscript{D}_{i})\rangle \) which represents a set of points with multiplicity structures in \(n-1\) dimensional space, by induction we get a lower set \(\hat{D}_{i}\) in \(n-1\) dimensional space. Then we set

Next we deal with the General case.

General case: Assume \(H=\{\langle p_{1},D_{1}\rangle ,\ldots ,\langle p_{t},D_{t}\rangle \}\) is a set of points with multiplicity structures in the \(n\) (\(n>1\)) dimensional space. Split the set of points: \(H=H_{1}\bigcup H_{2}\bigcup \cdots \bigcup H_{s}\) such that the points of each \(H_{i}\) are in the same \(\pi \)-fibre, i.e. they have the same \(X_{n}\) coordinate \(c_{i}\), \(i=1,\ldots ,s\),and \(c_{i}\ne c_{j},\forall i,j=1,\ldots ,s,i\ne j.\) According to the Special case, for each \(i=1,\ldots ,s\), we can get a lower set \(D(H_{i})\), then we set

We now prove \(D(H)\) is a lower set although it is easy to understand as long as the geometric interpretation involves. Since it is obviously true for Univariate case, induction over dimension would be helpful for the proof.

Proof

Assume \(D(H)\) is a lower set for the \(n-1\) dimensional situation and now we prove the conclusion for \(n\) dimensional situation (\(n>1\)).

First to prove \(D(H)\) of the Special case is a lower set.

We claim that \(\langle \widehat{\mathrm{proj}}(\fancyscript{P}_{i}),\widehat{\mathrm{proj}}(\fancyscript{D}_{i})\rangle \) represents a set of points with multiplicity structures in \(n-1\) dimensional space (\(i=0,\ldots ,w\)). For any \(D\subset \mathbb {N}_{0}^{n}\), define \(F_{a}(D)=\{d\in D|~\mathrm{proj}(d)=a\}.\) Let \(U=\{u|u\in \{1,\ldots ,t\},F_i(D_u)\ne \varnothing \}.\) So \(\langle \widehat{\mathrm{proj}}(\fancyscript{P}_{i}),\widehat{\mathrm{proj}}(\fancyscript{D}_{i})\rangle \) can be written in the form of \(\{\langle \widehat{\mathrm{proj}}(p_{u}), \widehat{\mathrm{proj}}(F_{i}(D_{u})) \rangle | u\in U\}\). It is apparent that \(\widehat{\mathrm{proj}}(F_{i}(D_{u}))\) is a lower set in \(n-1\) dimensional space and can be viewed as the multiplicity structure of the point \(\widehat{\mathrm{proj}}(p_{u})\). Hence \(\langle \widehat{\mathrm{proj}}(\fancyscript{P}_{i}),\widehat{\mathrm{proj}}(\fancyscript{D}_{i})\rangle \) is a set of points with multiplicity structures in \(n-1\) dimensional space.

What’s more, we assert \(\widehat{\mathrm{proj}}(\fancyscript{P}_{j})\) is a sub-matrix of \(\widehat{\mathrm{proj}}(\fancyscript{P}_{i}),\) and \(\widehat{\mathrm{proj}}(\fancyscript{D}_{j})\) is a sub-matrix of \(\widehat{\mathrm{proj}}(\fancyscript{D}_{i}),0\le i<j\le w.\) Because of the corresponding relationship between the row vectors in \(\fancyscript{P}\) and \(\fancyscript{D}\), we need only to prove \(\widehat{\mathrm{proj}}(\fancyscript{D}_{j})\) is a sub-matrix of \(\widehat{\mathrm{proj}}(\fancyscript{D}_{i})\). If it is not true, there exists a row vector \(g\) of \(\widehat{\mathrm{proj}}(\fancyscript{D}_{j})\) which is not a row vector of \(\widehat{\mathrm{proj}}(\fancyscript{D}_{i})\). That is, there exists \(b\) (\(1\le b\le t\)) such that \(\mathrm{embed}_j(g)\) is an element of the lower set \(D_{b}\), and \(\mathrm{embed}_i(g)\) is not included in any lower set \(D_{a}\) (\(1\le a\le t\)). However since \(i<j\) and \(\mathrm{embed}_j(g)\in D_b\), \(\mathrm{embed}_i(g)\) must be included in \(D_b\). Hence our assertion is true.

Since \(\widehat{\mathrm{proj}}(\fancyscript{P}_{j})\) is a sub-matrix of \(\widehat{\mathrm{proj}}(\fancyscript{P}_{i}),\) and \(\widehat{\mathrm{proj}}(\fancyscript{D}_{j})\) is a sub-matrix of \(\widehat{\mathrm{proj}}(\fancyscript{D}_{i}),0\le i<j\le w.\) According to the assumption of induction and the way we construct \(D(H)\), we have \(\hat{D}_{i}\supseteq \hat{D}_{j},0\le i<j\le w,\) where \(\hat{D}_{i},\hat{D}_{j}\) are both lower sets. Based on the Theorem 1 in Sect. 3, \(D(H)=\bigcup _{i=0}^{w}\mathrm{embed}_{i}(\hat{D}_{i})\) is a lower set, and \(E(D(H))\subseteq \bigcup _{i=0}^{w} \mathrm{embed}_{i}(E(\hat{D}_{i}))\bigcup \{(0,\ldots ,0,w+1)\}\).

Then to prove \(D(H)\) of General case is a lower set. Since \(D(H_{i}),i=1,\ldots ,s\) are lower sets, and the addition of lower sets is also a lower set according to Sect. 4, \(D(H)\) is obviously a lower set. \(\square \)

6 Associate a set of Polynomials \(\mathrm{poly}(H)\) to \(D(H)\)

For every lower set constructed during the induction procedure showed in the last section, we associate a set of polynomials to it.

We begin with the univariate problem as we did in the last section.

6.1 Univariate Problem

P-Univariate case:

Assume \(H=\{\langle p_{1},D_{1}\rangle ,\ldots ,\langle p_{t},D_{t}\rangle \}\) is a set of points with multiplicity structures in one dimensional space, and \(D(H)=\{0,1,\ldots ,\sum _{i=1}^{t}\sharp D_{i}\}\). Then the set of univariate polynomials associated to \(D(H)\) is \(poly(H)=\{\prod _{i=1}^{t}(X_{1}-p_{i})^{\sharp D_{i}}\}\).

Obviously \(poly(H)\) of P-Univariate case satisfies the following Assumption.

Assumption

For any given set of points with multiplicity structures \(H\) in the \(n-1\) \((n>1)\) dimensional space, there are the following properties. For any \(\lambda \in E(D(H))\), there exists a polynomial \(f_{\lambda }\in k[X]\) where \(X=(X_{1},\ldots ,X_{n-1})\) such that

-

The leading term of \(f_{\lambda }\) under lexicographic order is \(X^{\lambda }\).

-

The exponents of all lower terms of \(f_{\lambda }\) lies in \(D(H)\).

-

\(f_{\lambda }\) vanishes on \(H\).

-

\(\mathrm{poly}(H)=\{f_{\lambda }|\lambda \in E(D(H))\}\).

Now assume the \((n-1)\)-variable \((n>1)\) problem has been solved i.e. for any given set of points with multiplicity structures \(H\) in \(n-1\) dimensional space, we can construct a set of polynomial \(\mathrm{poly}(H)\) which satisfies the Assumption. And then to tackle the \(n\)-variable problem, we still begin with the special case.

6.2 Special Case of the \(n\)-variable \((n>1)\) Problem

P-Special case:

Given a set of points with multiplicity structures in \(n~(n>1)\) dimensional space \(H=\{\langle p_{1},D_{1}\rangle ,\ldots ,\langle p_{t},D_{t}\rangle \}\) or in matrix form \(\langle \fancyscript{P}=(p_{ij})_{m\times n},\fancyscript{D}=(d_{ij})_{m\times n}\rangle \). All the given points have the same \(X_{n}\) coordinate, i.e. the entries in the last column of \(\fancyscript{P}\) are the same. We compute \(\mathrm{poly}(H)\) with the following steps.

- Step 1:

-

\(c:=p_{1n};\) \(w=\mathrm{max}\{d_{in};i=1,\ldots ,m\}.\)

- Step 2:

-

\(\forall i=0,\ldots ,w\), define \(\fancyscript{SD}_{i}\) as a sub-matrix of \(\fancyscript{D}\) containing all the row vectors whose last coordinates equal \(i\). Extract the corresponding row vectors of \(\fancyscript{P}\) to form matrix \(\fancyscript{SP}_{i}\), and the corresponding relationship between the row vectors in \(\fancyscript{P}\) and \(\fancyscript{D}\) holds for \(\fancyscript{SP}_{i}\) and \(\fancyscript{SD}_{i}\).

- Step 3:

-

\(\forall i=0,\ldots ,w\), eliminate the last columns of \(\fancyscript{SP}_{i}\) and \(\fancyscript{SD}_{i}\) to get \(\langle \tilde{\fancyscript{SP}_{i}}, \tilde{\fancyscript{SD}_{i}}\rangle \) which represents a set of points in \(n-1\) dimensional space with multiplicity structures.According to the induction assumption, we have the polynomial set \(\tilde{G}_{i}=\mathrm{poly}(\langle \tilde{\fancyscript{SP}_{i}}\),\(\tilde{\fancyscript{SD}_{i}} \rangle )\) associated to the lower set \(\tilde{D}_{i}=D(\langle \tilde{\fancyscript{SP}_{i}}\),\(\tilde{\fancyscript{SD}_{i}} \rangle )\).

- Step 4:

-

\( D:=\bigcup _{i=0}^{w}\mathrm{embed}_{i}(\tilde{D}_{i}).\) Multiply every element of \(\tilde{G}_{i}\) with \((X_{n}-c)^{i}\) to get \(G_{i}.\) \(\tilde{G}:=\bigcup _{i=0}^{w}G_{i}\bigcup \{(X_{n}-c)^{w+1}\}\).

- Step 5:

-

Eliminate the polynomials in \(\tilde{G}\) whose leading term is not included in \(E(D)\) to get \(\mathrm{poly}(H)\).

Theorem 2

The \(\mathrm{poly}(H)\) obtained in P-Special case satisfies the Assumption.

Proof

According to the Sect. 5, \(\langle \tilde{\fancyscript{SP}_{i}}\),\(\tilde{\fancyscript{SD}_{i}} \rangle \) represents a set of points with multiplicity structures in \(n-1\) dimensional space for \(i=0,\ldots ,w.\) And \(\tilde{D}_{j}\supseteq \tilde{D}_{i}, 0\le j\le i\le w\). \(D\) is a lower set and \(E(D)\subseteq \bigcup _{i=0}^{w}\mathrm{embed}_{i}(E(\tilde{D}_{i}))\bigcup \{(0,\ldots ,0,w+1)\}\).

For \(\lambda =(0,\ldots ,0,w+1)\in E(D)\), we have \(f_\lambda =(X_{n}-c)^{w+1}\). It is easy to check that it satisfies the first three terms of the Assumption.

For any other element \(ed\) of \(E(D)\), \(\exists \ell ~s.t.~ed\in \mathrm{embed}_{\ell }E(\tilde{D}_{\ell })\). So let \(\tilde{ed}\) be the element in \(E(\tilde{D}_{\ell })\) such that \(ed=\mathrm{embed}_{\ell }(\tilde{ed})\). We have \(f_{\tilde{ed}}\) vanishes on \(\langle \tilde{\fancyscript{SP}_{\ell }},\tilde{\fancyscript{SD}_{\ell }}\rangle \) whose leading term is \(\tilde{ed}\in E(\tilde{D}_{\ell })\) and the lower terms belong to \(\tilde{D}_{\ell }\). According to the algorithm \(f_{ed}=(X_{n}-c)^{\ell }\cdot f_{\tilde{ed}}\in \mathrm{poly}(H)\) .

First it is easy to check that the leading term of \(f_{ed}\) is \(ed\) since \(ed=\mathrm{embed}_{\ell }(\tilde{ed})\).

Second, the lower terms of \(f_{ed}\) are all in the set \(S=\bigcup _{j=0}^{\ell }\mathrm{embed}_{j}(\tilde{D}_{\ell })\) because all the lower terms of \(f_{\tilde{ed}}\) are in the set \(\tilde{D}_{\ell }\). \(\tilde{D}_{0}\supseteq \tilde{D}_{1}\supseteq \ldots \tilde{D}_{\ell }\), so \(\mathrm{embed}_j(\tilde{D}_{\ell })\subset \mathrm{embed}_j(\tilde{D}_{j})~(0\le j\le \ell )\), hence \(S\subseteq D=\bigcup _{j=0}^{w}\mathrm{embed}_{j}(\tilde{D}_{j})\) and the second term of the Assumption is satisfied.

Third, we are going to prove that \(f_{ed}\) vanishes on all the functionals defined by \(\langle \fancyscript{P},\fancyscript{D}\rangle \), i.e. all the functionals defined by \(\langle \fancyscript{SP}_i,\fancyscript{SD}_i\rangle ~(i=0,\ldots ,w).\) Write all the functionals defined by \(\langle \fancyscript{SP}_i,\fancyscript{SD}_i\rangle \) in this form: \(L^{\prime }\cdot \frac{\partial ^{i}}{\partial X_n^{i}}|_{X_n=c}\) where \(L^{\prime }\) is an \(n-1\) variable functional. Substitute the zeroes and use the fact that \(f_{\tilde{ed}}\) vanishes on \(\langle \tilde{\fancyscript{SP}_{\ell }},\tilde{\fancyscript{SD}_{\ell }}\rangle \), it’s apparent that \(f_{ed}=(X_{n}-c)^{\ell }\cdot f_{\tilde{ed}}\) vanishes on these functionals.

So \(f_{ed}\) vanishes on \(H\), and satisfies the first three terms of the Assumption.

In summary \(\mathrm{poly}(H)\) satisfies the Assumption. \(\square \)

Remark 2

For \(f_{\lambda }\in \mathrm{poly}(H),\lambda \in E(D)\) where \(\mathrm{poly}(H)\) is the result gotten in the algorithm above, we have the conclusion that \(\mathrm{LC}_n(f_{\lambda })=(X_{n}-c)^{\mathrm{proj}(\lambda )}\).

6.3 General Case of the \(n\)-variable \((n>1)\) Problem

P-General case:

Given a set of points with multiplicity structures in \(n\) \((n>1)\) dimensional space \(H=\{\langle p_{1},D_{1}\rangle ,\ldots ,\langle p_{t},D_{t}\rangle \}\) or in matrix form \(\langle \fancyscript{P}=(p_{ij})_{m\times n},\fancyscript{D}=\) \((d_{ij})_{m\times n}\rangle \), we are going to get \(\mathrm{poly}(H)\).

- Step 1:

-

Write \(H\) as \(H=H_{1}\bigcup H_{2}\bigcup \cdots \bigcup H_{s}\) where \(H_{i}~(1\le i\le s)\) is a \(\pi \)-fibre (\(\pi :H\mapsto k\) such that \(\langle p=(p_1,\ldots ,p_n),D\rangle \in H\) is mapped to \(p_n\in k\)) i.e. the points of \(H_{i}\) have the same \(X_{n}\) coordinate \(c_{i}\), \(i=1,\ldots ,s\), and \(c_{i}\ne c_{j},\forall i,j=1,\ldots ,s,i\ne j.\)

- Step 2:

-

According to the P-Special case, we have \(D^{\prime }_{i}=D(H_i), G_{i}=\mathrm{poly}(H_{i})\). Write \(H_{i}\) as \(\langle \fancyscript{P}_{i},\fancyscript{D}_{i}\rangle \), and define \(w_{i}\) as the maximum value of the elements in the last column of \(\fancyscript{D}_{i}\).

- Step 3:

-

\(D:=D^{\prime }_{1}, G:=G_{1},i:=2\).

- Step 4:

-

If \(i>s\), go to Step 5. Else

- Step 4.1:

-

\(D:=D+D^{\prime }_{i};\) \(\hat{G}:=\varnothing \). View \(E(D)\) as a monomial set \(MS:=E(D)\).

- Step 4.2:

-

If \(\sharp MS= 0\), go to Step 4.7, else select the minimal element of \(MS\) under lexicographic order, denoted by \(LT\). \( MS:=MS\setminus \{LT\}\).

- Step 4.3:

-

$$f_{1}:=\mathrm{CP}(LT,D,G),f_{2}:=\mathrm{CP}(LT,D_{i}^{\prime },G_{i}).$$

\(v_{\wp }:=\mathrm{proj}(g_{\wp })\), where \(g_{\wp }:=\mathrm{CLT}(LT,D_{\wp }^{\prime })\), \(\wp =1,\ldots ,i\).

- Step 4.4:

-

$$q_{1}:=f_{1}\cdot (X_{n}-c_{i})^{w_{i}+1};~~q_{2}:=f_{2}\cdot \prod _{\wp =1}^{i-1}(X_{n}-c_{\wp })^{w_{\wp }+1}.$$$$pp1:=(X_{n}-c_{i})^{w_{i}+1-v_{i}};~~pp2:=\prod _{\wp =1}^{i-1}(X_{n}-c_{\wp })^{w_{\wp }+1-v_{\wp }}.$$

- Step 4.5:

-

Use Extended Euclidean Algorithm to compute \(r_{1}\) and \(r_{2}\) s.t. \(r_{1}\cdot pp_{1} +r_{2}\cdot pp_{2}=1\).

- Step 4.6:

-

\(f:=r_{1}\cdot q_{1}+r_{2}\cdot q_{2}\). Reduce \(f\) with the elements in \(\hat{G}\) to get \(f^{\prime }\); \(\hat{G}:=\hat{G}\bigcup \{f^{\prime }\}.\) Go to Step 4.2.

- Step 4.7:

-

\(G:=\hat{G}.\) \(i:=i+1.\) Go to Step 4.

- Step 5:

-

\(\mathrm{poly}(H):=G\).

Theorem 3

The \({poly}(H)\) obtained in P-General case satisfies the Assumption.

Proof

It is easy to know \(v_\wp \le w_\wp +1\) according to their definitions, so the polynomials \(pp1\) and \(pp2\) in Step 4.4 do make sense. And to prove Theorem 3, we need only to prove the situation that \(s\ge 2\) in Step 1.

For \(i=2\), \(D=D_{1}^{\prime }+D_{2}^{\prime }, ~\forall ed\in E(D)\), \(v:=\mathrm{proj}(ed)\) and \(X_0:=\frac{X^{ed}}{X_n^{v}}\). According to Sect. 4, we have \(v=v_{1}+v_{2}\). Based on the Remarks 1 and 2, \(f_{1}\) and \(f_{2}\) can be written as polynomials of \(k(X_{n})[X_{1},\ldots ,X_{n-1}]:\) \(f_{1}=X_{0}\cdot (X_{n}-c_{1})^{v_{1}}+\textit{the rest}\) and \(f_{2}=X_{0}\cdot (X_{n}-c_{2})^{v_{2}}+\textit{the rest}\) and none of the monomials in \(\textit{the rest}\) is greater than or equal to \(X_{0}.\) Because \(f_{1}\) and \((X_{n}-c_{1})^{w_{1}+1}\) vanish on \(H_{1}\), \(f_{2}\) and \((X_{n}-c_{2})^{w_{2}+1}\) vanish on \(H_{2}\), we know that \(q_{1}=f_{1}\cdot (X_{n}-c_{2})^{w_{2}+1}\) and \(q_{2}=f_{2}\cdot (X_{n}-c_{1})^{w_{1}+1}\) both vanish on \(H_{1}\bigcup H_{2}\). Then \(f\) vanishes on \(H_{1}\bigcup H_{2}\) where

None monomial in \(\textit{the rest}\) is greater than or equal to \(X_{0}\) , so the leading term of \(f\) is obviously \(X_{0}\cdot X_{n}^{v}\) which is equal to \(ed\). Naturally \(\mathrm{LC}_n(f)=\prod _{j=1}^{i}(X_{n}-c_{j})^{v_{j}}\) for \(i=2\).

We assert that for any \(i\), the polynomial \(f\) in [step 4.6] satisfies that \(\mathrm{LC}_n(f) =\prod _{j=1}^{i}(X_{n}-c_{j})^{v_{j}}\).

When \(i>2\), assume the assertion above holds for \(i-1\). \(\forall ~ ed\in E(D)\), \(v:=\mathrm{proj}(ed)\) and \(X_0:=\frac{X^{ed}}{X_n^{v}}\). According to Sect. 4, we have \(v=v_{1}+\cdots +v_{i}\). Based on the assertion for \(i-1\), Remarks 1 and 2, \(f_{1}\) and \(f_{2}\) can be written as polynomials of \(k(X_{n})[X_{1},\ldots ,X_{n-1}]:\)

and none of the monomials in \(\textit{the rest}\) is greater than or equal to \(X_{0}\). Because \(f_{1}\) and \(\prod _{j=1}^{i-1}(X_{n}-c_{j})^{w_{j}+1}\) vanish on \(\bigcup _{j=1}^{i-1}H_{j}\), \(f_{2}\) and \((X_{n}-c_{i})^{w_{i}+1}\) vanish on \(H_{i}\), we know that \(q_{1}=f_{1}\cdot (X_{n}-c_{i})^{w_{i}+1}\) and \(q_{2}=f_{2}\cdot \prod _{j=1}^{i-1}(X_{n}-c_{j})^{w_{j}+1}\) both vanish on \(\bigcup _{j=1}^{i} H_{j}\). Then \(f\) vanishes on \(\bigcup _{j=1}^{i} H_{j}\) where

None monomial in \(\textit{the rest}\) is greater than or equal to \(X_{0}\) and the leading term of \(f\) is obviously \(X_{0}\cdot X_{n}^{v}\) which is equal to \(ed\). Hence the assertion holds for arbitrary \(i\).

Therefore we have proved that for any element \(ed\in E(D)\), \(f_{ed}:=f\) vanishes on \(H\) and the leading term is \(ed\). In the algorithm, we compute \(f_{ed}\) in turn according to the lexicographic order of the elements of \(E(D)\). Once we get a polynomial, we use the polynomials obtained previously to reduce it (refer to Step 4.6). Now to prove the lower terms of the polynomial are all in \(D\) after such a reduction operation.

Let \(D\) be a lower set, \(a\) be a monomial, define \(L(a,D)=\{b\in \mathbb {N}_{0}^{n};b\prec a,b\in D\}\). Given any \(d\notin D\), there exist only two situations: \(d\in E(D)\) or \(d\notin E(D)\) but \(\exists d^{\prime }\in E(D),~ s.t.~d^{\prime }\) is a factor of \(d\). Of course \(d^{\prime }\prec d\).

Consider the sequence \(\mathfrak {R}=\{T_1,T_2,T_3,\ldots \}\) of all the monomials with the elements of \(D\) discarded and all the elements are arranged according to the lexicographic order, use induction on it to prove that for every element \(T_t\) \((t>0)\) we can construct a vanishing polynomial with the leading term \(T_t\) and all the lower terms are in \(D\), i.e. \(T_t\) can be represented as the linear combination of the elements of \(L(T_t,D)\).

The very first vanishing polynomial we got in the algorithm is a univariate polynomial in \(X_{n}\) whose leading term is exactly \(T_1\). It is obvious that the lower terms are in \(D\), i.e. \(T_1\) can be represented as the combination of the elements of \(L(T_1,D)\).

Assume that \(T_{m-1}\) \((m\ge 2)\) can be written as the combination of the elements of \(L(T_{m-1},D)\), now to prove it is true for \(T_m\).

If \(T_m\in E(D)\), the algorithm provides us a vanishing polynomial whose leading term is \(T_m\), i.e. \(T_m\) can be represented as the combination of the terms which are all smaller than \(T_m\). According to the induction assumption, for any lower term \(T\notin D\) of the polynomial, \(T\) can be represented as the linear combination of the elements of \(L(T,D)\), then \(T_m\) can be represented as the linear combination of the elements of \(L(T_m,D).\)

If \(T_m\notin E(D)\), there exists \(d^{\prime }\in E(D)\) s.t. \(T_m=T_m^{\prime }\cdot d^{\prime }\). Since \(d^{\prime }\prec T_m\), according to the assumption, we can substitute \(d^{\prime }\) with the linear combination of the elements of \(L(d^{\prime },D)\). Since all the elements in \(L(d^{\prime },D)\) are smaller than \(d^{\prime }\), then \(T_m\) can be represented as the combination of elements which are all smaller than \(T_m\). Then for the same reason described in the last paragraph, \(T_m\) can be represented as the linear combination of the elements of \(L(T_m,D).\)

Therefore for every element \(T_t\) \((t>0)\) we can construct a vanishing polynomial with the leading term \(T_t\) and all the lower terms are in \(D\). Particularly for any \(ed\in E(D)\), all the lower terms of the polynomial \(f_{ed}\) we got in the algorithm after the reduction operation are in \(D\). \(\square \)

Remark 3

According to the proof of Theorem 3, \(f\) and \(f^{\prime }\) in Step 4.6 for arbitrary \(i\) satisfy that \(\mathrm{LC}_n(f)=\mathrm{LC}_n(f^{\prime })=\prod _{j=1}^{i}(X_{n}-c_{j})^{v_{j}}\).

Theorem 4

Given a set of points \(H\) with multiplicity structures, \({poly}(H)\) is the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis of the vanishing ideal \(I(H)\) and \(D(H)\) is the quotient basis under lexicographic order.

Proof

Let \(m\) be the number of functionals defined by \(H\) and then \(m=\mathrm{dim}(k[X]/I(H))\). Denote by \(J\) the ideal generated by \(\mathrm{poly}(H)\). According to the Assumption, \(\mathrm{poly}(H) \subseteq I(H)\). So \(\mathrm{dim}(k[X]/I(H))\le \mathrm{dim}(k[X]/J)\). Let \(C\) be the set of the leading terms of polynomials in \(J\) under lexicographic order, then \(C\supseteq \bigcup _{\beta \in E(D(H))}(\beta +\mathbb {N}_{0}^{n})\) where the latter union is equal to \(\mathbb {N}_{0}^{n}\backslash D(H)\). Then we can get \(C^{\prime }=\mathbb {N}_{0}^{n}\backslash C\subseteq D(H)\). Because \(k[X]/J\) is isomorphic as a \(k\)-vector space to the \(k\)-span of \(C^{\prime }\), here \(C^{\prime }\) is viewed as a monomial set. So \(\mathrm{dim}(k[X]/J)\le \sharp D(H)=m\). Hence we have

Therefore \(J=I(H)\), where \(J=\langle \mathrm{poly}(H)\rangle \). Hence it is easy to know that \(\mathrm{poly}(H)\) is exactly the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis of the vanishing ideal under lexicographic order, and \(D(H)\) is the quotient basis. \(\square \)

Based on Remark 3 and Theorem 4, we can naturally get the following lemma.

Lemma 1

Assume \(G\) is the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis of some zero dimensional \(n\)-variable polynomial ideal under lexicographic order with \(X_{1}\succ X_{2}\succ \cdots \succ X_{n}\). Define \(p_{0}(G)\) as the univariate polynomial in \(X_{n}\) of \(G\). View \(g\in G\) as polynomial of \(k(X_{n})[X_{1},\ldots ,X_{n-1}]\) and define \({LC}_n(g)\) to be the leading coefficient of \(g\) which is a univariate polynomial in \(X_{n}\) and we have the conclusion that \({LC}_n(g)\) is always a factor of \(p_{0}(G)\).

Proof

In fact for any given zero dimensional \(n\)-variable polynomial ideal, its reduced Gr\({\ddot{\mathrm{o}}}\)bner basis \(G\) can be constructed from its zeros in the way our algorithm provides. Because the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis under lexicographic order is unique, Remark 3 holds for all the elements of \(G\) i.e. \(\forall g\in G\), \(\mathrm{LC}_n(g)=\prod _{j=1}^{s}(X_{n}-c_{j})^{v_{j}}\) and particularly \(p_{0}(G)=\prod _{j=1}^{s}(X_{n}-c_{j})^{w_{j}+1}\) (refer the algorithm of P-General case for the symbols \(c_j,v_j,w_j\)). Because \(v_{j}\le w_{j}+1\), \(\mathrm{LC}_n(g)\) is a factor of \(p_{0}(G)\). \(\square \)

7 Intersection of Ideals

Based on Lemma 1 and the algorithm of P-General case in Sect. 6, we present a new algorithm named Intersection to compute the intersection of two ideals \(I_1\) and \(I_2\) satisfying that the greatest common divisor of \(p_{0}(G_{1})\) and \(p_{0}(G_{2})\) equals 1 where \(G_1\) and \(G_2\) are respectively the reduced Gr\({\ddot{\mathrm{o}}}\)bner bases of \(I_1\) and \(I_2\) under the lexicographic order i.e. satisfying that the zeros of \(I_1\) and that of \(I_2\) does not share even one same \(X_n\) coordinate.

Denote by \(Q(G)\) the quotient basis where \(G\) is the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis. The following algorithm CPI (short for \(\textit{Computing the Polynomial for Intersection}\)) is a sub-algorithm called in algorithm Intersection.

Algorithm CPI: \(G\) is a reduced Gr\({\ddot{\mathrm{o}}}\)bner basis, for any given monomial \(LT\) which is not in \(Q(G)\), we get a polynomial \(p\) in \(\langle G\rangle \) whose leading term is a factor of \(LT\): the \(X_{1},\ldots ,X_{n-1}\) components of the leading term are the same with that of \(LT\) and the \(X_{n}\) component has the lowest degree. Denoted by \(p:=\mathrm{CPI}(LT,G).\)

- Step 1:

-

\(G^{\prime }:=\{g\in G|\) the leading monomial of \(g\) is a factor of \(LT\) \(\}\).

- Step 2:

-

\(G^{\prime \prime }:=\{g\in G^{\prime }| \) the leading monomial of \(g\) has the smallest degree of \(X_n\) for that of all the elements in \(G^{\prime }\}\).

- Step 3:

-

Select one element of \(G^{\prime \prime }\) and multiply it by a monomial of \(X_{1},\ldots ,X_{n-1}\) to get \(p\) whose leading monomial is \(LT\).

Algorithm Intersection: \(G_{1}\) and \(G_{2}\) are the reduced Gr\({\ddot{\mathrm{o}}}\)bner bases of two different ideals satisfying that \(\mathrm{GCD}(p_{0}(G_{1}),p_{0}(G_{2}))=1\). Return the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis of the intersection of these two ideals, denoted by \(G:=\mathrm{Intersection}(G_{1},G_{2})\).

- Step 1:

-

\(D:=Q(G_{1})+Q(G_{2})\). View \(E(D)\) as a monomial set. \(G:=\varnothing \).

- Step 2:

-

If \(E(D)=\varnothing \), the algorithm is done. Else select the minimal element of \(E(D)\), denoted by \(T\). \(E(D):=E(D)\backslash \{T\}\).

- Step 3:

-

$$ f_{1}:=\mathrm{CPI}(T,G_{1}),~f_{2}:=\mathrm{CPI}(T,G_{2}). $$$$ q_{1}:=f_{1}\cdot p_{2},~q_{2}:=f_{2}\cdot p_{1}. $$

- Step 4:

-

$$t_{1}:=\frac{p_{0}(G_2)}{\mathrm{LC}_n(f_{2})},~t_{2}:=\frac{p_{0}(G_1)}{\mathrm{LC}_n(f_{1})}.$$

- Step 5:

-

Use Extended Euclidean Algorithm to find \(r_{1},r_{2}\) s.t.

$$r_{1}\cdot t_{1}+r_{2}\cdot t_{2}=1.$$ - Step 6:

-

\(f:=q_{1}\cdot r_{1}+q_{2}\cdot r_{2}\). Reduce \(f\) with \(G\) to get \(f^{\prime }\), and \(G:=G\bigcup \{f^{\prime }\}\). Go to Step 2.

Remark 4

This algorithm is essentially the same with Step 4.1–Step 4.7 of P-General case in Sect. 6, so it is obvious that \(r_{1},r_{2}\) in Step 5 do exist and the polynomials in the algorithm are all well defined. Besides, \(D\) in Step 1 is not empty, so it is easy to know the result of this algorithm can never be empty.

Because this algorithm is essentially the same with Step 4.1–Step 4.7 of P-General case in Sect. 6, here we omit the proof. And in return, the algorithm of P-General case in Sect. 6 can be simplified according to this Intersection algorithm: we can delete the last sentence in Step 2 and replace Step 4.3 and Step 4.4 respectively by:

- Step 4.3\(^{\prime }\) :

-

$$f_{1}:=\mathrm{CP}(LT,D,G),f_{2}:=\mathrm{CP}(LT,D_{i}^{\prime },G_{i}).$$

- Step 4.4\(^{\prime }\) :

-

$$q_{1}:=f_{1}\cdot p_0(G_i);~~q_{2}:=f_{2}\cdot p_0(G).$$$$pp1:=\frac{p_0(G_i)}{\mathrm{LC}_n(f_{2})};~~pp2:=\frac{p_0(G)}{\mathrm{LC}_n(f_{1})}.$$

8 Conclusion

During the induction of the algorithm in Sect. 6, we can record the leading coefficients for later use to save the computation cost and the computation cost is mainly on the Extended Euclidean Algorithm. But it’s hard to compute how many times we need to use the Extended Euclidean Algorithm for a given problem, and the computation cost depends closely on the structures of the given set of points.

The benefit is the explicit geometric interpretation. For any given point with multiplicity structure \(\langle p, D\rangle \), we put the lower set \(D\) into the affine space with the (0, 0) element situated at \(p\) to intuitively represent it, and it can be imagined as \(\sharp D_i\) small balls in the affine space. Given a set of points with multiplicity structures in \(n\) dimensional space, in the way showed in Sect. 2, we push the balls first along \(X_1\)-axis, then along \(X_2\)-axis, and so on, at last along \(X_n\)-axis to finally get a lower set which turns out to be exactly the quotient basis under the lexicographic order with \(X_1\succ X_2\succ \cdots \succ X_n\). In the future, we will try to apply this geometric interpretation to our previous work on Birkhoff problem [14].

The geometric interpretation in this paper reveals the essential connection between the relative position of the points with multiplicity structures and the quotient basis of the vanishing ideal. It provides us a new perspective of view to look into the vanishing ideal problem and helps study the structure of the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis of zero dimensional ideal under lexicographic order. The new algorithm Intersection which computes the intersection of two ideals and Lemma 1 are the direct byproducts of our algorithm. Lemma 1 reveals important property of the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis under lexicographic order, which is necessary for a set of polynomials to be a reduced Gr\({\ddot{\mathrm{o}}}\)bner basis. Lemma 1 can also help us to solve the polynomial system. It is well-known that the Gr\({\ddot{\mathrm{o}}}\)bner basis of an ideal under lexicographic order holds good algebraic structures and hence is convenient to use for polynomial system solving [15]. Once we get the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis \(G\) of a zero dimensional ideal, to solve the polynomial system, we need first compute the roots of \(p_0(G)\). Since \(\mathrm{LC}_n(g)\) (\(g\ne p_0(G),~g\in G\)) is a factor of \(p_0(G)\), computing the roots of \(\mathrm{LC}_n(g)\) which has a smaller degree would be helpful for saving the computation cost.

Lederer [13] presented an algorithm to compute the reduced Gr\({\ddot{\mathrm{o}}}\)bner basis of the vanishing ideal over a set of points with no multiplicity structures. The author splits the problem into several small ones and combines the results of the small problems by using Lagrange interpolation method to get the final result and the idea really inspired us a lot. Because the problem considered here concerning the points with multiplicity structures, we have to consider P-Special case and P-General case, and the Lagrange interpolation method is not available any more, we use the Extended Euclidean Algorithm instead.

References

Stetter, H.J.: Numerical Polynomial Algebra. SIAM, Philadelphia (2004)

Pistone, G., Riccomagno, E., Wynn, H.P.: Algebraic Statistics: Computational Commutative Algebra in Statistics. Monographs on statistics & applied probability. Chapman & Hall/CRC, Boca Raton (2001)

Laubenbacher, R., Stigler, B.: A computational algebra approach to the reverse engineering of gene regulatory networks. J. Theor. Biol. 229, 523–537 (2004)

Guruswami, V., Sudan, M.: Improved decoding of Reed-Solomon and algebraic geometric codes. IEEE Trans. Inf. Theory 46, 1757–1767 (1999)

Koetter, R., Vardy, A.: Algebraic soft-decision decoding of Reed-Solomon codes. IEEE Trans. Inf. Theory 49, 2809–2825 (2003)

Sudan, M.: Decoding of Reed-Solomon codes beyond the error-correction bound. J. Complex. 13, 180–193 (1997)

Buchberger, B., M\({\ddot{\rm o}}\)ller, H.M.: The construction of multivariate polynomials with preassigned zeros. In: Computer Algebra, EUROCAM’82. Lecture Notes in Computer Science, vol. 144, Springer, Berlin, pp. 24–31 (1982)

Marinari, M.G., Möller, H.M., Mora, T.: Gr\(\rm \ddot{o}\)bner basis of ideals defined by functionals with an application to ideals of projective points. Appl. Algebra Eng. Commun. Comput. 4, 103–145 (1993)

Abbott, J., Bigatti, A., Kreuzer, M., Robbiano, L.: Computing ideals of points. J. Symb. Comput. 30, 341–356 (2000)

Farr, J., Gao, S.: Computing Gr\({\ddot{\rm o}}\)bner bases for vanishing ideals of finite sets of points. In: Proceedings of the Conference, ACA (2003)

Felszeghy, B., Ráth, B., Rónyai, L.: The lex game and some applications. J. Symb. Comput. 41, 663–681 (2006)

Cerlienco, L., Mureddu, M.: From algebraic sets to monomial linear bases by means of combinatorial algorithms. Discret. Math. 139, 73–87 (1995)

Lederer, M.: The vanishing ideal of a finite set of closed points in affine space. J. Pure Appl. Algebra 212, 1116–1133 (2008)

Lei, N., Chai, J., Xia, P., Li, Y.: A fast algorithm for the multivariate Birkhoff interpolation problem. J. Comp. Appl. Math. 236, 1656–1666 (2011)

Cox, D., Little, J., OShea, D.: Ideals, Varieties, and Algorithms. Springer, New York (1997)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2014 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Lei, N., Zheng, X., Ren, Y. (2014). The Vanishing Ideal of a Finite Set of Points with Multiplicity Structures. In: Feng, R., Lee, Ws., Sato, Y. (eds) Computer Mathematics. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-43799-5_21

Download citation

DOI: https://doi.org/10.1007/978-3-662-43799-5_21

Published:

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-662-43798-8

Online ISBN: 978-3-662-43799-5

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)