Abstract

This chapter presents a novel automated two-step algorithm for segmentation of the entire arterial tree in 3D contrast-enhanced Computed Tomography Angiography (CTA) datasets. In the first stage of the proposed algorithm, the main branches of the coronary arteries are extracted from the volume datasets using a generalised active contour model that utilises both local and global intensity features. The use of local regional information accommodates uneven brightness distribution across the image. The global energy term, derived from the histogram distribution of the input images, is used to deform the contour towards the desired boundaries without being trapped in local stationary points. Possible outliers, such as kissing vessel artefacts, are removed in the second stage by the proposed slice-by-slice correction algorithm. Experimental results on real clinical datasets show that our method is able to extract the major branches of the coronaries with an average distance of 0.7 voxels to the manually defined reference data. Furthermore, in the presence of kissing vessel artefacts, the outer surface of the coronary tree extracted by the proposed system is smooth and contains fewer erroneous segments than the initial segmentation.

1 Introduction

According to the most recent statistics from the World Health Organization (WHO), coronary artery disease (CAD) is one of the most prevalent causes of death in the world. Approximately 17 million people die from one or more types of CAD every year worldwide [1]. Atherosclerosis is a common cause of CAD, in which plaque builds up on the inner walls of arteries. Plaques are typically made up of fat, cholesterol, calcium and other substances found in the blood. If left untreated, the plaque hardens and narrows the arteries over a period of years. As a consequence, the flow of oxygen-rich blood to organs and other parts of the body is reduced, leading to serious problems, including myocardial or cerebral infarction, or even death. Figure 5.1 shows a comparison of a blood vessel before and after plaque build-up.

Effect of plaque build-up. a A normal artery with normal blood flow. b An artery with plaque build-up [2]

Coronary arteries are the network of blood vessels which supply blood to the myocardium. CAD occurs when the coronaries become occluded and constricted, which starves the heart of oxygen and other nutrients and can eventually cause it to stop beating. As illustrated in Fig. 5.2, the arterial tree basically consists of two main branches, namely the Left Coronary Artery (LCA) and Right Coronary Artery (RCA), which arise from the root of the aorta, near the top of the heart. In the LCA, the initial segment between the aorta and the first bifurcation is called the left main (LM) coronary. The LM typically branches into the left anterior descending (LAD) and the left circumflex (LCX) arteries. The RCA, on the other hand, normally originates from the right coronary cusp and travels to the posterior interventricular branch. In 85 % of cases, the RCA is the dominant vessel and supplies the posterior descending branch, which travels in the posterior interventricular (PIV) groove. However, the exact anatomy of the coronary arteries can differ considerably from individual to individual [2].

In order to evaluate the development of coronary artery disease, efficient screening procedures are urgently needed for early diagnosis and, ultimately, timely treatment. From the clinician’s point of view, it is essential to detect and visualise the branches of the arteries which may be associated with stenosis (narrowing of the arteries). Haemodynamic studies can then be carried out to establish the risk of a patient developing a heart attack, followed by the application of appropriate treatment based on medication or surgical procedures, such as diagnostic cardiac catheterisation and stent implantation [3, 4].

Recent advances in vascular imaging technology using multiple-slice Computed Tomography (CT) provide volumetric datasets with high spatial and temporal resolution. As a result, CT has become a frequently used and reliable imaging modality for the early diagnosis and quantification of atherosclerosis in clinical practice. However, clinical diagnosis of CAD by means of CT imaging is difficult because of the huge amount of data produced in the cardiac CT scanning process (typically, more than 300 axial CT slices are produced for a patient). Interpretation of a CT study is a labour-intensive and time-consuming task even with the assistance of semi-automatic software packages [5], as the radiologist has to track and carefully examine each branch of the arteries, segment by segment. Automated algorithms for segmentation of the coronaries in CT angiograms would therefore facilitate the clinical evaluation and enhance its accuracy and reliability.

In the following section, we provide an overview of recent developments in this field of research. Next, we present the proposed framework in Sect. 5.3. This is followed by the presentation of results demonstrating the benefits of the proposed approach. Finally, the conclusions and future developments of this research are presented.

2 State of the Art in Vessel Segmentation

Despite numerous research efforts over the past decade, segmentation of vascular structures from CT images remains a challenging topic due to the small size of vessels in medical images and the complex pathology. Since their introduction as a front propagation-based segmentation method, active contour models (also known as snakes) have received a great amount of attention from the medical image processing community [6–12]. Active contour models for image segmentation iteratively deform a contour in order to minimise a user-defined energy functional, which often depends on the shape of the contour and its position in the image. Such methods are usually implemented using level sets [13], where the boundaries of the object to be segmented are embedded as the zero level of a higher dimensional level set function. Due to their ability to deal with topological changes, such as region merging and splitting, level set-based active contour models are usually employed in segmentation of the vascular structures in medical images. Active contour-based methods can be divided into two categories according to the image-driven energy: edge-based and region-based models.

In the early edge-based models [11, 14, 15], the active contour deforms with a speed F based on the derivatives of the image, which approaches zero at high image gradients. These methods make use of local edge information to stop curve deformation, which makes them robust to region inhomogeneities. On the other hand, they are sensitive to image noise and contour initialisation due to the use of local gradients. Yang and her colleagues proposed an improved algorithm to segment coronary arteries based on a Bayesian probabilistic framework [16]. In their work, the image force is redefined using posterior probabilities, calculated from the global histogram distributions, to more accurately terminate the surfaces at the desired boundaries. Because these posterior probabilities are obtained from global statistics, they cannot handle the varying brightness and contrast changes over the image. Thus, their method is not capable of segmenting small and distal segments of the coronary arteries due to their relatively low intensity contrast. In medical images, the borders between different objects are not always clearly defined by the gradients, and thus the contour may leak into adjacent regions when the edge function is used as the stopping criterion. Nain et al. [17] incorporate a soft shape prior into the conventional active contour model. They propose the application of a shape filter to locally describe the shape of the segmented region. As illustrated in Fig. 5.3, the shape filter is defined as a ball structure of radius r centred on each point along the contour. It measures the percentage of voxels belonging to both the ball and the object (i.e. the regions inside the contour). The output of the shape filter is high when the current point belongs to a region corresponding to leakage. Conversely, lower values of the filter’s output indicate that the current point is within the vessel. The filter response then serves as the external energy of the active contour, penalising leakages during the curve evolution. However, the shape filter cannot discriminate vessel bifurcations from leakage areas, and may result in undesired gaps in the vicinity of vessel bifurcations.
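The idea behind the shape filter can be illustrated with a small 2D sketch. It is not the implementation of [17]: the function name `shape_filter`, the use of a 2D disc instead of a 3D ball, and the list-of-lists mask are all assumptions made for illustration. The function returns the fraction of pixels inside the disc that also belong to the object.

```python
def shape_filter(mask, cx, cy, radius):
    """Fraction of pixels in the disc around (cx, cy) that lie inside the object.

    mask   : 2D list of 0/1 values, 1 marking the segmented object (assumed layout)
    cx, cy : column and row of the disc centre, assumed to lie inside the image
    radius : disc radius in pixels
    """
    rows, cols = len(mask), len(mask[0])
    inside_disc = 0
    inside_both = 0
    for y in range(max(0, cy - radius), min(rows, cy + radius + 1)):
        for x in range(max(0, cx - radius), min(cols, cx + radius + 1)):
            if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                inside_disc += 1
                if mask[y][x]:
                    inside_both += 1
    return inside_both / inside_disc
```

For a thin tubular structure only a narrow band of the disc overlaps the object, so the response stays low; for a wide blob, such as a leakage region, most of the disc is covered and the response approaches one.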

Graphic demonstrating the effect of the shape filter. a The shape filter centred at x with radius R. b The output of the shape filter [17]

Region-based image segmentation methods, which utilise intensity information obtained from image regions, are more robust to image noise. In these methods, region statistics along the contour are calculated to drive the segmentation process. Under the assumption that the object and the background are approximately uniformly distributed, Chan and Vese [6] proposed an active contour model using regional statistics to segment the object of interest in two-phase images. Their work was later extended to multiple-phase images [18], where the N regions (phases) are represented by \(\log_2 N\) level set functions. However, empty regions will be produced when fewer than N regions are present in the image. To handle more complex intensity distributions, non-parametric methods have been applied to estimate regional statistics [19]. The aforementioned methods, however, are based solely on global intensity statistics and are therefore ineffective in cases where regional statistics vary spatially across the image. Localised approaches [20–22], where regional statistics are calculated in a neighbourhood of the active contour, have recently emerged to overcome this problem. Such models are more robust to local variations of the region of interest and therefore improve the overall segmentation results. However, segmentation based on local decisions alone may not be sufficient to drive the contour to stop at the desired boundaries, since the contour may be trapped in undesired local stationary points. Moreover, the selection of appropriate scales poses additional difficulties.

Another issue in the segmentation of vascular structures in coronary CT images is the so-called ‘kissing vessel artefacts’, i.e. the non-coronary vessels in close proximity to the arteries. This is a partial volume problem which is often encountered in CT angiograms [23]. It may result in artificial vessel junctions and thus distort the geometry of the vessels. To address this problem, Wong and Chung [24] proposed the tracing of vessel centrelines and segmentation of their cross-sections based on a probabilistic vessel-axis tracing framework. Their algorithm allows user interaction to produce the desired traces through the abnormal regions, which contain the kissing vessels, lesion vessels (e.g. the stenosis) and vessel junctions. The final segmentation in these regions is then derived from the axis of the cross-sections.

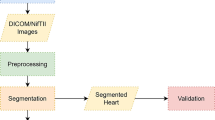

3 Proposed Framework

This section presents the proposed two-step system for segmentation of coronary arteries in CTA images by using both global and local intensity statistics. We deal with the varying image brightness characteristics by computing regional statistics locally, in the neighbourhood of the active contour. The global intensity constraint, on the other hand, is utilised to evolve the contour to the desired boundaries without being trapped in local minima. The proposed approach is implemented in a Bayesian probabilistic framework to incorporate these two homogeneity constraints. Possible outliers, such as kissing vessel artefacts, are removed in the subsequent stage by a novel slice-by-slice correction scheme, which uses multiple regions competition.

3.1 Coronary Arteries Segmentation

We commence our analysis by assuming that voxels in contrast-enhanced CTA images fall into three categories, i.e. the air in the lungs, soft tissues and blood-filled regions. We then fit a Gaussian Mixture Model (GMM) to the histogram of the input CTA volume to estimate the probability density function of each class, as shown in Fig. 5.4a. The mean and variance of each class are estimated using the Expectation–Maximization (EM) method. Using the prior anatomical knowledge that the coronaries are located on the outer surface of the heart, we neglect the class corresponding to air to obtain a bi-modal histogram (see Fig. 5.4b). The first peak (\(T_1\)) in the fitted histogram corresponds to soft tissues in the heart, which reflects the intensity distribution of the background voxels. We assume that voxels with intensity values less than \(T_1\) belong to the background, while voxels with intensity values greater than this threshold are treated as potential objects of interest (i.e. blood-filled regions). On this basis, we assign each voxel in the volumetric data a fuzzy label, which indicates the probability of the voxel belonging to the object.

In this research, we formulate the labelling function as a normalised cumulative density function of the histogram. The labelling function is normalised to lie between −1 and 0 for voxels with intensity values between 0 and \(T_1\), and between 0 and 1 for voxels with intensity values greater than \(T_1\). Thus, the function is defined as follows:
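The mapping described above can be sketched in a few lines of Python. This is a minimal illustration rather than the chapter's own definition: it assumes an empirical CDF computed directly from the voxel intensities and a linear rescaling on either side of \(T_1\), and the name `make_labelling_function` is hypothetical.

```python
from bisect import bisect_right


def make_labelling_function(intensities, t1):
    """Return L(v): a normalised CDF mapped to [-1, 0] up to t1 and [0, 1] above it.

    intensities : iterable of voxel intensities (the histogram samples)
    t1          : background peak of the fitted histogram (assumed to be given)
    """
    values = sorted(intensities)
    n = len(values)

    def cdf(v):
        # Empirical CDF: fraction of voxels with intensity <= v.
        return bisect_right(values, v) / n

    c1 = cdf(t1)
    if not 0.0 < c1 < 1.0:
        raise ValueError("t1 must lie strictly inside the intensity range")

    def label(v):
        c = cdf(v)
        if v <= t1:
            return (c - c1) / c1        # rescaled to [-1, 0]
        return (c - c1) / (1.0 - c1)    # rescaled to [0, 1]

    return label
```

With this choice the label is exactly 0 at \(T_1\), decreases towards −1 for darker voxels and increases towards 1 for the brightest voxels, so its sign separates likely background from likely blood-filled regions.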

Let \(\Omega_x\) denote a neighbourhood with a radius r centred at x on the active contour C(x). The localised image \(\Omega_x\) can be partitioned into two sub-regions by the active contour, i.e. the regions inside and outside the active contour, respectively. Hence, we define the probability of a voxel being classified as belonging to the region \(\Omega_i\) as follows:
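Assuming a Gaussian intensity model within each sub-region, which the mean and variance parameters introduced below suggest, and writing the variance as \(\sigma_i^2\), this probability takes the form:

\[
P\bigl(I(y) \mid y \in \Omega_i \cap \Omega_x\bigr) = \frac{1}{\sqrt{2\pi\sigma_i^{2}}} \exp\left( -\frac{\bigl(I(y) - \mu_i\bigr)^{2}}{2\sigma_i^{2}} \right)
\]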

where \(\left\{ {\Omega_{i} |i = 1,2} \right\}\) denote the regions inside and outside the contour, I(y) is the image intensity at y, and \(\mu_i\) and \(\sigma_i^2\) represent the mean and the variance derived from region \(\Omega_i\), respectively. Note that we use x and y as two independent spatial variables, each representing a single point in the image domain. Let C(x) denote a contour representing the boundary of the object to be segmented. For each point along the contour, given its local image \(\Omega_x\) and the labelling function L(y), the posterior probability of a voxel y being classified as belonging to the sub-region \(\Omega_i \cap \Omega_x\) can be defined as:
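By Bayes' rule, and using only the quantities named in the surrounding text, this posterior can be written as:

\[
P\bigl(y \in \Omega_i \cap \Omega_x \mid I(y), L(y)\bigr) = \frac{P\bigl(I(y), L(y) \mid y \in \Omega_i \cap \Omega_x\bigr)\, P\bigl(y \in \Omega_i \cap \Omega_x\bigr)}{P\bigl(I(y), L(y)\bigr)}
\]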

where \(P(y \in \Omega_i \cap \Omega_x)\), \(i = 1, 2\), is the prior probability of the current voxel being assigned to region \(\Omega_i\) among all the possible partitions within the local image \(\Omega_x\). This term can be ignored if equal probabilities are assumed for all partitions of the image. P(I(y), L(y)) denotes the joint probability density distribution of the grey level value I(y) and the labelling function L(y), which is independent of the segmentation of the image and can therefore be neglected. We assume that the voxel labels and the grey level intensity distribution are independent. The posterior probability for each voxel can thus be computed as:
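With the prior and the joint density dropped and the independence assumption applied, the posterior reduces, up to a constant factor, to the product of the intensity and label terms:

\[
P\bigl(y \in \Omega_i \cap \Omega_x \mid I(y), L(y)\bigr) \propto P\bigl(I(y) \mid y \in \Omega_i \cap \Omega_x\bigr)\, P\bigl(L(y) \mid y \in \Omega_i \cap \Omega_x\bigr)
\]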

The probability \(P(I(y) \mid y \in \Omega_i \cap \Omega_x)\) has already been defined in Eq. 5.2. In order to compute the posterior probabilities in Eq. 5.4, the prior probability of the labelling function must also be known. In this research, we model the prior probability distribution of the labels as:

where:

represents the weighting kernel, which is a decaying function of the distance between x and y. v 2 is the overall weight that determines the influence of the labels on the segmentation. R(x) is a normalised Boolean function indicating whether the current voxel x is located inside the contour C(x) within the local image Ω x . According to Eq. 5.5, when a voxel, located at position y, is classified as belonging to the object, i.e. R(x) = 1, then a point x in its vicinity has a high probability of being marked as the object (i.e. L(y) → 1) and is less likely to be considered as the background (L(y) → −1).

Maximising the posterior distribution in Eq. 5.4 is equivalent to minimising its negative logarithm, and thus the energy function can be presented as:

where \(M_1(\phi(y)) = H(\phi)\) and \(M_2(\phi(y)) = 1 - H(\phi)\), and H′(·) denotes the derivative of the Heaviside function:
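In level set implementations the Heaviside function is usually replaced by a smooth approximation. One common choice, given here as an assumption since several regularisations are in use and \(\epsilon\) is a free smoothing parameter, is:

\[
H_{\epsilon}(z) = \frac{1}{2}\left( 1 + \frac{2}{\pi} \arctan\frac{z}{\epsilon} \right), \qquad H'_{\epsilon}(z) = \frac{1}{\pi}\, \frac{\epsilon}{\epsilon^{2} + z^{2}}
\]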

and the associated Euler–Lagrange equation can then be obtained as:

where p 1 and p 2 denote the probability density distribution of the object and background, respectively.

3.2 Slice-by-Slice Correction

The segmentation results obtained from the first stage are rather accurate. However, due to the complexity of medical images and associated artefacts, they may contain outliers, such as kissing non-arterial vessels and other fragments (e.g. as shown in Fig. 5.5a). In order to further improve segmentation performance, we propose a novel slice-by-slice correction scheme, which is applied to the images resulting from the first stage of the segmentation. We assume that the coronary arteries can be modelled as a tree structure originating from the ostium (located in the ascending aorta at the top of the heart), and that their transaxial cross-sectional segments, taken from the top to the bottom, can only split but not merge over the frame sequence (see Fig. 5.6).

Segmentation results obtained from the first stage of the proposed algorithm. a 3D surface reconstruction of the coronary arteries with a touching non-arterial vessel (shown by the arrow). b The cross-sectional image taken from the volume. The close-up image shows the intensity characteristics of the vessel in an axial image. The non-arterial vessel (arrowed) appears darker than the artery

It can be observed from the zoomed image at the right of Fig. 5.5b that the non-arterial vessel appears darker than the coronary, and therefore, it can be distinguished from the coronary by its intensity difference. However, two-phase level set methods (i.e. using one level set function for segmentation) can only separate two homogeneous regions. Images with more than one object region cannot be correctly segmented using such models. In this research, we employ a level set method based on multiple-region competition to correct the resulting image slice-by-slice. In the proposed method, each homogeneous region (object) is represented by a level set function, and thus, other non-arterial structures can be identified and removed from the segmentation. As described by Brox and Weickert [25], for a fixed number of objects to be segmented, the evolution equations of multiple-region level sets for image segmentation can be defined as:
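The evolution equation of [25] is recalled here from that work; the shorthand \(e_k\) and the curvature weight \(\nu\) follow its notation and are not defined elsewhere in this chapter, so the exact form should be checked against the reference:

\[
\frac{\partial \phi_i}{\partial t} = H'(\phi_i) \left( e_i - \max_{\substack{j \neq i \\ H'(\phi_j) > 0}} \bigl( e_j,\; e_i - 1 \bigr) \right), \qquad e_k = \log p_k + \frac{\nu}{2}\, \operatorname{div}\!\left( \frac{\nabla \phi_k}{\lvert \nabla \phi_k \rvert} \right)
\]

When no competing region is active near the zero level of \(\phi_i\), the maximum reduces to \(e_i - 1\) and the bracket equals one, which gives the constant outward expansion discussed next.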

Since the term \(\log(p_1)\) is always negative, the evolution of a contour based on this force alone will eventually lead to the level set becoming negative everywhere, and thus shrinking to a single point. The maximum operator ensures that the contour expands outwards with a constant speed when there is no competition around the zero level set of the current embedding function. If there are multiple regions within a narrow band of the current zero level of the embedding function, the contour will evolve according to the maximal force in the interface. However, this scheme would quickly expand the current contour if only one curve is present, thus moving the contour away from its initial location and capturing undesired boundaries. By making use of the fact that the segmentation obtained in the first stage is almost accurate, we allow the curve to evolve according to the multiple-phase energy when there is no competition nearby, while, when multiple contours are present in an interface, the points in the interface move together subject to the strongest force across all the regions. The steps of the slice-by-slice correction algorithm are as follows:

1. Determine the first axial image which contains coronary arteries using the segmented images obtained in the first stage.
2. Assign each connected object to a level set function in the starting slice.
3. Evolve the curves according to the energy function defined in Eq. 5.9. To speed up convergence, the segmentation mask obtained from this slice is used as an initial estimate in the following slice.
4. Detect non-arterial objects. Compare the initialisation against the segmentation mask obtained from the first stage. If there is a connected component which does not touch any known component in the initialisation mask, the object is considered a non-arterial component, and a level set is assigned to it.
5. If the area of a level set is less than one pixel, remove that level set and decrease the number of level set functions.
6. If the current frame is not the last frame containing coronaries, go to step 3.
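The detection in step 4 can be sketched as a connected-component comparison between two binary masks. This is a minimal illustration under stated assumptions: masks are 2D lists of 0/1 values, 4-connectivity is used, "touching" is interpreted as pixel overlap with the initialisation mask, and the function names are hypothetical.

```python
from collections import deque


def label_components(mask):
    """Label the 4-connected components of a binary 2D mask (breadth-first fill)."""
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and labels[r][c] == 0:
                count += 1
                labels[r][c] = count
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and labels[ny][nx] == 0):
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count


def find_new_components(stage_one_mask, init_mask):
    """Return (labels, new_ids): components of the stage-one mask that do not
    overlap the initialisation mask propagated from the previous slice."""
    labels, count = label_components(stage_one_mask)
    touching = set()
    for r, row in enumerate(labels):
        for c, lab in enumerate(row):
            if lab and init_mask[r][c]:
                touching.add(lab)
    new_ids = [lab for lab in range(1, count + 1) if lab not in touching]
    return labels, new_ids
```

Each identifier in `new_ids` would then receive its own level set function, so that the corresponding structure competes with the arterial regions in the following slices instead of being merged into them.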

4 Experiments and Results

The proposed method was tested on real clinical datasets. Twelve coronary CT volumes were acquired from St Thomas’ and Guy’s Hospitals, London, UK. Two of them were obtained with a 16-slice CT scanner (Brilliance, Philips), and the remaining volumes were acquired with a Philips ICT-256 workstation. The mean size of the images is 512 × 512 × 286 with an average in-plane resolution of 0.40 mm × 0.40 mm, and the mean voxel size in the z-axis is 0.42 mm. For each CTA image, four major coronary arterial branches, namely the Right Coronary Artery (RCA), Left Anterior Descending Artery (LAD), Left Circumflex artery (LCX) and one large side branch of the coronaries, were evaluated. Four metrics were used to quantify the performance of the resulting segmentation, specifically:

where the ground truth \(N_R\) is a binary image with voxels labelled one for the object and zero otherwise, and \(N_B\) indicates the voxels which are segmented as the object by the aforementioned algorithms. TP, FN and FP denote the true positive, false negative and false positive rates, respectively. OM represents the overlapping metric defined in [26], which is close to 1 when the segmentation is well matched to the reference ground truth and approaches zero when the results have no similarity to the reference.
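As an illustration of how such metrics are typically computed, the sketch below treats both segmentations as sets of voxel coordinates. The definitions are assumptions rather than the chapter's Eq. 5.10: all three rates are normalised by the size of the reference (which would be consistent with the FP rates above 100 % reported later), and OM is taken to be a Dice-type overlap. The function name `overlap_metrics` is hypothetical.

```python
def overlap_metrics(reference, segmented):
    """Return (TP, FN, FP, OM) for two collections of voxel coordinates.

    Assumed definitions (not taken from the chapter):
      TP = |R ∩ B| / |R|,  FN = |R \\ B| / |R|,  FP = |B \\ R| / |R|,
      OM = 2 |R ∩ B| / (|R| + |B|)
    where R is the reference (ground truth) and B the segmentation result.
    """
    ref = set(reference)
    seg = set(segmented)
    if not ref:
        raise ValueError("reference segmentation must not be empty")
    common = len(ref & seg)
    tp = common / len(ref)
    fn = (len(ref) - common) / len(ref)
    fp = (len(seg) - common) / len(ref)
    om = 2 * common / (len(ref) + len(seg))
    return tp, fn, fp, om
```

Under these definitions TP and FN always sum to one, while FP is unbounded, so a segmentation that is several times larger than the reference yields an FP rate well above 100 %.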

The ground truth data were obtained through manual delineation with the assistance of interactive software developed in our centre. To construct the ground truth data, the CT volume was first resampled as a sequence of successive cross-sections perpendicular to the course of the arteries, as shown in Fig. 5.7. Then, the luminal area was manually annotated by trained biomedical engineering research students (see Fig. 5.8a). The software fits a circle to the manually delineated curve by solving the associated least squares problem (see Fig. 5.8b), and records the coordinates of the centre and the associated radius. In order to ensure the correct generation of the ground truth data, the luminal area of the artery is required to be specified by the observer at least every 3 mm. As a result, the ground truth data for each major branch of the arterial tree contain on average 48 central axis points and the associated radii, which take approximately half an hour to annotate. Next, the centreline points were uniformly resampled at a spacing of 0.05 mm (roughly 0.1 voxel), and the associated radii were determined via linear interpolation. To construct a closed surface of the ground truth data, we first generate the boundary points of the artery based on the centreline and radius information, as depicted in Fig. 5.9a. Then, the outer surface of the artery is reconstructed using the ball pivoting algorithm, as illustrated in Fig. 5.9b.
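The circle-fitting step can be illustrated with an algebraic least squares fit. The chapter does not state which formulation the software uses, so the sketch below is an assumption: it minimises the algebraic residual of \(x^2 + y^2 + Dx + Ey + F = 0\) over the annotated points and recovers the centre and radius from D, E and F. The function names are hypothetical.

```python
import math


def solve3(a, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point set (e.g. collinear points)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for k in range(col, 4):
                m[r][k] -= factor * m[col][k]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        s = m[i][3] - sum(m[i][k] * x[k] for k in range(i + 1, 3))
        x[i] = s / m[i][i]
    return x


def fit_circle(points):
    """Least squares circle through 2D points; returns (cx, cy, radius)."""
    # Normal equations A^T A p = A^T b with rows A = [x, y, 1], b = -(x^2 + y^2).
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for x, y in points:
        row = (x, y, 1.0)
        rhs = -(x * x + y * y)
        for i in range(3):
            atb[i] += row[i] * rhs
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    d, e, f = solve3(ata, atb)
    cx, cy = -d / 2.0, -e / 2.0
    return cx, cy, math.sqrt(cx * cx + cy * cy - f)
```

The algebraic formulation is linear in its unknowns, which makes it fast enough for interactive use; a geometric fit that minimises point-to-circle distances would need an iterative solver.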

Illustration of the resampled cross-sectional images of the CTA data for the construction of the ground truth data. a The segmented coronary artery is represented as 3D surface (semi-transparent structure), b Cross-sectional images of the artery, obtained by slicing the volume data using the planes perpendicular to the orientation of the vessel

Determination of the ground truth data based on the successive cross-sections. a An example of the annotation of the observer (shown in blue); the red circles are the control points determined by the observer (the square denotes the starting control point). b The resulting ground truth data determined by the software, in red. The red dot indicates the centre and the circle represents the radius of the artery at this cross-section

Construction of the ground truth surface from manually delineated vessel boundaries. a The boundary points of the left coronary artery constructed using the centreline and the corresponding radius information obtained via manual annotation, b The outer surface of the artery reconstructed based on the boundary points shown in (a)

Since the manual segmentation procedure is very time consuming, only four major branches, i.e. right coronary artery (RCA), left anterior descending artery (LAD), left circumflex artery (LCX) and one large side branch of the coronaries, were chosen for evaluation. In addition to the metrics defined in Eq. 5.10, the Hausdorff distance was also applied to measure the difference between the segmented vessel surface and the manually delineated ground truth data. The Hausdorff distance is defined as:
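In its standard symmetric form, which is assumed here because the chapter's own equation is not reproduced, the distance reads:

\[
d_H(X, Y) = \max\left\{ \sup_{x \in X} \inf_{y \in Y} d(x, y),\; \sup_{y \in Y} \inf_{x \in X} d(x, y) \right\}
\]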

where X and Y are the vertex sets of the mesh surfaces of the arteries corresponding to the segmentation results and the ground truth, respectively, and d(x, y) measures the Euclidean distance between points x ∈ X and y ∈ Y. The mesh surface of the arteries was obtained by extracting the isosurface of the binary volume obtained from the segmentation or manual delineation, using the marching cubes algorithm.

To demonstrate the benefit of incorporating local intensity features into the active contour functional, we compare the performance of the proposed method with the method of Yang et al. [16], which utilises global intensity information alone, in the extraction of the arteries in clinical images. In Figs. 5.10 and 5.11 and Tables 5.1, 5.2 and 5.3, we present the comparison of the resulting segmentation obtained using the proposed technique and Yang et al.’s method [16], with respect to the ground truth data. The initial surface for the active contour models was obtained through the application of a Hessian-based vessel enhancement filter [27]. The tuning parameters of both techniques were empirically determined from a training set, which consisted of three CT studies randomly selected from the available datasets. Specifically, for the proposed approach, we set u = 0.2 and v = 0.1, and the radius of the localised kernel was set to 7 voxels. The proposed approach was implemented in MATLAB (R2010b) on a standard specification PC (Dell Precision T3500, Intel(R) Xeon(R) CPU at 2.67 GHz), and the average execution time was found to be 80 s for extraction of the entire coronary trees. Yang et al.’s algorithm, on the other hand, requires roughly 45 s to carry out the same process.

Comparison of the resulting segmentation for datasets #3, #5 and #11 obtained using the proposed model (a), (c) and (e) and Yang et al. [16] technique (b), (d) and (f), with respect to the ground truth data. The semi-transparent surface denotes the outer surface of the ground truth data, and the resulting segmentation is depicted in red

2D transaxial images illustrating the segmentation results. a and b depict the resulting segmentation on 2D cross-sectional images randomly taken from dataset #3, c and d depict the resulting segmentation on 2D axial images from dataset #5, and e and f show examples of the segmentation results on dataset #11. The red contour represents the manually delineated ground truth, while the segmentations obtained from the proposed method and the Yang et al. [16] method are shown as blue and black contours, respectively. Note that the cross-sectional images were up-sampled by a factor of five using linear interpolation to increase the resolution

As shown in Table 5.1, the mean TP rate and OM metric for the proposed method were found to be 91.1 % and 0.776, respectively, which indicates that the proposed method is able to correctly extract the major branches of the coronary arteries (see Fig. 5.10a, c, e). Meanwhile, the high FP rate (39.2 % on average) means that the proposed method over-segments the arteries relative to the ground truth, as illustrated in Fig. 5.11, where the segmentation results are shown on 2D axial images as contours. In these axial images, the red contours represent the ground truth boundary, and the blue and black curves represent the segmentation obtained using the proposed method and the Yang et al. [16] algorithm, respectively. Since the ground truth data were modelled as tubes with circular cross-sections, the ground truth tends to under-estimate the true vessel surfaces, which contributes to the measured FP rate. In terms of the voxel-wise measurements, however, the average value of the Hausdorff distance metric was found to be 0.73 voxels, which implies that the proposed method is capable of extracting the luminal surfaces of the arteries with sub-voxel accuracy.

In terms of the FP rate, the Yang et al. [16] method outperforms the proposed approach, with an average value of 16.9 %. However, the TP and OM metrics of their segmentation results are significantly lower than those of the proposed technique, with average values of 53.8 % and 0.556, respectively. In addition, the statistics presented in Tables 5.2 and 5.3 show that both the TP rate and the OM metric vary significantly, with the TP rate ranging from 23.4 to 89.9 %, while the minimum and maximum values of the OM metric were found to be 0.318 and 0.717, respectively. These observations imply that the Yang et al. [16] model under-segments the coronary arteries and is only able to extract partial branches of the arterial tree, as illustrated in Figs. 5.10b, d, f. The reason for this is that the technique, relying solely on global intensity statistics, is sensitive to image contrast and brightness changes. The method is able to correctly extract the arteries when the intensities are evenly distributed along the vessel. However, uneven intensity distribution is commonly encountered in coronary CTA, because of the attenuation of the contrast agent concentration and acquisition noise. In this case, the Yang et al. [16] approach can only extract the proximal segments of the arteries, since distal segments have relatively lower intensity values and lack image contrast. We also found that the TP and OM rates of the proposed method tend to decrease when approaching the distal and small segments of the arteries. However, clinically significant coronary lesions are usually identified in the main and proximal branches of the arteries, which can be well defined by the proposed method. We can therefore conclude that our technique is able to delineate the vessel boundaries in clinically important coronary segments with a level of variability similar to that obtained through manual segmentation.

Two CTA studies were affected by the presence of kissing vessels (i.e. datasets #7 and #11). The slice-by-slice correction scheme was subsequently applied after the first step of the segmentation. Figure 5.12 illustrates the 3D surface reconstruction image before and after applying the slice-by-slice correction algorithm. Figure 5.13a shows the first slice, which contains the cross-sectional segments of the coronary arteries. The false positives, i.e. the kissing vessel artefacts, were identified and then tracked in Fig. 5.13b–e. The boundaries of the coronary arteries are shown in red, and the green curve represents the kissing vessel structures. In Fig. 5.13f, we illustrate the initial segmentation of the axial image shown in Fig. 5.13e prior to the correction.

Transaxial slices illustrating the slice-by-slice correction algorithm. a The first slice containing the artery (delineated in red), b The artery is tracked through slices, c The binary image obtained from the first stage segmentation; this is the first slice that contains the kissing vessel (arrowed), d and e The kissing vessel is identified and tracked over slices (depicted in green contours), f The original segmentation in the same transaxial slice as shown in (e)

Instead of using the entire volume to quantify the performance of the slice-by-slice correction, we conducted the assessment on a 2D axial image basis. The resulting segmentation with and without the slice-by-slice correction is illustrated in Fig. 5.14, where the boundaries of the artery before and after correction are depicted in black and blue, respectively, and the manual segmentation is shown in red. The four metrics, defined in Eq. 5.10, were used to quantify the performance, and the results are shown in Table 5.4. It can be observed from the table that the FP rate, which is primarily caused by the kissing vessels, is dramatically reduced after the application of the slice-by-slice correction algorithm (from 294 to 22.8 % for arterial segments containing kissing vessels, e.g. the vessel segment shown within the circle in Fig. 5.12a).
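An FP rate above 100 % may look surprising at first, so a toy calculation can help. The Python sketch below is illustrative only: Eq. 5.10 is not reproduced in this section, so the sketch assumes that the TP, FN and FP rates are all normalised by the number of reference (manually delineated) pixels and that OM is a Dice-style overlap. The function names `mask_to_pixels` and `segmentation_metrics` and the toy masks are hypothetical.

```python
def mask_to_pixels(mask):
    """Convert a 2D binary mask (list of rows) to a set of (row, col) pixels."""
    return {(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v}


def segmentation_metrics(segmented, reference):
    """Compare two pixel sets from one axial slice.

    Assumed definitions (not taken from Eq. 5.10):
      TP = |S & R| / |R|,  FN = |R - S| / |R|,  FP = |S - R| / |R|,
      OM = 2 |S & R| / (|S| + |R|)
    where S is the algorithm output and R is the manual reference.
    """
    if not reference:
        raise ValueError("reference segmentation is empty")
    common = len(segmented & reference)
    n_ref = len(reference)
    return {
        "TP": common / n_ref,
        "FN": (n_ref - common) / n_ref,
        "FP": (len(segmented) - common) / n_ref,
        "OM": 2 * common / (len(segmented) + n_ref),
    }


# Toy slice: the reference artery covers 2 pixels.
reference = mask_to_pixels([
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
])

# Toy output that leaks into a neighbouring structure and covers 9 pixels.
segmented = mask_to_pixels([
    [0, 1, 1, 1],
    [0, 1, 1, 1],
    [0, 1, 1, 1],
])

metrics = segmentation_metrics(segmented, reference)
print(metrics)
```

Under these assumed definitions, the toy output recovers every reference pixel (TP of 1.0) but adds seven extra pixels against a two-pixel reference, giving an FP rate of 3.5, i.e. 350 %. A small artery cross-section merged with an adjacent structure can therefore produce FP rates well above 100 %.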

The efficiency of the proposed slice-by-slice correction algorithm, demonstrated by a cross-sectional view image. a 3D volume data and b Cross-sectional view image randomly taken from the 3D volume data, in which the resulting segmentations with and without the correction step are shown in blue and black, respectively. The red contour represents the reference boundaries of the vessel obtained through manual delineation

5 Conclusions and Future Work

Accurate segmentation of vascular structures is an essential and fundamental step for various clinical tasks, such as stenosis grading and surgical planning. In this chapter, we presented a computer vision system for the segmentation of coronary arteries in 3D CTA images. In particular, a novel two-step algorithm was proposed to efficiently segment coronary arteries from CT images by making use of both global and local intensity statistics. The global energy was designed as a normalised CDF based on the histogram of the input image data, which adapts its contribution to the overall active contour energy by considering the spatially varying properties of the artery. In the second stage of the segmentation, the kissing vessels were identified and tracked throughout the axial slices based on a multi-region competition algorithm. Experimental results show that the proposed approach is able to correctly segment the major branches of the arterial tree, with an average voxel-wise distance of 0.73 voxels to the manually delineated ground truth. Furthermore, in the presence of kissing vessel artefacts, the overall performance of the segmentation can be significantly improved by the slice-by-slice correction scheme. The FP rate for the cross-sections containing kissing vessels was reduced from 294 to 22.8 %.
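As a reminder of what a normalised CDF derived from an image histogram looks like, the following Python sketch computes one from a flat list of voxel intensities. It is an illustration under stated assumptions, not the chapter's implementation: the function name `normalised_cdf` and the toy intensities are hypothetical, and the way the CDF value is turned into a weight in the active contour energy is not shown here.

```python
from collections import Counter


def normalised_cdf(intensities):
    """Map each distinct intensity to the fraction of voxels at or below it."""
    if not intensities:
        raise ValueError("no intensity values supplied")
    histogram = Counter(intensities)  # intensity -> voxel count
    total = len(intensities)
    cdf = {}
    running = 0
    for value in sorted(histogram):
        running += histogram[value]
        cdf[value] = running / total  # lies in (0, 1], non-decreasing
    return cdf


# Toy data: two dark background voxels and two brighter vessel-like voxels.
voxels = [100, 100, 200, 300]
cdf = normalised_cdf(voxels)
print(cdf)
```

Because the result is bounded by 1 and non-decreasing in intensity, brighter voxels receive values closer to 1, which is the property that makes such a function convenient as an intensity-dependent weighting term.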

In terms of future research, we intend to introduce spatial/intensity correlations between frames into the correction process, since the information extracted from previous frames can be used to assist in the segmentation of coronary arteries in the subsequent frame. Based on the 3D surface reconstructed from the segmentation, the geometric parameters along each of the main branches of the coronary arteries can be precisely computed; these parameters are very useful for diagnostic purposes, such as stenosis detection and grading.

Abbreviations

- CTA: Computed tomography angiography
- CAD: Coronary artery disease
- CT: Computed tomography
- EM: Expectation maximisation
- GMM: Gaussian mixture model
- LAD: Left anterior descending
- LCA: Left coronary artery
- LCX: Left circumflex
- LM: Left main coronary
- RCA: Right coronary artery
- WHO: World Health Organization

References

WHO. Coronary Heart Disease (2010)

Netter, F.H.: Atlas of Human Anatomy, 2nd edn. Rittenhouse Book Distributors Inc, King of Prussia (1997)

Abe, T., Hirohata, M., Tanaka, N., et al.: Clinical benefits of rotational 3D angiography in endovascular treatment of ruptured cerebral aneurysm. Am. J. Neuroradiol. 23, 686–688 (2002)

Tillich, M., Hill, B.B., Paik, D.S., et al.: Prediction of aortoiliac stent graft length: comparison of measurement methods. Radiology 220(2), 475–483 (2001)

Philips. Brilliance iCT scanner. http://www.healthcare.philips.com/us/products/ct/products/ct_brilliance_ict/index.wpd (2011)

Chan, T.F., Vese, L.A.: Active contours without edges. IEEE Trans. Image Process. 10(2), 266–277 (2001)

Kompatsiaris, I., Tzovaras, D., Koutkias, V., et al.: Deformable boundary detection of stents in angiographic images. IEEE Trans. Med. Imaging 19(6), 656–662 (2000)

Pieta, L., Tomczyk, A., Szczepaniak, P.S.: Medical image analysis using potential active contours. Inf. Technol. Biomed. Adv. Soft Comput. 47, 66–73 (2008)

Ray, N., Acton, S.T., Altes, T.: Merging parametric active contours within homogeneous image regions for MRI based lung segmentation. IEEE Trans. Med. Imaging 22(2), 189–199 (2003)

Sum, K.W., Cheung, Y.S.: Vessel extraction under nonuniform illumination: a level set approach. IEEE Trans. Biomed. Eng. 55(1), 359–361 (2008)

Yezzi, A., Kichenassamy, S., Kumar, A., et al.: A geometric snake model for segmentation of medical imagery. IEEE Trans. Med. Imaging 16(2), 199–209 (1997)

Yezzi, A., Tsai, A., Willsky, A.: A statistical approach to snakes for bimodal and trimodal imagery. In: The Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra (1999)

Osher, S., Fedkiw, R.: Level Set Methods and Dynamic Implicit Surfaces. Springer, New York (1999)

Caselles, V., Kimmel, R., Sapiro, G.: Geodesic active contours. Int. J. Comput. Vision 22(1), 61–79 (1997)

Kichenassamy, S., Kumar, A., Olver, P., et al.: Gradient flows and geometric active contour models. In: Proceedings of Fifth International Conference on Computer Vision, Boston (1995)

Yang, Y., Tannenbaum, A., Giddens, D., et al.: Automatic segmentation of coronary arteries using Bayesian driven implicit surfaces. In: Proceedings of 4th IEEE International Symposium on Biomedical Imaging (2007)

Nain, D., Yezzi, A., Turk, G.: Vessel segmentation using a shape driven flow. In: Proceedings of Medical Image Computing and Computer-Assisted Intervention—MICCAI 2004 (2004)

Chan, T.F., Vese, L.A.: A multiphase level set framework for image segmentation using the Mumford and Shah model. Int. J. Comput. Vision 50(3), 271–293 (2002)

Kim, J., Fisher, J., Yezzi, A., et al.: A nonparametric statistical method for image segmentation using information theory and curve evolution. IEEE Trans. Image Process. 14(10), 1486–1502 (2005)

Lankton, S., Tannenbaum, A.: Localizing region-based active contours. IEEE Trans. Image Process. 17(11), 2029–2039 (2008)

Li, C., Kao, C.Y., Gore, J.C., et al.: Minimization of region scalable fitting energy for image segmentation. IEEE Trans. Image Process. 17(10), 1940–1949 (2008)

Wang, L., He, L., Mishra, A., et al.: Active contours driven by local Gaussian distribution fitting energy. Signal Process. 89, 2435–2447 (2009)

Tomandl, B.F., Hastreiter, P., Eberhardt, K.E., et al.: The kissing vessel-artifact: a problem occurring in the visualization of intracranial aneurysms using volume rendering and virtual endoscopy. J. Radiol. 213, 311–314 (1999)

Wong, W.C.K., Chung, A.C.S.: Probabilistic vessel axis tracing and its application on vessel segmentation with stream surface and minimum cost paths. Med. Image Anal. 11, 567–587 (2007)

Brox, T., Weickert, J.: Level set segmentation with multiple regions. IEEE Trans. Image Process. 15(10), 3213–3218 (2006)

Zijdenbos, A., Dawant, B., Margolin, R., et al.: Morphometric analysis of white matter lesions in MR images: methods and validation. IEEE Trans. Med. Imaging 13(4), 716–724 (1994)

Frangi, A.F., Niessen, W., Hoogeveen, R.M., et al.: Model based quantitation of 3-D magnetic resonance angiographic images. IEEE Trans. Med. Imaging 18(10), 946–956 (1999)

Acknowledgments

The authors would like to acknowledge the support of City University, which enabled this research through the award of a University Research Studentship, and to thank Dr Gerry Carr-White and Rebecca Preston at St Thomas' and Guy's Hospitals for their invaluable advice and the provision of the CTA datasets.

Copyright information

© 2014 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Wang, Y., Liatsis, P. (2014). An Automated System for 3D Segmentation of CT Angiograms. In: Rodrigues Leta, F. (eds) Visual Computing. Augmented Vision and Reality, vol 4. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-55131-4_5

DOI: https://doi.org/10.1007/978-3-642-55131-4_5

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-55130-7

Online ISBN: 978-3-642-55131-4

eBook Packages: Engineering, Engineering (R0)