Abstract

Pilot error–human error–operating error–fatigue–these are all terms we hear about and read about over and over again in connection with hazardous events and even accidents. They all allude to the facts and may shed light on different aspects related to the cause but, at the same time, they insinuate that a person made a mistake while operating a machine.


3.1 Introduction

Errare humanum est. (Cicero)

Piloti humani sunt. (VC)

Pilot error–human error–operating error–fatigue–these are all terms we hear about and read about over and over again in connection with hazardous events and even accidents. They all allude to the facts and may shed light on different aspects related to the cause but, at the same time, they insinuate that a person made a mistake while operating a machine.

As we know, the statistics ascribe a high percentage of civil aviation accidents to these failings (Lufthansa 1999).

Every accident is followed by lengthy efforts to identify the “guilty party” and the cause of his “wrongdoing”. Once these are discovered, an attempt is made to eliminate the weak areas identified, optimize the regulations and procedures, modify the operating environment and, sometimes, introduce technical changes and innovations as well. Such an approach confines itself to the reactive level instead of deliberately initiating a preventive process. This is especially true when, rather than a technical fault, an elusive “human factor” appears to be the cause. A technical fault is quite often easy to identify, comprehend and rectify. Human errors and their causes, on the other hand, are often difficult to comprehend.

Why is it so difficult to get to the root of these failings, with which we are reputed to have so much “experience”?

Everyone is familiar with the mix-ups, misunderstandings and operating errors that take place on a daily basis. Why was an incorrect “squawk” code dialed in, even though it was read back correctly? Why has no one noticed that the ILS has been set to the wrong frequency and now the airport appears from the right side, and not from the left side as expected? These and similar situations occur time and again, and we know that even with the utmost attention they cannot be completely eliminated.

Everyone should know that fatigue can easily lead to a degree of inattentiveness that makes it difficult to set control knobs that are too small to begin with, or that obscures what is essential amid an overabundance of routine tasks.

Yet as obvious as these examples are, other occurrences seem all the more inexplicable. This, however, is only because an analytical investigation, analogous to those done for technical failures, is, for whatever reason, not performed.

We must be aware that not only do technical failures have clear-cut causes, but human error, as well, can be traced back to its root causes. It usually doesn’t occur abruptly or coincidentally, but will be the result of a long chain of causal events. Certain schemata, situations and preconditions exist for it, too, that lead to the same error-prone situations time and again.

It is therefore all the more important to not just accept the error as being the end product of a series of coincidences. It should not suffice to merely search for the error in the system after the fact, but we must concern ourselves with the system in the error before it takes place. Only in this manner can a working environment be created that facilitates the early recognition of error-prone situations as they arise, as well as the appropriate response. Only with a better understanding of the origin of errors can they be specifically targeted, their frequency reduced and their damaging effects minimized.

Which factors are actually at work, where can the underlying problems be found and where should the focus be placed in order to create such an error-tolerant working environment? Only a careful analysis of the human working environment will help answer these questions.

The SHELL model introduced by Prof. Elwyn Edwards and Capt. Frank Hawkins (1987) describes the individual components of this working environment and their mutual interactions.

We will subsequently make an effort to classify errors in order to more closely address their causes. This knowledge will then be applied to the model of the working pilot prior to addressing the error chain and the pathway from the error to the accident.

The chapter concludes with a look at the potential for error prevention and error management. Both paths must be pursued, because optimal results can be expected only when they are dealt with together.

Because to err is human, the consequence of error must be limited through sensible workplace design.

3.2 SHELL Model

The person occupies the centre position in the SHELL Model (ICAO 1989) (see Fig. 3.1).

Liveware I

The capabilities of this central component have been studied to a great extent. Size and shape, eating and sleeping habits, information assimilation and processing, expression potential, responsiveness and adaptability; these are all known. They are factors that form natural limits for the resilience of the overall system. The remaining components must be carefully adapted to these limits in order to avoid friction losses and error. These components are:

Liveware II

Colleagues on board the aircraft and on the ground, air traffic controllers, technicians and many others with whom he interacts; each within his own respective area. They supply him with information, issue instructions and provide support in the form of knowledge and cooperation. Naturally, they, too, are at the centre of their own SHELL systems and, just like him, they are human beings with their own weaknesses and limitations, albeit with their own unsurpassed capabilities and flexibilities, as well.

Hardware

The aircraft and its systems, displays and operating units, with which he works and which supply him with information and related faults to be dealt with. Of key importance is not only the technical potential of this equipment, however, but its ergonomics, as well, which plays a significant role in the usefulness, the practicability and, ultimately, the safety of the equipment.

Software

Guidelines and procedures, as well as all information such as flight plans, Notams, charts, etc., required for the work at hand. Comprehensible procedures, readable chart materials and Notams, as well as standardized and sensible work processes are good examples.

Environment

The person’s surroundings, the geographic and climatic conditions, as well as other external components that influence flight operations. Similarly, other factors that can be influenced only to a limited degree, such as duty time and rest period regulations, or economic and even political stipulations.

It is particularly within this Hardware–Liveware relationship that aircraft and avionics manufacturers are challenged more than ever before to orient their products not only towards the technical options, but also towards the capabilities and the potential of the user. This also applies to the Software–Liveware interface, where the sensible selection and unambiguous presentation of available information are of particular importance.

In addition to the key interfacing links between the human being at the centre and the four factors encompassing him, there is a fifth interface that also should be added:

Liveware–Liveware (L–L)

This refers to the personal circumstances, the mental and physical condition, that can greatly influence job-related performance.

3.3 Classification of Errors

The classification of errors into groups and categories can help in recognizing their backgrounds and causes. As in most cases, such a classification is seldom clear-cut because there are a multitude of possibilities with partially overlapping divisions, of which we will introduce only a few.

3.3.1 Error Forms and Error Types

While error forms describe the theoretical basis for errors, error types are oriented on the course of action taken in practice (Reason 1990).

3.3.1.1 Error Forms

Error forms are errors whose causes are related to the manner in which information is stored and processed in the brain. They are the result of the way our brain functions and are therefore the basis for every human error.

They are fundamentally based on cognitive mechanisms. The capacity for remembering and the processes for accessing and processing stored knowledge play a central role in this.

Reason refers primarily to two models for describing how our long-term memory functions: Similarity matching and frequency gambling.

When the individual pieces of information are put together into an overall picture, a stored situation (schema) is initially called upon to provide a foundation. The schema chosen is the one into which the greatest amount of available information fits, that is, the one most closely resembling the current situation (similarity matching).

When several compatible possibilities exist, the schema used most frequently to this point will be favoured (frequency gambling).

Example: A generator fails during descent. Without further information, a schema is activated containing a whole range of possible consequences and actions. Additional information (e.g. low oil pressure) invokes a completely different schema: engine failure.
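The interplay of the two mechanisms can be illustrated with a small toy sketch. It is not taken from Reason: the schema names, cue sets and usage counts are invented for illustration, and a simple count of shared cues stands in for "similarity".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Schema:
    name: str
    cues: frozenset   # features the stored situation contains
    times_used: int   # how often this schema has been used so far


def select_schema(observed, schemata):
    """Pick a schema by similarity matching, then frequency gambling."""
    observed = frozenset(observed)
    # Similarity matching: count how many observed cues each schema shares.
    scores = {s: len(observed & s.cues) for s in schemata}
    best = max(scores.values())
    candidates = [s for s in schemata if scores[s] == best]
    # Frequency gambling: among equally compatible schemata,
    # favour the one used most often to this point.
    return max(candidates, key=lambda s: s.times_used)


# Hypothetical schemata and usage counts, for illustration only.
SCHEMATA = [
    Schema("generator fault", frozenset({"generator failure"}), 12),
    Schema("engine failure",
           frozenset({"generator failure", "low oil pressure"}), 3),
]

first = select_schema({"generator failure"}, SCHEMATA)
second = select_schema({"generator failure", "low oil pressure"}, SCHEMATA)
print(first.name)   # generator fault
print(second.name)  # engine failure
```

With only the generator failure observed, both schemata fit equally well and the more frequently used one wins. The additional oil pressure cue makes the engine failure schema the closer match.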

Tversky and Kahneman refer to these two mechanisms as the Rule of Availability and the Rule of Representativeness (Tversky and Kahneman 1973, 1974).

They will be discussed in more detail in connection with the decision process in Sect. 3.4.2, Decision making. The term “schema” will be addressed in somewhat more detail in Sect. 3.4.1.

3.3.1.2 Error Types

Error types are the possible errors assigned to a phase of activity. An activity in this context is divided into three phases:

- Planning
- Processing
- Execution

The term “unsafe acts” used in Fig. 3.2 below groups the three fundamental error types, value-free, together with the violations. It appears once again with the error chain in Sect. 3.5.1.

Basic error types (Reason 1991)

While lapses and slips “happen”, mistakes are “made”. Mistakes made intentionally are called violations.

3.3.1.3 Mistakes and Violations

Mistakes are made during the planning phase, perhaps due to erroneous planning data or incorrect conclusions. One refers to:

Rule-based mistakes, when the wrong rule is applied to a known situation, or when the right rule is applied incorrectly,

Knowledge-based mistakes, when a known situation is assessed incorrectly due to insufficient or wrong information (incorrect checklist, unknown airport).

Violations comprise all types of rule infringements (procedural shortcuts due to routine, well-intentioned optimization, emergency authority). Violations are not basic error types, because their onset requires planning, processing and execution in each case.

3.3.1.4 Lapses and Slips

Errors during information processing: Lapses usually have something to do with the way humans assimilate and process information (forgetting, remembering incorrectly). The entire range of possible errors described under error forms can be related to this.

Errors during execution: Slips are behaviour patterns that are accessed at the wrong time (mix-ups, omissions, operating errors). These are the multifaceted errors attributed to acquired behaviours, the so-called motor programs. Examples include a new captain referring to himself as the co-pilot during the passenger announcement, or any slip of the tongue.
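The assignment of error types to activity phases described above can be summarized in a short sketch. The names `Phase` and `classify_unsafe_act` are hypothetical and serve only to restate the text's classification; they do not come from Reason.

```python
from enum import Enum


class Phase(Enum):
    PLANNING = "planning"
    PROCESSING = "processing"
    EXECUTION = "execution"


def classify_unsafe_act(phase, intentional=False):
    """Map an unsafe act to the error type described in the text."""
    if intentional:
        # Deliberate rule infringements are violations, whatever the phase,
        # because they involve planning, processing and execution.
        return "violation"
    return {
        Phase.PLANNING: "mistake",   # wrong plan or wrong conclusion
        Phase.PROCESSING: "lapse",   # forgetting, remembering incorrectly
        Phase.EXECUTION: "slip",     # mix-up, omission, operating error
    }[phase]


print(classify_unsafe_act(Phase.EXECUTION))                   # slip
print(classify_unsafe_act(Phase.PLANNING, intentional=True))  # violation
```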

3.3.2 Further Classifications

Active Failure and Latent Failure

This classification (Reason 1990) differentiates according to the temporal relationship between the activity and its effect.

An active failure usually has immediate negative consequences. It normally occurs during daily operations. “Gear up” instead of “Flaps up” during a touch-and-go is probably the best known example of this.

A latent failure is usually committed long before the actual accident. It is the result of a decision or an action, whose consequences remained undiscovered for some time. These failures are often caused by people who are far removed from the actual mission in terms of time and space. Examples of this may be found in management, the legislative process or operational procedures.

Commission, Omission, Substitution

This classification (Hawkins 1987) is broken down according to the fundamental type of failure. Commission is where an action is carried out that is not appropriate at the present point in time. Omission is where an appropriate action is forgotten, while substitution is where it is carried out on the wrong object.

Reversible, Irreversible

In this context, the classification is determined by the consequences. An error that can be undone and, for this reason, may not necessarily have serious repercussions, is referred to as being reversible (e.g. incorrect squawk code, frequency or flap setting). The consequences of an irreversible error, on the other hand, can no longer be influenced (fuel dumping).

Design Induced, Operator Induced

A failure at the Liveware–Hardware or Liveware–Software interface can result, for instance, from an operating system being inadequately adapted to the user (Hawkins 1987). This is primarily a problem of ergonomics and is therefore referred to as a “design induced” error. There are many examples of this, such as switches and levers being too closely positioned or too similar in appearance, or information being presented in a manner that can be easily misunderstood.

Similarly, the cause may be solely “operator induced” if dealing with simple operating errors not influenced from the outside.

Random, Systematic, Sporadic

With respect to their frequency and distribution, errors are distinguished as being

- Random: haphazard error distribution
- Systematic: orderly error distribution
- Sporadic: intermittent error distribution

With haphazard or arbitrary dispersion (random), the scope of possible errors is very broad, while with orderly dispersion it is very narrow. Sporadic errors are the most difficult to combat. Their emergence is not foreseeable and can have various causes (see Fig. 3.3).

Classification of error (Hawkins 1987)

3.4 Simplified Model of a Pilot at Work

The extremely simplified flowchart in Fig. 3.4 depicting work in the cockpit should make one thing particularly clear:

As with the SHELL Model, the potential for error exists at each and every link in the action chain, as well as at the junctions between them.

Some points will only be noted briefly here, as they are described in more detail in their respective chapters.

3.4.1 Information Assimilation and Information Processing

We can only perceive that which we can conceive (Green et al. 1991).

Schemata (Mental Models)

Just as the receptiveness of the eye is limited to the range of visible light, our mind can perceive only those things that correspond to its conception of the world. Conversely, all perceptions are pressed into an existing model of the world, even if they don’t actually fit.

Our mind’s model of the world is comprised of a multitude of individual models, or so-called schemata (Reason 1990; Bartlett 1932). These have been stored in long-term memory from the earliest childhood on and are activated there through key stimuli.

Schemata reduce the effort of collecting information by providing ready-made mental models, within which only particular aspects will need to be modified or adapted.

The word “room”, for example, calls up a schema already containing the basic characteristics of a room (four walls, door, windows, ceiling, etc.). This picture can now be filled in with the additional information provided. As already addressed in Sect. 3.3.1, Error forms, the selection of schemata takes place by means of frequency gambling or similarity matching. The less familiar the situation, the lower the probability of encountering a valid schema.

When a compatible schema doesn’t exist (unknown situation), a very elaborate and labour-intensive process begins that ultimately results in the formation of a new schema. Where time and decision pressures exist, however, optimal results will very rarely be realized.

The potential for error obviously exists where the schema contains information missing in the real world. We are very adept when it comes to introducing new data to a schema when it has been called up, yet it is very difficult to remove details from that schema or to distinguish between gathered and stored information in retrospect. Furthermore, once activated, a schema is very long-lived because confirmations are constantly being pursued while inconsistencies are ignored.

Information Processing by Humans

Although the human is an exquisite processor of information by almost any measure, all of these means of acquiring information are subject to error. Thus, it is not only possible but likely that pilots will suffer lapses in their ability to maintain an adequate theory of the situation (Bohlman 1979).

The chart below (see Fig. 3.5) depicts one of four possible models that attempt to describe the working function of the human brain as it assimilates and processes information and should serve merely to provide an overview in this context.

These types of charts exist in a staggering degree of diversity and complexity depending on the respective phenomenon they attempt to illustrate. This model comprises a “central decision maker” (CDM) who executes the task at hand virtually in series. This explains the limited capacity of human beings for accommodating information very nicely.

When the CDM is working at the limits of his capacity, important information must be stored temporarily. Each of the senses (seeing, hearing, feeling, etc.) has its own small, short-term memory, albeit with greatly limited capacity. Because of this, a sensory stimulus (e.g. noise) may still be perceived (e.g. heard) under certain conditions even though it is no longer physically present. When the stimulus is then received by the CDM, it can be placed into temporary storage once again for final processing. This takes place in the so-called working memory or short-term memory, which, as we know all too well, has a very low capacity, with the lifespan of the information retained therein being very short.

Newer models also use a parallel method of processing information.

Errors During Information Assimilation

Our direct senses are often compelling indicators of the state of the world, even when they are in error (Nagel 1988).

With respect to information assimilation in the cockpit, the following three senses play the greatest roles:

- Sight
- Hearing
- Sense of equilibrium (equilibrium organ and sense of force)

Related errors, misunderstandings and illusions can also be generated as a result of these. The causes for errors occurring during information assimilation can oftentimes be found at the Software–Hardware interface. False information or information provided at the wrong time cannot be assimilated either.

- Sight (see Table 3.1)

Table 3.1 Sight

- Hearing (see Table 3.2)

Table 3.2 Hearing

About 85 % of all errors originate within the verbal communication used for transmitting information (Nagel 1988).

Our ability to communicate takes on overriding significance because we work with people in all technical areas (crews, air traffic control, handling, etc.). The problems associated with the L–L interface are described in more detail in the chapter on Communication.

- Sense of equilibrium and force

Today, the volume of visual information that must be assimilated (FMS, EFIS, etc.) is growing. In order to avoid overloading this channel of acquisition, or rather to gain capacity for handling critical situations, a better distribution of the information across the other senses would be desirable. At the same time, the tactile sense is often disregarded or its significance underrated (moveable throttle, autopilot-control connection, interconnected controls, etc.).

Once the visual channel has reached its maximum receptive capacity in high workload situations, the processing of additional information will be possible only through other channels. One example would be the auto speedbrake.

The visual workload during a landing in critical weather conditions is extremely high. For this reason, it does make a difference whether one hears the function of an automatic system, perceives it through a correspondingly large movement in the peripheral visual field or must verify it through a focused glance at (and making inward note of) a display indication.

When the Autothrottle is activated, a thrust lever moving by itself provides the pilot with information about the thrust control function through the sense of force, thereby relieving his sense of sight.

Because every sense has its own small short-term memory, as already pointed out, such distribution will lead to an improvement in the overall capacity, as well.

3.4.2 Decision Making and Mental Models

The ability to make quick decisions in flight is of vital importance. Nevertheless, the mechanism used to make these decisions is no different than that used by other decision makers.

Experts tend to make the same errors as do the rest of us under certain circumstances (Nisbett 1988).

The information gathered by the senses is processed into a mental model by the brain, as already described.

3.4.2.1 Decision Making with Insufficient Data

The less information the senses provide, the more imprecise the corresponding model will naturally be. The mystery is that the brain will supplement the missing pieces of the mosaic. It uses the long-term memory as a “database” for this purpose by accessing the schemata already mentioned. Because of the inadequacies of this database, however, it is only natural that errors will occur. Furthermore, these databases differ from person to person, meaning that different people exposed to the same situation may develop different models.

Unfortunately, we tend to hang on to our own models, being constantly on the lookout for new sensory data to support them. At the same time, we tend to initially suppress any evidence produced that might refute a model, rationalizing that it “doesn’t fit into the picture”, referring to our mental model.

This is one of the keys to understanding crew behaviour that, after the fact, often seems incomprehensible.

Once having made a decision, people persevere in that course of action, even when evidence is substantial that the decision was the improper one (Nagel 1988).

3.4.2.2 Decision Making by Rule of Thumb

Our brain makes things relatively simple at first glance. Research has shown that it does not process highly complex algorithmic solutions, but forms simple logical relationships. Decisions are based on so-called “heuristics” or, in plain English, rules of thumb (Nagel 1988).

Examples for how these rules of thumb work can be found in the functioning principles of our brain, as already mentioned in Sect. 3.3.1, Error forms.

- Rule of representativeness

Situation B follows situation A because that’s how it worked the last time. Obviously, this method may have a certain statistical likelihood of success, but little more.

- Rule of availability

Long-term memory stores events and information (schemata) just like they would be stored in a file cabinet. The older the event, the further to the rear will it be filed with its activation being that much more complex.

When events and information are needed in order to construct a new mental model, the brain will favour the simplest path to more recent memories, even when experiences lying further in the past may be better suited to the current situation. Critical information must be “re-filed” to the front time and again in order to warrant optimal decision making. But even the best decisions can be wrong. Murphy’s Law, scientifically anchored in the chaos theory, also applies to decisions once they are made.
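The file cabinet picture of the rule of availability can be sketched as a simple move-to-front list. This is only an illustration of the metaphor: the class `FileCabinet`, its methods and the example events are hypothetical, and "effort" is reduced to the number of items filed in front of the one sought.

```python
class FileCabinet:
    """Toy long-term memory: most recently filed items sit at the front."""

    def __init__(self):
        self._drawer = []  # index 0 is the front of the cabinet

    def file(self, item):
        """Store a new item, or re-file an existing one, at the front."""
        if item in self._drawer:
            self._drawer.remove(item)
        self._drawer.insert(0, item)

    def retrieval_effort(self, item):
        """Number of items that must be passed to reach `item`."""
        return self._drawer.index(item)

    def easiest(self):
        """The item the simplest path leads to: the one at the front."""
        return self._drawer[0]


memory = FileCabinet()
for event in ["engine fire drill", "crosswind landing", "routine approach"]:
    memory.file(event)

print(memory.easiest())                              # routine approach
print(memory.retrieval_effort("engine fire drill"))  # 2

memory.file("engine fire drill")  # re-filing, e.g. through recurrent training
print(memory.easiest())                              # engine fire drill
```

Re-filing the older event brings it back to the front, which is the effect the text ascribes to repeatedly refreshing critical information.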

One way out of the dead-end is to consciously turn away from certain patterns of behaviour. The key concept here is situational awareness, which will be addressed in more detail further on in the text. Within the context of a mental model, this means that support for the current model of the surrounding environment should not be pursued through new data but, just the opposite, data should be sought out that will refute it. Decisions should be subject to review time and again because:

Most all of us are more confident in our decisions than we typically have any right to be (Bohlman 1979).

3.5 From a Simple Error to the Accident

3.5.1 The Error Chain

“One error comes seldom alone” and “one error, alone, doesn’t cause an accident” are both commonly used expressions. In fact, it is very rare that an accident or incident can be traced back to one single causal error (Reason 1990, 1991).

A vast number of pre-conditions are required in a chain of events in order to generate the momentum needed to break through even the last line of defence.

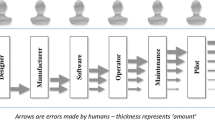

James Reason describes the funnel-like course this process takes (see Fig. 3.6) from its origin to the final triggering event, after which we read about it in the newspapers.

Elements of an organizational accident (Reason 1991)

The first snowfall lays the foundation for a subsequent avalanche. Similarly, the error process can already begin at, and assume its overall breadth at the organisational level. Legal provisions such as flight duty time regulations and manufacturing specifications, aircraft manufacturer organisational structures, as well as their cultural differences, manufacturing philosophies and internal organisations, themselves, are all examples.

The task at hand and the work environment needed to accomplish it make up the next link in the chain. The environment must be very carefully adapted to both the task and to those who are assigned to carry it out in order to facilitate low work-related error rates.

The individual actor, with all his strengths and weaknesses, occupies the last position in the chain. As the last resort along the pathway to the accident, he is still in a position to compensate for built-in errors and system weaknesses or, through his own active failure, to trigger the avalanche referred to above.

Failures and weak points in the individual system groups are cumulative and weaken the tolerance for error throughout the overall system. These have been designated as latent failures (hidden weak points) in our classification.
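How weak points in individual system groups add up can be illustrated with a deliberately simplified calculation. It assumes, purely for illustration, that each level (organisation, task and environment, individual) fails to stop an error with an independent probability. Neither the independence assumption nor the numbers come from Reason; they are hypothetical.

```python
from math import prod


def breakthrough_probability(layer_failure_probs):
    """Chance that an error passes every defence, assuming independent layers."""
    return prod(layer_failure_probs)


# Hypothetical per-layer failure probabilities:
# organisation, task/environment, individual.
baseline = [0.1, 0.1, 0.1]
# A latent failure weakens one layer (here the organisational one).
weakened = [0.5, 0.1, 0.1]

p_baseline = breakthrough_probability(baseline)
p_weakened = breakthrough_probability(weakened)
print(p_weakened > p_baseline)  # True
```

In this toy setting, weakening a single upstream layer raises the chance of a breakthrough fivefold, even though the individual at the end of the chain performs exactly as before.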

3.5.2 Error Producing Conditions

Just how much influence the environment, latent failures and specific difficulties can have on an individual’s frequency of error is shown in Table 3.3 (Williams 1988).

The significance of the interfaces within the SHELL Model can also be clearly determined.

Most of these circumstances cannot be influenced by the pilot. Therefore, it is that much more important that they be regarded at the corresponding locations within the overall system.

3.5.3 Violation Producing Conditions

Violations are often the cause of accidents, although the corresponding relationships have not yet been well researched. The following list (Reason 1991) reveals that the overall system can also be jointly responsible for an individual’s behaviour in this context, entirely in keeping with latent failures.

Listed below are the external conditions and personal attitudes considered crucial in bringing about violations in many of the accidents investigated. A violation here means a conscious or accepted failure to comply with applicable regulations or to remain within applicable limits.

- Manifest lack of organisational safety culture
- Conflict between management and staff
- Poor morale
- Poor supervision and checking
- Group norms condoning violations
- Misperception of hazards
- Perceived lack of management care and concern
- Little élan or pride in work
- A macho culture that encourages risk-taking
- Beliefs that bad outcomes won’t happen
- Low self-esteem
- Learned helplessness (“Who gives a damn anyway?”)
- Perceived license to bend rules
- Ambiguous or apparently meaningless rules
- Age and sex: young men violate

One example might be the various ways pilots from differing societies and different nationalities wear their uniforms more or less “correctly”. Even though not necessarily accident-relevant, the disposition to commit such social “violations” can be traced back to several of the points above.

3.5.4 Hazardous Thoughts

A person contributes to the onset of error through his personal attitude about himself and his surroundings. His judgement and his conduct are influenced by five underlying attitudes in this model (Eberstein 1990), which are present and pronounced to varying degrees in everyone.

Too great an emphasis on individual components in this context transforms the person himself into a latent failure. A simplified model defining the seven underlying attitudes is found in Table 3.4 (with a more elaborate discussion found in the chapter on Decision making under the section title “Hazardous attitudes”):

Every person can determine his own personal behaviour through simple tests, critical self-monitoring and feedback, and then influence it through conscious control efforts. Each of these attitudes is assigned an opposing thought (antidote), through which, when deliberately applied, the underlying attitudes that have become too pronounced can be defused (see Table 3.5).

3.6 Error Prevention and Error Management

If you always do, what you always did, you will always get, what you always got (Wiener 1993).

Accordingly, all the research into identifying the causes won’t amount to anything as long as the results aren’t introduced into the daily work environment. In order to learn from the mistakes of the past, not only the crews, but all the players in the commercial aviation industry, as well, must check their work and actions against new issues and adapt them to the new demands. The data collected from years of accident research and the resulting theories developed about the underlying processes must be converted into the safe, error-free execution of flight.

Hawkins (1987) divides the process into two steps:

- Error prevention (minimizing the occurrence of errors)
- Error treatment (reducing the consequences of errors)

The first priority is to take precautions in order to make the eventuality of an error as unlikely as possible. Then, because errors can never be discounted altogether, the second step must be to take counteractive measures to keep their consequences as slight as possible.

3.6.1 Error Prevention

SHELL Interface

A series of initiatives aimed at preventing errors has resulted from examining the SHELL Model and its critical points of friction. These have been discussed in part already and are therefore mentioned only briefly here. For the most part, they deal with requirements that must be fulfilled not by the crews but by the manufacturers, the responsible airline personnel, the authorities and the legislators.

Even if the user himself has no direct influence over aspects such as design-related deficiencies, he should repeatedly draw attention to specific areas of weakness in order to effect long-term changes. Only in this manner can system-intrinsic weaknesses (latent failures) be eliminated over the course of time. The points listed below represent only a small selection of all the possible and relevant aspects related to optimizing the work environment, and virtually everyone could add to this list out of their own experience. On closer examination, however, it is all the more astonishing to discover that even the most “self-evident” rules are at times disregarded.

Liveware–Hardware, System Design

Adaptation of the machine to the man

-

clearly readable displays presenting the proper scope of information in a well-arranged and easily interpretable manner

-

standardized system of switches and operating controls that eliminates confusion and mix-ups

More and more, manufacturers are coming to the realization that the cockpit is not only a collection of system displays, but that its layout and configuration contribute significantly to the safe conduct of a flight. Admittedly, the spectrum of criteria that needs to be considered is very large. It starts with the size and shape of the switches, their positions, the structuring of the individual control units and displays, the presentation of information on the monitoring screens, just to name a few, and continues through to the size and lighting of the overall cockpit.

Even though scientific research into the design of displays was conducted as far back as 1968 (Roscoe), neither the manufacturers nor the national authorities have been able to agree upon a unified set of international standards to date. Short-term economic considerations take priority time and again. At the same time, design engineers orient themselves more closely on the technical options and less on what is expedient for the person.

Liveware–Software, Software Design

Well-arranged presentation of all information

-

clearly arranged organization of chart materials, Notams, aircraft documents and other sources of information

-

sensible, comprehensible procedures

-

well organized checklists

The software component is by far not as difficult to influence or to modify over the short-term as the hardware components might be; that is if one believes the promises made by numerous aircraft manufacturers. Actual practice shows, however, that modifications to software are at least as difficult to realize as the installation of new hardware. Intervention into highly complex, certified software is accompanied by a large financial commitment and always conceals the risk that new sources of error may be programmed in. Correspondingly, minor points of friction at the user interface won’t be rectified when they can be remedied on the user-side much less expensively by issuing new operating instructions; meaning procedures.

An example of this might be the database for the Flight Management System, whose weaknesses pilots have long learned to live with. The same holds true in this case as well: software and databases are created by humans, and humans simply do make mistakes. On a daily basis, we encounter information presented in a way that is difficult to understand, unclear, incomplete, ambiguous or not suited to the situation.

The design of the checklist should not merely be oriented on whether all systems are correctly activated for the respective situation, but also on whether the workload is sensibly distributed, whether the peak workloads have been balanced out and whether it is conceived in such a manner that the crew members are encouraged to work together.

Many research groups working in this field (communication design) have compiled and published related findings in recent years. NASA, for example, has released a collection of reports about accidents that can primarily be traced back to poor documentation. Accordingly, anyone who publishes information on paper or through other media in the aviation industry must, in fact, have sufficient resources available to ensure its optimal presentation with respect to human factors research.

It would be considerably more convenient to be able to read a NOTAM date, for instance, not as a numerical series such as 0602080630, but rather in an easily discernible form such as 8 February 2006 beginning at 06:30. This not only simplifies the task, but also helps prevent the errors associated with converting these numerical groupings.
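The conversion described above can be sketched in a few lines of Python. This is only an illustration: the function name `readable_notam_time` is hypothetical, and the digit layout (two digits each for year, month, day, hour and minute) is an assumption inferred from the single example in the text.

```python
from datetime import datetime

# Month names are spelled out so the result does not depend on the system locale.
MONTHS = (
    "January", "February", "March", "April", "May", "June",
    "July", "August", "September", "October", "November", "December",
)


def readable_notam_time(group: str) -> str:
    """Convert a ten-digit group (assumed layout YYMMDDhhmm) into readable text."""
    if len(group) != 10 or not (group.isascii() and group.isdigit()):
        raise ValueError(f"expected ten digits, got {group!r}")
    # strptime also rejects impossible values such as month 13 or hour 25.
    moment = datetime.strptime(group, "%y%m%d%H%M")
    month_name = MONTHS[moment.month - 1]
    return f"{moment.day} {month_name} {moment.year} beginning at {moment:%H:%M}"


print(readable_notam_time("0602080630"))  # 8 February 2006 beginning at 06:30
```

Letting the software perform this conversion moves the decoding step, and the chance of misreading a digit group, away from the reader.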

Liveware–Environment, Environmental Shaping

We require an error-tolerant and stress-free working environment

-

quiet and comfortable cockpits

-

stress-free working relationships among crew members

-

congenial working atmosphere

Unfortunately, we are not able to change many of the external circumstances. CAVOK conditions 365 days a year and a worldwide topography comparable to the north German plains would certainly be desirable. Other aspects, however, must be thought through anew and reassessed accordingly, as to whether they may be contributing to an unsafe, even error-conducive environment, incompatible to humans. One of the main human strengths is indeed his flexibility, but what about his physical and mental needs, without the fulfilment of which he won’t remain motivated or effective for very long?

A working environment must be created that does not demand his flexibility from the outset, but is one in which he can work in a relaxed and concentrated manner, allowing him to call upon all his reserves in the event of an emergency. This includes reasonable statutory and operating regulations, optimized physical conditions with respect to noise, temperature and humidity, as well as a suitable “working atmosphere”.

Liveware–Liveware, Inter-Human Relations

Perhaps the best countermeasure is constant vigilance concerning the potential for errors in the entire process of communication, whether it is between pilot and controller or pilot and first officer (Bohlman 1979).

Influence over the interpersonal working atmosphere and optimization of the working relationship through:

-

Crew resource management

-

Crew coordination

-

Crew performance instead of pilot performance

There is hardly a large company these days that can afford not to offer its employees advanced training opportunities, even when it is not necessarily related to their professional qualifications. This includes seminars related to self-assessment, working in groups, crew resource management and leadership. Besides mere technical knowledge and manual skills, it is also becoming more and more important to understand psychological correlations, as well as to adopt social and communicative skills. This subject will be discussed in more detail in the chapter on Communication.

Standard Operating Procedures

In today’s two-man cockpits, the loss of a crew member means the loss of 50 % capacity with a loss of 100 % redundancy.

A crew member is considered to be lost when his mental model no longer agrees with reality. It has been shown in this model that the memory serves as a database.

If we use predominantly the same database, it also follows that any deviations in the mental models will be minor. As long as both pilots have the same SOP stored in their memories, the mental model, such as an ILS approach, will be generally in agreement without requiring a great deal of additional communicative effort.

SOPs can therefore be seen as a form of anticipated communication, or a type of elementary understanding about the working function presumed to be known from the outset.

When a deviation from an SOP is being planned, the colleague must be notified of this intent so he’ll be able to adapt his behaviour (mental model) accordingly or assert his objections beforehand.

In the optimal case, the colleague should call for this information when he becomes aware that a deviation from his mental model is likely. It is the captain’s responsibility to ensure a working atmosphere where the demand for this type of information is facilitated and supported at all times.

Selection, Training, Motivation

Selection

Darwin’s theory is based on a form of natural selection. When choosing transport pilots, however, this type of selection should be avoided. Aptitude tests initially provide a financial benefit from the perspective of the one who will ultimately pay for the training, whether it is the airline or the applicant.

Oftentimes, it is not the occupational aptitude in the proper sense that is ascertained, however, but rather the statistical probability of successfully completing the pilot training program in order to reduce the financial risk and to ensure a high degree of productivity.

At the same time, just as the cockpit working environment has changed, so, too, is the demand profile imposed on today’s pilots different from that of 20 years ago. In modern cockpits, it is no longer the manual skills that are of sole significance, but more often the management skills, the ability to work in teams and to coordinate processes, along with the flexibility needed to keep pace with technical innovations. The trend is moving away from “tradesman” and towards “manager with flying skills”.

The selection of persons according to specific criteria will always result in a homogeneous professional group. Correspondingly, in the case of commercial airline pilots, this can lead to a lower susceptibility to error as long as team behaviour, communication skills and motivation are encouraged within this professional group.

In view of human factors research, it is particularly important to seek out people for this profession from the outset who demonstrate a high degree of conformance to the profile demands in order to prevent latent errors during the early stages of the career. The questions, as to which characteristics are testable and which criteria should be chosen in order to realize optimal results, have been the focus of many years of research and must be regularly adapted to the occupational profile, just as later commercial airline pilots must adapt to the changing demands of their profession.

It is in the interest of all involved parties, including the passengers, that the aviation authorities and legislators do their part to enhance aviation safety in this respect, assuming there is an interest to do so.

Training

As with visual errors associated with the approach and landing, disorientation conditions are both compelling and avoidable if pilots are properly educated to the hazards and trained to either avoid the precursor conditions or to properly use flight instrumentation to provide guidance information (Bohlman 1979).

It is obvious that good flying skills and technical knowledge make up the cornerstone for safe flight operations. In the traditional sense, training means gaining proficiency over the aircraft’s technical systems and its “standard abnormals”.

It is evident from the increased activity in the field of crew coordination that other basic, more socially and psychologically oriented competencies, over and above the fundamental skills, are taking on greater significance and therefore should be integrated into the training program from the outset. In addition to this new content, other aspects of academic instruction and practical training should also be reconsidered and modified where needed.

In one study, it was verified that students were better able to successfully complete their training if they were given the opportunity to commit errors, recognize them on their own, and thereupon develop their own solutions (Frese and Altmann 1988). This is in contrast to the comparative group, in which errors were identified and doctrine was taught according to the classical model.

It is evidently necessary to learn how to deal with one’s own errors, as well, and not only with system-related faults or failures. This new form of error management plays an especially important role in connection with the LOFT program, in which students must accomplish a flight in real-time without help or suggestions from outside and, above all, without interruption.

Frese and Altmann (1988) describe this new approach in the following manner:

Change the attitude of trainees from “I mustn’t make errors” to “let me see what I can learn from this error”.

This must also be put into practice by the trainers. In addition to the mere technical material being taught, didactical, pedagogical and psychological skills must also be conveyed.

Motivation

Many accidents blamed on human failure could neither be ascribed to poor design or unfavourable working conditions, nor to a lack of crew knowledge or skills. Oftentimes, the causes can’t be accounted for at all.

In these cases, one encounters what psychologists refer to as motivation: Why does someone act the way they do? Motivation, in this sense of the word, denotes the difference between that which someone can do, and that which he does. There are various theories as to which structures form the basis for this behaviour. It is generally agreed that there are several levels of desires and needs, whose craving for satisfaction differs in intensity. Maslow distinguishes between five levels (see Fig. 3.7).

The further down the unsatisfied need resides, the more urgent its satisfaction becomes; only after it has been satisfied will the superordinate needs play a role. Different behaviours can be influenced by various factors simultaneously and in opposing manners. A person’s degree of satisfaction, and thereby his motivation, is greatly influenced by his corresponding field of work and working environment.

Relating this to the aviation industry means that an environment must be created that facilitates the highest degree of professional fulfilment and ensures a satisfactory quality of professional life. Three primary goals must be pursued in order to realize a safe and error-reducing work attitude:

-

Prevention of complacency

-

Attainment of a professional attitude

-

Maintenance of discipline

Failures in these three areas are the primary causes of those accidents that can be traced back to insufficient motivation.

Management is responsible for creating a work environment that facilitates motivated working. If managers show up too late for work or leave before everybody else, it can be assumed that their employees will adopt the same attitude towards their work.

It is not only the personal role model that leaves an impact, however, but also the recognition of the employee, his capabilities and his commitment.

Other factors also play major roles, such as the acceptance of the profession by society, the rules governing leisure time and rest periods, the structuring of the duty schedule, the general corporate working climate and, not least, the income.

However, it would be improper to merely push off the responsibility and need for action onto “the others”. Each person must examine himself, as to whether exorbitant expectations and a basic negative attitude have, themselves, become stumbling blocks along the way, ultimately leading to demotivation.

3.6.2 Error Management

System design

One option for limiting the consequences of errors is to reverse them wherever possible. There is an array of examples in which this concept finds application. For instance, when entering data into a display terminal by means of a so-called “scratch pad”, all inputs can be checked initially before they are entered into the computer. Devices such as the “Gear Interlock System”, or those that deactivate certain systems in the flight or ground mode, help prevent improper operations while facilitating the reversal of their consequences.

Wherever possible, systems whose improper operation could have grave consequences should be designed in such a manner that operator inputs can be reversed.

Humans are increasingly being monitored by technical systems. These range from simple interlocks and warnings for exceeding operational limits (altitude alert, speed clacker, etc.) to the sophisticated Ground Proximity Warning System.

Pilot inputs in “fly-by-wire” technology are electronically checked for plausibility and their magnitude limited to pre-programmed values. In certain defined phases of flight, the person merely has access to “permissible” functions in order to eliminate the possibility of operational error. The potential for error therein shifts from the cockpit to the software developer’s office; from the active failure to the latent failure.
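The principle of checking an input for plausibility and limiting its magnitude can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the names `InputLimit` and `limit_input` are hypothetical, and the normalized range of -1.0 to 1.0 is not taken from any real flight control system.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class InputLimit:
    """Permitted range for one control input (hypothetical, normalized values)."""
    lowest: float
    highest: float


def limit_input(commanded: float, limit: InputLimit) -> float:
    """Reject implausible values, then clamp the rest into the permitted range."""
    # Plausibility check: a value that is not a finite number is refused outright.
    if not math.isfinite(commanded):
        raise ValueError("implausible input: not a finite number")
    # Magnitude limiting: anything outside the range is cut back to its boundary.
    return max(limit.lowest, min(commanded, limit.highest))


# Illustrative limit only; real systems use values defined by the manufacturer.
DEFLECTION_LIMIT = InputLimit(lowest=-1.0, highest=1.0)

print(limit_input(1.7, DEFLECTION_LIMIT))   # 1.0
print(limit_input(-0.4, DEFLECTION_LIMIT))  # -0.4
```

The sketch also shows where the latent failure can hide: if the limits in `DEFLECTION_LIMIT` were chosen wrongly at the desk, every pilot input would be constrained by that mistake.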

Redundancy

A large number of functioning individual components are needed to ensure the error-free operation of a complex system such as an aircraft, a power station or a chemical factory. From this perspective, man, himself, also becomes part of that system.

In order to prevent the failure of any one component in the system from putting the entire operation at risk, it must be promptly identified and its function taken over by other means.

Many different initiatives have been undertaken aimed at achieving this goal. “Fail Operational” and “Fail Passive” are examples of concepts for providing redundancy.

Technical redundancy is oftentimes realized through multiple systems assuming the same task. Depending on the significance of the task within the system, the changeover of systems will take place more or less automatically.
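An automatic changeover between systems that perform the same task might be sketched as follows. This is a simplified illustration under stated assumptions: the function `first_healthy` is hypothetical, and each redundant channel is modelled as a callable that returns a reading, or `None` once it has detected its own failure.

```python
from typing import Callable, Optional, Sequence

# A channel returns its reading, or None when it has identified itself as failed.
Channel = Callable[[], Optional[float]]


def first_healthy(channels: Sequence[Channel]) -> float:
    """Return the reading of the first channel that has not failed."""
    for read in channels:
        value = read()
        if value is not None:
            return value
    # Redundancy is exhausted: no remaining system can take over the task.
    raise RuntimeError("all redundant channels have failed")


def failed_primary() -> Optional[float]:
    return None  # the primary system reports a failure


def standby() -> Optional[float]:
    return 250.0  # the standby system supplies the value instead


print(first_healthy([failed_primary, standby]))  # 250.0
```

The changeover works only because the failure is identified promptly; a channel that delivers a wrong value without reporting a failure would pass through this sketch unnoticed.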

Relating this to the human being, and particularly to the person in the cockpit, we are living today with a simplified form of redundancy. The simplest example of such a situation would be the complete, discernible loss of a pilot. Yet functional redundancy in the event of “partial losses”, overloads or errors will be dependent on many pre-conditions. A balanced working environment, an optimal hierarchical gradient, the ability to communicate effectively and Standard Operating Procedures (SOPs) must first be provided for; only then is mutual monitoring possible. The key phrases here are Crew Coordination, Crew Resource Management and Communication.

3.7 Error Prevention in Practice

Accept your own susceptibility to error

Cognitively, our susceptibility to error is the reverse side of our most important attribute as pilots: our flexibility.

Errors are unavoidable in principle

Errors occur more frequently under time constraints, with a new task and when one overestimates his own capabilities (“young men make accidents”). Therefore, an attempt should be made to confront the emergence of error preventatively by:

-

Avoiding time pressures

Don’t accept rushed approaches or begin an approach before completing its final preparations. If the aircraft is too high or too fast, a 360° manoeuvre should be flown, delay vectors requested or a missed approach executed.

-

Theoretical knowledge

All the “need-to-know” items should be readily available. The ignorance of procedures or limitations is unprofessional. Unknown situations, such as the first approach to a new airport, should be prepared for according to appropriate regulations.

-

Dedication to the procedures

Deviations from SOPs are permitted only when they are absolutely necessary; and then only after prior agreement. A lax attitude towards the SOPs is not a sign of “expertise”, but one of (potentially gross) negligence. All SOPs are the result of safety-relevant incidents; even accidents in most cases.

Poor judgement chain

If an error occurs despite the preventative measures, it will commonly be experienced as a personal failure. A failure inevitably leads to an increase in the level of stress. An increased level of stress leads to an increased susceptibility to error (see above), which quickly leads to the next error and raises the level of stress even further, which leads to yet another error. This vicious cycle is known as the “poor judgement chain” (see Fig. 3.8).

The increase in stress in this error chain can further lead to an unintentional disregard for SOPs and approach minima. This has been observed in numerous incidents and accidents. For this reason, every unnecessary or unagreed deviation from the SOPs is a potential sign of entering into a dangerous error chain.

-

For this reason, each first occurrence of an error must be addressed and rectified immediately.

-

Never sweep an error “under the rug”. There is no reason for this as long as the error prevention process is in order. Errors that then still occur are unavoidable.

-

SOP deviations that have not been arranged in advance should be called out immediately by the PM.

3.8 Summary

Everybody makes mistakes! This is the basic assertion behind our observations. In the course of evolution, the human has adapted himself optimally to his environment. In the process, he has not become error-free, but he has been able to survive superbly despite this shortcoming. Perhaps it is even a crucial part of his capacity for innovation because, as is well known, he is able to learn from his mistakes.

In the era of the Industrial Revolution, people began to take a systematic interest in their flaws. While the consequence of error prior to that time would have been limited to the erring person, himself, or, at the most, to a small circle of people, the use of machinery and technology meant that mistakes made by an individual would have ever greater consequences. Steam engines, railways and the beginning of the automotive society are a few examples.

This development has intensified considerably right into our own time. Just what consequences the failures of a few people can have on the entire human race was made clear through the accidents in Chernobyl, Bhopal (see Note 2) and Tenerife (see Note 3). These accidents gave research into the field of human error a further boost.

We have discussed the classification of errors, whereby perhaps the most important breakdown into active and latent failures originated from James Reason.

Not only in aviation but in every industry, the spotlight focuses on that one person who formed the last link in the error chain. Of course, it is advantageous to have a perpetrator. Hardly a newspaper reader will be interested in reading about the complicated combination of circumstances. But, in order to still learn from mistakes today, it is important to not only spend time delving into causal research at the surface, but also to illuminate the background causes; an area where there has long been a significant deficit.

We have studied the potential for error associated with the cockpit working environment in a simplified model. The potential for error exists at every step along the path from the assimilation of information through its processing to the decision made as a result, right up to the action taken. It must be emphasized again and again that it is the human design, the way he functions, that allows him to make mistakes. At the same time, it is also this human design that makes it possible for him to be so versatile, intuitive and quick to react to unknown situations. One can’t be had without the other.

We have shown that an error develops over many preliminary stages. It can be made while sitting at the desk, while developing an aircraft or in conjunction with the establishment of statutory rulings and regulations. It can come at the hand of company management, a department supervisor and, ultimately, from the mechanics, pilots or another person. In the context above, however, it is seldom just one individual error, but invariably an ominous combination of many such errors that leads to disaster.

It is therefore not sufficient to focus on just one position in the error chain in order to prevent that disaster. The overall system must be improved upon, and not just the last link in the chain.

The reduction of error and its related potential for disaster must be a continuous process. It is not possible to completely prevent latent failures, and while not flying at all still provides the highest degree of safety, it is not an option. Therefore, we must optimize the system.

Thus, both steps must be pursued:

-

Errors can’t be prevented altogether, but their occurrence can be reduced.

-

Although errors can’t be prevented, their effects must be minimized.

Yet, with all the discussion about the human susceptibility to error, one thing should not be ignored:

Today, as in the past, it is the human being that, thanks to his unique abilities, is able to guarantee and increase the level of aviation safety in a manner that cannot be approximated by any machine. Any attempt to incapacitate, replace or curtail him will prove to be of only limited suitability. Development must not be directed against, but rather towards the person. It may be that the cause of 75 % of all aviation accidents can be traced in one way or another back to the pilots, but it is statistically impossible to determine just how many accidents were prevented by these very same pilots.

Notes

- 1.

The factor indicates just how much the probability of error for a specific activity increases when the referenced condition exists.

- 2.

Poisonous gas discharges from a chemical factory in Bhopal, India in 1984 resulted in over 20,000 deaths.

- 3.

The worst accident in the history of aviation resulted in 583 deaths in 1977.

References

Bartlett FC (1932) Remembering: a study in experimental and social psychology. Cambridge University Press, Cambridge

Bohlman L (1979) Aircraft accidents and the theory of the situation, resource management on the flight deck. In: Proceedings of a NASA/industry workshop, NASA conference proceedings 2120

Eberstein (1990) Deutsche Lufthansa AG senior first officer seminar, Seeheim

Frese M, Altmann A (1988) The treatment of errors in learning and training. Department of Psychology, University of Munich

Green RG, Muir H, James M, Gradwell D, Green RL (1991) Human factors for pilots. Avebury Technical

Hawkins FH (1987) Human factors in flight. Ashgate, Aldershot

ICAO (1989) Human factors digest no. 1, ICAO circular 216-AN/131

Lufthansa (1999) Cockpit safety survey. Department of FRA CF, Frankfurt a.M.

Maslow AH (1943) A theory of human motivation. Psychol Rev 50:370–396

Nagel C (1988) Human error in aviation operations. In: Human factors in aviation. Academic, New York

Nisbett R, Ross L (1980) Human inference: strategies and shortcomings of social judgment. Prentice Hall, Englewood Cliffs

Reason J (1990) Human error. Cambridge University Press, Cambridge

Reason J (1991) Identifying the latent causes of aircraft accidents before and after the event. In: Lecture at the 22nd annual seminar “the international society of air safety investigators”, Canberra

Roscoe SN (1968) Airborne displays for flight and navigation. Human factors

Tversky A, Kahneman D (1973) Availability: a heuristic for judging frequency and probability. Cogn Psychol 5:207–232

Tversky A, Kahneman D (1974) Judgment under uncertainty: heuristics and biases. Science 185:1124–1131

Wiener E (1993) Lecture at the flight safety foundation IASS, Kuala Lumpur

Williams J (1988) A data-based method for assessing and reducing human error to improve operational performance. In: Hagen W (ed) IEEE fourth conference on human factors and power plants, Institute of Electrical and Electronic Engineers, New York

Copyright information

© 2013 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Wiedemann, R. (2013). Human Error. In: Ebermann, HJ., Scheiderer, J. (eds) Human Factors on the Flight Deck. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-31733-0_3

DOI: https://doi.org/10.1007/978-3-642-31733-0_3

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-31732-3

Online ISBN: 978-3-642-31733-0

eBook Packages: Engineering, Engineering (R0)