Abstract

In the analysis of ore particle size based on images, ore segmentation is a key link. After accurate segmentation, the geometric parameters such as the contour of these blocks, the external rectangle, the center of mass and the invariant moment can be further obtained, and the ideal ore particle size can be obtained effectively. A method of ore image segmentation based on deep learning is proposed in this paper. The method focuses on solving the problem of inaccuracy caused by mutual adhesion and shadow on the ore image. Firstly, complex environment image data set is obtained by using high resolution webcam; Next, we use the annotation data set to train HED (Holistically - Nested Edge Detection) model. This model can extract the image edge feature with strong robustness. Then, thinning edge is extracted using table lookup algorithm. The final step is labeling the connected region and getting segmented results. Our method is compared with the Watershed method based on gradient correction, and experimental results show the effectiveness and superiority of the proposed method.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Ore particle size measurement is mainly aiming at the separation of ore images on the conveyor belt before the mine blasting and carrying into the crusher. In early days, size measurement was based on manual measurement, which not only required a large amount of manpower and material resources, but also had low accuracy and efficiency. Then researchers proposed image based method for automatic ore size measurement, which was hoped to be more accurate. Therefore, ore particle size based on images is a common and automatic means, among which the ore segmentation is a key link. The accuracy of ore segmentation can affect the subsequent production process and benefit.

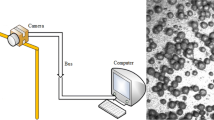

The environment of ore particle size measurement based on images is shown in Fig. 1.

In the ore image data collected in outdoor environment, we can find that due to the different time period and the different intensity of light, the image will be changed in shadow and brightness. In order to meet the environmental protection department requirements, workers need to spray water on the conveyor belt to reduce the pollution caused by high dust. This causes the effect of ore moisture (dry/wet/mixture), which leads that the edge of ore in image is blurred. In addition, due to the different mine break point and different amount of explosive, the ore has irregular shape, too large or too small. When it meets the colored mine, the uneven color will appear. All these phenomenons bring great difficulty for the separation of ore particle based on images. Thus, we need to solve the above problems by using a method with high segmentation accuracy and fast speed. It is instructive to improve the efficiency of mine production and save a lot of energy.

Ore image segmentation refers to dividing the image into a number of non-overlapping areas according to the characteristics of grayscale, color, texture and shape.

At present, the ore particle segmentation methods can be categorized into the following categories:

-

(1)

The segmentation method based on threshold value. It determines the optimal gray threshold value according to a certain criterion function. Ref. [1, 2] applied double window one-dimensional information and the gray value and variance information of adjacent pixel to determine the threshold. Although some noise effects could be overcome, it was still difficult to accurately divide the ore images with high similarity and fuzzy boundary.

-

(2)

The segmentation method based on region. It can be mainly divided into Watershed transform, region merging and so on. In Ref. [3], by using the bilateral filter to smooth image, the marker Watershed transform was implemented based on the distance transform and morphological reconstruction. This method could effectively reduce the over-segmentation rate, but most of the threshold values in the algorithm were selected by manual debugging, and the robustness was poor. In addition, the ore images on the conveyor belt have the problems such as large similarity between target and background, and the shadow region, etc. This method was not ideal for handling these problems.

-

(3)

The segmentation method based on particular theories. Ref. [4] presented a method for the segmentation of adhesion ores with concave points. After the Harris angle points detection, it matched the concave point of the circular template, and found the best matching by the rectangular limit. This method was novel, but the limitation of the Harris algorithm limited the segmentation accuracy. Ref. [5] represented an automatic segmentation method based on multi-scale strategy to generate the pyramid and initial marker, and ore segmentation was generated by using the marker-based regional merging algorithm. Ref. [6] carried out a comparative study of the existing super-pixel algorithms on ore images. It proposed to transform the Watershed to generate super-pixels, but the unrobustness of the Watershed and the time consuming of super-pixel will have an impact on the algorithm.

These traditional ore image segmentation methods are mostly based on the low order visual information of the image pixel itself. Such methods do not have an algorithm training phase, so the computational complexity is often not high. It is difficult to achieve a satisfactory effect on the more difficult segmentation tasks (if no artificial auxiliary information is provided). The semantic segmentation based on deep learning is a new idea of image segmentation in the last two years. By establishing the multi-level algorithm structure model through a large number of samples learning, it can obtain the deep semantic features with strong robustness [7,8,9]. This paper is focused on solving the difficulties mentioned above for the ore partical segmentations based on image. The appropriate convolution network HED (holier-nested Edge Detection) [10] is selected to detect ore edges. By using the multi-scale and multi-level feature learning algorithm of HED, the precise segmentation of different stones in the ore image is achieved. Experimental results on the collected images from the work site show that the proposed method is effective for the segmentation of complex ore images.

The structure of this article is as follows: Sect. 2 introduces the system structure and detailed principle of ore image segmentation based on deep learning. Section 3 details the experimental results and compares our proposed method with the Watershed method based on gradient correction. Section 4 concludes the paper.

2 Ore Image Segmentation Based on Deep Learning

2.1 System Description

In this paper, we propose a two-stage method for ore image segmentation. During the training stage, the HED network is applied to train the model of images collected in actual production process. During the ore partial segmentation stage, the edge of the test image is extracted using the trained model. Then, after thinning edge, connected region is further obtained to realize the ore segmentation. The overall system flow chart is shown in Fig. 2.

The system flow describes some brief steps of the method proposed in this paper. Firstly, ore images in complex environment are collected and the edge data set is generated. Next, we use these annotation data to train the HED network, and choose the model with the best parameters. Then, we select some images that are different from the images in train set and use the trained network for edge detection. Next, for the convenience of subsequent operation the edge results are thinned. The last step is to label connected region and get segmented results.

This process eliminates the need of complicated image pre-processing, and gets rid of the disturbance of parameter adjustment, and overcomes the influence of severe noise. It not only has good detection effect in complex and changing environment, but also improves image processing speed and has high practical application value.

2.2 HED (Holistically-Nested Edge Detection) Network [10]

HED algorithm mainly solves two problems through the deep learning model, which is based on the training and prediction of the whole image, as well as the multi-scale and multi-level feature learning. Through the learning of rich classification features, HED can make a more detailed edge detection. The algorithm is improved based on the VGG network [11] and FCN network. It connects the side output layer after the last convolution layer in each stage. It also removes the last pooling of the VGG network and all the subsequent full connection layers to reduce memory resource consumption. ‘Holistically’ represents that the result of edge detection is based on the process of image to image, end-to-end. However, ‘nested’ emphasizes the process of continuously inheriting and learning from the generated output process and getting the accurate edge prediction graph. It is similar to an independent multi-network, multi-scale prediction system, while finally using the weighting strategy to fuse the multi-scale output layer into a single deep network. The network structure is shown in Fig. 3.

During the training phase, in the ground truth, the ratio of the number of non-edge pixels to the edge number is greater than 9:1. In this case, a pixel - based class-balancing weight was introduced to solve the loss balance of positive and negative samples. The formula for the loss function is:

Where \( \beta = {{\left| {\mathop Y\nolimits_{ - } } \right|} \mathord{\left/ {\vphantom {{\left| {\mathop Y\nolimits_{ - } } \right|} {\left| Y \right|}}} \right. \kern-0pt} {\left| Y \right|}} \), \( 1 - \beta = {{\left| {\mathop Y\nolimits_{ + } } \right|} \mathord{\left/ {\vphantom {{\left| {\mathop Y\nolimits_{ + } } \right|} {\left| Y \right|}}} \right. \kern-0pt} {\left| Y \right|}} \), \( \left| {\mathop Y\nolimits_{ - } } \right| \) and \( \left| {\mathop Y\nolimits_{ + } } \right| \) Indicates the edge and non-edge of the label set respectively. Using sigmoid function \( \sigma \left( \cdot \right) \) to compute \( \mathop P\nolimits_{r} \left( {\mathop y\nolimits_{j} = 1\left| {X;W,\mathop w\nolimits^{\left( m \right)} } \right.} \right) \), m represents layers.

The weighted fusion layer is added to the network, and the weight value of the fusion is continuously learned during the training process, which predictions can be used directly and effectively from the side output. At this point, the fusion layer loss function is:

Among them \( \mathop {\mathop Y\limits^{ \wedge } }\nolimits_{fuse} \equiv \sigma \left( {\sum\nolimits_{m = 1}^{M} {\mathop h\nolimits_{m} \mathop {\mathop A\limits^{ \wedge } }\nolimits_{side}^{\left( m \right)} } } \right) \), The weight is \( h = \left( {\mathop h\nolimits_{1} , \cdots ,\mathop h\nolimits_{M} } \right) \), The difference between the prediction of fusion layer and the real annotation is indicated by Dist(•,•).

By the standard stochastic gradient descent method, the minimized objective function is obtained.

In the test phase, the side output layer of different scales and the weighted-fusion layer generate edge detection result.

CNN(•) is the edge detection generated by HED network.

2.3 Thinning Process

After the HED edge detection, the fuse layer result is shown in Fig. 4.

It can be seen that the edge of the line is uneven, and most of it is very thick. When the edge is measured, its thickness is about 9 pixels, which can not be processed later, so it needs to be refined. Thinning generally refers to the operation of the skeletonization of binary images. After layers of peeling, some points are removed from the original image, but the original shape remains to be maintained until the skeleton of the image is obtained.

There are many kinds of thinning algorithms [12,13,14], and in this paper, the table lookup method is applied. The criterion to decide whether a point can be removed is according to the situation of eight adjacent points (eight connected). For black pixels, this method assigns different values to the eight points around it, and maps all points to the index table of 0–255 in this way. According to the situation of 8 points around, corresponding item in the index table is considered to decide whether or not to keep it. In each line of horizontal scanning, it judges the left and right “neighbors” of each point. If all are black, the point is not processed; If a black point is deleted, this method skips the right neighbor to handle the next point. Then, a vertical scan is performed. The above steps are repeated several times until the graph is no longer changed.

The thinning edge detection results are shown in Fig. 5.

2.4 Connected Region Labeling

In order to further obtain the geometric parameters such as contour, external rectangle and center of mass in the ore image, we need to label the connected region on the thinning edge image.

There are many kinds of algorithms for labeling connected regions. Some algorithms only need one image traversal, and some require two or more. The method used in this paper is to establish two levels of contour. The upper layer is the four edges of the image, and the inner layer is the information of the ore connected in the image. Each disconnected region is assigned a different mark.

The core is the contour search algorithm, which marks the whole image by locating the inner and outer contour of the connected region. The specific steps of the algorithm are as follows:

-

Step1: make image traversal from top to bottom, from left to right;

-

Step 2: if A is an outer contour point and has not been marked, give A new tag. Start from point A, follow certain rules to track all the outer contour points of A, then go back to point A, and mark all points on the path as the label of A.

-

Step 3: if an outer contour point \( A^{\prime } \) has been marked, mark points right beside it, until encountering a black pixel.

-

Step 4: if a marked point B is the point of the inner contours, start from point B, track the inner outline, and the points on the path are set to B’s label. Because B has been marked with the same as A, the inner and outer outline will mark the same label.

-

Step 5: if the points on the inner outline has been walked through, mark the point on the right side with the contour mark until the black pixel is encountered.

3 Experiments

The experiment of HED edge detection is conducted under the deep learning framework Caffe. The network model is trained by GPU with the model Geforce GTX TITAN X. Edge thinning and connected region labeling are programmed by combining Opencv, Skimage, Image and other image processing libraries in Python2.7 environment. The final segmentation are compared with the results of the Watershed transform based on gradient correction method [15,16,17], and the validity of this method is proved.

3.1 Image Data Set Preparation

In order to solve the problem of inaccuracy caused by mutual adhesion and shadow on the ore image, we collect multi-type images in a changing environment from the production site. There are mainly two types of challenging images as shown below.

-

(1)

Ore images taken different time periods: The ore images will show different intensity and shading situations at different time periods. As shown in Fig. 6, the left image is taken in the morning, and the right one is taken in the afternoon.

-

(2)

Ore images taken with different degree of dryness and humidity as shown in Fig. 7. The ores are sprayed with water to reduce the dust.

The image size collected from the live webcam is 1920*1080. In actual processing, it occupies too much memory which leads to long time of the processing. Therefore, the image is interpolated and resized to 960*540. The collected different types of images are uniformly distributed in the annotation data set, and edge lines are manually depicted to form the label sets. The number of the labeled images in the annotation data set is 285.

3.2 HED Model Training and the Segmentation Process

HED network model is trained with the above mentioned annotation data set, and its training parameters are shown in Table 1. The ‘\( {\text{base}}\_{\text{lr}} \)’ represents the basic learning rate; ‘\( {\text{gamma}} \)’ means the change index of learning rate; ‘iter_size’ represents the number of images per iteration; ‘\( {\text{Ir}}\_{\text{policy}} \)’ means the learning rate attenuation strategy; ‘momentum’ represents the network momentum, generally using experience values; ‘\( {\text{weight}}\_{\text{decay}} \)’ represents weight attenuation to prevent overfitting. Stochastic Gradient Descent (SGD) is used in the experiment. Maximum number of iterations max_iter = 30001. Because of large size of images, we use lower ‘\( {\text{base}}\_{\text{lr}} \)’ in order to prevent gradient explosion and Loss = Nan.

Each iteration takes approximately 9 s. When the training iteration has reached 50,000, the convergence is basically completed. Then we apply the trained model on test images, we typically selects colorful stone images collected at night and the images with shadows acquired during the day, as shown in Fig. 8. Figure 9 shows the output of the HED network fusion layer. It can be seen that the edge detection is superior, robust and granular. However, the skeleton of the edge is thick and noisy, so it needs to be thinned for subsequent processing. Figure 10 shows the effect of edge thinning by using the table lookup. Figure 11 shows the results that using the find Contours function in the Opencv library to draw the thinning edge to the original image. In this way, we can see the segmentation effect more intuitively and form the connected region. Figure 12 is the final segmentation result of the method proposed in this paper.

3.3 Comparison with the Watershed Segmentation Based on Gradient Correction

In order to demonstrate the effectiveness of the segmentation method proposed in this paper, a Watershed algorithm based on gradient correction is applied on the same ore images in Fig. 8 and the results are compared.

The basic idea of Watershed ore segmentation based on gradient correction is as follows. The Watershed algorithm has a good response to weak edges, so the noise in the ore image and the slight change of gray level on the surface of the ore will cause the over-segmentation problem. To address this problem, a series of image pre-processing is performed before the Watershed, such as adaptive histogram equalization, bilateral filter, morphological open and closed operations. Then, the foreground and background markers of the image are marked. At last, the gradient map is used to modify the Watershed algorithm. The segmentation effect is shown in Fig. 13.

From Figs. 12 and 13, we can see that our proposed method performs better on extracting the ores. In complex environment, pre-processing steps of the Watershed method based on gradient correction are complicated. The size of structural elements in open and closed reconstruction requires empirical debugging. The segmentation result of ambiguous edge is not ideal. While our method does not involve the debugging of parameters, and has better robustness, speed and segmentation effect.

4 Conclusion

Aiming at the characteristics of ambiguous irregular, mutual adhesion and severe illumination of ore image edges, this paper collects multi-type ore images, makes edge annotated data sets, and uses the HED model based on neural network for edge detection. After thinning processing, the connected region is formed. This process achieves the purpose of segmentation of outdoor environment ore images. The comparison with the Watershed segmentation based on gradient correction shows that the proposed algorithm can accurately detect the ores and improve the segmentation effect.

References

Zhang, G., Qiu, B., Liu, G.: Ore image segmentation using the one-dimensional entropy adaptive threshold based on bi-windows. In: International Conference on Information, Services and Management Engineering (2011)

Zhu, S., Xia, X., Zhang, Q., et al.: An image segmentation algorithm in image processing based on threshold segmentation. In: International IEEE Conference on Signal-Image Technologies and Internet-Based System, pp. 673–678. IEEE (2007)

Zhang, W., Jiang, D.L.: The marker-based watershed segmentation algorithm of ore image. In: International Conference on Communication Software and Networks, pp. 472–474. IEEE (2011)

Zhigang, N., Wenbin, S., Xiong, C.: Adhesion ore image separation method based on concave points matching. In: Balas, V.E., Jain, Lakhmi C., Zhao, X. (eds.) Information Technology and Intelligent Transportation Systems. AISC, vol. 455, pp. 153–164. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-38771-0_15

Yang, G.Q., Wang, H.G., Wen-Li, X.U., et al.: Ore particle image region segmentation based on multilevel strategy. Chin. J. Anal. Lab. 35(24), 202–204 (2014)

Malladi, S.R.S.P., Ram, S., Rodriguez, J.J.: Superpixels using morphology for rock image segmentation. In: Image Analysis and Interpretation, pp. 145–148. IEEE (2014)

Long, J., Shelhamer, E., Darrell, T.: Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39(4), 640–651 (2017)

Badrinarayanan, V., Kendall, A., Cipolla, R.: SegNet: a deep convolutional encoder-decoder architecture for scene segmentation. IEEE Trans. Pattern Anal. Mach. Intell. PP(99), 1 (2015)

Chen, L.C., Papandreou, G., Kokkinos, I., et al.: DeepLab: semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. PP(99), 1 (2017)

Xie, S., Tu, Z.: Holistically-nested edge detection. Int. J. Comput. Vis. 125(1–3), 1–16 (2015)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. Comput. Sci. (2014)

Zhang, T.Y., Suen, C.Y.: Suen, C.Y.: A fast parallel algorithm for thinning digital patterns. Commun. ACM 27(3), 236–239 (1984)

Wang, P.S.P., Zhang, Y.Y.: A fast and flexible thinning algorithm. IEEE Trans. Comput. 38(5), 741–745 (1989)

Lam, L., Suen, C.Y.: An evaluation of parallel thinning algorithms for character recognition. IEEE Trans. Pattern Anal. Mach. Intell. 17(9), 914–919 (2002)

Liu, Y., Zhao, Q.: An improved watershed algorithm based on multi-scale gradient and distance transformation. In: International Congress on Image and Signal Processing, pp. 3750–3754. IEEE (2010)

Zhang, J.M., Ju, Z., Wang, J.: Watershed segmentation algorithm based on gradient modification and region merging. J. Comput. Appl. 31(2), 369–371 (2011)

Wang, X.-p., Li, J., Liu, Y.: Watershed segmentation based on gradient relief modification using variant structuring element. Optoelectron. Lett. 10(2), 152–156 (2014)

Author information

Authors and Affiliations

Corresponding authors

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Yuan, L., Duan, Y. (2018). A Method of Ore Image Segmentation Based on Deep Learning. In: Huang, DS., Gromiha, M., Han, K., Hussain, A. (eds) Intelligent Computing Methodologies. ICIC 2018. Lecture Notes in Computer Science(), vol 10956. Springer, Cham. https://doi.org/10.1007/978-3-319-95957-3_53

Download citation

DOI: https://doi.org/10.1007/978-3-319-95957-3_53

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-95956-6

Online ISBN: 978-3-319-95957-3

eBook Packages: Computer ScienceComputer Science (R0)