Abstract

In today’s world, where the number of stores and the level of rivalry between them grow every day, decisions about a store’s location are considered highly important. Over the years, researchers and marketers have used a variety of approaches to solve the optimal store location problem. As in many other research areas, earlier site selection methods relied on statistical data, whereas recent methods exploit the rich content that modern data analysis techniques can extract from big data. In this paper, we begin by assessing the historical precedent of the most widely accepted and applied traditional computational methods for determining a desirable place for a store. We then discuss some of the technological advancements that have led to the advent of more cutting-edge, data-driven methods. Finally, we review some of the most recent approaches to the store site selection problem that are based on location-based social network data.

Keywords

- Computational movement analysis

- Location based social network data

- Geo-marketing

- Retail store location

- Site selection

1 Introduction

Determining retail store popularity and studying the variables that influence it has long been a prominent research topic in many scientific domains. From a marketing perspective, if the goal is store popularity among target customers, it can be controlled and even enhanced through careful planning of the marketing mix elements. Kotler defined the marketing mix for production businesses as “a set of controllable marketing variables – product, price, place and promotion – that the firm can use to get a desired response from their target customers” (Rafiq and Ahmed 1992). Booms and Bitner (1981, pp. 47–51) later modified the marketing mix concept to better fit the marketing of services by adding three new elements to the mix: process, physical evidence and participants. Careful planning of the marketing mix elements involves important decisions about a number of other factors. For example, planning the “place” element includes decisions about store location, distribution channels, accessibility, distribution network coverage, sales domains, inventory placement and transportation facilities. Store placement, especially for service providers and retail stores, has always been considered one of the most important business decisions a firm can make, since it is a critical factor in a business’s overall chance of success. “No matter how good its offering, merchandising, or customer service, every retail company still has to contend with three critical elements of success: location, location, and location” (Taneja 1999, pp. 136–137). There are many different approaches to supporting decisions on retail store placement. Some of them, such as relying on experience and using checklists, analogues and ratios, have been used by marketing managers for many years (Hernández and Bennison 2005).
Such techniques are favored by some managers since they require minimal budget, technical expertise and data, yet their downfall lies in the high level of subjectivity in decision making and the fact that they are largely incompatible with GIS (Hernandez et al. 1998). Other techniques, including approaches based on the central place theory, gravity models, the principle of minimum differentiation and data-driven approaches such as feature selection, are more computational and therefore require a higher level of expertise and resources, but at the same time offer greater predictive power and are less affected by subjectivity.

In this paper, the main goal is to review the evolution of computational approaches to solving the retail store placement problem. Consequently, the principal contributions of this work can be listed as follows.

- An investigation of the origins and main principles of the most accepted theories that attempt to explain the relationship between location and store popularity, and a review of some of the research these theories have inspired over the past century.

- An exploration of the technological and scientific developments that led to the emergence of more data-driven and analytical approaches, and an explanation of some of the challenges and advantages of using location-based social network (LBSN) data for site selection.

- A review of the LBSN-based feature selection frameworks proposed by a number of researchers over the past decade, an introduction to some of the most practical features they use to tackle the store placement problem, and an assessment of their outcomes.

- A discussion of the importance of accurate store placement and of possible directions for future research on this subject.

2 Computational Techniques

Over the years, several theories have been proposed to explain how location affects store popularity and success. While some traditional approaches, such as those based on the central place theory, the spatial interaction theory and the principle of minimum differentiation, have been widely accepted and applied, they mostly relied on statistical data, required a high level of expertise in model building, and rested on unrealistic assumptions (Chen and Tsai 2016; Hernandez et al. 1998). On the other hand, recent technological advancements have led to new techniques based on the analysis of big data. Consequently, in this section we take a historical-methodological approach: we begin with a historical review of the most acknowledged traditional methods for site selection, proceed by discussing the developments that gave rise to new sources of data and hence the evolution of traditional methods into modern techniques, and end with a methodological review of the new feature selection based approaches.

2.1 Traditional Methods

The question of placing chain stores across a city’s network in a way that optimizes overall sales and customer attraction has interested researchers, managers and planning authorities for many years. In 1933, Walter Christaller proposed the central place theory while studying the central places of southern Germany. According to Brown (1993), although the significance of the theory was not appreciated until years later, it became the basis for the retail planning policies of several countries. Around the same time, the spatial interaction theory (Reilly, 1929–1931) and the principle of minimum differentiation (Hotelling 1929) were introduced; together with the central place theory, they form the three fundamental concepts of traditional retail location research (Litz 2014). Despite their shortcomings, including being normative and requiring a list of unrealistic assumptions, they still attract a great deal of academic attention (Brown 1993; Chen and Tsai 2016). Therefore, this section begins with a brief review of each of these theories, continues by comparing their main goals, limitations and assumptions, and ends with a review of some of the most significant papers inspired by them.

The Central Place Theory

Scientists have tried to describe and characterize the regionalization of urban space in a hierarchical manner for almost a century. “A hierarchy emerges with respect to the types of relationships that exist given the cluster size, whether the cluster is a village, a town or a city” (Arcaute et al. 2015; Berry et al. 2014). One of the most famous examples of this type of approach is the central place hierarchy (Boventer 1969) introduced by Christaller (Arcaute et al. 2015). Christaller’s central place theory dates back to 1933, when the German researcher first suggested that there is an inverse relationship between the demand for a product and the distance from its source of supply, such that demand falls to zero beyond a certain distance, called the “range of a good”. The theory is based on the importance of transportation costs for customers and focuses on describing the number, spacing, size and functional composition of retail centers. It assumes that all customers are rational decision makers, that all sellers enjoy equivalent costs, free entry and fair pricing in a perfectly competitive market, and that shopping trips are single-purpose (Brown 1993).
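The inverse relationship between demand and distance, and the resulting “range of a good”, can be illustrated with a minimal sketch. It assumes, purely for illustration, a linear demand curve q = a - b(p + t·d), where p is the price at the store, t the customer’s transport cost per kilometre and d the distance travelled. This functional form and the function names are hypothetical and are not taken from Christaller or Brown (1993). Under this assumption demand reaches zero at the range d* = (a/b - p)/t.

```python
def delivered_price(store_price: float, transport_cost_per_km: float, distance_km: float) -> float:
    """Effective price paid by a customer: store price plus travel cost (assumed linear)."""
    return store_price + transport_cost_per_km * distance_km


def demand(distance_km: float, a: float, b: float, store_price: float,
           transport_cost_per_km: float) -> float:
    """Hypothetical linear demand q = a - b * delivered price, truncated at zero."""
    q = a - b * delivered_price(store_price, transport_cost_per_km, distance_km)
    return max(0.0, q)


def range_of_good(a: float, b: float, store_price: float, transport_cost_per_km: float) -> float:
    """Distance at which demand first reaches zero under the linear model above."""
    return (a / b - store_price) / transport_cost_per_km


if __name__ == "__main__":
    params = dict(a=100.0, b=2.0, store_price=10.0, transport_cost_per_km=1.0)
    print(range_of_good(**params))  # 40.0
    for d in (0, 20, 40, 60):
        print(d, demand(d, **params))
```

With these illustrative parameters, customers living farther than 40 km from the store buy nothing, so the store’s market area is bounded by the range of the good.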

The Principle of Minimum Differentiation

This theory was presented by Hotelling (1929). It focuses on the importance of a store’s proximity to its main rivals and argues that distance from rivals is more important than distance from customers. Numerous researchers have since tried to improve the principle of minimum differentiation by varying its basic underlying assumptions (Ali and Greenbaum 1977; Hartwick and Hartwick 1971; Lerner and Singer 1937; Nelson 1958; Smithies 1941). In 1958, building on Hotelling’s theory, Nelson suggested that demand rises when suppliers of a given product or service are located near one another (Litz 2014). Later, the theory served as the basis for several other approaches, such as space syntax analysis (Hillier and Hanson 1984), natural movement (Hillier et al. 1993) and multiple centrality assessment (Porta et al. 2009).
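Hotelling’s argument is commonly illustrated with a “linear city”. The sketch below is a hypothetical discretized version, not taken from this paper. It assumes 100 customers spread evenly along a street, each buying from the nearer of two stores (ties are split, prices are fixed), and the two stores take turns moving to their best-response positions. Under these assumptions the stores end up side by side at the center of the street, which is the clustering the principle of minimum differentiation predicts.

```python
from typing import Sequence, Tuple


def market_share(x: int, rival: int, customers: Sequence[float]) -> float:
    """Customers served by a store at x when the rival is at `rival`; ties are split evenly."""
    share = 0.0
    for c in customers:
        d_own, d_rival = abs(c - x), abs(c - rival)
        if d_own < d_rival:
            share += 1.0
        elif d_own == d_rival:
            share += 0.5
    return share


def best_response(rival: int, positions: Sequence[int], customers: Sequence[float]) -> int:
    """Position maximizing market share given the rival's location (first one on ties)."""
    return max(positions, key=lambda x: market_share(x, rival, customers))


def hotelling(n_customers: int = 100, start: Tuple[int, int] = (0, 100),
              max_rounds: int = 1000) -> Tuple[int, int]:
    customers = [i + 0.5 for i in range(n_customers)]  # evenly spread along the street
    positions = list(range(n_customers + 1))           # candidate store sites 0..n
    a, b = start
    for _ in range(max_rounds):
        new_a = best_response(b, positions, customers)
        new_b = best_response(new_a, positions, customers)
        if (new_a, new_b) == (a, b):                    # neither store wants to move
            break
        a, b = new_a, new_b
    return a, b


if __name__ == "__main__":
    print(hotelling())  # (50, 50): both stores cluster at the centre
```

Starting from opposite ends of the street, each store repeatedly leapfrogs just inside its rival to capture the larger side, and the process stops only when both stand at the midpoint.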

The Spatial Interaction Theory

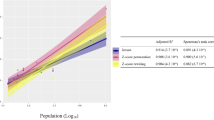

Gravity models have long been considered the most distinguished and accepted solution to the retail store location problem. These models emphasize a customer’s perspective on the availability and accessibility of a given store. The first gravity model was inspired by the work of the American researcher Reilly in the late 1920s and early 1930s (Kubis and Hartmann 2007). Reilly suggested that customers may make tradeoffs between the specific features and overall attractiveness of a store’s main product and the store’s location (Litz 2014). Although empirical tests demonstrated that the gravity model performs acceptably under practical circumstances, a number of researchers argued that the variables used in the model, population and road distance, fail to perform well in some situations (Brown 1993; Huff 1962). Consequently, many researchers have since tried to improve the gravity model by introducing more applicable and better-performing variables. Wilson’s entropy-maximizing model and Huff’s probabilistic potential model are two of the most accepted modified versions of Reilly’s theory.
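Reilly’s idea is usually stated as his “law of retail gravitation”: two centers A and B attract trade from an intermediate town in direct proportion to their populations and in inverse proportion to the square of their distances from the town, B_a/B_b = (P_a/P_b)(D_b/D_a)². This paper does not give the formula; the sketch below uses the standard textbook form. It also includes the “breaking point”, the distance from A at which both centers attract equal trade, which is obtained by setting the ratio to 1 and is often credited to Converse. The function names and example figures are hypothetical.

```python
import math


def reilly_trade_ratio(pop_a: float, pop_b: float, dist_a: float, dist_b: float) -> float:
    """Reilly's law: B_a / B_b = (P_a / P_b) * (D_b / D_a) ** 2.

    B_a, B_b: trade an intermediate town sends to centres A and B;
    P_a, P_b: populations of A and B; D_a, D_b: distances from the town to A and B.
    """
    return (pop_a / pop_b) * (dist_b / dist_a) ** 2


def breaking_point_from_a(distance_ab: float, pop_a: float, pop_b: float) -> float:
    """Distance from A at which B_a / B_b = 1, i.e. D_ab / (1 + sqrt(P_b / P_a))."""
    return distance_ab / (1.0 + math.sqrt(pop_b / pop_a))


if __name__ == "__main__":
    # Hypothetical centres: A has four times B's population; the town is 10 km from A, 20 km from B.
    print(reilly_trade_ratio(400_000, 100_000, 10, 20))  # 16.0
    print(breaking_point_from_a(30, 400_000, 100_000))   # 20.0
```

Only population and distance enter the formula, which is exactly the limitation that Huff (1962) and others criticized.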

In 1967, Wilson introduced a model of spatial distribution (Wilson 1967) describing the flow of money from population centroids to retail centers (Wilson and Oulton 1983). In Wilson’s model, the survival of a retail center depends on its ability to compete for the limited available resources, i.e. customers (Piovani et al. 2017). Huff (1963, 1964) suggested that customers may prefer shopping areas based on their overall utility (Brown 1993). Gravity models can be divided into two general groups, qualitative and quantitative, and quantitative models can in turn be divided into deterministic and probabilistic models. Deterministic models usually estimate accounting variables such as turnover or return on investment, which are presented to marketing managers to decide upon, whereas probabilistic models estimate the probability that a consumer living at location i purchases products at location j. Huff’s model is the classic example of a probabilistic gravity model (Litz 2014) (Table 1).

A comparison of the Three Main Theories in Traditional Computational Site Selection

A Schematic Review of the Historical Trend of Some of the Research Advancements Made, Based on the Three Main Theories in Traditional Computational Site Selection
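The two modified gravity models described earlier in this section can be sketched in a few lines. Huff’s model is shown in its commonly cited form P_ij = (S_j/T_ij^λ) / Σ_k (S_k/T_ik^λ), where S_j is the size of shopping center j and T_ij the travel time from origin i. For Wilson’s model, the sketch assumes the standard production-constrained retail form, in which the spending O_i at each origin is shared among centers in proportion to W_j^α exp(-β c_ij). The parameter values (λ, α, β) and all names are illustrative assumptions, not estimates from the cited studies.

```python
import math
from typing import List, Sequence


def huff_probabilities(sizes: Sequence[float], travel_times: Sequence[float],
                       lam: float = 2.0) -> List[float]:
    """Huff's model for one consumer origin i:
    P_ij = (S_j / T_ij**lam) / sum_k (S_k / T_ik**lam)."""
    utilities = [s / t ** lam for s, t in zip(sizes, travel_times)]
    total = sum(utilities)
    return [u / total for u in utilities]


def wilson_flows(origin_spend: Sequence[float], attractiveness: Sequence[float],
                 costs: Sequence[Sequence[float]], alpha: float = 1.0,
                 beta: float = 0.1) -> List[List[float]]:
    """Production-constrained spatial interaction model:
    S_ij = O_i * W_j**alpha * exp(-beta * c_ij) / sum_k W_k**alpha * exp(-beta * c_ik).
    Each row of the result sums to the spending O_i available at origin i."""
    flows = []
    for spend, row in zip(origin_spend, costs):
        weights = [w ** alpha * math.exp(-beta * c) for w, c in zip(attractiveness, row)]
        total = sum(weights)
        flows.append([spend * wt / total for wt in weights])
    return flows


if __name__ == "__main__":
    # Two equally sized centres; the second is twice as far away.
    print(huff_probabilities([100.0, 100.0], [1.0, 2.0], lam=2.0))
    # Two residential zones spending on three retail centres.
    print(wilson_flows([100.0, 50.0], [2.0, 5.0, 1.0], [[1.0, 2.0, 3.0], [3.0, 1.0, 2.0]]))
```

Both models share the same structure, attractiveness discounted by a distance-decay term and normalized over competing centers. They differ mainly in the decay function (power law vs. exponential) and in whether the output is read as a probability or as a money flow.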

2.2 The Emergence of LBSNs: Opportunities and Challenges

In the past decade, several developments have together made an increasing amount of content-rich data available to analysts: advances in wireless communication technologies; the growing universal adoption of location-aware devices such as mobile phones and tablets equipped with Global Positioning System (GPS) receivers; sensors placed inside these devices, attached to cars and embedded in infrastructure; remote sensors carried by aerial and satellite platforms; and Radio Frequency Identification (RFID) tags attached to objects, all complemented by the development of geospatial information system (GIS) technologies. With the emergence and growing popularity of social networks and location-aware services, the next step was to combine these two technologies, which resulted in the introduction of location-based social networks (LBSNs) (Kheiri et al. 2016). Because such networks act as a bridge between a user’s real-life and online activities (Kheiri et al. 2016), the data obtained from them are among the most important sources of spatial data and give researchers in business-related fields a unique opportunity to study consumers’ behavioral patterns precisely.

With these advancements, the question of optimal store placement, like many other scientific problems, has entered a new era of fast, diverse and voluminous data, the terms usually used to describe big data. The ease of capturing, recording and processing data from digital sources such as LBSNs is shaping what is being referred to as the fourth major scientific paradigm, following empirical science describing natural phenomena, theoretical science using models and generalization, and computational science simulating complex systems (Miller and Goodchild 2016). Since optimal retail store placement is clearly geographic in nature, the introduction of LBSNs, which are rich sources of geo-tagged data, is a rare and valuable opportunity for scientists and marketers. Liu and his colleagues (Liu et al. 2015) introduced the term “social sensing” to describe the process of, and the different approaches to, analyzing spatial big data. The term “sensing” reflects two aspects of such data. First, these data can be considered a complementary source of information to remote sensing data, because they can record the socio-economic characteristics of users, descriptive information that remote sensing data cannot offer. Second, such data follow the concept of volunteered geographic information (VGI), introduced by Goodchild (2006), meaning that every individual in today’s world can be considered a sensor transmitting data as they move. However, like any other scientific advancement, the application of these new data sources brings a mixture of opportunities and challenges.
For example, the small proportion of LBSN users relative to the overall population of retail store customers, and the age profile of these users, who are mostly between 15 and 30 years old, may introduce unwanted sampling bias (Lloyd and Cheshire 2017). Moreover, such data are inherently heterogeneous and contain noise and outliers. Therefore, working with LBSN data should always include a pre-processing step that applies specific methods to eliminate noise and irrelevant records. For instance, some researchers eliminate duplicate check-ins and the records of users who checked in only once, in order to obtain a more homogeneous and noise-free data set (Kheiri et al. 2016).
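The pre-processing step mentioned above, removing duplicate check-ins and then discarding users who checked in only once, can be sketched as follows. This is a minimal illustration under stated assumptions. Check-ins are taken to be (user_id, venue_id, timestamp) tuples, and only exact repeats count as duplicates. Real pipelines may instead merge repeated check-ins within a time window. The function and type names are hypothetical.

```python
from collections import Counter
from typing import Hashable, Iterable, List, Tuple

CheckIn = Tuple[Hashable, Hashable, int]  # (user_id, venue_id, unix timestamp) - assumed layout


def preprocess_checkins(checkins: Iterable[CheckIn]) -> List[CheckIn]:
    """Drop exact duplicate check-ins, then drop users left with a single check-in."""
    seen = set()
    deduplicated = []
    for record in checkins:
        if record not in seen:  # keep the first occurrence only
            seen.add(record)
            deduplicated.append(record)
    per_user = Counter(user for user, _, _ in deduplicated)
    return [record for record in deduplicated if per_user[record[0]] > 1]


if __name__ == "__main__":
    raw = [("u1", "v1", 100), ("u1", "v1", 100), ("u1", "v2", 200),
           ("u2", "v1", 300), ("u3", "v3", 400), ("u3", "v3", 400)]
    print(preprocess_checkins(raw))
```

Deduplication runs before the per-user count on purpose: user u3 above appears twice in the raw data, but both records are the same check-in, so u3 is correctly treated as a one-time user and removed.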

Nevertheless, LBSN data offer vast opportunities as well. First, LBSN data offer high temporal granularity at a worldwide scale and can be accessed very quickly (Lloyd and Cheshire 2017). Furthermore, LBSN data offer a more detailed description of geographic objects and spatial interactions, even though they may at first seem like weak sources of information. For example, two adjoining restaurants may be hard to distinguish in traditional geotagged data, but LBSNs offer additional information such as venue categories, user-generated comments and recommendations of popular venues, so adjacent venues can easily be told apart (Kheiri et al. 2016). In addition, traditional data such as demographic, tax and land use data are recorded in standard spatial units, and aggregation based on such predefined units may be subject to the well-known modifiable areal unit problem (MAUP). In LBSNs, by contrast, venues are not defined inside traditional administrative boundaries; each venue is marked by its exact location (Zhou and Zhang 2016). Therefore, analyzing LBSN data and extracting meaningful, practical patterns from them may help businesses attract more customers and improve their financial and operational outcomes (Papalexakis et al. 2011). Accordingly, researchers in the past decade have focused some of their efforts on exploiting LBSN data to solve the retail store placement problem.
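The modifiable areal unit problem can be made concrete with a toy, one-dimensional example. The venue positions below are hypothetical, and real zoning is two-dimensional. Aggregating the same ten venues into zones of equal width but with shifted boundaries changes which zones look dense.

```python
import math
from collections import Counter
from typing import Iterable


def zone_counts(positions: Iterable[float], width: float, origin: float = 0.0) -> Counter:
    """Aggregate point locations into equal-width 1-D zones whose boundaries start at `origin`."""
    return Counter(math.floor((x - origin) / width) for x in positions)


if __name__ == "__main__":
    venues = [1, 2, 3, 4, 6, 7, 11, 12, 13, 14]  # hypothetical venue positions along a street (km)
    print(sorted(zone_counts(venues, width=5).items()))              # zoning A
    print(sorted(zone_counts(venues, width=5, origin=2.5).items()))  # zoning B, shifted 2.5 km
```

Zoning A reports two equally dense zones (4 venues each), while zoning B reports a single dense zone in a different place. Keeping the exact venue coordinates, as LBSNs do, avoids committing to either zoning.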

With one or two exceptions, most of the research using LBSN data for site selection has taken advantage of recent advances in feature selection. Based on the unique attributes and types of information that can be retrieved from LBSN data, a number of features that influence retail store popularity are defined and then used to predict the popularity of given stores. Such techniques are discussed in the next section.
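A minimal sketch of this feature-based recipe follows, loosely in the spirit of the geographic and mobility features of Karamshuk et al. (2013) reviewed in Sect. 2.3. The four feature definitions are simplified assumptions, not the exact formulas of any cited paper: venue density within a radius, entropy of neighboring venue categories, share of same-category competitors, and total check-ins nearby as a crude mobility signal. `Venue`, `location_features` and `rank_candidates` are hypothetical names, and planar coordinates in metres are assumed.

```python
import math
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass
class Venue:
    x: float       # projected planar coordinates in metres (assumption)
    y: float
    category: str  # e.g. "cafe", "bar"
    checkins: int  # total check-ins recorded for the venue


def neighbours(venues: List[Venue], x: float, y: float, radius: float) -> List[Venue]:
    """Venues within `radius` metres of the candidate point (Euclidean distance)."""
    return [v for v in venues if math.hypot(v.x - x, v.y - y) <= radius]


def location_features(venues: List[Venue], x: float, y: float, target_category: str,
                      radius: float = 200.0) -> Dict[str, float]:
    near = neighbours(venues, x, y, radius)
    n = len(near)
    counts = Counter(v.category for v in near)
    # Geographic features: how many venues, how mixed they are, how many are rivals.
    entropy = -sum((c / n) * math.log(c / n) for c in counts.values()) if n else 0.0
    competitiveness = counts[target_category] / n if n else 0.0
    return {
        "density": float(n),
        "neighbour_entropy": entropy,
        "competitiveness": competitiveness,
        # Mobility-style feature: how busy the surrounding area is.
        "area_popularity": float(sum(v.checkins for v in near)),
    }


def rank_candidates(venues: List[Venue], candidates: Sequence[Tuple[float, float]],
                    target_category: str, feature: str, radius: float = 200.0):
    """Rank candidate (x, y) sites by a single feature, highest first."""
    return sorted(candidates,
                  key=lambda p: location_features(venues, p[0], p[1], target_category, radius)[feature],
                  reverse=True)
```

Ranking by one feature corresponds to the “individual feature” strategy. The studies reviewed next instead learn how to combine several features into a single popularity prediction.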

2.3 LBSN-Based Feature Selection Approaches

Using LBSN data to define a set of features for studying the factors that influence retail store popularity is a rather new approach to the store site selection problem. In 2013, Karamshuk and his colleagues (Karamshuk et al. 2013) were the first to present a framework based on this approach. They assessed the popularity of three coffee shop and restaurant chains in New York City using data retrieved from the popular LBSN Foursquare. To accomplish this goal, they introduced a number of features that capitalize on the main characteristics of Foursquare data and classified these features into two major groups: geographic and mobility features. They then proposed two ways of using these features to assess the popularity of a given store: using each feature individually for popularity prediction, and combining the features with a number of techniques, including a supervised learning-to-rank algorithm (RankNet). Comparing the results of these approaches, they concluded that combining the features with RankNet offers higher accuracy. Accuracy was evaluated with the Normalized Discounted Cumulative Gain (NDCG@k) measure, which compares the top-k list of places ranked by predicted popularity with the actual popularity of the stores, giving more weight to places near the top of the list.
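NDCG@k, the evaluation measure mentioned above, can be sketched as follows. This minimal version assumes linear gains, meaning each store’s actual popularity (for example, its check-in count) is used directly as its relevance, together with the common log2 position discount. Karamshuk et al. may define gains differently, and the function names are hypothetical.

```python
import math
from typing import Dict, Sequence


def dcg_at_k(gains: Sequence[float], k: int) -> float:
    """Discounted cumulative gain of the first k gains (position 1 has discount log2(2) = 1)."""
    return sum(g / math.log2(pos + 2) for pos, g in enumerate(gains[:k]))


def ndcg_at_k(predicted_order: Sequence[str], true_popularity: Dict[str, float], k: int) -> float:
    """NDCG@k: DCG of the predicted ranking divided by the DCG of the ideal ranking."""
    gains = [true_popularity[store] for store in predicted_order]
    ideal = sorted(true_popularity.values(), reverse=True)
    best = dcg_at_k(ideal, k)
    return dcg_at_k(gains, k) / best if best > 0 else 0.0


if __name__ == "__main__":
    popularity = {"store_a": 120.0, "store_b": 40.0, "store_c": 75.0}  # hypothetical check-ins
    print(ndcg_at_k(["store_a", "store_c", "store_b"], popularity, k=2))  # ideal order
    print(ndcg_at_k(["store_b", "store_c", "store_a"], popularity, k=2))  # poor order
```

A score of 1.0 means the predicted top-k list matches the ideal ordering. Because of the logarithmic discount, mistakes near the top of the list cost more than mistakes further down.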

Yu and his colleagues (2016) tackle another aspect of the store placement problem: choosing a shop type from a list of candidate types for a given location. Building on the feature-based approach of Karamshuk et al., they present a list of features for assessing the popularity of the candidate store types, extracting the needed information from two different LBSNs, Baidu and Dianping. In their framework, they use a matrix factorization technique to combine the selected features and recommend the best possible shop type (popularity-wise) for a specific location. Finally, they evaluate the framework by calculating its prediction accuracy and comparing it with baseline methods including logistic regression, decision trees, SVMs and Bayesian classification. The results suggest that the matrix factorization method is superior to the baseline methods in terms of recommendation precision.
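The matrix factorization idea can be illustrated on a small, hypothetical location-by-shop-type popularity matrix in which some entries are unobserved. The sketch below is plain stochastic-gradient matrix factorization with a global mean and L2 regularization. It is not Yu et al.’s model, which incorporates many LBSN-derived features, and the data, hyperparameters and names are all assumptions.

```python
import math
import random
from typing import List, Sequence, Tuple

Observation = Tuple[int, int, float]  # (location index, shop-type index, popularity score)


def predict(mu: float, P: List[List[float]], Q: List[List[float]], i: int, j: int) -> float:
    """Predicted popularity of shop type j at location i: global mean plus latent interaction."""
    return mu + sum(p * q for p, q in zip(P[i], Q[j]))


def factorize(observed: Sequence[Observation], n_locations: int, n_types: int, rank: int = 2,
              lr: float = 0.01, reg: float = 0.02, epochs: int = 2000, seed: int = 0):
    rng = random.Random(seed)
    mu = sum(r for _, _, r in observed) / len(observed)  # global mean popularity
    P = [[rng.gauss(0.0, 0.1) for _ in range(rank)] for _ in range(n_locations)]
    Q = [[rng.gauss(0.0, 0.1) for _ in range(rank)] for _ in range(n_types)]
    for _ in range(epochs):
        for i, j, r in observed:
            err = r - predict(mu, P, Q, i, j)
            for f in range(rank):  # simultaneous SGD step on both latent vectors
                p_if, q_jf = P[i][f], Q[j][f]
                P[i][f] += lr * (err * q_jf - reg * p_if)
                Q[j][f] += lr * (err * p_if - reg * q_jf)
    return mu, P, Q


def rmse(observed: Sequence[Observation], mu: float, P, Q) -> float:
    return math.sqrt(sum((r - predict(mu, P, Q, i, j)) ** 2 for i, j, r in observed) / len(observed))


def recommend_type(mu: float, P, Q, location: int, candidate_types: Sequence[int]) -> int:
    """Candidate shop type with the highest predicted popularity at `location`."""
    return max(candidate_types, key=lambda j: predict(mu, P, Q, location, j))


if __name__ == "__main__":
    obs = [(0, 0, 5.0), (0, 1, 3.0), (1, 0, 4.0), (1, 2, 1.0),
           (2, 1, 1.0), (2, 2, 5.0), (3, 0, 1.0), (3, 2, 4.0)]
    mu, P, Q = factorize(obs, n_locations=4, n_types=3)
    print(round(rmse(obs, mu, P, Q), 3))
    print(recommend_type(mu, P, Q, location=1, candidate_types=[1]))  # only unobserved type at loc 1
```

The learned latent vectors let the model fill in missing location and shop-type cells, which is what turns popularity prediction into a recommendation.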

Wang and Chen (2016) propose a framework that forecasts the popularity of a number of candidate locations for a new restaurant, based specifically on user-generated reviews. They extract restaurant reviews from Yelp to assess the predictive power of their framework, which combines features using three different regression models (ridge regression, support vector regression and gradient boosted regression trees). For performance evaluation, they use the Root Mean Square Error (RMSE) to test prediction precision, Spearman’s rank correlation coefficient to measure prediction accuracy relative to the ground truth, and Mean Average Precision (MAP) to evaluate the ranking accuracy of relevant locations for a specific restaurant chain.
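The three evaluation measures named above have standard definitions, sketched here in plain Python. Spearman’s coefficient is computed as the Pearson correlation of rank vectors, with tied values given their average rank, and average precision divides by the number of relevant locations. How Wang and Chen define “relevant” locations and aggregate per chain is not reproduced here, and all names are hypothetical.

```python
import math
from typing import Iterable, List, Sequence, Set, Tuple


def rmse(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """Root mean square error between predicted and actual popularity values."""
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual))


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def spearman(a: Sequence[float], b: Sequence[float]) -> float:
    """Spearman's rho as the Pearson correlation of the two rank vectors."""
    ra, rb = average_ranks(a), average_ranks(b)
    n = len(ra)
    ma, mb = sum(ra) / n, sum(rb) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(ra, rb))
    var_a = sum((x - ma) ** 2 for x in ra)
    var_b = sum((y - mb) ** 2 for y in rb)
    return cov / math.sqrt(var_a * var_b)


def average_precision(ranked: Sequence[str], relevant: Set[str]) -> float:
    """Mean of precision@k over the positions k at which a relevant location appears."""
    hits, total = 0, 0.0
    for k, item in enumerate(ranked, start=1):
        if item in relevant:
            hits += 1
            total += hits / k
    return total / len(relevant) if relevant else 0.0


def mean_average_precision(queries: Iterable[Tuple[Sequence[str], Set[str]]]) -> float:
    """MAP over several ranked lists, e.g. one per restaurant chain."""
    scores = [average_precision(ranked, relevant) for ranked, relevant in queries]
    return sum(scores) / len(scores)
```

RMSE judges the predicted popularity values themselves, whereas Spearman’s coefficient and MAP judge only the ordering of candidate locations. The latter two are closer to the practical question of which site to choose.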

Rahman and Nayeem (2017) use a framework similar to those described above to select locations for live campaigns. Using Foursquare data, they compare the direct use of individual features with a combination of features produced by support vector regression, and show that using the regression model for feature combination offers higher accuracy and better predictability (Table 2).

2.4 Discussion and Future Research

A review of the related literature on retail store site selection shows that, while some researchers still attempt to exploit the advantages of traditional theories to find an optimal location for stores, in recent years a number of researchers have focused on more modern, data-driven frameworks built on the idea of feature selection based on information mined from LBSN data. The comparisons reported in the latter articles, between the results obtained from the direct use of defined features and those obtained with different feature combination techniques, show that combining features offers more precision and accuracy than using individual features directly as prediction tools. Although Karamshuk et al. (2013) showed that using a machine learning method to combine features may offer better results than some baseline methods such as regression models, the particular method they used (RankNet) is not considered state of the art, as it does not take advantage of ensemble learning techniques to maximize accuracy. Moreover, there is not yet a clear answer as to which of the methods used in recent papers is the best approach to site selection. Hence, investigating and comparing the outcomes of different feature combination techniques, including new ensemble machine learning approaches, matrix factorization and different regression models, in order to determine the best possible framework may be an interesting direction for future research.

3 Conclusion

For a retail store manager, one of the most complicated yet important decisions may be determining, and continually improving, the store placement strategy. Choosing the right location inevitably affects the overall success or failure of a store. Hence, finding an optimal approach to making this decision has long interested researchers and managers. The application of checklists, analogues, ratios and computational approaches based on the central place theory, gravity models and the principle of minimum differentiation are some of the traditional techniques that researchers have proposed and marketers have used to make better decisions about a store’s location over the past century (Brown 1993; Hernandez et al. 1998; Litz 2014).

In recent years, the technological advancements that led to the emergence of LBSNs have drawn the attention of researchers in many scientific fields to the data retrieved from these networks, including marketing researchers looking for new data-driven approaches to optimal retail store placement. Although LBSN data may force researchers to deal with a number of new challenges, the opportunities and advantages they offer seem too valuable to ignore. Researchers have therefore tried in recent years to capitalize on the unique characteristics of LBSN data to present a new approach to the century-old question of optimal store placement, by defining a set of features and combining them using different algorithms. Although the results of such studies can be deemed promising in terms of prediction accuracy, there is still room to extend the presented frameworks with new and improved data mining and machine learning techniques for feature combination in order to achieve the best possible results.

Notes

1. LBSN.

2. Global Positioning System.

3. Radio Frequency Identification.

4. Geospatial Information Systems.

5. VGI.

6. MAUP.

8. Normalized Discounted Cumulative Gain approach.

12. RMSE.

13. MAP.

References

Ali, M., Greenbaum, S.: A spatial model of the banking industry. J. Finan. XXXII(4), 1283–1303 (1977)

Aoyagi, M., Okabe, A.: Spatial competition of firms in a two dimensional bounded market. Reg. Sci. Urban Econ. 23, 259–289 (1993)

Arcaute, E., Molinero, C., Hatna, E., Murcio, R., Vargas-Ruiz, C., Masucci, A.P., Batty, M.: Cities and Regions in Britain through hierarchical percolation (2015)

Berry, B.J.L., Garrison, W.L.: The functional bases of the central place hierarchy. Econ. Geogr. 34(2), 145–154 (2014)

Birkin, M.: Customer targeting, geodemographics and lifestyle approaches. GIS for Bus. Serv. Plan. 104–138 (1995). https://www.amazon.com/Business-Service-Planning-Paul-Longley/dp/0470235101

Boventer, E.: Walter Christaller’s central places and peripheral areas: the central place theory in retrospect. J. Reg. Sci. 9(1), 117–124 (1969)

Brown, S.: Retail location theory: evolution and evaluation. Int. Rev. Retail Distrib. Consum. Res. 3, 185–229 (1993)

Brunner, J.A., Mason, J.L.: The influence of time upon driving shopping preference. J. Mark. 32(2), 57–61 (1968)

Booms, B.H., Bitner, M.J.: Marketing strategies and organization structures for service firms. In: Donnelly, J.H., George, W.R. (eds.) Marketing of Services, pp. 47–51. American Marketing Association, Chicago, IL (1981)

Cadwallader, M.: Towards a cognitive gravity model: the case of consumer spatial behaviour. Reg. Stud. 15(4), 37–41 (1981). https://doi.org/10.1080/09595238100185281

Cardillo, A., Scellato, S., Latora, V., Porta, S.: Structural properties of planar graphs of urban street patterns, pp. 1–8 (2006). https://doi.org/10.1103/PhysRevE.73.066107

Chen, L., Tsai, C.: Data mining framework based on rough set theory to improve location selection decisions: a case study of a restaurant chain. Tourism Manag. 53, 197–199 (2016)

Daniels, M.J.: Central place theory and sport tourism impacts, 34(2), 332–347 (2007). https://doi.org/10.1016/j.annals.2006.09.004

Devletoglou, N.E.: A dissenting view of duopoly and spatial competition. Economica 32(126), 140–160 (1965)

Drezner, Z.: Competitive location strategies for two facilities. Reg. Sci. Urban Econ. 12(4), 485–493 (1982). https://doi.org/10.1016/0166-0462(82)90003-5

González-Benito, Ó., Muñoz-Gallego, P.A., Kopalle, P.K.: Asymmetric competition in retail store formats: evaluating inter- and intra-format spatial effects. J. Retail. 81(1), 59–73 (2005). https://doi.org/10.1016/j.jretai.2005.01.004

Goodchild, M.F.: Citizens As Sensors: The World Of Volunteered Geography, pp. 1–15 (2006)

Harris, B.: A note on the probability. J. Reg. Sci. 5(2), 31–35 (1964)

Hartwick, J.M., Hartwick, P.G.: Duopoly in space. Can. J. Econ. 4(4), 485–505 (1971)

Hehenkamp, B., Wambach, A.: Survival at the center-the stability of minimum differentiation. J. Econ. Behav. Organ. 76(3), 853–858 (2010). https://doi.org/10.1016/j.jebo.2010.09.018

Hernandez, T., Bennison, D., Cornelius, S.: The organisational context of retail locational planning. GeoJournal 45, 299–300 (1998)

Hernández, T., Bennison, D.: The art and science of retail location decisions. Int. J. Retail Distrib. Manag. Emerald 28(8), 357–367 (2005)

Hillier, B., Hanson, J.: The Social Logic Of Space. Cambridge University Press (1984)

Hillier, B., Penn, A., Hanson, J., Grajewski, T., Xu, J.: Natural movement: or, configuration and attraction in urban pedestrian movement. Environ. Plan. B: Plan. Des. 20, 29–66 (1993)

Hotelling, H.: Stability in competition. Econ. J. 39(153), 41–57 (1929)

Huff, D.: A note on the limitations of intraurban gravity models. Land Econ. 38(1), 64–66 (1962)

Huff, D.: A probabilistic analysis of shopping center trade areas. Land Econ. 39(1), 81–90 (1963)

Huff, D.L.: Defining and estimating a trading area. J. Mark. 28, 34–38 (1964)

Karamshuk, D., et al.: Geo-Spotting: Mining Online Location-based Services for optimal retail store placement (2013)

Kheiri, A., Karimipour, F., Forghani, M.: Intra-urban movement pattern estimation based on location based social networking data. J. Geomat. Sci. Technol. 6(1), 141–158 (2016)

Kubis, A., Hartmann, M.: Analysis of location of large-area shopping centres, a probabilistic gravity model for the Halle–Leipzig Area. Jahrbuch für Regionalwissenschaft 27, 43–57 (2007). https://doi.org/10.1007/s10037-006-0010-3

Lakshmanan, J.R., Hansen, W.G.: A retail market potential model. J. Am. Inst. Plan. 31(2), 134–143 (1965). https://doi.org/10.1080/01944366508978155

Lerner, A.P., Singer, H.W.: Some notes on duopoly and spatial competition. J. Polit. Econ. 45(2), 145–186 (1937)

Li, Y., Liu, L.: Assessing the impact of retail location on store performance: a comparison of Wal-Mart and Kmart stores in Cincinnati. Appl. Geogr. 32(2), 591–600 (2012). https://doi.org/10.1016/j.apgeog.2011.07.006

Litz, R.A.: Does small store location matter? A test of three classic theories of retail location. J. Small Bus. Entrepreneurship, 37–41 (2014). https://doi.org/10.1080/08276331.2008.10593436

Liu, Y., Liu, X., Gao, S., Gong, L., Kang, C., Zhi, Y., Chi, G.: Social sensing: a new approach to understanding our socioeconomic environments. Ann. Assoc. Am. Geogr. 37–41, May, 2015. https://doi.org/10.1080/00045608.2015.1018773

Lloyd, A., Cheshire, J.: Computers, environment and urban systems deriving retail centre locations and catchments from geo-tagged Twitter data. CEUS 61, 108–118 (2017). https://doi.org/10.1016/j.compenvurbsys.2016.09.006

Miller, H., Goodchild, M.F.: Data-driven geography. GeoJournal, August 2015. https://doi.org/10.1007/s10708-014-9602-6

Nakamura, D.: Social participation and social capital with equity and efficiency: an approach from central-place theory. Appl. Geogr. 49, 54–57 (2014). https://doi.org/10.1016/j.apgeog.2013.09.008

Nelson, R.L.: The selection of retail locations. F.W. Dodge Corp., New York (1958)

Nogueira, M., Crocco, M., Figueiredo, A. T., Diniz, G.: Financial hierarchy and banking strategies : a regional analysis for the Brazilian case, pp. 1–18 (2014). https://doi.org/10.1093/cje/beu008

Ottino-Loffler, B., Stonedahl, F., Wilensky, U.: Spatial Competition with Interacting Agents, pp. 1–16 (2017)

Papalexakis, E.E., Pelechrinis, K., Faloutsos, C.: Location Based Social Network Analysis Using Tensors and Signal Processing Tools (2011)

Piovani, D., Molinero, C., Wilson, A.: Urban retail dynamics : insights from percolation theory and spatial interaction modelling, pp. 1–11 (2017)

Porta, S., Strano, E., Iacoviello, V., Messora, R., Latora, V., Cardillo, A., Scellato, S.: Street centrality and densities of retail and services in Bologna, Italy 36, 450–466 (2009). https://doi.org/10.1068/b34098

Rafiq, M., Ahmed, P.K.: Using the 7Ps as a generic marketing mix. Mark. Intell. Plan. 13(9), 4–15 (1992)

Rahman, K., Nayeem, M.A.: Finding suitable places for live campaigns using location-based services, pp. 1–6 (2017)

Rushton, G.: Analysis of spatial behavior by revealed space preference. Ann. Assoc. Am. Geogr. 59, 391–400 (1969)

Reilly, W.J.: Methods for the Study of Retail Relationships. University of Texas, Bureau of Business Research, Bulletin No. 2944, Austin (1929)

Satani, N., Uchida, A., Deguchi, A., Ohgai, A., Sato, S., Hagishima, S.: Commercial facility location model using multiple regression analysis. Science 22(3), 219–240 (1998)

Smithies, A.: Optimum location in spatial competition. J. Polit. Econ. 49(3), 423–439 (1941)

Suárez-Vega, R., Santos-Peñate, D.R., Dorta-González, P., Rodríguez-Díaz, M.: A multi-criteria GIS based procedure to solve a network competitive location problem. Appl. Geogr. 31(1), 282–291 (2011). https://doi.org/10.1016/j.apgeog.2010.06.002

Tabuchi, T.: Two-stage two-dimensional spatial competition between two firms. Reg. Sci. Urban Econ. 24(2), 207–227 (1994). https://doi.org/10.1016/0166-0462(93)02031-W

Taneja, S.: Technology Moves. In: Chain Store Age, pp. 136–137 (1999)

Teller, C., Reutterer, T.: The evolving concept of retail attractiveness: what makes retail agglomerations attractive when customers shop at them? J. Retail. Consum. Serv. 15(3), 127–143 (2008). https://doi.org/10.1016/j.jretconser.2007.03.003

Voorhees, A.: Geography of Prices and Spatial Interaction (1957)

Wang, F., Chen, C., Xiu, C., Zhang, P.: Location analysis of retail stores in Changchun, China: a street centrality perspective. Cities 41, 54–63 (2014). https://doi.org/10.1016/j.cities.2014.05.005

Wang, F., Chen, L.: Where to Place Your Next Restaurant? Optimal Restaurant Placement via Leveraging User-Generated Reviews, pp. 2371–2376 (2016)

Warnts, W.: Geography of prices and spatial interaction. In: Papers and Proceedings of the Regional Science Association, vol. 3 (1957)

Wilson, A.G.: A statistical theory of spatial distribution models. Transp. Res. 1(3), 253–269 (1967)

Wilson, A.G., Oulton, M.J.: The corner-shop to supermarket transition in retailing: the beginnings of empirical evidence. Environ. Plan. A 15, 265–274 (1983)

Xu, Y., Liu, L.: Gis Based Analysis of Store Closure : a Case Study of an Office Depot Store in Cincinnati, pp. 7–9, June 2004

Yu, Z., Tian, M., Wang, Z., Guo, B.: Shop-type recommendation leveraging the data from social media and location-based services. ACM Trans. Knowl. Discov. Data (TKDD) 11(1), 1–21 (2016)

Zhou, X., Zhang, L.: Crowdsourcing functions of the living city from Twitter and Foursquare data, 406, February 2016. https://doi.org/10.1080/15230406.2015.1128852

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Damavandi, H., Abdolvand, N., Karimipour, F. (2018). The Computational Techniques for Optimal Store Placement: A Review. In: Gervasi, O., et al. Computational Science and Its Applications – ICCSA 2018. ICCSA 2018. Lecture Notes in Computer Science(), vol 10961. Springer, Cham. https://doi.org/10.1007/978-3-319-95165-2_31

DOI: https://doi.org/10.1007/978-3-319-95165-2_31

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-95164-5

Online ISBN: 978-3-319-95165-2

eBook Packages: Computer Science, Computer Science (R0)