Abstract

The fast Fourier transform (FFT) is a widely used algorithm in signal processing applications. FFT hardware architectures are designed to meet the requirements of the most demanding applications in terms of performance, circuit area, and/or power consumption. This chapter summarizes the research on FFT hardware architectures by presenting the FFT algorithms, the building blocks in FFT hardware architectures, the architectures themselves, and the bit reversal algorithm.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

- Reverse Algorithm

- Twiddle Factors

- Triangular Matrix Representation

- CORDIC Algorithm

- Cooley-Tukey Algorithm

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

The Fourier transform is one of the most important tools in digital signal processing. It is used to convert continuous signals in time domain into frequency domain. For discrete data, such as that in digital systems, the discrete Fourier transform (DFT) is used instead. The DFT transforms a finite sequence of equally spaced samples to a corresponding frequency domain representation as follows:

where N denotes the DFT size, x[n] is the input signal in the time domain, and X[k] is the output signal in the frequency domain, which is defined for the frequencies k ∈ [0, N − 1]. Note that both x[n] and X[k] are discrete signals. The coefficients \(W_N^{nk}\) are called twiddle factors and correspond to rotations in the complex plane defined as

where j denotes the imaginary unit.

The original signal x[n] can be recovered from X[k] by calculating the inverse discrete Fourier transform (IDFT):

The arithmetic complexity of the DFT in (1) is O(N 2). However, the DFT contains redundant operations.

The term fast Fourier transform (FFT) refers to various methods that reduce the computational complexity of the DFT. The most popular one is the Cooley-Tukey algorithm [16]. Section 2 discusses FFT algorithms and representations.

For the implementation of FFT hardware architectures, Sect. 3 discusses the building blocks that they consist of, i.e., butterflies, rotators and shuffling circuits. Later, Sect. 4 presents the FFT hardware architectures. They are divided into fully parallel, iterative and pipelined FFTs. The outputs of FFT hardware architectures are generally provided in bit-reversed order. Section 5 explain the bit reversal algorithm used to sort them out. Finally, Sect. 6 summarizes the main conclusions of this chapter.

2 FFT Algorithms

2.1 The Cooley-Tukey Algorithm

The Cooley-Tukey algorithm [16] decomposes the DFT into a set of smaller DFTs, when N is not a prime number. Let us assume that N = N 2 ⋅ N 1 and consider that n and k are calculated as

Then, (1) can be written as

By exploiting the periodicity of the twiddle factors, i.e., \(W_N^{\phi N_2} =W_{N_1}^{\phi }\), \(W_{N}^{\phi N_1}=W_{N_2}^{\phi }\) and \(W_N^{\phi N_1 N_2}= 1\), the equation is transformed into

which finally results in

where the N 1-point and N 2-point DFTs are referred to as inner and outer DFTs, respectively. As a result, an N-point DFT is broken down into N 2 DFTs of size N 1 and N 1 FFTs of size N 2, with twiddle factor multiplications in the middle. This is illustrated in Fig. 1 for N = 16, N 2 = 8 and N 1 = 2.

In general, N can be the product of several numbers, i.e., N = N m−1 ⋅ N m−2 ⋅… ⋅ N 0. Radix-r refers to the case in which N i = r, ∀i = 0…m − 1. The radix-r FFT is derived by expressing n and k as

This results in m =logr N nested r-point DFTs with twiddle factors in between. When r = 2, each nested 2-point DFT is calculated on N∕2 pairs of data and requires a total of N additions. This leads to an arithmetic complexity of \(O(N \log N)\) for the entire FFT, compared to O(N 2) in the DFT in (1).

Finally, the recursive application of Cooley-Tukey principle can be done by starting from the time domain sequence, which results in a decimation-in-time (DIT) decomposition. In a similar fashion, the decimation-in-frequency (DIF) decomposition is obtained by starting from the frequency sequence.

2.2 Representation Using Flow Graphs

Figures 2 and 3 show the flow graphs of the radix-2 DIF and DIT FFT algorithms, respectively. The numbers at the input of the graph represent the indexes of the input sequence, x[n], whereas those at the output are the frequencies, k, of the output signal X[k]. The flow graphs consist of a series of n stages, s ∈{1…n}. At each stage, additions, subtractions and rotations are calculated. Additions and subtractions come in pairs, forming the so called butterflies, which have the shape △ ▽. The upper output of the butterfly provides the sum of the inputs and the lower

part subtracts the lower input from the upper one.

Each number ϕ in between butterflies represents a rotation, which corresponds to a complex multiplication by

Rotations by ϕ ∈{0, N∕4, N∕2, 3N∕4} are called trivial rotations, due to the fact that they correspond to multiplications by 1, −j, −1 or j. Trivial rotations can easily be calculated by interchanging the real and imaginary parts of the inputs and/or changing their signs.

Finally, an index I is added to the left of the flow graph, together with its binary representation b n−1 b n−2…b 1 b 0. This index together with the stage is used to refer to the rotations in the flow graph. For instance, the rotation with index I = 14 at stage s = 1 in Fig. 2 is ϕ s(I) = ϕ 1(14) = 6. Through the chapter, the symbol (≡) is used to relate decimal numbers and their binary representation, e.g., I ≡ b n−1 b n−2…b 1 b 0.

When the FFT size N is large, it is not feasible to represent the FFT by a flow graph. In this case, the binary tree and the triangular matrix representations are useful tools to represent the algorithms in a simple manner.

Note that any FFT flow graph of the same radix will look the same. The only difference is where the twiddle factor multiplications are positioned. This can be seen by comparing Figs. 2 and 3. Hence, all the following algorithms only differ in the twiddle factor multiplications, although this may provide significant differences when implemented.

2.3 Binary Tree Representation

The binary tree representation [61, 83] is a generalization of the Cooley-Tukey algorithm. In the Cooley-Tukey algorithm, N is decomposed into a product of factors, i.e., N = N m−1 ⋅ N m−2 ⋅… ⋅ N 0. This results in nested DFTs where each DFT contains the previous one. Conversely, in the binary tree representation, N is only split in two factors, i.e., N = P ⋅ Q, which is analogous to (7). Then, both P and Q are again decomposed in two factors each. This process repeats iteratively forming a tree in which each node is split in two unless it is a leaf node.

A binary tree diagram [61] is an effective way of understanding the difference between FFT algorithms. For instance, Fig. 4 shows all the binary tree representations for N = 32. Note that each node has at most two branches.

In the binary tree representation, the upper node is assigned a value n to represent the 2n-point DFT. Then, n is split into p and q, where n = p + q, P = 2p and Q = 2q. After the first iteration, the remaining DFTs are again divided into smaller DFTs using the same procedure, so that the value of a node is the same as the sum of the sub-nodes.

The values of the sub-nodes can be chosen arbitrarily at each iteration. This leads to a large number of alternatives. In general, for N = 2n, the number of N-point FFT algorithms generated by using binary trees is [83]

which comes from all the possible selections at each iteration. For a 32-point FFT, the number of FFT algorithms according to (10) is 14, which corresponds to the cases in Fig. 4.

The twiddle factors at each stages are directly obtained from the binary tree. This is done by going across the binary tree from left to right. In this process, the final leafs of the tree represented by 1 are skipped, as they correspond to radix-2 DFT operations. For example, the sequence of numbers in Fig. 4a is 5, 4, 3, 2. Given this sequence, the number of angles of the corresponding twiddle factors is the power of these numbers. Thus, for this example the twiddle factors are W 32, W 16, W 8, and W 4. This corresponds to the DIF decomposition, as it is obtained by decimating a 2-point DFT at each iteration from the input samples towards the output frequencies. How to generate the rotation coefficients ϕ for any FFT stage is described in [83].

2.4 Triangular Matrix Representation

The triangular matrix representation [24] is based on the ideas that rotations can be moved among FFT stages. The triangular matrix representation of some typical 16-point FFT algorithms is shown in Fig. 5. Rows are numbered as x = 1, …, n − 1 from top to bottom and columns as y = 1, …, n − 1 from left to right. Each element in row x and column y, M xy, corresponds to a set of rotations that can be moved through several stages. Its value is the stage where these rotations are placed, which must be in the range x ≤ M xy ≤ y.

Accordingly, the rotation coefficients ϕ s(I) at any FFT stage s are calculated as

Note that the numbering of rows and columns differs from the original paper [24], where the variables i = n − x and j = n − y − 1 are used instead of x and y.

By applying Eq. (11) to Fig. 5a, the rotation coefficients are obtained as

which correspond to the radix-2 DIF algorithm in Fig. 2. For instance, if s = 1 and I = 14 ≡ 1110 = b 3 b 2 b 1 b 0, then according to (12), ϕ 1(14) = b 3 ⋅ [b 2 b 1 b 0] = 1 ⋅ [110] = 6. This corresponds to the rotation by 6 for s = 1 and I = 14 in Fig. 2. The rotations for the other algorithms in Fig. 5 can be derived in the same way.

In the triangular matrix representation, the radix-2 DIF FFT is the case where all the rotations are in the lowest possible stage, i.e., ∀x, y, M xy = x. Analogously, the radix-2 DIT FFT is the case where all the rotations are in the highest possible stage, i.e., ∀x, y, M xy = y. Finally, the radix-22 DIF FFT is the case where the rotations of the radix-2 DIF algorithm in odd stages are moved to the next even stage, except for those in the main diagonal, which cannot be moved.

Given that x ≤ M xy ≤ y, each element M xy can take y − x + 1 different values. By multiplying all the alternatives, the total number of algorithms that are represented by the triangular matrix as a function of n is

This is a large number of algorithms that includes, among others, all the algorithms representable by a binary tree.

2.5 The Radix in FFTs

The concept of radix has been used since the beginning of the FFT. It serves to distinguish different FFT algorithms. The radix is represented with a base and an exponent, i.e., r = ρ α. The base ρ indicates the size of the butterflies, i.e., the smallest DFT size that is used for the decomposition. Both base and exponent together provide the rotations at the FFT stages.

The first radices to be considered were radix-2, radix-4 and higher powers of two. These algorithms can be derived by the Cooley-Tukey algorithms as in (8).

Split radix [20] was also proposed in the early days. It combines radix-2 and radix-4 into an L-shaped butterfly. Split radix is known for having the least number of non-trivial rotations among FFT algorithms. However, it is seldom used in FFT hardware architectures due to the irregularity in the distribution of rotation.

Some years later, radix-22 was introduced for FFT hardware architectures [45]. From the algorithmic point of view, radix-22 is the same algorithm as radix-4 as both carry out exactly the same arithmetic operations [24]. However, both radices lead to different FFT architectures, due to the fact that radix-22 groups the calculations into 2-point butterflies and radix-4 groups them into 4-point butterflies.

The binary tree decomposition provides radix-2k, for \(k \in {\mathbb {N}}\). Radix-2k is obtained when any node v of the binary tree is split into k and v − k, being k constant. Notice that the concept of radix is not unique any more, as radix-2k may refer to different trees. Furthermore, many FFT algorithms based on the binary tree cannot be described by a single radix, but by a mixed radix, such as radix-24,23. As a result, many algorithms are better referred to by their tree than by their radix. The same happens to the triangular matrix representation, where there are even algorithms that cannot be described by a radix.

2.6 Non-power-of-two and Mixed-Radix FFTs

Most of the FFT designs consider FFT sizes that are powers of two and the rest of the chapter focuses on them. However, there are cases when N is a non-power-of-two and/or is a combination of powers of different radices [11, 88, 105, 106, 111, 112].

When N is a power of the radix, i.e., N = r k, the Cooley-Tukey algorithm is the most suitable approach, even when it is a non-power-of-two. When N is a product of powers of coprime numbers, i.e.,

where r i and r j are coprime ∀i ≠ j, then the Cooley-Tukey algorithm leads to twiddle factors between blocks of different radices. Conversely, the twiddle factors in between those blocks do not appear when using the prime factor algorithm (PFA) [6, 38]. Therefore, the PFA algorithm is recommended under these circumstances.

3 Building Blocks for FFT Hardware Architectures

FFT hardware architectures consist of butterflies, rotators and circuits for data shuffling. In the architectures, butterflies/rotators may be used to calculate one or several of the butterflies/rotations of the flow graph. Circuits for data shuffling are used to generate the data order to the butterflies and rotators in the architecture.

3.1 Butterflies

Butterflies in FFT architectures are characterized by their radix. A radix-ρ butterfly is a circuit with ρ inputs and ρ outputs that calculates an ρ-point DFT. Therefore, it corresponds to a direct implementation of the ρ-point DFT flow graph, where each addition/rotation in the flow graph is translated into an adder/rotator.

Radix-ρ butterflies are used in radix-ρ α FFTs, α ≥ 0. The most common radices for butterflies are radix-2 and radix-4, which cover all radix-2k and radix-4k FFTs. Radix-2 and radix-4 butterflies have the advantage that they only consist of adders, and no rotator is needed. In case of radix-4, its trivial rotation by − j can be embedded by changing the routing of the signals and the signs in the butterfly operations. Conversely, higher-radix butterflies include non-trivial rotators, which increases their cost.

3.2 Rotators

In a digital system with complex signals, a rotation by an angle α can be described as

where X + jY is the result of the rotation and \(C, S \in \mathbb {Z}\) are the real and imaginary part of the rotation coefficient C + jS.

Due the finite word length effects, the rotation by C + jS provides an approximation of the angle α with a certain error, being

where 𝜖 c and 𝜖 s are the relative approximation errors of the cosine and sine components, respectively, and R is the magnitude. The approximation error for a given rotation can then be calculated as [30]

Here, it should be noted that although it is common that R is a power-of-two, any magnitude can be used for the rotators, as long as the magnitude is the same for all the rotators at the same FFT stage [34].

It is often common to map the angle range into one or two quadrants. In this way, the rotators can often be simplified at the cost of a W 2 or W 4 rotator at the end. Which in turn sometimes may be integrated with the preceding butterfly as discussed above. Hence, in the following, the quadrant discussion is sometimes neglected.

The sine and cosine values are typically either stored in a memory or computed using an approximation, e.g., one of the methods discussed in the chapter “Arithmetic” [44]. The size of the sine and cosine memory can be reduced by utilizing octave symmetry, i.e.,

In this way, only sin and cos values for inputs between 0 and \(\frac {\pi }{4}\) must be stored or approximated.

Rotators in FFT hardware architecture usually calculate rotations by different angles at different time instants. These rotation angles are part of a the set of rotations

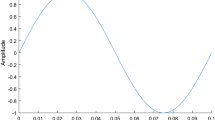

where L is the resolution of the twiddle factor. Note that W L refers to the entire set of L angles, whereas \(W_{L}^{\phi }\) refers to a single angle which is the ϕ-th angle of the set. Any twiddle factor W L divides the circumference in L equal parts and it contains the angles that create these divisions. The twiddle factors W 4, W 8 and W 16 are shown in Fig. 6.

The number of different rotation angles that a rotator has to compute depends on the selected algorithm and architecture. When this number is large, general rotators are used. When the number of angles is small, the rotators may be simplified. Next sections describe different techniques to implement general and simplified rotators. They are mainly based on the use of multipliers or the CORDIC algorithm. An overview of techniques to implement rotators can be found in [73].

3.2.1 Multiplier-Based General Rotators

The most straightforward approach is the direct implementation of Eq. (15), i.e.,

This requires four real-valued multipliers and two real-valued additions, as shown in Fig. 7a.

C and S are generally obtained as \(C = \lfloor R \cos \alpha \rceil \) and \(S = \lfloor R \sin \alpha \rceil \), where ⌊⋅⌉ represents a rounding operation and being R a power of 2. Allowing R to be a non-power-of-two, widens the search for efficient rotation coefficients and the approximation errors can be reduced [30].

Other alternatives are based on rewriting (20) [101] to reduced the number of multipliers from four to three. Among them, the more interesting ones are

and

Both cases include a common term in the equations for X and Y that only need to be calculated once. Thus, when C + S and C − S are precomputed, these cases require three real-valued multiplications and three real-valued additions. Otherwise, they require three real-valued multiplications and five real-valued additions. The structure for (21) is shown in Fig. 7b.

Lifting-based rotators [8, 41, 79] are another way to obtain low-complexity rotations. There exist several different ways to rewrite (15) using lifting, one being

where \(S = \sin {}(\alpha )\) and \(D = \frac {1 - \cos {}(\alpha )}{\sin {}(\alpha )} = \tan {}(\alpha /2)\). This requires three real-valued multiplications and three real-valued additions as shown in Fig. 7c. The other three standard alternatives have a similar form, but differ in how the coefficient corresponding to D is derived. Based on the angle, a structure can be selected to make sure that \(|D| \leq \frac {1-\cos \left (\frac {\pi }{4}\right )}{\sin \left (\frac {\pi }{4}\right )} \approx 0.414\). This avoids the large magnitudes obtained for certain angles, as in the example structure D →∞ when α → π.

3.2.2 Multi-Stage General Rotators

As opposed to the multiplier-based rotators in the previous section, multi-stage rotators perform only a part of the total rotation in each stage. Step k of such a rotator can rotate with a set of angles, typically δ k α k, where δ k ∈{−1, 1} or δ k ∈{−1, 0, 1}, i.e., a fixed angle, α k, can be rotated in either direction or rotated in either direction or no rotation at all. Based on the remaining angle to be rotated, z k, δ k is determined and the remaining angle after the rotation, z k+1, is updated.

The general structure of a rotation stage includes the calculation of x k+1, y k+1 and z k+1 and is shown in Fig. 8. Multi-stage rotators mainly involve the CORDIC algorithm and its variations.

The total angle of rotation is then

where 𝜖 ϕ is the phase error of the approximation.

The available techniques optimize for different goals. For example, the CORDIC algorithm initially discussed, selects the angle of rotation such that the rotator becomes simple. A later discussed technique instead selects the angles to be suited for FFT computations with length power-of-two, i.e., the angle resolution is on a grid with power-of-two resolution.

In the CORDIC algorithm [97], discussed from a general perspective in the chapter “Arithmetic” [44], each stage multiply with the coefficients C k + jS k = 2k + jδ k, where δ k ∈{−1, 1}. The corresponding angles are then

These angles have the property that any angle α can be expressed as a sum of them. This enables the CORDIC algorithm to rotate by any rotation angle. Furthermore, due to the simplicity of the coefficients C k + jS k, each rotation stage is calculated with only 2 adders/subtracters as

According to this, the scaling of each rotation stage is

The term 2k is generally compensated by removing the k LSBs after each rotation stage. The product of the scalings by \(1/\cos {}(\alpha _k)\) at all the stages produces a total scaling of approximately 1.64, which can be compensated by multiplying the output of the CORDIC rotator by

The CORDIC assumes that the initial angle z 0 = α is in the interval [−90∘, 90∘]. Otherwise, this is easily achieved by a trivial rotation by 180∘. Then, direction of the rotations δ k are calculated for k = 0, …, M according to

where z k is the remainder rotation angle at the input of stage k, z M+1 = 𝜖 ϕ, and sign(η) = 1 if η ≥ 0 and sign(η) = −1 if η < 0.

There are multiple variations of the CORDIC algorithm. Some of the main modifications to the CORDIC algorithms are introduced next, and surveys on CORDIC techniques can be found in [2, 76]. For some of the mentioned approaches it is not straightforward to determine the rotation parameters at run-time. Hence, for these methods it is required to perform the design offline and store the control signals in memory rather than the angles. This approach is naturally possible for all techniques, and, as the sequence of angles is often known beforehand, most likely advantageous compared to storing the angle values.

The redundant CORDIC [63, 91] considers that δ k ∈{−1, 0, 1} [91] or even δ k ∈{−2, −1, 0, 1, 2} [63]. This enables several rotation angles at each CORDIC stage. However, the scaling for different angles is different, which demands a specific circuit for scaling compensation. The extended elementary angle set (EEAS) CORDIC [104] and the mixed-scaling-rotation (MSR) CORDIC [66, 81] also follow the idea of increasing the number of rotation angles per rotation stage.

The memoryless CORDIC [27] removes the need for rotation memory to store the FFT rotation angles. Instead, the control signals δ k are generated from a counter. This is advantageous for large FFTs, which have stages with a large number of rotations.

The modified vector rotational (MRV) CORDIC [103] allows for skipping and repeating CORDIC stages, whereas the hybrid CORDIC [47, 89] divides the rotations into a coarse and a fine rotations. These techniques reduce the number of stages and, therefore, the latency of the CORDIC.

The CORDIC II [33] proposes new types of rotation stages: Friend angles, uniformly scaled redundant (USR) CORDIC and nanorotations. They allow for both a low latency and a small number of adders.

Finally, the base-3 rotators [57] consider an elementary angle set that is different to that of the CORDIC. All the rotations are generated by combining a small set of FFT angles. This set fits better the rotation angles of the FFT than that of the CORDIC, which results in a reduction in the rotation error, number of adders and latency of the circuit.

3.2.3 Simplified Multiplier-Based Rotators

Naturally, the real-valued multipliers in Sect. 3.2.1 can be implemented using shift-and-add multiplication, as discussed in the chapter “Arithmetic” [44]. This is specially useful when the rotator only needs to rotate by a single angle.

Initially, consider a rotation by \(\frac {\pi }{4}\). As \(\sin \left (\frac {\pi }{4}\right ) = \cos \left (\frac {\pi }{4}\right )\) only one multiplication coefficient is required for each input (or output). Hence, either each input is multiplied by \(\sin \left (\frac {\pi }{4}\right )\) and then the results are added and subtracted, as shown in Fig. 9a, or the inputs are first added and subtracted and then multiplied by \(\sin \left (\frac {\pi }{4}\right )\), as shown in shown in Fig. 9b. The constant multiplication can be implemented using a optimal single constant multiplier from [42], while the best shift-and-add approximation with a given number of additions for the exact coefficient \(\sin \left (\frac {\pi }{4}\right )\) can be found using the approach in [43].

For a general angle, the rotator shown in Fig. 7a can be used as a starting point. Now, both the multipliers sharing the same input can be simultaneously realized using multiple constant multiplication (MCM) techniques. This is illustrated in Fig. 10a, where the dashed box indicates the two multipliers realized using MCM. An identical MCM block is used for the other input. General MCM algorithms include [40, 98], while the algorithm in [18] is specifically tailored for two constants. An alternative view of the problem is to implement a sum-of-product block for the two multipliers going to the same output, as illustrated in Fig. 10b. However, as the MCM and the sum-of-product problems are dual to each other, exactly the same number of adders are expected. A third option is to consider the problem as a constant matrix multiplication problem [5, 60].

For the rotators in Fig. 7b, c, no sharing can be done between the multipliers. However, due to the initially reduced complexity of the rotator, it may still happen that the total complexity is reduced. It should also be noted that the computations of the lifting-based structure in (23) can be merged to one matrix.

where E = 1 + DS and F = D(2 + SD). This forms another option to realize in any of the ways mentioned above.

When more than one angle is considered it is still possible to use shift-and-add techniques. Consider a W 8 rotator with indices ϕ ∈{0, 1}. For ϕ = 0, the inputs are simply bypassed to the outputs. For ϕ = 1 one of the approaches in Fig. 9 can be used. Then, the correct rotation result is selected by using a multiplexer, as shown in Fig. 11, where the \(\frac {\pi }{4}\)-rotator in Fig. 9a is used.

W 8 rotator based on Fig. 9a

For several non-trivial angles, the naïve approach is to implement all different coefficients by using shift-and-add as an MCM problem and then select the correct angle by a multiplexer. However, the multiplexers can be merged with the shift-and-add network to significantly reduce the complexity [77, 96].

Of special interest is to note that it may be possible to find coefficients with longer word length, but with the same or smaller number of adders [34, 43]. Also, as earlier discussed, the magnitude may be selected as a non-power-of-two to further simplify the computations [34].

3.2.4 Simplified Multi-Stage Rotators

All the techniques mentioned in Sect. 3.2.2 are based on simple rotator stages. Clearly, for a constant coefficient the best selection of stages can be found. This has been explicitly proposed for CORDIC-based rotators [48, 75], although it can be easily generalized to arbitrary types of stages. If several angles are to be realized at different time instances it is of benefit having similar structure for the stages such that multiplexers can be easily introduced.

3.2.5 Rotators Based on Trigonometric Identities

Approaches based on trigonometrical identities [78, 84] search for expressions that are shared among different rotation angles. As a result, a simplified version of the rotator is obtained, which includes a reduced number of adders, multiplexers and multiplications by real constants. For instance, the twiddle factor W 16 can be reduced to constant multiplications by \(\cos (\pi /8)\) and/or \(\sin (\pi /8)\) [84].

3.3 Shuffling Circuits

The purpose of the shuffling circuits in FFT architectures is to provide the data in the correct order needed for FFT stages. At each FFT stage, butterflies operate on pairs of data whose index I differ in b n−s [29]. This can be observed in Fig. 2. For instance, the index of pairs of inputs to butterflies in stage 1 differs in b n−s = b 4−1 = b 3. As I ≡ b 3 b 2 b 1 b 0, I = 0 ≡ 0000 and I = 8 ≡ 1000 differ in b 3 and are processed together in a butterfly at the first stage.

As different FFT stages demand different data orders, circuits for data permutation need to be included in between stages. These circuits have been studied by using life-time analysis and register allocation [74, 80], Kronecker products [39, 52,53,54, 82, 87, 92, 93] and bit-dimension permutations [21,22,23]. The following explanation is based on the latter, and follows the theory in [23].

Bit-dimension permutations are permutations on a group of 2n data defined by a permutation of the n bits that represent the index of those data in binary. In FFTs, each stage processes N = 2n data that we index with I = b n−1…b 1 b 0 as in Fig. 2. For these data, we can define their position [23]. For instance, \({\mathcal {P}} \equiv b_0 b_3 b_2 b_1\) defines a specific data order, where each index I ≡ b 3 b 2 b 1 b 0 is in position \({\mathcal {P}}\). Thus, I = 8 ≡ 1000 is in \({\mathcal {P}} \equiv 0100\). For parallel data, a vertical bar separates serial and parallel data, e.g., \({\mathcal {P}} \equiv b_0 b_3 b_2 | b_1\). The first part of the position until the bar indicates the time of arrival, which is defined as the relative time to the arrival of the first sample to a given point of the circuit. The second part after the bar indicates the terminal of arrival. The number of parallel dimensions p corresponds to the number of bits after the bar. Thus, for \({\mathcal {P}} \equiv b_0 b_3 b_2 | b_1\), the number of parallel dimensions is p = 1 and I = 8 ≡ 1000 is in \({\mathcal {P}} = 010|0\), i.e., it arrives at terminal \(T({\mathcal {P}}) = 0\) at time \(t({\mathcal {P}}) = 2 \equiv 010\).

Different positions define different orders, and the data order is changed when permuting the bits of the position. This is achieved by the shuffling circuits in FFT architectures. Figure 12 shows the main shuffling circuits used in FFT hardware architectures.

The circuit in Fig. 12a is used to calculate a serial-serial bit-dimension permutation. It consists of a buffer of length L and two multiplexers. This circuit is used to change the position of pairs of data separated by L clock cycles. This is done when one of the data is at the input of the circuit and the other one is at the output of the buffer. Then S = 0 is selected so that the position change. Otherwise, when S = 1 data passes through the buffer maintaining the order.

The length of the buffer defines the bit-dimension permutation that is carried out. If x n−1 is the first bit from the left and x 0 is the last one, then a serial-serial permutation that interchanges x j and x k, j > k ≥ p has a buffer length [23]

The circuit in Fig. 12b carries out a serial-parallel permutation. Figure 12c shows how it works: The groups of data A, B, C and D have L data in series each. First, the L data A and C arrive to the circuit at the upper and lower inputs, respectively. Then the L data B and D arrive. The circuit first delays the lower data, then it swaps the groups B and C, and finally it delays the upper part. The result is that data in groups B and C are interchanged.

The length of the buffers for interchanging x j and x k, j ≥ p > k is [23]

Finally, parallel-parallel permutations interchange parallel data flows. This does not require any hardware, as it can be hard wired.

4 FFT Hardware Architectures

There are three main types of FFT hardware architectures: Pipelined, iterative and fully parallel. Next section discusses when to choose each type and the following sections describe the different types.

4.1 Architecture Selection

Table 1 classifies the types of FFT hardware architectures in terms of the input data flow and the number of parallel samples, P. The higher P, the higher the throughput and also the larger the area of the circuit.

Iterative FFT hardware architectures loads data into a memory, then processes them and finally outputs them. During the processing, new data cannot be loaded. Therefore, iterative FFT architectures are suitable for processing data bursts.

The other architectures process data in a continuous flow. The difference among them is the parallelization P. The selection of the architecture depends on the expected performance. The throughput is calculated as Th = P ⋅ f clk where f clk is the clock frequency of the system and P is generally a power of 2. Thus, if the minimum throughput that the system must achieve is Thmin, then P is obtained as

4.2 Fully Parallel FFT

Fully parallel FFT architectures correspond to the direct implementation of the FFT flow graph, i.e., each multiplication/addition in the flow graph is implemented by a separated multiplier/adder. Therefore, the number of hardware components is of order \(O(N \log N)\). Fully parallel FFT architectures offer the maximum parallelization of the FFT algorithm. As a consequence, they provide the maximum throughput and the minimum latency among FFT architectures.

The implementation of rotators as shift-and-add and the selection of the FFT algorithm play an important role in the design of fully parallel FFTs. As each rotator calculates a rotation by a single angle, it is beneficial to use simplified rotators. Complementary to this, the selection of the FFT algorithms determines the number of non-trivial rotations in the fully parallel FFT. Therefore, algorithms with a small number of non-trivial rotations lead to more hardware-efficient implementations.

4.3 Iterative FFT Architectures

Iterative FFT architectures [15, 35, 46, 49, 55, 71, 72, 85, 93, 95] are also called memory-based or in-place FFT architectures. We suggest to call them iterative, as this is the term that reflects their nature better. Although the term memory-based is widely used, we prefer to avoid it due to the facts that non-iterative FFTs may also use memories, and iterative FFTs may use delays instead of memories.

Iterative FFT architectures generally consist of a memory or bank of data memories. Data are read from memory, processed by butterflies and rotators, and stored again in memory. This process repeats iteratively until all the stages of the FFT algorithm are calculated. The advantage of iterative FFTs is the reduction in the number of butterflies and rotators, as they are reused for different stages of the FFT.

A simple iterative FFT architecture is shown in Fig. 13. It consists of a memory and a processing element (PE), which computes the butterfly and rotation. As seen in Fig. 13, after every iteration data are stored in memory so it is necessary to compute whole FFT before it receives new samples. Thus, the memory-based architecture is unable to compute the FFT when data arrives continuously at the input. For a continuous flow, the iterative FFT requires additional memory to store the incoming data while the FFT is being calculated. If the processing time is longer than the time between FFTs, a larger processing element is also needed in order to handle the data flow.

Considering that the processing element is a radix-r butterfly and a set of rotators, the number of iterations of an iterative FFT architecture is calculated as

and the number of clock cycles to process each iteration is N∕r, which leads to a total processing time of

The loading time depends on the FFT size and on the number of input samples that are loaded in parallel to the memory bank:

When reading output data from the memories and writing the new input data are done simultaneously, the latency of the iterative FFT in clock cycles is

and, as N samples are processed every Lat clock cycles, the throughput in samples per clock cycle is

To increase the throughput and decrease the latency in iterative FFT architectures, high-radix processing elements are used. For instance, radix-16 is used in [49]. However, this also increases the amount of hardware of the FFT. Therefore, there is a trade-off between performance and hardware complexity.

The highest degree of parallelization for iterative FFT architectures consists in calculating simultaneously all the operations of the same stage of the FFT. This is done by the so called column FFT [3, 90], which computes the FFT by means of a column of processing elements composed of butterflies and rotators.. This architecture allows fast computation of the FFT, but requires a large number of hardware components, being the number of butterflies and rotators of order O(N).

Finally, the radix-r butterfly in the PE processes r samples in parallel. Thus, r samples must be read from and written to memory each clock cycle. This requires to divide the memory into r memory banks that are accessed simultaneously. Furthermore, data must be written in memory in the same addresses that are read. This demands a conflict-free access strategy. Such memory organization can be studied from [15, 35, 46, 49, 55, 71, 72, 85, 93, 95].

4.4 Pipelined FFT Architectures

Pipelined FFT architectures [1, 13, 14, 17, 23, 25, 26, 29, 31, 32, 36, 45, 51, 58, 62, 64, 65, 67,68,69, 86, 94, 99, 107,108,109] consist of a set of n =logρ N stages connected in series, where ρ is the base of the radix r = ρ α. In a pipelined FFT, each stage of the architecture computes one stage of the FFT algorithm. The main advantage of pipelined architectures is that they process a continuous flow of data, with a good trade-off between performance and resources.

There are three main types of pipelined FFT architectures: feedback (FB), feedforward (FF) and serial commutator (SC). First, feedback architectures [13, 14, 17, 26, 45, 62, 64, 68, 69, 86, 94, 99, 107, 109] are characterized by their feedback loops, i.e., some outputs of the butterflies are fed back to the memories at the same stage. Feedback architectures are divided into single-path delay feedback (SDF) [17, 26, 45, 86, 109], which process a continuous flow of one sample per clock cycle, and multi-path delay feedback (MDF) [13, 14, 62, 64, 68, 69, 94, 99, 107], which process several samples in parallel. Second, feedforward architectures [1, 9, 10, 17, 25, 29, 31, 36, 45, 51, 58, 70, 86, 108] do not have feedback loops and each stage passes the processed data to the next stage. Single-delay commutator (SDC) FFTs are used for serial data [9, 10, 17, 70] and, multi-path delay commutator (MDC) FFTs [1, 25, 29, 31, 36, 45, 51, 58, 86, 108] are used to process several data in parallel. Finally, SC FFT architectures [32] are characterized by the use of circuits for bit-dimension permutation of serial data.

Pipelined FFT architectures can also be classified into serial pipelined and parallel pipelined FFT architectures. Next sections use this classification, which allows for comparing the hardware resources of FFTs with the same performance.

4.4.1 Serial Pipelined FFT Architectures

Serial pipelined FFT architectures consists of SDF, SDC and SC FFT architectures. These architectures are characterized by their relatively low number of components (adders, rotators and memory) and a throughput of 1 sample per clock cycle, which allows for high data rates of MSamples/s.

An example of SDF FFT architecture is shown in Fig. 14 for N = 64. It consists of n =log2 N stages with radix-2 butterflies (R2), rotators and buffers. This architecture can implement radix-2k FFT algorithms, including radix-2. The difference among FFT algorithms is reflected in the rotations calculated at each stage. Table 2 shows the twiddle factors for typical DIF algorithms. Note that W 4 corresponds to trivial rotations, which leads to simple rotators. Thus, the complexity of radices 22, 23 and 24 is smaller than that of radix-2.

The twiddle factors for DIT algorithms are the same as DIF ones, where the twiddle factor at stage s in the DIT case corresponds to that one in stage n − s in the DIF case.

The internal structure of a SDF stage is shown in Fig. 15. It consists of a buffer, a radix-2 butterfly, two multiplexers, a rotator and eventually its rotation memory.

The buffer at stage s has length

The reason is that L s input data are loaded to the buffer, causing a delay of those data. After L s clock cycles, the output of the buffer is processed in the butterfly together with the input data for L s clock cycles. During these clock cycles, one output of the butterfly is sent to the rotator and the other output of the butterfly is fed back to the buffer. Later, the values that go through the buffer are sent to the rotator, while a new sequence is loaded to the buffer. Therefore, the buffer is reused for inputting and outputting data.

This data management can be related to the data flow of the FFT algorithm: If we consider data arriving in series from top to bottom in the data flow of Fig. 2 then, at each stage, groups of L s data must be delayed L s clock cycles. This makes them arrive to the input of the butterfly at the same time as the data that they have to be operated with. For instance, if sample x[0] at stage s = 1 is delayed L s = 2n−s = 24−1 = 8 clock cycles, then it may be input to the butterfly together with x[8]. After the butterfly calculation, data are ordered in series again by delaying the lowest output of the butterfly L s clock cycles.

In terms of hardware components, the radix-2k SDF FFT requires one butterfly per stage, which results in 2log2 N complex adders, a total memory of N − 1, which is the sum of all the buffer lengths, and a number of rotators that depends on the algorithm itself.

Figure 16 shows a 64-point radix-4 SDF FFT architecture. In this case, the number of stages is logρ N =log464 = 3. The data management is analogous to the radix-2k SDF FFT: Data are delayed so that all 4 data into a butterfly arrive at the same clock cycle.

At each stage, the radix-4 butterfly requires 8 complex adders, leading to a total of 8(log4 N) complex adders for the entire FFT. For the data, radix-4 uses 3 memories of size 4n−s at stage s, which leads to a total FFT memory of N − 1.

The second type of serial FFT architectures is SDC [9, 10, 17, 70]. It is based on separating the data stream in two parallel data streams with the real and imaginary parts of the samples, respectively. An explanation of radix-2k SDC and SDF architectures can be found in [17]. Generally, SDF FFTs are preferred to SDC ones, due to the larger data memory in SDC FFT architectures.

The third type of serial FFT architectures is SC [32]. Figure 17 shows a 16-point DIF serial commutator FFT. It uses circuits for bit-dimension permutation of serial data, which were described in Sect. 3.3.

The SC FFT is based on the idea of placing in consecutive clock cycles pairs of data that must be processed in the butterflies. Figure 18 shows the data management of the SC FFT in Fig. 17. The last column in Fig. 18 shows the frequencies k of X[k]. The rest of the numbers represent the data index I according to the definition in Fig. 2. The order of arrival to each FFT stage is from top to bottom. Therefore, x[0] and x[8] are the first and second inputs to the first stage, respectively. Butterflies operate on consecutive data. This allows for calculating the butterflies in two clock cycles, which halves the hardware complexity with respect to SDF FFTs. Note that the architecture in Fig. 17 uses half-butterflies (1/2 R2) instead of (R2). Rotators are also calculated in two clock cycles and its hardware is halved. The shuffling circuits in Fig. 17 delay three, one, and seven clock cycles, respectively. This can be observed in Fig. 18, where data that are exchanged are separated these numbers of clock cycles at the corresponding stages. Further details are shown in [32].

Table 3 compares serial pipelined N-point FFT architectures. The table shows the trade-off between area and performance. Area is measured in terms of the number of complex rotators, adders and memory addresses, whereas performance is represented by throughput and latency. The throughput is 1 sample per clock cycle for all the architectures, which makes them comparable in terms of hardware resources.

As can be observed in the table, the order of magnitude of all parameters is the same for all architectures. The number of rotators and adders has order \(O(\log N)\) and the memory has order O(N). For large FFTs, the data memory takes up most of the area of the circuit, so it is preferable to use an architecture with a small memory. For small N, most of the FFT area is due to rotators, whose area is always larger than the area of the adders.

4.4.2 Parallel Pipelined FFT Architectures

This section discusses parallel FFT architectures, i.e., MDF and MDC. These architectures are characterized by their high throughputs. They can process P parallel samples in continuous flow and achieve a throughput of Th = P ⋅ f clk, reaching rates of GSamples/s.

MDF FFT architectures [13, 14, 62, 64, 68, 69, 94, 99, 102, 107] consists of multiple SDF paths in parallel. Thus, they work in a similar way as SDF FFT architectures. The only difference is that the parallel SDF paths are interconnected either at some intermediate stages [102] or in the last stages.

MDC FFT architectures [1, 25, 29, 31, 36, 45, 51, 58, 86, 108] forward data to the next stage instead of feeding it back to the buffer of the same stage. Figure 19 shows a 16-point 2-parallel radix-2 DIF MDC FFT architecture. It consists of radix-2 butterflies, rotators and shuffling circuits for serial-parallel permutations. This architecture processes 2 samples per clock cycle in a continuous flow.

Higher throughput is achieved by increasing the parallelization. Figure 20 shows a 16-point 4-parallel radix-22 feedforward architecture. This architecture processes 4 samples per clock cycle in a continuous flow.

Table 4 compares parallel pipelined FFT hardware architectures. The architectures are classified into 4-parallel and 8-parallel ones. The table includes the number of adders and rotators. W 16 and W 8 rotators are separated from general rotators due to its lower complexity. The number of complex adders is related to the architecture type: MDC FFTs have 100% utilization of butterflies and usually require less adders than MDF FFTs. The amount and complexity of the rotators depend on the radix and on the FFT size. Radices-24 and 23 are usually the best options. They achieve the least number of general rotators with some overhead of W 16 and W 8 rotators. New approaches focus on reducing the amount rotators in parallel pipelined FFT architectures even further [31]. Finally, for most parallel pipelined FFT architectures the total data memory size is N − P.

5 Bit Reversal for FFT Architectures

The outputs of FFT hardware architectures are generally provided in bit-reversed order. The bit reversal algorithm [37] is used to sort them out.

5.1 The Bit Reversal Algorithm

The bit reversal of N = 2n indexed data is an algorithm that reorders the data according to a reversing of the bits of the index [37]. This means that any sample with index I ≡ b n−1…b 1 b 0 moves to the place BR(I) ≡ b 0 b 1…b n−1. Note that the bit reversal is an inversion operation, i.e., BR(x) = BR−1(x). Therefore, if data are in natural order, the bit reversal algorithm obtains them in bit-reversed order and vice versa. For instance, the bit reversal of (0, 1, 2, 3, 4, 5, 6, 7) is (0, 4, 2, 6, 1, 5, 3, 7) and the bit reversal of the latter set is the former.

5.2 Bit Reversal for Serial Data

For a hardware circuit that receives a series of N data in bit-reversed order, the bit reversal of the data is calculated by the permutation:

A first option to calculate the bit reversal of a series of data is to use a double buffering strategy [9, 59]. This consists of 2 memories of size N where even and odd FFT output sequences are written alternatively in the memories. The bit reversal can also be calculated using a single memory of size N. This is achieved by generating the memory address in natural and bit-reversed order, alternatively for even an odd sequences [7]. For SDC FFT architectures, the output reordering can be calculated by using two memories of N∕2 addresses [9, 70]. Alternatively, the output reordering circuit can be integrated with the last stage of the FFT architecture [9, 10].

The optimum solution in terms of memory/delays for the bit reversal of serial data [28] consists of using a series of j = ⌊n∕2⌋− 1 circuits for serial-serial bit-dimension permutations. Each of them carries out a permutation of the bits x j and x n−1−j, which requires a buffer of length

By adding the buffer lengths, the total number of delays for even n is

and for odd n it is

5.3 Bit Reversal for Parallel Data

For parallel data, the bit reversal permutation is the same as for serial data, with the difference that the p less significant bits are parallel dimensions. As for serial data, some solutions are based on using memories [50, 108] and other ones are based on using buffers [12].

The optimum solution in terms of memory/delays for the bit reversal of parallel data [12] uses circuits for serial-serial permutations and parallel-parallel permutation. As in the bit reversal of serial data, these circuits are used to interchange bits x j and x n−1−j for j = ⌊n∕2⌋− 1. Assuming that p < n∕2, a serial-serial permutation is carried out when 0 ≤ j < p and a serial-parallel one when p ≤ j ≤⌊n∕2⌋− 1. As a result, the total numbers of delays for even n is

and for odd n it is

6 Conclusions

More than 50 years after the first FFT algorithms were proposed, the design of FFT hardware architectures is still an active research field that involves multiple research topics. They include the study and selection of FFT algorithms, the design of rotators in hardware, the design of new FFT architectures and the data shuffling, including the bit reversal algorithm. Nowadays, new FFT algorithms as well as new representations for these algorithms are explored. The most common FFT algorithms are radix-2k, and there is an increasing interest in non-power-of-two FFTs. The area in FFT architectures is reduced by implementing rotators as shift-and-add operations. Most approaches are based on simplifying a complex multiplier or on the CORDIC algorithm. New FFT hardware architectures that achieve fully utilization of butterflies and reduction of the number of rotators and their complexity have been proposed during the last years. Likewise, the optimum circuits for bit reversal have been proposed recently, and the research on shuffling circuits for the FFT is still an open research field.

References

Ahmed, T., Garrido, M., Gustafsson, O.: A 512-point 8-parallel pipelined feedforward FFT for WPAN. In: Proc. Asilomar Conf. Signals Syst. Comput., pp. 981–984 (2011)

Andraka, R.: A survey of CORDIC algorithms for FPGA based computers. In: Proc. ACM/SIGDA Int. Symp. Field-Programmable Gate Arrays, pp. 191–200. ACM (1998)

Argüello, F., Bruguera, J., Doallo, R., Zapata, E.: Parallel architecture for fast transforms with trigonometric kernel. IEEE Trans. Parallel Distrib. Syst. 5(10), 1091–1099 (1994)

Bi, G., Jones, E.: A pipelined FFT processor for word-sequential data. IEEE Trans. Acoust., Speech, Signal Process. 37(12), 1982–1985 (1989)

Boullis, N., Tisserand, A.: Some optimizations of hardware multiplication by constant matrices. IEEE Trans. Comput. 54(10), 1271–1282 (2005)

Burrus, C., Eschenbacher, P.: An in-place, in-order prime factor FFT algorithm. Proc. IEEE Int. Symp. Circuits Syst. 29(4), 806–817 (1981)

Chakraborty, T.S., Chakrabarti, S.: On output reorder buffer design of bit reversed pipelined continuous data FFT architecture. In: Proc. IEEE Asia-Pacific Conf. Circuits Syst., pp. 1132–1135. IEEE (2008)

Chan, S.C., Yiu, P.M.: An efficient multiplierless approximation of the fast Fourier transform using sum-of-powers-of-two (SOPOT) coefficients. IEEE Signal Process. Lett. 9(10), 322–325 (2002)

Chang, Y.N.: An efficient VLSI architecture for normal I/O order pipeline FFT design. IEEE Trans. Circuits Syst. II 55(12), 1234–1238 (2008)

Chang, Y.N.: Design of an 8192-point sequential I/O FFT chip. In: Proc. World Congress Eng. Comp. Science, vol. II (2012)

Chen, J., Hu, J., Lee, S., Sobelman, G.E.: Hardware efficient mixed radix-25/16/9 FFT for LTE systems. IEEE Trans. VLSI Syst. 23(2), 221–229 (2015)

Cheng, C., Yu, F.: An optimum architecture for continuous-flow parallel bit reversal. IEEE Signal Process. Lett. 22(12), 2334–2338 (2015)

Cho, S.I., Kang, K.M.: A low-complexity 128-point mixed-radix FFT processor for MB-OFDM UWB systems. ETRI J. 32(1), 1–10 (2010)

Cho, S.I., Kang, K.M., Choi, S.S.: Implementation of 128-point fast Fourier transform processor for UWB systems. In: Proc. Int. Wireless Comm. Mobile Comp. Conf., pp. 210–213 (2008)

Cohen, D.: Simplified control of FFT hardware. IEEE Trans. Acoust., Speech, Signal Process. 24(6), 577–579 (1976)

Cooley, J.W., Tukey, J.W.: An algorithm for the machine calculation of complex Fourier series. Math. Comput. 19, 297–301 (1965)

Cortés, A., Vélez, I., Sevillano, J.F.: Radix r k FFTs: Matricial representation and SDC/SDF pipeline implementation. IEEE Trans. Signal Process. 57(7), 2824–2839 (2009)

Dempster, A.G., Macleod, M.D.: Multiplication by two integers using the minimum number of adders. In: Proc. IEEE Int. Symp. Circuits Syst., vol. 2, pp. 1814–1817 (2005)

Despain, A.M.: Fourier transform computers using CORDIC iterations. IEEE Trans. Comput. C-23, 993–1001 (1974)

Duhamel, P., Hollmann, H.: ’Split radix’ FFT algorithm. Electron. Lett. 20(1), 14–16 (1984)

Edelman, A., Heller, S., Johnsson, L.: Index transformation algorithms in a linear algebra framework. IEEE Trans. Parallel Distrib. Syst. 5(12), 1302–1309 (1994)

Fraser, D.: Array permutation by index-digit permutation. J. Assoc. Comp. Machinery (ACM) 23(2), 298–309 (1976)

Garrido, M.: Efficient hardware architectures for the computation of the FFT and other related signal processing algorithms in real time. Ph.D. thesis, Universidad Politécnica de Madrid (2009)

Garrido, M.: A new representation of FFT algorithms using triangular matrices. IEEE Trans. Circuits Syst. I 63(10), 1737–1745 (2016)

Garrido, M., Acevedo, M., Ehliar, A., Gustafsson, O.: Challenging the limits of FFT performance on FPGAs. In: Int. Symp. Integrated Circuits, pp. 172–175 (2014)

Garrido, M., Andersson, R., Qureshi, F., Gustafsson, O.: Multiplierless unity-gain SDF FFTs. IEEE Trans. VLSI Syst. 24(9), 3003–3007 (2016)

Garrido, M., Grajal, J.: Efficient memoryless CORDIC for FFT computation. In: Proc. IEEE Int. Conf. Acoust. Speech Signal Process., vol. 2, pp. 113–116 (2007)

Garrido, M., Grajal, J., Gustafsson, O.: Optimum circuits for bit reversal. IEEE Trans. Circuits Syst. II 58(10), 657–661 (2011)

Garrido, M., Grajal, J., Sánchez, M.A., Gustafsson, O.: Pipelined radix-2k feedforward FFT architectures. IEEE Trans. VLSI Syst. 21(1), 23–32 (2013)

Garrido, M., Gustafsson, O., Grajal, J.: Accurate rotations based on coefficient scaling. IEEE Trans. Circuits Syst. II 58(10), 662–666 (2011)

Garrido, M., Huang, S.J., Chen, S.G.: Feedforward FFT hardware architectures based on rotator allocation. IEEE Trans. Circuits Syst. I 65(2), 581–592 (2018)

Garrido, M., Huang, S.J., Chen, S.G., Gustafsson, O.: The serial commutator (SC) FFT. IEEE Trans. Circuits Syst. II 63(10), 974–978 (2016)

Garrido, M., Källström, P., Kumm, M., Gustafsson, O.: CORDIC II: A new improved CORDIC algorithm. IEEE Trans. Circuits Syst. II 63(2), 186–190 (2016)

Garrido, M., Qureshi, F., Gustafsson, O.: Low-complexity multiplierless constant rotators based on combined coefficient selection and shift-and-add implementation (CCSSI). IEEE Trans. Circuits Syst. I 61(7), 2002–2012 (2014)

Garrido, M., Sánchez, M., López-Vallejo, M., Grajal, J.: A 4096-point radix-4 memory-based FFT using DSP slices. IEEE Trans. VLSI Syst. 25(1), 375–379 (2017)

Glittas, A.X., Sellathurai, M., Lakshminarayanan, G.: A normal I/O order radix-2 FFT architecture to process twin data streams for MIMO. IEEE Trans. VLSI Syst. 24(6), 2402–2406 (2016)

Gold, B., Rader, C.M.: Digital Processing of Signals. New York: McGraw Hill (1969)

Good, I.J.: The interaction algorithm and practical Fourier analysis. J. Royal Statistical Society B 20(2), 361–372 (1958)

Granata, J., Conner, M., Tolimieri, R.: Recursive fast algorithm and the role of the tensor product. IEEE Trans. Signal Process. 40(12), 2921–2930 (1992)

Gustafsson, O.: A difference based adder graph heuristic for multiple constant multiplication problems. In: Proc. IEEE Int. Symp. Circuits Syst., pp. 1097–1100. IEEE (2007)

Gustafsson, O.: On lifting-based fixed-point complex multiplications and rotations. In: Proc. IEEE Symp. Comput. Arithmetic (2017)

Gustafsson, O., Dempster, A.G., Johansson, K., Macleod, M.D., Wanhammar, L.: Simplified design of constant coefficient multipliers. Circuits Syst. Signal Process. 25(2), 225–251 (2006)

Gustafsson, O., Qureshi, F.: Addition aware quantization for low complexity and high precision constant multiplication. IEEE Signal Processing Letters 17(2), 173–176 (2010)

Gustafsson, O., Wanhammar, L.: Arithmetic. In: S.S. Bhattacharyya, E.F. Deprettere, R. Leupers, J. Takala (eds.) Handbook of Signal Processing Systems, third edn. Springer (2018)

He, S., Torkelson, M.: Design and implementation of a 1024-point pipeline FFT processor. pp. 131–134 (1998)

Hsiao, C.F., Chen, Y., Lee, C.Y.: A generalized mixed-radix algorithm for memory-based FFT processors. IEEE Trans. Circuits Syst. II 57(1), 26–30 (2010)

Hsiao, S.F., Lee, C.H., Cheng, Y.C., Lee, A.: Designs of angle-rotation in digital frequency synthesizer/mixer using multi-stage architectures. In: Proc. Asilomar Conf. Signals Syst. Comput., pp. 2181–2185 (2011)

Hu, Y., Naganathan, S.: An angle recoding method for CORDIC algorithm implementation. IEEE Trans. Comput. 42(1), 99–102 (1993)

Huang, S.J., Chen, S.G.: A high-throughput radix-16 FFT processor with parallel and normal input/output ordering for IEEE 802.15.3c systems. IEEE Trans. Circuits Syst. I 59(8), 1752–1765 (2012)

Huang, S.J., Chen, S.G., Garrido, M., Jou, S.J.: Continuous-flow parallel bit-reversal circuit for MDF and MDC FFT architectures. IEEE Trans. Circuits Syst. I 61(10), 2869–2877 (2014)

Jang, J.K., Kim, M.G., Sunwoo, M.H.: Efficient scheduling scheme for eight-parallel MDC FFT processor. In: Proc. Int. SoC Design Conf., pp. 277–278 (2015)

Järvinen, T.: Systematic methods for designing stride permutation interconnections. Ph.D. thesis, Tampere Univ. of Technology (2004)

Järvinen, T., Salmela, P., Sorokin, H., Takala, J.: Stride permutation networks for array processors. In: Proc. IEEE Int. Applicat.-Specific Syst. Arch. Processors Conf., pp. 376–386 (2004)

Järvinen, T., Salmela, P., Sorokin, H., Takala, J.: Stride permutation networks for array processors. J. VLSI Signal Process. Syst. 49(1), 51–71 (2007)

Jo, B.G., Sunwoo, M.H.: New continuous-flow mixed-radix (CFMR) FFT processor using novel in-place strategy. IEEE Trans. Circuits Syst. I 52(5), 911–919 (2005)

Johnston, J.A.: Parallel pipeline fast Fourier transformer. In: IEE Proc. F Comm. Radar Signal Process., vol. 130, pp. 564–572 (1983)

Källström, P., Garrido, M., Gustafsson, O.: Low-complexity rotators for the FFT using base-3 signed stages. In: Proc. IEEE Asia-Pacific Conf. Circuits Syst., pp. 519–522 (2012)

Kim, M.G., Shin, S.K., Sunwoo, M.H.: New parallel MDC FFT processor with efficient scheduling scheme. In: Proc. IEEE Asia-Pacific Conf. Circuits Syst., pp. 667–670 (2014)

Kristensen, F., Nilsson, P., Olsson, A.: Flexible baseband transmitter for OFDM. In: Proc. IASTED Conf. Circuits Signals Syst., pp. 356–361 (2003)

Kumm, M., Hardieck, M., Zipf, P.: Optimization of constant matrix multiplication with low power and high throughput. IEEE Transactions on Computers PP(99), 1–1 (2017)

Lee, H.Y., Park, I.C.: Balanced binary-tree decomposition for area-efficient pipelined FFT processing. IEEE Trans. Circuits Syst. I 54(4), 889–900 (2007)

Lee, J., Lee, H., in Cho, S., Choi, S.S.: A high-speed, low-complexity radix-24 FFT processor for MB-OFDM UWB systems. In: Proc. IEEE Int. Symp. Circuits Syst., pp. 210–213 (2006)

Li, C.C., Chen, S.G.: A radix-4 redundant CORDIC algorithm with fast on-line variable scale factor compensation. In: Proc. IEEE Int. Conf. Acoust. Speech Signal Process., vol. 1, pp. 639–642 (1997)

Li, N., van der Meijs, N.: A radix 22 based parallel pipeline FFT processor for MB-OFDM UWB system. In: Proc. IEEE Int. SOC Conf., pp. 383–386 (2009)

Li, S., Xu, H., Fan, W., Chen, Y., Zeng, X.: A 128/256-point pipeline FFT/IFFT processor for MIMO OFDM system IEEE 802.16e. In: Proc. IEEE Int. Symp. Circuits Syst., pp. 1488–1491 (2010)

Lin, C.H., Wu, A.Y.: Mixed-scaling-rotation CORDIC (MSR-CORDIC) algorithm and architecture for high-performance vector rotational DSP applications. IEEE Trans. Circuits Syst. I 52(11), 2385–2396 (2005)

Lin, Y.W., Lee, C.Y.: Design of an FFT/IFFT processor for MIMO OFDM systems. IEEE Trans. Circuits Syst. I 54(4), 807–815 (2007)

Liu, H., Lee, H.: A high performance four-parallel 128/64-point radix-24 FFT/IFFT processor for MIMO-OFDM systems. In: Proc. IEEE Asia Pacific Conf. Circuits Syst., pp. 834–837 (2008)

Liu, L., Ren, J., Wang, X., Ye, F.: Design of low-power, 1GS/s throughput FFT processor for MIMO-OFDM UWB communication system. In: Proc. IEEE Int. Symp. Circuits Syst., pp. 2594–2597 (2007)

Liu, X., Yu, F., Wang, Z.: A pipelined architecture for normal I/O order FFT. Journal of Zhejiang University - Science C 12(1), 76–82 (2011)

Ma, Y., Wanhammar, L.: A hardware efficient control of memory addressing for high-performance FFT processors. IEEE Trans. Signal Process. 48(3), 917–921 (2000)

Ma, Z.G., Yin, X.B., Yu, F.: A novel memory-based FFT architecture for real-valued signals based on a radix-2 decimation-in-frequency algorithm. IEEE Trans. Circuits Syst. II 62(9), 876–880 (2015)

Macleod, M.D.: Multiplierless implementation of rotators and FFTs. EURASIP J. Appl. Signal Process. 2005(17), 2903–2910 (2005)

Majumdar, M., Parhi, K.K.: Design of data format converters using two-dimensional register allocation. IEEE Trans. Circuits Syst. II 45(4), 504–508 (1998)

Meher, P.K., Park, S.Y.: CORDIC designs for fixed angle of rotation. IEEE Trans. VLSI Syst. 21(2), 217–228 (2013)

Meher, P.K., Valls, J., Juang, T.B., Sridharan, K., Maharatna, K.: 50 years of CORDIC: Algorithms, architectures, and applications. IEEE Trans. Circuits Syst. I 56(9), 1893–1907 (2009)

Möller, K., Kumm, M., Garrido, M., Zipf, P.: Optimal shift reassignment in reconfigurable constant multiplication circuits. IEEE Trans. Comput.-Aided Design Integr. Circuits Syst. (2017). Accepted for publication

Oh, J.Y., Lim, M.S.: New radix-2 to the 4th power pipeline FFT processor. IEICE Trans. Electron. E88-C(8), 1740–1746 (2005)

Paeth, A.W.: A fast algorithm for general raster rotation. In: Proc. Graphics Interface, pp. 77–81 (1986)

Parhi, K.K.: Systematic synthesis of DSP data format converters using life-time analysis and forward-backward register allocation. IEEE Trans. Circuits Syst. II 39(7), 423–440 (1992)

Park, S.Y., Yu, Y.J.: Fixed-point analysis and parameter selections of MSR-CORDIC with applications to FFT designs. IEEE Trans. Signal Process. 60(12), 6245–6256 (2012)

Püschel, M., Milder, P.A., Hoe, J.C.: Permuting streaming data using RAMs. J. ACM 56(2), 10:1–10:34 (2009)

Qureshi, F., Gustafsson, O.: Generation of all radix-2 fast Fourier transform algorithms using binary trees. In: Proc. Europ. Conf. Circuit Theory Design, pp. 677–680 (2011)

Qureshi, F., Gustafsson, O.: Low-complexity constant multiplication based on trigonometric identities with applications to FFTs. IEICE Trans. Fundamentals E94-A(11), 324–326 (2011)

Reisis, D., Vlassopoulos, N.: Conflict-free parallel memory accessing techniques for FFT architectures. IEEE Trans. Circuits Syst. I 55(11), 3438–3447 (2008)

Sánchez, M., Garrido, M., López, M., Grajal, J.: Implementing FFT-based digital channelized receivers on FPGA platforms. IEEE Trans. Aerosp. Electron. Syst. 44(4), 1567–1585 (2008)

Serre, F., Holenstein, T., Püschel, M.: Optimal circuits for streamed linear permutations using RAM. In: Proc. ACM/SIGDA Int. Symp. Field-Programmable Gate Arrays, pp. 215–223. ACM (2016)

Shih, X.Y., Liu, Y.Q., Chou, H.R.: 48-mode reconfigurable design of SDF FFT hardware architecture using radix-32 and radix-23 design approaches. IEEE Trans. Circuits Syst. I 64(6), 1456–1467 (2017)

Shukla, R., Ray, K.: Low latency hybrid CORDIC algorithm. IEEE Trans. Comput. 63(12), 3066–3078 (2014)

Stone, H.: Parallel processing with the perfect shuffle. IEEE Trans. Comput. C-20(2), 153–161 (1971)

Takagi, N., Asada, T., Yajima, S.: Redundant CORDIC methods with a constant scale factor for sine and cosine computation. IEEE Trans. Comput. 40(9), 989–995 (1991)

Takala, J., Järvinen, T.: Stride Permutation Access In Interleaved Memory Systems. Domain-Specific Processors: Systems, Architectures, Modeling, and Simulation, S. Bhattacharyya, E. Deprettere and J. Teich. CRC Press (2003)

Takala, J., Jarvinen, T., Sorokin, H.: Conflict-free parallel memory access scheme for FFT processors. In: Proc. IEEE Int. Symp. Circuits Syst., vol. 4, pp. 524–527 (2003)

Tang, S.N., Tsai, J.W., Chang, T.Y.: A 2.4-GS/s FFT processor for OFDM-based WPAN applications. IEEE Trans. Circuits Syst. II 57(6), 451–455 (2010)

Tsai, P.Y., Lin, C.Y.: A generalized conflict-free memory addressing scheme for continuous-flow parallel-processing FFT processors with rescheduling. IEEE Trans. VLSI Syst. 19(12), 2290–2302 (2011)

Tummeltshammer, P., Hoe, J.C., Püschel, M.: Time-multiplexed multiple-constant multiplication. IEEE Trans. Comput.-Aided Design Integr. Circuits Syst. 26(9), 1551–1563 (2007)

Volder, J.E.: The CORDIC trigonometric computing technique. IRE Trans. Electronic Computing EC-8, 330–334 (1959)

Voronenko, Y., Püschel, M.: Multiplierless multiple constant multiplication. ACM Trans. Algorithms 3, 1–39 (2007)

Wang, J., Xiong, C., Zhang, K., Wei, J.: A mixed-decimation MDF architecture for radix- 2k parallel FFT. IEEE Trans. VLSI Syst. 24(1), 67–78 (2016)

Wang, Z., Liu, X., He, B., Yu, F.: A combined SDC-SDF architecture for normal I/O pipelined radix-2 FFT. IEEE Trans. VLSI Syst. 23(5), 973–977 (2015)

Wenzler, A., Luder, E.: New structures for complex multipliers and their noise analysis. In: Proc. IEEE Int. Symp. Circuits Syst., vol. 2, pp. 1432–1435 (1995)

Wold, E., Despain, A.: Pipeline and parallel-pipeline FFT processors for VLSI implementations. IEEE Trans. Comput. C-33(5), 414–426 (1984)

Wu, C.S., Wu, A.Y.: Modified vector rotational CORDIC (MVR-CORDIC) algorithm and architecture. IEEE Trans. Circuits Syst. II 48(6), 548–561 (2001)

Wu, C.S., Wu, A.Y., Lin, C.H.: A high-performance/low-latency vector rotational CORDIC architecture based on extended elementary angle set and trellis-based searching schemes. IEEE Trans. Circuits Syst. II 50(9), 589–601 (2003)

Xia, K.F., Wu, B., Xiong, T., Ye, T.C.: A memory-based FFT processor design with generalized efficient conflict-free address schemes. IEEE Trans. VLSI Syst. 25(6), 1919–1929 (2017)

Xing, Q., Ma, Z., Xu, Y.: A novel conflict-free parallel memory access scheme for FFT processors. IEEE Trans. Circuits Syst. II (2017)

Xudong, W., Yu, L.: Special-purpose computer for 64-point FFT based on FPGA. In: Proc. Int. Conf. Wireless Comm. Signal Process., pp. 1–3 (2009)

Yang, K.J., Tsai, S.H., Chuang, G.: MDC FFT/IFFT processor with variable length for MIMO-OFDM systems. IEEE Trans. VLSI Syst. 21(4), 720–731 (2013)

Yang, L., Zhang, K., Liu, H., Huang, J., Huang, S.: An efficient locally pipelined FFT processor. IEEE Trans. Circuits Syst. II 53(7), 585–589 (2006)

Yeh, W.C., Jen, C.W.: High-speed and low-power split-radix FFT. IEEE Trans. Signal Process. 51(3), 864–874 (2003)

Yu, C., Yen, M.H.: Area-efficient 128- to 2048∕1536-point pipeline FFT processor for LTE and mobile WiMAX systems. IEEE Trans. VLSI Syst. 23(9), 1793–1800 (2015)

Zheng, W., Li, K.: Split radix algorithm for length 6m DFT. IEEE Signal Process. Lett. 20(7), 713–716 (2013)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer International Publishing AG, part of Springer Nature

About this chapter

Cite this chapter

Garrido, M., Qureshi, F., Takala, J., Gustafsson, O. (2019). Hardware architectures for the fast Fourier transform. In: Bhattacharyya, S., Deprettere, E., Leupers, R., Takala, J. (eds) Handbook of Signal Processing Systems. Springer, Cham. https://doi.org/10.1007/978-3-319-91734-4_17

Download citation

DOI: https://doi.org/10.1007/978-3-319-91734-4_17

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-91733-7

Online ISBN: 978-3-319-91734-4

eBook Packages: EngineeringEngineering (R0)