Abstract

This paper presents the use of omnidirectional cameras on underwater robots for the rapid, high-resolution mapping of shipwrecks in marine archaeology applications. In collaboration with the University of Zadar, the methodology was recently demonstrated on the Gnalić shipwreck during the Breaking the Surface 2016 workshop held in Biograd na Moru (Croatia). The robot was programmed to survey the shipwreck, and the collected data were used to build 360\(^\circ \) panoramic videos, topological panoramic maps and 3D optical reconstructions. The paper describes a recently built multi-camera system comprising five compact, high-resolution video cameras. It outlines the methodology used, reports the results obtained, and discusses the challenges that marine archaeology poses to underwater robotics, as well as the contribution that this technology may bring to the archaeology community.

1 Introduction

Data collection in marine archaeology has traditionally been performed by scuba divers, and has therefore been severely restricted in the depth reached and in the duration of the dives. Underwater robots can relax these constraints on depth and dive duration, improve localization and carry heavier equipment. Underwater robots are typically classified into two groups: Remotely Operated Vehicles (ROVs) and Autonomous Underwater Vehicles (AUVs). ROVs are tethered vehicles piloted from the surface by an operator who watches a live video feed from cameras mounted on the vehicle. AUVs, in contrast, perform missions autonomously without requiring input from an operator. Among all the sensors that underwater robots can carry, optical and acoustic cameras are especially relevant to archaeologists, as they allow accurate maps of wrecks and other areas of special interest to be created.

Over the last few years, omnidirectional cameras have become very popular on land and in the air, among both the scientific community and the general public. Many different cameras and applications are available on the market for both research and entertainment purposes. For the scientific community, omnidirectional cameras have high potential in tasks such as mapping, augmented reality, visual surveillance, motion estimation and simultaneous localization and mapping (SLAM) [11]. However, due to the unfavourable properties of the medium, their use in underwater environments is still very limited. Nevertheless, omnidirectional cameras are expected to have a high impact in fields as diverse as marine science, the oil and gas industry and underwater archaeology.

The main motivation behind the use of omnidirectional cameras is the ability to easily produce panoramic images and videos of a scene. Viewers experience a unique feeling of immersion when panoramic media are displayed in a spherical viewer or in a Virtual Reality (VR) headset. Panoramic content can help ROV pilots mitigate spatial awareness problems caused by the lack of landmarks and the monotony of underwater scenes [8, 14]. In the same way, it could help archaeologists during live inspection missions or when replaying recorded missions. Furthermore, panoramic content is a powerful tool for science dissemination, as it attracts the attention of the public, especially younger generations.

Multi-camera systems also have other advantages that should not be underestimated, such as their high potential for creating 3D maps. Compared to a monocular or stereo camera, a multi-camera system records significantly more information from different points of view, which makes it very valuable for 3D reconstruction [5, 6].

The contribution of this paper is two-fold. First, we present an omnidirectional camera developed at the Underwater Robotics Center of the University of Girona specifically for mapping purposes, which can be used by divers or integrated into robotic platforms. Second, we demonstrate the utility of combining underwater robots with omnidirectional cameras by presenting the results of a survey of a 16th century shipwreck [13] located near Biograd na Moru, Croatia.

2 Omnidirectional Camera

The omnidirectional camera was designed and built during 2016 with the aim of generating high-resolution, high-quality imagery with a set-up that could be used by both divers and robots. The camera is a Multi-Camera System (MCS) capable of recording 27-megapixel omnidirectional video at 30 fps at depths of up to 200 m. The system (Fig. 1) has a cube-shaped structure formed by up to six rigidly attached, independent camera modules. Each module houses a GoPro Hero 4 Black camera inside a Delrin® enclosure with an acrylic spherical dome port.

When designing waterproof housings, two types of ports are widely used: flat ports and dome ports. Flat ports allow smaller designs, are easier to manufacture and assemble, and are generally less expensive. However, they have two main drawbacks. Firstly, when used underwater, they significantly reduce the Field of View (FOV) due to light refraction at the port. Secondly, flat ports tend to introduce distortion and chromatic aberration in the images, which are most prominent in the image corners when wide-angle lenses are used.
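The FOV loss behind a flat port can be illustrated with Snell's law. The sketch below is a simplified model, assuming an ideal plane-parallel port (whose material and thickness drop out of the final ray angle), a refractive index of 1.333 for water and a hypothetical in-air FOV; lens distortion is ignored, and the function name is illustrative.

```python
import math

N_AIR = 1.0
N_WATER = 1.333  # assumed refractive index of water; seawater is slightly higher


def flat_port_fov_deg(fov_air_deg: float,
                      n_air: float = N_AIR,
                      n_water: float = N_WATER) -> float:
    """Full underwater FOV of a camera behind an ideal flat port.

    For a plane-parallel port, Snell's law at both faces gives
    n_air * sin(theta_air) = n_water * sin(theta_water), so the port
    material and thickness drop out of the final ray angle.
    """
    half_air = math.radians(fov_air_deg) / 2.0
    half_water = math.asin(n_air * math.sin(half_air) / n_water)
    return 2.0 * math.degrees(half_water)


# Hypothetical 120 degree in-air FOV (not a measured GoPro value).
print(f"{flat_port_fov_deg(120.0):.1f} deg underwater")
```

Under these assumptions a 120° in-air FOV shrinks to roughly 81° underwater, and even a 180° lens would stay below 100°, which illustrates why a dome port is attractive when the aim is to cover the sphere with few cameras.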

Compared to flat ports, dome port designs tend to be bulkier and more complex to manufacture. Nevertheless, they have the crucial advantage of preserving the FOV in water and of keeping the image free of refraction-induced distortion when the non-parallax point of the camera is accurately centered on the dome. On the downside, dome ports alter the camera focusing range, especially for small-radius domes. To account for this phenomenon, an important aspect to take into consideration is the notion of the virtual image. In underwater optics, a virtual image is the image formed by the imaginary intersection of the backward extensions of the refracted light rays. A dome port forms a virtual image much closer than the real object, and the camera has to be able to focus on this virtual image to obtain a clear and sharp image. The distance s between the camera and the virtual image of an object placed at infinity depends on the radius of the dome port and is given by:

where r is the internal radius of the dome, \(\delta _r\) is the thickness of the port, and \(n_a\), \(n_p\), \(n_w\) are the refractive indices of air, the dome material and water, respectively. These quantities are schematically represented in Fig. 2. Eq. 1 is commonly approximated as \(s=4r\) [7]. The smaller the radius, the closer the virtual image. Thus, the size of the dome port must be chosen by taking into account the minimum focus distance of the cameras to ensure sharp, focused images.
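As a sketch, s can be computed with a paraxial model of a concentric spherical shell, tracing an axial ray from infinity through the outer (water to dome) and inner (dome to air) surfaces with the symbols defined above. This model is our assumption and not necessarily the exact form of Eq. 1; it also assumes the camera's non-parallax point sits at the dome centre, an acrylic index of 1.49 and a water index of 1.333. In the thin-dome limit (\(\delta_r \to 0\)) it reduces to \(s = n_w r/(n_w - n_a)\), which is about 4r for \(n_w \approx 4/3\), in line with the approximation cited from [7].

```python
import math


def refract_paraxial(n1: float, n2: float, radius: float, u: float) -> float:
    """Paraxial refraction at one spherical surface (Cartesian sign convention).

    Solves n2/v - n1/u = (n2 - n1)/radius for the image distance v. Distances are
    measured from the surface vertex along the direction of light travel, and
    radius > 0 when the centre of curvature lies on the outgoing side.
    """
    lhs = (n2 - n1) / radius
    if math.isfinite(u):  # u = -inf means an object at infinity
        lhs += n1 / u
    return n2 / lhs


def dome_virtual_image_distance(r: float, delta_r: float,
                                n_a: float = 1.0, n_p: float = 1.49,
                                n_w: float = 1.333) -> float:
    """Distance s from the dome centre to the virtual image of an object at infinity.

    r is the internal radius and delta_r the dome thickness (same units).
    Assumes a concentric dome with the camera's non-parallax point at its centre.
    """
    # Outer surface: water -> dome material, radius r + delta_r.
    v1 = refract_paraxial(n_w, n_p, r + delta_r, -math.inf)
    # Inner surface: dome material -> air; it lies delta_r further along the ray.
    v2 = refract_paraxial(n_p, n_a, r, v1 - delta_r)
    # The inner vertex is r from the centre; v2 < 0 puts the image on the water side.
    return r - v2


# Hypothetical 50 mm internal radius, 5 mm thick acrylic dome.
print(dome_virtual_image_distance(0.05, 0.005))
```

For this hypothetical dome the model gives s of roughly 0.19 m (about 3.9r), slightly less than the thin-dome value of about 4r; comparing s with the camera's minimum focus distance is then a direct way to check whether a candidate radius is large enough.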

When using a dome port, a ray of light travelling along the optical axis does not change direction when passing through the port. However, a ray coming from infinity with an offset with respect to the optical axis changes direction twice: when passing from water into the dome material and from the dome material into air. When viewed from the air side of the dome, the ray appears to come from point F, and so the camera has to focus on a virtual image placed at F.

Since our objective was to cover the maximum FOV with the minimum number of cameras, a dome port design was favored. In the interest of compactness, we chose the smallest dome that still allows a focused image given the minimum focus distance (MFD) of the camera.

The modular design of the system allows the number of cameras to be adapted to the needs of the mission. Using all six cameras covers the full sphere. Using five of them covers 360\(^\circ \) horizontally and 135\(^\circ \) vertically, which can be useful when the top view is not relevant, for instance when the camera is deployed directly under an AUV or ROV. When only four cameras are used, the full horizontal FOV is still covered (a full hemispherical view). In the full 6-camera configuration, the system outputs a spherical equirectangular video at 8K resolution.

Each module has an external cable that supplies power to extend the GoPro's battery autonomy. The cable is also used to switch on the cameras and start the programmed acquisition mode, either through an electric signal or through an underwater switch operated by divers. The image data are recorded on each camera's internal SD card and can be recovered at the surface through a 5 Mb/s WiFi link. If needed, faster downloads can be achieved by disassembling the camera housings and accessing the memory cards directly, since the external cable is not designed to transmit data in the current configuration. The system has an internal battery autonomy of nearly one hour when used by divers, and can record video for approximately 2.5 h (depending on the size of the internal memory card) when carried by a robot and powered externally.
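A quick calculation shows why direct access to the memory cards can be much faster than the WiFi link. The sketch assumes that "5 Mb/s" means megabits per second, uses a hypothetical recording bitrate of 60 Mb/s per camera, considers one camera at a time and ignores protocol overhead.

```python
def wifi_download_hours(recording_hours: float,
                        video_bitrate_mbps: float,
                        link_mbps: float = 5.0) -> float:
    """Hours needed to copy one camera's footage over the WiFi link.

    Both rates are in megabits per second; protocol overhead is ignored.
    """
    footage_megabits = recording_hours * 3600.0 * video_bitrate_mbps
    return footage_megabits / link_mbps / 3600.0


# Assumed 60 Mb/s recording bitrate for one camera.
print(wifi_download_hours(1.0, 60.0))  # 12.0
```

Under these assumptions, one hour of footage from a single camera would take about 12 h to transfer over WiFi, since the download time scales with the ratio between the recording bitrate and the link rate.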

The calibration of a multi-camera system typically estimates two sets of parameters: intrinsic parameters, which describe the image formation geometry of each individual camera, and extrinsic parameters, which describe the relative positions and orientations between cameras. The intrinsic parameters were calibrated separately for each camera, in air and without the waterproof housing. Unlike underwater imaging systems with other port geometries, the intrinsic parameters can be calibrated in air when the camera is concentrically aligned with a spherical dome, because the rays reaching the camera do not significantly change direction when passing through the housing, whether in air or in water.

The calibration of the extrinsic parameters is an important challenge for omnidirectional multi-camera systems, due to the usually small overlap between neighboring cameras. The calibration of the extrinsic parameters was also conducted in air, and followed very closely the procedure described in [2].

3 Survey Setup

The imaging surveys for the results presented in this paper were carried out in October 2016 in Biograd na Moru, Croatia, during underwater robot demonstrations held as part of the Breaking the Surface 2016 workshop.

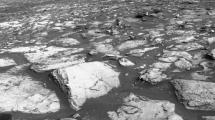

The mission goal was to create a map of a shipwreck located very close to the island of Gnalić. The wreck lies at a depth of about 25 m and is thought to date from the late 16th century [3, 13]. It was discovered in the 1960s, and a large collection of artifacts has been recovered from the site since then, including anchors, guns, glass vessels, mirror glasses, window panes and brass chandeliers, among others [12]. Given the importance of the findings, a museum was established in Biograd in 1970 to preserve and exhibit the retrieved materials.

The equipment used during the experiments consisted of the Girona 500 AUV [10] and the omnidirectional camera presented in the previous section. Both were designed and built in the Underwater Robotics Center (CIRS) of the University of Girona.

The Girona500 AUV (Fig. 3) is a reconfigurable, hovering-capable vehicle rated for 500 m depth, with 5 thrusters actuating in 4 DOFs: surge, sway, heave and yaw. It is stable in pitch and roll. The navigation sensor suite includes GPS, a pressure sensor, a Doppler Velocity Log (DVL) and an attitude and heading reference system (AHRS), which together allow precise navigation. For long underwater missions, the vehicle can also be equipped with an ultra-short baseline (USBL) device to avoid navigation drift. The navigation is based on an extended Kalman filter (EKF) that fuses the sensor measurements to obtain a robust estimate of the AUV position and velocity [9]. The vehicle has a large payload area (up to 35 l) for carrying additional equipment. For this experiment, this area was used for the omnidirectional camera along with other sensing equipment.

The Girona500 AUV was deployed at the wreck site from a motorized marine platform equipped with a crane. The survey mission was planned to cover the area of the shipwreck estimated beforehand by archaeologists. The mission plan was loaded onto the Girona500 at the surface and executed autonomously over approximately one hour (Fig. 4). The vehicle was instructed to perform terrain following, keeping a constant altitude of 3 m above the seabed. Once the mission was finished, the vehicle was recovered and the data were processed offline.

4 Data Processing

As explained in Sect. 2, each camera outputs a separate video file. The objective is to merge all the independent video files into a single panoramic video. The process starts by retrieving and synchronizing the individual videos. Although the cameras are started simultaneously by hardware, perfect synchronization is not guaranteed due to small differences in their boot times. The satisfactory synchronization needed for good final results can be achieved manually with the aid of acoustic and visual landmarks, such as a short beep, a flashing light or a digital watch with a high refresh rate.
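The authors synchronized the videos manually. A common alternative for acoustic landmarks such as a beep is to cross-correlate the audio tracks of two cameras and take the lag of the correlation peak as the time offset. The sketch below illustrates that technique, not the authors' procedure; it assumes mono audio tracks already extracted as NumPy arrays at the same sample rate, and the function name and synthetic signals are hypothetical.

```python
import numpy as np


def estimate_offset_seconds(ref_audio, other_audio, sample_rate):
    """Estimate how many seconds `other_audio` lags `ref_audio`.

    A positive result means the shared event (e.g. a beep) appears later
    in `other_audio` than in `ref_audio`.
    """
    ref = ref_audio - np.mean(ref_audio)
    other = other_audio - np.mean(other_audio)
    corr = np.correlate(other, ref, mode="full")
    # In "full" mode, index len(ref) - 1 corresponds to zero lag.
    lag = int(np.argmax(corr)) - (len(ref) - 1)
    return lag / sample_rate


# Synthetic example: the same short pulse, 0.3 s later in the second track.
sr = 1000
ref = np.zeros(5000)
ref[1000:1020] = 1.0
other = np.zeros(5000)
other[1300:1320] = 1.0
offset = estimate_offset_seconds(ref, other, sr)
print(round(offset * 30))  # 9 frames at 30 fps
```

The offset can then be rounded to whole frames at 30 fps before trimming the videos. For long recordings, an FFT-based correlation (for example `scipy.signal.correlate` with `method="fft"`) is much faster than `np.correlate`.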

Once synchronized, the videos are remapped and blended into a combined video. While different projection models exist (fisheye, stereographic, cubic [4]), the equirectangular is the most widely used for displaying omnidirectional pictures and videos (Fig. 5).

The equirectangular projection is a simple cartographic transform in which the spherical coordinates of the viewing direction (azimuth and altitude) are mapped linearly onto the planar coordinates (u and v) of an image (Fig. 6). Since the range of the azimuth (360\(^\circ \)) is twice the range of the altitude (180\(^\circ \)), equirectangular images usually have a 2:1 aspect ratio.
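The linear mapping can be written in a few lines. The conventions here are assumptions, since the text does not fix them: azimuth runs from \(-\pi\) at the left edge to \(\pi\) at the right edge, altitude runs from \(+\pi/2\) at the top row to \(-\pi/2\) at the bottom, and the 7680 × 3840 size is a hypothetical 2:1 panorama.

```python
import math


def direction_to_equirect(azimuth, altitude, width, height):
    """Map a viewing direction (radians) to equirectangular pixel coordinates.

    azimuth in [-pi, pi) maps linearly to u in [0, width);
    altitude in [-pi/2, pi/2] maps linearly to v in [height, 0] (top row = +pi/2).
    """
    u = (azimuth + math.pi) / (2.0 * math.pi) * width
    v = (math.pi / 2.0 - altitude) / math.pi * height
    return u, v


def equirect_to_direction(u, v, width, height):
    """Inverse mapping: pixel coordinates back to (azimuth, altitude) in radians."""
    azimuth = u / width * 2.0 * math.pi - math.pi
    altitude = math.pi / 2.0 - v / height * math.pi
    return azimuth, altitude


# The forward horizontal direction lands in the centre of the panorama.
print(direction_to_equirect(0.0, 0.0, 7680, 3840))  # (3840.0, 1920.0)
```

Because both axes are linear in the angles, one pixel spans the same angle horizontally and vertically only when the width is exactly twice the height, which is another way of seeing the 2:1 aspect ratio.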

The procedure to generate an equirectangular panorama is as follows:

1. For each pixel in the equirectangular panorama, a 3D point is computed according to the equirectangular projection equations, assuming a predetermined distance between the camera and the world.

2. Each 3D point is projected into each individual camera of the MCS according to the calibration of that camera.

3. Depending on the number of cameras that can see the 3D point and a predefined criterion, the final RGB values of the pixel are chosen.
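The three steps above can be sketched in NumPy as follows. This is not the authors' implementation [2]: it assumes an ideal pinhole model for each camera (the GoPro wide-angle lenses would need their calibrated distortion model), extrinsics (R, t) that map rig coordinates into OpenCV-style camera coordinates, a rig frame with x forward and z up, and a hypothetical selection criterion that keeps, for each pixel, the camera whose optical axis is closest to the ray. No blending is applied, and all function names are illustrative.

```python
import numpy as np


def equirect_points(width, height, radius):
    """Step 1: one 3D point per panorama pixel on a sphere of the assumed radius.

    Rig frame (assumed): x forward, y left, z up. Pixel centres are used.
    """
    az = (np.arange(width) + 0.5) / width * 2.0 * np.pi - np.pi
    alt = np.pi / 2.0 - (np.arange(height) + 0.5) / height * np.pi
    az, alt = np.meshgrid(az, alt)  # both of shape (height, width)
    dirs = np.stack([np.cos(alt) * np.cos(az),
                     np.cos(alt) * np.sin(az),
                     np.sin(alt)], axis=-1)
    return radius * dirs


def project(points, K, R, t, image_size):
    """Step 2: pinhole projection of rig-frame points into one camera.

    Returns pixel coordinates, a mask of points that land inside the image,
    and the cosine between each ray and the optical axis.
    """
    pc = points @ R.T + t  # rig frame -> camera frame (x right, y down, z forward)
    z = pc[..., 2]
    in_front = z > 1e-9
    safe_z = np.where(in_front, z, 1.0)  # avoid dividing by zero behind the camera
    uv = (pc[..., :2] / safe_z[..., None]) @ K[:2, :2].T + K[:2, 2]
    w, h = image_size
    inside = (in_front & (uv[..., 0] >= 0) & (uv[..., 0] < w)
              & (uv[..., 1] >= 0) & (uv[..., 1] < h))
    cos_axis = z / np.linalg.norm(pc, axis=-1)
    return uv, inside, cos_axis


def stitch(images, cameras, width, height, radius):
    """Step 3: keep, per pixel, the colour of the camera whose axis is closest to the ray."""
    points = equirect_points(width, height, radius)
    pano = np.zeros((height, width, 3))
    best = np.full((height, width), -np.inf)
    for img, (K, R, t) in zip(images, cameras):
        h, w = img.shape[:2]
        uv, inside, score = project(points, K, R, t, (w, h))
        take = inside & (score > best)
        rows = uv[..., 1].astype(int)[take]
        cols = uv[..., 0].astype(int)[take]
        pano[take] = img[rows, cols]
        best[take] = score[take]
    return pano
```

Pixels that no camera sees stay black; in a real rig, the neighbouring cameras overlap, and blending across the seams replaces the hard per-pixel choice made here.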

This procedure relies on the simplifying assumption that the world surrounding the camera is a perfect sphere. Because the real distances between the camera and the objects in the scene are not considered, parallax between cameras can introduce misalignments. These misalignments, as well as color discrepancies between the cameras, can be smoothed using blending techniques. Details regarding the generation of the panoramas and the blending techniques can be found in [2].
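The size of the parallax misalignment can be estimated for a camera whose projection centre is offset by b from the rig centre, perpendicular to the viewing ray. The panorama samples that camera towards the point on the assumed sphere of radius \(\rho\), whereas the object actually lies at distance d along the same rig ray, so the angular error is \(|\arctan(b/\rho) - \arctan(b/d)|\). The 0.1 m offset below is hypothetical, and the 3 m sphere radius simply reuses the survey altitude from Sect. 3 as an example.

```python
import math


def parallax_error_deg(offset_m: float, true_distance_m: float,
                       assumed_radius_m: float) -> float:
    """Angular misalignment for one camera offset perpendicular to the viewing ray."""
    sampled = math.atan2(offset_m, assumed_radius_m)  # direction used by the panorama
    actual = math.atan2(offset_m, true_distance_m)    # direction of the real object
    return math.degrees(abs(sampled - actual))


# Hypothetical 0.1 m offset, sphere radius set to the 3 m survey altitude.
for d in (1.5, 3.0, 10.0):
    print(d, round(parallax_error_deg(0.1, d, 3.0), 2))
```

The error vanishes for objects lying exactly on the assumed sphere and grows quickly for objects closer than it, which is why an adjustable camera-to-world distance can reduce these misalignments.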

5 Results

This section presents different ways of visualizing the panoramic data collected in the Gnalić survey. For completeness, it also includes a 3D reconstruction of the wreck created from the individual images of the MCS.

5.1 Omnidirectional Video and Virtual Tour

Once the panoramic video has been generated, it can be visualized in any standard spherical video player. The number of such players is increasing rapidly along with the popularity of 360\(^\circ \) videos (e.g. VLC, Oculus Player, Total Cinema 360). Many social networks, such as Facebook, YouTube (Fig. 7) and Google Plus, also allow 360\(^\circ \) videos to be uploaded in equirectangular format. The full video of the survey can be found at www.tiny.cc/gnalic.

Fig. 7. Snapshot from the Gnalić shipwreck panoramic video on YouTube. The complete video can be found at: www.tiny.cc/gnalic.

To achieve an even more immersive experience, panoramic videos can be watched through Virtual Reality headsets, such as the Oculus Rift. These headsets evoke a unique feeling of immersion that could be used in schools, museums and interpretation centers.

Panoramic data can also be used to create static panoramic images, which can be further augmented with their geolocation according to the navigation of the vehicle. The results can then be uploaded to virtual tour platforms, where users can virtually navigate through regions of special interest. Google Maps is one of the best-known platforms for creating virtual tours, and one where panoramas can achieve their maximum impact by reaching a very wide audience. This web service allows users to contribute their own 360\(^\circ \) panoramas and link them together into virtual constellations [1]. However, other virtual tour platforms have additional features that can be very useful for science dissemination, such as the possibility of placing augmented reality markers with relevant information in the image, or of displaying a customized map or informative drawings showing the locations of the panoramas (Fig. 8).
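Geolocating a static panorama amounts to evaluating the vehicle navigation at the panorama's timestamp. A minimal sketch, assuming the camera and navigation clocks share a common time base, navigation logged as local north/east/depth in metres, and linear interpolation between navigation samples; converting to latitude and longitude for a web map would need an additional geodetic conversion not shown here, and the function name is hypothetical.

```python
import numpy as np


def geotag_frames(frame_times, nav_times, nav_north, nav_east, nav_depth):
    """Interpolate the vehicle navigation at each panorama timestamp.

    nav_times must be increasing; times outside the navigation log are
    clamped to the first/last sample by np.interp.
    """
    north = np.interp(frame_times, nav_times, nav_north)
    east = np.interp(frame_times, nav_times, nav_east)
    depth = np.interp(frame_times, nav_times, nav_depth)
    return np.column_stack([north, east, depth])


# Hypothetical log: the vehicle moves 10 m north and 5 m west in 10 s at 25 m depth.
print(geotag_frames([5.0], [0.0, 10.0], [0.0, 10.0], [0.0, -5.0], [25.0, 25.0]))
```

Linear interpolation is adequate when navigation is logged much faster than the vehicle's motion changes; the resulting positions can then be used to place and link the panoramas in a virtual tour.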

Fig. 8. Map of the virtual tour of the shipwreck available at: www.tiny.cc/gnalictour.

5.2 3D Reconstruction

To fully illustrate the advantages of data collection using omnidirectional cameras we present a 3D reconstruction of the shipwreck and surrounding area created using the same panoramic data. The offline reconstruction process is performed through multiple steps as described in detail in [5]. Each of the four main steps (structure from motion, multi-view stereo, surface reconstruction and texture mapping) can produce intermediate results usable for numerous applications. Combining the four steps allows the creation of a 3D textured model (Figs. 9 and 10).

Using such a multi-camera system has the advantage of collecting data simultaneously from multiple points of view of the surveyed area. This notably reduces the time required for surveys, especially in scenarios with complex and irregular structures [6], where having different points of view yields a better model of the scene. Moreover, compared to single-camera surveys, the additional geometric and temporal constraints obtained from image registration between the individual cameras further aid model creation. To illustrate that the omnidirectional camera can be used both as a diver hand-held system and as a robot-integrated system, the results in Figs. 9 and 10 were created from image data alone, without resorting to robot navigation data.

6 Conclusions and Future Work

This paper has presented two main contributions regarding the use of omnidirectional cameras underwater. First, a new high-resolution multi-camera system was described, which can be integrated into a robot or used as standalone equipment by divers. Second, the results of a survey of a historical shipwreck were presented, together with the main advantages and uses of omnidirectional cameras in marine archaeology.

A priority for future work is to synchronize the independent cameras in software, without requiring additional manual action. Furthermore, the authors will focus on using the camera for SLAM and structure from motion (SfM), taking into account the geometric and temporal constraints between the cameras. At the same time, this will also improve panorama generation, since the distance between the cameras and the world will become adjustable, significantly reducing misalignment errors due to parallax.

References

[1] Bosch, J., Ridao, P., Ribas, D., Gracias, N.: Creating 360\(^\circ \) underwater virtual tours using an omnidirectional camera integrated in an AUV. In: Proceedings of the MTS/IEEE OCEANS 2015 Conference, May 2015

[2] Bosch, J., Gracias, N., Ridao, P., Ribas, D.: Omnidirectional underwater camera design and calibration. Sensors 15(3), 6033–6065 (2015). http://www.mdpi.com/1424-8220/15/3/6033

[3] Brill, R.H.: The Gnalić wreck: analyses of some glasses. J. Glass Stud. 15, 93–97 (1973)

[4] Chang, C.H., Hu, M.C., Cheng, W.H., Chuang, Y.Y.: Rectangling stereographic projection for wide-angle image visualization. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 2824–2831 (2013)

[5] Hernández, J.D., Istenič, K., Gracias, N., Palomeras, N., Campos, R., Vidal, E., García, R., Carreras, M.: Autonomous underwater navigation and optical mapping in unknown natural environments. Sensors 16(8), 1174 (2016)

[6] Istenič, K., Ila, V., Polok, L., Gracias, N., García, R.: Mission-time 3D reconstruction with quality estimation. In: Proceedings of the MTS/IEEE OCEANS 2017 Conference. MTS/IEEE, Aberdeen, June 2017

[7] Menna, F., Nocerino, E., Fassi, F., Remondino, F.: Geometric and optic characterization of a hemispherical dome port for underwater photogrammetry. Sensors 16(48) (2016)

[8] Negahdaripour, S., Zhang, H., Firoozfam, P., Oles, J.: Utilizing panoramic views for visually guided tasks in underwater robotics applications. In: OCEANS, 2001. MTS/IEEE Conference and Exhibition, vol. 4, pp. 2593–2600 (2001)

[9] Palomeras, N., El-Fakdi, A., Carreras, M., Ridao, P.: COLA2: a control architecture for AUVs. IEEE J. Ocean. Eng. 37(4), 695–716 (2012)

[10] Ribas, D., Palomeras, N., Ridao, P., Carreras, M., Mallios, A.: Girona 500 AUV: from survey to intervention. IEEE/ASME Trans. Mechatron. 17(1), 46–53 (2012)

[11] Rituerto, A., Puig, L., Guerrero, J.: Visual SLAM with an omnidirectional camera. In: 2010 20th International Conference on Pattern Recognition (ICPR), pp. 348–351, August 2010

[12] Rossi, I.R., Bondioli, M., Nicolardi, M., Brusić, Z., Coralić, L., de Castro, F.V.: The shipwreck at Gnalić: a mirror of renaissance Europe. In: Gnalić. Blago potonulog broda iz 16. stoljeća, pp. 65–95. Hrvatski povijesni muzej, Zagreb, Croatia, January 2013

[13] Rossi, I.R., Castro, F.: The late sixteenth century shipwreck of Gnalić; preliminary results of 2012 research campaign and plans for the future. Histria Antiq. 22, 365–376 (2013)

[14] Bosch, J., Ridao, P., Garcia, R., Gracias, N.: Towards omnidirectional immersion for ROV teleoperation. In: Proceedings of Jornadas de Automática, Madrid, Spain (2016)

Acknowledgements

This research was supported by the Spanish National Projects ARCHROV (Marine ARChaeology through HROV/AUV cooperation) under the agreement DPI2014-57746-C3-3-R and OMNIUS under the agreement CTM2013-46718-R, the ROBOCADEMY (European Academy for Marine and Underwater Robotics) EU FP7-Project under Grant Agreement 608096, and “la Secretaria d’Universitats i Recerca del Departament d’Economia i Coneixement de la Generalitat de Catalunya” (to J. Bosch). The Gnalić research is carried out with the support of the Croatian Science Foundation, in the framework of the AdriaS Project (Archaeology of Adriatic Shipbuilding and Seafaring).

Copyright information

© 2018 Springer International Publishing AG

About this paper

Cite this paper

Bosch, J. et al. (2018). Immersive Touring for Marine Archaeology. Application of a New Compact Omnidirectional Camera to Mapping the Gnalić shipwreck with an AUV. In: Ollero, A., Sanfeliu, A., Montano, L., Lau, N., Cardeira, C. (eds) ROBOT 2017: Third Iberian Robotics Conference. ROBOT 2017. Advances in Intelligent Systems and Computing, vol 693. Springer, Cham. https://doi.org/10.1007/978-3-319-70833-1_16

DOI: https://doi.org/10.1007/978-3-319-70833-1_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-70832-4

Online ISBN: 978-3-319-70833-1

eBook Packages: Engineering, Engineering (R0)