Abstract

Software metrics are used to measure complexity and quality in many empirical case studies. Recent studies have shown that threshold values can be detected for some metrics and used to predict defect-prone system modules. The goal of this paper is to empirically validate the stability of threshold values. Our aim is to analyze a wider set of software metrics than has been previously reported and to perform the analysis in the context of different levels of data imbalance. We replicate the case study of deriving thresholds for software metrics using a statistical model based on logistic regression. Furthermore, we analyze threshold stability in the context of varying levels of data imbalance. The methodology is validated using a large number of successive releases of open source projects. We found that threshold values of some metrics could be used to effectively predict defect-prone modules. Moreover, threshold values of some metrics may be influenced by the level of data imbalance. The results of this case study give valuable insight into the importance of software metrics, and the presented methodology may also be used by software quality assurance practitioners.


1 Introduction

There are many different software metrics and each describes the program code from a different perspective [1, 2]. Software metrics that describe object-oriented code can be used to quantify its quality [3]. One of the most direct attributes of software quality is the number of defects that need to be handled after the implementation phase. Software defect prediction (SDP) is the research area that investigates the possibility of using these metrics to classify the software modules that contain defects. Contradictory results have been reported across studies that evaluated the classification accuracy of various software metrics [4]. There are indications that the choice of the prediction model has a lower impact on accuracy than the choice of metrics [5]. However, certain software metrics proved to be efficient for defect prediction [6]. Moreover, some metrics exhibit a threshold effect, i.e. a relationship can be identified between a metric’s threshold value and the occurrence of defects [7]. A threshold is the level of a metric above which we classify a module as faulty, i.e. as containing defects, and at or below which we classify it as non-faulty.

Software defects are generally not distributed according to any probability distribution that could be described with a particular mathematical model [8]. That is why the SDP community turned to machine learning methods. However, no prediction model is the best in all application contexts [9]. Furthermore, most machine learning methods exhibit performance deterioration at high levels of data imbalance [10]. Data imbalance is the phenomenon in which one class of data greatly outnumbers the other class or classes of data. The class that represents faulty software modules is outnumbered in SDP, making data imbalance its inherent feature [11]. Recent studies experimented with the use of genetic programming algorithms for classification, i.e. for building the prediction model in SDP, and achieved some promising results [12]. The multi-objective framework of genetic programming improves the generalization ability by forming ensembles of diverse classifiers [13]. These algorithms combine all the metrics in a decision tree structure and train the prediction models using multiple fitness functions sensitive to data imbalance. In such a configuration, every software metric is one additional dimension in the search space. A large search space with too many dimensions reduces the probability of finding the optimal solutions in any heuristic optimization algorithm [14]. Furthermore, these algorithms may be improved by incorporating certain domain knowledge [15]. This may be done by using predefined initial populations or by fine-tuning the algorithm configuration.

This paper aims to empirically validate the stability of threshold values of software metrics for SDP. We replicate the threshold derivation model from the studies performed by [7, 16]. The stability of threshold values is examined in terms of the rate of significance, the spread of threshold values and the difference of central tendency between different datasets. The results of this case study provide us with insight into the importance of individual software metrics for SDP. This domain knowledge will be used to improve the genetic algorithm configuration that achieved promising results in spite of data imbalance [12]. Software metrics that are not significant for defect prediction will be excluded and the significant ones will be given higher priority in the decision making process. Moreover, the threshold values of metrics that prove to be effective in prediction will be regarded as reference points in a multivariate model configuration. That is why it is important to perform a large scale case study and include as many software metrics as possible, whereas previous research focused mainly on a small number of object-oriented metrics [7, 17]. This paper performs this study on 49 different metrics that are calculated according to the systematically defined collection procedure for SDP research [18].

The remainder of this paper is structured as follows: Sect. 2 presents an overview of related work; Sect. 3 describes the details of the case study we have conducted; Sect. 4 shows and discusses the results that we have obtained; Sect. 5 gives a conclusion and explains our future work intentions.

2 Related Work

Many studies searched for software metrics with a strong association to software defects. The majority of these studies concentrated on a small number of object-oriented metrics such as coupling between objects (‘CBO’), response for class (‘RFC’), weighted methods per class (‘WMC’), depth of inheritance tree (‘DIT’) and number of children (‘NOC’) [7]. Their results indicated that metrics like ‘CBO’, ‘WMC’ and ‘RFC’ were often significant for SDP and other metrics like ‘DIT’ and ‘NOC’ were rarely significant [7, 16]. A recent replicated study involved other metrics such as lack of cohesion between objects (‘LCOM’), maximum or average cyclomatic complexity (‘MAXCC’, ‘AVCC’) or lines of code (‘LOC’) and demonstrated that they may also be significant [16].

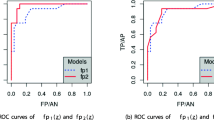

On the other hand, fewer studies experimented with threshold derivation [16]. That does not mean that practitioners do not use it. Senior software developers usually determine the threshold values based on their experience [19]. Their decision making process can be neither replicated nor reused and it is highly biased. Hence, researchers proposed several methods to perform it systematically. The most popular are the Bender method, which is based on a logistic regression model, and the method based on the receiver operating characteristic (ROC) curve [16].

In most classification applications that suffer from data imbalance, the minority class is usually the one that is more important to find, and this poses a problem to most classification algorithms [20]. The fact that defects in large and complex software systems are distributed according to the Pareto principle [11, 21, 22] makes data imbalance an inherent feature of SDP. The rate of faulty software modules is always lower than the rate of modules that are non-faulty and we refer to it as the level of imbalance in the rest of this paper. Different methods were proposed to deal with data imbalance. The ones that take into account the misclassification costs of unequally distributed classes were generally the most successful [12, 23]. In this paper we analyze whether data imbalance has an impact on the threshold derivation method as well, and we use evaluation metrics that take imbalance into account.

3 Case Study

The goal of this paper is to empirically validate the stability of threshold values in different contexts. We want to see whether there are metrics significant for defect prediction with stable threshold values so that practitioners could use them in software quality assurance. The threshold values are calculated by using the logistic regression model and the Bender method, and evaluated by using decision tree binary classification. The proposed methodology examines the strength of each metric for defect prediction individually. The stability of threshold values is examined in datasets with varying levels of imbalance by using 10-fold cross-validation. We used Matlab R2014a to perform the case study calculations.

3.1 Data

We use 14 datasets of two open source projects from the Eclipse community: JDT and PDE. Each dataset represents one release and there are 7 successive releases of each project in this case study. We used the BuCo Analyzer tool [24] to collect the datasets. BuCo is a tool that implements a systematically defined data collection procedure [18]. The tool performs linking between bugs and commits using a technique that is based on regular expressions [25]. The effectiveness of the linking is expressed by the linking rate, i.e. the ratio of bugs that are linked to at least one commit. We present the linking rate, the number of reported bugs, the number of Java files, the ratio between non-faulty and faulty files and the number of software metrics for each dataset in Table 1.

3.2 Methodology

The methodology of this case study is presented in Fig. 1. In the first phase we sample the datasets into 10 equally distributed folds, according to the stratified sampling strategy of 10-fold cross-validation. We use 10-fold cross-validation to validate the stability of the results obtained in the subsequent phases. The training dataset contains 9 folds and the testing dataset contains the remaining fold. This process is repeated 10 times, each time with a different fold as the testing dataset but with an equal ratio between the minority and the majority class.
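The sampling phase can be sketched as follows. This is a minimal illustration in Python, not the Matlab code used in the study; the function names, the round-robin assignment and the toy labels are assumptions made for the example.

```python
import random
from collections import defaultdict


def stratified_folds(labels, k=10, seed=0):
    """Assign sample indices to k folds while keeping class ratios similar."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal the indices of each class round-robin across the folds.
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)
    return folds


def train_test_splits(labels, k=10, seed=0):
    """Yield (train, test) index lists; each fold is the test set exactly once."""
    folds = stratified_folds(labels, k, seed)
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


# Hypothetical release: 20 faulty (1) and 80 non-faulty (0) files.
labels = [1] * 20 + [0] * 80
splits = list(train_test_splits(labels))
```

With these toy labels every testing fold holds 2 faulty and 8 non-faulty files, so the 20% share of faulty files is preserved in each split.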

The following three phases belong to the threshold derivation model. The univariate logistic regression model is built for each dataset separately in the second phase. The logistic regression model is given by the following equation [26]:

\[P(X) = \frac{e^{\beta _{0} + \beta _{1} X}}{1 + e^{\beta _{0} + \beta _{1} X}}\]

where \(\beta _{0}\) is the free coefficient, \(\beta _{1}\) is the regression slope coefficient for predictor X, X represents a particular metric and P(X) is the probability that a module is faulty. The coefficients \(\beta _{0}\) and \(\beta _{1}\) define the curvature and the non-linearity of the logistic regression output curve.
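For illustration, a univariate model of this form can be fitted by maximum likelihood with Newton-Raphson iterations. The sketch below is written in Python rather than with the Matlab function used in the study; the function names and the toy data (a size metric and fault labels for ten files) are hypothetical.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_univariate_logistic(x, y, iterations=25):
    """Estimate (beta0, beta1) of P(X) = sigmoid(beta0 + beta1 * X)."""
    b0, b1 = 0.0, 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = sigmoid(b0 + b1 * xi)
            w = p * (1.0 - p)
            g0 += yi - p              # gradient with respect to beta0
            g1 += (yi - p) * xi       # gradient with respect to beta1
            h00 += w                  # entries of the information matrix
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        if abs(det) < 1e-12:
            break                     # singular system, stop iterating
        # Newton step: beta += H^{-1} g for the 2x2 matrix H.
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1


# Hypothetical metric values and fault labels (not separable on purpose).
x = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
y = [0, 0, 0, 1, 0, 1, 0, 1, 1, 1]
beta0, beta1 = fit_univariate_logistic(x, y)
```

A positive `beta1` means that the estimated probability of a file being faulty grows with the metric value, which is the situation in which a threshold on that metric is meaningful.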

Base probability p(0) is the cutoff value that is required to transform the probability P(X) into a binary variable. It is often set to 0.5 [27], but it can be tuned to account for the data imbalance present in the dataset [3]. Using the percentage of the majority class as the base probability accounts for the higher probability that a randomly selected module is non-faulty [3]. This is also applicable to the Bender method in the following phase, so the majority class percentage is forwarded there. An intermediate step in the methodology is the analysis of statistical significance. The null hypothesis states that there is no relationship between the logistic regression model of a particular metric and the defect-proneness of the examined software modules. This step forwards the metrics for which the null hypothesis is rejected to the following phase.

In the third phase we calculate the value of an acceptable risk level, i.e. the threshold value \(THR_{j}\) for each metric, where j represents the metric. For the significant metrics, thresholds are derived using the following equation given by the Bender method [28]:

\[THR_{j} = \frac{1}{\beta _{1}} \left( \ln \left( \frac{p_0}{1 - p_0} \right) - \beta _{0} \right)\]

where \(\beta _{0}\) and \(\beta _{1}\) are coefficients obtained from the logistic regression model and p(0) is the base probability. As explained earlier, we used the percentage of the majority class as the base probability (\(p_0 = p(0)\)) [16].
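The Bender threshold is the metric value at which the fitted logistic curve reaches the base probability. A minimal Python sketch of that computation follows; the coefficient values and the base probabilities are hypothetical and serve only to show the effect of the choice of \(p_0\).

```python
import math


def bender_threshold(beta0, beta1, p0):
    """Metric value X at which P(X) equals the base probability p0."""
    if not 0.0 < p0 < 1.0:
        raise ValueError("p0 must lie strictly between 0 and 1")
    if beta1 == 0:
        raise ValueError("beta1 must be non-zero")
    return (math.log(p0 / (1.0 - p0)) - beta0) / beta1


# Hypothetical coefficients of a fitted univariate model.
beta0, beta1 = -3.0, 0.02

thr_default = bender_threshold(beta0, beta1, 0.5)   # common cutoff of 0.5
thr_majority = bender_threshold(beta0, beta1, 0.8)  # 80% non-faulty modules
```

With a positive slope, raising the base probability from 0.5 to the majority class share moves the threshold to a higher metric value, because a module must look riskier before it is classified as faulty.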

We evaluate the effectiveness of every threshold in the fourth phase. A simple decision tree is built using the obtained threshold values to classify the software modules from the testing dataset according to the following equation:

\[\hat{Y}_{ij} = \begin{cases} 1, & X_{ij} > THR_{j} \\ 0, & X_{ij} \le THR_{j} \end{cases}\]

where i and j are the software module and the software metric indexes, respectively, \(X_{ij}\) is the value of metric j for module i and \(\hat{Y}_{ij}\) is the predicted class (1 for faulty, 0 for non-faulty). In our study, the granularity level of software modules is the file level. The software metrics in our study describe the size and complexity of source code (like lines of code, cyclomatic complexity), the usage of object-oriented principles (inheritance, encapsulation, abstraction and polymorphism), the design of source code (like coupling and cohesion), the programming style (like number of comments, blank lines of code) and more. The full list of the metrics we have used and their descriptions can be found in [12]. Then we compare the output of prediction with the actual values and evaluate its performance. The following subsection describes this in more detail.
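The classification rule of this phase amounts to a one-split decision tree per metric. The following Python sketch applies it to a few modules; the helper name, the metric values and the thresholds are hypothetical.

```python
def classify_by_threshold(modules, thresholds):
    """Return {metric: [0/1 per module]}; a module is faulty (1) for a
    metric when its value is strictly above that metric's threshold."""
    return {
        metric: [1 if module[metric] > thr else 0 for module in modules]
        for metric, thr in thresholds.items()
    }


# Hypothetical file-level metric values and derived thresholds.
modules = [
    {"LOC": 120, "MAXCC": 3},
    {"LOC": 340, "MAXCC": 9},
    {"LOC": 200, "MAXCC": 5},
]
thresholds = {"LOC": 200.0, "MAXCC": 4.0}

predictions = classify_by_threshold(modules, thresholds)
```

Each metric is evaluated on its own, so the third module is predicted non-faulty by ‘LOC’ (its value equals the threshold) and faulty by ‘MAXCC’.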

3.3 Evaluation

The general importance of each metric for defect prediction is evaluated upon building the model based on logistic regression. We test the statistical significance in a univariate model by posing the null hypothesis that there is no relationship between the calculated coefficient and the defect-proneness of the modules. If the p-value is lower than 0.05, we reject the null hypothesis and conclude that the observed metric is significant for prediction.
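The text does not state which test produces the p-value. A common choice for logistic regression coefficients is the Wald test, which is assumed in the Python sketch below; the function names, the coefficient and its standard error are hypothetical.

```python
import math


def wald_p_value(beta1, std_error):
    """Two-sided p-value for H0: beta1 = 0 under a normal approximation."""
    z = beta1 / std_error
    return math.erfc(abs(z) / math.sqrt(2.0))


def is_significant(beta1, std_error, alpha=0.05):
    """Reject the null hypothesis when the p-value is below alpha."""
    return wald_p_value(beta1, std_error) < alpha


# Hypothetical slope estimate and standard error for one metric in one fold.
p_value = wald_p_value(0.02, 0.005)
keep_metric = is_significant(0.02, 0.005)
```

Repeating this decision for each of the 10 folds and counting the rejections gives the per-release rate of significance of a metric.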

A more precise evaluation of the metrics that are statistically significant for prediction is done after building the decision trees. There are four possible outcomes when performing binary classification and they are presented in the confusion matrix in Table 2. The faulty software modules constitute the positive (1) class, while non-faulty ones constitute the negative (0) class.

There are various evaluation metrics that can be computed using the true positive (TP), true negative (TN), false positive (FP) and false negative (FN) predictions. In this paper, we use the geometric mean accuracy (GM) to evaluate the performance of the decision tree classifier that is based on the calculated threshold for each metric. GM is calculated as the geometric mean using the following equation:

\[GM = \sqrt{TPR \cdot TNR}\]

where TPR (true positive rate) and TNR (true negative rate) are calculated as:

\[TPR = \frac{TP}{TP + FN}, \qquad TNR = \frac{TN}{TN + FP}\]

Its values are within a range of [0,1], with higher values indicating better performance. The main advantage of this evaluation metric is its sensitivity to class imbalance [29]. We adopt the interpretation that metrics which achieve \(GM > 0.6\) are considered effective for prediction [7, 16].
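A short Python sketch shows how GM is obtained from the confusion matrix counts and why it reacts to class imbalance. The function name and the toy labels are hypothetical.

```python
import math


def geometric_mean_accuracy(actual, predicted):
    """GM = sqrt(TPR * TNR), with faulty modules as the positive class (1)."""
    tp = fn = tn = fp = 0
    for a, p in zip(actual, predicted):
        if a == 1 and p == 1:
            tp += 1
        elif a == 1 and p == 0:
            fn += 1
        elif a == 0 and p == 0:
            tn += 1
        else:
            fp += 1
    tpr = tp / (tp + fn) if (tp + fn) else 0.0
    tnr = tn / (tn + fp) if (tn + fp) else 0.0
    return math.sqrt(tpr * tnr)


# Hypothetical imbalanced test fold: 2 faulty and 8 non-faulty modules.
actual = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
always_non_faulty = [0] * 10
threshold_based = [1, 0, 0, 0, 0, 0, 0, 0, 1, 1]

gm_trivial = geometric_mean_accuracy(actual, always_non_faulty)
gm_threshold = geometric_mean_accuracy(actual, threshold_based)
```

The trivial classifier that labels every module as non-faulty reaches 80% plain accuracy on this fold, yet its GM is 0 because it finds no faulty module. The second classifier has TPR = 0.5 and TNR = 0.75, which gives a GM of about 0.61.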

4 Results

The first step in our case study was to build a univariate logistic regression model for each metric. We used a built-in Matlab function for that. The statistical significance of the computed coefficients is one of the outputs of that function. Metrics that had p-\(value > 0.05\) were not considered relevant for defect prediction and were excluded from the subsequent analysis. Table 3 presents how many times the null hypothesis was rejected in each of the analyzed releases. The maximum number of times a metric could be significant is 10 because we used 10-fold cross-validation. The column named sum presents the average rate of significance in the project. The table is vertically divided in two parts, the left part representing JDT releases and the right part representing PDE releases. The metrics are placed in rows and we categorized several types of metrics in the table based on their summed values:

- Metrics significant in every release of both projects are marked bold,
- Metrics significant in the majority of releases of both projects are not marked,
- Metrics rarely significant in both projects are marked in color,
- Metrics significant in every release of one project and rarely significant in the other are marked in color.

Threshold values are calculated and evaluated in terms of GM each time a metric is considered significant. We have analyzed the distribution of GM values for all the datasets. Due to the large number of datasets and the space limit, we present the distribution of results only for all releases together. The box and whisker plots are given in Figs. 2 and 3. The central mark presents the median, the box is the interquartile range, the whiskers extend to the non-outlier range, and outliers are plotted individually. Due to the large number of metrics, we omitted the ones that did not have a 100% rate of significance in the previous analysis. The majority of the presented metrics are considered effective, according to the (\(GM > 0.6\)) interpretation. Figure 2 presents the results obtained from the releases of the JDT project. From the size of the boxes, we can see that the majority of metrics exhibit rather stable prediction performance. The best performing metrics achieve GM values above 0.7. Figure 3 presents the results obtained from the releases of the PDE project. Compared to the JDT project, the boxes are wider and, hence, the performance of metrics is less stable. However, the best performing metrics achieve GM values above 0.8.

The threshold values of metrics that are significant in terms of statistical test (p-\(value < 0.05\)) and prediction performance (\(GM > 0.6\)) are given in Tables 4 and 5. We presented the mean and the standard deviation (in brackets) of threshold values for each release. The metrics are placed in rows and the releases are placed in columns. The threshold values of metrics ‘HEFF’ and ‘HVOL’ in Table 5 need to be multiplied by \(10^4\). In both projects combined, 21 different metrics were significant for defect prediction. There are 13 significant metrics in JDT project and 16 significant metrics in PDE project. The following 8 out of 21 metrics were significant for both JDT and PDE:

- ‘LOC’ - lines of code
- ‘SLOC-P’ - physical executable source lines of code
- ‘SLOC-L’ - logical source lines of code
- ‘BLOC’ - blank lines of code
- ‘NOS’ - total number of Java statements in class
- ‘MAXCC’ - maximum cyclomatic complexity of any method in the class
- ‘TCC’ - total cyclomatic complexity of all the methods in the class
- ‘HLTH’ - cumulative Halstead length of all the components in the class

The level of imbalance is expressed as the percentage of faulty software modules and it is shown below the corresponding release label in Table 5. We ordered the releases in the table according to the level of imbalance to get an insight into the effect of data imbalance on threshold values. The standard deviation of each threshold is at least two orders of magnitude lower than its mean value. Hence, we conclude that the threshold values are very stable within the 10 folds of each release.
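The within-release stability criterion can be checked by comparing the standard deviation of a threshold across the 10 folds with its mean. The Python sketch below uses hypothetical fold thresholds and the sample standard deviation; which estimator was used for the tables is not stated in the text, so that choice is an assumption.

```python
import statistics

# Hypothetical thresholds of one metric derived in the 10 folds of a release.
fold_thresholds = [150.2, 149.8, 150.5, 150.1, 149.9,
                   150.0, 150.3, 149.7, 150.4, 150.1]

mean_thr = statistics.mean(fold_thresholds)
std_thr = statistics.stdev(fold_thresholds)  # sample standard deviation

# Coefficient of variation: spread relative to the mean threshold.
cv = std_thr / mean_thr

# "Two orders of magnitude lower" corresponds to cv <= 0.01.
is_stable = cv <= 0.01
```

For these toy values the mean is about 150.1 and the standard deviation about 0.26, so the spread is well below 1% of the mean.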

4.1 Discussion

In this paper we wanted to analyze the impact of data imbalance on the stability of threshold values. For the JDT project, all the thresholds have the lowest values in the most balanced dataset (release 2.0) and rather stable values in the remaining datasets. Considering that JDT 2.0 is the earliest release and its linking rate is the lowest, the more pronounced difference may not be caused by data imbalance. For the PDE project, almost all the thresholds have the lowest values in the most balanced dataset (release 3.2). Unlike JDT 2.0, the PDE 3.2 release is neither the earliest nor the one with the lowest linking rate. Moreover, some metrics in the PDE project exhibit a predominantly increasing trend of threshold values with higher levels of imbalance (for example ‘LOC’, ‘SLOC-P’, ‘HLTH’). That is why we suspect that data imbalance may have an impact on the threshold values for some metrics after all. On the other hand, some metrics have a rather stable threshold value regardless of imbalance (‘No_Methods’, ‘MAXCC’, ‘NCO’ and ‘CCOM’ for JDT and ‘HBUG’, ‘UWCS’, ‘MAXCC’, ‘RFC’ and ‘TCC’ for PDE).

Some of the metrics that are significant in both projects do have similar threshold values. For example, the range of threshold values for ‘NOS’ is [61–79] for JDT and [62–74] for PDE; for ‘SLOC-P’ it is [100–132] for JDT and [100–125] for PDE. On the other hand, some of the metrics differ more between the two projects we have analyzed. For example, the maximum threshold value for ‘LOC’ is 202 for JDT and only 165 for PDE; for ‘HLTH’ it is 612 for JDT and 552 for PDE.

Our metrics ‘No_Methods’ and ‘NSUB’ are also known as ‘WMC’ and ‘NOC’ in related studies. The mean threshold value of ‘WMC’ in related research varies from 10.49 [16] and 13.5–13.94 [17] up to 23.17 [7]. The mean threshold value of ‘No_Methods’ varies from 9 to 10.8 in JDT and from 9.18 to 9.88 in PDE, which is similar to [16]. Although this metric passes the statistical significance criterion in every dataset, its GM value was lower than 0.6 for some datasets in PDE. Another metric that is often significant in related studies is ‘CBO’. We obtained the same result for JDT, but the opposite result for PDE, where it was never significant. On the other hand, ‘RFC’ was found statistically significant both in our case study and in related research. However, the threshold values were 9.2–11.02 for JDT and 9.3–10.01 for PDE in our case study, compared with 26.82 [16], around 40 [17] or 41.77 [7] in related studies. The ‘DIT’ metric was rarely significant in related studies and our case study confirmed this.

All of these examples confirm that general threshold values are hard to find and that cross-project SDP is still difficult to achieve. Instead, we may only find generally significant or insignificant metrics. This case study also shows somewhat unexpected results. Software metrics that represent very important features of object-oriented code and its design, such as cohesion or coupling, seem to be less often significant for defect prediction. This shows that most previous research, which focused on a smaller number of intuitively important metrics, offered a narrow view of this complex problem. That is why we believe this methodology should be used to gain additional knowledge that can be used to improve multivariate defect prediction models.

5 Conclusion and Future Work

This paper replicated the methodology for deriving the threshold values of software metrics which are effective for SDP. Afterwards, we analyzed the rate of metrics’ significance, the spread of threshold values and we compared the central tendency of these values among different datasets. Unlike previous studies, our focus was not on finding generally applicable threshold values. Instead, we analyzed the stability of thresholds for within-project defect prediction. For that purpose we tested the metrics using a statistical test of significance and cross-validated the geometric mean accuracy of defect prediction. The results revealed that all the metrics that passed the two significance criteria had stable threshold values within the same release of a project. Data imbalance may have influenced the threshold values of some metrics, for which we noticed different values in the most balanced datasets and an increasing trend of values. Some of the derived thresholds were stable regardless of data imbalance and some were stable even across different projects. However, the results and the discussion pointed out that practitioners need to use the presented methodology for their specific projects because it is difficult to obtain general conclusions with regard to threshold values.

It is important to discuss the threats to construct, internal and external validity of this empirical case study. Construct validity is threatened by the fact that we do not possess industrial data. However, we used carefully collected datasets from large, complex and long-lasting open source projects. The choice of threshold derivation model is a threat to internal validity, so we have used a model that was used and replicated in related studies. The main disadvantage of this case study is the inability to discover strong evidence regarding the influence of data imbalance on the stability of derived threshold values. Finally, the external validity is very limited by the diversity of data we have used. The results of this case study reflect the context of open source projects, in particular from the Eclipse community. We used only two projects and both are quite central to the Eclipse community. Our future work will expand the number of datasets and combine the results of this case study with our previous research on the co-evolutionary multi-objective genetic programming (CoMOGP) classification algorithm for SDP. We plan to use the calculated rate of significance for a better definition of the weights that are assigned to each metric in the CoMOGP. We also plan to use the estimated threshold values for building the decision tree in the CoMOGP. Instead of taking the absolute value of each metric, we will use the distance of its value from the threshold. That way, we hope to achieve a more focused CoMOGP and improve the prediction results.

References

1. Chidamber, S.R., Kemerer, C.F.: A metrics suite for object oriented design. IEEE Trans. Softw. Eng. 20(6), 476–493 (1994)

2. Briand, L.C., Daly, J.W., Wust, J.K.: A unified framework for coupling measurement in object-oriented systems. IEEE Trans. Softw. Eng. 25(1), 91–121 (1999)

3. Basili, V.R., Briand, L.C., Melo, W.L.: A validation of object-oriented design metrics as quality indicators. IEEE Trans. Softw. Eng. 22(10), 751–761 (1996)

4. Radjenović, D., Heričko, M., Torkar, R., Živković, A.: Software fault prediction metrics: a systematic literature review. Inf. Softw. Technol. 55(8), 1397–1418 (2013)

5. Arisholm, E., Briand, L.C., Johannessen, E.B.: A systematic and comprehensive investigation of methods to build and evaluate fault prediction models. J. Syst. Softw. 83(1), 2–17 (2010)

6. Shatnawi, R., Li, W.: The effectiveness of software metrics in identifying error-prone classes in post-release software evolution process. J. Syst. Softw. 81(11), 1868–1882 (2008)

7. Shatnawi, R.: A quantitative investigation of the acceptable risk levels of object-oriented metrics in open-source systems. IEEE Trans. Softw. Eng. 36(2), 216–225 (2010)

8. Galinac Grbac, T., Huljenić, D.: On the probability distribution of faults in complex software systems. Inf. Softw. Technol. 58, 250–258 (2015)

9. Hall, T., Beecham, S., Bowes, D., Gray, D., Counsell, S.: A systematic literature review on fault prediction performance in software engineering. IEEE Trans. Softw. Eng. 38(6), 1276–1304 (2012)

10. He, H., Garcia, E.A.: Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 21(9), 1263–1284 (2009)

11. Galinac Grbac, T., Runeson, P., Huljenić, D.: A second replicated quantitative analysis of fault distributions in complex software systems. IEEE Trans. Softw. Eng. 39(4), 462–476 (2013)

12. Mauša, G., Galinac Grbac, T.: Co-evolutionary multi-population genetic programming for classification in software defect prediction: an empirical case study. Appl. Soft Comput. 55, 331–351 (2017)

13. Graning, L., Jin, Y., Sendhoff, B.: Generalization improvement in multi-objective learning. In: The 2006 IEEE International Joint Conference on Neural Network Proceedings, pp. 4839–4846 (2006)

14. Eiben, A.E., Smith, J.E.: Introduction to Evolutionary Computing. Springer, Heidelberg (2003)

15. Martin, W.N., Lienig, J., Cohoon, J.P.: Island (migration) models: evolutionary algorithms based on punctuated equilibria. Handb. Evol. Comput. 6, 1–15 (1997)

16. Arar, O.F., Ayan, K.: Deriving thresholds of software metrics to predict faults on open source software. Expert Syst. Appl. 61(1), 106–121 (2016)

17. Shatnawi, R.: Deriving metrics thresholds using log transformation. J. Softw.: Evol. Process 27(2), 95–113 (2015)

18. Mauša, G., Galinac Grbac, T., Dalbelo Bašić, B.: A systematic data collection procedure for software defect prediction. Comput. Sci. Inf. Syst. 13(1), 173–197 (2016)

19. Oliveira, P., Valente, M.T., Lima, F.P.: Extracting relative thresholds for source code metrics. In: Proceedings of CSMR-WCRE, pp. 254–263 (2014)

20. Weiss, G.M.: Mining with rarity: a unifying framework. SIGKDD Explor. Newsl. 6(1), 7–19 (2004)

21. Andersson, C., Runeson, P.: A replicated quantitative analysis of fault distributions in complex software systems. IEEE Trans. Softw. Eng. 33(5), 273–286 (2007)

22. Fenton, N.E., Ohlsson, N.: Quantitative analysis of faults and failures in a complex software system. IEEE Trans. Softw. Eng. 26(8), 797–814 (2000)

23. Bhowan, U., Johnston, M., Zhang, M., Yao, X.: Evolving diverse ensembles using genetic programming for classification with unbalanced data. IEEE Trans. Evol. Comput. 17(3), 368–386 (2013)

24. Mauša, G., Galinac Grbac, T., Dalbelo Bašić, B.: Software defect prediction with bug-code analyzer - a data collection tool demo. In: Proceedings of SoftCOM 2014 (2014)

25. Mauša, G., Perković, P., Galinac Grbac, T., Štajduhar, I.: Techniques for bug-code linking. In: Proceedings of SQAMIA 2014, pp. 47–55 (2014)

26. Hastie, T., Tibshirani, R., Friedman, J.: The Elements of Statistical Learning: Data Mining, Inference and Prediction, 2nd edn. Springer, Heidelberg (2009)

27. Zimmermann, T., Nagappan, N.: Predicting defects using network analysis on dependency graphs. In: Proceedings of the 30th International Conference on Software Engineering. ICSE 2008, pp. 531–540. ACM, New York (2008)

28. Bender, R.: Quantitative risk assessment in epidemiological studies investigating threshold effects. Biometrical J. 41(3), 305–319 (1999)

29. Bhowan, U., Johnston, M., Zhang, M., Yao, X.: Reusing genetic programming for ensemble selection in classification of unbalanced data. IEEE Trans. Evol. Comput. 18, 893–908 (2013)

Acknowledgments

This work is supported in part by Croatian Science Foundation’s funding of the project UIP-2014-09-7945 and by the University of Rijeka Research Grant 13.09.2.2.16.


© 2017 Springer International Publishing AG


Cite this paper

Mauša, G., Grbac, T.G. (2017). The Stability of Threshold Values for Software Metrics in Software Defect Prediction. In: Ouhammou, Y., Ivanovic, M., Abelló, A., Bellatreche, L. (eds) Model and Data Engineering. MEDI 2017. Lecture Notes in Computer Science(), vol 10563. Springer, Cham. https://doi.org/10.1007/978-3-319-66854-3_7


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-66853-6

Online ISBN: 978-3-319-66854-3
