Abstract

The paper contains a complete analysis of the Galton–Watson models with immigration, including the processes in the random environment, stationary or nonstationary ones. We also study the branching random walk on \(Z^{d}\) with immigration and prove the existence of the limits for the first two correlation functions.

For the second author, this work has been funded by the Russian Academic Excellence Project ‘5-100’.


1 Introduction

A problem with many single population models of population dynamics involving processes of birth, death, and migration is that the populations do not attain steady states or do so only under critical conditions. One solution is to allow immigration, which can stabilize the population when the birth rate is less than the mortality rate.

Here, we present analysis of several models that incorporate immigration. The first two are spatial Galton–Watson processes, the first with no migration and the second with finite Markov chain spatial dynamics (see Sects. 2 and 3 respectively). The third model allows migration on \(\mathbb {Z}^d\) (see Sect. 4). The remaining models all involve random environments in some way (see Sect. 5). Two are again Galton–Watson processes, the first with a random environment based on population size and the second with a random environment given by a Markov chain. The last two models have birth, death, immigration, and migration in a random environment allowing in some way nonstationarity in both space and time. We study in this paper only first and second moments. We will return to the complete analysis of the models with immigration in another publication. It will include a theorem about the existence of steady states and an analysis of the stability of these states.

2 Spatial Galton–Watson Process with Immigration. No Migration and No Random Environment

2.1 Moments

Assume that at each site for each particle we have birth of one new particle with rate \(\beta \) and death of the particle with rate \(\mu \). Also, assume that regardless of the number of particles at the site we have immigration of one new particle with rate k (this is a simplified version of the process in [1]). Assume that \(\beta < \mu \), for otherwise the population will grow exponentially. Assume we start with one particle at each site. In continuous time, for a given site x, \(x \in Z^d\), we can obtain all moments recursively by means of the Laplace transform with respect to n(t, x), where n(t, x) is the population size at time t at x

Specifically, for the jth moment, \(m_j\)

A partial differential equation for \(\varphi _t(\lambda )\) can be derived using the forward Kolmogorov equations

where the r.v. \(\xi \) is defined

In other words, our site (x) in a small time interval (dt) can gain a new particle at rate \(\beta \) for every particle at the site or through immigration with rate k; it can lose a particle at rate \(\mu \) for every particle at the site; or no change at all can happen. Because our model is homogeneous in space, we can write n(t) for n(t, x). This leads to the general differential equation

from which we can calculate the recursive set of differential equations

Applying Eq. 2.1, we obtain a set of recursive differential equations for the moments

where \(s_j\) denotes a linear expression involving lower order moments and where we define \(m_0 = 1\). For example, the differential equations for the first and second moments are

and

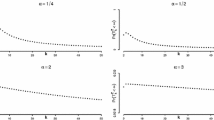

These have the solutions:

and

Again, given that we have assumed that \(\mu > \beta \), in other words, the birth rate is not high enough to maintain the population size, as \(t \rightarrow \infty \)

and

Moreover, it is clear from Eq. 2.4 that all the moments are finite.

In other words, the population size will approach a finite limit, which can be regulated by controlling the immigration rate k, and this population size will be stable, as indicated by the fact that the limiting variance is finite. Without immigration, i.e., if \(k = 0\), the population size will decay exponentially. Another possibility, because all sites are independent and there are no spatial dynamics, is for there to be immigration at some sites, which therefore reach stable population levels, and not at others, where the population thus decreases exponentially. Of course, if the birth rate exceeds the death rate, \(\beta > \mu \), \(m_1(t)\) increases exponentially and immigration has negligible effect, as shown by the solution for \(m_1(t)\).
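The behaviour of the first moment described above can be checked numerically. The sketch below assumes the first-moment equation implied by the stated rates, \(\frac{dm_1}{dt} = (\beta -\mu )m_1 + k\) with \(m_1(0)=1\); the function names and parameter values are illustrative and not taken from the paper.

```python
import math

def first_moment(t, beta, mu, k, m0=1.0):
    """Closed-form solution of dm1/dt = (beta - mu) * m1 + k with m1(0) = m0."""
    if beta == mu:
        # Critical case: the linear term vanishes and m1 grows linearly.
        return m0 + k * t
    limit = k / (mu - beta)  # stationary value when beta < mu
    return limit + (m0 - limit) * math.exp((beta - mu) * t)

def first_moment_euler(t, beta, mu, k, m0=1.0, steps=100_000):
    """Forward-Euler integration of the same ODE, used as an independent check."""
    dt = t / steps
    m = m0
    for _ in range(steps):
        m += ((beta - mu) * m + k) * dt
    return m

# Illustrative (assumed) rates with beta < mu.
beta, mu, k = 1.0, 2.0, 3.0
for t in (0.0, 1.0, 5.0, 20.0):
    print(t, first_moment(t, beta, mu, k), first_moment_euler(t, beta, mu, k))
```

With \(\beta <\mu \) the printed values approach \(k/(\mu -\beta )\); setting `k = 0` gives pure exponential decay, in line with the discussion above.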

2.2 Local CLT

Setting \(\lambda _n=n\beta +k\), \(\mu _n=n\mu \), we see that the model given by Eqs. 2.2 and 2.3 is a particular case of the general random walk on \(Z_+^1=\lbrace {0,1,2,\ldots }\rbrace \) with generator

The theory of such chains has interesting connections to the theory of orthogonal polynomials, the moments problem, and related topics (see [2]). We recall several facts of this theory.

a. Equation \(\mathcal {L}\psi =0, x\geqslant 1\) (i.e., the equation for harmonic functions) has two linearly independent solutions:

$$\begin{aligned} \begin{array}{ll} \psi _{1}(n) &{}\equiv 1 \\ \psi _{2}(n) &{}= \left\{ \begin{array}{ll} 0 &{} {\quad }n=0 \\ 1 &{} {\quad }n=1 \\ 1+\frac{\mu _1}{\lambda _1}+\frac{\mu _1\mu _2}{\lambda _1\lambda _2}+\cdots +\frac{\mu _1\mu _2\cdots \mu _{n-1}}{\lambda _1\lambda _2\cdots \lambda _{n-1}} &{} {\quad }n\geqslant 2\\ \end{array} \right. \\ \end{array} \end{aligned}$$ (2.7)

b. Denoting the adjoint of \(\mathcal {L}\) by \(\mathcal {L}^{*}\), equation \(\mathcal {L}^{*}\pi =0\) (i.e., the equation for the stationary distribution, which can be infinite) has the positive solution

$$\begin{aligned} \pi (1)&=\frac{\lambda _{0}}{\mu _{1}} \pi (0)\end{aligned}$$ (2.8)

$$\begin{aligned} \pi (2)&=\frac{\lambda _{0} \lambda _{1}}{\mu _{1} \mu _{2}} \pi (0)\end{aligned}$$ (2.9)

$$\begin{aligned} \cdots \end{aligned}$$ (2.10)

$$\begin{aligned} \pi (n)&=\frac{\lambda _{0} \lambda _{1} \cdots \lambda _{n-1}}{\mu _{1} \mu _{2} \cdots \mu _{n}} \pi (0) \end{aligned}$$ (2.11)

This random walk is ergodic (i.e., n(t) converges to a statistical equilibrium, a steady state) if and only if the series \(1+\frac{\lambda _0}{\mu _1}+\frac{\lambda _0\lambda _1}{\mu _1\mu _2}+\cdots +\frac{\lambda _0\lambda _1\cdots \lambda _{n-1}}{\mu _1\mu _2\cdots \mu _n}+\cdots \) converges. In our case,

If \(\beta >\mu \), then, for \(n>n_0\), for some fixed \(\varepsilon >0\), \(\frac{k+(n-1)\beta }{n\mu }>1+\varepsilon \), that is, \(x_n \ge C^n\), for \(C>1\) and \(n \ge n_1(\varepsilon )\), and so \(\sum x_n=\infty \). In contrast, if \(\beta <\mu \), then, for some \(0<\varepsilon <1\), \(\frac{k+(n-1)\beta }{n\mu }<1-\varepsilon \), and \(x_n\le q^n\), for \(0<q<1\) and \(n>n_1(\varepsilon )\); thus, \(\sum x_n <\infty \). In this ergodic case, the invariant distribution of the random walk n(t) is given by the formula

where

Theorem 2.1

(Local Central Limit theorem) Let \(\beta <\mu \). If \(l=O(k^{2/3})\), then, for the invariant distribution \(\pi (n)\)

where \(\sigma ^2=\frac{\mu k}{(\mu -\beta )^2}\), \(n_{0}\sim \frac{k}{\mu -\beta }\).

The proof, which we omit here, makes use of the fact that \(\tilde{S}\) is a degenerate hypergeometric function and so \(\tilde{S}=\left( 1-\frac{\beta }{\mu }\right) ^{-\frac{k}{\beta }}\). If we define \(a_n\) by setting \(\pi (n)=\displaystyle \frac{a_{n}}{\tilde{S}}\), then, \(a_{n_0}\sim \frac{k}{\mu -\beta }\), and, setting \(l=O(k^{2/3})\), straightforward computations yield \(a_{n_0+l}\sim a_{n_0}e^{-\frac{l^2}{2\sigma ^2}}.\) Application of Stirling’s formula leads to the result.
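The quantities quoted in Theorem 2.1 and its proof sketch can be verified numerically from the product formula (2.11). The following sketch builds the unnormalised weights with \(\lambda _n=n\beta +k\), \(\mu _n=n\mu \) and compares the normalising sum, mean, and variance with \(\left( 1-\frac{\beta }{\mu }\right) ^{-k/\beta }\), \(\frac{k}{\mu -\beta }\), and \(\frac{\mu k}{(\mu -\beta )^2}\). The truncation level `n_max` and the parameter values are assumptions chosen for illustration.

```python
def invariant_weights(beta, mu, k, n_max):
    """Unnormalised weights a_n = prod_{j=1..n} lambda_{j-1} / mu_j,
    with lambda_n = n*beta + k and mu_n = n*mu (a_0 = 1)."""
    weights = [1.0]
    for n in range(1, n_max + 1):
        weights.append(weights[-1] * (k + (n - 1) * beta) / (n * mu))
    return weights

# Illustrative (assumed) parameters with beta < mu.
beta, mu, k = 1.0, 2.0, 50.0
a = invariant_weights(beta, mu, k, n_max=2000)  # tail beyond n_max is negligible here

S = sum(a)                                # numerical normalising constant
pi = [w / S for w in a]                   # invariant distribution pi(n)
mean = sum(n * p for n, p in enumerate(pi))
var = sum((n - mean) ** 2 * p for n, p in enumerate(pi))

print("S    :", S, "vs", (1 - beta / mu) ** (-k / beta))
print("mean :", mean, "vs", k / (mu - beta))
print("var  :", var, "vs", mu * k / (mu - beta) ** 2)
```

The agreement reflects the fact that \(\pi \) is a negative binomial law with parameters \(k/\beta \) and \(\beta /\mu \); the Gaussian shape in the theorem is its large-\(k\) approximation near \(n_0\).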

2.3 Global Limit Theorems

A functional Law of Large Numbers follows directly from Theorem 3.1 in Kurtz (1970 [3]). Likewise, a functional Central Limit Theorem follows from Theorems 3.1 and 3.5 in Kurtz (1971 [4]). We state these theorems here, therefore, without proof.

Write the population size as \(n_k(t)\), a function of the immigration rate as well as time. Set \(n_k^* = \frac{k}{\mu - \beta }\), the limit of the first moment as \(t \rightarrow \infty \). Define a new stochastic process for the population size divided by the immigration rate, \(Z_k(t) := \frac{n_k(t)}{k}\). Set \(z^* = \frac{n_k^*}{k} = \frac{1}{\mu - \beta }\).

We define the transition function, \(f_k(\frac{n_k}{k},j) := \frac{1}{k} p(n_k,n_k+j)\). Thus,

Note that \(f_k(z,j)\) does not, in fact, depend on k and we write simply f(z, j).

Theorem 2.2

(Functional LLN) Suppose \(\lim \limits _{k\rightarrow \infty } Z_k(0) = z_0\). Then, as \(k \rightarrow \infty \), \(Z_k(t) \rightarrow Z(t)\) uniformly in probability, where Z(t) is a deterministic process, the solution of

where

This has the solution

Next, define \(G_k(z) := \displaystyle \sum _{j}j^2 f_k(z,j) = (\beta + \mu )z + 1\). This too does not depend on k and we simply write G(z).
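The law of large numbers in Theorem 2.2 can be illustrated by direct simulation. The sketch below runs a Gillespie-type simulation of \(n_k(t)\) with up-rate \(n\beta +k\) and down-rate \(n\mu \), and compares \(Z_k(t)=n_k(t)/k\) with the solution of \(\dot{Z} = (\beta -\mu )Z + 1\), which is the drift obtained from \(\sum _j j f(z,j)\) under the rates stated above. The function names, seed, and parameter values are assumptions made for the illustration.

```python
import math
import random

def simulate_scaled_population(beta, mu, k, z0, t_end, seed=0):
    """Gillespie simulation of n_k(t); returns Z_k(t_end) = n_k(t_end) / k."""
    rng = random.Random(seed)
    n = int(round(z0 * k))
    t = 0.0
    while True:
        up = n * beta + k        # birth or immigration: n -> n + 1
        down = n * mu            # death: n -> n - 1
        total = up + down        # strictly positive because k > 0
        t += rng.expovariate(total)
        if t > t_end:
            return n / k
        if rng.random() * total < up:
            n += 1
        else:
            n -= 1

def deterministic_limit(beta, mu, z0, t):
    """Solution of dZ/dt = (beta - mu) * Z + 1 with Z(0) = z0 (assumes beta != mu)."""
    z_star = 1.0 / (mu - beta)
    return z_star + (z0 - z_star) * math.exp((beta - mu) * t)

# Illustrative (assumed) parameters: the gap to the deterministic curve
# should shrink roughly like 1 / sqrt(k) as k grows.
beta, mu, z0, t_end = 1.0, 2.0, 0.0, 3.0
for k in (10, 100, 1000):
    z_sim = simulate_scaled_population(beta, mu, k, z0, t_end, seed=1)
    print(k, z_sim, deterministic_limit(beta, mu, z0, t_end))
```

A single path is shown for each \(k\); averaging \(\sqrt{k}\,(Z_k(t)-Z(t))\) over many seeds would give an empirical view of the fluctuations treated in the functional CLT.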

Theorem 2.3

(Functional CLT) If \(\lim \limits _{k\rightarrow \infty } \sqrt{k} \left( Z_k(0)-z^* \right) = \zeta _0\), the processes

converge weakly in the space of cadlag functions on any finite time interval [0, T] to a Gaussian diffusion \(\zeta (t)\) with:

(1) initial value \(\zeta (0) = \zeta _0\),

(2) mean

$$E\zeta (s) = \zeta _0 L_s := \zeta _0 e^{\int \limits _0^s F'(Z(u,z_0))du},$$

(3) variance

$$\mathrm {Var}(\zeta (s)) = L_s^2 \int \limits _0^s L_u^{-2} G(Z(u,z_0))du.$$

Suppose, moreover, that \(F(z_0) = 0\), i.e., \(z_0 = z^*\), the equilibrium point. Then, \(Z(t) \equiv z_0\) and \(\zeta (t)\) is an Ornstein–Uhlenbeck process (OUP) with initial value \(\zeta _0\), infinitesimal drift

and infinitesimal variance

Thus, \(\zeta (t)\) is normally distributed with mean

and variance

3 Spatial Galton–Watson Process with Immigration and Finite Markov Chain Spatial Dynamics

Let \(X = \{x, y, \ldots \}\) be a finite set, and define the following parameters.

- \(\beta (x)\) is the rate of duplication at \(x \in X\).

- \(\mu (x)\) is the rate of annihilation at \(x \in X\).

- a(x, y) is the rate of transition \(x \rightarrow y\).

- k(x) is the rate of immigration into \(x \in X\).

We define \(\overrightarrow{n}(t)=\{n(t,x), x\in X\}\), the population at moment \(t \ge 0\), with n(t, x) the occupation number of site \(x \in X\). Letting \(\overrightarrow{\lambda }=\{\lambda _x \ge 0, x\in X\}\), we write the Laplace transform of the random vector \(\overrightarrow{n}(t) \in \mathbb {R}^N\), \(N = \mathrm {Card}(X)\) as \(u(t, \overrightarrow{\lambda }) = E\, e^{-(\overrightarrow{\lambda }, \overrightarrow{n}(t))}\).

Now, we derive the differential equation for \(u(t, \overrightarrow{\lambda })\). Denote the \(\sigma \)-algebra of events before or including t by \(\mathcal {F}_{\le t}\). Setting \(\overrightarrow{\varepsilon }(t,dt) = \overrightarrow{n}(t+dt) - \overrightarrow{n}(t)\)

The conditional distribution of \((\overrightarrow{\lambda },\overrightarrow{\varepsilon })\) under \(\mathcal {F}_{\le t}\) is given by the formulas

(a) \(P\{(\overrightarrow{\lambda },\overrightarrow{\varepsilon }(t,dt)) = \lambda _x |\mathcal {F}_{\le t}\} = n(t,x)\beta (x)dt + k(x)dt\) (the birth of a new offspring at site x or the immigration of a new particle into \(x \in X\))

(b) \(P\{(\overrightarrow{\lambda },\overrightarrow{\varepsilon }) = -\lambda _y |\mathcal {F}_{\le t}\} = n(t,y)\mu (y)dt\) (the death of a particle at \(y \in X\))

(c) \(P\{(\overrightarrow{\lambda },\overrightarrow{\varepsilon }) = \lambda _z - \lambda _x |\mathcal {F}_{\le t}\} = n(t,x)a(x,z)dt;\ x,z\in X,\ x\ne z\) (the transition of a single particle from x to z, so that \(n(t+dt,x) = n(t,x)-1\) and \(n(t+dt, z) = n(t,z) + 1\))

(d) \(P\{(\overrightarrow{\lambda },\overrightarrow{\varepsilon }) = 0 |\mathcal {F}_{\le t}\} = 1-\left( \displaystyle \sum _{x\in X} n(t,x) \beta (x)\right) dt -\left( \displaystyle \sum _{x\in X} k(x)\right) dt - \left( \displaystyle \sum _{y\in X} n(t,y) \mu (y)\right) dt - \left( \displaystyle \sum _{x\ne z} n(t,x) a(x,z)\right) dt\)

After substitution of these expressions into Eq. 3.1 and elementary transformations we obtain

But

I.e., finally

The initial condition is

(say, \(u(0,\overrightarrow{\lambda }) = e^{-(\overrightarrow{\lambda },\mathbf {1})} = e^{-\sum _{x\in X} \lambda _x}\) for \(n(0,x) = 1\)).

Differentiation of Eq. 3.2 and the substitution of \(\overrightarrow{\lambda } = 0\) leads to the equations for the correlation functions (moments) of the field n(t, x), \(x \in X\). Put

Then

If \(a(x,z) = a(z,x)\) then finally

Here, A is the generator of a Markov chain \(A=[a(x,y)] = A^*\).

By differentiating equation (3.2) over the variables \(\lambda _x\), \(x \in X\), one can get the equations for the correlation functions

where \(x_1, \ldots , x_m\) are distinct points of X and \(l_1, \dots , l_m \ge 1\) are integers. Of course, \(k_{l_1 \ldots l_m}(t,x_1,\ldots ,x_m) = (-1)^{l_1 + \dots + l_m}\frac{\partial ^{l_1 + \dots + l_m} u(t,\overrightarrow{\lambda })}{\partial \lambda _{x_1}^{l_1} \ldots \partial \lambda _{x_m}^{l_m}}|_{\overrightarrow{\lambda }=0}\). The corresponding equations are linear. The central point here is that the factors \((e^{\lambda _x - \lambda _z}-1)\), \((e^{\lambda _y}-1)\), and \((e^{-\lambda _x}-1)\) are equal to 0 for \(\overrightarrow{\lambda }=0\). As a result, correlation functions of order higher than n cannot appear in the equations for {\(k_{l_1 \ldots l_m}(\cdot )\), \(l_1 + \cdots + l_m = n\)}.

Consider, for instance, the correlation function (in fact, matrix-valued function)

The method based on generating functions is typical for the theory of branching processes. In the case of processes with immigration, another, Markovian approach gives new results. Let us start from the simplest case, when there is but one site, i.e., \(X = \{x\}\). Then, the process n(t), \(t \ge 0\) is a random walk with reflection on the half axis \(n \ge 0\).

For a general random walk y(t) on the half axis with reflection in continuous time, we have the following facts. Let the process be given by the generator \(G=(g(w,z))\), \(w, z \ge 0\), where \(a_w = g(w,w+1)\), \(w\ge 0\); \(b_w = g(w,w-1)\), \(w > 0\); \(g(w,w) = -(a_w+b_w)\), \(w > 0\); and \(g(0,0) = -a_0\) (see Fig. 1).

The random walk is recurrent iff the series

diverges. It is ergodic (positively recurrent) iff the series

converges. In the ergodic case, the invariant distribution of the random walk y(t) is given by the formula

(see [5]).

For our random walk, n(t)

and, for \(n \ge 1\)

Proposition 3.1

1. If \(\beta > \mu \) the process n(t) is transient and the population n(t) grows exponentially.

2. If \(\beta = \mu \), \(k > 0\) the process is not ergodic but rather it is zero-recurrent for \(\frac{k}{\beta } \le 1\) and transient for \(\frac{k}{\beta } > 1\).

3. If \(\beta < \mu \) the process n(t) is ergodic. The invariant distribution for \(\beta <\mu \) is given by

$$\begin{aligned} \begin{aligned} \pi (n)&= \frac{1}{\tilde{S}}\frac{k(k+\beta )\cdots \left( k+\beta (n-1)\right) }{\mu \cdot 2\mu \cdots n\mu } \\&= \frac{1}{\tilde{S}}\left( \frac{\beta }{\mu }\right) ^n \frac{\frac{k}{\beta }\left( \frac{k}{\beta }+1\right) \cdots \left( \frac{k}{\beta }+n-1\right) }{n!} \\&= \frac{1}{\tilde{S}}\left( \frac{\beta }{\mu }\right) ^n (1+\alpha )\left( 1+\frac{\alpha }{2}\right) \cdots \left( 1+\frac{\alpha }{n}\right) ,\qquad \alpha = \frac{k}{\beta } - 1 \\&= \frac{1}{\tilde{S}}\left( \frac{\beta }{\mu }\right) ^n \exp {\left( \sum _{j=1}^n\ln {(1+\frac{\alpha }{j})}\right) } \sim \frac{1}{\tilde{S}}\left( \frac{\beta }{\mu }\right) ^n n^\alpha \\ \end{aligned} \end{aligned}$$

where \(\tilde{S} = 1 + \displaystyle \sum _{j=1}^\infty \frac{k(k+\beta )\cdots (k+\beta (j-1))}{\mu \cdot 2\mu \cdots j\mu }\) and the asymptotic equivalence holds up to a constant factor depending only on \(\alpha \).

Proof

1 and 3 follow from Eqs. 3.3–3.5. If \(\beta = \mu \) (but \(k > 0\)), i.e., in the critical case, the process cannot be ergodic because, setting \(\alpha = \frac{k}{\beta } -1\), then \(\alpha > -1\) and as \(n \rightarrow \infty \) \(\tilde{S} \sim \displaystyle \sum _n n^\alpha = +\infty \). The process is zero-recurrent, however, for \(0<\frac{k}{\beta }\le 1\). In fact, for \(\beta = \mu \)

and the series in Eq. 3.4 diverges if \(0 < \frac{k}{\beta } \le 1\). If, however, \(k > \beta \) the series converges and the process n(t) is transient. \(\square \)
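The trichotomy of Proposition 3.1 and the power-law factor \(n^\alpha \) can be summarised in a few lines of code. This is a sketch, not part of the paper: `classify` simply encodes the three cases of the proposition, and `tail_ratio` checks that \(\prod _{j=1}^n (1+\alpha /j)\) grows like \(n^\alpha \). The limiting constant \(1/\Gamma (1+\alpha )\) used in the comparison is a standard property of the Gamma function and is not stated in the text.

```python
import math

def classify(beta, mu, k):
    """Classify the single-site walk n(t) according to Proposition 3.1."""
    if beta <= 0 or mu <= 0 or k <= 0:
        raise ValueError("beta, mu and k are assumed to be strictly positive")
    if beta > mu:
        return "transient"
    if beta < mu:
        return "ergodic"
    # Critical case beta == mu: the answer depends on k / beta.
    return "zero-recurrent" if k <= beta else "transient"

def tail_ratio(alpha, n):
    """prod_{j=1..n} (1 + alpha / j) divided by n**alpha, computed in logs."""
    log_prod = sum(math.log1p(alpha / j) for j in range(1, n + 1))
    return math.exp(log_prod - alpha * math.log(n))

print(classify(1.0, 2.0, 3.0))   # subcritical
print(classify(2.0, 2.0, 1.0))   # critical, k / beta <= 1
print(classify(2.0, 2.0, 5.0))   # critical, k / beta > 1

# The ratio stabilises as n grows, so the product behaves like a constant times n**alpha.
alpha = 1.5
for n in (10, 1000, 100_000):
    print(n, tail_ratio(alpha, n), 1.0 / math.gamma(1.0 + alpha))
```

In the critical case this gives \(\pi \)-weights of order \(n^\alpha \) with \(\alpha >-1\), which is why the normalising series diverges and the walk cannot be ergodic.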

Consider, now, the general case of the finite space X. Let \(N = \mathrm {Card}\, X\) and \(\overrightarrow{n}(t)\) be the vector of the occupation numbers. The process \(\overrightarrow{n}(t)\), \(t \ge 0\) is a continuous-time random walk on \((\mathbb {Z}_+^1)^N = \{0,1,\ldots \}^N\). The generator of this random walk was already described when we calculated the Laplace transform \(u(t, \overrightarrow{\lambda }) = E\, e^{-(\overrightarrow{\lambda }, \overrightarrow{n}(t))}\). If at the moment t we have the configuration \(\overrightarrow{n}(t) = \{n(t,x), x\in X\}\), then, for the interval \((t, t+dt)\) only the following events (up to terms of order \((dt)^2\)) can happen:

(a) the birth of a new particle at the site \(x_0 \in X\), with corresponding probability \(n(t,x_0) \beta (x_0)dt + k(x_0) dt\). In this case we have the transition

$$\begin{aligned} \overrightarrow{n}(t) = \{n(t,x), x \in X\} \rightarrow \overrightarrow{n}(t+dt) = \left\{ \begin{array}{l} n(t,x), x \ne x_0 \\ n(t,x_0)+1, x=x_0 \\ \end{array} \right. \end{aligned}$$

(b) the death of one particle at the site \(x_0 \in X\). This has corresponding probability \(\mu (x_0) n(t,x_0)dt\) and the transition

$$\begin{aligned} \overrightarrow{n}(t) = \{n(t,x), x \in X\} \rightarrow \overrightarrow{n}(t+dt) = \left\{ \begin{array}{l} n(t,x), x \ne x_0 \\ n(t,x_0)-1, x=x_0 \\ \end{array} \right. \end{aligned}$$

(Of course, here \(n(t,x_0) \ge 1\); otherwise \(\mu (x_0) n(t,x_0) dt = 0\).)

(c) the transfer of one particle from site \(x_0\) to site \(y_0 \in X\) (jump from \(x_0\) to \(y_0\)), i.e., the transition

$$\begin{aligned} \overrightarrow{n}(t) = \{n(t,x), x \in X\} \rightarrow \overrightarrow{n}(t+dt) = \left\{ \begin{array}{l} n(t,x), x \ne x_0, y_0 \\ n(t,x_0)-1, x=x_0 \\ n(t,y_0)+1, x=y_0 \\ \end{array} \right. \end{aligned}$$

with probability \(n(t,x_0)a(x_0,y_0) dt\) for \(n(t,x_0) \ge 1\).

The following theorem gives sufficient conditions for the ergodicity of the process \(\overrightarrow{n}(t)\).

Theorem 3.2

Assume that for some constants \(\delta > 0\), \(A>0\) and any \(x \in X\)

Then, the process \(\overrightarrow{n}(t)\) is an ergodic Markov chain and the invariant measure of this process has exponential moments, i.e., \(E\, e^{(\overrightarrow{\lambda },\overrightarrow{n}(t))} \le c_0 <\infty \) if \(|\overrightarrow{\lambda }| \le \lambda _0\) for appropriate (small) \(\lambda _0 > 0\).

Proof

On \((\mathbb {Z}_+^1)^N = \{0,1,\ldots \}^N\) we take as a Lyapunov function

Then, with G the generator of the process, \(G f(\overrightarrow{n}) \le 0\) for large enough \((\overrightarrow{n},\overrightarrow{\mathbf {1}}) = \Vert \overrightarrow{n}\Vert _1\). In fact

(The terms concerning transitions of the particles between sites make no contribution: \(1-1=0\).) \(\square \)
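The drift computation behind this argument can be spelled out event by event. The sketch below assumes the Lyapunov function is the total population \(f(\overrightarrow{n}) = (\overrightarrow{n},\overrightarrow{\mathbf {1}})\), which matches the norm used above and the remark that migration contributes \(1-1=0\); it applies the generator by summing rate times change in \(f\) over the transitions (a), (b), (c). The rate values are illustrative assumptions.

```python
def drift_of_total_population(n, beta, mu, k, a):
    """Apply the generator to f(n) = sum_x n[x]: sum over events of rate * (change in f)."""
    sites = range(len(n))
    drift = 0.0
    for x in sites:
        drift += (n[x] * beta[x] + k[x]) * (+1)   # (a) birth or immigration at x
        drift += n[x] * mu[x] * (-1)              # (b) death at x
        for z in sites:
            if z != x:
                # (c) jump x -> z: f changes by -1 + 1 = 0, so no contribution
                drift += n[x] * a[x][z] * (-1 + 1)
    return drift

# Illustrative (assumed) three-site example with beta(x) < mu(x) everywhere.
n = [3, 0, 5]
beta = [1.0, 1.0, 1.0]
mu = [2.0, 2.0, 2.0]
k = [0.5, 0.5, 0.5]
a = [[0.0, 0.7, 0.3],
     [0.2, 0.0, 0.8],
     [0.5, 0.5, 0.0]]

print(drift_of_total_population(n, beta, mu, k, a))
# Same value as sum_x [(beta(x) - mu(x)) * n(x) + k(x)]:
print(sum((b - m) * nx + kx for nx, b, m, kx in zip(n, beta, mu, k)))
```

The drift is negative as soon as the total population exceeds the total immigration rate divided by the smallest gap \(\mu (x)-\beta (x)\), which is the mechanism the proof relies on.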

If \(\beta (x) \equiv \beta < \mu \equiv \mu (x)\) and \(k(x) \equiv k\) then \((\overrightarrow{n},\overrightarrow{\mathbf {1}})\), i.e., the total number of the particles in the phase space X is also a Galton–Watson process with immigration and the rates of transition shown in Fig. 2.

If \(t \rightarrow \infty \) this process has a limiting distribution with invariant measure (in which Nk replaces k). That is

4 Branching Process with Migration and Immigration

We now consider our process with birth, death, migration, and immigration on a countable space, specifically the lattice \(\mathbb {Z}^{d}\). As in the other models, we have \(\beta >0\), the rate of duplication at \(x\in Z^{d}\); \(\mu >0\), the rate of death; and \(k>0\), the rate of immigration. Here, we add migration of the particles with rate \(\kappa >0\) and probability kernel a(z), \(z \in \mathbb {Z}^{d}\), \(z\ne 0\), \(a(z) = a(-z)\), \(\sum \limits _{z\ne 0}a(z)=1\). That is, a particle jumps from site x to \(x+z\) with probability \(\kappa a(z)dt\). Here we put \(\kappa =1\) to simplify the notation.

For n(t, x), the number of particles at x at time t, the forward equation for this process is given by \(n(t+dt,x)=n(t,x)+\xi (dt,x)\), where

Note that \(\xi (dt,x)\) is independent on \(\mathcal {F}_{\leqslant t}\) (the \(\sigma \)-algebra of events before or including t) and

(a) \(E[\xi (dt,x)|\mathcal {F}_{\leqslant t}]=n(t,x)(\beta -\mu -1)dt+kdt+\sum \limits _{z\ne 0}a(z)n(t,x+z)dt\).

(b) \(E[\xi ^{2}(dt,x)|\mathcal {F}_{\leqslant t}]=n(t,x)(\beta +\mu +1)dt+kdt+\sum \limits _{z\ne 0}a(z)n(t,x+z)dt\).

(c) \(E[\xi (dt,x)\xi (dt,y)|\mathcal {F}_{\leqslant t}]=a(x-y)n(t,x)dt+a(y-x)n(t,y)dt\). A single particle jumps from x to y or from y to x. Other possibilities have probability \(O((dt)^2)\approx 0\). Here, of course, \(x\ne y\).

(d) If \(x\ne y\), \(y\ne z\), and \(x\ne z\), then \(E[\xi (dt,x)\xi (dt,y)\xi (dt,z)]=0\). We will not use property (d) in this paper, but it is crucial for the analysis of moments of order greater than or equal to 3.

From here on, we concentrate on the first two moments.

4.1 First Moment

Due to the fact that \(\beta < \mu \), the system has a short memory, and we can calculate all the moments under the condition that n(0, x), \(x\in \mathbb {Z}^{d}\), is a system of independent and identically distributed random variables with expectation \(\frac{k}{\mu -\beta }\). We will select Poissonian random variables with parameter \(\lambda =\frac{k}{\mu -\beta }\). Then, \(m_{1}(t,x)=\frac{k}{\mu -\beta }\), \(t\geqslant 0\), \(x \in \mathbb {Z}^{d}\), and, as a result, \(\mathcal {L}_{a}m_{1}(t,x)=0\). Setting \(m_{1}(t,x)=E[n(t,x)]\), we have

Defining the operator \(\mathcal {L}_{a}(f(t,x))=\sum \limits _{z\ne 0}a(z)[f(t,x+z)-f(t,x)]\), then, from Eq. 4.2 we get the differential equation

Because of spatial homogeneity, \(\mathcal {L}_{a}m_{1}(t,x)=0\), giving

which has the solution

Thus, if \(\beta \ge \mu \), \(m_{1}(t,x)\rightarrow \infty \), and if \(\mu >\beta \),

4.2 Second Moment

We derive differential equations for the second correlation function \(m_2(t,x,y)\) for \(x = y\) and \(x \ne y\) separately, then combine them and use a Fourier transform to prove a useful result concerning the covariance.

-

I.

\(x=y\)

$$\begin{aligned} \begin{aligned} m_{2}(t&+dt,x,x)=E[E[(n(t,x)+\xi (dt,x))^2|\mathcal {F}_{\leqslant t}]]\\&= m_{2}(t,x,x)+2E\left[ {}n(t,x)[n(t,x)(\beta -\mu -1)dt+kdt\right. \\&\qquad \quad \quad \quad \quad \quad \quad \quad \quad \quad \quad +\left. \sum \limits _{z\ne 0}a(z)n(t,x+z)]dt\right] \\&\quad + E\left[ n(t,x)(\beta +\mu +1)dt + kdt + \sum \limits _{z\ne 0}a(z)n(t,x+z)dt\right] \\ \end{aligned} \end{aligned}$$Denote \(\mathcal {L}_{ax}m_{2}(t,x,y)=\sum \limits _{z\ne 0}a(z)(m_{2}(t,x+z,y)-m_{2}(t,x,y))\). From this follows the differential equation

$$\begin{aligned} \left\{ \begin{array}{cl} \displaystyle \frac{\partial m_{2}(t,x,x)}{\partial t}&{}=2(\beta -\mu )m_{2}(t,x,x)+2\mathcal {L}_{ax}m_{2}(t,x,x)+\frac{2k^2}{\mu -\beta }+\frac{2k(\mu +1)}{\mu -\beta }\\ m_{2}(0,x,x)&{}=0 \end{array}\right. \end{aligned}$$ -

II.

\(x \ne y\) Because only one event can happen during dt

$$P\{\xi (dt,x)=1,\xi (dt,y)=1\}=P\{\xi (dt,x)=-1,\xi (dt,y)=-1\}=0,$$while the probability that one particle jumps from y to x is

$$P\{\xi (dt,x)=1,\xi (dt,y)=-1\}=a(x-y)n(t,y)dt,$$and the probability that one particle jumps from x to y is

$$P\{\xi (dt,x)=-1,\xi (dt,y)=1\}=a(y-x)n(t,x)dt.$$Then, similar to above

$$\begin{aligned}&m_{2}(t+dt,x,y)=E[E[(n(t,x)+\xi (t,x))(n(t,y)+\xi (t,y))|\mathcal {F}_{\leqslant t}]]\\&=m_{2}(t,x,y)+(\beta -\mu )m_{2}(t,x,y)dt+km_{1}(t,y)dt\\&\quad + \sum \limits _{z\ne 0}a(z)(m_2(t,x+z,y)-m_{2}(t,x,y))dt \\&+ (\beta - \mu ) m_{2}(t,x,y)dt + km_{1}(t,x)dt\\&+\sum \limits _{z\ne 0}a(z)(m_2(t,x,y+z) - m_2(t,x,y))dt\\&+a(x-y)m_{1}(t,y)dt+a(y-x)m_{1}(t,x)dt\\&=m_{2}(t,x,y)+2(\beta -\mu )m_{2}(t,x,y)dt+k(m_{1}(t,y)+m_{1}(t,x))dt\\&\quad + (\mathcal {L}_{ax} + \mathcal {L}_{ay}) m_{2}(t,x,y)dt\\&+ a(x-y)(m_{1}(t,x)+m_{1}(t,y))dt \end{aligned}$$The resulting differential equation is

$$\begin{aligned} \begin{aligned} \displaystyle \frac{\partial m_{2}(t,x,y)}{\partial t}&=2(\beta -\mu )m_{2}(t,x,y)+(\mathcal {L}_{ax}+\mathcal {L}_{ay})m_{2}(t,x,y)+k(m_{1}(t,x)\\&\quad +m_{1}(t,y)) +a(x-y)[m_{1}(t,x)+m_{1}(t,y)] \end{aligned} \end{aligned}$$(4.3)That is

$$\begin{aligned} \begin{aligned} \displaystyle \frac{\partial m_{2}(t,x,y)}{\partial t}&=2(\beta -\mu )m_{2}(t,x,y)+(\mathcal {L}_{ax}+\mathcal {L}_{ay})m_{2}(t,x,y)\\&\quad +\frac{2k^2}{\mu -\beta }+2a(x-y)\frac{k}{\mu -\beta } \end{aligned} \end{aligned}$$

Because, for fixed t, n(t, x) is homogeneous in space, we can write \(m_{2}(t,x,y)=m_{2}(t,x-y)=m_{2}(t,u)\). Then, we can condense the two cases into a single differential equation

Here \(u=x-y\ne 0\) and \(a(0)=0\).

We can partition \(m_{2}(t,u)\) into \(m_{2}(t,u) = m_{21}+m_{22}\), where the solution for \(m_{21}\) depends on time but not position and the solution for \(m_{22}\) depends on position but not time. Thus, \(\mathcal {L}_{au}m_{21}=0\) and \(m_{21}\) corresponds to the source \(\frac{2k^2}{\mu -\beta }\), which gives

As \(t \rightarrow \infty \), \(m_{21} \rightarrow \bar{M_{2}}=m_{1}^{2}(t,x)=\frac{k^2}{(\mu -\beta )^2}\).

For the second part, \(m_{22}\), \(\frac{\partial m_{22}}{\partial t}=0\), i.e.,

As \(t\rightarrow \infty \), \(m_{22}\rightarrow \tilde{M}_{2}\). \(\tilde{M}_2\) is the limiting correlation function for the particle field n(t, x), \(t\rightarrow \infty \). It is the solution of the “elliptic” problem

Applying the Fourier transform \(\widehat{\tilde{M_{2}}}(\theta )=\sum \limits _{u\in Z^{d}}\tilde{M_{2}}(u)e^{i(\theta ,u)}\), \(\theta \in T^{d}=[-\pi ,\pi ]^{d}\),

we obtain

We have proved the following result.

Theorem 4.1

As \(t\rightarrow \infty \), \(\mathrm {Cov}(n(t,x),n(t,y))=E[n(t,x)n(t,y)]-E[n(t,x)]E[n(t,y)] =m_{2}(t,x,y)-m_{1}(t,x)m_{1}(t,y)\) tends to \(\tilde{M_{2}}(x-y)=\tilde{M_{2}}(u)\in L^2(Z^d)\).

The Fourier transform of \(\tilde{M_{2}}(\cdot )\) is equal to

where \(c_{1}=\frac{k}{\mu -\beta }\), \(c_{2}=\frac{k}{\mu -\beta }\), \(c_{3}=\mu -\beta \)

Let us compare our results with the corresponding results for the critical contact model [6] (where \(k=0\), \(\mu = \beta \)). In the last case, the limiting distribution for the field n(t, x), \(t\geqslant 0\), \(x\in \mathbb {Z}^{d}\), exists if and only if the underlying random walk with generator \(\mathcal {L}_{a}\) is transient. In the recurrent case, we have the phenomenon of clusterization. The limiting correlation function is always slowly decreasing (like the Green kernel of \(\mathcal {L}_{a}\)).

In the presence of immigration, the situation is much better: the limiting correlation function always exists and we believe that the same is true for all moments. The decay of \(\tilde{M}_{2}(u)\) depends on the smoothness of \(\hat{a}(\theta )\). Under minimal regularity conditions, correlations have the same order of decay as a(z), \(z\rightarrow \infty \). For instance, if a(z) is finitely supported or exponentially decreasing, the correlation also has an exponential decay. If a(z) has power decay, then the same is true for correlation \(\tilde{M}_{2}(u)\), \(u\rightarrow \infty \).
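The role of \(\hat{a}(\theta )\) in the Fourier step can be made concrete on a small example. The sketch below works on a one-dimensional cycle \(\mathbb {Z}/M\mathbb {Z}\) rather than \(\mathbb {Z}^d\), which is an assumption made only to keep the computation finite; the kernel values are likewise illustrative. It checks that \(\mathcal {L}_a\) annihilates constants and that plane waves \(e^{i\theta x}\) are eigenfunctions with eigenvalue \(\hat{a}(\theta )-1\), where \(\hat{a}(\theta )=\sum _{z\ne 0}a(z)e^{i\theta z}\).

```python
import cmath
import math

def apply_La(f, kernel):
    """(L_a f)(x) = sum_z a(z) * (f(x + z) - f(x)) on the cycle Z/MZ, M = len(f)."""
    M = len(f)
    return [sum(a * (f[(x + z) % M] - f[x]) for z, a in kernel.items())
            for x in range(M)]

def symbol(theta, kernel):
    """a_hat(theta) = sum_z a(z) * exp(i * theta * z); real when a(z) = a(-z)."""
    return sum(a * cmath.exp(1j * theta * z) for z, a in kernel.items())

# Illustrative symmetric kernel: a(z) = a(-z), sum of a(z) equals 1, a(0) = 0.
kernel = {1: 0.3, -1: 0.3, 2: 0.2, -2: 0.2}
M = 16
theta = 2 * math.pi * 3 / M          # frequency compatible with the period M

wave = [cmath.exp(1j * theta * x) for x in range(M)]
lhs = apply_La(wave, kernel)
eig = symbol(theta, kernel) - 1       # expected eigenvalue a_hat(theta) - 1

print(max(abs(l - eig * w) for l, w in zip(lhs, wave)))   # numerically zero
print(max(abs(v) for v in apply_La([2.5] * M, kernel)))   # constants are annihilated
print(eig)                                                # real and non-positive
```

Because \(\mathcal {L}_a\) acts as multiplication by \(\hat{a}(\theta )-1\le 0\) in Fourier variables, an equation of the form \((\mathcal {L}_a - c)\tilde{M}=\) source with \(c>0\) can be solved by division, and the smoothness of \(\hat{a}\) then controls the decay of the solution.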

5 Processes in a Random Environment

The final four models involve a random environment. Two are Galton–Watson models with immigration and lack a spatial component. In the first, the parameters are random functions of the population size; in the second, they are random functions of a Markov chain on a finite space. The last two models are spatial and feature immigration, migration, and, most importantly, a random environment in space, still stationary in time for the third but not stationary in time for the fourth.

5.1 Galton–Watson Processes with Immigration in Random Environments

5.1.1 Galton–Watson Process with Immigration in Random Environment Based on Population Size

Assume that rates of mortality \(\mu (\cdot )\), duplication \(\beta (\cdot )\), and immigration \(k(\cdot )\) are random functions of the volume of the population \(x\ge 0\). Namely, the random vectors \((\mu ,\beta ,k)(x,\omega )\) are i.i.d. on the underlying probability space \((\Omega _{e},\mathcal {F}_{e},P_{e})\) (e: environment).

The Galton–Watson process is ergodic (\(P_{e}\)-a.s.) if and only if the random series

Theorem 5.1

Assume that the random variables \(\beta (x,\omega )\), \(\mu (x,\omega )\), \(k(x,\omega )\) are bounded from above and below by the positive constants \(C^{\pm }\), e.g., \(0<C^{-}\le \beta (x,\omega )\le C^{+}<\infty \), and similarly for \(\mu \) and k. Then, the process \(n(t,\omega _{e})\) is ergodic \(P_{e}\)-a.s. if and only if \(\langle \ln \frac{\beta (x,\omega )}{\mu (x,\omega )}\rangle = \langle \ln \beta (\cdot )\rangle -\langle \ln (\mu (\cdot ))\rangle <0\).

Proof

It is sufficient to note that

\(\square \)

It follows from the strong LLN that the series diverges exponentially fast for \(\langle \ln \beta (\cdot )\rangle - \langle \ln \mu (\cdot ) \rangle >0\); it converges like a decreasing geometric progression for \(\langle \ln \beta (\cdot )\rangle -\langle \ln \mu (\cdot ) \rangle < 0\); and it is divergent if \(\langle \ln \beta (\cdot ) \rangle = \langle \ln \mu (\cdot ) \rangle \). It diverges even when \(\beta (x,\omega _{e})=\mu (x,\omega _{e})\) due to the presence of \(k^{-}\ge C^{-}>0.\)
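The strong-LLN argument can be illustrated by sampling an environment and tracking the logarithm of the series terms. The sketch below assumes that the n-th term of the series is \(\prod _{x=1}^{n}\frac{(x-1)\beta (x-1)+k(x-1)}{x\mu (x)}\), by analogy with Sect. 2.2, and that the rates are independent and uniform on fixed intervals; both choices, as well as the function names, are assumptions made for the illustration.

```python
import math
import random

def mean_log_uniform(lo, hi):
    """E[ln U] for U uniform on [lo, hi] with 0 < lo < hi."""
    return (hi * math.log(hi) - lo * math.log(lo)) / (hi - lo) - 1.0

def empirical_log_growth(n_terms, beta_rng, mu_rng, k_rng, seed=0):
    """(1/n) * ln of the n-th series term in one sampled i.i.d. environment."""
    rng = random.Random(seed)
    log_term = 0.0
    # Environment at population size 0: only its birth/immigration rates matter.
    prev_beta = rng.uniform(*beta_rng)
    prev_k = rng.uniform(*k_rng)
    for x in range(1, n_terms + 1):
        beta_x = rng.uniform(*beta_rng)
        mu_x = rng.uniform(*mu_rng)
        k_x = rng.uniform(*k_rng)
        up = (x - 1) * prev_beta + prev_k    # rate of the jump x - 1 -> x
        down = x * mu_x                      # rate of the jump x -> x - 1
        log_term += math.log(up / down)
        prev_beta, prev_k = beta_x, k_x
    return log_term / n_terms

# Illustrative (assumed) ranges with <ln beta> < <ln mu>.
beta_rng, mu_rng, k_rng = (0.5, 1.5), (1.5, 2.5), (0.5, 1.5)
rate = empirical_log_growth(20_000, beta_rng, mu_rng, k_rng, seed=0)
expected = mean_log_uniform(*beta_rng) - mean_log_uniform(*mu_rng)
print(rate, expected)
```

A negative rate means the terms decay geometrically along almost every environment, so the series converges; swapping the two ranges makes the rate positive and the terms blow up.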

Note that \(ES<\infty \) if and only if \(\langle \frac{\lambda (x-1)}{\mu (x)}\rangle = \langle \lambda \rangle \langle \frac{1}{\mu }\rangle <1\), i.e., the fluctuations of S, even in the case of convergence, can be very high.

5.1.2 Random Nonstationary (Time Dependent) Environment

Assume that k(t) and \(\Delta =(\mu -\beta )(t)\) are stationary random processes on \((\Omega _{m},P_{m})\) and that k(t) is independent of \(\Delta \). For a fixed environment, i.e., fixed \(k(\cdot )\) and \(\Delta (\cdot )\), the equation for the first moment takes the form

Then

Assume that \(\frac{1}{\delta }\geqslant \Delta (\cdot ) \geqslant \delta >0\), \(\frac{1}{\delta }\geqslant k(\cdot ) \geqslant \delta >0\). Then

Thus, for large t, the process \(m_{1}(t,\omega _{m})\) is exponentially close to the stationary process

Assume now that k(t) and \(\Delta (s)\) are independent stationary processes and \(-\Delta (t)=V(x(t))\), where x(t), \(t\geqslant 0\), is a Markov Chain with continuous time and symmetric geometry on the finite set X. (One can also consider x(t), \(t \geqslant 0\), as a diffusion process on a compact Riemannian manifold with Laplace–Beltrami generator \(\Delta \).) Let

Then

The operator \(\mathcal {L}\) is symmetric in \(L^2(X)\) with dot product \((f,g)=\sum \limits _{x \in X}f(x)\overline{g(x)}\). Thus, \(H=\mathcal {L}+V\) is also symmetric and has real spectrum \(0>-\delta \geqslant \lambda _{0}>\lambda _{1}\geqslant \cdots \) with orthonormal eigenfunctions \(\psi _{0}(x)>0\), \(\psi _{1}(x)\), \(\ldots \) The inequality \(\lambda _{0}\leqslant -\delta <0\) follows from our assumption on \(\Delta (\cdot )\).

The solution of Eq. 5.1 is given by

Now, we can calculate \(\langle \tilde{m}_{1}(t,x,\omega _{m})\rangle \).

Here, \(\pi (x)=\frac{1}{N}=\frac{{\mathbbm {1}}(x)}{N}\) is the invariant distribution of \(x_{s}\). Then

5.1.3 Galton–Watson Process with Immigration in Random Environment Given by Markov Chain

Let x(t) be an ergodic Markov chain on the finite space X and let \(\beta (x),\mu (x),k(x)\), the rates of duplication, annihilation, and immigration, be functions from X to \(R^{+}\), and, therefore, functions of t and \(\omega _{e}\). The process (n(t), x(t)) is a Markov chain on \(\mathbb {Z}_{+}^{1}\times X\).

Let a(x, y), \(x,y \in X\), \(a(x,y) \ge 0\), \(\sum \limits _{y \in X} a(x,y) = 1\) for all \(x \in X\), be the transition function for x(t). Consider \(E_{(n,x)}f(n(t),x(t))= u(t,(n,x))\). Then

We obtain the backward Kolmogorov equation

Example. Two-state random environment.

Here, x(t) indicates which of the two possible states, \(\{1,2\}\), the process is in at time t. The birth, mortality, and immigration rates are different for each state: \(\beta _1\) and \(\beta _2\), \(\mu _1\) and \(\mu _2\), and \(k_1\) and \(k_2\). For a process in state 1, the rate of switching to state 2 is \(\alpha _1\) at all times, and \(\alpha _2\) is the rate of the reverse switch. This creates the two-state random environment. Let G be the generator for the process, as shown in Fig. 3.
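
The two-state model can be simulated directly. The following is a minimal sketch using the standard Gillespie (exponential waiting time) construction, with per-particle birth and death rates \(\beta _x\), \(\mu _x\), immigration rate \(k_x\), and switching rate \(\alpha _x\) as described above. The function name and all numerical rate values are hypothetical.

```python
import random


def simulate_two_state(beta, mu, k, alpha, n0=0, x0=1, t_max=50.0, seed=0):
    """Simulate (n(t), x(t)) up to time t_max; returns a list of (t, n, x).

    beta, mu, k, alpha are dicts keyed by the environment state (1 or 2).
    """
    rng = random.Random(seed)
    t, n, x = 0.0, n0, x0
    path = [(t, n, x)]
    while True:
        # Competing exponential clocks: birth, death, immigration, switch.
        rates = [beta[x] * n, mu[x] * n, k[x], alpha[x]]
        total = sum(rates)
        t += rng.expovariate(total)
        if t >= t_max:
            break
        u = rng.random() * total
        if u < rates[0]:
            n += 1                      # birth
        elif u < rates[0] + rates[1]:
            n -= 1                      # death (only possible when n >= 1)
        elif u < rates[0] + rates[1] + rates[2]:
            n += 1                      # immigration
        else:
            x = 2 if x == 1 else 1      # environment switch
        path.append((t, n, x))
    return path


# Hypothetical rates with mu_x > beta_x in both states.
beta = {1: 0.5, 2: 1.0}
mu = {1: 1.0, 2: 1.5}
k = {1: 2.0, 2: 0.5}
alpha = {1: 0.3, 2: 0.7}

path = simulate_two_state(beta, mu, k, alpha)
print(len(path), path[-1])
```

Each entry of `path` records the state immediately after a jump, so the population size between two consecutive entries is constant.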

The following theorem gives sufficient conditions for the ergodicity of the process (n(t), x(t)).

Theorem 5.2

Assume that for some constants \(\delta > 0\) and \(A>0\)

\[\mu (x)-\beta (x)\geqslant \delta ,\qquad k(x)\leqslant A\qquad \text{for all } x\in X.\]

Then, the process (n(t), x(t)) is an ergodic Markov chain and the invariant measure of this process has exponential moments, i.e., \(E\, e^{\lambda n(t)} \le c_0 <\infty \) if \(\lambda \le \lambda _0\) for appropriate (small) \(\lambda _0 > 0\).

Proof

We take as a Lyapunov function \(f(n,x) = n\).

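Then, \(G f(n(t),x(t)) = (\beta _x - \mu _x)n + k_x\); the environment-switching part of G contributes nothing because f does not depend on x. Under the assumptions of the theorem, \(Gf \le -\delta n + A\). So for sufficiently large n, specifically \(n > \frac{A}{\delta }\), we have \(Gf \le 0\). \(\square \)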
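
The drift computation in the proof can be verified mechanically. The sketch below applies the generator to \(f(n,x)=n\) term by term and compares the result with \((\beta _x-\mu _x)n+k_x\). It assumes the two-state environment of the example, with switching rate \(\alpha _x\) out of state x; the function name and the rate values are hypothetical, chosen so that \(\mu _x-\beta _x\ge \delta =0.5\) and \(k_x\le A=2\).

```python
def generator_apply(f, n, x, beta, mu, k, alpha):
    """Apply the generator G of (n(t), x(t)) to f at the point (n, x).

    Assumes a two-state environment: from state x the environment
    switches to the other state at rate alpha[x].
    """
    other = 2 if x == 1 else 1
    birth = beta[x] * n * (f(n + 1, x) - f(n, x))
    death = mu[x] * n * (f(n - 1, x) - f(n, x))
    immigration = k[x] * (f(n + 1, x) - f(n, x))
    switch = alpha[x] * (f(n, other) - f(n, x))
    return birth + death + immigration + switch


def lyapunov(n, x):
    """Lyapunov function f(n, x) = n; it ignores the environment state."""
    return float(n)


beta = {1: 0.5, 2: 1.0}
mu = {1: 1.0, 2: 1.5}
k = {1: 2.0, 2: 0.5}
alpha = {1: 0.3, 2: 0.7}
delta, big_a = 0.5, 2.0

threshold = big_a / delta
for x in (1, 2):
    for n in (0, 1, 4, 5, 10, 100):
        drift = generator_apply(lyapunov, n, x, beta, mu, k, alpha)
        print(x, n, drift, n > threshold)
```

For every n above the threshold \(A/\delta \) the printed drift is negative, which is the property the Lyapunov argument relies on.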

5.2 Models with Immigration and Migration in a Random Environment

For this most general case, we have migration and a nonstationary environment in space and time. The rates of duplication, mortality, and immigration at time t and position \(x \in \mathbb {Z}^d\) are given by \(\beta (t,x)\), \(\mu (t,x)\), and k(t, x). As in the above models, immigration is not influenced by the presence of other particles; we also assume that \(\delta _{1}\le k(t,x)\le \delta _{2}\), \(0< \delta _{1}< \delta _{2} < \infty \). The rate of migration is given by \(\kappa \), with the process governed by the probability kernel a(z), which describes transitions from x to \(x+z\), \(z\in \mathbb {Z}^{d}\).

If n(t, x) is the number of particles at \(x \in \mathbb {Z}^{d}\) at time t, then \(n(t+dt,x)=n(t,x)+\xi (t,x)\), where

For the first moment, \(m_{1}(t,x)=E[n(t,x)]\), we can write

and so, defining, as above, \(\mathcal {L}_{a}(f(t,x))=\sum \limits _{z\ne 0}a(z)[f(t,x+z)-f(t,x)]\), we obtain

We consider two cases. The first is where the duplication and mortality rates are equal, \(\beta (t,x)=\mu (t,x)\). Because the immigration rate is bounded away from zero, we find that the expected population size at each site tends to infinity. In the second case, to simplify, we consider \(\beta (t,x)\) and \(\mu (t,x)\) to be stationary in time, and assume that the mortality rate exceeds the duplication rate everywhere by at least a fixed positive amount. Here, we show that the interplay between the excess mortality and the positive immigration results in a finite positive expected population size at each site.

5.2.1 Case I

If \(\beta (t,x)=\mu (t,x)\)

By taking the Fourier transform and then inverting it, we obtain

where

As \(t \rightarrow \infty \), \(\delta _{1}t\rightarrow \infty \). Thus, when the birth rate equals the death rate, the expected population at each site \(x\in \mathbb {Z}^{d}\) will go to infinity as \(t\rightarrow \infty \).
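
The linear growth in Case I can be illustrated with a short computation. The sketch below rests on several assumptions that are not part of the text: the first-moment equation is taken to be \(\partial m_{1}/\partial t=\kappa \mathcal {L}_{a}m_{1}+k\) when \(\beta =\mu \), the lattice \(\mathbb {Z}^{d}\) is replaced by a one-dimensional ring of 20 sites, the kernel is the symmetric nearest-neighbour one, the immigration rate depends only on x, and time is discretized with an explicit Euler scheme. All names and values are hypothetical.

```python
def step(m, k, kappa, a, dt):
    """One explicit Euler step of dm/dt = kappa * L_a m + k on a ring (beta = mu)."""
    size = len(m)
    new = []
    for x in range(size):
        # (L_a m)(x) = sum over z of a(z) * [m(x + z) - m(x)], periodic in x.
        migration = sum(w * (m[(x + z) % size] - m[x]) for z, w in a.items())
        new.append(m[x] + dt * (kappa * migration + k[x]))
    return new


size, kappa, dt, t_max = 20, 1.0, 0.01, 30.0
a = {-1: 0.5, 1: 0.5}                 # symmetric nearest-neighbour kernel
delta_1, delta_2 = 0.2, 1.0
# Site-dependent immigration rate with delta_1 <= k(x) <= delta_2.
k = [delta_1 + (delta_2 - delta_1) * (x % 5) / 4 for x in range(size)]
m = [1.0] * size                      # one particle per site at t = 0

for _ in range(int(round(t_max / dt))):
    m = step(m, k, kappa, a, dt)

print(min(m), max(m), 1.0 + delta_1 * t_max, 1.0 + delta_2 * t_max)
```

Since the migration term only averages neighbouring values, every site gains at least \(\delta _{1}\,dt\) and at most \(\delta _{2}\,dt\) per step, so the computed first moment stays between \(1+\delta _{1}t\) and \(1+\delta _{2}t\) and grows without bound.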

5.2.2 Case II

Here, \(\beta (t,x)\ne \mu (t,x)\). For simplicity, we assume that only the immigration rate, k(t, x), is not stationary in time. In other words, we assume that the duplication and mortality rates are stationary in time and depend only on position: \(\beta (t,x)=\beta (x)\), \(\mu (t,x)=\mu (x)\), and \(\mu (x)-\beta (x)\geqslant \delta _{1}>0\). From Eq. 5.3, we get

This has the solution

where \(q(t-s,x,y)\) is the solution of

Using the Feynman–Kac formula, we obtain

with p(t, x, y) as in Eq. 5.4.

Finally

Thus, when \(\mu (x)-\beta (x)\) is bounded away from zero, \(\lim \limits _{t\rightarrow \infty }m_{1}(t,x)\) is bounded between 0 and \(\frac{\Vert k\Vert _{\infty }}{\delta _{1}}\), so this limit exists and is finite.
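
The bound in Case II can be illustrated in the same spirit. The sketch below assumes that the first-moment equation reads \(\partial m_{1}/\partial t=\kappa \mathcal {L}_{a}m_{1}-(\mu (x)-\beta (x))m_{1}+k(t,x)\), and replaces \(\mathbb {Z}^{d}\) by a ring of 20 sites with a nearest-neighbour kernel. The nonstationary immigration rate `immigration`, the profile `excess` of \(\mu (x)-\beta (x)\), and the explicit Euler discretization are hypothetical choices made only for illustration.

```python
import math


def immigration(t, x):
    """Hypothetical nonstationary immigration rate with 0.2 <= k(t, x) <= 1.0."""
    return 0.6 + 0.4 * math.sin(0.3 * t + x)


def step(m, t, excess, kappa, a, dt):
    """One Euler step of dm/dt = kappa * L_a m - (mu - beta)(x) * m + k(t, x)."""
    size = len(m)
    new = []
    for x in range(size):
        migration = sum(w * (m[(x + z) % size] - m[x]) for z, w in a.items())
        rhs = kappa * migration - excess[x] * m[x] + immigration(t, x)
        new.append(m[x] + dt * rhs)
    return new


size, kappa, dt, t_max = 20, 1.0, 0.01, 60.0
a = {-1: 0.5, 1: 0.5}                  # symmetric nearest-neighbour kernel
delta_1 = 0.25
# mu(x) - beta(x), bounded below by delta_1 at every site.
excess = [delta_1 + 0.5 * (x % 3) for x in range(size)]
k_sup = 1.0                            # sup norm of the immigration rate
m = [1.0] * size
t = 0.0

for _ in range(int(round(t_max / dt))):
    m = step(m, t, excess, kappa, a, dt)
    t += dt

print(min(m), max(m), k_sup / delta_1)
```

With these choices the computed first moment remains positive and never exceeds \(\Vert k\Vert _{\infty }/\delta _{1}\), even though the immigration rate keeps oscillating in time.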

References

Sevast’yanov, R.A.: Limit theorems for branching stochastic processes of special form. Theory Probab. Appl. 3, 321–331 (1957)

Karlin, S., McGregor, J.: Ehrenfest urn models. J. Appl. Probab. 2, 352–376 (1965)

Kurtz, T.G.: Solutions of ordinary differential equations as limits of pure jump Markov processes. J. Appl. Probab. 7, 49–58 (1970)

Kurtz, T.G.: Limit theorems for sequences of jump Markov processes approximating ordinary differential equations. J. Appl. Probab. 8, 344–356 (1971)

Feller, W.: An Introduction to Probability Theory and Its Applications, vol. I, 3rd edn. Wiley, New York (1968)

Feng, Y., Molchanov, S.A., Whitmeyer, J.: Random walks with heavy tails and limit theorems for branching processes with migration and immigration. Stoch. Dyn. 12(1), 1150007 (2012). 23 pages

© 2017 Springer International Publishing AG

Han, D., Molchanov, S., Whitmeyer, J. (2017). Population Processes with Immigration. In: Panov, V. (eds) Modern Problems of Stochastic Analysis and Statistics. MPSAS 2016. Springer Proceedings in Mathematics & Statistics, vol 208. Springer, Cham. https://doi.org/10.1007/978-3-319-65313-6_16

DOI: https://doi.org/10.1007/978-3-319-65313-6_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-65312-9

Online ISBN: 978-3-319-65313-6

eBook Packages: Mathematics and Statistics (R0)