Abstract

The success of learning analytics in improving higher education students’ learning has yet to be proven systematically and on the basis of rigorous empirical findings. Only a few works have tried to address this, and the evidence they present is limited. This chapter presents a critical reflection on empirical evidence linking study success and learning analytics (LA), focussing on how LA have been successful in facilitating the continuation and completion of students’ university courses. Literature review contributions to LA were analysed first, followed by individual experimental case studies containing research findings and empirical conclusions. Findings are reported in three areas: positive evidence on the use of LA to support study success, insufficient evidence on the use of LA, and the link between LA and intervention measures to facilitate study success.

1 Introduction

In its broadest sense, study success includes the successful completion of a first degree in higher education; in its narrowest sense, it includes the successful completion of individual learning tasks (Sarrico, 2018). The essence here is to capture any positive learning satisfaction, improvement or experience during learning. While some of the more common and broader definitions of study success include terms such as retention, persistence and graduation rate, the opposing terms include withdrawal, dropout, noncompletion, attrition and failure (Mah, 2016).

Learning analytics (LA) show promise for enhancing study success in higher education (Pistilli & Arnold, 2010). For example, LA may help address the fact that students often enter higher education academically unprepared and with unrealistic perceptions and expectations of the academic competencies their studies require. Both the inability to cope with academic requirements and unrealistic perceptions and expectations of university life, in particular with regard to academic competencies, are important factors for leaving the institution prior to degree completion (Mah, 2016). Yet Sclater and Mullan (2017) reported on the difficulty of isolating the influence of LA, as they are often used in addition to wider initiatives to improve student retention and academic achievement.

However, the success of LA in improving higher education students’ learning has yet to be proven systematically and on the basis of rigorous empirical findings. Only a few works have tried to address this, and the evidence they present is limited (Suchithra, Vaidhehi, & Iyer, 2015). This chapter aims to form a critical reflection on empirical evidence demonstrating how LA have been successful in facilitating study success in the continuation and completion of students’ university courses.

2 Current Empirical Findings on Learning Analytics and Study Success

There have been a number of research efforts, some focussing on various LA tools and others on practices and policies relating to the adoption of learning analytics systems at the school, higher education and national levels. Still, there is a lack of significant evidence on the successful use of LA for improving students’ learning in higher education that would support large-scale adoption of LA (Buckingham Shum & McKay, 2018).

Papamitsiou and Economides (2014) conducted an extensive systematic literature review of empirical evidence on the benefits of LA and the related field of educational data mining (EDM). They classified the findings from case studies focussing on student behaviour modelling, prediction of performance, increased self-reflection and self-awareness, and prediction of dropout and retention. Their findings suggest that large volumes of educational data are available and that pre-existing algorithmic methods are applied to them. Further, LA enable the development of precise learner models for guiding adaptive and personalised interventions. Additional strengths of LA include the identification of critical instances of learning, learning strategies, navigation behaviours and patterns of learning (Papamitsiou & Economides, 2014). Another systematic review of LA was conducted by Kilis and Gülbahar (2016), who conclude from the reviewed studies that log data of students’ behaviour need to be enriched with additional information (e.g. actual time spent on learning, semantically rich information) to better support learning processes. Hence, LA for supporting study success require rich data about students’ efforts and performance as well as detailed information about their psychological, behavioural and emotional states.

As further research is conducted in the field of LA, the overriding research question of this chapter remains: Is it possible to identify a link between LA and related prevention and intervention measures to increase study success in international empirical studies?

2.1 Research Methodology

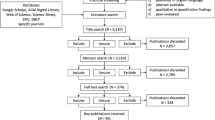

Our critical reflection on empirical evidence linking study success and LA was conducted in 2017. Literature review contributions to LA were analysed first, followed by individual experimental case studies containing research findings, empirical conclusions and evidence. Search terms combined “learning analytics” with “study success”, “retention”, “dropout”, “prevention”, “course completion” and “attrition”. We searched international databases including Google Scholar, ACM Digital Library, Web of Science, ScienceDirect, ERIC and DBLP. Additionally, we searched articles published in journals such as Journal of Learning Analytics, Computers in Human Behavior, Computers & Education, Australasian Journal of Educational Technology and British Journal of Educational Technology. A total of 6220 articles were located; after duplicates were removed, 3163 remained. The abstracts of all of these papers were screened against the following inclusion criteria: studies had to (a) be situated in the higher education context, (b) be published between 2013 and 2017, (c) be published in English, (d) present either qualitative or quantitative analyses and findings and (e) be peer-reviewed. In the first round, 374 key studies were identified; these were then narrowed down to 46 on the basis of the substantiality of their empirical evidence. A detailed elaboration of the empirical evidence from these studies will form our upcoming work; in this chapter, we provide a general overview of the identified empirical evidence.
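
The screening steps described above can be sketched as a small filtering pipeline. This is a minimal, hypothetical Python illustration, not the tooling used in the study: the `Record` fields, the title-based duplicate check and the toy records are assumptions. Only the five inclusion criteria come from the text; the later narrowing from 374 to 46 studies was a judgement about the substantiality of evidence and is not modelled. In the study itself, deduplication removed 6220 - 3163 = 3057 records.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One search hit. Field names are hypothetical, chosen for illustration."""
    title: str
    year: int
    language: str
    higher_education: bool  # situated in the higher education context
    empirical: bool         # presents qualitative or quantitative findings
    peer_reviewed: bool


def deduplicate(records):
    """Keep the first record for each case- and whitespace-normalised title."""
    seen, unique = set(), []
    for record in records:
        key = " ".join(record.title.lower().split())
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


def meets_criteria(record):
    """Inclusion criteria (a) to (e) from the methodology."""
    return (
        record.higher_education            # (a) higher education context
        and 2013 <= record.year <= 2017    # (b) published 2013 to 2017
        and record.language == "en"        # (c) published in English
        and record.empirical               # (d) qualitative or quantitative findings
        and record.peer_reviewed           # (e) peer-reviewed
    )


def screen(records):
    """Return the number of unique records and the records that pass screening."""
    unique = deduplicate(records)
    return len(unique), [r for r in unique if meets_criteria(r)]


hits = [
    Record("LA and retention", 2015, "en", True, True, True),
    Record("LA and  Retention", 2015, "en", True, True, True),  # duplicate title
    Record("Dropout in secondary schools", 2016, "en", False, True, True),
    Record("An early LA study", 2011, "en", True, True, True),
]
n_unique, included = screen(hits)
print(n_unique, len(included))  # 3 1
```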

2.2 Results of the Critical Reflection

This section is divided into (1) positive evidence on the use of LA to support study success, (2) insufficient evidence on the use of LA to support study success and (3) the link between LA and intervention measures to facilitate study success.

2.2.1 Positive Evidence on the Use of Learning Analytics to Support Study Success

Some of the positive empirical evidence presented by Sclater and Mullan (2017) includes the following. At the University of Nebraska-Lincoln, the 4-year graduation rate increased by 3.8% in the 4 years after LA was adopted. At Columbus State University College, Georgia, course completion rates rose by 4.2%. Similarly, at the University of New England, New South Wales, the dropout rate decreased from 18% to 12%. Studies with control groups yielded the following results: at Marist College, there was a significant improvement in final grades (6%); at Strayer University, Virginia, interventions for the identified at-risk students resulted in a 5% increase in attendance, a 12% increase in passing and an 8% decrease in dropout. At the University of South Australia, 549 of 730 at-risk students were contacted; 66% of them passed, with an average GPA of 4.29, whereas 52% of the un-contacted at-risk students passed, with an average GPA of 3.14. At Purdue University, Indiana, courses using the university’s predictive analytics system (Course Signals) consistently yielded more B and C grades than D and F grades over two semesters. A 15% increase in recruitment and a 15% increase in retention were reported as a result (Tickle, 2015).
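
To make the University of South Australia figures concrete, the short Python sketch below derives a few descriptive contrasts from them. It is an illustration only: the function name is hypothetical, counts of passing students are reconstructed from rounded percentages and are therefore approximate, and the contrasts are neither a significance test nor a causal estimate. It also shows why the gap between the two pass rates is best stated in percentage points (66% vs 52% is a 14-point gap, or roughly 27% higher in relative terms).

```python
def compare_groups(n_at_risk, n_contacted, pass_contacted, pass_uncontacted):
    """Descriptive contrasts between contacted and un-contacted at-risk students.

    Pass rates are proportions (0.66 means 66%). Pass counts are rebuilt from
    rounded percentages, so they are approximate.
    """
    n_uncontacted = n_at_risk - n_contacted
    return {
        "uncontacted": n_uncontacted,
        "approx_passed_contacted": round(pass_contacted * n_contacted),
        "approx_passed_uncontacted": round(pass_uncontacted * n_uncontacted),
        # absolute gap between the two pass rates, in percentage points
        "gap_points": round((pass_contacted - pass_uncontacted) * 100, 1),
        # relative gap, as a percentage of the un-contacted pass rate
        "gap_relative_pct": round((pass_contacted / pass_uncontacted - 1) * 100, 1),
    }


stats = compare_groups(n_at_risk=730, n_contacted=549,
                       pass_contacted=0.66, pass_uncontacted=0.52)
gpa_gap = round(4.29 - 3.14, 2)
print(stats)
print(gpa_gap)  # 1.15
```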

We also identified positive evidence on the use of LA to support study success through the use of assessment data, engagement indicators, online platform data and the use of personalised feedback, as follows.

Predictive Analytics Using Assessment Data

Hlosta, Zdrahal, and Zendulka (2017) found that, on average, a student who had not submitted their assignment had a 95% probability of not finishing the course. The data used comprised (1) students’ demographic information (e.g. age, gender), (2) students’ interactions with the virtual learning environment (VLE), (3) students’ date of registration and (4) a flag indicating whether the student had submitted the assignment. This information is used to extract learning patterns from which students’ progress through the course can be predicted. The assessment of the first assignment provides a critical indicator for the remainder of the course, and the experiments showed that this method can successfully predict at-risk students.
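
A figure such as the 95% probability above is a conditional frequency: among students who did not submit, the share who did not finish. The Python sketch below computes that estimate from past course records. It is a hypothetical illustration, not Hlosta et al.’s Ouroboros method, which builds predictive models from the current course presentation; the `StudentRecord` fields merely mirror the four data groups listed above, and only the submission flag and the outcome are used by this estimator.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StudentRecord:
    # Hypothetical fields mirroring the four data groups in the text.
    age_band: str           # (1) demographic information
    vle_clicks: int         # (2) interactions with the VLE
    registration_day: int   # (3) registration date relative to course start
    submitted: bool         # (4) assignment submission flag
    completed: bool         # outcome, known for past students only


def p_not_completed_given_not_submitted(records):
    """Empirical estimate of P(not completed | assignment not submitted)."""
    non_submitters = [r for r in records if not r.submitted]
    if not non_submitters:
        return None  # undefined when every student submitted
    dropped = sum(1 for r in non_submitters if not r.completed)
    return dropped / len(non_submitters)


# Toy history: 20 non-submitters (19 did not finish) and 30 submitters.
history = (
    [StudentRecord("25-35", 12, -3, submitted=False, completed=False)] * 19
    + [StudentRecord("25-35", 40, -10, submitted=False, completed=True)]
    + [StudentRecord("18-25", 150, -20, submitted=True, completed=True)] * 30
)
print(p_not_completed_given_not_submitted(history))  # 0.95
```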

Predictive Analytics Using Engagement Indicators

Information about students’ behaviour that becomes available during a course can be used to predict a decrease in engagement indicators at the end of a learning sequence. Bote-Lorenzo and Gomez-Sanchez (2017) found that three engagement indicators derived from the main tasks students carried out in a MOOC, namely watching lectures, solving finger exercises and submitting assignments, yielded very good predictions of whether engagement in the course would decrease. The authors suggest that their predictive method would be useful for detecting disengaging students in digital learning environments.
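
The prediction target in this kind of study can be made concrete with a small Python sketch: for each of the three indicators, a label records whether its value fell from one learning sequence (e.g. a week or chapter) to the next. The naive persistence baseline shown here, which forecasts a decrease whenever the indicator has just decreased, is a hypothetical stand-in and not the predictive models used by Bote-Lorenzo and Gomez-Sanchez (2017); the indicator names are likewise assumptions.

```python
INDICATORS = ("lectures_watched", "exercises_solved", "assignments_submitted")


def decreased(prev, curr):
    """True for each indicator whose value fell between two learning sequences."""
    return {name: curr[name] < prev[name] for name in INDICATORS}


def persistence_forecast(history):
    """Naive baseline: predict a decrease in the next sequence for an indicator
    if it already decreased between the last two observed sequences."""
    if len(history) < 2:
        return {name: False for name in INDICATORS}
    return decreased(history[-2], history[-1])


def is_disengaging(history):
    """Flag a student when any indicator is forecast to decrease."""
    return any(persistence_forecast(history).values())


weeks = [
    {"lectures_watched": 5, "exercises_solved": 8, "assignments_submitted": 1},
    {"lectures_watched": 3, "exercises_solved": 8, "assignments_submitted": 1},
]
print(persistence_forecast(weeks))
# {'lectures_watched': True, 'exercises_solved': False, 'assignments_submitted': False}
print(is_disengaging(weeks))  # True
```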

Predictive Analytics Using Digital Platform Data

Self-report data and digital learning system information (i.e. trace data) can be used to identify students at risk and in need of support, as demonstrated by Manai, Yamada, and Thorn (2016). For example, some self-report survey items measure non-cognitive factors that are indicative predictors of student outcomes, so that actionable insights can be drawn from only a few items. Their study applied rules such as the following: (1) if students show higher levels of fixed mindset and are at risk, a growth mindset is promoted by engaging them in growth mindset activities and by giving feedback that establishes high standards and assures them that they are capable of meeting them; (2) if students show higher levels of belonging uncertainty, group activities that facilitate building a learning community for all students in the classroom are provided; and (3) if students show low levels of math conceptual knowledge, scaffolding is provided during the use of the online learning platform. Similarly, Robinson, Yeomans, Reich, Hulleman, and Gehlbach (2016) applied natural language processing to unstructured text, and their experiment produced promising predictions of which students would successfully complete an online course.
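
The three if-then rules above can be sketched in Python as a mapping from a student’s indicators to targeted supports. This is a hypothetical illustration: the `Indicators` fields, the 0 to 1 score scale and the cut-off values are assumptions, since the text does not report how the indicators were scored or thresholded.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Indicators:
    # Hypothetical scores on a 0-1 scale, derived from a few survey items and trace data.
    fixed_mindset: float
    belonging_uncertainty: float
    math_conceptual_knowledge: float
    at_risk: bool


# Hypothetical cut-offs; the source does not report any thresholds.
HIGH = 0.7
LOW = 0.3


def targeted_supports(s):
    """Apply the three rules described by Manai, Yamada, and Thorn (2016)."""
    supports = []
    if s.at_risk and s.fixed_mindset >= HIGH:  # rule (1)
        supports.append("growth mindset activities and high-standards feedback")
    if s.belonging_uncertainty >= HIGH:  # rule (2), a class-wide activity
        supports.append("community-building group activities")
    if s.math_conceptual_knowledge <= LOW:  # rule (3)
        supports.append("scaffolding in the online platform")
    return supports


student = Indicators(fixed_mindset=0.8, belonging_uncertainty=0.2,
                     math_conceptual_knowledge=0.25, at_risk=True)
print(targeted_supports(student))
# ['growth mindset activities and high-standards feedback', 'scaffolding in the online platform']
```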

Personalised Feedback Leading to Learning Gains

In the intelligent support system studied by Grawemeyer et al. (2016), feedback is tailored to the student’s affective state. The affective state is derived from speech and interaction and is then used to determine the type of appropriate feedback and its presentation (interruptive or non-interruptive). Their results showed that students using the affect-aware environment were less bored and less off-task and achieved higher learning gains, indicating the potential positive impact of affect-aware intelligent support.
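
The selection step can be sketched in Python as a lookup from a detected affective state to a feedback type and a presentation mode. The affect labels, feedback types and mapping below are hypothetical and are not the rules used by Grawemeyer et al. (2016); the sketch also assumes that affect detection from speech and interaction has already happened upstream.

```python
from enum import Enum


class Affect(Enum):
    # Hypothetical labels assumed to come from an upstream affect detector.
    FLOW = "flow"
    CONFUSED = "confused"
    FRUSTRATED = "frustrated"
    BORED = "bored"


# affect -> (feedback type, presentation); None means no feedback is given.
RULES = {
    Affect.FLOW: (None, "non-interruptive"),  # avoid disturbing productive work
    Affect.CONFUSED: ("hint", "non-interruptive"),
    Affect.FRUSTRATED: ("encouragement", "interruptive"),
    Affect.BORED: ("reflection prompt", "interruptive"),
}


def choose_feedback(affect, on_task=True):
    """Return (feedback type, presentation) for the detected state."""
    if not on_task:
        # Off-task students get an interruptive prompt regardless of affect.
        return ("reflection prompt", "interruptive")
    return RULES[affect]


print(choose_feedback(Affect.CONFUSED))  # ('hint', 'non-interruptive')
print(choose_feedback(Affect.FLOW, on_task=False))  # ('reflection prompt', 'interruptive')
```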

2.2.2 Insufficient Evidence on the Use of LA to Support Study Success

The most recent review of learning analytics, published by Sclater and Mullan (2017), found the use of LA to be most concentrated in the United States, Australia and England. Most institutional LA initiatives are at an early stage and have not had sufficient time to produce concrete empirical evidence of their effectiveness (Ifenthaler, 2017a). Moreover, some of the most successful projects were in the US for-profit sector, and their findings are unpublished. The review conducted by Ferguson et al. (2016) presented the state of the art in the implementation of LA for education and training in Europe, the United States and Australia, which remains scarce. Specifically, there is relatively little information on whether LA improve teaching and learner support at universities, and problems with the evidence include a lack of geographical spread, gaps in knowledge (informal learning, workplace learning, ethical practice, lack of negative evidence), little evaluation of commercially available tools and a lack of attention to the learning analytics cycle (Ferguson & Clow, 2017).

Threats arising from LA include ethical issues, data privacy, the danger of over-analysis, which brings no benefits, and the overconsumption of resources (Slade & Prinsloo, 2013). Accordingly, several principles for privacy and ethics in LA have been proposed. They highlight the active role of students in their learning process, the temporary character of data, the incompleteness of the data on which learning analytics are executed, and transparency regarding data use as well as the purpose, analyses, access, control and ownership of the data (Ifenthaler & Schumacher, 2016; West, Huijser, & Heath, 2016). To address privacy concerns when adopting LA, Drachsler and Greller (2016) developed an eight-point checklist, based on expert workshops, that teachers, researchers, policymakers and institutional managers can apply to facilitate a trusted implementation of LA. The DELICATE checklist focusses on Determination, Explain, Legitimate, Involve, Consent, Anonymise, Technical aspects and External partners. However, empirical evidence on student perceptions of privacy principles related to learning analytics is still in its infancy and requires further investigation and best practice examples (Ifenthaler & Tracey, 2016).

Ferguson et al. (2016) documented a number of tools that have been implemented for education and training and raised several important points: (a) most LA tools are provided on the supply side by educational institutions rather than on the demand side in response to the needs of students and learners; (b) data visualisation tools are available but provide little help in advising on the steps learners should take to advance their studies and increase study success; and (c) evidence from formal validation and evaluation of the impact and success of LA tools is particularly lacking, although national policies in some European countries, such as Denmark, the Netherlands and Norway, and universities such as Nottingham Trent University, the Open University UK and Dublin City University have begun to create infrastructure to support policies for utilising LA and implementing LA systems. Hence, evidence on successful implementation and institution-wide practice is still limited (Buckingham Shum & McKay, 2018). Current policies for learning and teaching practices include developing LA that are supported by pedagogical models and accepted assessment and feedback practices. It is further suggested that policies for quality assessment and assurance include the development of robust quality assurance processes to ensure the validity and reliability of LA tools, as well as evaluation benchmarks for LA tools (Ferguson et al., 2016).

2.2.3 Link between Learning Analytics and Intervention Measures to Facilitate Study Success

Different LA methods are used to predict student dropout, such as predictive models and measures of student engagement with the virtual learning environment (VLE), which is a more reliable indicator than gender, race and income (Carvalho da Silva, Hobbs, & Graf, 2014; Ifenthaler & Widanapathirana, 2014). Significant predictors of dropout used in these methods include posting behaviour in forums, social network behaviour (Yang, Sinha, Adamson, & Rose, 2013), percentage of activities delivered, average grades, percentage of resources viewed and attendance, a set with which at-risk students were identified with 85% accuracy (Carvalho da Silva et al., 2014). Similarly, Nottingham Trent University uses several factors to signal student engagement (library use, card swipes into buildings, VLE use and electronic submission of coursework) and analyses progression and attainment in particular groups (Tickle, 2015). For example, if a student shows no engagement for 2 weeks, tutors receive an automatic email notification and are encouraged to open up a dialogue with the at-risk student. The system is intended to increase not only retention but also study performance. Prevention measures include pedagogical monitoring. The timeliness of the institution’s intervention is very important, including noticing signs of trouble and responding to them immediately (Tickle, 2015). One ethical question is whether students want an algorithm applied to their data to show that they are at risk of dropping out, thereby triggering intervention from their tutors (West et al., 2016).
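
The two-week rule described for Nottingham Trent University can be sketched in Python as follows. This is a hypothetical illustration under stated assumptions: the event format and source identifiers are invented, every listed source is assumed to count equally as engagement, a gap of exactly 14 days already triggers an alert, and sending the email to tutors is left out.

```python
from datetime import date, timedelta

# Engagement sources named in the text; identifiers are hypothetical.
SOURCES = {"library", "building_swipe", "vle", "e_submission"}


def last_engagement(events):
    """Latest counted engagement date per student from (student, source, day) events."""
    latest = {}
    for student, source, day in events:
        if source not in SOURCES:
            continue  # ignore signals the rule does not count
        if student not in latest or day > latest[student]:
            latest[student] = day
    return latest


def students_to_alert(roster, events, today, window=timedelta(weeks=2)):
    """Students with no counted engagement within `window`, including those never seen."""
    latest = last_engagement(events)
    return sorted(s for s in roster
                  if s not in latest or today - latest[s] >= window)


events = [
    ("s1", "vle", date(2017, 3, 1)),
    ("s2", "library", date(2017, 3, 13)),
    ("s2", "building_swipe", date(2017, 2, 20)),
]
print(students_to_alert(["s1", "s2", "s3"], events, today=date(2017, 3, 15)))
# ['s1', 's3']
```

Treating a student who never appears in the data as disengaged is a deliberate choice here, since a silent student is exactly the case the rule is meant to catch.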

LA are often discussed in connection with self-regulated learning. Self-regulated learning can be seen as a cyclical process, starting with a forethought phase including task analysis, goal setting, planning and motivational aspects (Ifenthaler, 2012). The actual learning occurs in the performance phase, i.e. focussing, applying task strategies, self-instruction and self-monitoring. The last phase contains self-reflection, as learners evaluate their outcomes against the goals they set previously. To close the loop, results from the third phase influence future learning activities (Zimmerman, 2002). Current findings show that self-regulated learning capabilities, especially revision, coherence, concentration and goal setting, are related to students’ expectations of support from LA systems (Gašević, Dawson, & Siemens, 2015; Schumacher & Ifenthaler, 2018b). For example, LA can help students, through adaptive and personalised recommendations, to better plan their learning towards specific goals (McLoughlin & Lee, 2010; Schumacher & Ifenthaler, 2018a). Other findings show that many LA systems focus on visualisations and outline descriptive information, such as time spent online, progress towards the completion of a course and comparisons with other students (Verbert, Manouselis, Drachsler, & Duval, 2012). Such LA features help with monitoring. However, to plan upcoming learning activities or to adapt current strategies, further recommendations based on students’ dispositions, previous learning behaviour, self-assessment results and learning goals are important (McLoughlin & Lee, 2010; Schumacher & Ifenthaler, 2018b). In sum, students may benefit from LA through personalised and adaptive support of their learning journey; however, further longitudinal and large-scale evidence is required to demonstrate the effectiveness of LA.
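
The descriptive indicators mentioned above (time spent online, progress towards course completion and comparison with other students) can be sketched in Python as a small summary function. It covers only the monitoring side; planning support would additionally need recommendations based on dispositions, prior behaviour, self-assessment and goals. The function and parameter names and the percentile definition (share of peers with strictly less online time) are assumptions.

```python
from bisect import bisect_left


def dashboard_summary(session_minutes, completed_units, total_units, peer_minutes):
    """Descriptive indicators of the kind many LA dashboards display.

    session_minutes: minutes of each online session for this student
    peer_minutes: total online minutes of each other student on the course
    """
    minutes_online = sum(session_minutes)
    progress = completed_units / total_units if total_units else 0.0
    ranked = sorted(peer_minutes)
    if ranked:
        # Percentage of peers with strictly less online time than this student.
        percentile = 100 * bisect_left(ranked, minutes_online) / len(ranked)
    else:
        percentile = None
    return {
        "minutes_online": minutes_online,
        "progress": round(progress, 2),
        "peer_percentile": percentile,
    }


print(dashboard_summary([30, 45, 25], completed_units=3, total_units=10,
                        peer_minutes=[50, 80, 120, 200]))
# {'minutes_online': 100, 'progress': 0.3, 'peer_percentile': 50.0}
```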

3 Conclusion

This critical reflection on current empirical findings indicates that wider adoption of LA systems is needed, as is work towards standards for LA that can be integrated into any learning environment and provide reliable at-risk student prediction as well as personalised prevention and intervention strategies for supporting study success. In particular, personalised learning environments are increasingly demanded and valued in higher education institutions to create a tailored learning package optimised for each individual learner based on their personal profile, which could contain information such as their geo-social demographic backgrounds, their previous qualifications, how they engaged in the recruitment journey, their learning activities and strategies, affective states and individual dispositions, as well as tracking information on their searches and interactions with digital learning platforms (Ifenthaler, 2015). Still, more work on ethical and privacy guidelines is required to support the implementation of LA at higher education institutions (Ifenthaler & Tracey, 2016), and many questions remain open about how LA can support learning, teaching and the design of learning environments (Ifenthaler, 2017b; Ifenthaler, Gibson, & Dobozy, 2018). Another field requiring rigorous empirical research and precise theoretical foundations is the link between data analytics and assessment (Ifenthaler, Greiff, & Gibson, 2018). Further, as LA are of growing interest for higher education institutions, it is important to understand students’ expectations of LA features (Schumacher & Ifenthaler, 2018a) in order to align them with learning theory and technical possibilities before implementing them (Marzouk et al., 2016). As higher education institutions move towards adopting LA systems, change management strategies and questions of capabilities are key to successful implementation (Ifenthaler, 2017a).

The preliminary findings obtained in this critical reflection suggest that a considerable number of sophisticated LA tools utilise effective techniques for predicting study success and identifying students at risk of dropping out.

Limitations of this study include the difficulty of comparing results across studies, as they used various techniques and algorithms and addressed different research questions and aims. Although much empirical evidence is documented in these papers, many studies are still works in progress, experimental studies or conducted at a very small scale. The papers discuss how LA can be used to predict study success, but the subsequent steps, such as discussions with students and the approaches teachers can take to address at-risk students, are under-documented. Questions raised in this regard include, for example: (a) Will students be able to respond positively and proactively when informed that their learning progress is hindered or has stalled? (b) Will instructors be able to influence at-risk students positively so that they re-engage with their studies? (c) In addition, ethical dimensions regarding descriptive, predictive and prescriptive learning analytics need to be addressed with further empirical studies and linked to study success indicators.

However, large-scale evidence that LA are effective in actually retaining students on courses is still lacking, and we are currently examining the remaining key studies thoroughly to obtain a clearer and more exact picture of how much empirical evidence there is that LA can support study success. Methods and advice can also serve as a guide for helping students stay on the course after they have been identified as at risk. One suggestion is to leverage existing learning theory by designing studies with clear theoretical frameworks and by connecting LA research with decades of previous research in education. Further documented evidence indicates that LA cannot be used as a one-size-fits-all approach but require personalisation, customisation and adaptation (Gašević, Dawson, Rogers, & Gašević, 2016; Ifenthaler, 2015).

Our future work also includes mapping learning theories onto LA, which is currently lacking: there is little literature on variables that serve as key indicators of interaction and study success in digital learning environments. Hence, while the field of learning analytics produces ever more diverse perspectives, solutions and definitions, we expect analytics for learning to form a novel approach for guiding the implementation of data- and analytics-driven educational support systems based on thorough educational and psychological models of learning, as well as for producing rigorous empirical research with a specific focus on the processes of learning and the complex interactions and idiosyncrasies within learning environments.

References

Bote-Lorenzo, M., & Gomez-Sanchez, E. (2017). Predicting the decrease of engagement indicators in a MOOC. In International Conference on Learning Analytics & Knowledge, Vancouver, Canada.

Buckingham Shum, S., & McKay, T. A. (2018). Architecting for learning analytics. Innovating for sustainable impact. Educause Review, 53(2), 25–37.

Carvalho da Silva, J., Hobbs, D., & Graf, S. (2014). Integrating an at-risk student model into learning management systems. In Nuevas Ideas en Informatica Educativa.

Drachsler, H., & Greller, W. (2016). Privacy and analytics - It’s a DELICATE issue. A checklist for trusted learning analytics. Paper presented at the Sixth International Conference on Learning Analytics & Knowledge, Edinburgh, UK.

Ferguson, R., Brasher, A., Clow, D., Cooper, A., Hillaire, G., Mittelmeier, J., … Vuorikari, R. (2016). Research evidence on the use of learning analytics – Implications for education policy. In R. Vuorikari & J. Castano Munoz (Eds.). Joint Research Centre Science for Policy Report.

Ferguson, R., & Clow, D. (2017). Where is the evidence? A call to action for learning analytics. In International Conference on Learning Analytics & Knowledge, Vancouver, Canada.

Gašević, D., Dawson, S., Rogers, T., & Gašević, D. (2016). Learning analytics should not promote one size fits all: The effects of instructional conditions in predicting academic success. Internet and Higher Education, 28, 68–84.

Gašević, D., Dawson, S., & Siemens, G. (2015). Let’s not forget: Learning analytics are about learning. TechTrends, 59(1), 64–71. https://doi.org/10.1007/s11528-014-0822-x

Grawemeyer, B., Mavrikis, M., Holmes, W., Gutierrez-Santos, S., Wiedmann, M., & Rummel, N. (2016). Determination of off-task/on-task behaviours. In Proceedings of the Sixth International Conference on Learning Analytics & Knowledge, Edinburgh, UK.

Hlosta, M., Zdrahal, Z., & Zendulka, J. (2017). Ouroboros: Early identification of at-risk students without models based on legacy data. In Proceedings of the Seventh International Conference on Learning Analytics & Knowledge, Vancouver, Canada.

Ifenthaler, D. (2012). Determining the effectiveness of prompts for self-regulated learning in problem-solving scenarios. Journal of Educational Technology & Society, 15(1), 38–52.

Ifenthaler, D. (2015). Learning analytics. In J. M. Spector (Ed.), The SAGE encyclopedia of educational technology (Vol. 2, pp. 447–451). Thousand Oaks, CA: Sage.

Ifenthaler, D. (2017a). Are higher education institutions prepared for learning analytics? TechTrends, 61(4), 366–371. https://doi.org/10.1007/s11528-016-0154-0

Ifenthaler, D. (2017b). Learning analytics design. In L. Lin & J. M. Spector (Eds.), The sciences of learning and instructional design. Constructive articulation between communities (pp. 202–211). New York, NY: Routledge.

Ifenthaler, D., Gibson, D. C., & Dobozy, E. (2018). Informing learning design through analytics: Applying network graph analysis. Australasian Journal of Educational Technology, 34(2), 117–132. https://doi.org/10.14742/ajet.3767

Ifenthaler, D., Greiff, S., & Gibson, D. C. (2018). Making use of data for assessments: Harnessing analytics and data science. In J. Voogt, G. Knezek, R. Christensen, & K.-W. Lai (Eds.), International handbook of IT in primary and secondary education (2nd ed., pp. 649–663). New York, NY: Springer.

Ifenthaler, D., & Schumacher, C. (2016). Student perceptions of privacy principles for learning analytics. Educational Technology Research and Development, 64(5), 923–938. https://doi.org/10.1007/s11423-016-9477-y

Ifenthaler, D., & Tracey, M. W. (2016). Exploring the relationship of ethics and privacy in learning analytics and design: Implications for the field of educational technology. Educational Technology Research and Development, 64(5), 877–880. https://doi.org/10.1007/s11423-016-9480-3

Ifenthaler, D., & Widanapathirana, C. (2014). Development and validation of a learning analytics framework: Two case studies using support vector machines. Technology, Knowledge and Learning, 19(1-2), 221–240. https://doi.org/10.1007/s10758-014-9226-4

Kilis, S., & Gülbahar, Y. (2016). Learning analytics in distance education: A systematic literature review. Paper presented at the 9th European Distance and E-learning Network (EDEN) Research Workshop, Oldenburg, Germany.

Mah, D.-K. (2016). Learning analytics and digital badges: Potential impact on student retention in higher education. Technology, Knowledge and Learning, 21(3), 285–305. https://doi.org/10.1007/s10758-016-9286-8

Manai, O., Yamada, H., & Thorn, C. (2016). Real-time indicators and targeted supports: Using online platform data to accelerate student learning. In International Conference on Learning Analytics & Knowledge, UK.

Marzouk, Z., Rakovic, M., Liaqat, A., Vytasek, J., Samadi, D., Stewart-Alonso, J., … Nesbit, J. C. (2016). What if learning analytics were based on learning science? Australasian Journal of Educational Technology, 32(6), 1–18. https://doi.org/10.14742/ajet.3058

McLoughlin, C., & Lee, M. J. W. (2010). Personalized and self regulated learning in the Web 2.0 era: International exemplars of innovative pedagogy using social software. Australasian Journal of Educational Technology, 26(1), 28–43.

Papamitsiou, Z., & Economides, A. (2014). Learning analytics and educational data mining in practice: A systematic literature review of empirical evidence. Educational Technology & Society, 17(4), 49–64.

Pistilli, M. D., & Arnold, K. E. (2010). Purdue signals: Mining real-time academic data to enhance student success. About Campus: Enriching the Student Learning Experience, 15(3), 22–24.

Robinson, C., Yeomans, M., Reich, J., Hulleman, C., & Gehlbach, H. (2016). Forecasting student achievement in MOOCs with natural language processing. In International Conference on Learning Analytics & Knowledge, UK.

Sarrico, C. S. (2018). Completion and retention in higher education. In J. C. Shin & P. Teixeira (Eds.), Encyclopedia of international higher education systems and institutions. Dordrecht, The Netherlands: Springer.

Schumacher, C., & Ifenthaler, D. (2018a). Features students really expect from learning analytics. Computers in Human Behavior, 78, 397–407. https://doi.org/10.1016/j.chb.2017.06.030

Schumacher, C., & Ifenthaler, D. (2018b). The importance of students’ motivational dispositions for designing learning analytics. Journal of Computing in Higher Education, 30, 599. https://doi.org/10.1007/s12528-018-9188-y

Sclater, N., & Mullan, J. (2017). Learning analytics and student success – Assessing the evidence. Bristol, UK: JISC.

Slade, S., & Prinsloo, P. (2013). Learning analytics: Ethical issues and dilemmas. American Behavioral Scientist, 57(10), 1510–1529. https://doi.org/10.1177/0002764213479366

Suchithra, R., Vaidhehi, V., & Iyer, N. E. (2015). Survey of learning analytics based on purpose and techniques for improving student performance. International Journal of Computer Applications, 111(1), 22–26.

Tickle, L. (2015). How universities are using data to stop students dropping out. The Guardian. Retrieved from https://www.theguardian.com/guardian-professional/2015/jun/30/how-universities-are-using-data-to-stop-students-dropping-out

Verbert, K., Manouselis, N., Drachsler, H., & Duval, E. (2012). Dataset-driven research to support learning and knowledge analytics. Educational Technology & Society, 15(3), 133–148.

West, D., Huijser, H., & Heath, D. (2016). Putting an ethical lens on learning analytics. Educational Technology Research and Development, 64(5), 903–922. https://doi.org/10.1007/s11423-016-9464-3

Yang, D., Sinha, T., Adamson, D., & Rose, C. (2013). “Turn on, tune in, drop out”: Anticipating student dropouts in massive open online courses. In Neural Information Processing Systems.

Zimmerman, B. J. (2002). Becoming a self-regulated learner: An overview. Theory Into Practice, 41(2), 64–70.

Acknowledgements

The authors acknowledge the financial support by the Federal Ministry of Education and Research of Germany (BMBF, project number 16DHL1038).

Copyright information

© 2019 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Ifenthaler, D., Mah, DK., Yau, J.YK. (2019). Utilising Learning Analytics for Study Success: Reflections on Current Empirical Findings. In: Ifenthaler, D., Mah, DK., Yau, J.YK. (eds) Utilizing Learning Analytics to Support Study Success. Springer, Cham. https://doi.org/10.1007/978-3-319-64792-0_2

DOI: https://doi.org/10.1007/978-3-319-64792-0_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-64791-3

Online ISBN: 978-3-319-64792-0
