Abstract

Zooarchaeological analyses of carcass transport behavior require methodologies that control for the effects of density-mediated attrition on skeletal element abundances. Taphonomic observations suggest that based on differences in bone structure and density, large mammal skeletal elements can be divided into a high-survival subset of skeletal elements that more accurately reflects what was originally deposited, and a low-survival subset that does not. In this chapter we explore the applicability of this model of bone survivorship across 43 Quaternary large mammal assemblages from Africa (n = 33) and Eurasia (n = 10). We demonstrate that attrition explains a substantial degree of variation in low-survival element abundances, with nearly all low-survival elements affected. Because attrition severely overprints any potential signature of differential bone transport by humans, it follows that only the high-survival elements of large mammals are suitable for making behavioral inferences from skeletal element abundances. This supports predictions made from actualistic taphonomic observations.

Keywords

- Bone density

- Density-mediated attrition

- Differential survivorship

- High-survival elements

- Skeletal part profiles

- Taphonomy

1 Introduction

Anthropologists have long observed that when hunter-gatherers acquire large vertebrate prey they are faced with decisions about which body parts to transport for later processing and consumption and which to leave behind at the kill site (Abe 2005; Bartram 1993; Bunn et al. 1988; O’Connell et al. 1988, 1990; White 1952; Yellen 1977). These decisions are routinely evaluated in archaeological contexts through analysis of skeletal part frequencies (Faith and Gordon 2007; Lyman 1994, 2008). However, it is widely recognized that due to destructive taphonomic processes (e.g., carnivore destruction, trampling, sediment compaction, and leaching), the skeletal parts recovered by archaeologists frequently do not reflect what was originally discarded by human foragers (Cleghorn and Marean 2004, 2007; Lupo 1995, 2001; Lyman 1984, 1985, 1993, 1994; Marean and Cleghorn 2003; Marean and Frey 1997; Marean and Spencer 1991). In many cases, the survival potential of a skeletal element or element portion is mediated by its structural density (Lam and Pearson 2005; Lam et al. 2003; Lyman 1994).

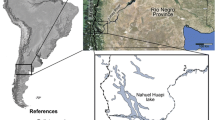

Given the importance of skeletal part data to inferring carcass transport decisions, it is imperative that zooarchaeological methodology controls for destructive processes. Several methods have been developed to extract meaningful behavioral signals from bone assemblages subject to attrition (reviewed in Cleghorn and Marean 2004). These include Stiner’s (2002) anatomical region profile (but see Pickering et al. 2003), Rogers’ (2000) analysis of bone counts by maximum likelihood, and the high- and low-survival model of skeletal element survivorship developed by Marean and Frey (1997) and Marean and Cleghorn (2003; see also Cleghorn and Marean 2004, 2007). The latter proposes that skeletal parts can be divided into a high-survival subset that accurately reflects what was originally deposited and a low-survival subset that does not. This taphonomic model is increasingly implemented by zooarchaeologists, particularly in the context of Paleolithic faunal assemblages (e.g., Faith 2007a; Faith et al. 2009; Marean and Kim 1998; Saladié et al. 2011; Schoville and Otárola-Castillo 2014; Thompson 2010; Thompson and Henshilwood 2011; Yeshurun et al. 2007; Yravedra and Domínguez-Rodrigo 2009). This study tests the applicability of the high- and low-survival model of skeletal element survivorship through examination of large mammal skeletal part data across 33 African faunal assemblages and 10 Eurasian assemblages (Table 6.1, Fig. 6.1), emphasizing how low-survival element abundances vary as a function of attrition. The broad applicability of this taphonomic model is illustrated by drawing from assemblages accumulated by both humans and non-human bone collectors (e.g., carnivores, raptors) and subject to varied taphonomic histories.

2 Density-Mediated Attrition

Parts of a complete carcass may be removed or destroyed via a number of taphonomic pathways (Lyman 1994). Density-mediated attrition refers to those processes that result in differential survivorship patterned according to bone density (Lyman 1993). For both large and small-bodied mammals, known or suspected density-mediated processes include carnivore attrition, human consumption of low-density parts, post-depositional crushing, fluvial winnowing, and diagenetic processes, among others (Lyman 1994). Bone densities vary between elements and also between portions of the same element, with some of the most dramatic intra-element differences found in the long-bones (Lam and Pearson 2005; Lam et al. 2003). For large mammals, long-bone density typically patterns according to five major regions. The least dense are the two epiphyseal ends, which are largely composed of cancellous bone overlain by a thin wall of cortical bone. The exterior cortical bone thickens as it approaches the middle shaft of the long bone, creating portions of intermediate density at the near-epiphyses and highest density at the mid-shaft. Because skeletal elements have different shapes and structural functions, the precise location of these density transitions differs by element and taxon, as do their absolute densities (Carlson and Pickering 2004; Lam et al. 1999; Lyman 1994; Stahl 1999).

For long-bones of large mammals, and especially ungulates, there is also a strong relationship between bone density and the distribution of within-bone nutrients. The dense long-bone shafts contain marrow, which is a concentrated source of fat of high nutritive value to humans and carnivores (Blumenschine and Madrigal 1993). Fat is also present within the cancellous portions, but in the form of bone grease that must be extracted either through comminution and cooking (Lupo and Schmitt 1997) or through direct consumption and digestion within the gut (Marean 1991). It has been shown in experimental, naturalistic, and ethnoarchaeological settings that carnivores will consume long-bone ends and other low-density elements in order to access the bone grease, and that the higher-density shafts are therefore more likely to survive (Bartram and Marean 1999; Faith et al. 2007; Gidna et al. 2015; Marean and Spencer 1991; Marean et al. 1992). This actualistic work provides a point of departure for understanding the processes that may lead to the differential representation of skeletal elements and element portions in the zooarchaeological record (Marean et al. 2004; Pickering et al. 2003; Yravedra and Domínguez-Rodrigo 2009).

Variation in exactly how often denser elements survive may arise from human treatment of long bones prior to deposition. For example, if bones have been cooked and the grease extracted, low-density trabecular bone may be less attractive to carnivore scavengers (Lupo 1995; Thompson and Lee-Gorishti 2007). Depositional environment also plays a role; where bones are exposed to episodic wetting and drying or heating and cooling, dense long-bone shafts may fragment more readily than cancellous bone, particularly when the bones are already highly mineralized (Conard et al. 2008). This can create a situation in which spongy elements or element portions may preserve in a more identifiable state, but only after already being depleted through density-mediated processes prior to mineralization. Thus, survivorship may be best compared between sites within similar depositional settings.

3 Large Mammal Skeletal Element Survivorship

Building on observations from experimental, ethnographic, and archaeological bone assemblages (Bartram and Marean 1999; Binford et al. 1988; Blumenschine 1988; Blumenschine and Marean 1993; Brain 1981; Lupo 1995; Marean and Frey 1997; Pickering et al. 2003), Marean and Cleghorn (2003) propose that skeletal parts of large-bodied mammals can be divided into a high-survival and low-survival subset on the basis of their physical properties (Fig. 6.2). The high-survival subset includes elements with portions that are high in density and with thick cortical walls lacking cancellous bone. These include long-bones (for ungulates: femur, tibia, metatarsal, humerus, radius, metacarpal), the cranium, and the mandible. Although carcass transport decisions are structured by a range of variables that may be difficult to discern from large bone accumulations (Binford 1978; Lupo 2001; O’Connell et al. 1988, 1990; Schoville and Otárola-Castillo 2014), the abundances of high-survival elements in archaeological assemblages are thought to be a reasonably accurate portrayal of what was originally discarded by human foragers. Thus, they offer the best option for analysis of carcass transport decisions. In contrast, the low-survival subset (including vertebrae, ribs, pelves, scapulae, and ulnae) is characterized by bones with thin cortical walls and low-density, grease-rich cancellous portions. Small compact bones (e.g., carpals and tarsals) and phalanges are considered low-survival elements given that these tend to be readily swallowed by carnivores, particularly in the case of smaller ungulates (Marean 1991). The sensitivity of low-survival elements to density-mediated taphonomic processes, including carnivore destruction, means that these bones may not accurately reflect what was originally discarded at a site, rendering them inappropriate for analyses of carcass transport behavior.

If the distinction between high- and low-survival elements is robust and broadly applicable, this places a considerable limitation on our ability to infer carcass transport behaviors from skeletal element data by limiting faunal analysts to a relatively small number of primarily appendicular elements (Fig. 6.2). Of perhaps greater importance, it also implies that zooarchaeological analyses that incorporate low-survival elements in behavioral interpretations are methodologically problematic. Given the significance of understanding this methodological issue, our aim here is to assess the following question: how sensitive are low-survival elements to attrition?

4 Methods

Zooarchaeological measures of skeletal element abundances must be designed to quantify those elements or element portions least affected by density-mediated attrition, bearing in mind that any individual fragment will only be included in such counts if it is identifiable at minimum to skeletal part. Methods for quantifying skeletal parts vary between researchers and have different potentials for capturing the densest parts, such as long bone shafts (Marean et al. 2001; Thompson and Marean 2009).

The following analyses make use of skeletal element data compiled from 33 African Quaternary faunal assemblages from six sites: Porc-Epic Cave in Ethiopia (Assefa 2006), the Amboseli Airstrip Hyena Den in Kenya (Faith 2007b; Hill 1989), and Die Kelders Cave 1 (Marean et al. 2000), Blombos Cave (Thompson and Henshilwood 2011), Pinnacle Point Cave 13B (Thompson 2010), and Boomplaas Cave (Faith 2013) in South Africa. We also consider ten Eurasian Quaternary assemblages from two sites: Kobeh Cave in Iran (Marean and Kim 1998) and Mezmaiskaya Cave in Russia (Cleghorn 2006) (Table 6.1, Fig. 6.1). These sites were selected because they fall within similar time ranges (Late Pleistocene through the Holocene: 126,000 years ago to present), are all from cave settings (except the Amboseli Airstrip Hyena Den), and had minimum number of elements (MNE) values calculated by researchers using similar methods (see below). Skeletal part data are combined for both Body Size 1–2 (0–84 kg) and 3–4 (84–900 kg) mammals (Brain 1981) to improve sample sizes; all reported assemblages from these sites with a total MNE of less than 45 are excluded from this study.

Our emphasis on Africa and Eurasia does not reflect an underlying expectation that the taphonomic model of skeletal element survivorship is only applicable in these regions, but rather the importance of ensuring analytical comparability across samples. Our analyses require that (1) all identifiable ungulate long-bone shaft fragments are included in MNE calculations; (2) MNE counts are aggregated by body size class (after Brain 1981); and (3) MNE counts for long-bones are provided for different portions (ends and shafts). These criteria, particularly points one and two, are most commonly met for African assemblages, where the exceptional diversity of Bovidae (>80 extant African species), which are the dominant large mammal in nearly all African Quaternary sites, means that zooarchaeologists working on these faunas assign all ungulate long-bone shaft fragments to one of several standard body size classes rather than attempting identifications at lower taxonomic resolution. This contrasts with the situation in many other contexts, where, even when shaft fragments are considered, there is often a greater focus on assigning them to genus or species (e.g., Grayson and Delpech 2003; Morin 2004), in which case smaller and more fragmentary specimens may not be included in published skeletal inventories. All of the MNE counts used here are derived using the fraction summation approach or by counting overlaps on fragments traced into standardized bone templates (Marean et al. 2001). Long-bones are divided into five portions: proximal and distal ends, proximal and distal shafts, and a midshaft (Fig. 6.2).

To evaluate the sensitivity of low-survival elements to destructive processes, it is necessary to provide an index of attrition for each assemblage. We use the percentage of long-bone end destruction as a proxy for attrition here. Following the taphonomic model of bone survivorship, long-bones are classified as high-survival elements because their mid-shafts are dense and lack cancellous bone; to reiterate, this is only applicable if all long bone portions are incorporated into the MNE counts, including the shaft portions. In contrast, long-bone ends, defined by Marean and Spencer (1991) as the most proximal and distal portions that include the epiphyses and metaphyses (Fig. 6.2), are preferentially destroyed by attritional processes and can be considered low-survival portions of the long-bones (e.g., Binford et al. 1988; Blumenschine and Marean 1993; Marean and Spencer 1991; Marean et al. 1992; Pickering et al. 2003; Yravedra and Domínguez-Rodrigo 2009). Because long-bones include both low- and high-survival portions, the loss of long-bone ends provides a reasonable measure of attrition. Assuming that long-bones are transported intact, one can expect to recover two ends for every long-bone in the absence of attrition; the loss of proximal and distal ends reflects destructive taphonomic processes. For example, consider a hypothetical bone assemblage with a total MNE of 50 femora and a MNE of 25 ends. Given that 50 femora are present, in the absence of attrition one would expect 100 ends (50 proximal and 50 distal) to have initially been present. The recovery of only 25 implies that 75% have been destroyed. 
This simple relationship requires that shafts consistently preserve in an identifiable state, although extreme fragmentation may render them less identifiable or pose challenges when factoring identifiable shaft fragments into MNE calculations (e.g., if the exact position of a fragment on a standardized bone template cannot be determined or if no quantifiable landmarks are preserved). The percentage of long-bone end attrition is calculated here as:

$$\%\,\text{end destruction} = \left(1 - \frac{\text{MNE}_{\text{ends}}}{2 \times \text{MNE}_{\text{long-bones}}}\right) \times 100$$

For the hypothetical femur example, this gives (1 − 25/100) × 100 = 75%.
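The hypothetical femur example can be checked with a few lines of Python. This is only a sketch of the arithmetic described in the text; the function name `percent_end_destruction` and its parameter names are illustrative assumptions, not part of the original study.

```python
def percent_end_destruction(mne_elements: int, mne_ends: int) -> float:
    """Percentage of long-bone ends missing relative to the number expected.

    Assumes long-bones were transported intact, so each element counted in
    the MNE is expected to contribute two ends (one proximal, one distal).
    """
    if mne_elements <= 0:
        raise ValueError("mne_elements must be positive")
    expected_ends = 2 * mne_elements
    return 100.0 * (1 - mne_ends / expected_ends)


# Worked example from the text: 50 femora, 25 ends recovered.
femur_example = percent_end_destruction(mne_elements=50, mne_ends=25)
print(femur_example)  # 75.0
```

A value of 0 would indicate that every expected end was recovered, the situation the text describes as the absence of attrition.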

To the extent that destruction of long-bone ends provides a reliable measure of attrition in a given assemblage, we predict that higher attrition should be reflected by decreasing abundances of low-survival elements. We examine these relationships for all low-survival elements combined and for individual elements, the abundances of which are quantified using various derivatives of the MNE. These include MAU (minimal animal units: MNE normalized by the number of times the element occurs in the skeleton) and %MAU (MAU of an element divided by maximum MAU value observed in an assemblage and scaled to 100), as described further in Lyman (2008).
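The MAU and %MAU definitions given above can be sketched as follows. The MNE values and the per-skeleton counts in this example are invented for illustration only, and the function names are assumptions rather than anything used in the study.

```python
def mau(mne: float, count_in_skeleton: int) -> float:
    """MAU: MNE normalized by how often the element occurs in one skeleton."""
    return mne / count_in_skeleton


def percent_mau(mau_by_element: dict) -> dict:
    """%MAU: each MAU divided by the assemblage maximum, scaled to 100."""
    max_mau = max(mau_by_element.values())
    return {name: 100.0 * value / max_mau
            for name, value in mau_by_element.items()}


# Hypothetical assemblage: (MNE, times the element occurs in one skeleton).
hypothetical_counts = {
    "femur": (20, 2),
    "tibia": (16, 2),
    "scapula": (6, 2),
}
mau_values = {name: mau(mne, n) for name, (mne, n) in hypothetical_counts.items()}
pct = percent_mau(mau_values)
print(mau_values)  # {'femur': 10.0, 'tibia': 8.0, 'scapula': 3.0}
print(pct)         # {'femur': 100.0, 'tibia': 80.0, 'scapula': 30.0}
```

Because %MAU is scaled to the most abundant element, at least one element in every assemblage takes the value 100.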

5 Results

Skeletal part data for the 43 assemblages examined here are reported in Table 6.1. For all long-bones, the highest MNE counts are derived from shafts (mid-shafts or near-epiphyses), which are consistently greater than those potentially derived from ends; levels of long-bone end destruction range from 34 to 89% (Table 6.2). Relative abundances of low-survival elements (MAU) range from 14 to 52%, consistently less than the 65% (15 low-survival elements divided by 23 elements total) that would be expected of a case in which all high- and low-survival elements are evenly represented.
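The 65% benchmark follows from simple arithmetic: if all 23 elements had the same MAU, the 15 low-survival elements would account for 15/23 of the summed MAU. A minimal sketch, using placeholder element labels (`low_1`, `high_1`, and so on) that are assumptions for illustration rather than the actual element list:

```python
def low_survival_share(mau_by_element: dict, low_survival: set) -> float:
    """Percentage of summed MAU contributed by low-survival elements."""
    total = sum(mau_by_element.values())
    low = sum(v for name, v in mau_by_element.items() if name in low_survival)
    return 100.0 * low / total


# Even representation: every element has the same MAU (here, 1.0).
low_names = {f"low_{i}" for i in range(1, 16)}     # 15 low-survival elements
high_names = {f"high_{i}" for i in range(1, 9)}    # 8 high-survival elements
even_assemblage = {name: 1.0 for name in low_names | high_names}

expected_share = low_survival_share(even_assemblage, low_names)
print(round(expected_share))  # 65
```

Observed shares below this benchmark indicate that low-survival elements are underrepresented relative to an evenly represented skeleton.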

Excluding the Blombos Cave assemblages, for which crania and mandibles were not quantified, we observe significant inverse correlations between long-bone end destruction and the abundance of low-survival elements relative to the total for all elements (MAU) for Size 1–2 (Spearman’s rho: r_s = −0.655, p < 0.001, df = 23) and Size 3–4 mammals (r_s = −0.650, p = 0.022, df = 10) (Fig. 6.3). After removing the DK1 Size 1–2 outlier (Fig. 6.3), high coefficients of determination (Pearson’s r²: Size 1–2 = 0.445, Size 3–4 = 0.610) imply that a substantial amount of variance (44.5–61.0%) in the abundance of low-survival elements can be explained by attrition of long-bone ends; when attrition is high, low-survival elements are rare.
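For readers who want to see how a rank correlation of this kind is obtained, the sketch below computes Spearman's rho as the Pearson correlation of average ranks, using only the standard library. The five data pairs are invented for illustration and are not values from Table 6.1 or Table 6.2; p-values are not computed here.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))


# Hypothetical assemblages: % end destruction vs. % low-survival elements.
end_destruction = [34, 50, 62, 75, 89]
low_survival_pct = [52, 40, 41, 25, 14]
rho = spearman_rho(end_destruction, low_survival_pct)
print(round(rho, 3))  # -0.9
```

A negative rho, as in this toy example, corresponds to the pattern reported in the text: assemblages with more end destruction have proportionally fewer low-survival elements.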

To explore the effects of attrition on individual elements, Table 6.3 reports correlation coefficients between %end destruction and the abundance of individual elements (%MAU). For 12 of the 15 low-survival elements, we observe significant negative relationships, meaning that these elements consistently decline in abundance as long-bone end destruction increases. The exceptions include the astragalus, small tarsals, and carpals. Among the high-survival elements, only the humerus (r_s = −0.404, p = 0.013) exhibits a significant correlation.

6 Discussion

Our analyses demonstrate that the destruction of long-bone ends predicts the relative abundance of low-survival elements. When all low-survival elements are considered together (Fig. 6.3), the strong correlations suggest that attritional processes severely overprint any potential signature of differential bone transport. Similar negative relationships are observed for nearly all low-survival elements considered individually (Table 6.3). Together, these results provide archaeological support for the high- and low-survival model of skeletal element survivorship (see also Bartram and Marean 1999; Marean and Frey 1997; Marean and Kim 1998; Marean et al. 2000). Based on experimental, naturalistic, and ethnoarchaeological observations (Blumenschine and Marean 1993; Lupo 1995, 2001; Marean and Spencer 1991; Marean et al. 1992; see also the reviews in Cleghorn and Marean 2007; Pickering et al. 2003), we are confident that the relationship between the destruction of long-bone ends and low-survival element abundance observed here is due to density-mediated attritional processes. Carnivore destruction is a likely candidate, as it is well-known to produce similar patterns (Blumenschine and Marean 1993; Cleghorn and Marean 2007; Lupo 1995; Marean and Spencer 1991; Marean et al. 1992), and carnivore toothmarks are observed across most of the archaeological assemblages (Assefa 2006; Cleghorn 2006; Faith 2007b, 2013; Marean et al. 2000; Marean and Kim 1998; Thompson 2010; Thompson and Henshilwood 2011). Other density-mediated processes (e.g., sediment compaction, chemical leaching) are likely to have also contributed.

Fig. 6.3 Bivariate scatter plots illustrating the relationship between %long-bone end destruction and the %abundance of low-survival elements (in MAU) for Size 1–2 (left) and Size 3–4 (right) mammals. Solid lines indicate ordinary least squares regression, with the Die Kelders Cave Layer 11 Size 1–2 outlier (marked by an arrow) excluded from calculation

Our analysis of the relationship between long-bone end destruction and the abundance of individual elements reveals several exceptions that do not fit the expected pattern (Table 6.3). Among low-survival elements, these are the astragalus, carpals, and smaller tarsals. These are considered low-survival elements (Cleghorn and Marean 2004, 2007; Marean and Cleghorn 2003) because they can be swallowed whole by carnivores following human discard (Marean 1991), although their abundances are not predicted by long-bone end destruction (Table 6.3). This may imply that this taphonomic process was not universal across assemblages, or that these elements remain identifiable even after post-depositional attrition. An unexpected result is the significant correlation between epiphyseal destruction and %MAU for the humerus (Table 6.3). Due to the presence of a high-density portion that resists attritional processes (the mid-shaft), we would have expected its abundance to vary independently of attrition. One potential explanation is that high attrition renders this element less identifiable or less quantifiable (in MNE) than other high-survival counterparts. At Die Kelders Cave 1 and Boomplaas Cave, data are available on the frequency of long-bone shaft fragments with right-angle breaks, an indicator of post-depositional fragmentation (Marean et al. 2000; Villa and Mahieu 1991). Across assemblages from these two sites, there is a strong correlation between epiphyseal destruction and the frequency of long-bones with right-angle breaks (r_s = 0.722, p = 0.004). This implies that assemblages with high attrition, and therefore fewer identified humeri, are also subject to more intense post-depositional fragmentation. 
In turn, this might pose analytical challenges with respect to identifying fragments of this element or recognizing landmarks needed to facilitate MNE calculations (using the fraction summation approach) or confidently establish the position of a fragment on a standard bone template.

These exceptions to the high- and low-survival model of bone survivorship, although few, suggest that there may be cases in which some high-survival elements do not accurately reflect what was originally discarded by human foragers and some low-survival elements do. The approach developed here—examining the association between epiphyseal deletion and bone survivorship—offers one means of identifying and controlling for these potential exceptions in other faunal assemblages.

On the whole, our results support the view, based on actualistic work, that low-survival elements are not suitable for interpretations of carcass transport behavior by past human foragers. Exceptions may be made for assemblages subject to exceptionally low attrition, in which case all elements deposited by human foragers are likely to survive. Provided that long-bones are transported intact and that shaft fragments are quantified, such a case is easily recognized by the absence of long-bone end destruction. However, we expect this taphonomic scenario to be exceptionally rare in the archaeological record. It is certainly not evident across any of the assemblages examined here, where the lowest level of long-bone end deletion is still a substantial 34.1% (Table 6.2). Even for cases where long-bone end attrition is modest, we cannot reliably determine which low-survival elements disappeared (see Rogers 2000 for a maximum likelihood approach for tackling this issue). Because of this, we recommend that whenever an assemblage has been subject to attrition, analyses of carcass transport strategies focus on the high-survival subset.

How applicable are our results? While the focus of our analysis is on African and Eurasian late Quaternary faunal assemblages, there is ample reason to believe that the patterns documented here would be evident in zooarchaeological assemblages from other time periods and regions (Marean et al. 2004). Large-bodied bone-crunching taxa (e.g., hyaenids, canids, ursids, and some felids) capable of producing similar patterns are found throughout the continents. In addition, while carnivore bone destruction may be the most well-studied density-mediated taphonomic process (Cleghorn and Marean 2007), it is hardly the only one (Lyman 1994). For example, taphonomic evidence from Boomplaas Cave, which provides 10 of the 43 assemblages examined here, indicates a complex history of human, carnivore, and raptor accumulation throughout its >65 ky sequence (Faith 2013), with some assemblages showing abundant evidence for carnivore bone destruction in the form of toothmarks (e.g., OCH Size 1–2: 55% of long-bone mid-shaft fragments lacking dry-bone breaks) and others showing none (e.g., BLD Size 3–4). Despite this taphonomic variability, we observe a highly significant relationship between long-bone end destruction and the abundance of low-survival elements across the 10 Boomplaas Cave assemblages (r_s = −0.827, p = 0.003), but no relationship between long-bone end destruction and toothmark abundances (% of fragments with a toothmark: r_s = −0.383, p = 0.275; data from Faith 2013). This implies that low-survival elements track attrition caused by a range of taphonomic processes, not just carnivore activity, though it would be worthwhile to explore similar patterns in contexts lacking bone-crunching carnivores. 
At Boomplaas Cave, the high frequency of right-angle fractures on long-bone shaft fragments (from 19.7 to 61.9%) implies substantial post-depositional fragmentation, perhaps due to a combination of sediment compaction, leaching, and burning, which may also account for the attrition of low-survival elements and long-bone ends at this site. It follows that because a range of taphonomic processes contribute to preferential destruction of low-survival elements, we can expect the patterns documented here to be evident in other contexts.

7 Conclusions

This chapter adds to the existing body of archaeological, ethnoarchaeological, and actualistic data supporting a distinction between a subset of high-survival elements that resists destructive processes and a low-survival subset that does not (Cleghorn and Marean 2004, 2007; Marean and Cleghorn 2003; Marean and Frey 1997; Marean and Spencer 1991). Just how sensitive are low-survival elements to attrition? At least based on the assemblages examined here, the answer is unequivocal: very sensitive. Attrition explains much of the variation in low-survival element abundances, with nearly all low-survival elements affected. We strongly recommend that unless evidence to the contrary can be provided (requiring evaluation on a case-by-case basis), low-survival elements should be excluded from zooarchaeological analyses of carcass transport behavior; due to destructive processes, their abundances in archaeological sites are a poor reflection of carcass transport behavior by people.

References

Abe, Y. (2005). Hunting and butchery patterns of the Evenki in Northern Transbaikalia, Russia. Unpublished Ph.D. dissertation, Stony Brook University, Stony Brook, New York.

Assefa, Z. (2006). Faunal remains from Porc-Epic: Paleoecological and zooarchaeological investigations from a middle stone age site in southeastern Ethiopia. Journal of Human Evolution, 51, 50–75.

Bartram, L. E. (1993). Perspectives on skeletal part profiles and utility curves from Eastern Kalahari ethnoarchaeology. In J. Hudson (Ed.), From bones to behavior (pp. 115–137). Carbondale: Center for Archaeological Investigations at Southern Illinois University.

Bartram, L. E., & Marean, C. W. (1999). Explaining the “Klasies Pattern”: Kua Ethnoarchaeology, the Die Kelders middle stone age archaeofauna, long bone fragmentation and carnivore ravaging. Journal of Archaeological Science, 26, 9–29.

Binford, L. R. (1978). Nunamiut ethnoarchaeology. New York: Academic.

Binford, L. R., Mills, M. G. L., & Stone, N. M. (1988). Hyena scavenging behavior and its implications for interpretations of faunal assemblages from FLK22 (the Zinj Floor) at Olduvai Gorge. Journal of Anthropological Archaeology, 7, 99–135.

Blumenschine, R. J. (1988). An experimental model of the timing of hominid and carnivore influence on archaeological bone assemblages. Journal of Archaeological Science, 15, 483–502.

Blumenschine, R. J., & Madrigal, T. C. (1993). Variability in long bone marrow yields of East African ungulates and its zooarchaeological implications. Journal of Archaeological Science, 20, 555–587.

Blumenschine, R. J., & Marean, C. W. (1993). A carnivore’s view of archaeological bone assemblages. In J. Hudson (Ed.), From bones to behavior (pp. 273–300). Carbondale: Center for Archaeological Investigations at Southern Illinois University.

Brain, C. K. (1981). The hunters or the hunted? An introduction to African cave taphonomy. Chicago: University of Chicago Press.

Bunn, H. T., & Kroll, E. M. (1986). Systematic butchery by Plio-Pleistocene hominids at Olduvai Gorge, Tanzania. Current Anthropology, 27, 431–452.

Bunn, H. T., Bartram, L. E., & Kroll, E. M. (1988). Variability in bone assemblage formation from Hadza hunting, scavenging, and carcass processing. Journal of Anthropological Archaeology, 7, 412–457.

Carlson, K. J., & Pickering, T. R. (2004). Shape-adjusted bone mineral density measurements in baboons: Other factors explain primate skeletal element representation at Swartkrans. Journal of Archaeological Science, 31, 577–583.

Cleghorn, N. (2006). A zooarchaeological perspective on the Middle to Upper Paleolithic transition at Mezmaiskaya Cave, the Northern Caucasus, Russia. Unpublished Ph.D. dissertation, Stony Brook University, Stony Brook, New York.

Cleghorn, N., & Marean, C. W. (2004). Distinguishing selective transport and in situ attrition: A critical review of analytical approaches. Journal of Taphonomy, 2, 43–67.

Cleghorn, N., & Marean, C. W. (2007). The destruction of skeletal elements by carnivores: The growth of a general model for skeletal element destruction and survival in zooarchaeological assemblages. In T. R. Pickering, N. Toth, & K. Schick (Eds.), Breathing life into fossils: Taphonomic studies in honor of C.K. (Bob) Brain (pp. 37–66). Gosport: Stone Age Institute Press.

Conard, N. J., Walker, S. J., & Kandel, A. W. (2008). How heating and cooling and wetting and drying can destroy dense faunal elements and lead to differential preservation. Palaeogeography Palaeoclimatology Palaeoecology, 266, 236–245.

Faith, J. T. (2007a). Changes in reindeer body part representation at Grotte XVI, Dordogne, France. Journal of Archaeological Science, 34, 2003–2011.

Faith, J. T. (2007b). Sources of variation in carnivore tooth-mark frequencies in a modern spotted hyena (Crocuta crocuta) den assemblage, Amboseli Park, Kenya. Journal of Archaeological Science, 34, 1601–1609.

Faith, J. T. (2013). Taphonomic and paleoecological change in the large mammal sequence from Boomplaas Cave, Western Cape, South Africa. Journal of Human Evolution, 65, 715–730.

Faith, J. T., & Gordon, A. D. (2007). Skeletal element abundances in archaeofaunal assemblages: Economic utility, sample size, and assessment of carcass transport strategies. Journal of Archaeological Science, 34, 872–882.

Faith, J. T., Marean, C. W., & Behrensmeyer, A. K. (2007). Carnivore competition, bone destruction, and bone density. Journal of Archaeological Science, 34, 2025–2034.

Faith, J. T., Domínguez-Rodrigo, M., & Gordon, A. D. (2009). Long-distance carcass transport at Olduvai Gorge? A quantitative examination of Bed I skeletal element abundances. Journal of Human Evolution, 56, 247–256.

Gidna, A., Domínguez-Rodrigo, M., & Pickering, T. R. (2015). Patterns of bovid long limb bone modification created by wild and captive leopards and their relevance to the elaboration of referential frameworks for paleoanthropology. Journal of Archaeological Science: Reports, 2, 302–309.

Grayson, D. K., & Delpech, F. (2003). Ungulates and the Middle-to-Upper Paleolithic transition at Grotte XVI (Dordogne, France). Journal of Archaeological Science, 30, 1633–1648.

Hill, A. (1989). Bone modification by modern spotted hyenas. In R. Bonnichsen & M. H. Sorg (Eds.), Bone modification (pp. 169–178). Orono, ME: Center for the Study of the First Americans.

Lam, Y. M., & Pearson, O. M. (2005). Bone density studies and the interpretation of the faunal record. Evolutionary Anthropology, 14, 99–108.

Lam, Y. M., Chen, X., & Pearson, O. M. (1999). Intertaxonomic variability in patterns of bone density and the differential representation of bovid, cervid, and equid elements in the archaeological record. American Antiquity, 64, 343–362.

Lam, Y. M., Pearson, O. M., Marean, C. W., & Chen, X. (2003). Bone density studies in zooarchaeology. Journal of Archaeological Science, 30, 1701–1708.

Lupo, K. D. (1995). Hadza bone assemblage and hyena attrition: An ethnographic example of the influence of cooking and mode of discard on the intensity of scavenger ravaging. Journal of Anthropological Archaeology, 14, 288–314.

Lupo, K. D. (2001). Archaeological skeletal part profiles and differential transport: An ethnoarchaeological example from Hadza bone assemblages. Journal of Anthropological Archaeology, 20, 361–378.

Lupo, K. D., & Schmitt, D. N. (1997). Experiments in bone boiling: Nutritional returns and archaeological reflections. Anthropozoologica, 25–26, 137–144.

Lyman, R. L. (1984). Bone density and differential survivorship of fossil classes. Journal of Anthropological Archaeology, 3, 259–299.

Lyman, R. L. (1985). Bone frequencies: Differential transport, in situ destruction, and the MGUI. Journal of Archaeological Science, 12, 221–236.

Lyman, R. L. (1993). Density-mediated attrition of bone assemblages: New insights. In J. Hudson (Ed.), From bones to behavior (pp. 324–341). Carbondale: Center for Archaeological Investigations at Southern Illinois University.

Lyman, R. L. (1994). Vertebrate taphonomy. Cambridge: Cambridge University Press.

Lyman, R. L. (2008). Quantitative paleozoology. Cambridge: Cambridge University Press.

Marean, C. W. (1991). Measuring the postdepositional destruction of bone in archaeological assemblages. Journal of Archaeological Science, 18, 677–694.

Marean, C. W., & Cleghorn, N. (2003). Large mammal skeletal element transport: Applying foraging theory in a complex taphonomic system. Journal of Taphonomy, 1, 15–42.

Marean, C. W., & Frey, C. J. (1997). Animal bones from caves to cities: Reverse utility curves as methodological artifacts. American Antiquity, 62, 698–711.

Marean, C. W., & Kim, S. Y. (1998). Mousterian large-mammal remains from Kobeh Cave: Behavioral implications for Neanderthals and early modern humans. Current Anthropology, 39, S79–S113.

Marean, C. W., & Spencer, L. M. (1991). Impact of carnivore ravaging on zooarchaeological measures of element abundance. American Antiquity, 56, 645–658.

Marean, C. W., Spencer, L. M., Blumenschine, R. J., & Capaldo, S. D. (1992). Captive hyaena bone choice and destruction, the schlepp effect and Olduvai archaeofaunas. Journal of Archaeological Science, 19, 101–121.

Marean, C. W., Abe, Y., Frey, C. J., & Randall, R. C. (2000). Zooarchaeological and taphonomic analysis of the Die Kelders Cave 1 Layers 10 and 11 Middle Stone Age larger mammal fauna. Journal of Human Evolution, 38, 197–233.

Marean, C. W., Abe, Y., Nilssen, P. J., & Stone, E. C. (2001). Estimating the minimum number of skeletal elements (MNE) in zooarchaeology: A review and new image-analysis and GIS approach. American Antiquity, 66, 333–348.

Marean, C. W., Domínguez-Rodrigo, M., & Pickering, T. R. (2004). Skeletal element equifinality in zooarchaeology begins with method: The evolution and status of the “shaft critique”. Journal of Taphonomy, 2, 69–98.

Morin, E. (2004). Late Pleistocene population interactions in Western Europe and modern human origins: New insights based on the faunal remains from Saint-Césaire, Southwestern France. Unpublished Ph.D. dissertation, University of Michigan, Ann Arbor.

O’Connell, J. F., Hawkes, K., & Blurton-Jones, N. (1988). Hadza hunting, butchering, and bone transport and their archaeological implications. Journal of Anthropological Research, 44, 113–161.

O’Connell, J. F., Hawkes, K., & Blurton-Jones, N. (1990). Reanalysis of large mammal body part transport among the Hadza. Journal of Archaeological Science, 17, 301–316.

Pickering, T. R., Marean, C. W., & Domínguez-Rodrigo, M. (2003). Importance of limb bone shaft fragments in zooarchaeology: A response to “On in situ attrition and vertebrate body part profiles” (2002), by M. C. Stiner. Journal of Archaeological Science, 30, 1469–1482.

Rogers, A. R. (2000). Analysis of bone counts by maximum likelihood. Journal of Archaeological Science, 27, 111–125.

Saladié, P., Huguet, R., Díez, C., Rodríguez-Hidalgo, A., Cáceres, I., Vallverdú, J., et al. (2011). Carcass transport decisions in Homo antecessor subsistence strategies. Journal of Human Evolution, 61, 425–446.

Schoville, B. J., & Otárola-Castillo, E. (2014). A model of hunter-gatherer skeletal element transport: The effect of prey body size, carriers, and distance. Journal of Human Evolution, 73, 1–14.

Stahl, P. W. (1999). Structural density of domesticated South American camelid skeletal elements and the archaeological investigation of prehistoric Andean Ch’arki. Journal of Archaeological Science, 26, 1347–1368.

Stiner, M. C. (2002). On in situ attrition and vertebrate body part profiles. Journal of Archaeological Science, 29, 979–991.

Thompson, J. C. (2010). Taphonomic analysis of the Middle Stone Age faunal assemblage from Pinnacle Point Cave 13B, Western Cape, South Africa. Journal of Human Evolution, 59, 321–339.

Thompson, J. C., & Henshilwood, C. S. (2011). Taphonomic analysis of the Middle Stone Age larger mammal faunal assemblage from Blombos Cave, southern Cape, South Africa. Journal of Human Evolution, 60, 746–767.

Thompson, J. C., & Lee-Gorishti, Y. (2007). Carnivore bone portion choice in modern experimental boiled bone assemblages. Journal of Taphonomy, 5, 121–135.

Thompson, J. C., & Marean, C. W. (2009). Using image analysis to quantify relative degrees of density-mediated attrition in Middle Stone Age archaeofaunas. Society for Archaeological Sciences Bulletin, 32(2), 18–23.

Villa, P., & Mahieu, E. (1991). Breakage patterns of human long bones. Journal of Human Evolution, 21, 27–48.

White, T. E. (1952). Observations on the butchering technique of some aboriginal peoples: No. 1. American Antiquity, 17(4), 337–338.

Yellen, J. E. (1977). Cultural patterning in faunal remains: Evidence from the !Kung Bushmen. In D. Ingersoll, J. E. Yellen, & W. Macdonald (Eds.), Experimental archeology (pp. 271–331). New York: Columbia University Press.

Yeshurun, R., Bar-Oz, G., & Weinstein-Evron, M. (2007). Modern hunting behavior in the early Middle Paleolithic: Faunal remains from Misliya Cave, Mount Carmel, Israel. Journal of Human Evolution, 53, 656–677.

Yravedra, J., & Domínguez-Rodrigo, M. (2009). The shaft-based methodological approach to the quantification of limb bones and its relevance to understanding hominid subsistence in the Pleistocene: Application to four Palaeolithic sites. Journal of Quaternary Science, 24, 85–96.

Acknowledgments

The authors wish to thank Ali Murad Büyüm and Jennifer Gutierrez, who assisted in processing MNE counts for the BBC and PP13B assemblages. We thank Christina Giovas for inviting us to contribute this chapter and the editors and anonymous reviewers for their helpful feedback. JTF is supported by an Australian Research Council Discovery Early Career Research Fellowship.

Copyright information

© 2018 Springer International Publishing AG

About this chapter

Cite this chapter

Faith, J.T., Thompson, J.C. (2018). Low-Survival Skeletal Elements Track Attrition, Not Carcass Transport Behavior in Quaternary Large Mammal Assemblages. In: Giovas, C., LeFebvre, M. (eds) Zooarchaeology in Practice. Springer, Cham. https://doi.org/10.1007/978-3-319-64763-0_6

DOI: https://doi.org/10.1007/978-3-319-64763-0_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-64761-6

Online ISBN: 978-3-319-64763-0

eBook Packages: Social Sciences (R0)