Abstract

The application of emerging information technologies to traditional teaching methods can not only enhance the value of these technologies but also improve the progress of teaching and efficiently integrate different fields of education. 3D printing, virtual reality and eye tracking technology have recently found more and more applications in education. In this paper, by improving the eye tracking algorithm, we developed an educational software package based on eye tracking technology. By analyzing students’ eye movement data, teachers can improve teaching quality by refining the teaching framework. Students can also focus more on their own interests and develop a reasonable learning plan. The application of eye tracking technology in education has great potential both to promote the adoption of technology and to raise educational standards.


1 Introduction

The eyes are among the most important sensory organs through which we receive information from the outside world. The eye is not only an important channel for obtaining information but also conveys information through human behavior. From the location of the pupil, we can determine a person’s line of sight. As early as the beginning of the 20th century, scholars tried to analyze people’s psychological activities by recording eye movement trajectories, pupil size, gaze time and other information [1]. However, the observation methods were relatively simple and relied mainly on manual recording, so their accuracy and reliability were difficult to guarantee, and it was hard to reflect the true trajectory of eye movement accurately. The appearance of the eye tracker gave psychologists an effective means of recording eye movements. It can be used to explore visual signals in a variety of situations and to observe the relationship between eye movements and human psychological activities [2]. An eye tracker is an instrument that uses eye tracking technology to collect eye movement trajectories. With the development of computer, electronic information and infrared technology, eye trackers have been further developed in recent years [3].

With the development of artificial intelligence technology, eye movement research can be used to explore the visual information processing and control system. Because of its wide application value and commercial prospects, it has been applied in psychology [4], medical diagnosis [5], military research [6], commercial advertising evaluation [7], vehicle driving [8] and other fields. Eye tracking technology has developed rapidly in recent years. Researchers extract and record the user’s eye movement data and then obtain important information by analyzing these data. Eye tracking technology can also be used in education: the learner’s visual psychology can be used to simulate a realistic learning environment and to interact with learners. In an e-learning system, eye tracking can also trigger appropriate interventions when the user does not look at the active content on the screen for a long time or attends to areas irrelevant to the learning task.
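The intervention rule in the last sentence can be sketched as a simple timer over gaze samples. This is a minimal illustration under assumed inputs, not the authors’ system: the `Rect` type, the `needs_intervention` function, the `(timestamp_ms, x, y)` sample format and the 2-second threshold in the example are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    """Axis-aligned screen region in pixels (hypothetical helper type)."""
    x: float
    y: float
    w: float
    h: float

    def contains(self, px: float, py: float) -> bool:
        return self.x <= px <= self.x + self.w and self.y <= py <= self.y + self.h


def needs_intervention(samples, active: Rect, max_off_ms: float) -> bool:
    """Return True if gaze stays outside `active` for longer than `max_off_ms`.

    samples: time-ordered list of (timestamp_ms, x, y) gaze points.
    """
    off_start = None  # time at which the current off-target run began
    for t, x, y in samples:
        if active.contains(x, y):
            off_start = None  # gaze returned to the learning content
        elif off_start is None:
            off_start = t
        elif t - off_start > max_off_ms:
            return True
    return False


lesson = Rect(0, 0, 800, 600)
gaze = [(0, 400, 300), (500, 900, 100), (1500, 950, 120), (2600, 940, 110)]
print(needs_intervention(gaze, lesson, max_off_ms=2000))  # True: off-target from 500 ms to 2600 ms
```

A real system would also need to decide how to treat blinks and tracking loss, which this sketch simply ignores.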

2 Eye Tracking Technology

Today, eye tracking technology is implemented in interactive electronic devices, which are divided into wearable and non-contact eye trackers. The basic principle is to capture the eye image with a camera and use image processing to extract, directly or indirectly, the positions of the pupil and of the infrared light spot reflected by the cornea, so that changes in the line of sight can be recorded. By analyzing the eye video collected by the camera, the eye movement system identifies the change in the vector between the pupil center and the corneal reflection point, and thereby determines the change of the gaze point (Fig. 1).

2.1 Face Detection and Eye Positioning

At present, most face detection classifiers are trained with the AdaBoost learning algorithm on Haar features. The face detection algorithm in this paper is also based on Haar features and an AdaBoost classifier, but it is combined with prior knowledge of the distribution of facial features to locate the eye area, so that both the face region and the eye region are located in the image (Fig. 2).
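As a rough sketch of how prior knowledge of facial layout narrows the search, the function below derives two eye search windows from a face box, such as the `(x, y, w, h)` rectangles returned by OpenCV’s `CascadeClassifier.detectMultiScale` with a Haar cascade. The function name and the band ratios (`top`, `bottom`, `margin`) are illustrative assumptions, not values from this paper.

```python
def eye_search_windows(face, top=0.20, bottom=0.55, margin=0.10):
    """Split the upper band of a face box into two eye search windows.

    face: (x, y, w, h) face rectangle in image pixels.
    Returns (image_left, image_right) windows as (x, y, w, h); "image_left" is
    the subject's right eye in a non-mirrored camera image.
    The default ratios are assumed, not calibrated.
    """
    x, y, w, h = face
    band_y = y + round(top * h)         # eyes sit below the forehead...
    band_h = round((bottom - top) * h)  # ...and above the nose tip
    half = w // 2
    inset = round(margin * w)           # skip the face's outer edges
    image_left = (x + inset, band_y, half - inset, band_h)
    image_right = (x + half, band_y, half - inset, band_h)
    return image_left, image_right


print(eye_search_windows((100, 50, 200, 200)))
# ((120, 90, 80, 70), (200, 90, 80, 70))
```

Restricting later processing to these windows keeps the pupil and glint search cheap and avoids false detections elsewhere on the face.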

2.2 Pupil Localization and Infrared Spot Detection

Eye movement, uneven illumination and other external factors introduce scattered noise into the pupil area, and this noise on the pupil and on the bright corneal reflection points causes localization errors. Median filtering is effective at removing such noise, so filtering and denoising are applied as image preprocessing.
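A 3×3 median filter of the kind described above can be sketched in plain Python as follows. It is a minimal illustration on a list-of-lists grayscale image; the window size and the choice to leave border pixels unchanged are assumptions, and a production system would more likely call an optimized routine such as OpenCV’s `cv2.medianBlur`.

```python
from statistics import median


def median_filter_3x3(img):
    """Return a copy of `img` (list of rows of gray values) with a 3x3 median filter applied.

    Border pixels are copied unchanged because their 3x3 window is incomplete.
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            window = [img[rr][cc] for rr in range(r - 1, r + 2) for cc in range(c - 1, c + 2)]
            out[r][c] = median(window)  # a single outlier cannot be the median of 9 values
    return out


noisy = [[30] * 5 for _ in range(5)]
noisy[2][2] = 255  # a single bright noise pixel inside the dark pupil
print(median_filter_3x3(noisy)[2][2])  # 30
```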

(1) Infrared spot center

In this paper, gaze tracking relies mainly on the corneal reflection spot that a near-infrared light source produces on the human cornea, which serves as a reference. Combining this spot with the pupil center and mapping both into space through a geometric model, we obtain the gaze point of the eye.

Step 1: Calculate the image gradient.

Because the eye image is complex, we first estimate the region of interest (ROI) of the eye. Within the ROI, the gray value of the spot is higher than that of the surrounding pixels, which suggests a way to locate the infrared spot coordinates. To obtain the center of the spot, the spot must first be segmented from the eye image, and before traditional image segmentation it is usually necessary to perform edge detection on the image. Traditional edge detection operators include the Sobel, Prewitt and Roberts operators [9]. Our method builds on the Prewitt operator.
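For reference, the textbook Prewitt operator estimates the horizontal and vertical derivatives of an image \( I \) with two 3×3 kernels and combines them into a gradient magnitude. The improved variant used in this paper may differ in its details.

```latex
G_x = \begin{bmatrix} -1 & 0 & 1 \\ -1 & 0 & 1 \\ -1 & 0 & 1 \end{bmatrix} * I, \qquad
G_y = \begin{bmatrix} -1 & -1 & -1 \\ 0 & 0 & 0 \\ 1 & 1 & 1 \end{bmatrix} * I, \qquad
G = \sqrt{G_x^{2} + G_y^{2}}
```

Here \( * \) denotes 2D convolution; the cheaper approximation \( G \approx |G_x| + |G_y| \) is also common.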

To determine the center of the spot, the more stable methods are edge-based fitting methods that rely on geometric features, locating the spot center from a fitted circle or other geometric shape. The grayscale centroid method is also commonly used:

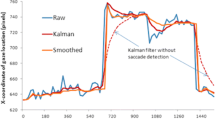

Where \( \left( {x_{c} ,y_{c} } \right) \) is the center of the detected spot and \( I_{n} \) is the pixel gray value of the ROI region. So, if our ROI area is small enough, we can use the centroid method to quickly determine the center of the spot. In order to minimize the ROI, we improved the basis of the Prewitt operator, through the gradient of the image, to determine the approximate edge position and to determine the maximum gradient value of the pixel.
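The grayscale centroid can be computed directly from an ROI. The sketch below assumes the ROI is a small list-of-lists of gray values and that `(x0, y0)`, a hypothetical parameter, is the ROI’s top-left corner in the full image. In practice one would usually subtract a background level or threshold first, so that dim pixels around the spot do not pull the centroid.

```python
def gray_centroid(roi, x0=0, y0=0):
    """Intensity-weighted centroid (x_c, y_c) of a 2D list of gray values.

    (x0, y0) is the ROI's top-left corner in the full image, so the result
    is returned in full-image coordinates.
    """
    total = sx = sy = 0.0
    for r, row in enumerate(roi):
        for c, v in enumerate(row):
            total += v       # sum of I_n
            sx += c * v      # sum of x_n * I_n
            sy += r * v      # sum of y_n * I_n
    if total == 0:
        raise ValueError("ROI has zero total intensity")
    return x0 + sx / total, y0 + sy / total


print(gray_centroid([[0, 10, 0], [10, 200, 40], [0, 10, 0]]))  # x slightly > 1.0: the right neighbour is brighter
```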

After computing the gradient matrix of the ROI with Eq. (5), we can ignore the gradient values at the edges of the image based on our prior knowledge of the pupil position. Finally, we find the coordinates p of the point with the maximum gradient and, according to the image pixel size, take a rectangular area centered at p as the spot position.
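The procedure above (gradient, discarding a border, locating the maximum-gradient point p, and cutting a rectangle around it) can be sketched as follows. It uses the standard Prewitt kernels rather than the paper’s improved operator, and the function names and the `border` and `half` sizes are hypothetical parameters that would depend on image resolution.

```python
import math

# Standard Prewitt kernels (applied as correlation; the sign flip of true
# convolution does not change the gradient magnitude).
PREWITT_X = ((-1, 0, 1), (-1, 0, 1), (-1, 0, 1))
PREWITT_Y = ((-1, -1, -1), (0, 0, 0), (1, 1, 1))


def prewitt_magnitude(img):
    """Gradient magnitude of a list-of-lists image; the outer 1-pixel frame is left at 0."""
    h, w = len(img), len(img[0])
    mag = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = gy = 0
            for i in range(3):
                for j in range(3):
                    v = img[r + i - 1][c + j - 1]
                    gx += PREWITT_X[i][j] * v
                    gy += PREWITT_Y[i][j] * v
            mag[r][c] = math.hypot(gx, gy)
    return mag


def spot_search_box(img, border=2, half=3):
    """Find the max-gradient pixel p, ignoring `border` pixels at each edge, and
    return p and an end-exclusive box (r0, c0, r1, c1) centred on p, clipped to the image."""
    if border < 1:
        raise ValueError("border must be at least 1")
    mag = prewitt_magnitude(img)
    h, w = len(img), len(img[0])
    best, p = -1.0, None
    for r in range(border, h - border):
        for c in range(border, w - border):
            if mag[r][c] > best:
                best, p = mag[r][c], (r, c)
    if p is None:
        raise ValueError("image too small for the requested border")
    r, c = p
    box = (max(r - half, 0), max(c - half, 0), min(r + half + 1, h), min(c + half + 1, w))
    return p, box


# A 9x9 dark image with a bright 3x3 spot at rows 3-5, columns 3-5.
eye = [[0] * 9 for _ in range(9)]
for rr in range(3, 6):
    for cc in range(3, 6):
        eye[rr][cc] = 200
p, box = spot_search_box(eye)
print(p, box)  # (2, 4) (0, 1, 6, 8): the box around the strongest edge covers the whole spot
```

The pixels inside `box` would then form the small ROI on which the grayscale centroid is computed.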

Step 2: Threshold processing.

After obtaining the region of interest, we segment the whole eye image by thresholding, so that image areas we are not interested in do not affect subsequent image operations.

We then focus on segmenting the ROI image. In this paper, we study and improve the traditional maximum between-class variance (Otsu) threshold method.
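For reference, the traditional Otsu method picks the gray level that maximizes the between-class variance of the two resulting classes. The sketch below is the standard algorithm on an 8-bit histogram, not the improved version studied in this paper; pixels are assumed to be integers in 0..255, and the function name is illustrative.

```python
def otsu_threshold(pixels, levels=256):
    """Return t maximizing the between-class variance when pixels <= t form class 0.

    pixels: iterable of integer gray values in range(levels).
    """
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1
    total = sum(hist)
    sum_all = sum(i * n for i, n in enumerate(hist))

    w0 = 0    # number of pixels with value <= t
    sum0 = 0  # sum of their gray values
    best_t, best_score = 0, -1.0
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue
        if w1 == 0:
            break
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Between-class variance up to the constant factor 1 / total**2.
        score = w0 * w1 * (mu0 - mu1) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


roi = [12, 15, 10, 14, 11] * 20 + [190, 200, 210, 205, 195] * 20
t = otsu_threshold(roi)
mask = [1 if v > t else 0 for v in roi]  # 1 = bright (e.g. the glint), 0 = background
print(t, sum(mask))  # 15 100
```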

(2) Pupil center positioning

Locating the pupil center is easier than locating the spot. In the eye region, the gray value of the pupil differs markedly from that of its surroundings, and the image has already been preprocessed, so the pupil can be segmented by selecting an appropriate threshold.
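A minimal sketch of the pupil step: keep the pixels at or below a threshold in the preprocessed eye image and take their mean position as the pupil center. The `pupil_center` name and the threshold value are assumptions (the threshold could come from a method such as Otsu’s). A plain centroid is used here for brevity, whereas many systems fit an ellipse to the pupil contour (for example with OpenCV’s `cv2.fitEllipse`).

```python
def pupil_center(img, threshold):
    """Mean (x, y) of pixels with gray value <= threshold, or None if there are none.

    img: list of rows of gray values, already denoised.
    """
    n = sx = sy = 0
    for r, row in enumerate(img):
        for c, v in enumerate(row):
            if v <= threshold:  # the pupil is the darkest region of the eye image
                n += 1
                sx += c
                sy += r
    if n == 0:
        return None
    return sx / n, sy / n


eye = [[180] * 8 for _ in range(7)]
for r in range(2, 5):
    for c in range(3, 6):
        eye[r][c] = 25  # dark 3x3 pupil centred at column 4, row 3
print(pupil_center(eye, threshold=60))  # (4.0, 3.0)
```

Note that the bright corneal glint inside the pupil is excluded by the threshold, which can shift a plain centroid slightly if the glint is off-center.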

2.3 Gaze Tracking

The coordinates of the pupil center and the spot center are the basis for gaze tracking. From them we calculate a two-dimensional offset vector, the pupil-center/corneal-reflection (P-CR) vector:

\[ x_{e} = x_{p} - x_{c}, \qquad y_{e} = y_{p} - y_{c} \]

where \( (x_{p} ,y_{p} ) \) and \( (x_{c} ,y_{c} ) \) are the pupil and spot center coordinates respectively, and \( (x_{e} ,y_{e} ) \) is the P-CR vector.

In this paper, we use a six-parameter fitting model, which gives the system good accuracy while keeping it real-time.

During calibration, the user follows a sequence of calibration points so that the corresponding P-CR vectors can be obtained. The 12 position parameters are then solved from the calibration points and P-CR vectors using the least squares method.

Once the parameters are known, we calculate the P-CR vector, substitute it into Eq. (7), and compute the gaze point of the eye in the real scene, thereby achieving gaze tracking.
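A sketch of calibration and mapping, assuming the widely used second-order polynomial model, which is consistent with six parameters per screen coordinate and 12 in total: \( X = a_{0} + a_{1} x_{e} + a_{2} y_{e} + a_{3} x_{e} y_{e} + a_{4} x_{e}^{2} + a_{5} y_{e}^{2} \), and likewise for \( Y \) with coefficients \( b_{0}, \dots, b_{5} \). The code fits this assumed model by least squares and maps a new pupil/glint pair to a screen point. It solves the normal equations with plain Gaussian elimination to stay dependency-free; `numpy.linalg.lstsq` would be the more robust choice in practice. All function names and the synthetic calibration data are hypothetical.

```python
def poly_terms(xe, ye):
    """Assumed second-order model: six terms per screen coordinate."""
    return [1.0, xe, ye, xe * ye, xe * xe, ye * ye]


def solve_linear(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("singular system: use more, better-spread calibration points")
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def fit_axis(vectors, targets):
    """Least-squares coefficients for one screen axis via the normal equations A^T A c = A^T t."""
    rows = [poly_terms(xe, ye) for xe, ye in vectors]
    ata = [[sum(row[i] * row[j] for row in rows) for j in range(6)] for i in range(6)]
    atb = [sum(row[i] * t for row, t in zip(rows, targets)) for i in range(6)]
    return solve_linear(ata, atb)


def calibrate(vectors, screen_points):
    """vectors: P-CR vectors (xe, ye) recorded at each calibration point;
    screen_points: the matching (X, Y). Returns 6 X-coefficients and 6 Y-coefficients."""
    a = fit_axis(vectors, [p[0] for p in screen_points])
    b = fit_axis(vectors, [p[1] for p in screen_points])
    return a, b


def gaze_point(coeffs, pupil, glint):
    """Map a pupil centre and glint centre (image pixels) to a screen point."""
    a, b = coeffs
    terms = poly_terms(pupil[0] - glint[0], pupil[1] - glint[1])  # P-CR vector
    return (sum(c * t for c, t in zip(a, terms)),
            sum(c * t for c, t in zip(b, terms)))


# Synthetic 3x3 calibration grid generated from a known mapping (illustration only).
def true_map(xe, ye):
    return (960 + 40 * xe + 2 * ye + 0.1 * xe * ye + 0.3 * xe * xe,
            540 + 1 * xe + 30 * ye + 0.2 * ye * ye)


vecs = [(xe, ye) for xe in (-10, 0, 10) for ye in (-5, 0, 5)]
coeffs = calibrate(vecs, [true_map(*v) for v in vecs])
print(gaze_point(coeffs, pupil=(105, 52), glint=(100, 50)))  # close to (1172.5, 605.8)
```

Nine calibration points on a 3×3 grid are enough to determine the six coefficients per axis; more points make the fit less sensitive to noise in individual P-CR measurements.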

3 The Application of Eye Movement in Mathematics Education

Human eye movements can reflect the human thinking process [9]. Eye movement researchers record eye movement trajectories using eye tracking techniques. In learning mathematics, students need to think about visual mathematical language. While they work to understand a problem, each mathematical symbol represents different information, and the brain processes these symbols with different eye movement patterns such as fixations and saccades (eye jumps); these patterns are reflected in indicators such as attention, fixation time and pupil diameter. In practice, the eyes fixate on one place for a certain period of time before moving to another location, so if there is a cognitive difficulty in a given area, the fixation time there increases. Conversely, once students understand the preceding content, they can predict what follows, and saccades tend to appear in some areas. If students find part of the content difficult to understand, their saccades become short and their fixation time longer. At present, the most commonly used eye tracking method is video-based: the direction of pupil movement is recorded on video and then analyzed. In this paper, our self-designed real-time eye tracking system provides feedback on eye movements in real time. In teaching, the type of eye tracker most often used by teachers is the non-contact eye tracker. The experiment requires a host computer and a camera. The instrument analyzes the subject’s cognitive ability by recording the characteristics of eye movements while the subject reads equations and mathematical symbols on the host computer. Teachers can then improve teaching quality by refining the teaching design according to the students’ eye movements.
For students learning mathematics, we judge their acceptance of the teaching content from their eye movements, and students themselves can identify both the difficult parts and the parts of interest in their own learning. We infer students’ psychological responses mainly from their eye movement indicators (Table 1).
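The reasoning above (longer fixation time on an area suggests a cognitive difficulty there) can be turned into a simple per-area summary. The sketch assumes fixations have already been detected as `(x, y, duration_ms)` tuples and that areas of interest (AOIs), such as an equation or its explanation, are given as screen rectangles. The function names and the `factor=1.5` flagging rule are hypothetical, not a criterion from this paper.

```python
from collections import defaultdict


def dwell_by_aoi(fixations, aois):
    """Count fixations and sum their durations per area of interest.

    fixations: list of (x, y, duration_ms); aois: dict name -> (x, y, w, h).
    Fixations outside every AOI are ignored; the first matching AOI wins.
    """
    stats = defaultdict(lambda: [0, 0.0])
    for fx, fy, dur in fixations:
        for name, (x, y, w, h) in aois.items():
            if x <= fx < x + w and y <= fy < y + h:
                stats[name][0] += 1
                stats[name][1] += dur
                break
    return {name: (count, total) for name, (count, total) in stats.items()}


def flag_difficult(stats, factor=1.5):
    """AOIs whose total dwell time exceeds `factor` times the mean dwell over AOIs."""
    if not stats:
        return []
    mean = sum(total for _, total in stats.values()) / len(stats)
    return sorted(name for name, (_, total) in stats.items() if total > factor * mean)


aois = {"equation": (0, 0, 400, 100), "explanation": (0, 100, 400, 200)}
fixations = [(50, 40, 420), (120, 60, 380), (300, 50, 510), (80, 150, 180), (200, 220, 150)]
stats = dwell_by_aoi(fixations, aois)
print(stats)                  # {'equation': (3, 1310.0), 'explanation': (2, 330.0)}
print(flag_difficult(stats))  # ['equation']
```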

Eye tracking thus serves both teaching and learning in mathematics education. Researchers record and analyze the students’ eye movement data; from this analysis, teachers learn which content is difficult to teach and can revise the entire teaching design. Students, in turn, can understand their own learning interests and optimize their learning strategies. We conducted an eye tracking experiment on students’ mathematics learning with our own real-time eye tracking system developed for the Windows platform. Because the system is a beta version, only the number of fixations is shown in the interface; other indicators will be added in the next version (Fig. 3).
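One standard way to obtain a fixation count from raw gaze samples is dispersion-threshold identification (I-DT, Salvucci and Goldberg): a run of samples counts as a fixation if it lasts at least a minimum duration while its spread stays below a dispersion limit. The sketch below uses that technique as an assumption about how such a count could be produced; the function names and the thresholds in the example (100 ms, 25 px) are illustrative, not the settings of the authors’ system.

```python
def dispersion(window):
    """(max x - min x) + (max y - min y) over (t, x, y) samples."""
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples, max_dispersion, min_duration_ms):
    """I-DT fixation detection on time-ordered (t_ms, x, y) samples.

    Returns a list of (t_start, t_end, cx, cy) fixations.
    """
    fixations = []
    i, n = 0, len(samples)
    while i < n:
        # Smallest window starting at i that spans at least min_duration_ms.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration_ms:
            j += 1
        if j == n:
            break  # not enough data left for another fixation
        if dispersion(samples[i:j + 1]) <= max_dispersion:
            # Grow the window while the points stay tightly clustered.
            while j + 1 < n and dispersion(samples[i:j + 2]) <= max_dispersion:
                j += 1
            window = samples[i:j + 1]
            cx = sum(s[1] for s in window) / len(window)
            cy = sum(s[2] for s in window) / len(window)
            fixations.append((window[0][0], window[-1][0], cx, cy))
            i = j + 1
        else:
            i += 1  # treat the first sample as part of a saccade and slide on
    return fixations


# 20 ms sampling: a 200 ms dwell on one symbol, then a saccade to another.
gaze = [(t, 300 + (t // 20) % 2, 200) for t in range(0, 200, 20)]
gaze += [(t, 520 + (t // 20) % 2, 260) for t in range(200, 400, 20)]
fix = detect_fixations(gaze, max_dispersion=25, min_duration_ms=100)
print(len(fix))  # 2
```

The same fixation list also yields the other indicators mentioned above, such as fixation duration (`t_end - t_start`) and saccade amplitude (distance between consecutive fixation centers).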

In this software, we developed a multidisciplinary education framework, but in the beta version we have tested only mathematics. This paper therefore takes mathematics as an example of the application of eye tracking (Fig. 4).

We analyze students’ eye tracking data as they watch four different videos or photos. To better analyze the fixation points, we added an expression recognition system to the software, so that changes in the student’s facial expression are observed alongside changes in eye movement. This helps us better analyze the students’ learning characteristics: we can make a simple analysis of the learning process from the students’ attention to the different videos and from the changes in their expressions throughout the process.

4 Conclusions

In this paper, we apply eye tracking technology to the teaching of mathematics. Teachers can improve the teaching program according to students’ eye movement data collected during learning. Students, in turn, can adjust their learning according to their eye movements through the human-computer interaction experience. Given the abstraction and complexity of mathematics, this paper provides a feasible solution for mathematics teaching. In future work, we will expand the software’s functions and improve eye tracking accuracy.

References

[1] Tao, R.Y., Qian, M.F.: Cognitive meaning of eye movement indicators and the value of polygraph. Psychol. Technol. Appl. 7, 26–29 (2015)

[2] Ling, D.L., Axu, H., Zhi, Y.H.: A study on the text reading based on eye movement signals. J. Northwest Univ. Nationalities (Nat. Sci. Ed.) 35(2), 43–47 (2014)

[3] Morimoto, C.H., Mimica, M.R.M.: Eye gaze tracking techniques for interactive applications. Comput. Vis. Image Underst. 98, 4–24 (2005)

[4] Li, Y.G., Xuejun, B.: Eye movement research and development trend of advertising psychology. Psychol. Sci. 27(2), 459–461 (2004)

[5] Jacob, R.J.K.: The use of eye movements in human-computer interaction techniques: what you look at is what you get. In: Readings in Intelligent User Interfaces. Morgan Kaufmann Publishers Inc. (1998)

[6] Lim, C.J., Kim, D.: Development of gaze tracking interface for controlling 3D contents. Sens. Actuators, A 185(5), 151–159 (2012)

[7] Higgins, E., Leinenger, M., Rayner, K.: Eye movements when viewing advertisements. Front. Psychol. 5(5), 210 (2014)

[8] Hansen, D.W., Ji, Q.: In the eye of the beholder: a survey of models for eyes and gaze. IEEE Trans. Pattern Anal. Mach. Intell. 32(3), 478–500 (2010)

[9] Tatler, B.W., Wade, N.J., Kwan, H., Findlay, J.M., Velichkovsky, B.M.: Yarbus, eye movements, and vision. i-Perception 1(1), 7 (2010)

Copyright information

© 2018 Springer International Publishing AG

About this paper

Cite this paper

Sun, Y., Li, Q., Zhang, H., Zou, J. (2018). The Application of Eye Tracking in Education. In: Pan, JS., Tsai, PW., Watada, J., Jain, L. (eds) Advances in Intelligent Information Hiding and Multimedia Signal Processing. IIH-MSP 2017. Smart Innovation, Systems and Technologies, vol 82. Springer, Cham. https://doi.org/10.1007/978-3-319-63859-1_4

DOI: https://doi.org/10.1007/978-3-319-63859-1_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-63858-4

Online ISBN: 978-3-319-63859-1

eBook Packages: Engineering, Engineering (R0)