Abstract

The article analyzes the notions of analogue and digital simulation as found in scientific and philosophical literature. The purpose is to distinguish computer simulations from laboratory experimentation on several grounds, including ontological, epistemological, pragmatic/intentional, and methodological. To this end, it argues that analogue simulations are best understood as part of the laboratory instrumentarium, whereas digital simulations are computational methods for solving a simulation model. The article ends by showing how the analogue-digital distinction is at the heart of contemporary debates on the epistemological and methodological power of computer simulations.

1 Introduction

Nelson Goodman once said that

few terms are used in popular and scientific discourse more promiscuously than ‘model’. A model is something to be admired or emulated, a pattern, a case in point, a type, a prototype, a specimen, a mock-up, a mathematical description. (Goodman 1968, p. 171)

Something similar can be said about the term ‘simulation’. A simulation is something that reproduces an original by imitation, that emulates a mechanism for purposes of manipulation and control, as well as an instrument, a depiction of an abstract representation, a method for finding sets of solutions, a number-crunching machine, a gigantic and complex abacus. Paraphrasing Goodman, a simulation is almost anything from a training exercise to an algorithm.

This article presents and discusses two notions of simulation as found in scientific and philosophical literature. Originally, the concept was reserved for special kinds of empirical systems where ‘pieces of the world’ were manipulated as replacements of the world itself. Thus understood, simulations are part of traditional laboratory practice. The classic example is the wind tunnel, where engineers simulate the air flow over the wing of a plane, over the roof of a car, and under a train. These simulations, however, differ greatly from the modern and more pervasive use of the term, which uses mathematical abstraction and formal syntax for the representation of a target system.Footnote 1 For terminological convenience, I shall refer to the former as analogue simulations, while the latter are digital simulations or computer simulations.

On what grounds could we distinguish these two types of simulations? Is there a set of features that facilitate the identification of each notion individually? In what respects is this distinction relevant for our assessment of the epistemological and methodological value of analogue and computer simulations? These and other questions are at the core of this article. I also show in what respects this distinction is at the heart of recent philosophical discussions on the role of computer simulations in scientific practice.

The article is structured as follows. Section 2 revisits philosophical literature interested in distinguishing analogue from digital simulations on an ontological and agent-tailored basis. Thus understood, analogue simulations are related to the empirical world by a strong causal dependency and the absence of an epistemic agent. Computer simulations, by contrast, lack causal dependencies but include the presence of an epistemic agent.Footnote 2

Section 3 raises some objections to this distinction, showing why it fails in different respects and at different levels, including its failure to mirror scientific and engineering usage. Alternatively, I suggest that analogue simulations can be part of the laboratory instrumentaria, while computer simulations are methods for computing a simulation model. My analysis emphasizes four dimensions, namely, epistemological, ontological, pragmatic/intentional, and methodological.

At this point one could frown upon any featured analogue-digital distinction. To a certain extent, this is an understandable concern. There are deliberate efforts by modelers to make sure that this distinction is of no importance. I believe, however, that the distinction is at the heart of contemporary discussions on the epistemological power of laboratory experimentation and computer simulations. Section 4, then, tackles this point by showing the presence and impact of this distinction in the recent philosophical literature.

2 The Analogue-Digital Distinction

Nelson Goodman is known to support the analogue-digital distinction on a semantic and syntactic basis. According to him, it is a mistake to follow a simple, language-based interpretation where analogue systems have something to do with ‘analogy,’ and digital systems with ‘digits.’ The real difference lies elsewhere, namely, in whether a system is dense or differentiated.Footnote 3 When it comes to numerical representation, for instance, an analogue system represents in a syntactically and semantically dense manner. That is,

For every character there are infinitely many others such that for some mark, we cannot possibly determine that the mark does not belong to all, and such that for some object we cannot possibly determine that the object does not comply with all. (Goodman 1968, p. 160)

Goodman uses a rather opaque definition for a simple and intuitive fact. Imagine a Bourdon pressure gauge whose display does not contain any pressure units. In fact, think of the display as containing nothing at all, no units, no marks, no figures. If the display is blank, and the needle moves smoothly as the pressure increases, then the instrument is measuring pressure although it is not using any notation to report it (see Goodman 1968, p. 157). This, according to Goodman, is an example of an analogue device. In fact, the gauge is a “pure and elementary example of what is called an analogue computer” (Ibid., 159).

In a digital system, on the other hand, numerical representation would be differentiated in the sense that, given a number-representing mark (for instance, an inscription, a vocal utterance, a pointer position, an electrical pulse), it is theoretically possible to determine exactly which other marks are copies of that original mark, and to determine exactly which numbers that mark and its copies represent (Ibid., 161–164). Consider the Bourdon pressure gauge again. If the dial is graduated by regular numbers, then we are in the presence of a digital system. Quoting Goodman again, “displaying numerals is a simple example of what is called a digital computer” (Ibid., 159–160).
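The contrast between the blank and the graduated dial can be illustrated with a short Python sketch. It is only an informal gloss on Goodman's notions, not his own formulation: the function name `read_graduated` and the graduation `step` are hypothetical, and rounding to the nearest mark is assumed as the rule that decides which positions count as copies of the same mark.

```python
def read_graduated(needle_position: float, step: float = 1.0) -> float:
    """Map a continuous needle position to the nearest graduation mark.

    Assumption: positions that round to the same mark are treated as
    copies of one and the same number-representing mark.
    """
    return round(needle_position / step) * step


# Two nearby needle positions on the same physical gauge
a, b = 17.2, 17.4

# On a graduated dial both positions are copies of the mark '17'
same_mark_when_graduated = read_graduated(a) == read_graduated(b)

# On a blank dial nothing collapses them: they remain distinct positions
distinct_when_blank = a != b
```

On this reading, the graduated dial is differentiated because every position is assigned to exactly one mark, whereas the blank dial offers no such assignment.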

Goodman’s notions of dense and differentiated, and of analogue and digital have been conceived to account for a wide range of systems, including pictorial (e.g., photos, drawings, paintings, and icons), mathematical (e.g., graphs, functions, and theorems), and technological (e.g., instruments and computers). Unfortunately, this analogue-digital distinction suffers from significant shortcomings that put Goodman at the center of much criticism.

According to David Lewis, a chief critic of Goodman, neither the notion of ‘dense’ nor of ‘differentiated’ accounts for the analogue-digital distinction as made in ordinary technological language. That is, neither scientists nor engineers talk of analogue as dense, nor of digital as differentiated. Instead, Lewis believes that what distinguishes analogue from digital is the use of unidigits, that is, of physical primitive magnitudes. A physical primitive magnitude is defined as any physical magnitude expressed by a primitive term in the language of physics (Lewis 1971, p. 324). Examples of primitive terms are resistance, voltage, fluid, and the like. Thus, according to Lewis, the measurement of a resistance of 17 Ω represents the number 17. It follows that a system that represents analogous unidigits, such as a voltmeter, is an analogue system. Consider a more complex case: Think of a device which adds two numbers, x and y, by connecting two receptacles, X and Y, through a system of pipes capable of draining their content into Z, the result of the addition z. Consider now that the receptacles are filled with any kind of liquid. Thus constructed, the amount z of fluid that has moved from X and Y into Z is the addition of x and y. Such a device, in Lewis’s interpretation, is an analogue adding machine, and the representation of numbers (by units of fluid) is an analogue representation (Lewis 1971, pp. 322–323).

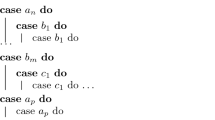

On the other hand, digital is defined as the representation of numbers by differentiated multidigital magnitudes, that is, by any physical magnitudes whose values depend arithmetically on the values of several differentiated unidigits (Ibid., 327). For instance, in fixed point digital representation, a multidigital magnitude M is digital since it depends on several unidigital voltages. For each system s at time t, where m is the number of voltages, and n=2, (see Ibid., 326):
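To make the idea of arithmetical dependence concrete, the following Python sketch computes one multidigital value from several unidigital voltages. It is an illustration under stated assumptions and does not reproduce Lewis's own formula: the function name `multidigital_magnitude`, the full-scale value `v_max` of 5 volts, and the rule that maps each voltage to a digit are all hypothetical choices.

```python
from typing import Sequence


def multidigital_magnitude(voltages: Sequence[float], n: int = 2,
                           v_max: float = 5.0) -> int:
    """Combine m unidigital voltages into one fixed-point value in base n.

    Assumption: each voltage lies between 0 and v_max and is read as one
    of n digits; the most significant digit comes first.
    """
    value = 0
    for v in voltages:
        # Differentiate the voltage into a digit between 0 and n - 1
        digit = min(n - 1, int(v / v_max * n))
        # Positional weighting: shift previous digits and add the new one
        value = value * n + digit
    return value


# Four slightly noisy binary voltages read as the digits 1, 0, 1, 0
reading = multidigital_magnitude([4.8, 0.2, 4.9, 0.1])
```

Small fluctuations in any single voltage leave the resulting value untouched, which is one way of seeing why the magnitude counts as differentiated.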

Unfortunately, Lewis’ analogue-digital distinction suffers from the same shortcomings as Goodman’s. Take, for instance, Kay’s first digital voltmeter. According to Lewis, this digital voltmeter qualifies as an analogue device because it measures a unidigital primitive magnitude (i.e., voltage). Yet the voltmeter is referred to as digital precisely because it converts an analogue signal into a digital value. It follows that Lewis’ interpretation also fails to account for the analogue-digital distinction as used in ordinary technological language.

A third proponent of the analogue-digital distinction is Zenon Pylyshyn, who has a different idea in mind. He shifts the focus from types of representations of magnitudes, prominent in Goodman’s and Lewis’ interpretations, to types of processes (i.e., analogue in the case of analogue, and symbolic in the case of digital). In doing so, Pylyshyn gains grounds for objecting that Lewis’ criterion allows magnitudes to be represented in an analogue manner, without the process itself qualifying as an analogue process. This is an important objection since, under Lewis’ interpretation, the modern computer qualifies as an analogue process. The example used by Pylyshyn is the following:

Consider a digital computer that (perhaps by using a digital-to-analogue converter to convert each newly computed number to a voltage) represents all its intermediate results in the form of voltages and displays them on a voltmeter. Although this computer represents values, or numbers, analogically, clearly it operates digitally. (Pylyshyn 1989, p. 202)
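Pylyshyn's example can be sketched in a few lines of Python. The sketch assumes an idealized 8-bit machine; the names `add_digitally` and `dac`, the bit width, and the 5 volt reference are hypothetical and serve only to separate the symbolic process from the way its results are displayed.

```python
def add_digitally(x: int, y: int, bits: int = 8) -> int:
    """Ripple-carry addition over bit symbols (the process is symbolic)."""
    carry, result = 0, 0
    for i in range(bits):
        a, b = (x >> i) & 1, (y >> i) & 1
        s = a ^ b ^ carry                      # sum bit for this position
        carry = (a & b) | (carry & (a ^ b))    # carry into the next position
        result |= s << i
    return result                              # overflow beyond `bits` is dropped


def dac(value: int, bits: int = 8, v_ref: float = 5.0) -> float:
    """Idealized digital-to-analogue converter: integer code to voltage."""
    return v_ref * value / (2 ** bits - 1)


# The result is computed by manipulating symbols...
total = add_digitally(17, 25)
# ...and only afterwards represented as a voltage for the voltmeter
displayed_voltage = dac(total)
```

The voltage shown on the voltmeter varies with the result, yet nothing in `add_digitally` operates on voltages, which is the point of the objection to Lewis.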

According to Pylyshyn, the properties and relations specified in the analogue process must play the right causal role. That is, an analogue process and its target system are both causally related (Ibid., 202). This idea is mirrored by much of the work being done on scientific experimentation. In fact, the so-called ‘new experimentalism’ holds that, in laboratory experimentation, independent variables are causally manipulated for the investigation of the target system.

As for the notion of computational process, Pylyshyn indicates that it comprises two levels of description, namely, a symbolic level, which jointly refers to the algorithm, data structures, initial and boundary conditions, and the like; and a description of the physical states of the machine, referred to as the physical manipulation process. Pylyshyn, then, carefully distinguishes the symbolic level, which involves the abstract and representational aspects of the computational process, from the physical manipulation process, which includes the physical states of the computer as instantiated by the algorithm (Ibid., 144).Footnote 4

Closely related to Pylyshyn is Russell Trenholme, who discusses these ideas on analogue and digital processes in the context of simulations. Trenholme distinguishes analogue simulations, characterized by parallel causal-structures isomorphic to the phenomenon simulated,Footnote 5 from symbolic simulations, characterized as a two-stage affair between symbolic processes and a theory-world mapping (Trenholme 1994, p. 118). An analogue simulation, then, is defined as “a single mapping from causal relations among elements of the simulation to causal relations among elements of the simulated phenomenon” (Ibid., 119). According to this definition, analogue simulations provide causal information about represented aspects of the physical processes being simulated. As Trenholme puts it, “[the] internal processes possess a causal structure isomorphic to that of the phenomena simulated, and their role as simulators may be described without bringing in intentional concepts” (Ibid., 118). Let us note that this lack of intentional concepts is an important feature of analogue simulations, for it means that they do not require an epistemic agent conceptualizing the fundamental structures of the phenomena, as symbolic simulations do.Footnote 6

The notion of symbolic simulation, on the other hand, includes two further constituents, namely, the symbolic process and a theory-world mapping. The symbolic process is defined as a mapping from the simulated model onto the physical states of the computer. The theory-world mapping is understood as a mapping from the simulated model onto aspects of a real-world phenomenon (referred to as an exogenous computational phenomenon). A symbolic simulation, therefore, is defined as a two-stage affair: “first, the mapping of inference structure of the theory onto hardware states which defines symbolic [processes]; second, the mapping of inference structure of the theory onto extra-computational phenomena” (Trenholme 1994, p. 119).Footnote 7 An important outcome that I will come back to later is that in a symbolic simulation the simulation model does not necessarily map a real-world phenomenon. Rather, the simulation could explore the theoretical implications of the model.

3 Varieties of Simulations

So far, I have briefly reconstructed canonical literature on the analogue-digital distinction. It is Pylyshyn’s and, more importantly, Trenholme’s account which facilitates drawing the first dividing lines between analogue and digital simulations. Whereas the former is understood as agent-free and causally isomorphic to a piece of the world, the latter is only an abstract (and, as it is sometimes also characterized, formal) representation of the target system. I take this distinction to pave the way to the general understanding of analogue and digital simulations, although some adjustments and clarifications must follow.

First, the claim that an analogue simulation is tailored to the world in a causally isomorphic, agent-free sense has serious shortcomings. Take first the claim that analogue simulations are agent-free. One could easily think of an example of an agent-tailored analogue simulation. Consider, for instance, a ripple tank for simulating the wave nature of light. Such a simulation is possible because water waves and light as a wave obey Hooke’s law, d’Alembert’s equation, and Maxwell’s equation, among other conceptual baggage. Now, clearly these equations have been conceptualized by an agent. It follows that, according to Trenholme’s account, the ripple tank cannot be characterized as an analogue simulation. But this appears counter-intuitive, as a ripple tank is an analogue simulation of the wave nature of light.

Additionally, isomorphism is the wrong representational relation. One can argue this by showing how causal structural isomorphism underplays the reusability of analogue simulations. Take the ripple tank as an example again. With it, researchers simulate the wave nature of light as well as diffraction from a grid. The principle guiding the latter simulation establishes that when a wave interacts with an obstacle, diffraction (or passing through) occurs. The waves then contain information about the arrangement of the obstacle. At certain angles between the oncoming waves and the obstacle, the waves will reflect off the obstacle; at other angles, the waves will pass right through it. Now, according to Trenholme, there must be a causal isomorphism between the ripple tank and light as a wave, on the one hand, and between the ripple tank and diffraction from a grid, on the other. It follows that there should also be a causal isomorphism between light as a wave and diffraction. But this is not the case. The reason why we can use the ripple tank to simulate both empirical systems is that its causal structure includes, so to speak, the causal structure of light as a wave and the causal structure for diffraction. Since the philosophical literature on representation abounds in similar warnings and examples, I will not present any further objections to causal structural isomorphism (see, for instance, Suárez 2003). In order to maintain neutrality on representational accounts, I will talk of -morphism.

Despite these issues, I believe that Pylyshyn and Trenholme are correct in pointing out that something like a causally-based feature is characteristic of analogue simulations. To my mind, analogue simulations are a kind of laboratory instrumentaria in the sense that they cannot be conceived as alien to conceptualizations (i.e., agent-tailored) and modeling (i.e., -morphic causal structures), just like the instruments found in laboratory practice. In addition to Pylyshyn’s and Trenholme’s ontological analysis, I include a study of the epistemological, pragmatic/intentional, and methodological dimensions of analogue simulations. The ripple tank again provides a good example, as it requires models and theories for underpinning the sensors, interpreting the collected data, and filtering out noise, as well as a host of methods that help us understand the behavior of the simulation and its -morphism with the wave nature of light and with diffraction. In fact, contemporary laboratory experimentation, including laboratory instrumentaria, is traversed by modeling and theory, concerns and interests, ideology and persuasion, all in different degrees and at different levels.

The case of digital simulations, on the other hand, is slightly different. I fundamentally agree with Pylyshyn’s and Trenholme’s characterization in that computer simulations are a two-stage affair. In this respect, computer simulations must be understood as systems that implement a simulation model, calculate it, and render reliable results. As elaborated by the authors, however, it is left unclear whether the mapping to the extra-computational phenomena requires them to exist in the world, or whether they could be the mere product of the researcher’s imagination. A simple example helps to clarify this concern. A digital simulation could be of a real-world satellite orbiting a real planet (i.e., by setting up the simulation to real-world values), or of a sphere of 100,000 kg of enriched uranium. Whereas the former simulation is empirically possible, the latter violates known natural laws. For this reason, if the simulation model represents an empirical target system, then the computer simulation renders information about real-world phenomena. In all other cases, the computer simulation might still render reliable results, but not of a real-world phenomenon. Resolving this issue is, to my mind, a core and still untreated problem in the philosophy of computer simulations. Here, I discuss several potential target systems and what they mean for studies on computer simulation. In addition, and just like in the analogue case, I discuss their ontological side as much as their epistemological, pragmatic, and methodological dimensions.

To sum up, analogue simulations belong to the laboratory instrumentaria, while computer simulations are methods for computing a simulation model. This is to say that analogue simulations carry out instrumental work, similar to many laboratory instrumentaria, while digital simulations are all about implementing and computing a special kind of model. Thus understood, I build on Pylyshyn’s and Trenholme’s ontological characterizations, while incorporating several other dimensions. As a working conceptualization, then, we can take it that an analogue simulation duplicates (in the sense of imitates) a state or process in the material world by reducing it in size and complexity for purposes of manipulation and control. Analogue simulations, then, belong to the laboratory instrumentaria in a way that digital simulations do not. The working conceptualization for computer simulation, on the other hand, is of a method for computing a special kind of model. These two working conceptualizations are discussed at length in the following sections.Footnote 8

3.1 Analogue Simulations as Part of the Laboratory Instrumentaria

Modern laboratory experimentation without the aid of instruments is an empiricist’s nightmare. But what is a laboratory instrument? Can a hammer be considered one? Or must it be a somehow more sophisticated device, such as a bubble chamber? And more to the point, why are analogue simulations constituents of laboratory instrumentaria? There are no unique criteria for answering these questions. The recent history of science shows that there is a rich and complex chronicle on laboratory instruments, anchored in changes in theory, epistemology, cosmologies and, of course, technology. In this section, I intend to answer two questions, namely, what is typically considered a laboratory instrument, and why is an analogue simulation a constituent of the laboratory instrumentaria? To this end, I address four dimensions of analysis: the epistemological, the ontological, the pragmatic/intentional, and the methodological.

Regarding the epistemological dimension, I take that laboratory instrumentaria ‘embody’ scientific knowledge via the materials, the theories, and models used for building them. In addition to this dimension, laboratory instrumentaria are capable of ‘working knowledge,’ that is, practical and non-linguistic knowledge for doing something. As for the ontological dimension, the laboratory instrumentaria belong to a causally-based ontology, something along the lines suggested earlier by Pylyshyn and Trenholme, but that needs to be refined. The pragmatic/intentional dimension shows that laboratory instrumentaria are designed for fulfilling a practical end. Finally, the methodological dimension emphasizes the diversity of sources which inform the design and construction of laboratory instrumentaria. Let us discuss each dimension in some detail.

The claim that genuine laboratory instrumentaria ‘embody’ scientific knowledge has been interpreted in several ways. One such way holds that they carry properties and functionalities specifically built in to make them more suitable for the task for which they were designed. For instance, the bubble chamber is a vessel filled with superheated liquid hydrogen suitable for detecting electrically charged particles moving through it. Before liquid hydrogen was available, early prototypes included all sorts of liquids, none of which were suitable for the specific purpose of detecting tracks of ionizing particles. The search for a suitable liquid (suitable materials, etc.) embodies knowledge that configures the instruments, the measurements, and the results. Another way to interpret the embodiment of knowledge is that laboratory instrumentaria have been built by following a theory or a scientific model. The ripple tank, as mentioned, embodies Hooke’s law, d’Alembert’s formula, and Maxwell’s equation, which explain the wave nature of light.

Embodying knowledge has some kinship with the notion of ‘working knowledge.’ The general claim is that scientific activity is not only based on theory, a linguistically centered understanding of knowledge, or on laboratory experimentation, an empirically centered view of the world, but also on the use of instruments. This is at the core of what David Baird calls a materialistic epistemology of instrumentation (Baird 2004, p. 17). According to Baird, instruments bear knowledge of the phenomena they produce, allowing for contrived control over them. This is the meaning I give to the term ‘working knowledge,’ that is, a kind of knowledge that is sufficient for doing something, regardless of our theoretical understanding of the instrument (or lack thereof). One example used by Baird is Michael Faraday’s electromagnetic motor. Although at the time there was considerable disagreement over the phenomenon produced, as well as over the principle of operation, Faraday and his contemporaries “could reliably create, re-create, and manipulate [a torque rotating in a magnetic field induced by opposite forces], despite their lack of an agreed-upon theoretical language” (Baird 2004, p. 47).

The analysis of working knowledge needs to be complemented with a causally-based ontology. Our previous analysis made use of Pylyshyn’s and Trenholme’s accounts as part of the ontological assessment of the analogue-digital distinction. However, we now need a more refined taxonomy that takes care of the differences in laboratory instrumentaria. Rom Harré provides such a taxonomy based on two families, namely, instruments and apparatus. The first is “for that species of equipment which registers an effect of some state of the material environment, such as a thermometer”, whereas the second is “for that species of equipment which is a model of some naturally occurring structure or process” (Harré 2003, p. 20). Thus understood, instruments are rather simple to envisage, since any detector or measurement device qualifies as such. Characteristically, they are in direct causal interaction with nature, and therefore back inference from the state of the instrument to the state of the world is grounded on the reading of the instrument. Apparatus, however, are part of a more complex family of laboratory instrumentaria. They are conceived as material models whose relation to nature is one of ‘analogy,’ that is, belonging to the same ontological class. As Harré explains, “showers of rain and racks of flasks are both subtypes of the ontological supertype ‘curtains of spherical water drops’” (Harré 2003, p. 34). To make matters more complicated, the family of apparatus must be split into two subclasses: domesticated worlds and Bohrian apparatus. The former are models of actual ‘domesticated’ pieces of nature. For instance, a Petri dish can be used for the cultivation of bacteria and small mosses. The latter are material models used for the creation of new phenomena, that is, phenomena that are not found in the wild. An example of a Bohrian apparatus is the one used in Humphry Davy’s isolation of sodium in the metallic state by electrolysis.

Following Harré’s taxonomy, Faraday’s electromagnetic motor falls into the category of instrument, whereas the bubble chamber is an apparatus. More specifically, the bubble chamber falls into the subcategory of domesticated world, since it is a material model for the behavior of particles and it does not create new phenomena. Analogue simulations, on the other hand, may fall into any of the categories above. For instance, the ripple tank qualifies as an apparatus, subcategory domesticated world. We could also think of analogue simulations set for measuring observable values, such as a circuit simulation, as instruments.

Thus understood, analogue simulations could be identified as constituents of the laboratory instrumentaria based on their epistemic function, as well as on their ontological placement. However, two more levels of analysis are needed for fully identifying analogue simulations and, more to my interests, distinguishing them from computer simulations.

According to Peter Kroes (2003), besides the traditional dichotomy between laboratory instrumentaria as embodying a theory and the material restrictions imposed onto it, there is another equally relevant dimension, namely, the designed intentionality of an instrument or apparatus. This term is meant to highlight, along with the nature of a scientific instrument or apparatus, the practical intentions of the scientist when using it. Following Kroes, laboratory instrumentaria are generally analyzed as physical objects, as they obey the laws of nature—and in this respect their behavior can be explained causally in a non-teleological way. Now, they can also be analyzed by their physical embodiment of a design, which does have a teleological character.

Any instrument or apparatus in the laboratory instrumentaria, then, performs a function for which it has been designed and made. This is true for all laboratory instrumentaria, from the early orreries to the latest analogue simulations. As Kroes puts it: “[T]hink away the function of a technological artifact, and what is left is no longer a technological artifact but simply an artifact—that is, a human-made object with certain physical properties but with no functional properties.” (Kroes 2003, p. 69). By highlighting this pragmatic dimension, Kroes allows for categorizations based on a purely intentional factor. This means, among other things, the possibility of subcategorizations within the laboratory instrumentaria based on intended functions and purposes.

As for the methodological dimension, there are no common features that tie all the laboratory instrumentaria together. The sources of inspiration, materials available, and techniques for building an instrument or apparatus vary by epoch, education, and location. It is virtually impossible to establish common methodological grounds. To be an analogue simulation, nevertheless, is to be made of a material thing, tangible, and prone to manipulation in a causal sense. Whether it is wood, metal, plastic, or even less tangible things, like air or force, analogue simulations are unequivocally characterized by the presence of a material substrate.

Thus understood, Pylyshyn’s and Trenholme’s causal structures are only part of the story of analogue simulations. Other perspectives include Baird’s embodiment knowledge as the epistemic angle, Harré’s refined ontological taxonomy, Kroes’ pragmatic/intentional account that brings into the picture the influence of individuals and communities, and a very complex underlying methodology. In this respect, analogue simulations are part of the laboratory instrumentaria in knowledge, nature, purpose, and design. Computer simulations, however, are something else.

3.2 The Microcosm of Computer Simulations

Trenholme’s work enables the idea that the results of a digital simulation are the byproduct of calculating a simulation model. Indeed, calculating such a model corresponds to Trenholme’s first stage of the symbolic simulation, which depends on the states of the physical machine as induced by the simulation model (i.e., the symbolic process). Let us also recall that Trenholme indicates that the simulation maps onto an extra-computational phenomenon, suggesting in this way that the simulation model represents a real-world target system. As suggested earlier, there is no need to assert such a mapping, as it is necessary neither for rendering results nor for assessing the epistemic power of computer simulations. Boukharta et al. (2014) provide an interesting example of how non-representational computer simulations deliver reliable information on mutagenesis and binding data for molecular biology. Following their example, then, I take computer simulations to be ‘artificial worlds of their own’ in the sense that they render results of a given target system regardless of the representational content of its model, or of its mapping relations to extra-computational phenomena.

Thus understood, there are as many ways in which computer simulations are artificial worlds as there are target systems. A rough list ranges from empirical target systems, as I understand Trenholme’s extra-computational phenomena, to utterly descriptively inadequate target systems. An example of an empirical target system is the planetary movement, where one implements a simulation model of classical Newtonian mechanics. Another example stems from the social sciences, where the general behavior of social segregation is represented by the Schelling model. Naturally, the degree of accuracy and reliability of these simulations depends on several variables, such as their representational capacity, degree of robustness, computational accuracy, and the like.
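The planetary case gives a sense of what computing a simulation model amounts to in practice. The following Python sketch is a minimal illustration under stated assumptions, not a model taken from the literature discussed here: it uses arbitrary units with a gravitational parameter `GM` of 1, a single body on a circular orbit, and a semi-implicit Euler scheme; the names `kepler_step` and `simulate_orbit` are hypothetical.

```python
import math

GM = 1.0  # gravitational parameter in arbitrary units (assumption)


def kepler_step(x, y, vx, vy, dt):
    """One semi-implicit Euler step under an inverse-square central force."""
    r3 = (x * x + y * y) ** 1.5
    # Update the velocity from the Newtonian acceleration first...
    vx -= GM * x / r3 * dt
    vy -= GM * y / r3 * dt
    # ...then move the body with the updated velocity
    x += vx * dt
    y += vy * dt
    return x, y, vx, vy


def simulate_orbit(steps=10_000, dt=1e-3):
    """Return the orbital radius after each step of a circular orbit."""
    x, y, vx, vy = 1.0, 0.0, 0.0, 1.0  # radius 1, circular-orbit speed 1
    radii = []
    for _ in range(steps):
        x, y, vx, vy = kepler_step(x, y, vx, vy, dt)
        radii.append(math.hypot(x, y))
    return radii
```

Whether the numbers returned by `simulate_orbit` say anything about an actual planet depends on the values chosen for the initial conditions and parameters, not on the computation itself, which proceeds in the same way for real and for invented values.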

On the opposite end there are non-empirical target systems, such as those of mathematical nature. For instance, in topology one could be interested in simulating a Clifford torus, a Hopf fibration, or a Möbius transformation. Of course, the boundary between what is strictly empirical and what is strictly non-empirical is set by the analysis of several factors. In what respect is the Hopf fibration ‘less empirical’ when it describes the topological structure of a quantum mechanical two-level system? Real pairwise linked keyrings could be used to mimic part of the Hopf fibration. ‘More empirical’ and ‘less empirical’, therefore, are concepts tailored not only to the target system, but also to the idealizations and abstractions of the simulation model. Allow me to bring forward another example. The model of segregation, as originally elaborated by Schelling, explicitly omits organized action (e.g., undocumented immigrants leaving due to their status) and economic and social factors (e.g., the poor are segregated from rich neighborhoods) (Schelling 1971, p. 144). To what extent, then, is Schelling’s model a representation of an empirical target system, as claimed earlier, as opposed to a mere mathematical description?
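
The degree of idealization at stake can be seen in a small sketch of a Schelling-style simulation. The version below is a simplified variant written for illustration, not Schelling’s original specification: it assumes a square grid, two groups labelled "A" and "B", an eight-cell neighborhood, and relocation to a randomly chosen empty cell. The grid size, threshold, and all function names are hypothetical.

```python
import random


def make_grid(n, n_a, n_b, rng):
    """Place n_a agents of group A and n_b of group B at random on an n x n grid."""
    cells = ["A"] * n_a + ["B"] * n_b + [None] * (n * n - n_a - n_b)
    rng.shuffle(cells)
    return [cells[i * n:(i + 1) * n] for i in range(n)]


def neighbors(grid, r, c):
    """Occupants of the (up to eight) cells around (r, c); empty cells are skipped."""
    n = len(grid)
    found = []
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            rr, cc = r + dr, c + dc
            if (dr, dc) != (0, 0) and 0 <= rr < n and 0 <= cc < n:
                if grid[rr][cc] is not None:
                    found.append(grid[rr][cc])
    return found


def is_unhappy(grid, r, c, threshold):
    """An agent is unhappy if the share of like neighbors falls below the threshold."""
    near = neighbors(grid, r, c)
    if not near:
        return False
    same = sum(1 for agent in near if agent == grid[r][c])
    return same / len(near) < threshold


def step(grid, threshold, rng):
    """Move each agent that was unhappy at the start of the sweep to a random empty cell."""
    n = len(grid)
    cells = [(r, c) for r in range(n) for c in range(n)]
    unhappy = [(r, c) for r, c in cells
               if grid[r][c] is not None and is_unhappy(grid, r, c, threshold)]
    empty = [(r, c) for r, c in cells if grid[r][c] is None]
    rng.shuffle(unhappy)
    for r, c in unhappy:
        if not empty:
            break
        i = rng.randrange(len(empty))
        er, ec = empty[i]
        grid[er][ec], grid[r][c] = grid[r][c], None
        empty[i] = (r, c)  # the vacated cell becomes available
    return len(unhappy)


rng = random.Random(0)
grid = make_grid(10, 40, 40, rng)
for _ in range(20):
    step(grid, threshold=0.5, rng=rng)
```

The only ingredients are a grid, two labels, and a preference threshold; organized action and economic factors do not appear anywhere in the code, which is the omission noted above.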

A similar issue arises with descriptively inadequate target systems, such as the Ptolemaic model of planetary movement. In principle, there is nothing that prevents researchers from implementing such models as computer simulations. The problem is that being descriptively inadequate raises the question of what an ‘adequate’ model is. A Newtonian model seems to be just as descriptively inadequate as the Ptolemaic one, in that neither literally applies to planetary movement. Another example of a descriptively inadequate target system is a simulation that implements Lotka-Volterra models with infinite populations (represented in the computer simulation by very large numbers).
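
The Lotka-Volterra case can be sketched in a few lines. The example below is an illustration under stated assumptions rather than any published implementation: it integrates the standard two-species equations with a fourth-order Runge-Kutta step, treats the populations as real-valued quantities of the order of a million, and uses parameter values invented for the purpose. The helper `invariant` computes the quantity that the continuous equations conserve, which serves as a check on the computation and not on any biological claim.

```python
import math

# Hypothetical parameters, chosen only so that populations are very large numbers.
ALPHA, BETA = 1.0, 1e-6    # prey growth rate, predation rate
DELTA, GAMMA = 1e-6, 1.5   # predator growth per prey, predator death rate


def derivatives(x, y):
    """Right-hand side of the Lotka-Volterra equations (x: prey, y: predators)."""
    return ALPHA * x - BETA * x * y, DELTA * x * y - GAMMA * y


def rk4_step(x, y, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1x, k1y = derivatives(x, y)
    k2x, k2y = derivatives(x + 0.5 * dt * k1x, y + 0.5 * dt * k1y)
    k3x, k3y = derivatives(x + 0.5 * dt * k2x, y + 0.5 * dt * k2y)
    k4x, k4y = derivatives(x + dt * k3x, y + dt * k3y)
    x += dt * (k1x + 2 * k2x + 2 * k3x + k4x) / 6
    y += dt * (k1y + 2 * k2y + 2 * k3y + k4y) / 6
    return x, y


def invariant(x, y):
    """Quantity conserved by the exact (continuous) Lotka-Volterra dynamics."""
    return DELTA * x - GAMMA * math.log(x) + BETA * y - ALPHA * math.log(y)


x, y = 1.0e6, 5.0e5          # real-valued 'populations', not head counts
v_start = invariant(x, y)
for _ in range(10_000):      # ten time units with dt = 0.001
    x, y = rk4_step(x, y, 0.001)
v_end = invariant(x, y)
print(f"drift of the conserved quantity: {abs(v_end - v_start):.2e}")
```

The populations here are floating-point magnitudes, so the simulation is indifferent to whether any actual population could be infinite or fractional.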

All these examples furnish the idea that computer simulations are ‘artificial worlds of their own.’ Any representational relation with an extra-computational phenomenon is an extra mapping that imposes constraints neither on the computer simulation nor on the assessment of its reliability. If the simulation represents an empirical target system, then its epistemological assessment is of a certain kind. If it does not, then it is of another. But in neither case does the computer simulation cease to be an artificial world, with its own methodology, epistemology, and semantics. This is why I believe that separating the simulation model from its capacity to represent the ‘real-world’, as Trenholme does with the two-stage affair, is the correct way to characterize computer simulations.

So much for target systems; what about computer simulations themselves? Their universe is vast and rapidly growing. This can be easily illustrated by the many ways that one could elaborate a sound taxonomy for computer simulations. For instance, if the taxonomy is based on the kind of problem at hand, then the class of computer simulations for astronomy is different from those used for synthetic biology, which in turn are different from those used for organizational studies, although still similar to certain problems in sociology. The nature of each target system is sometimes best described by different models (e.g., sets of equations, descriptions of phenomenological behavior, etc.). Another way to classify computer simulations is based on the calculating method used. Thus, for simulations in fluid mechanics, acoustics, and the like, Boundary Element Methods are most suitable. Monte Carlo methods are suitable for systems with many coupled degrees of freedom, such as the calculation of risk and oil exploration problems. Further criteria for classification include stochastic and deterministic systems, static and dynamic simulations, continuous and discrete simulations, and local and distributed simulations.

The most typical approach, however, is to focus on the kind of model implemented on the physical computer. The standard literature divides computer simulations into three classes: cellular automata, agent-based simulations, and equation-based simulations (see Winsberg 2015). Let us note that Monte Carlo simulations, multi-scale simulations, complex systems, and other similar computer simulations become a subclass of ‘equation-based simulations’. For instance, Monte Carlo simulations are equation-based simulations whose degrees of freedom make them unsolvable by any means other than random sampling. And multi-scale simulations are also equation-based simulations that implement multiple spatial and temporal scales.
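
The idea of solving an equation-based model by random sampling can be illustrated with a minimal sketch. The example assumes a deliberately simple case, the integral of the sum of squared coordinates over the ten-dimensional unit cube. Its exact value (10/3) is known, so the estimate can be checked; in realistic applications no such closed form is available. The function names, sample size, and seed are hypothetical.

```python
import random


def integrand(point):
    """Sum of squared coordinates; its integral over the unit cube is dim / 3."""
    return sum(x * x for x in point)


def monte_carlo_integral(f, dim, samples, rng):
    """Estimate the integral of f over [0, 1]^dim as the mean of f at random points."""
    total = 0.0
    for _ in range(samples):
        total += f([rng.random() for _ in range(dim)])
    return total / samples  # the unit cube has volume 1


rng = random.Random(42)  # fixed seed so that the run is reproducible
estimate = monte_carlo_integral(integrand, dim=10, samples=50_000, rng=rng)
exact = 10 / 3
print(f"estimate = {estimate:.4f}, exact = {exact:.4f}")
```

The cost of the estimate grows with the number of samples and not with a grid over all ten dimensions, which is why sampling remains feasible where exhaustive methods are not.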

As a result, any attempt to classify computer simulations based on a handful of criteria will fail, as researchers are not only bringing into use new mathematical and computational machinery, but also using computer simulations across domains. I take it that the kind of model implemented is only a first-order criterion for the classification of computer simulations. Additionally, and within each class of computer simulations, there are also a host of methods for computing the simulation model, and a multiplicity of potential target systems tailored to scientific interests, availability of resources (computational costs, human capacity, time-frame, etc.), and expert knowledge.

3.3 Computer Simulations Meet the Laboratory Instrumentaria

The previous sections made an effort to show that computer simulations are methods for computing a simulation model that embody knowledge (i.e., by implementing different kinds of models) and that are conceived with a specific purpose in mind (i.e., representing different target systems). One could also make the case that researchers have a working knowledge of these simulations (as Baird indicates), and that a diversity of sources influence their design and coding. Let it be noted, however, that neither the epistemological, pragmatic/intentional, nor methodological dimensions appear in analogue and computer simulations in the same way. For instance, while computer simulations embed knowledge via implementing an equation-based model in a suitable programming language, analogue simulations do something similar via their materiality.

The ontological dimension is, perhaps, where the differences between analogue and computer simulations are most visible. Harré’s taxonomy explicitly requires both families of laboratory instrumentaria to bear relations to the world, either by causal laws, as in the case of an instrument, or by belonging to the same ontological class, as is the case of the apparatus. Such a criterion excludes, in principle, computer simulations as part of the laboratory instrumentaria. The reason is straightforward: although computer simulations run on physical computers, the physical states of the latter do not correspond to the physical states of the phenomenon being simulated.Footnote 9

Although not exhaustive, I believe that the discussion presented here helps to clarify the distinction between analogue and computer simulation. In particular, it facilitates the identification of analogue simulations as part of the laboratory instrumentaria, whereas computer simulations are related to computational methods for solving an algorithmic structure (i.e., the simulation model). Before showing in what sense this distinction is at the heart of contemporary discussions on the role of computer simulations in scientific practice, we need to answer the question of whether analogue simulations could be regarded as methods for computing some kind of model. In other words, we are now asking the following question: Could an analogue simulation be a computer simulation? The question, let it be said, is not about so-called analogue computers, such as the example cited earlier of adding two numbers by adding liquids. Such simulations, as I have shown, are still analogue, and have nothing in common with the notion of ‘digital.’ The question above takes seriously the possibility that analogue simulations actually compute a simulation model. I argue that there are a few historical cases where an analogue simulation qualifies, under the present conditions, as a computer simulation. However, these cases represent no danger to the main argument of this article, as they are only interesting for historical purposes. Having said that, allow me to illustrate this issue with an example.

In 1890, the U.S. was ready to conduct the first census with large-scale information processing machines. Herman Hollerith, a remarkable young engineer, designed, produced, and commercialized the first mechanical system for census data processing. The Hollerith machine, as it became known, based its information processing and data storage on punched cards that could be easily read by the machine. The first recorded use of the Hollerith machine for scientific purposes was by Leslie J. Comrie, an astronomer and pioneer in the application of Hollerith’s punched-card machines to astronomical calculations and the production of computed tables (Comrie 1932). As early as 1928, Comrie computed the summation of harmonic terms for calculating the motion of the Moon from 1935 to 2000.

Now, to the extent that the Hollerith machine implements and solves a model (via punched cards) for calculating the motion of the Moon, it qualifies as a simulation. The question is, what class of simulation are we talking about? Following Pylyshyn and Trenholme, the Hollerith machine does not qualify as analogue since it is not causally isomorphic to a target system. Nor does it qualify as digital in the sense we ascribe to modern computers. It follows that, from a purely ontological viewpoint, it is not possible to characterize the use of the Hollerith machine as either analogue or digital. These considerations give us an inkling of the limits of characterizing simulations on purely ontological terms. Alternatively, by following my distinction between laboratory instrumentaria and computational methods, the Hollerith machine scores better as a computer simulation. The reason is that it computes and predicts by means of a simulation model in a similar fashion to the modern computer. Admittedly, punched cards only resemble an algorithm from an epistemic viewpoint, that is, in the sense that there is a set of step-by-step instructions for the machine. Likewise, the machine only bears a similarity to modern computers in its capacity to interpret and execute the given set of instructions coded in the punched cards. For all practical purposes, however, the Hollerith machine, as used by Comrie, implements and solves a model of the motion of the Moon in a similar fashion to most modern computers. I see no objection, therefore, to asserting that this particular use of the Hollerith machine qualifies as a computer simulation, however counterintuitive this sounds.
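
What ‘summation of harmonic terms’ amounts to as a set of step-by-step instructions can be sketched as follows. This is an illustration only and makes no claim about Comrie’s actual procedure: the amplitudes, periods, and phases below are placeholder numbers, not values from Brown’s tables, and the function names are hypothetical. The sketch assumes each term has the form a·sin(2πt/p + φ) and tabulates their sum at regular intervals.

```python
import math

# Placeholder (amplitude, period, phase) triples; NOT taken from Brown's tables.
TERMS = [(6.0, 27.5, 0.0), (1.0, 32.0, 1.0), (0.5, 14.0, 2.0)]


def harmonic_sum(t, terms):
    """Sum of sinusoidal terms a * sin(2*pi*t/p + phase) evaluated at time t."""
    return sum(a * math.sin(2 * math.pi * t / p + phase) for a, p, phase in terms)


def tabulate(t0, t1, step, terms):
    """Build a table of (time, value) rows from t0 to t1 inclusive."""
    count = int(round((t1 - t0) / step))
    return [(t0 + k * step, harmonic_sum(t0 + k * step, terms))
            for k in range(count + 1)]


table = tabulate(0.0, 10.0, 1.0, TERMS)
for t, value in table[:3]:
    print(f"t = {t:4.1f}  value = {value:7.3f}")
```

Each row is obtained by the same fixed sequence of look-ups, multiplications, and additions, and it is in this sense that the procedure resembles an algorithm regardless of the device that executes it.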

As I mentioned before, the Comrie case is presented only for historical purposes. It is illusory to think of modern computer simulations as implementing punched cards, or to think of the architecture of the computer as anything other than silicon-based circuits on a standardized circuit board (or quantum and biological computers). It is with these discussions in mind that I now turn to my last concern, that is, to evaluate how the analogue-digital distinction influences current philosophical discussions on laboratory experimentation and computer simulations.

4 The Importance of the Analogue-Digital Distinction in the Literature on Computer Simulations

The previous discussion sought to make explicit the distinction between analogue simulations and computer simulations. It is now time to see how such a distinction is at the basis of contemporary discussions on laboratory experimentation and computer simulations.

For a long time, philosophers have shown concern over the epistemological and methodological credentials of laboratory experimentation and computer simulations. Questions like ‘to what extent are they reliable?’ and ‘in what respects does the ontology of simulations affect our assessment of their results?’ are at the heart of these concerns. I submit that the analogue-digital distinction, as discussed here, underlies the answers given to these questions. Moreover, I believe that the distinction underpins the diverse philosophical standpoints found in contemporary literature.

In Durán (2013a), I argued that philosophical comparisons between computer simulations and scientific experimentation share a common rationale, namely, that ontological commitments determine the epistemological evaluation of experiments as well as of computer simulations. I then identified three viewpointsFootnote 10:

(a) computer simulations and experiments are causally similar; hence, they are epistemically on par. For instance Wendy Parker (2009);

(b) experiments and computer simulations are materially dissimilar: whereas the latter is abstract in nature, the former shares causal relations with the phenomenon under study. Hence, they are epistemically different. For instance Francesco Guala (2002), Ronald Giere (2009), and Mary Morgan (2003, 2005);

(c) computer simulations and experiments are ontologically similar only because they are both model-shaped; hence, they are epistemically on par. For instance Margaret Morrison (2009) and Eric Winsberg (2009).

To see how the analogue-digital distinction underpins these discussions, take first viewpoint (b). Advocates of this viewpoint accept a purely ontological distinction between experiments and computer simulations. In fact, whenever experiments are analogue simulations (in the sense given by Harré’s taxonomy) and computer simulations are digital simulations (in the sense given by Trenholme’s symbolic simulation), their epistemological assessment diverges. One can then show that this viewpoint presupposes that experiments belong to the laboratory instrumentaria, like analogue simulations, whereas computer simulations are methods for computing a simulation model, like digital simulations.

Moreover, to see how the analogue-digital distinction works to unmask misinterpretations of the nature of experiments and computer simulations, take viewpoint (a). There, Parker conflates causally related processes with symbolic simulations. In Trenholme’s parlance, Parker merges the representation of an extra-computational phenomenon with the symbolic process. In Durán (2013a), I contested her viewpoint by arguing that she misinterprets the nature of computer simulations in particular, and of computer software in general. In other words, I made use of the analogue-digital distinction to show in what respects her viewpoint is misleading.

Let us note that viewpoints (a) and (b) are only interested in marking an ontological and an epistemological distinction. As I have argued earlier, in order to fully account for laboratory experimentation and computer simulations it is necessary to also include the pragmatic/intentional and methodological dimensions.

Viewpoint (c), on the other hand, includes the methodological dimension to account for the epistemology of computer simulations and laboratory experimentation. In fact, Morrison’s ontological and epistemological symmetry rests first on acknowledging the analogue-digital distinction, and then on arguing that in some cases it can be overcome by adding a methodological analysis. It comes as no surprise, then, that viewpoint (c) is more successful in accounting for today’s notion of laboratory experiments and computer simulations and, therefore, in assessing their epistemological power. I believe, however, that an even more successful account also needs to add the pragmatic/intentional dimension as discussed in this article.

Viewpoint (c) also brings up a concern that I have not yet addressed in this article, namely, that modern practice sometimes merges the analogue and digital dimensions. This is especially true of complex systems, where computer simulations are at the heart of experimentation, and vice versa. A good example of this is the Large Hadron Collider, where the analogue and the digital work together in order to render reliable data. For these cases, any criterion for a distinction might seem inappropriate and otiose. Admittedly, more needs to be said on this point, especially for cases of complex scientific practice where the distinction seems to have ceased being useful.

It is my belief, however, that we still have good reasons for engaging in studies such as the one carried out here. There are at least two motivations. On the one hand, there still are ‘pure’ laboratory experiments and ‘pure’ computer simulations that benefit from the analogue-digital distinction for their epistemological assessment. As shown, many of the authors discussed here depend on such a distinction in order to say something meaningful about the epistemic power of computer simulation. On the other hand, we now have a point of departure for building a more complex account of experimentation and computer simulation. Having said that, the next natural step is to integrate the analogue with the digital dimension.

Notes

1. Andreas Kaminski pointed out that the notion of abstraction is present in laboratory practice as well as in scientific modeling and theorizing. In this respect, it should not be understood that laboratory practice excludes instances of abstraction, but rather that they are more material (in the straightforward sense of manipulating material products) than computer simulations. I will discuss these ideas in more detail in Sect. 3.

2. Let us note that it is not enough to distinguish analogue simulations from digital simulations by saying that the latter, and not the former, are models implemented on the computer. Although correct in itself, this distinction does not provide any useful insight into the characteristics of analogue simulations nor reasons for distinguishing them from computer simulations. Grasping this insight is essential for understanding the epistemological, methodological, and pragmatic value of each kind of simulation.

3. I am significantly simplifying Goodman’s ideas on analogue and digital. A further distinction is that differentiated systems could be non-dense, and therefore analogue and not digital. For examples on these cases, see Lewis (1971).

4. Pylyshyn is neither interested in belaboring the notions of analogue and computational process, nor in asserting grounds for a distinction. Rather, he is interested in showing that concrete features of some systems (e.g., biological, technological, etc.) are more appropriately described at the symbolic level, whereas other features are best served by the vocabulary of physics.

5. The idea of ‘parallel causal-structures isomorphic to the phenomenon’ is rather difficult to pin down. For a closer look, please refer to Trenholme (1994, p. 118). I take it as a way to describe two systems sharing the same causal relations. I base my interpretation on the author’s comment in the appendix: “The simulated system causally affects the simulating system through sensory input thereby initiating a simulation run whose causal structure parallels that of the run being undergone by the simulated system” (Ibid., 128). Also, the introduction of ‘isomorphism’ as the relation of representation can be quite problematic. On this last point, see, for instance, Suárez (2003).

6. Unfortunately, Trenholme does not give more details on the notion of ‘intentional concepts.’ Now, given that this term belongs to the terminological canon of cognitive sciences, and given that Trenholme is following Pylyshyn in these respects, it seems appropriate to suggest that a definition could be found in Pylyshyn’s work. In this respect, Pylyshyn talks about several concepts that could be related, such as intentional terms (Pylyshyn 1989, p. 5), intentional explanation (Ibid., 212), intentional objects (Ibid., 262), and intentional descriptions (Ibid., 20).

7. Trenholme uses the notions of symbolic process and symbolic computation interchangeably (Trenholme 1994, p. 118).

8. Let us note that these working conceptualizations mirror many of the definitions already found in the specialized literature (for instance, Winsberg 2015). Here, I am only interested in the analogue-digital distinction as a means for grounding philosophical studies on computer simulations and laboratory experimentation.

9.

10. In that article, I urged a change in the evaluation of the epistemological and methodological assessment of computer simulations. For my position on the issue, see Durán (2013b).

References

Baird, Davis. 2004. Thing Knowledge: A Philosophy of Scientific Instruments. Berkeley: University of California Press.

Boukharta, Lars, Hugo Gutiérrez-de-Terán, and Johan Åqvist. 2014. Computational Prediction of Alanine Scanning and Ligand Binding Energetics in G-Protein Coupled Receptors. PLOS Computational Biology 10 (4): 6146–6156.

Comrie, Leslie John. 1932. The Application of the Hollerith Tabulating Machine to Brown’s Tables of the Moon. Monthly Notices of the Royal Astronomical Society 92 (7): 694–707.

Durán, Juan M. 2013a. The Use of the ‘Materiality Argument’ in the Literature on Computer Simulations. In Computer Simulations and the Changing Face of Scientific Experimentation, ed. Juan M. Durán and Eckhart Arnold, 76–98. Newcastle: Cambridge Scholars Publishing.

Durán, Juan M. 2013b. Explaining Simulated Phenomena: A Defense of the Epistemic Power of Computer Simulations. Dissertation, Universität Stuttgart. http://elib.uni-stuttgart.de/opus/volltexte/2014/9265/.

Giere, Ronald N. 2009. Is Computer Simulation Changing the Face of Experimentation? Philosophical Studies 143 (1): 59–62.

Goodman, Nelson. 1968. Languages of Art. An Approach to a Theory of Symbols. Indianapolis: The Bobbs-Merrill Company.

Guala, Francesco. 2002. Models, Simulations, and Experiments. In Model-Based Reasoning: Science, Technology, Values, ed. Lorenzo Magnani and Nancy J. Nersessian, 59–74. New York: Kluwer.

Harré, Rom. 2003. The Materiality of Instruments in a Metaphysics for Experiments. In The Philosophy of Scientific Experimentation, ed. Hans Radder, 19–38. Pittsburgh: University of Pittsburgh Press.

Kroes, Peter. 2003. Physics, Experiments, and the Concept of Nature. In The Philosophy of Scientific Experimentation, ed. Hans Radder, 68–86. Pittsburgh: University of Pittsburgh Press.

Lewis, David. 1971. Analog and Digital. Noûs 5 (3): 321–327.

Morgan, Mary S. 2003. Experiments without Material Intervention. In The Philosophy of Scientific Experimentation, ed. Hans Radder, 216–235. Pittsburgh: University of Pittsburgh Press.

Morgan, Mary S. 2005. Experiments versus Models: New Phenomena, Inference and Surprise. Journal of Economic Methodology 12 (2): 317–329.

Morrison, Margaret. 2009. Models, Measurement and Computer Simulation: The Changing Face of Experimentation. Philosophical Studies 143 (1): 33–57.

Parker, Wendy S. 2009. Does Matter Really Matter? Computer Simulations, Experiments, and Materiality. Synthese 169 (3): 483–496.

Pylyshyn, Zenon W. 1989. Computation and Cognition: Toward a Foundation for Cognitive Science. Cambridge, MA: MIT Press.

Schelling, Thomas C. 1971. Dynamic Models of Segregation. The Journal of Mathematical Sociology 1 (2): 143–186.

Suárez, Mauricio. 2003. Scientific Representation: Against Similarity and Isomorphism. International Studies in the Philosophy of Science 17 (3): 225–244.

Trenholme, Russell. 1994. Analog Simulation. Philosophy of Science 61 (1): 115–131.

Winsberg, Eric. 2009. A Tale of Two Methods. Synthese 169 (3): 575–592.

Winsberg, Eric. 2015. Computer Simulations in Science. In Stanford Encyclopedia of Philosophy (Summer 2015 Edition), ed. Edward N. Zalta. plato.stanford.edu/archives/sum2015/entries/simulations-science/. Cited June 2016.

Acknowledgments

Thanks go to Andreas Kaminski for comments on a previous version of the article.

© 2017 Springer International Publishing AG

Durán, J.M. (2017). Varieties of Simulations: From the Analogue to the Digital. In: Resch, M., Kaminski, A., Gehring, P. (eds) The Science and Art of Simulation I. Springer, Cham. https://doi.org/10.1007/978-3-319-55762-5_12

DOI: https://doi.org/10.1007/978-3-319-55762-5_12

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-55761-8

Online ISBN: 978-3-319-55762-5
