Abstract

The ear is a remarkable detector. It is both highly sensitive and selective and operates over a large dynamic range spanning more than 12 orders of magnitude of energy. Perhaps surprisingly, not only does it respond to sound but emits it as well. These sounds, known as otoacoustic emissions (OAEs), provide a means to probe the fundamental biophysics underlying transduction and amplification in the ear. This chapter outlines the theoretical considerations describing the underlying biomechanics of OAE generation, highlights the various uses of OAEs (both scientific and clinical), including comparative approaches, and motivates open questions.

10.1 Introduction

10.1.1 Motivation: Remote Sensing the Cochlea

Despite significant technological advances in intracochlear measurements, many key facets (e.g., micromechanics, relative tuning throughout the organ of Corti) remain poorly characterized. This is in part due to surgical methods that can affect the fragile cochlea. A method to circumvent this limitation is via “remote sensing,” observing the behavior of the cochlea in its normal physiological state without adversely affecting it. One approach is to use the fact that the ear emits sound, known as otoacoustic emissions (OAEs). For the most part, only healthy ears emit sound, which has led to the development of OAEs as a tool for hearing screening that has revolutionized pediatric audiology (Probst et al. 1991; Janssen and Müller 2008).

OAEs can be classified into two basic categories: spontaneous (SOAE) and evoked (eOAE). SOAEs typically appear as an idiosyncratic array of spectral peaks, unique to a given ear and relatively stable (e.g., Zurek 1981). eOAEs arise in response to an external stimulus. Whereas eOAEs are common in most ears (e.g., mammals, reptiles, birds, amphibians, insects), SOAEs are less prevalent. For example, prevalence among humans of different sexes and ethnicities can vary substantially (e.g., Talmadge et al. 1993; Whitehead et al. 1993; Kuroda 2007). Even within an individual, there can be differences with age and laterality. Implied throughout the chapter is the tacit assumption that OAEs are collectively generated via active amplification by the sensory hair cells in the inner ear, as evidenced by close relationships between hair cell physiology and OAEs.

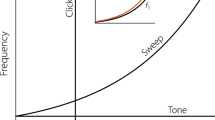

10.1.2 A Starting Heuristic

The two primary functions of the inner ear are detection and spectral decomposition of sound (i.e., frequency selectivity or tuning). Evidence indicates that the ear employs energy-generating processes to facilitate these functions (i.e., the ear is active), and OAE generation is presumably tied to these processes. To model them, myriad theoretical cochlear frameworks have at their heart the notion of driven oscillators. To frame this chapter, a single uncoupled, second-order, nonautonomous (i.e., driven) oscillator (e.g., a mass on a spring) is introduced here as a heuristic (French 1971). In its simplest form, this system is described by the linear differential equation

\[ m\ddot{x} + b\dot{x} + kx = F(t) \qquad (10.1) \]

Here x is the relevant physical dimension (e.g., displacement; diacritical dots indicate time derivatives), m is the effective mass, b is the damping, k is the stiffness, and F is an external driving force. Provided damping is not dominant (i.e., the system is underdamped), this system exhibits oscillatory behavior, with a peak response near the resonance frequency \( \omega_0 = \sqrt{k/m} \) (i.e., it is tuned).
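To make the notion of tuning concrete, the following minimal Python sketch evaluates the textbook steady-state amplitude of the linear oscillator \( m\ddot{x} + b\dot{x} + kx = F_0\cos\omega t \). The parameter values and the brute-force grid search are illustrative assumptions, not values from this chapter. The sketch shows that the response peaks slightly below \( \omega_0 = \sqrt{k/m} \), at \( \sqrt{k/m - b^2/2m^2} \), and that the gain at \( \omega_0 \) relative to the static response equals \( Q = \sqrt{mk}/b \).

```python
import math

def steady_state_amplitude(omega, m, b, k, F0=1.0):
    """Steady-state displacement amplitude of m*x'' + b*x' + k*x = F0*cos(omega*t)."""
    return F0 / math.sqrt((k - m * omega**2) ** 2 + (b * omega) ** 2)

def peak_frequency(m, b, k, omega_max=3.0, n=30000):
    """Locate the amplitude maximum by brute-force search on a uniform frequency grid."""
    omegas = [omega_max * i / n for i in range(1, n + 1)]
    return max(omegas, key=lambda w: steady_state_amplitude(w, m, b, k))

# Illustrative parameter values (hypothetical, not fitted to any ear)
m, b, k = 1.0, 0.1, 1.0
omega_0 = math.sqrt(k / m)                 # undamped resonance frequency
omega_peak = peak_frequency(m, b, k)       # slightly below omega_0 because of damping
# Gain at omega_0 relative to the static (omega -> 0) response F0/k; equals sqrt(m*k)/b
gain_at_resonance = steady_state_amplitude(omega_0, m, b, k) * k
print(f"omega_0={omega_0:.4f}  omega_peak={omega_peak:.4f}  gain={gain_at_resonance:.1f}")
```

Reducing b in this sketch raises Q and narrows the peak; this is the lever that the feedback loop discussed in the text acts upon.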

Many features in the cochlea can play the role of the various components described by Eq. 10.1. For example, “mass” can stem from the basilar (BMs) and/or tectorial (TMs) membranes, the stereovillar bundle, and/or entrained fluid. The damping term can arise in a number of different ways, such as viscous forces due to fluid coupling and/or friction internal to hair bundle linkage/channel dynamics. The drive could represent a combination of incident sound, (Brownian) noise inherent to the system, and/or some form of internal drive such as feedback.

One way to reduce the detrimental effect of damping on frequency selectivity is by adding a feedback loop (Fig. 10.1) that can act as an amplifier to inject energy into the response. With the appropriate phase of the feedback force, the amplitude of the oscillation can be boosted by the feedback and the frequency selectivity of the oscillator can be sharpened. Besides requiring a power source (e.g., metabolic energy), feedback has the drawback that too much amplification can render the oscillator unstable and cause spontaneous oscillations. In fact, SOAEs are commonly believed to be related to such considerations and thus are pointed to as primary evidence of active feedback in the cochlea (e.g., connection to audiogram fine structure; Zwicker and Schloth 1984; Long and Tubis 1988). To ensure stability without resorting to collective “systems”-level descriptions (see Sect. 10.2.3), Eq. 10.1 is commonly modified to be nonlinear (see Fig. 10.1, Sects. 10.2.2.1 and 10.3.2.2).

Fig. 10.1 Basic schematic of a vibration detector with active feedback. The mass (m) is set into motion by an external force [F(t); referred to as the stimulus]. The mass is suspended from a spring with a spring constant (k). The motion of the mass is impeded by viscous forces of the surrounding fluid with a damping constant (\( r_{\text{fluid}} \)). A detector senses the position, leading to the displacement response [x(t)]. The motion in turn is fed back to the mass via a feedback force, which contains two terms: a negative component (\( -r_{\text{act}}\dot{x} \), which compensates for viscous losses due to the fluid) and a nonlinear term (\( r_{\text{nl}}x^{2}\dot{x} \); saturation of the amplifier). It can be shown that this form of feedback can enhance the frequency selectivity of the detector. If the feedback is too strong (\( r = r_{\text{act}} - r_{\text{fluid}} > 0 \)), the mass will oscillate spontaneously but is stabilized by the nonlinearity. Connecting back to the more general Eq. 10.2, the equation of motion would be \( m\ddot{x} - \left[ r_{\text{act}} - r_{\text{fluid}} - r_{\text{nl}}x^{2} \right]\dot{x} + kx = F(t) \). Adapted from Bialek and Wit (1984)
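The trade-off between sharper tuning and instability can be illustrated with the linear part of the detector alone. The minimal Python sketch below assumes the net damping is \( b_{\text{eff}} = r_{\text{fluid}} - r_{\text{act}} \) (the nonlinear term is dropped) and uses hypothetical parameter values. It computes \( Q = \sqrt{mk}/b_{\text{eff}} \) and checks the sign of the real part of the poles of \( ms^2 + b_{\text{eff}}s + k \): as \( r_{\text{act}} \) approaches \( r_{\text{fluid}} \), Q grows, and once \( r_{\text{act}} > r_{\text{fluid}} \) (i.e., \( r > 0 \)) the linear oscillator becomes unstable.

```python
import cmath
import math

def oscillator_poles(m, b_eff, k):
    """Roots s of m*s**2 + b_eff*s + k = 0, the characteristic equation of m*x'' + b_eff*x' + k*x = 0."""
    root = cmath.sqrt(b_eff**2 - 4 * m * k)
    return ((-b_eff + root) / (2 * m), (-b_eff - root) / (2 * m))

def quality_factor(m, b_eff, k):
    """Q = sqrt(m*k)/b_eff for an underdamped, stable oscillator; larger Q means sharper tuning."""
    return math.sqrt(m * k) / b_eff

# Hypothetical values: the feedback term r_act cancels part of the fluid damping r_fluid
m, k, r_fluid = 1.0, 1.0, 0.2
results = []
for r_act in (0.0, 0.1, 0.19, 0.25):
    b_eff = r_fluid - r_act                                  # net (effective) damping
    stable = all(p.real < 0 for p in oscillator_poles(m, b_eff, k))
    q = quality_factor(m, b_eff, k) if stable else None      # Q is undefined once unstable
    results.append((r_act, q, stable))
    print(f"r_act={r_act:.2f}  b_eff={b_eff:+.2f}  Q={q}  stable={stable}")
```

In the full model of Fig. 10.1, the nonlinear term \( r_{\text{nl}}x^{2}\dot{x} \) is what keeps the unstable case bounded; this linear sketch only identifies where the instability begins.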

Evidence suggests that hair cells are a primary source of this feedback; they are known to provide a mechanical force via the cell body (“somatic electromotility” in mammalian outer hair cells; Dallos 2008) and/or the stereovillar bundle (“bundle motility”; Hudspeth 2008; see also Corey, Ó Maoiléidigh, and Ashmore, Chap. 4). Although the details of the molecular components producing such feedback are still a matter of debate, many lines of evidence (including the presence of OAEs) argue for an active frequency-selective detector at the heart of cochlear mechanics. This chapter uses Eq. 10.1 (suitably modified) as a heuristic for OAE generation and the associated amplification process.

10.2 Modeling Otoacoustic Emission Generation

10.2.1 Overview

Models are indispensable in connecting our understanding of hair cell morphology and functionality to the generation of OAEs. Since the discovery of OAEs by David Kemp (1978), there has been a stimulating history of theoretical OAE modeling. Models come in a wide variety of forms, such as electric circuits (e.g., Zwicker 1986a, b), transmission lines (e.g., Zweig and Shera 1995; Talmadge et al. 1998), standing-wave cavities analogous to a laser (e.g., Shera 2003), single active/nonlinear “limit-cycle” oscillators (e.g., Wit 1986; Talmadge et al. 1991), and systems of coupled oscillators (e.g., Murphy et al. 1995b; Vilfan and Duke 2008). Note that the classifications made above are not necessarily mutually exclusive, and certain types such as “state-space” models (e.g., Elliott et al. 2007; Ku et al. 2009) could fit into multiple categories (e.g., transmission-line cochlear models are essentially coupled oscillators).

An interesting facet when looking across cochlear models is the range of biomechanical assumptions made, such as the form of coupling of outer hair cell (OHC)-related forces and the role of morphological irregularity (or “roughness”; see Sect. 10.2.3.3). Several open questions remain to be resolved.

- How do hair cells work together (and with accessory structures) to generate OAEs? How are such processes tied to forward auditory transduction?
- How does nonlinear emission growth link to cochlear compression and subsequent perceptual consequences?
- How critical is the distinction between SOAEs and eOAEs? Do they reveal fundamentally different insights into OAE generation mechanisms (e.g., Shera and Guinan 1999)?
- Both similarities and differences exist when comparing OAEs across species, but how do these meaningfully relate back to morphological differences?

These open questions will help focus the content of this chapter. Here, the discussion is limited to basic biophysical models of OAE generation and the experimental evidence that either supports or refutes these models. Comprehensive reviews of OAEs can be found elsewhere (e.g., Probst et al. 1991; Manley et al. 2008; Avan et al. 2013).

Understanding SOAE generation may be more tractable because there are fewer complicating factors (e.g., how an external stimulus may differentially drive/affect different mechanisms and/or sites). So although the bulk of otoacoustic research focuses on eOAEs, SOAE models will be the initial focal point here. The narrative highlights two (broadly categorized) classifications: single-source (see Sect. 10.2.2) and region (see Sect. 10.2.3) models.

10.2.2 Single-Source Models

Early theoretical studies of SOAE generation considered the underlying mechanism to be a single “source” (e.g., Johannesma 1980; Bialek and Wit 1984; see Fig. 10.1). That is, the complexities of the system were eschewed in favor of identifying to what extent the simplest model could describe the data. Conceptually, single-source models typically take the form of an isolated spatial element (e.g., a particular position along the BM). The foundations of such models are briefly described, and the features of the data that they are (and are not) capable of capturing are examined. Subsequently, similar efforts were also employed for eOAEs and are examined in Sect. 10.3.2.3.

10.2.2.1 Limit-Cycle Oscillators

As mentioned in Sect. 10.1.2, oscillators serve as the fundamental element. To make Eq. 10.1 produce a stable self-sustained oscillation (i.e., a limit cycle, somewhat akin to an SOAE peak), it must be modified to be both “active” (i.e., energy producing) and nonlinear (to ensure stability). Such a limit-cycle model for an SOAE peak was first proposed by Johannesma (1980). As shown in Fig. 10.1, the oscillator may correspond to an active feedback filter that becomes quasi-stable due to excessive feedback. One commonly employed formulation is the van der Pol system (Johannesma 1980):

\[ m\ddot{x} - \mu\left(a - x^{2}\right)\dot{x} + kx = F(t) \qquad (10.2) \]

where the damping parameter µ is sometimes referred to as the “control parameter.” For small displacements (\( x^{2} < a \)), the damping is negative and energy is added to the system. For larger displacements, the damping becomes positive, which stabilizes the motion. Note that some studies have suggested that a second-order oscillator (i.e., Eq. 10.2) is of too low an order to sufficiently capture the essential aspects (e.g., Ó Maoiléidigh et al. 2012; see also Eqs. 10.3 and 10.4).
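The defining property of a limit cycle, that trajectories starting inside and outside it converge to the same oscillation, can be checked numerically. The minimal Python sketch below assumes the unforced van der Pol form \( m\ddot{x} - \mu(a - x^{2})\dot{x} + kx = 0 \) with m = k = 1 and hypothetical values of µ and a, and integrates it with a fixed-step fourth-order Runge-Kutta scheme. For small \( \mu a \), the steady amplitude is expected to be close to \( 2\sqrt{a} \) regardless of the starting point.

```python
import math

def vdp_rhs(x, v, mu, a, m=1.0, k=1.0):
    """Velocity and acceleration for m*x'' - mu*(a - x**2)*x' + k*x = 0 as a first-order system."""
    return v, (mu * (a - x * x) * v - k * x) / m

def simulate_vdp(x0, v0, mu, a, dt=0.01, t_end=200.0):
    """Integrate the unforced oscillator with fixed-step 4th-order Runge-Kutta; return x samples."""
    x, v = x0, v0
    xs = []
    for _ in range(int(t_end / dt)):
        k1x, k1v = vdp_rhs(x, v, mu, a)
        k2x, k2v = vdp_rhs(x + 0.5 * dt * k1x, v + 0.5 * dt * k1v, mu, a)
        k3x, k3v = vdp_rhs(x + 0.5 * dt * k2x, v + 0.5 * dt * k2v, mu, a)
        k4x, k4v = vdp_rhs(x + dt * k3x, v + dt * k3v, mu, a)
        x += dt / 6.0 * (k1x + 2.0 * k2x + 2.0 * k3x + k4x)
        v += dt / 6.0 * (k1v + 2.0 * k2v + 2.0 * k3v + k4v)
        xs.append(x)
    return xs

mu, a = 0.8, 0.25           # hypothetical values; mu * a = 0.2 keeps the cycle nearly sinusoidal
amplitudes = []
for x0 in (0.01, 3.0):      # start far inside and far outside the limit cycle
    xs = simulate_vdp(x0, 0.0, mu, a)
    steady = xs[len(xs) // 2:]          # discard the first half as transient
    amplitudes.append(max(abs(x) for x in steady))
print(amplitudes)           # both expected near 2*sqrt(a) = 1.0
```

The amplitude is set by a through the rescaling \( x = \sqrt{a}\,y \), which turns the equation into the standard van der Pol oscillator with control parameter \( \mu a \).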

10.2.2.2 What Single-Source Models Do and Do Not Do

To first order, a large range of SOAE-related features are well captured by a single van der Pol oscillator. If the control parameter μ is relatively small, the oscillator exhibits a nearly sinusoidal response. The sinusoidal foundation of SOAEs can be confirmed by producing an amplitude distribution of a filtered SOAE peak. This exhibits two characteristic maxima (Bialek and Wit 1984; Talmadge et al. 1991) or a ring when considering the analytic signal (Shera 2003), closely corresponding to the van der Pol model. SOAEs can be suppressed by external tones, and the onset of and release from suppression show relaxation times on the order of 10–20 ms (Zwicker and Schloth 1984), again consistent with a van der Pol oscillator with a relatively small control parameter (μ). If the oscillator is assumed to interact with internal noise (e.g., thermal noise in the cochlea), it produces a sinusoidal signal with slow amplitude fluctuation and diffusing phase. Consistent with this model, the amplitude fluctuation spectrum of SOAEs displays slow fluctuations (Bialek and Wit 1984; van Dijk and Wit 1990a), and the peaks in the power spectrum of an SOAE have a Lorentzian shape (van Dijk and Wit 1990a; Talmadge et al. 1993; van Dijk et al. 2011). Finally, SOAEs phase lock to an external tone, as predicted by the van der Pol model. For weak tones, the phase locking is intermittent, which again is consistent with the assumption of weak internal noise interacting with the oscillator (van Dijk and Wit 1990b).
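Why a sinusoid yields an amplitude distribution with two maxima can be seen with a short histogram. The minimal Python sketch below skips the band-pass filtering that would be applied to a recorded SOAE peak and simply compares an ideal sinusoid, sampled uniformly in phase, with Gaussian noise (a hypothetical contrast case). A sinusoid spends most of its time near its extremes, so its outermost bins are the fullest; noise instead piles up near zero.

```python
import math
import random

def amplitude_histogram(samples, n_bins=10):
    """Counts of samples in n_bins equal-width bins spanning [min, max]."""
    lo, hi = min(samples), max(samples)
    width = (hi - lo) / n_bins
    counts = [0] * n_bins
    for s in samples:
        counts[min(int((s - lo) / width), n_bins - 1)] += 1
    return counts

n = 10_000
# Ideal sinusoid sampled uniformly in phase (stand-in for a filtered SOAE peak)
sinusoid = [math.sin(2 * math.pi * i / n) for i in range(n)]
# Gaussian noise for comparison, with a fixed seed for reproducibility
rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(n)]

sin_counts = amplitude_histogram(sinusoid)
noise_counts = amplitude_histogram(noise)
print(sin_counts)    # outermost bins fullest: two maxima near +/- the amplitude
print(noise_counts)  # central bins fullest: a single maximum
```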

Despite these successes, OAE data indicate that the underlying generation mechanisms are more complex. First, a single van der Pol oscillator is only capable of generating energy predominantly at a single frequency, not at the idiosyncratic array of frequencies commonly observed in SOAE spectra (see Fig. 10.2). Second, the relationship between amplitude fluctuations and frequency fluctuations is not consistent with the single-oscillator model (van Dijk and Wit 1990a), with the probable exception of strong SOAEs (Bialek and Wit 1984). This suggests that interaction between SOAE peaks complicates the behavior of individual SOAE peaks. Third, detailed analysis of SOAE peak dynamics indicates there are interactions between different SOAE peaks (van Dijk and Wit 1998). The “relaxation dynamics” of multiple SOAEs requires interactions of several coupled van der Pol oscillators (Murphy et al. 1995a).

Fig. 10.2 Comparison of spontaneous otoacoustic emission (SOAE) activity from three different species. Each spectrum derives from an individual ear with relatively strong SOAE activity. Also shown is a visual comparison of the shape and length of the basilar membrane (BM) as well as the approximate total number of hair cells within a given ear for that species. SPL, sound pressure level. Modified from Bergevin et al. (2015a); graphic from Per Ruppel, University Information, University of Oldenburg, Germany, with permission
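A simple way to see how coupling changes the behavior of individual van der Pol oscillators is frequency locking. The minimal Python sketch below assumes two dimensionless van der Pol oscillators (a = 1) with slightly different natural frequencies and a velocity-difference (dissipative) coupling of strength d; this coupling form and all parameter values are illustrative assumptions, not the formulation of Murphy et al. (1995a). Without coupling, each oscillator keeps its own frequency; with sufficient coupling, both settle onto a common frequency.

```python
import math

def simulate_pair(omega1, omega2, mu, d, dt=0.01, t_end=400.0):
    """Two van der Pol oscillators with velocity-difference coupling of strength d:
        x_i'' - mu*(1 - x_i**2)*x_i' + omega_i**2 * x_i = d*(x_j' - x_i')
    Integrated with fixed-step RK4; returns (times, x1 samples, x2 samples)."""
    def rhs(s):
        x1, v1, x2, v2 = s
        acc1 = mu * (1.0 - x1 * x1) * v1 - omega1**2 * x1 + d * (v2 - v1)
        acc2 = mu * (1.0 - x2 * x2) * v2 - omega2**2 * x2 + d * (v1 - v2)
        return (v1, acc1, v2, acc2)

    def step(s, ds, h):
        return tuple(si + h * dsi for si, dsi in zip(s, ds))

    state = (1.0, 0.0, -0.5, 0.0)          # arbitrary, asymmetric initial condition
    ts, x1s, x2s = [], [], []
    for i in range(int(t_end / dt)):
        k1 = rhs(state)
        k2 = rhs(step(state, k1, 0.5 * dt))
        k3 = rhs(step(state, k2, 0.5 * dt))
        k4 = rhs(step(state, k3, dt))
        incr = tuple((p + 2.0 * q + 2.0 * r + w) / 6.0 for p, q, r, w in zip(k1, k2, k3, k4))
        state = step(state, incr, dt)
        ts.append((i + 1) * dt)
        x1s.append(state[0])
        x2s.append(state[2])
    return ts, x1s, x2s

def mean_angular_frequency(ts, xs):
    """Angular frequency estimated from linearly interpolated upward zero crossings."""
    crossings = []
    for i in range(1, len(xs)):
        if xs[i - 1] < 0.0 <= xs[i]:
            frac = -xs[i - 1] / (xs[i] - xs[i - 1])
            crossings.append(ts[i - 1] + frac * (ts[i] - ts[i - 1]))
    return 2.0 * math.pi * (len(crossings) - 1) / (crossings[-1] - crossings[0])

omega1, omega2, mu = 1.0, 1.05, 0.5        # hypothetical: 5 % detuning
measured = {}
for d in (0.0, 0.25):
    ts, x1s, x2s = simulate_pair(omega1, omega2, mu, d)
    half = len(ts) // 2                     # analyze the second half, after transients
    measured[d] = (mean_angular_frequency(ts[half:], x1s[half:]),
                   mean_angular_frequency(ts[half:], x2s[half:]))
    print(d, measured[d])
```

Locking is only one signature of interaction; the amplitude and phase dynamics of coupled SOAE peaks discussed in the text would require noise and more oscillators than this sketch includes.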

The limitations of single-source models are returned to in Sect. 10.3.2.3 within the context of eOAEs.

10.2.3 Region Models: Otoacoustic Emissions as an Emergent Property

Although single-source models provided a valuable starting point, the next generation of models takes a more global approach that considers the ear as a system of parts. To motivate them, evidence is highlighted here that compellingly points toward the importance of coupling between hair cells. For example, mice rarely exhibit SOAEs. However, when hair cell coupling is changed via a mutation in the TM structure (effectively changing its stiffness), SOAEs are more readily observed (Cheatham et al. 2014). Also, in lizards, spectral characteristics of SOAEs correlate with TM structure (Manley 2001). These examples highlight the role of longitudinal coupling between sensory hair cells in the inner ear and its impact on emission characteristics.

It appears plausible that elements of the single-source model(s) are at work, but it is ultimately more fruitful to model OAEs as a summed response that represents the interaction of multiple generation sources. That is, the complexity of an OAE (e.g., input-output function [I/O] characteristics) is an emergent property due to the distributed nature of the cochlea. The most widely accepted theory posits two distinct generation mechanisms (Kemp 1986; Shera and Guinan 1999), each of which can be manifest as an array of different sources spread throughout the cochlea. That specific framework will be returned to in Sect. 10.3.1.

10.2.3.1 Basic Considerations for Coupled Oscillators

The heuristic of Eq. 10.1 can readily be modified to describe several connected oscillators, which can trade energy back and forth. For a simple one-dimensional (1-D) collection of linearly coupled oscillators, a “normal mode” formulation is commonly employed (French 1971). The basic idea is that there are vibration patterns in which all elements oscillate at the same frequency. All possible “modes” then form a basis, and any possible motion is a superposition of them. So, although the motion can appear complex, the decomposition into simpler components makes the problem analytically tractable. A common example is the nodal patterns that can be observed on a circular membrane (e.g., a drum head).
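As a concrete normal-mode calculation, the minimal Python sketch below assumes a hypothetical 1-D chain of n identical masses, each tied to ground by a spring of stiffness k_ground and to its nearest neighbors by a coupling spring kappa, with both ends fixed. The mode frequencies are the square roots of the eigenvalues of the stiffness matrix divided by the mass, and for this particular chain they can be compared with the closed form \( \omega_j^2 = \left(k_{\text{ground}} + 4\kappa\sin^2\frac{j\pi}{2(n+1)}\right)/m \).

```python
import numpy as np

def chain_mode_frequencies(n, m, k_ground, kappa):
    """Normal-mode angular frequencies of n identical masses, each tied to ground by a spring
    k_ground and to its nearest neighbours by a coupling spring kappa (both ends fixed)."""
    stiffness = (k_ground + 2.0 * kappa) * np.eye(n) - kappa * (np.eye(n, k=1) + np.eye(n, k=-1))
    omega_squared = np.linalg.eigvalsh(stiffness / m)   # symmetric matrix: real eigenvalues, ascending
    return np.sqrt(omega_squared)

n, m, k_ground, kappa = 8, 1.0, 1.0, 0.5                # hypothetical values
numeric = chain_mode_frequencies(n, m, k_ground, kappa)
j = np.arange(1, n + 1)
analytic = np.sqrt((k_ground + 4.0 * kappa * np.sin(j * np.pi / (2 * (n + 1))) ** 2) / m)
print(np.round(numeric, 4))
print(np.allclose(numeric, analytic))
```

Coupling spreads the single resonance \( \sqrt{k_{\text{ground}}/m} \) into a band of n mode frequencies; the shape of mode j is proportional to \( \sin(j\pi i/(n+1)) \) along the chain index i.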

When dealing with active/nonlinear oscillators, however, things become more complicated (see also Sect. 10.3.2.2). As an example, consider the “twin-engine” model (Aranyosi 2006), which comprises just two oscillators (x 1 and x 2) and was proposed in the context of explaining “glides” (i.e., frequency variations in the BM impulse response). The equations of motion are given by

where the subscript c denotes the coupling terms and g is “a nonlinear velocity-dependent term in each resonator, defined as the derivative of a Boltzmann function” (Aranyosi 2006). In this case, not only can the oscillators trade energy back and forth, but because they can also inject it, stability can arise through a form of feedback (e.g., Dallos and Corey 1991; Zweig 1991).

In the context of SOAE modeling, different approaches have been taken, such as standing waves via coherent reflection (Shera 2003), transmission lines (Choi et al. 2008; Epp et al. 2010), and a discretized array of oscillators (Murphy et al. 1995b; Vilfan and Duke 2008; Wit et al. 2012). Ultimately, these formulations are all in fact “coupled oscillator” models, just with different assumptions about the “coupling.” Coupling typically falls into two different categories: “global” arising hydrodynamically (e.g., Zweig 1976; Nobili et al. 1998; Epp et al. 2015) and “nearest neighbor only” (e.g., Vilfan and Duke 2008) via resistive and/or reactive elements (e.g., overlying TM). Going back to Sect. 10.2.2.1, although a single active oscillator may require nonlinearity for stability, an active system does not (e.g., Zweig 1991; see also Sect. 10.3.2). Or, conversely, SOAE models do not necessarily require limit-cycle oscillators per se because a self-sustained oscillation can arise as a consequence of the mechanics of the cochlea as a whole (Shera 2003). Regardless, two common threads arise between all classes of model: waves and randomness.

10.2.3.2 The Role of Waves

In his seminal paper first reporting the existence of OAEs, Kemp (1978) began by stating “Cochlea wave propagation characteristics…,” subsequently following up with a paper entitled Otoacoustic Emissions, Travelling Waves and Cochlear Mechanisms (Kemp 1986). It thus may not be surprising that much of the current understanding of OAE generation revolves around a wave-based framework (implicitly a region-model type). For example, the moniker “cochlear reflectance” has been proposed for a “normalized” measure of eOAEs (Rasetshwane and Neely 2012).

In the context of OAE generation, clarification is needed regarding what precisely is meant by “wave.” A 1-D wave is described by a function f(x, t) (over spatial dimension x) that satisfies the partial differential equation (PDE) known as the wave equation

\[ \frac{\partial^{2} f}{\partial t^{2}} = c^{2}\,\frac{\partial^{2} f}{\partial x^{2}} \qquad (10.5) \]

where the wave speed c is independent of time. From a mechanical point of view, this PDE essentially amounts to the combination of two fundamental laws. For example, in the context of deriving a 1-D transmission-line model of the cochlea (Zweig et al. 1976; Zweig 1991), the wave equation is derived from Newton’s second law (regarding BM displacement) and from the conservation of mass (regarding longitudinal fluid motion). A general solution to this equation has the form \( f(x,t) = g(x - ct) + h(x + ct) \) (d’Alembert’s solution), such that time and space are scaled relative to one another by c. In many instances (but not all), the solutions have a periodic nature [e.g., \( f(x,t) = F\cos(x \pm ct) \) or, more generally, \( f(x,t) = Ae^{i(x \pm ct)} + Be^{i(-x \pm ct)} \) to allow for forward and reverse traveling waves].
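The claim that any profile of the form g(x − ct) or g(x + ct) solves the wave equation \( f_{tt} = c^{2} f_{xx} \) can be checked numerically. The minimal Python sketch below assumes a Gaussian profile and a hypothetical speed C, and estimates the residual \( f_{tt} - c^{2} f_{xx} \) with central finite differences. Forward, backward, and superposed pulses give residuals near zero, whereas a pulse moving at the wrong speed does not.

```python
import math

C = 2.0  # hypothetical wave speed (arbitrary units)

def gaussian(s):
    return math.exp(-s * s)

def forward(x, t):                   # g(x - c t): travels toward +x
    return gaussian(x - C * t)

def forward_plus_backward(x, t):     # g(x - c t) + g(x + c t): d'Alembert superposition
    return gaussian(x - C * t) + gaussian(x + C * t)

def wrong_speed(x, t):               # travels at 1.5 c, so it should NOT satisfy the equation
    return gaussian(x - 1.5 * C * t)

def wave_residual(f, x, t, c=C, h=1e-3):
    """Central-difference estimate of f_tt - c**2 * f_xx at (x, t)."""
    f_tt = (f(x, t + h) - 2.0 * f(x, t) + f(x, t - h)) / h**2
    f_xx = (f(x + h, t) - 2.0 * f(x, t) + f(x - h, t)) / h**2
    return f_tt - c**2 * f_xx

points = [(0.0, 0.0), (0.3, 0.1), (-0.7, 0.25)]
for f in (forward, forward_plus_backward, wrong_speed):
    print(f.__name__, [round(wave_residual(f, x, t), 6) for x, t in points])
```

The small nonzero residuals for the true solutions come from the O(h²) truncation error of the finite differences.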

Why is a wave defined here? If one considers the various dynamically relevant structures of the cochlea (e.g., hair cells, BM, TM) as oscillators, then the presence of a wave indicates some relative phase difference between them. Two consequences immediately arise, the first of which is the role of timing in the interactions and collective dynamics of the various constituents. Relative to Eq. 10.5, Shera and Guinan (2008) provided a useful working definition for “wave” as the relative timing difference between different constituent parts of the cochlea. This is useful to bridge the gap they identified when they proposed that OAE properties “… are determined not by subcellular biophysics but by macromechanical (and emergent) properties of the cochlea … many features of OAEs … are perhaps best understood in this way” (Shera and Guinan 2008, p. 336). The field is arguably now well poised to bridge the microscopic (e.g., molecular motors or individual hair cells) and macroscopic (e.g., the cochlea as a whole or a system of coupled hair cells) descriptions; waves will likely play a key role. Second, the presence (in a 1-D sense) of both a forward and a backward traveling wave allows for the notion of SOAEs to arise via some sort of standing-wave mechanism (e.g., Shera 2003).

10.2.3.3 Stochastics: Role of Noise and Roughness

In a broad class of biophysical problems, the notion that some form of stochastic element plays a crucial role has become increasingly accepted (Bialek 2012), and the cochlea is no exception. Randomness can be considered as arising in two different contexts: dynamic and static. Dynamic randomness refers chiefly to thermal noise. The study of hair cell bundle responses to noise has been revealing, such as the violation of the fluctuation-dissipation theorem (Duke and Jülicher 2008; Dinis et al. 2012). Internal cochlear noise also affects responses close to the threshold (van Dijk and Long 2015) and is responsible for the finite width of SOAE spectral peaks. For OAE modeling, three basic considerations are important.

First are the stochastic forces an individual bundle experiences. Recent work with bullfrog vestibular cells (Kozlov et al. 2012) has suggested that thermal forces cause fractional Brownian motion (i.e., temporal fractal-like correlations exist, indicative of “stochastic processes with memory”). Second, depending on the underlying potential energy configurations available, effects such as stochastic resonance may be at play (Jaramillo and Wiesenfeld 1998). Last, how are the thermal forces distributed across the oscillators? How independent are the stochastic driving forces between two adjacent oscillators?

In addition to dynamic irregularities (cochlear noise), the notion of static randomness, commonly referred to as irregularity or “roughness,” is widely believed to play a crucial biomechanical role in OAE generation (Manley 1983; Zweig and Shera 1995; Mauermann et al. 1999). For example, within the context of a standing-wave model for SOAE generation (Shera 2003), the roughness provides a basis for reflection of waves in the cochlea. Because reflection also occurs at the round window, the conditions for a standing wave in the cochlea may arise. As stated by Ku et al. (2008), “only frequencies with a traveling wave that undergoes an integer round-trip phase change between the middle-ear boundary and the inhomogeneity will become unstable.” Hence, SOAEs occur when the appropriate reflecting boundary condition is set up, depending on the underlying roughness. Because the roughness presumably differs between ears, each ear displays a unique pattern of SOAE peaks or possibly none at all.
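The integer round-trip phase condition quoted above can be turned into a small root-finding exercise. The minimal Python sketch below assumes a hypothetical round-trip phase (in cycles) of \( f\,\tau(f) \), with a power-law phase delay \( \tau(f) = \tau_{\text{ref}}(f/f_{\text{ref}})^{-\alpha} \); the functional form and all numbers are illustrative, not cochlear data. It finds, by bisection, the frequencies in a band at which the phase is an integer number of cycles. It checks only this phase condition and does not model the round-trip amplitude.

```python
import math

def round_trip_phase_cycles(f, tau_ref=0.010, f_ref=1000.0, alpha=0.4):
    """Hypothetical round-trip phase in cycles: f * tau(f), tau(f) = tau_ref * (f/f_ref)**(-alpha)."""
    return f * tau_ref * (f / f_ref) ** (-alpha)

def integer_phase_frequencies(phase, f_lo, f_hi, tol=1e-6):
    """Frequencies in [f_lo, f_hi] where a monotonically increasing phase(f) is an integer (bisection)."""
    found = []
    n = math.ceil(phase(f_lo))
    while n <= phase(f_hi):
        lo, hi = f_lo, f_hi
        while hi - lo > tol:
            mid = 0.5 * (lo + hi)
            if phase(mid) < n:
                lo = mid
            else:
                hi = mid
        found.append(0.5 * (lo + hi))
        n += 1
    return found

candidates = integer_phase_frequencies(round_trip_phase_cycles, 1000.0, 2000.0)
print([round(f, 1) for f in candidates])
```

With this toy delay model the candidate frequencies become more widely spaced as frequency increases; a different (e.g., measured) phase function can be passed in without changing the search.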

Many classes of current OAE models implicitly include some static aspect of irregularity (e.g., Shera 2003; Vilfan and Duke 2008). How to best measure and subsequently quantify roughness is presently unclear. For example, hair cell arrangement may not be the only or even the chief consideration in anatomical roughness. Noisiness in BM stiffness, hair cell power output, or longitudinal TM coupling could also be factors. Previous studies have been stimulating (e.g., Lonsbury-Martin et al. 1988; Martin et al. 1988), but further physiological work is desirable along these lines, such as attempting to directly correlate SOAE patterns to morphology in individual ears (Manley 1983).

10.2.3.4 Comparing Macroscopic Models to Spontaneous Emission Data

In many cases, linear formulations of region models can be handled analytically (e.g., Zweig and Shera 1995), but nonlinear formulations are typically only tractable numerically. By shifting away from relatively simple single-source frameworks, region models have enough degrees of freedom to produce just about any type of behavior. So the question arises of how to compare them most meaningfully with empirical data. Consider, for example, a basic question: What precisely constitutes an SOAE peak? Clearly, not all “peaks” are created equal (Fig. 10.2). Using peaks as a benchmark (e.g., is a peak present or not?), some preexisting region models (e.g., Vilfan and Duke 2008; Wit and van Dijk 2012; Fruth et al. 2014) capture qualitative and quantitative aspects of the data, but they fail to capture other (key) properties, such as peak width. Thus, focusing primarily on peaks may be misleading, especially if the dynamics (e.g., Bialek and Wit 1984; Murphy et al. 1995b) are ignored. One could thus argue that SOAE activity needs to be characterized beyond its “peaks.” For example, aspects such as “baseline” SOAE energy, readily apparent in nonmammals (e.g., Manley et al. 1996); temperature dependence (e.g., van Dijk et al. 1989); and differing SOAE interactions with external stimuli (e.g., Long and Tubis 1988; Hansen et al. 2014; Bergevin et al. 2015a) remain unexplained.

One area where progress can be made is determining more effective ways to analyze OAE data. For example, a wide range of methodologies exist in an area broadly known as “nonlinear time-series analysis” (e.g., Kantz and Schreiber 2004) and may find valuable application to problems in hearing. This may allow for salient properties of SOAEs or SFOAEs to be more meaningfully extracted from recorded data, given inherent trade-offs between temporal and frequency resolution.
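One of the most basic tools of nonlinear time-series analysis is time-delay embedding, which reconstructs a state-space trajectory from a single recorded signal. The minimal Python sketch below (the function name and parameters are illustrative, not taken from any specific package) builds delay vectors from a sampled signal. For a pure tone embedded in two dimensions with a quarter-period lag, the points trace a closed circle, the signature of a periodic, limit-cycle-like signal; noise or interacting components would instead produce a smeared or more complex set.

```python
import math

def delay_embed(signal, dim, lag):
    """Time-delay embedding: vectors (s[i], s[i + lag], ..., s[i + (dim - 1) * lag])."""
    n_vectors = len(signal) - (dim - 1) * lag
    if n_vectors <= 0:
        raise ValueError("signal is too short for this embedding dimension and lag")
    return [tuple(signal[i + j * lag] for j in range(dim)) for i in range(n_vectors)]

period = 100                                            # samples per cycle (hypothetical)
signal = [math.sin(2 * math.pi * i / period) for i in range(1000)]
points = delay_embed(signal, dim=2, lag=period // 4)    # quarter-period lag
radii = [math.hypot(x, y) for x, y in points]
print(len(points), min(radii), max(radii))              # a pure tone traces a circle of radius 1
```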

10.2.4 Summary

In summary, a basic biomechanical picture of the ear emerges as a collection of coupled nonlinear oscillators. The precise form of the oscillators and their coupling is still open to debate, but this basic theoretical framework has provided a strong foundation for current theories of OAE generation. A recent study argued that wave-based and coupled-oscillator models are not orthogonal notions (Bergevin et al. 2015a), but the common ground between model classes remains unresolved. Despite the many basic open questions, a solid foundation for understanding OAE generation mechanisms is in place (e.g., Shera and Guinan 1999).

10.3 Evoked Emissions

10.3.1 Overview

To better understand the complexities of emission generation and the nonlinear and dynamic properties of cochlear mechanics, it is necessary to study how external sound can generate OAEs. Given that eOAEs deal with an external stimulus that “drives” the system and thereby injects energy, eOAEs are commonly defined by the sounds that evoke them (e.g., SFOAEs; distortion-product OAEs [DPOAEs]; transient eOAEs [TEOAEs]).

An alternative characterization has arisen from modeling efforts, primarily focused on considerations such as coherence among scattering sites distributed along the BM as well as how energy propagates into and out of the cochlea (Shera and Guinan 2008; see also Sect. 10.4). eOAE models have furthermore incorporated “place-fixed” and “wave-fixed” generation mechanisms: the place-fixed mechanism relies on spatially distributed reflection sources (e.g., OHC morphology differences along the cochlea; see Sect. 10.2.3.3), whereas the wave-fixed mechanism relies on local cochlear nonlinearities that depend on the stimuli that elicited the emission (Zweig and Shera 1995; Shera and Guinan 2008). These two distinct OAE generation mechanisms are based on a widely accepted OAE “taxonomy” (Shera and Guinan 1999), in which emissions are classified neither by the location of their generation nor by the type of stimulus used to evoke them but instead by their generation mechanism. As such, seemingly disparate emission types such as SOAEs and SFOAEs are predicted to arise from the same fundamental mechanism (Shera and Guinan 1999; Shera 2003), a prediction supported by experiment (e.g., Probst et al. 1986; Bergevin et al. 2011a, 2012a). Furthermore, the taxonomy has been particularly valuable for understanding the generation of DPOAEs (e.g., Knight and Kemp 2000; Shera and Abdala 2012), one of the most commonly studied forms of emissions.

Despite the many OAE modeling studies, the precise nature by which OAE generation is in fact “distributed” over a wider cochlear region is still debated for transient and pure-tone eOAEs (e.g., Moleti et al. 2013; Sisto et al. 2015). Furthermore, most studies characterizing DPOAE generation assume that there are not only multiple mechanisms but also multiple spatial sources contributing from along the cochlea (e.g., Epp et al. 2010; Shera and Abdala 2012, Figs. 5–9).

10.3.2 Linearity: To Be or Not to Be?

As Zweig (2015) wrote, “The nonlinear response of the cochlea is of great interest. But what linear equation should be made nonlinear?” At face value, the commonly measured DPOAE at 2f1–f2 obviously stems from some form of nonlinearity. In many regards, however, SFOAEs and click-evoked OAEs (CEOAEs; a type of TEOAE) are commonly assumed, with strong empirical support (e.g., Kalluri and Shera 2007), to arise from a linear reflection-based mechanism (Zweig and Shera 1995). Indeed, for low-level stimuli, SFOAE and CEOAE characteristics can be successfully described by linear models. Conceptually, the question arises as to which aspects of OAE models should be kept linear. Put another way, how essential is it to model cochlear nonlinearity to fully capture the key dynamics?

10.3.2.1 Basis of Cochlear “Nonlinearity”?

As a starting point, one might ask: What is the fundamental nonlinearity of the cochlea? A common assumption is that the sigmoidal nature of the mechanoelectrical transduction (MET) characteristic is the primary source of nonlinearity. An added virtue is that such a nonlinearity is shared by all vertebrates (i.e., MET occurs via stereovillar hair cells). Two considerations argue that such a MET-centric view may be overly simplistic. First, numerous nonlinearities are readily observed in cochlear physiology. For example, consider just OHCs (Patuzzi 1996, Fig. 4.20). In addition to the nonlinear MET relationship, other nonlinear behaviors include the potassium current through the basolateral wall, the capacitive properties of the cell membrane, prestin (Santos-Sacchi, Navaratnam, Raphael, and Oliver, Chap. 5), and length changes due to somatic electromotility. Their relative contributions, and how independent they are from one another, remain subjects of study, but they indicate that there is more than just the MET source. Second, unlike in linear systems, when heterogeneous nonlinear elements are coupled together, the dynamics of the resulting system can take a form that is difficult to predict (e.g., the interactions themselves can be nonlinear). That is, for coupled nonlinear systems, relatively complex behavior arises as an emergent property (Strogatz 2014), a facet true even for “simple” systems (e.g., May 1976). Thus, even if the MET is indeed the dominant nonlinearity of a given hair cell and no other sources contribute significantly, the net response of the cochlea as a whole can be difficult to characterize as a simple superposition of many MET functions.
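How a sigmoidal transducer produces a distortion product at 2f1–f2 can be illustrated with a memoryless toy model. The minimal Python sketch below assumes a first-order Boltzmann (logistic) curve as a stand-in for the MET characteristic and hypothetical primary frequencies and levels; it ignores all cochlear mechanics. A two-tone stimulus passed through a linear gain contains energy only at f1 and f2, whereas the sigmoid output also contains energy at 2f1–f2.

```python
import numpy as np

def boltzmann(x, x0=0.0, s=0.5):
    """First-order Boltzmann (logistic) function: a stand-in for a sigmoidal MET curve."""
    return 1.0 / (1.0 + np.exp(-(x - x0) / s))

def magnitude_spectrum(y):
    """One-sided FFT magnitude, normalized by record length."""
    return np.abs(np.fft.rfft(y)) / len(y)

fs = 10_000                                  # samples per second; 1-s record gives 1-Hz bins
t = np.arange(fs) / fs
f1, f2 = 1000, 1200                          # hypothetical primaries, f2/f1 = 1.2
stimulus = 0.5 * np.sin(2 * np.pi * f1 * t) + 0.5 * np.sin(2 * np.pi * f2 * t)

linear_out = magnitude_spectrum(2.0 * stimulus)      # a linear gain creates no new frequencies
nonlinear_out = magnitude_spectrum(boltzmann(stimulus))

f_dp = 2 * f1 - f2                           # 800 Hz; with 1-Hz bins, bin index equals frequency
print(linear_out[f_dp], nonlinear_out[f_dp])
```

Because the primaries complete an integer number of cycles in the record, there is no spectral leakage, so any energy at 800 Hz comes from the nonlinearity; this single static sigmoid is, of course, far simpler than the coupled nonlinear system described above.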

Another important modeling consideration is the extent to which the nonlinearity is purely heuristic. For example, note that in Eq. 10.2 there is no obvious biophysical basis for either the nonlinearity or the active term. As mentioned in Sect. 10.1.2, at the cost of increasing the order of the model, a clearer physical basis can be introduced (e.g., the inclusion of an “adaptation system” as in Ó Maoiléidigh et al. 2012).

10.3.2.2 Nonlinearity in Cochlear Modeling

To account for the nonlinear properties of emissions, models have typically either included “local” nonlinearities (e.g., Talmadge et al. 1998; Verhulst et al. 2012) or been made quasi-linear (e.g., Zweig and Shera 1995; Choi et al. 2008). Quasi-linear means that the fundamental equations are linear but that some implicit or ad hoc assumption is made to mimic a nonlinearity (e.g., that roughness does or does not matter between two different stimulus-level conditions). Thus, many experimentally observed nonlinear properties of CEOAEs and SFOAEs can be explained by linear reflection combined with local nonlinear cochlear mechanics. However, to date, it is unclear whether the nonlinear properties of eOAEs are best modeled as nonlinear cochlear mechanics combined with reflection from cochlear irregularities whose reflection strength (1) is intensity independent, (2) depends on changes in cochlear gain, or (3) results from a reflection mechanism with nonlinear behavior different from that of the underlying local cochlear mechanics. Last, it should be noted that eOAE models can be stable while being both linear and active (e.g., energy input at one location can be dissipated at another via other time-dependent forces; Zweig 1991), although most SOAE models have implicitly assumed some form of nonlinearity to avoid instability (e.g., Talmadge and Tubis 1993; Ku et al. 2009; Duifhuis 2012).

10.3.2.3 What Single-Source Models Do and Do Not Do (Revisited)

Returning to the theme of Sect. 10.2.2.2, the limitations of single limit-cycle OAE models become more evident when eOAEs are considered. A salient example is the nonlinear growth of eOAEs with the level of the evoking stimulus. These characterizations are referred to as “level-growth functions” or “I/Os.” In addition to the compressive (i.e., nonlinear) nature of BM velocity, first shown in the early 1970s (Rhode 1971), it is well-known that I/Os for auditory nerve fibers (Kiang et al. 1986), BM responses (Robles and Ruggero 2001), and OAEs (Brown 1987; Bergevin 2007; Schairer et al. 2003) can sometimes also be nonmonotonic. That is, there are regions where higher level stimuli produce smaller magnitude responses than lower levels (Fig. 10.3a). A limited region of nonmonotonic behavior is commonly referred to as a “notch.” Typically, the notch in the magnitude is accompanied by a shift in the response phase. The nonmonotonic behavior is typically highly idiosyncratic, both to an individual ear and to a given frequency or level. Shifting the stimulus frequency(ies) by 100 Hz can drastically change the qualitative shape of an eOAE I/O, a confounding reality, especially from a clinical perspective. Furthermore, in reports in which I/Os are averaged across individuals (e.g., Schairer et al. 2003; Johnson et al. 2006), nonmonotonic features tend to be averaged out.

Fig. 10.3 Example of evoked otoacoustic emission (eOAE) dependence on stimulus level (Lp = Ls + 15 dB, fp = fs + 40 Hz; L level; f frequency; p probe; s suppressor; Bergevin 2007). a Representative stimulus-frequency otoacoustic emission (SFOAE) input/output function (I/O) for a human subject. Dashed line indicates the noise floor. Some phase values were shifted vertically by one cycle. The particular curve shown here is representative, but there can be significant qualitative variation in the shape across subjects (for a fixed set of parameters) and stimulus frequencies (in a given individual). b SFOAE frequency dependence measured at different stimulus intensities in a human subject. A notch, with respect to both frequency and level, is apparent around 3 kHz and 40 dB SPL. Furthermore, there is a transition in the phase gradients depending on whether the stimulus level is below (larger gradient) or above (smaller gradient) 40 dB SPL. c Example of a nonlinear regression (hyperbolic tangent) used to estimate the phase jump in the SFOAE probe-level dependence (solid curve). This was used as the basis for (d). d Size of the SFOAE phase jump around a notch, compiled from multiple stimulus frequencies and species. The peak of the distribution indicates that the phase jumps tend to be smaller than 0.5 cycle (dashed line), typically 0.3–0.4 cycles.
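Panel c describes estimating the phase jump with a hyperbolic tangent regression. One way to set up such a fit is sketched below under stated assumptions: the phase (in cycles) is modeled as phi(L) = phi0 + (J/2) tanh((L - Lc)/w), where L is probe level, J the jump size, Lc the notch level, and w a transition width. Because this model is linear in phi0 and J once Lc and w are fixed, a grid search over (Lc, w) with an ordinary least-squares solve at each grid point avoids a nonlinear optimizer. The model form, the grid, and the synthetic data are illustrative assumptions, not the procedure or data behind Fig. 10.3c.

```python
import numpy as np


def tanh_phase_model(level_db, phi0, jump, center_db, width_db):
    """Phase (cycles) that steps by `jump` cycles around `center_db`."""
    return phi0 + 0.5 * jump * np.tanh((level_db - center_db) / width_db)


def fit_phase_jump(level_db, phase_cycles, centers_db, widths_db):
    """Grid search over (center, width); offset and jump solved linearly.

    For a fixed center and width the model is linear in (phi0, jump), so
    each grid point needs only one ordinary least-squares solve.
    """
    best = None
    for c in centers_db:
        for w in widths_db:
            basis = np.column_stack([np.ones_like(level_db),
                                     0.5 * np.tanh((level_db - c) / w)])
            coef, *_ = np.linalg.lstsq(basis, phase_cycles, rcond=None)
            sse = float(np.sum((basis @ coef - phase_cycles) ** 2))
            if best is None or sse < best[0]:
                best = (sse, coef[0], coef[1], c, w)
    _, phi0, jump, c, w = best
    return {"phi0": phi0, "jump": abs(jump), "center_db": c, "width_db": w}


# Synthetic, noise-free probe-level sweep (hypothetical values, not real data)
levels = np.arange(10.0, 70.0, 2.5)
phase = tanh_phase_model(levels, phi0=0.1, jump=0.35,
                         center_db=40.0, width_db=3.0)

fit = fit_phase_jump(levels, phase,
                     centers_db=np.arange(30.0, 50.01, 0.5),
                     widths_db=np.arange(1.0, 10.01, 0.5))
print(f"jump = {fit['jump']:.3f} cycles near {fit['center_db']:.1f} dB SPL")
```

With real, noisy I/O data the grid spacing limits the precision of Lc and w, and the fitted J should be checked against the raw phase, since (as noted in the text) phase can also vary smoothly with level rather than as a clean step.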

Nonmonotonicity can simply arise from a single nonlinearity (Engebretson and Eldredge 1968; Weiss and Leong 1985; Lukashkin and Russell 1998). That basic idea was extended to explain DPOAE growth (Lukashkin et al. 2002), where the hypothesis is that distortions observable in the ear canal arise predominantly from “a single source, namely, a nonlinear amplifier with saturating I/O characteristic.” It is important to critically examine this notion because there are wide implications for interpreting a broad range of eOAE behavior in terms of the underlying cochlear physiology, such as the inferred “operating point” of the transducer (e.g., Bian et al. 2002; Liberman et al. 2004).

At least two main lines of evidence argue against the applicability of the single-source model to eOAEs. First, many empirical aspects of nonmonotonic features are not consistent with key predictions of the model (Bergevin 2007), such as linear growth at lower levels (even for cubic distortion products), the size of the phase jump (not necessarily 0.5 cycle, the value the model predicts because the jump simply stems from a sign change; Fig. 10.3d), phase varying smoothly with level (i.e., not just a “jump”), extended portions with highly compressed growth, and the frequency-dependent nature of the I/O (Fig. 10.3a, b).
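The low-level predictions of a single saturating nonlinearity can be made explicit with a short sketch. Here tanh is an assumed stand-in for the saturating amplifier of the single-source model, and the tone frequencies, sampling parameters, and amplitudes are hypothetical. For small inputs, tanh(x) ≈ x − x³/3, so the 2f1–f2 component grows as the cube of the primary amplitude (3 dB per dB) and emerges with inverted sign, i.e., a phase of ±0.5 cycle relative to the zero-phase primaries. Because a memoryless nonlinearity driven by zero-phase cosines yields a real-valued distortion coefficient, any null produced by a sign change of that coefficient is accompanied by a 0.5-cycle phase jump, in contrast to the linear low-level growth and the smaller, smoother phase changes observed empirically.

```python
import numpy as np


def dp_2f1_f2(amplitude, fs_hz=48_000.0, n=4_800, f1_hz=1000.0, f2_hz=1200.0):
    """Complex 2f1-f2 Fourier coefficient after a tanh nonlinearity.

    Both primaries are zero-phase cosines with peak `amplitude` (arbitrary
    units). Tones are bin-centered, so no windowing is needed.
    """
    t = np.arange(n) / fs_hz
    x = amplitude * (np.cos(2 * np.pi * f1_hz * t) + np.cos(2 * np.pi * f2_hz * t))
    spectrum = np.fft.rfft(np.tanh(x))
    k = int(round((2 * f1_hz - f2_hz) * n / fs_hz))
    return 2.0 * spectrum[k] / n


low, high = dp_2f1_f2(0.01), dp_2f1_f2(0.02)   # primaries differ by ~6.02 dB

# Growth slope in dB of output per dB of input
growth = np.log10(abs(high) / abs(low)) / np.log10(2.0)
phase_cycles = np.angle(low) / (2 * np.pi)
print(f"growth: {growth:.2f} dB/dB, phase: {phase_cycles:+.2f} cycles")
```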

Second, it is well-known that DPOAE source “unmixing” (e.g., Mauermann et al. 1999; Kalluri and Shera 2001) demonstrates that two generation components with differing latencies exist (see Sect. 10.3.1), that these can interfere constructively or destructively to yield the DPOAE measured in the ear canal (typically referred to as “fine structure”), and that these components can be differentially affected (e.g., Mauermann and Kollmeier 2004; Botti et al. 2016). In fact, a substantial body of data indicates that both SOAEs and eOAEs are generated over multiple, spatially distributed areas (e.g., Shaffer et al. 2003; Siegel et al. 2005; Moleti et al. 2013).
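The interference described above can be illustrated with a two-component sketch in which the ear-canal DPOAE is the sum of a short-delay (wave-fixed-like) component and a long-delay (place-fixed-like) component, each with a fixed amplitude and a pure delay. These amplitudes and delays are hypothetical; real components have frequency-dependent amplitudes and delays. Under these assumptions the magnitude oscillates between the sum and the difference of the component amplitudes, with a fine-structure period of 1/Δτ, where Δτ is the difference between the two delays.

```python
import numpy as np


def two_component_dp(f_dp_hz, a_short, tau_short_s, a_long, tau_long_s):
    """Ear-canal DP modeled as the sum of two pure-delay components."""
    short = a_short * np.exp(-2j * np.pi * f_dp_hz * tau_short_s)
    long_ = a_long * np.exp(-2j * np.pi * f_dp_hz * tau_long_s)
    return short + long_


f_dp = np.linspace(1000.0, 2000.0, 10_001)        # 0.1-Hz resolution
tau_short, tau_long = 1e-3, 9e-3                  # hypothetical delays (s)
mag = np.abs(two_component_dp(f_dp, 1.0, tau_short, 0.5, tau_long))

# Interior local maxima mark frequencies of constructive interference
peaks = np.flatnonzero((mag[1:-1] > mag[:-2]) & (mag[1:-1] > mag[2:])) + 1
period_hz = float(np.mean(np.diff(f_dp[peaks])))

print(f"fine-structure period: {period_hz:.1f} Hz "
      f"(1/delta_tau = {1.0 / (tau_long - tau_short):.1f} Hz)")
print(f"magnitude range: {mag.min():.2f} to {mag.max():.2f}")
```

The same logic underlies source unmixing: because the two components differ in delay, they can be separated, for example, by time windowing of the inverse Fourier transform of the frequency response.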

10.3.3 Evoked Emission Delays

For eOAEs, there is a round-trip travel time, i.e., the time it takes an emission to come back out of the ear relative to the presentation of the stimulus. How exactly this delay informs us about cochlear mechanics is a topic of great debate. For example, do SFOAE delays relate to the group delay of the cochlear mechanical filter at the site of generation and can they then be used to infer tuning (see Sect. 10.3.4)? Examples of SFOAE delays for a variety of species are shown in Fig. 10.4.

Fig. 10.4 a Comparison of SFOAE phase-gradient delays and BM lengths for several different species (40-dB SPL probe level; same stimulus paradigm and parameters used for all). Data from Shera and Guinan (2003), Bergevin et al. (2008, 2011b, 2012b), and Joris et al. (2011). b Mean delay at 2 kHz as a function of approximate BM length. ‡For the frog, the provided length corresponds only to the amphibian papilla.

eOAE delays can be extracted from the ear canal recording in several ways. The first uses ripples in the steady-state response at the probe frequency (i.e., the magnitude of the Fourier transform of the ear canal microphone signal), which result from constructive and destructive interference between the stimulus and the (relatively delayed) eOAE (e.g., Zwicker and Schloth 1984; Shera and Guinan 1999). An alternative spectral method takes the slope of the unwrapped SFOAE phase versus frequency, called the “phase-gradient delay” (French 1971; Shera et al. 2010). For linear systems, the phase-gradient delay is equivalent to the group delay. Additionally, delays can be estimated directly in the time domain (Whitehead et al. 1996; Meenderink and Narins 2006); these show good correspondence with delays extracted in the steady-state frequency domain.
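The phase-gradient method can be sketched in a few lines. The example below assumes a hypothetical emission consisting of a single component with a constant 8-ms delay, so its wrapped phase is −2πfτ modulo 2π; the delay is recovered as τ = −(1/2π) dφ/df from a straight-line fit to the unwrapped phase. Unwrapping is only reliable when the phase changes by less than π between adjacent frequencies, which limits the usable frequency step for a given delay (here, 1-Hz steps give about 0.05 rad per step). The delay is also expressed in stimulus periods (fτ), a dimensionless normalization often used when relating delays to tuning.

```python
import numpy as np


def phase_gradient_delay(freqs_hz, phase_rad):
    """Phase-gradient delay (s): -1/(2*pi) times the slope of a straight-line
    fit to the unwrapped phase versus frequency."""
    unwrapped = np.unwrap(phase_rad)
    slope, _intercept = np.polyfit(freqs_hz, unwrapped, 1)
    return -slope / (2.0 * np.pi)


# Hypothetical emission: one component with a constant 8-ms delay
freqs = np.arange(1000.0, 1100.0, 1.0)                # 1-Hz steps
true_delay_s = 8e-3
wrapped = np.angle(np.exp(-2j * np.pi * freqs * true_delay_s))  # in (-pi, pi]

tau = phase_gradient_delay(freqs, wrapped)
n_periods = freqs.mean() * tau                        # delay in stimulus periods
print(f"delay = {tau * 1e3:.2f} ms, about {n_periods:.1f} periods "
      f"at {freqs.mean():.1f} Hz")
```

Measured SFOAE phase is not a single straight line, so in practice the slope is usually estimated locally (over a sliding frequency range) rather than with one global fit as here.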

One example of how phase-gradient delays can be used to study the site of DPOAE generation is by comparing the delays of the 2f2–f1 and the 2f1–f2 evoked by the same stimulus. 2f1–f2 DPOAE phase gradients are small compared with those of 2f2–f1 (Knight and Kemp 2000; Bergevin et al. 2008). Does this mean that 2f1–f2 is emitted from the cochlea much faster (almost instantaneously) relative to 2f2–f1? It does not, as time-domain studies of delay have shown (e.g., Whitehead et al. 1996). Instead, this discrepancy is thought to arise from a difference in generation mechanisms stemming from wave- and place-fixed distinctions (e.g., Shera and Guinan 1999, 2008). This example shows that caution is required when correlating phase-gradient delays to actual time delays because the generation mechanism(s) could confound this relationship.

10.3.4 Stimulus-Frequency Emission Delays and Cochlear Tuning

Several studies have examined how SFOAE phase-gradient delays can be used as a proxy measure for cochlear tuning (e.g., Shera et al. 2002, 2010). A basic intuition is provided by Eq. 10.1. When the oscillator has low damping, it is more sharply resonant (i.e., it has a stronger “preference” for frequencies close to its characteristic frequency) and sluggish (i.e., it takes a longer time to build up a steady-state response because the oscillator can store more energy that can only be provided on a cycle-by-cycle basis by the external drive). Even though this method has been verified in animals (Bergevin and Shera 2010; Shera et al. 2010; Joris et al. 2011), suggestions that humans exhibit sharper cochlear mechanical tuning than other mammalian species have been more controversial (e.g., Ruggero and Temchin 2005; Siegel et al. 2005).
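The intuition that lower damping yields both sharper tuning and longer delays can be checked numerically for a driven damped harmonic oscillator, assumed here as a stand-in for Eq. 10.1 (which is not reproduced in this section). For the transfer function H(ω) = 1/(ω0² − ω² + iωω0/Q), the group delay at resonance is 2Q/ω0, so the delay expressed in periods is Q/π: doubling Q doubles the delay. The sketch below estimates the delay from a central finite difference of the phase and compares it with that expression. A cochlear filter is not a single resonator, so the specific factor relating delay to Q here should not be carried over to cochlear data.

```python
import numpy as np


def resonator_group_delay(f0_hz, q, df_hz=0.01):
    """Group delay (s) at resonance of H(w) = 1 / (w0^2 - w^2 + i*w*w0/Q),
    from a central finite difference of the phase."""
    w0 = 2.0 * np.pi * f0_hz

    def phase(f_hz):
        w = 2.0 * np.pi * f_hz
        return np.angle(1.0 / (w0**2 - w**2 + 1j * w * w0 / q))

    dphi = phase(f0_hz + df_hz) - phase(f0_hz - df_hz)
    dw = 2.0 * np.pi * (2.0 * df_hz)
    return -dphi / dw


f0 = 1000.0
delays = {}
for q in (2.0, 5.0, 10.0):
    delays[q] = resonator_group_delay(f0, q)
    analytic = 2.0 * q / (2.0 * np.pi * f0)
    print(f"Q = {q:4.1f}: numerical {delays[q] * 1e3:.4f} ms, "
          f"analytic 2Q/w0 {analytic * 1e3:.4f} ms, {f0 * delays[q]:.3f} periods")
```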

A psychophysical study in humans demonstrated that tuning derived from SFOAE delays compares well with perceptual auditory-filter tuning measured in an isoresponse forward-masking paradigm (Oxenham and Shera 2003). Subsequent investigations have provided further support for this relationship by comparing neural, otoacoustic, and psychophysical tuning estimates within a single species (e.g., marmoset, Bergevin et al. 2011b; ferret, Sumner et al. 2014) and have shown that SFOAE delays can also be partly explained by morphological aspects of the cochlea such as BM length (e.g., Bergevin et al. 2011b, 2012b; Fig. 10.4) and properties of the tectorial membrane (e.g., Bergevin et al. 2010). Despite this evidence, some studies do not support the view of sharp human frequency selectivity (e.g., Charaziak et al. 2013; Manley and van Dijk 2016). The OAE suppression properties investigated in those studies yielded human tuning values more consistent with the moderate frequency selectivity derived from simultaneous-masking psychoacoustic tuning curves.

Additionally, OAEs provide a means to characterize the cochlear mechanical filter that underlies the perceptual auditory filter. It is clear that changes in cochlear mechanical filters (e.g., due to stimulus level or OHC damage) affect the perceptual auditory filters, but the exact relationship between OAE-derived tuning measures, auditory nerve tuning curves, and perceptual auditory filters is not entirely established. Whereas the relationship between perceptual and OAE tuning can be established in humans, one must rely on animal physiology to establish the relationship between OAE and auditory nerve tuning (e.g., Shera et al. 2010). One way to study how different tuning estimates reflect the underlying cochlear filter tuning is to adopt models of the human cochlea that can simulate emissions as well as BM and auditory nerve responses (e.g., Verhulst et al. 2012, 2015).

Model approaches can further help address practical considerations such as how SFOAEs are generated and subsequently exit the inner ear (e.g., Choi et al. 2008), which is important in the study of the relationship between cochlear mechanical filter tuning and SFOAE delays. For example, if SFOAE generation is purely based on place-fixed mechanisms stemming from a narrow region around the peak of the traveling wave to the evoking stimulus (e.g., Zweig and Shera 1995; Shera and Guinan 2008), the relationship between the SFOAE delay and the filter group delay would only hold for low stimulus levels where the linear relationship between filter group delays and tuning (Goldstein et al. 1971) is valid. As the role of a place-fixed mechanism for SFOAE generation has been heavily debated over the years (e.g., Siegel et al. 2005), it is presently unclear to what degree existing SFOAE methods can reliably assess cochlear mechanical filter tuning at higher stimulus levels. Another potentially important modeling consideration that requires further study is the difference between isoresponse and isoinput measures of tuning (Eustaquio-Martín and Lopez-Poveda 2011).

10.4 How Do Emissions Exit the (Inner) Ear?

The question regarding OAEs and waves (see Sect. 10.2.3.2) is further highlighted by the debate over how emissions exit the cochlea, namely whether OAEs propagate via “slow” (i.e., BM-based) or “fast” (i.e., fluid compression-based) waves (e.g., He et al. 2008; Dong and Olson 2008; Meenderink and van der Heijden 2010).

Another key consideration is that on exiting the cochlea, OAE energy must essentially drive the middle and outer ears in “reverse.” Whereas the middle ear provides passive pressure gain (in the range of 40 dB) going inward, it behaves in a reciprocal fashion by attenuating sound pressure going outward (Shera and Zweig 1993). Several studies have examined this aspect, using DPOAEs as an “intracochlear” source (Magnan et al. 1997; Dong and Olson 2006; Dalhoff et al. 2011), extracted temporal bones (Puria 2003), or other methods. Detailed knowledge in this regard is crucial for understanding the power produced by the ear in SOAE generation and for attempts to relate this overall power to the function of individual hair cells (e.g., Manley and Gallo 1997). The frequency-specific attenuation of sound by the middle ear is particularly important when interpreting OAE amplitudes. First, when using eOAEs to estimate the stimulus level required for detecting cochlear compression at a given frequency place, one must consider that not all stimuli are equally effective in driving the cochlear location where the eOAE is generated. Second, when interpreting the magnitudes of spectral CEOAE components in a clinical screening setting, one must consider that the middle ear attenuates higher frequency CEOAE components more than components in the region where middle ear transmission is more efficient (1–2 kHz), even in ears with no cochlear damage.

10.5 Benefits of a “Comparative” Viewpoint

After OAEs were first discovered in humans, researchers quickly realized that emissions arise in a wide variety of animal classes such as amphibians (Palmer and Wilson 1982), reptiles (Rosowski et al. 1984), and even insects (Kössl and Boyan 1998). Since then, numerous studies have systematically compared OAEs between humans and nonmammals, many of which lack a direct analog to BM traveling waves. Regardless of whether one’s interest is human cochlear mechanics or neuroethology, the general biophysical considerations discussed, combined with the observation that most types of ears produce OAEs, provide an important opportunity to understand their mechanisms.

A common feature shared among all vertebrate ears is hair cells (see Sect. 10.1.2). The number within a given ear, how they are coupled together (i.e., presence and structure of TM), their molecular composition (e.g., density of prestin), their bundle properties (e.g., number and height of stereovilli, internal coupling), their ionic environment (e.g., calcium level, effective endocochlear potential), and even the underlying substrate they are embedded in or sit atop (i.e., flexible BM, or cartilage) can vary dramatically across taxonomic boundaries (Fig. 10.2). Despite this variety, OAE properties exhibit striking similarities.

One study (Bergevin et al. 2008) indicated that the empirical basis for the wave-fixed versus place-fixed distinction (Shera and Guinan 1999; see also Sect. 10.2.3) can be found in a wide class of ears, even those that presumably lack a BM traveling wave. A subsequent study (Bergevin et al. 2015a) examined predictions of the “standing-wave” model (Shera 2003) in nonmammalian ears and found good agreement between data and model, indicating shared properties at work in the underlying generation mechanisms.

In terms of differences, one distinction has drawn significant attention. SFOAE phase-gradient delays are much longer in humans than in any other species examined thus far (Fig. 10.4). As discussed in Sect. 10.3.4, this difference in delay has been proposed to indicate relatively sharper cochlear tuning in humans (Shera et al. 2002). Another telling difference is that, within lizards, species with a continuous TM have relatively few, large SOAE peaks, whereas species without a TM tend to have more numerous, smaller peaks (Manley 1997).

In short, OAEs are a common property across land vertebrates, and there are many similarities (and differences) across (e.g., Bergevin et al. 2015a) and within (e.g., Bergevin 2011; Berezina-Greene and Guinan 2015) groups. Presumably, these interrelationships, in the face of vast morphological differences, point to a key underlying biophysical principle(s) at work in all types of ears. At a minimum, it is clear that neither two distinct hair cell types nor a flexible BM nor a TM is required a priori for OAE generation. Such knowledge can in turn stimulate advances in evolutionary theories of hearing (Manley 2000).

10.6 Putting Emissions to Work

With the mechanisms underlying OAE generation and current OAE models now examined, this section describes practical aspects of OAEs and their current and potential uses. Readers are also referred to extensive reviews (e.g., Robinette and Glattke 2007; Janssen and Müller 2008; Lonsbury-Martin and Martin 2008).

OAEs have been extensively developed to probe peripheral auditory function in both basic scientific (e.g., Is my genetically modified mouse deaf?) and clinical (e.g., Is this newborn deaf?) settings. For the most part, eOAEs are used as a proxy measure for hearing ability, based on their relative amplitude. If the signal is above the noise floor (or some sort of predetermined threshold), the ear is considered normal (or healthy). Generally, these screenings are efficient and cost effective, given their noninvasive and objective nature. Some salient examples include

- Clinical hearing screening, especially in pediatric audiology (Kemp et al. 1990; Abdala et al. 1996; Norton et al. 2000)
- Hearing screening in humans (Dorn et al. 2001; Boege and Janssen 2002; Goodman et al. 2009)
- Hearing screening in animals (Liberman et al. 2002; Cheatham et al. 2014)
- Veterinary diagnostics/care (McBrearty and Penderis 2011; McBrearty et al. 2012)
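The amplitude-versus-noise-floor criterion described above can be sketched as a simple pass/refer rule applied to per-band emission and noise-floor levels. The 6-dB signal-to-noise criterion and the requirement of three passing bands are illustrative assumptions, not a specific clinical protocol; actual screening devices define their own bands, criteria, and noise-floor estimates.

```python
def screen_ear(emission_db, noise_db, min_snr_db=6.0, min_bands=3):
    """Hypothetical pass/refer rule: pass if at least `min_bands` frequency
    bands show an emission at least `min_snr_db` above the noise floor.

    emission_db, noise_db : per-band levels in dB SPL (same length)
    """
    if len(emission_db) != len(noise_db):
        raise ValueError("emission and noise levels must have the same length")
    snr_db = [e - n for e, n in zip(emission_db, noise_db)]
    n_passing = sum(1 for s in snr_db if s >= min_snr_db)
    return "pass" if n_passing >= min_bands else "refer"


noise = [-5.0, -4.0, -3.0, -6.0]                     # hypothetical noise floors
print(screen_ear([8.0, 10.5, 5.0, -2.0], noise))     # SNRs 13, 14.5, 8, 4 dB -> pass
print(screen_ear([-1.0, 0.0, 2.0, -4.0], noise))     # SNRs 4, 4, 5, 2 dB -> refer
```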

Note that caution is needed because auditory neuropathies (i.e., pathologies in the neural pathway connecting the cochlea to the brain) can sometimes allow for “normal” OAEs despite hearing impairment being present (e.g., Berlin et al. 2003; Bharadwaj et al. 2015). Additionally, middle ear dysfunction (e.g., otitis media) can affect OAEs, so the absence of OAEs can be due to either middle or inner ear pathology (e.g., Owens et al. 1992; see also Sect. 10.4). Thus, a failure to record OAEs requires additional measurements (e.g., compound action potentials and/or auditory brainstem responses) to reach a diagnosis.

Another intriguing avenue of exploration is how eOAEs can be used to assess auditory function in hearing-impaired individuals. Recent improvements in signal processing (e.g., Vetešník et al. 2009; Keefe 2012) help toward this end. Basic comparisons between normal hearing and hearing impairment are well established (e.g., Gorga et al. 2003; Prieve et al. 1993) and have served as a useful benchmark for clinical applications. But recent studies have begun to examine further aspects, such as changes associated with frequency selectivity (e.g., Gruhlke et al. 2012; Charaziak et al. 2013), and these may help explain difficulties with speech recognition in noisy environments. Another interesting avenue is the assessment of retrocochlear pathologies such as acoustic neuromas (Telischi et al. 1995). Further studies of “translational” applications of OAEs include

- Monitoring intracranial pressure (de Kleine et al. 2000; Voss et al. 2006)
- Measuring cochlear blood flow (Telischi et al. 1998; Mom et al. 1999)
- Effects of ototoxic drug exposure (Stavroulaki et al. 1999; Lonsbury-Martin and Martin 2001; Reavis et al. 2011)
- Efferent-related feedback effects (Francis and Guinan 2010; Garinis et al. 2011; Boothalingam et al. 2015)
- Attention-related effects (Walsh 2012)
- SFOAEs as a measure of tuning (see Sect. 10.3.4)
- Basis of absolute pitch (Bergevin et al. 2015b)
- Biometric applications (Liu and Hatzinakos 2014; see also Nura headphones)
- Assessing (forward and reverse) middle ear function (Dalhoff et al. 2011; see also Sect. 10.4)
- Understanding OAE differences across human groups, such as ethnic and sex disparities (Whitehead et al. 1993; McFadden and Pasanen 1998)

One difficult obstacle in several of these potential applications is establishing an appropriate baseline (e.g., Reavis et al. 2015). For example, what are reasonable fluctuations in OAE properties that an audiologist could expect and thereby meaningfully detect a significant change (e.g., due to ototoxic exposure)?
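One simple way to frame the baseline question is a test–retest rule: a new measurement is flagged only if it departs from the mean of repeated baseline measurements by more than k baseline standard deviations. The sketch below assumes such a rule with k = 2 and hypothetical baseline levels; it is not taken from Reavis et al. (2015), and a real monitoring protocol would also need to account for probe refitting, frequency-specific variability, and the false-alarm rate implied by the chosen k.

```python
import statistics


def significant_change(baseline_db, current_db, k=2.0):
    """Flag a change when a new OAE level departs from the mean of repeated
    baseline measurements by more than k baseline standard deviations.

    Returns (flagged, deviation_db). The k-SD rule is an assumed criterion.
    """
    if len(baseline_db) < 2:
        raise ValueError("need at least two baseline measurements")
    mean_db = statistics.mean(baseline_db)
    sd_db = statistics.stdev(baseline_db)           # sample standard deviation
    deviation_db = current_db - mean_db
    return abs(deviation_db) > k * sd_db, deviation_db


baseline = [12.1, 11.4, 12.8, 11.9, 12.3]   # hypothetical test-retest levels (dB SPL)
print(significant_change(baseline, 11.5))    # within ~2 SD of baseline
print(significant_change(baseline, 8.2))     # well outside baseline variability
```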

10.7 Looking Ahead: Next Steps

Having described basic biophysical considerations that can be investigated using OAEs, and having highlighted a fraction of the literature that has attempted to address them, the chapter closes by looking further ahead and drawing attention to several open areas of otoacoustic research.

- Can OAEs be used to clarify the role played by viscous forces of the inner ear fluids? Although a single hair bundle is presumably subject to a low Reynolds number environment, perhaps the collective behavior of many bundles can cause a net decrease in the relevant frictional forces (in a fashion similar to motile bacteria; e.g., López et al. 2015).
- Recent studies have argued for a “staircase”-like structure to an individual ear’s tonotopic map such that extended spatial regions effectively share a similar characteristic frequency (Shera 2015; Bell and Wit 2015). In what ways might these be related to arguments for “frequency plateaus” in coupled-oscillator models of SOAE generation (Vilfan and Duke 2008; Wit and van Dijk 2012)?
- Although many aspects of cochlear function are linear (or quasi-linear), the overall behavior is very nonlinear (e.g., compression is a fundamental means of operation). Thus, to echo Ku et al. (2008), “How does the cochlea behave in such a relatively linear fashion when it is so inherently nonlinear in terms of its basic building blocks?” Is the ear chaotic (e.g., Keefe et al. 1990)?
- The existence of SOAEs suggests that the cochlea is close to an instability (see Zweig 2003 as well as Corey, Ó Maoiléidigh, and Ashmore, Chap. 4), yet the system is evidently stable overall. Many classes of (active) cochlear models, however, have difficulty producing SOAE patterns with stability comparable to that seen physiologically. Why? Put another way, what aspects of cochlear function allow it to be quasi-stable?
- What is the biophysical basis for the general frequency stability of SOAEs, even after exposure to moderate sounds, or for temperature-dependent changes in SOAEs (e.g., van Dijk et al. 1989)?
- Idiosyncrasy of SOAEs. Why don’t all ears emit SOAEs despite similar sensitivity thresholds? Why do some species exhibit more robust SOAE activity than others? What does this tell us about the notion of “irregularity”? How might cochlear roughness be quantified and correlated with OAEs? How level dependent is the role of irregularity, and what does that tell us?
- Reconciling how a vast array of morphologies (including insect ears) give rise to OAEs. How are various morphological (e.g., BM length, number of hair cells) and functional (e.g., number of octaves spanned in the audiogram, tuning) aspects interrelated?
- Elucidating the level dependence of eOAE phase-gradient delays (e.g., at low stimulus levels, do they become level independent?) and the implications for linearity and tuning.
- Can OAEs offer an objective estimate of an individual’s frequency selectivity in addition to assessing sensitivity? How might OAEs be used more effectively to quantify cochlear health in hearing-impaired individuals?
- “Suppression tuning curves” for SOAEs have been shown to match auditory nerve fiber responses remarkably well in lizards and the barn owl (e.g., Köppl and Manley 1994). A recent study measured SOAE suppression in humans (Manley and van Dijk 2016) and suggested relatively moderate frequency tuning in the cochlea. Given that these observations contradict the sharp tuning estimates obtained by comparing SFOAE phase-gradient delays and auditory nerve tuning curves in Old World monkeys (Joris et al. 2011), the exact relationship between the various tuning measures requires further research.
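The first open question above rests on the claim that a single hair bundle operates at low Reynolds number, Re = ρvL/μ, the ratio of inertial to viscous forces. A back-of-the-envelope check is sketched below; the fluid density and viscosity (water-like), the bundle height, the displacement amplitude, and the frequency are all order-of-magnitude assumptions rather than measured values.

```python
import math


def reynolds_number(density_kg_m3, speed_m_s, length_m, viscosity_pa_s):
    """Re = rho * v * L / mu: ratio of inertial to viscous forces."""
    return density_kg_m3 * speed_m_s * length_m / viscosity_pa_s


# Order-of-magnitude values; every number here is an illustrative assumption
rho = 1.0e3        # kg/m^3, water-like endolymph
mu = 1.0e-3        # Pa*s, water-like viscosity
freq = 1.0e3       # Hz, stimulus frequency
x_peak = 10e-9     # m, bundle-tip displacement amplitude
height = 5e-6      # m, bundle height used as the length scale

v_peak = 2.0 * math.pi * freq * x_peak   # peak speed of a sinusoidal displacement
re = reynolds_number(rho, v_peak, height, mu)
print(f"Re ~ {re:.1e}")
```

With these assumed values Re is on the order of 10⁻⁴, far below unity, so viscous forces dominate inertial ones for an individual bundle; the open question is whether collective motion of many bundles changes that picture.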

This chapter ends with a quote of the final sentence in Kemp’s landmark paper (1978, p. 1391) announcing the discovery of OAEs: “If proven, it [the hypothesis that OAEs are generated by normal cochlear function] would provide a new insight into the microscopic behavior of the cochlear transduction mechanism which is not adequately understood.” Kemp was certainly correct, although his discovery yielded much more than just “a new insight,” and there is still a long way to go….

Notes

1. When using complex notation, this equation is sometimes expressed in a simplified complex form (“normal form”) that captures qualitatively similar dynamics: \( \dot{z} = - \mu z + i\omega_{0} z + z\left| {z^{2} } \right| + F\left( t \right) \). See Hudspeth (2008).

References

Abdala, C., Sininger, Y. S., Ekelid, M., & Zeng, F. G. (1996). Distortion product otoacoustic emission suppression tuning curves in human adults and neonates. Hearing Research, 98, 38–53.

Aranyosi, A. J. (2006). A “twin-engine” model of level-dependent cochlear motion. In A. L. Nuttall, T. Ren, P. Gillespie, K. Grosh, & E. de Boer (Eds.), Auditory Mechanisms: Processes and Models (pp. 500–501). Singapore: World Scientific Publishing Co.

Avan, P., Büki, B., & Petit, C. (2013). Auditory distortions: Origins and functions. Physiological Reviews, 93, 1563–1619.

Bell, A., & Wit, H. P. (2015). The vibrating reed frequency meter: Digital investigation of an early cochlear model. PeerJ, 3, e1333. doi:10.7717/peerj.1333.

Berezina-Greene, M. A., & Guinan, J. J. (2015). Stimulus frequency otoacoustic emission delays and generating mechanisms in guinea pigs, chinchillas, and simulations. Journal of the Association for Research in Otolaryngology, 16(6), 679–694.

Bergevin, C. (2007). Comparative Approaches to Otoacoustic Emissions: Towards an Understanding of Why the Ear Emits Sound. PhD Thesis, Massachusetts Institute of Technology, Cambridge, MA.

Bergevin, C. (2011). Comparison of otoacoustic emissions within gecko subfamilies: Morphological implications for auditory function in lizards. Journal of the Association for Research in Otolaryngology, 12(2), 203–217.

Bergevin, C., & Shera, C. A. (2010). Coherent reflection without traveling waves: On the origin of long-latency otoacoustic emissions in lizards. The Journal of the Acoustical Society of America, 127(4), 2398–2409.

Bergevin, C., Freeman, D. M., Saunders, J. C., & Shera, C. A. (2008). Otoacoustic emissions in humans, birds, lizards, and frogs: Evidence for multiple generation mechanisms. Journal of Comparative Physiology A, 194, 665–683.

Bergevin, C., Velenovsky, D. S., & Bonine, K. E. (2010). Tectorial membrane morphological variation: Effects upon stimulus frequency otoacoustic emissions. Biophysical Journal, 99, 1064–1072.

Bergevin, C., Velenovsky, D. S., & Bonine, K. E. (2011a). Coupled, active oscillators and lizard otoacoustic emissions. In C. A. Shera & E. S. Olson (Eds.), What Fire Is in Mine Ears: Progress in Auditory Biomechanics: Proceedings of the 11th International Mechanics of Hearing Workshop, Williamstown, MA, July 16–22, 2011 (pp. 453–460). Melville, NY: American Institute of Physics Conference Proceedings 1403.

Bergevin, C., McDermott, J., Roy, S., Li, F., Shera, C., & Wang, X. (2011b). Stimulus-frequency otoacoustic emissions as a probe of cochlear tuning in the common marmoset. Association for Research in Otolaryngology Abstracts, 34, 371.

Bergevin, C., Fulcher, A., Richmond, S., Velenovsky, D., & Lee, J. (2012a). Interrelationships between spontaneous and low-level stimulus-frequency otoacoustic emissions in humans. Hearing Research, 285(1–2), 20–28.

Bergevin, C., Walsh, E. J., McGee, J., & Shera, C. A. (2012b). Probing cochlear tuning and tonotopy in the tiger using otoacoustic emissions. Journal of Comparative Physiology A, 198(8), 617–624.

Bergevin, C., Manley, G. A., & Köppl, C. (2015a). Salient features of otoacoustic emissions are common across tetrapod groups and suggest shared properties of generation mechanisms. Proceedings of the National Academy of Sciences of the United States of America, 112(11), 3362–3367.

Bergevin, C., McKetton, L., Stone, V., Grahn, J., & Purcell, D. (2015b). No otoacoustic evidence for a peripheral basis underlying absolute pitch. The Journal of the Acoustical Society of America, 137, 2409.

Berlin, C. I., Hood, L., Morlet, T., Rose, K., & Brashears, S. (2003). Auditory neuropathy/dys-synchrony: Diagnosis and management. Mental Retardation and Developmental Disabilities Research Reviews, 9, 225–231.

Bharadwaj, H. M., Masud, S., Mehraei, G., Verhulst, S., & Shinn-Cunningham, B. G. (2015). Individual differences reveal correlates of hidden hearing deficits. The Journal of Neuroscience, 35(5), 2161–2172.

Bialek, W. (2012). Biophysics: Searching for Principles. Princeton, NJ: Princeton University Press.

Bialek, W., & Wit, H. P. (1984). Quantum limits to oscillator stability: Theory and experiments on acoustic emissions from the human ear. Physics Letters A, 104(3), 173–178.

Bian, L., Chertoff, M. E., & Miller, E. (2002). Deriving a cochlear transducer function from low-frequency modulation of distortion product otoacoustic emissions. The Journal of the Acoustical Society of America, 112(1), 198–210.

Boege, P., & Janssen, T. (2002). Pure-tone threshold estimation from extrapolated distortion product otoacoustic emission I/O-functions in normal and cochlear hearing loss ears. The Journal of the Acoustical Society of America, 111(4), 1810–1818.

Boothalingam, S., Allan, C., Allen, P., & Purcell, D. (2015). Cochlear delay and medial olivocochlear functioning in children with suspected auditory processing disorder. PLoS ONE, 10(8), e0136906.

Botti, T., Sisto, R., Sanjust, F., Moleti, A., & D’Amato, L. (2016). Distortion product otoacoustic emission generation mechanisms and their dependence on stimulus level and primary frequency ratio. The Journal of the Acoustical Society of America, 139(2), 658–673.

Brown, A. M. (1987). Acoustic distortion from rodent ears: A comparison of responses from rats, guinea pigs and gerbils. Hearing Research, 31(1), 25–37.

Charaziak, K. K., Souza, P., & Siegel, J. H. (2013). Stimulus-frequency otoacoustic emission suppression tuning in humans: Comparison to behavioral tuning. Journal of the Association for Research in Otolaryngology, 14, 843–862.

Cheatham, M. A., Goodyear, R. J., Homma, K., Legan, P. K., Korchagina, J., Naskar, S., Siegel, J. H., Dallos, P., Zheng, J., & Richardson, G. P. (2014). Loss of the tectorial membrane protein CEACAM16 enhances spontaneous, stimulus-frequency, and transiently evoked otoacoustic emissions. The Journal of Neuroscience, 34(31), 10325–10338.

Choi, Y. S., Lee, S. Y., Parham, K., Neely, S. T., & Kim, D. O. (2008). Stimulus-frequency otoacoustic emission: Measurements in humans and simulations with an active cochlear model. The Journal of the Acoustical Society of America, 123(5), 2651–2669.

Dalhoff, E., Turcanu, D., & Gummer, A. W. (2011). Forward and reverse transfer functions of the middle ear based on pressure and velocity DPOAEs with implications for differential hearing diagnosis. Hearing Research, 280, 86–99.

Dallos, P. (2008). Cochlear amplification, outer hair cells and prestin. Current Opinion in Neurobiology, 18, 370–376.

Dallos, P., & Corey, M. E. (1991). The role of outer hair cell motility in cochlear tuning. Current Opinion in Neurobiology, 1(2), 215–220.

de Kleine, E., Wit, H. P., & van Dijk, P. (2000). The behavior of spontaneous otoacoustic emissions during and after postural changes. The Journal of the Acoustical Society of America, 107(6), 3308–3316.

Dinis, L., Martin, P., Barral, J., Prost, J., & Joanny, J. (2012). Fluctuation-response theorem for the active noisy oscillator of the hair cell bundle. Physical Review Letters, 109, 160602.

Dong, W., & Olson, E. S. (2006). Middle ear forward and reverse transmission in gerbil. Journal of Neurophysiology, 95(5), 2951–2961.

Dong, W., & Olson, E. S. (2008). Supporting evidence for reverse cochlear traveling waves. The Journal of the Acoustical Society of America, 123(1), 222–240.

Dorn, P. A., Konrad-Martin, D., Neely, S. T., Keefe, D. H., Cyr, E., & Gorga, M. P. (2001). Distortion product otoacoustic emission input/output functions in normal-hearing and hearing-impaired human ears. The Journal of the Acoustical Society of America, 110(6), 3119–3131.

Duifhuis, H. (2012). Cochlear Mechanics: Introduction to a Time Domain Analysis of the Nonlinear Cochlea. New York: Springer-Verlag.

Duke, T. A. J., & Jülicher, F. (2008). Critical oscillators as active elements in hearing. In G. A. Manley, R. R. Fay, & A. N. Popper (Eds.), Active Processes and Otoacoustic Emissions in Hearing (pp. 63–92). New York: Springer-Verlag.

Elliott, S. J., Ku, E. M., & Lineton, B. (2007). A state space model for cochlear mechanics. The Journal of the Acoustical Society of America, 122(5), 2759–2771.

Engebretson, A. M., & Eldredge, D. H. (1968). Model for the nonlinear characteristics of cochlear potentials. The Journal of the Acoustical Society of America, 44(2), 548–554.

Epp, B., Verhey, J. L., & Mauermann, M. (2010). Modeling cochlear dynamics: Interrelation between cochlea mechanics and psychoacoustics. The Journal of the Acoustical Society of America, 128(4), 1870–1883.

Epp, B., Wit, H. P., & van Dijk, P. (2015). Clustering of cochlear oscillations in frequency plateaus as a tool to investigate SOAE generation. In K. D. Karavitaki & D. P. Corey (Eds.), Mechanics of Hearing: Protein to Perception: Proceedings of the 12th International Workshop on the Mechanics of Hearing, Cape Sounio, Greece, June 23–29, 2014 (pp. 090025-1–090025-6). Melville, NY: American Institute of Physics Conference Proceedings 1703.

Eustaquio-Martín, A., & Lopez-Poveda, E. A. (2011). Isoresponse versus isoinput estimates of cochlear filter tuning. Journal of the Association for Research in Otolaryngology, 12, 281–299.

Francis, N. A., & Guinan, J. J. (2010). Acoustic stimulation of human medial olivocochlear efferents reduces stimulus-frequency and click-evoked otoacoustic emission delays: Implications for cochlear filter bandwidths. Hearing Research, 267(1–2), 36–45.

French, A. P. (1971). Vibrations and Waves. New York: W. W. Norton & Company.

Fruth, F., Jülicher, F., & Lindner, B. (2014). An active oscillator model describes the statistics of spontaneous otoacoustic emissions. Biophysical Journal, 107(4), 815–824.

Garinis, A., Werner, L., & Abdala, C. (2011). The relationship between MOC reflex and masked threshold. Hearing Research, 282, 128–137.

Goldstein, J. L., Baer, T., & Kiang, N. Y.-S. (1971). A theoretical treatment of latency, group delay, and tuning characteristics for auditory nerve responses to clicks and tones. In M. B. Sachs (Ed.), Physiology of the Auditory System (pp. 133–141). Baltimore, MD: National Education Consultants.

Goodman, S. S., Fitzpatrick, D. F., Ellison, J. C., Jesteadt, W., & Keefe, D. H. (2009). High-frequency click-evoked otoacoustic emissions and behavioral thresholds in humans. The Journal of the Acoustical Society of America, 125(2), 1014–1032.

Gorga, M. P., Neely, S. T., Bergman, B., Beauchaine, K. L., Kaminski, J. R., Peters, J., & Jesteadt, W. (1993). Otoacoustic emissions from normal-hearing and hearing-impaired subjects: Distortion product responses. The Journal of the Acoustical Society of America, 93(4), 2050–2060.

Gruhlke, A., Birkholz, C., Neely, S. T., Kopun, J., Tan, H., Jesteadt, W., Schmid, K., & Gorga, M. P. (2012). Distortion-product otoacoustic emission suppression tuning curves in hearing-impaired humans. The Journal of the Acoustical Society of America, 132(5), 3292–3304.

Hansen, R., Santurette, S., & Verhulst, S. (2014). Effects of spontaneous otoacoustic emissions on pure-tone frequency difference limens. The Journal of the Acoustical Society of America, 136(6), 3147–3158.

He, W., Fridberger, A., Porsov, E., Grosh, K., & Ren, T. (2008). Reverse wave propagation in the cochlea. Proceedings of the National Academy of Sciences of the United States of America, 105(7), 2729–2733.

Hudspeth, A. J. (2008). Making an effort to listen: Mechanical amplification in the ear. Neuron, 59(4), 530–545.

Janssen, T., & Müller, J. (2008). Otoacoustic emissions as a diagnostic tool in a clinical context. In G. A. Manley, R. R. Fay, & A. N. Popper (Eds.), Active Processes and Otoacoustic Emissions in Hearing (pp. 421–460). New York: Springer-Verlag.

Jaramillo, F., & Wiesenfeld, K. (1998). Mechanoelectrical transduction assisted by Brownian motion: A role for noise in the auditory system. Nature Neuroscience, 1(5), 384–388.

Johannesma, P. (1980). Narrow band filters and active resonators. In G. van den Brink & F. Bilsen (Eds.), Psychophysical, Physiological and Behavioural Studies in Hearing: Proceedings of the 5th International Symposium on Hearing, Noordwijkerhout, The Netherlands, April 8–12, 1980 (pp. 62–63). Delft, The Netherlands: Delft University Press.

Johnson, T. A., Neely, S. T., Garner, C. A., & Gorga, M. P. (2006). Influence of primary-level and primary-frequency ratios on human distortion product otoacoustic emissions. The Journal of the Acoustical Society of America, 119(1), 418–428.

Joris, P. X., Bergevin, C., Kalluri, R., McLaughlin, M., Michelet, P., van der Heijden, M., & Shera, C. A. (2011). Frequency selectivity in Old-World monkeys corroborates sharp cochlear tuning in humans. Proceedings of the National Academy of Sciences of the United States of America, 108(42), 17516–17520.

Kalluri, R., & Shera, C. A. (2001). Distortion-product source unmixing: A test of the two-mechanism model for DPOAE generation. The Journal of the Acoustical Society of America, 109(2), 622–637.

Kalluri, R., & Shera, C. A. (2007). Near equivalence of human click-evoked and stimulus-frequency otoacoustic emissions. The Journal of the Acoustical Society of America, 121(4), 2097–2110.

Kantz, H., & Schreiber, T. (2004). Nonlinear Time Series Analysis. Cambridge, UK: Cambridge University Press.

Keefe, D. H. (2012). Moments of click-evoked otoacoustic emissions in human ears: Group delay and spread, instantaneous frequency and bandwidth. The Journal of the Acoustical Society of America, 132(5), 3319–3350.

Keefe, D., Burns, E., Ling, R., & Laden, B. (1990). Chaotic dynamics of otoacoustic emissions. In P. Dallos, C. D. Geisler, J. W. Matthews, M. A. Ruggero, & C. R. Steele (Eds.), The Mechanics and Biophysics of Hearing (pp. 194–201). New York: Springer-Verlag.

Kemp, D. T. (1978). Stimulated acoustic emissions from within the human auditory system. The Journal of the Acoustical Society of America, 64(5), 1386–1391.

Kemp, D. T. (1986). Otoacoustic emissions, travelling waves and cochlear mechanisms. Hearing Research, 22, 95–104.

Kemp, D. T., Ryan, S., & Bray, P. (1990). A guide to the effective use of otoacoustic emissions. Ear and Hearing, 11(2), 93–105.

Kiang, N., Liberman, M., Sewell, W., & Guinan, J. J. (1986). Single unit clues to cochlear mechanisms. Hearing Research, 22, 171–182.

Knight, R. D., & Kemp, D. T. (2000). Indications of different distortion product otoacoustic emission mechanisms from a detailed f1,f2 area study. The Journal of the Acoustical Society of America, 107(1), 457.

Köppl, C., & Manley, G. A. (1994). Spontaneous otoacoustic emissions in the bobtail lizard. II: Interactions with external tones. Hearing Research, 72, 159–170.

Kössl, M., & Boyan, G. S. (1998). Otoacoustic emissions from a nonvertebrate ear. Naturwissenschaften, 85, 124–127.

Kozlov, A. S., Andor-Ardó, D., & Hudspeth, A. J. (2012). Anomalous Brownian motion discloses viscoelasticity in the ear’s mechanoelectrical-transduction apparatus. Proceedings of the National Academy of Sciences of the United States of America, 109(7), 2896–2901.

Ku, E. M., Elliott, S. J., & Lineton, B. (2008). Statistics of instabilities in a state space model of the human cochlea. The Journal of the Acoustical Society of America, 124(2), 1068–1079.

Ku, E. M., Elliott, S. J., & Lineton, B. (2009). Limit cycle oscillations in a nonlinear state space model of the human cochlea. The Journal of the Acoustical Society of America, 126, 739–750.

Kuroda, T. (2007). Clinical investigation on spontaneous otoacoustic emission (SOAE) in 447 ears. Auris Nasus Larynx, 34, 29–38.

Liberman, M. C., Gao, J., He, D. Z., Wu, X., Jia, S., & Zuo, J. (2002). Prestin is required for electromotility of the outer hair cell and for the cochlear amplifier. Nature, 419, 300–304.

Liberman, M. C., Zuo, J., & Guinan, J. J. (2004). Otoacoustic emissions without somatic motility: Can stereocilia mechanics drive the mammalian cochlea? The Journal of the Acoustical Society of America, 116(3), 1649–1655.

Liu, Y., & Hatzinakos, D. (2014). Earprint: Transient evoked otoacoustic emission for biometrics. IEEE Transactions on Information Forensics and Security, 9(12), 2291–2301.

Long, G. R., & Tubis, A. (1988). Investigations into the nature of the association between threshold microstructure and otoacoustic emissions. Hearing Research, 36(2–3), 125–138.

Lonsbury-Martin, B. L., & Martin, G. K. (2001). Evoked otoacoustic emissions as objective screeners for ototoxicity. Seminars in Hearing, 22(4), 377–392.

Lonsbury-Martin, B. L., & Martin, G. K. (2008). Otoacoustic emissions: Basic studies in mammalian models. In G. A. Manley, R. R. Fay, & A. N. Popper (Eds.), Active Processes and Otoacoustic Emissions in Hearing (pp. 261–304). New York: Springer-Verlag.

Lonsbury-Martin, B. L., Martin, G. K., Probst, R., & Coats, A. C. (1988). Spontaneous otoacoustic emissions in a nonhuman primate. II. Cochlear anatomy. Hearing Research, 33(1), 69–93.

López, H. M., Gachelin, J., Douarche, C., Auradou, H., & Clément, E. (2015). Turning bacteria suspensions into superfluids. Physical Review Letters, 115, 028301.

Lukashkin, A. N., & Russell, I. J. (1998). A descriptive model of the receptor potential nonlinearities generated by the hair cell mechanoelectrical transducer. The Journal of the Acoustical Society of America, 103(2), 973–980.

Lukashkin, A. N., Lukashkina, V. A., & Russell, I. J. (2002). One source for distortion product otoacoustic emissions generated by low- and high-level primaries. The Journal of the Acoustical Society of America, 111(6), 2740–2748.

Magnan, P., Avan, P., Dancer, A., Smurzynski, J., & Probst, R. (1997). Reverse middle-ear transfer function in the guinea pig measured with cubic difference tones. Hearing Research, 107(1–2), 41–45.

Manley, G. A. (1983). Frequency spacing of acoustic emissions: A possible explanation. In W. R. Webster (Ed.), Mechanisms of Hearing (pp. 36–39). Clayton, VIC, Australia: Monash University Press.

Manley, G. A. (1997). Diversity in hearing-organ structure and the characteristics of spontaneous otoacoustic emissions in lizards. In E. R. Lewis, G. R. Long, R. F. Lyon, P. M. Narins, & C. R. Steele (Eds.), Diversity in Auditory Mechanics (pp. 32–38). Singapore: World Scientific Publishing Co.

Manley, G. A. (2000). Cochlear mechanisms from a phylogenetic viewpoint. Proceedings of the National Academy of Sciences of the United States of America, 97(22), 11736–11743.

Manley, G. A. (2001). Evidence for an active process and a cochlear amplifier in nonmammals. Journal of Neurophysiology, 86(2), 541–549.

Manley, G. A., & Gallo, L. (1997). Otoacoustic emissions, hair cells, and myosin motors. The Journal of the Acoustical Society of America, 102(2), 1049–1055.

Manley, G. A., & van Dijk, P. (2016). Frequency selectivity of the human cochlea: Suppression tuning of spontaneous otoacoustic emissions. Hearing Research, 336, 53–62.

Manley, G. A., Gallo, L., & Köppl, C. (1996). Spontaneous otoacoustic emissions in two gecko species, Gekko gecko and Eublepharis macularius. The Journal of the Acoustical Society of America, 99, 1588–1603.

Manley, G. A., Fay, R. R., & Popper, A. N. (Eds.). (2008). Active Processes and Otoacoustic Emissions in Hearing. New York: Springer-Verlag.

Martin, G. K., Lonsbury-Martin, B. L., Probst, R., & Coats, A. C. (1988). Spontaneous otoacoustic emissions in a nonhuman primate. I. Basic features and relations to other emissions. Hearing Research, 33(1), 49–68.

Mauermann, M., & Kollmeier, B. (2004). Distortion product otoacoustic emission (DPOAE) input/output functions and the influence of the second DPOAE source. The Journal of the Acoustical Society of America, 116(4), 2199–2212.