Abstract

Automatic vessel delineation remains challenging because of complexities in the acquisition of retinal images. Although great progress has been made in this field, it remains the subject of ongoing research, as there is a need to further improve the delineation of both large and thin retinal vessels as well as the computational speed. Texture and color are promising, as they are very good features for object detection in computer vision. This paper presents an investigatory study of the sum average Haralick feature (SAHF) using a multi-scale approach over two different color spaces, CIELab and RGB, for the delineation of retinal vessels. Experimental results show that the method is robust for the delineation of retinal vessels, achieving fast computation with a maximum average accuracy of 95.67% and a maximum average sensitivity of 81.12% on the DRIVE database. When compared with previous methods, the method investigated in this paper achieves higher average accuracy and sensitivity rates on DRIVE.

1 Introduction

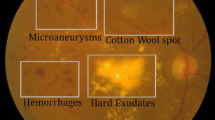

Retinal fundus imaging has been very useful to ophthalmologists for the medical diagnosis and progression monitoring of diabetic retinopathy (DR) [2]. Although several digital imaging modalities are used in ophthalmology, colored fundus photography remains an important retinal imaging modality due to its safety and cost-effective mode of retinal abnormalities documentation [2].

Image segmentation, which is an important step in image analysis, involves the partitioning of a digital image into multiple regions having the same attributes like intensity, texture or color [28]. It is applied for the detection of boundaries, objects or parts of images. There are several important anatomic structures in the human retina. The robust segmentation of the different anatomic structures of the retina is necessary for a reliable characterisation of healthy or diseased retina. Several automated techniques have successfully been used to detect different anatomic features as well as retinopathy features in retinal images [24].

As image analysis continues to assist ophthalmologists in achieving accurate diagnosis and efficient management of a larger number of retinopathy patients, they focus on the analysis of retinal vessel morphological features, such as tortuosity measurement, after detecting the vessel network in the retinal images [3, 10]. Retinal vessel delineation is the process of detecting the vessel network in retinal images. Efficient retinal vessel delineation and vessel feature analysis are required for the diagnosis and progress monitoring of the various retinopathies and vascular diseases.

Texture, color and shape are very good features applied for object detection in computer vision. The investigation of texture over different color spaces for object detection or recognition is very important [9, 17]. This is due to the fact that color and texture are two major properties of the image required for image analysis [25], and the good performance of texture in image analysis is strongly influenced by the color representation chosen [16, 19]. While most of the automatic vessel segmentation methods have often utilized the green channel of the RGB color and the grayscale of the retinal images, there is a need to further investigate the use of other color spaces for the delineation of the vessels. Since the analysis of color-texture has been important in image analysis, two different color spaces will be investigated and evaluated for the delineation of the vessels using texture information.

2 Related Works

The retinal vessel segmentation methods proposed in the literature can be categorized into supervised and unsupervised approaches. In supervised vessel segmentation methods [12, 15, 20, 22, 23], different algorithms are used to learn the set of rules required for retinal vessel extraction. A set of retinal vessels manually segmented by trained and skilled personnel is considered as the reference image. These reference images are used in the training phase of the supervised segmentation techniques. Reliable training samples for supervised image segmentation can be expensive or sometimes unavailable [7]. The supervised methods are also computationally expensive since training time is required. Another major drawback of the supervised vessel segmentation techniques is the high dependence of their performance on the training samples. The methods based on unsupervised segmentation [5, 6, 8, 10, 11, 21], on the other hand, discover and utilize the underlying patterns of blood vessels to determine whether a particular pixel of the retinal image is a vessel pixel or not. Training samples are, however, not required for the unsupervised segmentation methods.

Automated retinal vessel delineation faces several challenges, such as varying retinal vessel widths, low contrast of thinner vessels and nonhomogeneous illumination across the retinal images [8]. Single-scale matched filters respond weakly because of the large variation in the widths of the vessels. To handle this limitation, several authors have introduced multi-scale filters for the segmentation of vessel networks. Martinez-Perez et al. [13] combined scale-space analysis with region growing to detect the vessel network. The method, however, failed to segment the thin vessels, and it produced many false vessel-like structures at the border of the optic disc. A multi-scale retinal vessel segmentation method was implemented in [26]. Multi-scale line tracking was applied for the vessel detection and morphological operations were applied in post-processing. The drawback of the method proposed in [26] is its inability to segment the thin vessels. Li et al. [8] applied the multi-scale production of the matched filter (MPMF) responses as a multi-scale data fusion strategy. The proposed MPMF vessel extraction scheme applied multi-scale matched filtering and scale multiplication in the image enhancement step and double thresholding in the vessel classification step. This method required 8 s to detect vessels without post-processing and 30 s when combined with a post-processing phase, so most of the time was spent on post-processing. Although the method was faster without post-processing, its accuracy rate was relatively low.

Multi-wavelet kernels combined with multi-scale hierarchical decomposition were proposed in [27] for the detection of retinal vessels. Vessels were enhanced using matched filtering with multi-wavelet kernels. The enhanced image was normalised using multi-scale hierarchical decomposition. A local adaptive thresholding technique based on the vessel edge information was used to generate the segmented vessels. Although good accuracy rates were obtained, this method fails to detect thin vessels. Another drawback of this method is the average computational time of 210 s (3.5 min) required to segment vessels in each retinal image. Patasius et al. [18] investigated different color spaces and affirmed the usefulness of the green channel of RGB and the hue component of HSV for the delineation of blood vessels, while the S component of HSV can be applied for reflex detection. Soares et al. [22] implemented a supervised segmentation method based on the two-dimensional (2-D) Gabor wavelet transform combined with a Bayesian classifier for the segmentation of retinal vessels. A feature vector comprising multi-scale 2-D Gabor wavelet transform responses and pixel intensity was generated from the retinal images for training the classifier. Each pixel was then classified as vessel or non-vessel using the Bayesian classifier. Although the technique performed well, segmentation of thinner vessels and false detections around the border of the optic disc remain a challenge. Another drawback is that the method required 9 hours for the training phase and an average time of about 190 s (3 min, 10 s) to segment vessels in each retinal image.

3 Methods and Techniques

The segmentation of retinal vessels faces several challenges, from varying retinal vessel widths to low contrast of thinner vessels and nonhomogeneous illumination across the retinal images [8]. Although existing methods have made great progress in this field, it remains the subject of ongoing research, as there is a need to further improve the detection of both large and thin vessels as well as the computational speed.

Because of the non-homogeneous illumination, the low contrast of thin vessels and the large variation in the widths of the retinal vessels [8], this study investigates the use of sum average, a Haralick texture feature [4], over different color spaces using an unsupervised segmentation approach for the delineation of large and thin retinal vessels. This texture feature and some other Haralick texture features have been applied through supervised image segmentation in the literature [4]. While this texture feature has previously been applied in a supervised learning approach, the contribution of this work lies in the investigation of the sum average Haralick feature (SAHF) using a multi-scale approach over two different color spaces, CIELab and RGB, in an unsupervised manner. This study further contributes by investigating SAHF using a multi-scale approach over a hybrid of the two color spaces. Although RGB is not a perceptually uniform color space, it is widely used. CIELab, on the other hand, is not often used. This study investigates the influence of these different color spaces on the use of SAHF in the detection of retinal vessels.

The ‘L’ channel of the CIELab color space and the green channel of the RGB color space of the retinal image are sharpened by applying an unsharp filter. An average filter is then applied to smooth the image. The image contrast is then enhanced to improve the contrast of thin vessels in the image. A median filter with local window size \(w \times w\) is further applied to the enhanced image as

where U(i,j) is the filtered retinal image, \(V^1(x,y)\) is the result obtained after an unsharp filter with mean filter has been applied, and H(x,y) is a local median filter with window size (w \(\times \) w). The width of the retinal vessels can vary from very large (15 pixels) to very small (3 pixels) [8]. The window size (15 \(\times \) 15) is selected because it adequately covers the very large vessels. In order to achieve illumination balance across the image, an image D(x,y) is computed as

This is followed by the computation of the local adaptive threshold based on the SAHF information. Sum average feature [4] is extracted from the ‘a’ channel of CIELAB color space. Since the width of retinal vessels can vary from very large (15 pixels) to very small (3 pixels) [8], a multi-scale approach is applied to the SAHF information, considering the pixel of interest in relation to its spatial neighbourhood, to compute a local adaptive threshold. The multi-scale thresholding approach handles the challenge of vessel width variation. The multi-scale approach investigates the distances \((d_i)_{i=1,\ldots ,4}\) across the four orientations (horizontal: 0\(^{\circ }\), diagonal: 45\(^{\circ }\), vertical: 90\(^{\circ }\) and anti-diagonal: 135\(^{\circ }\)), as this covers an adequate spectrum of vessel texture information (4 \(\times \) 4 = 16) to compute the local threshold for each pixel of interest. The grey level co-occurrence matrix (GLCM) for the retinal fundus image is first computed (for more information on computing the GLCM, see [4]).
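The 16 distance and orientation combinations can be enumerated explicitly. The following is a minimal Python sketch, not the authors' MATLAB code. The names `UNIT_STEPS` and `multiscale_offsets` are hypothetical, and the sign convention (image rows grow downward, so 45° points up and to the right) is an assumption.

```python
# Hypothetical helper: (row, col) neighbour offsets used when building a GLCM.
# Assumption: image rows grow downward, so 45 degrees points up and to the right.
UNIT_STEPS = {
    0: (0, 1),      # horizontal
    45: (-1, 1),    # diagonal
    90: (-1, 0),    # vertical
    135: (-1, -1),  # anti-diagonal
}


def multiscale_offsets(max_distance=4):
    """Return {(d, angle): (row_offset, col_offset)} for d = 1..max_distance."""
    return {
        (d, angle): (d * dr, d * dc)
        for d in range(1, max_distance + 1)
        for angle, (dr, dc) in UNIT_STEPS.items()
    }


offsets = multiscale_offsets()
print(len(offsets))      # 16 combinations: 4 distances x 4 orientations
print(offsets[(3, 45)])  # (-3, 3)
```

Each of the 16 offsets yields one co-occurrence matrix, and therefore one sum average value, per neighbourhood.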

The sum average feature over the varying distances, d, and orientations, \(\varPhi \), is computed as

\[ f_{SA}(d,\varPhi ) = \sum _{k=0}^{2(N_g-1)} k\, p_{x+y}(k) \]

where \(\displaystyle {p}_{x+y}(k) = \mathop {\sum _{i=0}^{N_g-1} \sum _{j=0}^{N_g-1}}_{i+j=k} p(i,j)\), \(N_g\) is the number of grey levels, and p(i, j) is the \((i,j)^{th}\) entry in a normalised grey level co-occurrence matrix of the retinal fundus image.
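The sum average computation can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes 0-based grey levels, a non-symmetric co-occurrence count, and the standard Haralick sum average, that is, the sum over k of k times \(p_{x+y}(k)\), which equals the sum of (i + j) p(i, j). The function names `glcm` and `sum_average` are hypothetical.

```python
def glcm(image, offset, levels):
    """Normalised, non-symmetric grey level co-occurrence matrix.

    image  : list of rows of integer grey levels in [0, levels - 1]
    offset : (row_offset, col_offset) of the neighbour pixel
    """
    dr, dc = offset
    rows, cols = len(image), len(image[0])
    counts = [[0] * levels for _ in range(levels)]
    total = 0
    for r in range(rows):
        for c in range(cols):
            rr, cc = r + dr, c + dc
            if 0 <= rr < rows and 0 <= cc < cols:
                counts[image[r][c]][image[rr][cc]] += 1
                total += 1
    if total == 0:
        raise ValueError("offset is larger than the image")
    return [[n / total for n in row] for row in counts]


def sum_average(p):
    """Sum over k of k * p_{x+y}(k), written as the sum of (i + j) * p(i, j)."""
    levels = len(p)
    return sum((i + j) * p[i][j] for i in range(levels) for j in range(levels))


# Toy 3-level image; horizontal neighbour at distance 1.
toy = [[0, 0, 1],
       [1, 2, 2],
       [0, 1, 2]]
p = glcm(toy, (0, 1), levels=3)
print(round(sum_average(p), 6))  # 2.0
```

In the toy image the six horizontal pairs have grey-level sums 0, 1, 3, 4, 1 and 3, so the sum average is 12 / 6 = 2.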

A feature matrix is computed using the multi-scale feature measurement of the sum average over the varying distances ‘d’ and orientations ‘\(\varPhi \)’ as:

such that

where \(\varPhi _1\)= 0\(^{\circ }\), \(\varPhi _2\)= 45\(^{\circ }\), \(\varPhi _3\)= 90\(^{\circ }\) and \(\varPhi _4\) = 135\(^{\circ }\), and \((d_i)_{i=1,\ldots ,4}\).

The adaptive thresholding value applied for the vessel segmentation is then computed as

such that \(\max (d)\) = 4.

The delineated vessel network is

where \(S_{image}\) represents the detected vessels obtained from ‘L’ channel of CIELab or the green channel of RGB.

In order to further improve the vessel detection rate, the combination of the detected vessels obtained from the ‘L’ channel of CIELab and the green channel of RGB was investigated. The two vessel networks are combined using an ‘OR’ operation as shown in Eq. 8:

\[ S_{image}^{L \oplus G} = S_{image}^{L} \vee S_{image}^{G} \tag{8} \]

where \(S_{image}^{L}\) is the detected vessels obtained from ‘L’ channel of CIELab and \(S_{image}^{G}\) is the detected vessels obtained from green channel of RGB.
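The ‘OR’ combination amounts to a pixel-wise logical OR of two binary masks. A minimal Python sketch follows; the function name `combine_or` and the toy masks are illustrative assumptions.

```python
def combine_or(mask_l, mask_g):
    """Pixel-wise logical OR of two binary vessel masks of equal shape."""
    if len(mask_l) != len(mask_g) or any(
        len(a) != len(b) for a, b in zip(mask_l, mask_g)
    ):
        raise ValueError("masks must have the same shape")
    return [
        [int(a or b) for a, b in zip(row_l, row_g)]
        for row_l, row_g in zip(mask_l, mask_g)
    ]


s_l = [[1, 0, 0],
       [0, 1, 0]]  # toy vessels from the 'L' channel
s_g = [[1, 0, 1],
       [0, 0, 0]]  # toy vessels from the green channel
print(combine_or(s_l, s_g))  # [[1, 0, 1], [0, 1, 0]]
```

Because an OR can only add vessel pixels, the combined mask contains every pixel found in either channel, at the possible cost of also keeping the false detections of both.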

Due to the presence of false vessel-like structures, there is a need for post-processing. A morphological operator based on area opening and a median filter with a \(2 \times 2\) sliding window are applied in the post-processing phase to \(S_{image}\) obtained from the adaptive thresholding technique and to \(S_{image}^{L \oplus G}\) to remove the false vessel-like structures. This is followed by subtracting the FOV mask from the result obtained after removing the false vessel-like structures to obtain the final delineated vessel networks in the circular field of view.
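Area opening can be illustrated as the removal of small connected components from the binary vessel mask. The sketch below is in Python and is not the authors' implementation. It assumes 8-connectivity, a hypothetical `min_area` parameter (the paper does not state the value used), and it interprets the FOV step as keeping only the pixels inside the field of view.

```python
from collections import deque


def area_open(mask, min_area):
    """Remove 8-connected foreground components with fewer than min_area pixels."""
    rows, cols = len(mask), len(mask[0])
    out = [[0] * cols for _ in range(rows)]
    seen = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first search collects one connected component.
            component, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                component.append((y, x))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            if len(component) >= min_area:
                for y, x in component:
                    out[y][x] = 1
    return out


def apply_fov(mask, fov):
    """Keep only pixels inside the field of view (fov is 1 inside, 0 outside)."""
    return [[int(m and f) for m, f in zip(mr, fr)] for mr, fr in zip(mask, fov)]


noisy = [[1, 1, 0, 0],
         [0, 1, 0, 1],
         [0, 0, 0, 0]]
print(area_open(noisy, min_area=2))
# [[1, 1, 0, 0], [0, 1, 0, 0], [0, 0, 0, 0]]
```

The isolated pixel in the second row is dropped, while the three-pixel component survives.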

Fig. 1. (a) Color retinal image 4 on DRIVE (b) Ground truth of image 4 on DRIVE (c) Manual delineation of the second observer of image 4 on DRIVE (d) Result of the investigated technique based on the ‘L’ channel of CIELAB on image 4 of DRIVE (e) Result of the investigated technique based on the green channel of RGB on image 4 of DRIVE (f) Result of the investigated hybrid technique on image 4 of DRIVE.

4 Experimental Results and Discussion

The experiments were conducted using MATLAB 2014a. The dataset utilized in this paper is the publicly available DRIVE database [1]. The times required to detect the vessels using the ‘L’ channel of CIELAB color space, the green channel of RGB color space and the hybrid technique are 2.8 s, 2.6 s and 3.7 s respectively. Figure 1 shows a color retinal image on DRIVE [1], the ground truth, the manual delineation of the second observer and the results obtained from the three different techniques investigated in this paper. Figure 2 shows two results obtained from two different images through the hybrid technique and their ground truth.

The performance measures utilized in this paper are sensitivity, specificity and accuracy measures (see Eqs. (9)–(11)). The ability of a method to detect vessels in the retinal images is indicated using the sensitivity while the ability of a segmentation method to detect the background in retinal images is indicated using specificity. The degree to which the overall segmented retinal image conforms to an expert’s ground truth is indicated using accuracy. In order to ascertain a good segmentation performance, the sensitivity, specificity and accuracy measures of a segmentation method must be high [11].

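\[ \text{Sensitivity} = \frac{TP}{TP + FN} \tag{9} \]

\[ \text{Specificity} = \frac{TN}{TN + FP} \tag{10} \]

\[ \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{11} \]

where TP, TN, FP and FN are the numbers of true positive, true negative, false positive and false negative pixels respectively.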
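The three measures can be computed directly from a predicted mask and a ground-truth mask. The Python sketch below uses the standard definitions (TP / (TP + FN), TN / (TN + FP) and (TP + TN) / total). The function names and the optional `fov` argument, which restricts counting to pixels inside the field of view, are assumptions for illustration.

```python
def confusion_counts(pred, truth, fov=None):
    """Count TP, TN, FP, FN over binary masks, optionally only inside the FOV."""
    tp = tn = fp = fn = 0
    for r, (pred_row, truth_row) in enumerate(zip(pred, truth)):
        for c, (p, t) in enumerate(zip(pred_row, truth_row)):
            if fov is not None and not fov[r][c]:
                continue  # skip pixels outside the field of view
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif not p and t:
                fn += 1
            else:
                tn += 1
    return tp, tn, fp, fn


def metrics(tp, tn, fp, fn):
    """Sensitivity, specificity and accuracy; assumes both classes are present."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
    }


pred = [[1, 0, 1, 0],
        [0, 0, 1, 0]]
truth = [[1, 0, 0, 0],
         [0, 1, 1, 0]]
print(confusion_counts(pred, truth))  # (2, 4, 1, 1)
print(metrics(*confusion_counts(pred, truth))["accuracy"])  # 0.75
```

For the toy masks, sensitivity is 2/3, specificity is 4/5 and accuracy is 6/8.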

All the techniques investigated in this study achieved mean sensitivity rates ranging from 0.7409 to 0.8112 with average accuracy rates ranging from 0.9421 to 0.9567 on DRIVE database (see Table 1). The average accuracy rates of 0.9531 and 0.9567 by the SAHF green channel of RGB and SAHF luminance channel of CIELAB respectively compared favourably with the average accuracy rate 0.9421 obtained from the investigated hybrid technique. The average sensitivity rate of 0.8112 obtained from the investigated hybrid technique is, however, higher than the average sensitivity rates of 0.7674 and 0.7409 obtained by the investigated SAHF green channel of RGB and SAHF luminance channel of CIELAB respectively. This reflects the improvement in the vessel detection achieved by the hybrid technique over the individual SAHF green channel of RGB and SAHF luminance channel of CIELAB techniques.

The sensitivity and accuracy rates of all the investigated techniques compare favourably with the average sensitivity rate of 0.7145 and the average accuracy rate of 0.9416 presented in [15]. The average sensitivity rates of all the investigated techniques also compare favourably with the average sensitivity rate of 0.7345 presented in [23]. Only the two average accuracy rates of 0.9531 and 0.9567, obtained by the SAHF green channel of RGB and the SAHF luminance channel of CIELAB respectively, are higher than the average accuracy rate of 0.9442 presented by Staal et al. [23]. These two accuracy rates are also higher than the average accuracy rates of 0.9452, 0.9387 and 0.9466 obtained by Marin et al. [12], Saffarzadeh et al. [21] and Soares et al. [22] respectively. Martinez-Perez et al. [13], Mendonca et al. [14] and Yin et al. [29] present lower average sensitivity rates of 0.6389, 0.7315 and 0.6522, with average accuracy rates of 0.9181, 0.9463 and 0.9267 respectively, when compared with all the SAHF-based methods investigated in this paper. The human observer [1] presents a higher average sensitivity rate of 0.7761 than the average sensitivity rates of 0.7674 and 0.7409 obtained by the investigated SAHF green channel of RGB and SAHF luminance channel of CIELAB respectively. This rate is, however, lower than the average sensitivity rate of 0.8112 obtained by the investigated SAHF hybrid technique.

5 Conclusion and Future Work

This paper presented an investigation of the delineation of vessels in retinal images through the sum average Haralick feature (SAHF) using a multi-scale approach over two different color spaces, CIELab and RGB. It also presented a study of SAHF using a multi-scale approach over a hybrid of the two color spaces. Experimental results on the DRIVE database show that the hybrid method improved the average sensitivity when compared with SAHF applied to CIELab or RGB alone. Experimental results also show that the hybrid method and the multi-scale approach over the two color spaces achieved higher average sensitivity and accuracy rates with faster computational time when compared with the previous methods on DRIVE. In the future, we shall study efficient ways of characterising the delineated retinal vessels.

References

1. Research section, digital retinal image for vessel extraction (drive) database. Utrecht, The Netherlands, University Medical Center Utrecht, Image Sciences Institute. http://www.isi.uu.nl/Research/Databases/DRIVE

2. Abràmoff, M.D., Garvin, M.K., Sonka, M.: Retinal imaging, image analysis. IEEE Rev. Biomed. Eng. 3, 169–208 (2010)

3. Davitt, B.V., Wallace, D.K.: Plus disease. Surv. Ophthalmol. 54(6), 663–670 (2009)

4. Haralick, R.M., Shanmugam, K., Dinstein, I.H.: Textural features for image classification. IEEE Trans. Systems Man Cybern. 3(6), 610–621 (1973)

5. Hoover, A., Kouznetsova, V., Goldbaum, M.: Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response. IEEE Trans. Med. Imaging 19(3), 203–210 (2000)

6. Jiang, X., Mojon, D.: Adaptive local thresholding by verification-based multithreshold probing with application to vessel detection in retinal images. IEEE Trans. Pattern Anal. Mach. Intell. 25(1), 131–137 (2003)

7. Li, B., Li, H.K.: Automated analysis of diabetic retinopathy images: principles, recent developments, and emerging trends. Curr. Diab. Rep. 13(4), 453–459 (2013)

8. Li, Q., You, J., Zhang, D.: Vessel segmentation and width estimation in retinal images using multiscale production of matched filter responses. Expert Syst. Appl. 39(9), 7600–7610 (2012)

9. Mäenpää, T., Pietikäinen, M.: Classification with color and texture: jointly or separately? Pattern Recogn. 37(8), 1629–1640 (2004)

10. Mapayi, T., Tapamo, J.-R., Viriri, S., Adio, A.: Automatic retinal vessel detection and tortuosity measurement. Image Anal. Stereology 35(2), 117–135 (2016)

11. Mapayi, T., Viriri, S., Tapamo, J.-R.: Comparative study of retinal vessel segmentation based on global thresholding techniques. Comput. Math. Methods Med. 2015 (2015)

12. Marín, D., Aquino, A., Gegúndez-Arias, M.E., Bravo, J.M.: A new supervised method for blood vessel segmentation in retinal images by using gray-level, moment invariants-based features. IEEE Trans. Med. Imaging 30(1), 146–158 (2011)

13. Martínez-Pérez, M.E., Hughes, A.D., Stanton, A.V., Thom, S.A., Bharath, A.A., Parker, K.H.: Retinal blood vessel segmentation by means of scale-space analysis and region growing. In: Taylor, C., Colchester, A. (eds.) MICCAI 1999. LNCS, vol. 1679, pp. 90–97. Springer, Heidelberg (1999). doi:10.1007/10704282_10

14. Mendonca, A.M., Campilho, A.: Segmentation of retinal blood vessels by combining the detection of centerlines and morphological reconstruction. IEEE Trans. Med. Imaging 25(9), 1200–1213 (2006)

15. Niemeijer, M., Staal, J., van Ginneken, B., Loog, M., Abramoff, M.D.: Comparative study of retinal vessel segmentation methods on a new publicly available database. In: Medical Imaging 2004, pp. 648–656. International Society for Optics and Photonics (2004)

16. Ohta, Y.-I., Kanade, T., Sakai, T.: Color information for region segmentation. Comput. Graph. Image Process. 13(3), 222–241 (1980)

17. Palm, C.: Color texture classification by integrative co-occurrence matrices. Pattern Recogn. 37(5), 965–976 (2004)

18. Patasius, M., Marozas, V., Jegelevicius, D., Lukoševičius, A.: Ranking of color space components for detection of blood vessels in eye fundus images. In: Sloten, J.V., Verdonck, P., Nyssen, M., Haueisen, J. (eds.) 4th European Conference of the International Federation for Medical and Biological Engineering, pp. 464–467. Springer, Heidelberg (2009)

19. Pratt, W.: Spatial transform coding of color images. IEEE Trans. Commun. Technol. 19(6), 980–992 (1971)

20. Ricci, E., Perfetti, R.: Retinal blood vessel segmentation using line operators and support vector classification. IEEE Trans. Med. Imaging 26(10), 1357–1365 (2007)

21. Saffarzadeh, V.M., Osareh, A., Shadgar, B.: Vessel segmentation in retinal images using multi-scale line operator, K-means clustering. J. Med. Sig. Sens. 4(2), 122 (2014)

22. Soares, J.V., Leandro, J.J., Cesar, R.M., Jelinek, H.F., Cree, M.J.: Retinal vessel segmentation using the 2-D gabor wavelet, supervised classification. IEEE Trans. Med. Imaging 25(9), 1214–1222 (2006)

23. Staal, J., Abràmoff, M.D., Niemeijer, M., Viergever, M.A., van Ginneken, B.: Ridge-based vessel segmentation in color images of the retina. IEEE Trans. Med. Imaging 23(4), 501–509 (2004)

24. Tobin, K.W., Edward Chaum, V., Govindasamy, P., Karnowski, T.P.: Detection of anatomic structures in human retinal imagery. IEEE Trans. Med. Imaging 26(12), 1729–1739 (2007)

25. Van de Wouwer, G., Scheunders, P., Livens, S., Van Dyck, D.: Wavelet correlation signatures for color texture characterization. Pattern Recogn. 32(3), 443–451 (1999)

26. Vlachos, M., Dermatas, E.: Multi-scale retinal vessel segmentation using line tracking. Comput. Med. Imaging Graph. 34(3), 213–227 (2010)

27. Wang, Y., Ji, G., Lin, P., Trucco, E., et al.: Retinal vessel segmentation using multiwavelet kernels and multiscale hierarchical decomposition. Pattern Recogn. 46(8), 2117–2133 (2013)

28. Yang, Y., Huang, S.: Image segmentation by fuzzy C-means clustering algorithm with a novel penalty term. Comput. Inf. 26(1), 17–31 (2012)

29. Yin, Y., Adel, M., Bourennane, S.: Automatic segmentation and measurement of vasculature in retinal fundus images using probabilistic formulation. Comput. Math. Methods Med. 2013 (2013)

Acknowledgement

We thank DRIVE [1] for making the retinal images dataset publicly available.

© 2016 Springer International Publishing AG

Cite this paper

Mapayi, T., Tapamo, JR. (2016). SAHF: Unsupervised Texture-Based Multiscale with Multicolor Method for Retinal Vessel Delineation. In: Bebis, G., et al. Advances in Visual Computing. ISVC 2016. Lecture Notes in Computer Science(), vol 10072. Springer, Cham. https://doi.org/10.1007/978-3-319-50835-1_57

DOI: https://doi.org/10.1007/978-3-319-50835-1_57

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-50834-4

Online ISBN: 978-3-319-50835-1

eBook Packages: Computer Science, Computer Science (R0)