Abstract

Virtual reality is providing new tools to explore and quantify human social cognition. Here we review some recent studies using virtual characters to study imitation behaviour, with a focus on VR methods. We created virtual characters which demonstrate pointing actions and found that typical adults spontaneously copy action height. In a second study, we created virtual characters which mimic the head movement of a participant in a naturalistic conversation task, but found no evidence for increases in rapport or liking. These studies demonstrate how virtual characters can be used to examine social cognition, and the value of greater interaction between cognitive psychology and computing in future.

Keywords

- Virtual Characters (VC)

- Human Social Cognition

- Virtual Reality

- Imitative Behaviour

- Picture Description Task

1 Introduction

Social cognitive neuroscience aims to discover the information processing mechanisms in the brain which allow people to engage in social interaction. In recent years, researchers in this tradition have begun to use the methods of virtual reality to test and advance theories of human social behaviour. This paper reviews some work in this area, and considers how links between cognitive neuroscience and the study of virtual agents can strengthen in the future.

As an exemplar of social behaviour, we focus on imitation. Imitation occurs when one person performs an action and then another performs the same action, and thus is easy to recognise in daily life. However, the classification of different forms of imitation behaviour and the neural mechanisms which drive imitation remain hotly contested [1, 2]. Past studies of human imitation tend to fall into two categories: lab studies where a single participant responds to an item on a computer screen (e.g. imitates a hand movement or does not), and real-world studies where a participant imitates or is imitated by a confederate in the context of a natural interaction. The former have high levels of experimental control but are abstracted away from the real world. The latter have high ecological validity, but results may be contaminated by many factors which cannot be controlled, such as the mood and unconscious behaviours of the confederate.

Virtual reality provides researchers in cognitive neuroscience with a means to achieve both high ecological validity and high experimental control. Imitation behaviour, which involves matching of action between two people, is particularly amenable to VR, where a behaviour can be matched between a person and a virtual character. Here we review studies in which we have used virtual reality to explore imitation behaviour, with a focus on the VR methods used and the implications for future research. Note that full details of statistical results are reported elsewhere (Hale and Hamilton, submitted; Forbes et al., submitted). All three studies use the simple VR setup illustrated in Fig. 1, where participants are motion-tracked and view a life-size virtual character on the screen in front of them. Note that we do not use head-mounted displays or full immersion because we need participants to be 100 % confident in ownership of their own hands and bodies, which could be disrupted by use of an HMD.

1.1 Do Participants Spontaneously Imitate Virtual Characters?

We have previously shown that participants will spontaneously imitate a sequence of three actions performed by a virtual character (VC), with a faster response when the VC’s action matched the participant’s action than when the two did not match [3]. Here, we aimed to expand this result and test if participants would spontaneously imitate kinematic details of a VC’s action, in particular the height of the action above the table. Previous studies showed that participants spontaneously copy action height from video clips but that this effect was smaller in participants with autism [4].

Our study aimed to replicate the same effect in virtual reality, and to test if differences in the social engagement of the virtual character made a difference to the level of imitation (Fig. 2A). To implement this, we first captured the natural hand, arm and head movements of a demonstrator performing the pointing task (using Polhemus magnetic markers and MotionBuilder) and then mapped these to a VC in Vizard. When a participant arrived in the lab, Polhemus markers were fixed to his/her right index finger and forehead to track motion and allow the interactive task to proceed. The task consisted of a series of trials, where first the virtual character demonstrated a pointing sequence (from the prerecorded actions), then the participant was instructed to point to the dots in the same sequence. While the participant performed actions, the VC actively tracked the participant by always gazing at the marker on the participant’s forehead. This gave a clear feeling that the VC was watching and engaged with the participant. In a different block of trials, an avatar with a different appearance gave the same demonstrations but did not actively watch the participant and instead turned her head away. This allowed us to compare responses with and without social engagement from the virtual character.
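The gaze-following behaviour described above amounts to re-orienting the VC's head towards the tracked forehead marker on every frame. The following is a minimal sketch of that geometry in plain Python, not the Vizard implementation used in the study: the axis convention (x right, y up, z forward), the function names and the 40 degree averted angle are all assumptions made for illustration.

```python
import math


def gaze_angles(head_pos, target_pos):
    """Return (yaw, pitch) in degrees that aim a head at target_pos.

    Assumed convention (hypothetical): x is right, y is up, z is forward.
    Yaw is positive to the right, pitch is positive upwards.
    """
    dx = target_pos[0] - head_pos[0]
    dy = target_pos[1] - head_pos[1]
    dz = target_pos[2] - head_pos[2]
    yaw = math.degrees(math.atan2(dx, dz))
    # Pitch uses the horizontal distance so it stays correct for any yaw.
    pitch = math.degrees(math.atan2(dy, math.hypot(dx, dz)))
    return yaw, pitch


def vc_head_angles(vc_head, forehead_marker, engaged, averted_yaw=40.0):
    """Head orientation for one frame of the response phase.

    engaged=True  -> look at the participant's forehead marker.
    engaged=False -> hold a fixed, turned-away pose (averted_yaw is a
                     placeholder value, not taken from the study).
    """
    if engaged:
        return gaze_angles(vc_head, forehead_marker)
    return (averted_yaw, 0.0)
```

Calling `vc_head_angles` once per tracker sample with the latest forehead position would give the "watching" condition; passing `engaged=False` gives the turned-away control.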

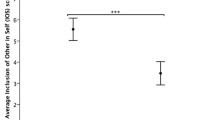

A. Task. Participants are instructed to point to the same dots as the demonstrator, and are not told that the demonstrator sometimes moves high over the table (left) and sometimes stays low (right). B. Results. Typical adults make higher movements following a high demonstration than a low demonstration.

Results were analysed in terms of the peak height of the participant’s finger movement when responding to each demonstration. Typical adults (n = 25) made higher finger movements after viewing a high trajectory compared to after viewing a low trajectory, but this was not modulated by the level of social engagement from the virtual character (Forbes et al., submitted). Overall, these results show that typical adults will spontaneously imitate the actions of a VC, but that more work is needed to determine if social cues can increase or decrease imitation levels.
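The peak-height measure can be sketched as follows. This is an illustrative outline only, assuming each trial is stored as a condition label plus a list of (x, y, z) finger samples with z as height above the table; the data layout and function names are hypothetical, and the published analysis (Forbes et al., submitted) may differ.

```python
from statistics import mean


def peak_height(trajectory):
    """Highest vertical position reached in one finger trajectory.

    trajectory: iterable of (x, y, z) samples; z is assumed to be height.
    """
    return max(sample[2] for sample in trajectory)


def mean_peak_by_condition(trials):
    """Average peak height per demonstration condition.

    trials: iterable of (condition, trajectory) pairs,
            e.g. condition in {"high", "low"}.
    """
    peaks = {}
    for condition, trajectory in trials:
        peaks.setdefault(condition, []).append(peak_height(trajectory))
    return {condition: mean(values) for condition, values in peaks.items()}


def imitation_effect(trials):
    """Mean peak after high demonstrations minus mean peak after low ones.

    A positive value indicates that the participant copied action height.
    """
    means = mean_peak_by_condition(trials)
    return means["high"] - means["low"]
```

Computing `imitation_effect` once per participant yields one difference score each, which could then be tested against zero across the group.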

1.2 Do Participants Detect and Respond Prosocially When They Are Imitated by Virtual Characters?

The claim that being imitated by another person promotes affiliation and prosocial feelings has been highly influential [5] but has been tested primarily in studies using trained confederates, where experimental control is low. A smaller number of studies have created virtual characters which imitate a participant’s head motion [6] or gestures [7], but results have been mixed [8]. We created a virtual character which could imitate a participant’s head/body movements during a picture description task, in order to explore the factors underlying detection of imitation and the cognitive mechanisms involved.

In this study, we first pre-recorded 30 s descriptions of pictures for the VC to speak and motion-captured an extended natural head/body motion sequence which could drive the VC behaviour. Then we set up a situation where a participant and a VC take turns to complete a picture description task, where each must describe an image for 30 s and then listen to the other for 30 s, for a total of 5 turns. Piloting showed that this turn-taking task felt much more interactive and engaging than previous tasks where participants listen to a VC without speaking. When participants came to the lab, they were fitted with the Polhemus motion tracker on their head and upper body, and then instructed in the picture description task.

For the first study, participants (n = 64) completed the task with one VC who imitated all the head/body movements of the participant with a 1 s or 3 s delay, and a second VC whose head/body movements were driven by the pre-recorded animation, in a counter-balanced order. After meeting each VC, participants completed a questionnaire about their rapport, trust and feelings of similarity with that VC, and these ratings were the primary outcome measures. Finally, participants completed a structured debrief to determine if they consciously detected any imitation from either VC. This method used a within-subjects design for the factor of avatar motion (imitate or not), because such designs typically have more power to detect small effects, and a between-subjects design for the factor of imitation timing.
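Delayed mimicry of this kind can be thought of as a time-stamped buffer: each tracked pose is stored, and the VC displays the most recent pose that is at least the chosen delay old. The sketch below shows that idea under stated assumptions; the class name, the opaque `pose` value and the choice to hold a neutral `None` until the first delayed sample is available are illustrative and not taken from the study software.

```python
from collections import deque


class DelayedMimic:
    """Replay tracked poses after a fixed delay (e.g. 1 s or 3 s)."""

    def __init__(self, delay_s):
        self.delay_s = delay_s
        self._buffer = deque()   # (timestamp, pose) pairs, oldest first
        self._current = None     # pose the VC is currently showing

    def update(self, t, pose):
        """Record the participant's pose at time t (seconds).

        Returns the pose the VC should display at time t: the latest
        recorded pose that is at least delay_s old, or None if no pose
        is old enough yet.
        """
        self._buffer.append((t, pose))
        # Release every buffered pose whose delay has elapsed; the last
        # one released is the one to show now.
        while self._buffer and self._buffer[0][0] <= t - self.delay_s:
            self._current = self._buffer.popleft()[1]
        return self._current
```

A non-mimicking control character would bypass this buffer and play the pre-recorded animation instead, so that the two conditions differ only in the source of the movement.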

We found that 27 % of participants who were imitated with a 1 s delay spontaneously detected the mimicry, whereas only 4 % of those imitated with a 3 s delay did so, and this was a significant difference (\(\chi^{2}(1) = 6.9\), \(p < 0.01\)). Taking only participants who did not detect mimicry, we found a small positive effect of being imitated on rapport ratings, but no effect on other ratings and no differences in rapport between the group with 1 s mimicry and the group with 3 s mimicry. This suggests that any prosocial consequences of being imitated are not dependent on the precise timing of the imitation, and thus implies that cognitive mechanisms for the detection of another person imitating might be only weakly tuned.
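For readers less familiar with the test, the comparison of detection rates between the two delay groups is a chi-square test on a 2 x 2 table (delay group by detected or not). The sketch below shows the standard Pearson statistic and its p-value for one degree of freedom. It assumes no continuity correction, which the text does not specify, and the cell counts of the study are not reported here, so the function takes any table supplied by the caller.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square statistic for a 2 x 2 table of counts.

    table: [[a, b], [c, d]], e.g. rows = delay group,
           columns = detected / did not detect.
    No continuity correction is applied (an assumption).
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i, row_total in enumerate(row_totals):
        for j, col_total in enumerate(col_totals):
            expected = row_total * col_total / n
            chi2 += (table[i][j] - expected) ** 2 / expected
    return chi2


def p_value_df1(chi2):
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom.

    With 1 df the statistic is a squared standard normal, so
    P(X > chi2) = erfc(sqrt(chi2 / 2)).
    """
    return math.erfc(math.sqrt(chi2 / 2.0))


# The reported statistic of 6.9 with 1 df corresponds to p below 0.01.
reported_p = p_value_df1(6.9)
```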

A. Methods. For the mimicry induction, participants describe photos to a VC or listen to her for 5 min. Then they rate rapport, trust and similarity towards the VC. B. Results of the delay study show more explicit detection of mimicry with short delays. C. Results of the cross-culture study show that being imitated does not increase rapport in any group.

In a second study, we aimed to test the role of cultural ingroups/outgroups on the positive consequences of being imitated, using only the 3 s delay which gave the clearest results in study 1. We created two avatars with a Western appearance, name and voice, and two with an Asian appearance, name and voice, and invited participants from the UK and from Asia (students who had arrived in London in the last few months) to take part in our study. Forty participants were tested, and the methods and analyses were pre-registered at OSF to ensure the validity of the results. We did not find any positive effects of being imitated on liking, rapport or trust. This null result suggests that imitation of head/body movements alone is not enough to lead to increases in rapport or other prosocial consequences.

2 Future Directions

These studies demonstrate how virtual reality can be used to address important questions in cognitive neuroscience, with demonstrations that people can imitate virtual characters and virtual characters can imitate people in believable interactive contexts. We provide a proof-of-principle for the use of interactive VCs to probe human social cognition, combining realism with good experimental control. We also emphasise the need for strong experimental design, larger sample sizes and pre-registration of methods and analyses in order to maximise the validity of these results (Fig. 3).

Our work also raises several questions for future research, including the need for better measures of social presence and a better understanding of the role of presence in determining participants’ behaviour in VR and their reactions to it. We also suggest that better control of VC actions and blending of motion capture actions will allow greater interactivity, and thus enable cognitive studies of interactive behaviour.

Finally, we suggest that there are important and deep parallels between the study of human social behaviour and the development of artificial systems which show human-like behaviour (Fig. 4). Great advances have been made recently both in computer vision and in understanding information processing in the human visual system. Similar advances are needed in understanding the control of human social behaviour, in developing appropriate control policies to generate interactive and intelligent agents, and in understanding and modelling decision making. With such advances, it will be possible both to understand human behaviour and to generate it in artificial systems.

References

1. Hamilton, A.F.C.: The neurocognitive mechanisms of imitation. Curr. Opin. Behav. Sci. 3, 63–67 (2015)

2. Rizzolatti, G., Sinigaglia, C.: The functional role of the parieto-frontal mirror circuit: interpretations and misinterpretations. Nat. Rev. Neurosci. 11, 264–274 (2010)

3. Pan, X., Hamilton, A.F.C.: Automatic imitation in a rich social context with virtual characters. Front. Psychol. 6, 790 (2015)

4. Wild, K.S., Poliakoff, E., Jerrison, A., Gowen, E.: Goal-directed and goal-less imitation in autism spectrum disorder. J. Autism Dev. Disord. 42, 1739–1749 (2012)

5. Chartrand, T.L., Bargh, J.A.: The chameleon effect: the perception-behavior link and social interaction. J. Pers. Soc. Psychol. 76, 893–910 (1999)

6. Bailenson, J.N., Yee, N.: Digital chameleons: automatic assimilation of nonverbal gestures in immersive virtual environments. Psychol. Sci. 16, 814–819 (2005)

7. Hasler, B.S., Hirschberger, G., Shani-Sherman, T., Friedman, D.A.: Virtual peacemakers: mimicry increases empathy in simulated contact with virtual outgroup members. Cyberpsychol. Behav. Soc. Netw. 17, 766–771 (2014)

8. Verberne, F.M.F., Ham, J., Ponnada, A., Midden, C.J.H.: Trusting digital chameleons: the effect of mimicry by a virtual social agent on user trust. In: Berkovsky, S., Freyne, J. (eds.) PERSUASIVE 2013. LNCS, vol. 7822, pp. 234–245. Springer, Heidelberg (2013). doi:10.1007/978-3-642-37157-8_28

Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Hamilton, A., Pan, X.S., Forbes, P., Hale, J. (2016). Using Virtual Characters to Study Human Social Cognition. In: Traum, D., Swartout, W., Khooshabeh, P., Kopp, S., Scherer, S., Leuski, A. (eds) Intelligent Virtual Agents. IVA 2016. Lecture Notes in Computer Science, vol 10011. Springer, Cham. https://doi.org/10.1007/978-3-319-47665-0_62

DOI: https://doi.org/10.1007/978-3-319-47665-0_62

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-47664-3

Online ISBN: 978-3-319-47665-0

eBook Packages: Computer Science (R0)