Abstract

This chapter focuses on Fingerprint Quality Assessment (FQA) as applied to the Automatic Fingerprint Identification System (AFIS). We compare existing FQA solutions by means of several quality metrics selected from the literature. The relationship between quality metrics and matching performance is analyzed through this comparison and through an additional discussion based on sample utility. The study is carried out as an interoperability analysis using two different matching tools. Experimental results, expressed as the global Equal Error Rate (EER), reveal the limits of existing quality assessment solutions in predicting matching performance. Experiments are performed on several publicly available fingerprint databases.


1.1 Introduction

The main disadvantage of biometric recognition systems, in contrast with traditional alphanumeric systems, is that matching is imperfect. Sample quality is therefore especially important for image-based biometric systems, including the fingerprint images used by the Automatic Fingerprint Identification System (AFIS). Fingerprint matching approaches are generally divided into three classes: correlation-based, image-based, and minutiae-based matching, of which the last is acknowledged as the primary solution so far [10]. A good-quality sample is thus a prerequisite for extracting reliable and sufficient minutiae points, and is hence an essential factor for overall matching performance. The effect of sample quality on matching performance is defined as the utility of a biometric sample [12]. Accordingly, most Fingerprint Quality Assessment (FQA) approaches (or fingerprint quality metrics) rely on two aspects: subjective assessment criteria of the pattern [8] and sample utility. Most quality metrics are also evaluated in terms of utility [1]. However, this property is limited by the matching configuration: sample utility varies as the matching algorithm changes, because no matching approach proposed so far is robust enough to deal with different image settings, even when their resolutions are similar (normal applications require gray-level images of 500 dpi according to the ISO).

This chapter compares existing FQA solutions according to a methodological categorization [4]. The comparison analyzes whether quality metrics based on multiple features really take advantage of the features they employ. Likewise, quality assessment approaches that rely on prior knowledge of matching performance still need discussion, especially regarding how well they predict matching performance. Our work studies these potential problems experimentally. Each of the quality metrics selected in this chapter represents a typical solution from the existing studies.

This chapter is organized as follows: Sect. 1.2 presents a brief review of the categorization of existing FQA solutions. Section 1.3 describes the trial fingerprint quality metrics. Experimental results are given in Sect. 1.4. Section 1.5 concludes the chapter.

1.2 Background

Yao et al. [4] categorize prior work in FQA into several classes in terms of how the problem is solved. Typical FQA solutions can be summarized as follows:

1. Single feature-based approaches: these can be further divided into solutions that rely on the feature itself and solutions that rely on a regularity [18] observed from the employed feature. For instance, the block-wise standard deviation [13] is a simple factor that measures clarity to some extent and distinguishes the foreground blocks of a fingerprint. Some studies also obtain relatively good results with a single feature, such as the Pet’s Hat wavelet (CWT) coefficients [16], the regularity of the fingerprint Discrete Fourier Transform (DFT) [6], and the Gabor feature [17]. These features also represent FQA solutions in different domains. Here, “relatively good results” means that those solutions perform well in reducing the overall matching error, because we consider the evaluation of a quality metric to be essentially a biometric test that involves both genuine matching and impostor matching errors.

2. FQA via segmentation-like operations: these solutions are first divided into two broad classes, global-level and local-level approaches. Most local-level approaches assign a quality measure to a fingerprint block in terms of one or several features or indexes, such as directional information and clarity [3, 9, 13, 15]. Other local-level approaches first determine whether a block belongs to the foreground [23] and then give a global quality measure to the fingerprint image. Solutions implemented globally are further divided into non-image quality assessment and image-based approaches. Yao et al. [4] propose an FQA approach that uses only minutiae coordinates, meaning that no real image information is used in assessing fingerprint quality. Image-based solutions generally perform a segmentation first and then estimate the quality of the foreground area according to one or more measurements [4].

3. FQA approaches using multiple features: these can be carried out by either fusion or classification. For example, some studies combine several quality features or indexes via a linear (or weighted) fusion [5, 7, 15, 25]. Linear fusion is generally suited to a specific scenario only, because the coefficients constrain this kind of solution. Fusion of multiple features or expert outputs can also be achieved via more sophisticated approaches such as Bayesian statistics [20] and Dezert-Smarandache (DS) theory [26]. Both the effectiveness of the fusion algorithm itself and the differences between the outputs of multiple experts affect the fused result. For instance, it is quite difficult to find an appropriate way to fuse results generated by two different metrics when one gives continuous output and the other produces only a few discrete values. This chapter considers only the FQA problem of the AFIS, and does not address multi-modal fusion, score/cluster-level fusion, or other fusion-related issues.

FQA via multi-feature classification [14, 15] employs one or more classifiers to assign a fingerprint image to one of several quality levels. This kind of solution clearly depends on the classifier itself. In addition, the robustness and reliability of the prior knowledge used by learning-based classification or fusion also affect the effectiveness of the quality metric, particularly when a common solution is sought, such as the state-of-the-art (SoA) approach [24].

Some studies also propose a knowledge-based feature obtained by training a multi-layer neural network [18]. However, this is essentially an observed regularity of the learnt feature, and external factors such as a classifier and a very large training data set are also required.

From the discussion above, one can note that fingerprint quality is still an open issue. Existing studies are mostly confined to these kinds of solutions, and learning-based approaches are chiefly tied to prior knowledge of matching performance, which is debatable for cross-use with other matchers. Grother and Tabassi [10] have pointed out that quality is not linearly predictive of matching performance. This chapter gives an experimental study of this problem by comparing FQA approaches selected from each of the categories above.

1.3 Trial Measures

In order to observe the relationship between quality and matching performance, this study employs several metrics, each built on one of the categorized solutions, as follows.

1.3.1 Metrics with Single Feature

As mentioned in Sect. 1.2, we first choose one quality metric built on a single feature. The selected metric is implemented via the Pet’s Hat continuous wavelet and is denoted as the CWT. The CWT value in a window of size W is computed from the wavelet coefficients $c_i$ of that window. The window size depends on the image size, for example, 16 pixels for gray-scale images of 512 dpi. In our study, the CWT is implemented with two default parameters, a scale of 2 and an angle of 0. We choose this quality metric because it outperforms the SoA approach in reducing the overall error rate for several different image settings. Note that the resolution of the fingerprint images is about 500 dpi, which is the minimum requirement of the AFIS [19].

1.3.2 Segmentation-Based Metrics

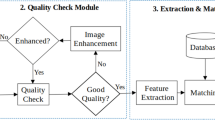

Fingerprint segmentation separates the foreground area (the ridge-valley pattern) from the background (the empty area) produced by the input sensor(s). This operation is to some extent equivalent to quality assessment of a fingerprint image, because matching (or comparison) depends mainly on the foreground area. It is reasonable to expect that a fingerprint image with a relatively clear and large foreground area generates a higher genuine matching score than one with a small or unclear foreground. For this reason, many studies use segmentation-based solutions to perform quality assessment. This section gives two metrics based on segmentation-like operations to show how important the foreground area is to quality assessment. The first is an image-independent quality metric and the second depends on the image pixels (Fig. 1.1).
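As a rough illustration of how a segmentation-like operation can double as a quality cue, the following sketch marks a block as foreground when its gray-level standard deviation exceeds a threshold and reports the fraction of foreground blocks. It is a minimal sketch, not any of the metrics evaluated in this chapter: the function name `foreground_ratio`, the block size, and the threshold are assumptions chosen for the example.

```python
from statistics import pstdev

def foreground_ratio(image, block=16, std_threshold=10.0):
    """Fraction of blocks whose gray-level standard deviation exceeds a threshold.

    image is a list of rows of gray levels (0-255). The block size and the
    threshold are illustrative values, not parameters taken from the chapter.
    """
    rows, cols = len(image), len(image[0])
    total = foreground = 0
    for r in range(0, rows - block + 1, block):
        for c in range(0, cols - block + 1, block):
            pixels = [image[y][x]
                      for y in range(r, r + block)
                      for x in range(c, c + block)]
            total += 1
            # A ridge-valley pattern has high contrast; flat background does not.
            if pstdev(pixels) > std_threshold:
                foreground += 1
    return foreground / total if total else 0.0

# Toy image: the left half is a striped "ridge" pattern, the right half is flat.
toy = [[(255 if (x // 2) % 2 else 0) if x < 16 else 128 for x in range(32)]
       for _ in range(16)]
ratio = foreground_ratio(toy, block=16)  # one of the two blocks is foreground
```

On the toy image, only the striped block passes the threshold, so half of the blocks count as foreground.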

1.3.2.1 FQA via Informative Region

The image-independent approach employed in this chapter is known as the MQF [29] which uses only the coordinates information of the minutiae template of the associated fingerprint image. Figure 1.2 gives a general diagram of this quality metric.

As depicted in the diagram (Fig. 1.2), the convex hull and the Delaunay triangulation are first used to model the informative region of a fingerprint image in terms of the detected minutiae points. Next, some unreasonable-looking triangular areas, marked in pink, are removed from the informative region. The remaining area of the informative region then represents the quality of the associated fingerprint or minutiae template [29].
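The first step of this idea can be sketched with minutiae coordinates alone. The example below computes the convex hull of a set of minutiae points and its area as a stand-in for the informative region. It is only a partial sketch under stated assumptions: the Delaunay triangulation and the removal of unreasonable triangles described above are omitted (a library such as SciPy could supply the triangulation), and the function names and toy coordinates are hypothetical.

```python
def convex_hull(points):
    """Convex hull by Andrew's monotone chain; returns vertices counter-clockwise."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        # Positive when o -> a -> b makes a counter-clockwise turn.
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def polygon_area(vertices):
    """Area of a simple polygon by the shoelace formula."""
    n = len(vertices)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0

# Hypothetical minutiae coordinates (x, y) in pixels.
minutiae = [(0, 0), (10, 0), (10, 10), (0, 10), (5, 5), (3, 7)]
hull = convex_hull(minutiae)
region_area = polygon_area(hull)  # area enclosed by the outermost minutiae
```

Interior minutiae do not change the hull, which is why the full metric needs the triangulation step to account for how the points are distributed inside the region.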

This quality metric is chosen because it is a recent FQA solution and it outperforms the SoA approach in some cases even though only minutiae coordinates are used. The details of this metric can be found in the reference article and are not given here.

1.3.2.2 FQA via Pixel-Pruning

The other segmentation-based quality metric is denoted as MSEG [4]. It applies a two-step operation to a fingerprint image: a coarse segmentation followed by a pixel-pruning operation. The pixel-pruning is implemented by categorizing fingerprint quality into two general cases: desired images and non-desired images. Figure 1.3 illustrates this categorization.

An AFIS clearly prefers to keep images like Fig. 1.3a because they are more likely to yield reliable and sufficient features. Figure 1.3b shows two images that are subjectively not desired: the left one has some minor quality problems and the right one is relatively small, and both may lead to low genuine matching scores or high impostor matching scores. A better quality assessment can therefore be achieved if the desired and non-desired images can be separated more clearly. The MSEG employs a gradient measure of the image pixels to prune as many pixels of non-desired images as possible. Figure 1.3c, d illustrates the result of the pixel-pruning operation on the two kinds of images.
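The pruning idea can be illustrated with a simple gradient threshold. The sketch below keeps only pixels whose central-difference gradient magnitude exceeds a threshold, so that flat or smeared regions are discarded. This is an assumption-laden illustration, not the MSEG algorithm itself: the specific gradient measure, the threshold value, and the names `prune_low_gradient` and `retained_fraction` are hypothetical.

```python
def prune_low_gradient(image, threshold=20.0):
    """Return a boolean mask that keeps pixels with a strong local gradient.

    image is a list of rows of gray levels. Border pixels are always pruned
    because the central difference is undefined there. The threshold is an
    illustrative value.
    """
    rows, cols = len(image), len(image[0])
    mask = [[False] * cols for _ in range(rows)]
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            gx = (image[y][x + 1] - image[y][x - 1]) / 2.0
            gy = (image[y + 1][x] - image[y - 1][x]) / 2.0
            mask[y][x] = (gx * gx + gy * gy) ** 0.5 > threshold
    return mask

def retained_fraction(mask):
    """Share of pixels that survive pruning; a crude quality indicator."""
    flat = [kept for row in mask for kept in row]
    return sum(flat) / len(flat)

# Toy 6x6 image with a vertical step edge between columns 2 and 3.
step = [[0, 0, 0, 200, 200, 200] for _ in range(6)]
mask = prune_low_gradient(step)
kept_pixels = sum(sum(row) for row in mask)  # only pixels next to the edge remain
```

A clear ridge pattern contains many such edges and keeps most of its foreground pixels, whereas a low-contrast image loses most of them.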

1.3.3 FQA via Multi-feature

Similarly, we choose two quality metrics that rely on multiple features, both implemented via prior knowledge of matching performance. With this kind of solution, an experimental comparison can be made between different approaches. In particular, one can find that solutions based on multiple features do not necessarily take advantage of the employed features, because of the effect of variations in image specifications; the same applies to prior knowledge generated from big data [21]. The first metric is a classification-based approach, the SoA solution known as NFIQ [24]. The NFIQ estimates a normalized matching score of a fingerprint sample by feeding a set of 11 quality features to a neural network model. The NFIQ algorithm then remaps the estimated matching score into five classes denoted by integers from 1 to 5, where 1 indicates the best quality level.

In addition, we choose a quality metric based on multi-feature fusion. It is a No-reference Image Quality Assessment (NR-IQA) [22] solution applied to FQA that integrates multiple features through a set of weighted coefficients. The selected approach is denoted as Qabed [7] and is defined as

$$Q = \sum_{i=1}^{N} \alpha_i F_i$$

where N is the number of quality features $F_i$ (i = 1, …, N) and $\alpha_i$ are the weighted coefficients obtained by optimizing the fitness function of a genetic algorithm. The fitness function is defined as the correlation between the linearly combined quality value and the genuine matching score [11]. Maximizing such a linear relation is to some extent equivalent to the concept that quality predicts matching performance. The weighted coefficients depend on a training set of fingerprint samples. We choose this approach because it performs well in predicting matching performance in comparison with the SoA quality metric.
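The weighted fusion and its correlation-based fitness can be sketched as follows. This is a minimal illustration assuming a Pearson correlation as the fitness; the genetic algorithm that searches for the coefficients is not shown, and the feature values, scores, and function names are made up for the example.

```python
from math import sqrt

def fused_quality(features, weights):
    """Linear combination of quality features with weighted coefficients."""
    return sum(a * f for a, f in zip(weights, features))

def pearson(xs, ys):
    """Pearson correlation coefficient (assumes neither input is constant)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy)

def fitness(weights, feature_rows, gms):
    """Correlation between fused quality values and genuine matching scores."""
    quality = [fused_quality(row, weights) for row in feature_rows]
    return pearson(quality, gms)

# Hypothetical training data: two features per sample and one GMS per sample.
feature_rows = [[0.2, 0.9], [0.5, 0.4], [0.8, 0.1]]
gms = [10.0, 25.0, 40.0]
good = fitness([1.0, 0.0], feature_rows, gms)  # weights favoring feature 1
bad = fitness([0.0, 1.0], feature_rows, gms)   # weights favoring feature 2
```

An optimizer would prefer the first weight vector, since its fused quality rises with the genuine matching score. Because the scores come from one matcher and one training set, the resulting coefficients are tied to that setting.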

1.4 Experimental Results

Some existing studies propose calculating the correlation between different metrics [2] to compare their behavior. However, this is not fully informative, because there is no explicit linear relation within every group of different quality metrics. Generally, this kind of measure is meant to observe the similarity between two different variables, such as wavelet coefficients. Therefore, to compare the studied metrics, we simply provide experimental results from two evaluation approaches: one is a validation approach that relies on Enrollment Selection (ES) [30], and the other is an evaluation method with multiple bins of sorted biometric samples [6].

1.4.1 Software

In the experiment, we use two matching systems: the open-source NBIS [27] and a commercial fingerprint SDK known as “id3”. The NIST software contains several modules, among which MINDTCT is used for generating INCITS 378-2004 standard minutiae templates and Bozorth3 is used for calculating the matching scores. The commercial SDK offers six of the existing minutiae template standards, and minutiae templates of the ISO/IEC 19794-2:2005 standard [19] have been extracted in the experiment. A corresponding matcher has also been implemented with the SDK. With these two sets of programs, the comparative study is carried out as an interoperability analysis of the selected quality metrics.

1.4.2 Database and Protocol

In the experiment, one dataset of the 2000 Fingerprint Verification Competition (FVC) test, one of FVC2002, three of FVC2004, and two CASIA datasets are employed. Each of the FVC datasets includes 800 images of 100 individuals, 8 samples per individual. The CASIA database contains fingerprint images of 4 fingers of each hand of 500 subjects, where each finger has 5 samples. In this study, we create two re-organized databases from the samples of the second finger of each hand, denoted as CASL2 and CASR2, respectively. Each sub-database therefore has 2500 images of 500 individuals (5 samples per individual) (Table 1.1).

The image size differs from one dataset to another, and the resolution is over 500 dpi. Several samples in Fig. 1.4 give a glance of the datasets. In this study, the experiment includes two parts: a utility-based evaluation and a quality-based evaluation. The evaluation approach employed in the experiment is based on Enrollment Selection (ES) [28].

1.4.3 Results

1.4.3.1 ES with Quality

The evaluation task is a comparison between different frameworks of fingerprint quality metrics. We use each group of quality values and two types of matching scores to perform enrollment selection for each dataset. The global Equal Error Rate (EER) values obtained by the selected quality metrics are given in Table 1.2.
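For readers unfamiliar with the measure, the following sketch approximates an EER from a set of genuine scores and a set of impostor scores by sweeping a decision threshold. It assumes similarity scores (a higher score means a better match) and acceptance when the score reaches the threshold; the function name and the toy scores are illustrative, and the implementation is a simple approximation rather than the procedure used to produce Table 1.2.

```python
def equal_error_rate(genuine, impostor):
    """Approximate EER by sweeping thresholds over all observed scores.

    A comparison is accepted when its score is >= the threshold. The EER is
    taken where the false acceptance rate (FAR) and the false rejection rate
    (FRR) are closest, as the mean of the two rates at that threshold.
    """
    best_gap, eer = None, None
    for t in sorted(set(genuine) | set(impostor)):
        frr = sum(s < t for s in genuine) / len(genuine)
        far = sum(s >= t for s in impostor) / len(impostor)
        gap = abs(far - frr)
        if best_gap is None or gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2.0
    return eer

# Toy scores: one genuine score is low and one impostor score is high.
genuine_scores = [30, 50, 70, 90]
impostor_scores = [10, 20, 40, 60]
eer = equal_error_rate(genuine_scores, impostor_scores)
```

With these toy scores, a threshold of 50 rejects one of four genuine comparisons and accepts one of four impostor comparisons, so both error rates meet at 0.25.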

One can find that the quality metrics providing the lowest global EER are not always those based on multiple features, even when the matcher comes from the same vendor, as with the NBIS matching software and the NFIQ. The quality metric based on a single feature (CWT) also performs well on many datasets. In addition, both the CWT and MSEG generalize relatively well across the employed matching algorithms, especially when a better matching algorithm is involved.

For instance, MSEG obtains the best results on the last four of the seven employed datasets when the evaluation uses the matching scores of the SDK, while its results on the other three databases are also reasonable. In particular, MSEG decreases the error rates more than the other metrics for the two difficult databases, CASL2 and CASR2. The CWT also performs well on most of the databases. The QMF and NFIQ do not give dominant results, especially when the NBIS matching scores are used in the experiment, because QMF relies on the GMS of the NBIS software, while the NFIQ depends on 11 quality features (or real metrics). The confidence intervals (CI) of the global EER values are given in Table 1.3.

Furthermore, one can observe the effect of the matching scores on the knowledge-based metrics, NFIQ and QMF. The NFIQ obtains quite high (bad) EER values on the two CASIA datasets when NBIS matching scores are employed in the evaluation, while it produces relatively better results on these two datasets when the SDK is used. The QMF obtains better results than NFIQ on five (02DB2, 04DB1, 04DB3, CASL2, and CASR2) of the seven databases when using the NBIS matching scores, because its training is performed independently for each dataset via the NBIS matching scores, meaning that it is fitted to a specific scenario. However, in comparison with the knowledge-free metrics, neither of the two metrics shows higher performance even though they employ different sets of features. Meanwhile, the MQF is a no-image quality metric, but its performance is reasonable in comparison with the NFIQ and QMF, especially when using the NBIS matching scores, because it relies on the minutiae extractor associated with the NBIS software. One can thus observe that a good matching algorithm and a relatively good dataset (such as 00DB2, 02DB2, and 04DB3) may blur the effect of a quality metric, i.e., it is easier to reach relatively good performance if the matcher is relatively robust. It is therefore necessary to perform an offline biometric test on “bad” datasets. In addition, one may consider that the implementation of a metric should be independent of the matching performance if its “generality” is to be emphasized. The effect of matching performance on quality metrics is further discussed in Sect. 1.4.4.

1.4.3.2 Isometric Bins

The ES with sample quality reveals the best that a quality metric can do in reducing the error rate. In this section, another evaluation is performed using an approach based on isometric bins of samples that have been sorted by quality [6]. We do not assert that a quality metric is fully able to predict matching performance, owing to the diversity of matching algorithms. This kind of evaluation is rather meant to demonstrate the linearity between a quality metric and the performance of a matcher. The NFIQ is used as a reference, while the QMF, MQF, and CWT represent metrics based on multi-feature fusion, segmentation, and a single feature, respectively. We do not use all databases and metrics because these results are sufficient to show what is meant by quality predicting matching performance. The results obtained with the two types of matching scores (NBIS and SDK) are given as plots of global EERs in Figs. 1.5 and 1.6, respectively. One can find that the EER values of the bins obtained by some of the quality metrics are monotonically decreasing, which supports the validity of those quality metrics. Loosely speaking, this property demonstrates the so-called quality predicting matching performance. It also shows the similarity or linear relationship between the quality scores and the GMS. This can be observed with the correlation coefficients between the two measurements.
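The binning step can be sketched as follows, assuming that “isometric” means bins holding (nearly) the same number of samples. The function name, the number of bins, and the toy quality values are hypothetical; the per-bin EER would then be computed from the matching scores of the samples in each bin.

```python
def isometric_bins(sample_ids, quality, n_bins=5):
    """Sort samples by quality value and split them into near-equal-sized bins.

    Samples are sorted in ascending order of the quality value. Whether a low
    value means good or bad quality depends on the metric (for NFIQ, 1 is best).
    """
    ordered = sorted(sample_ids, key=lambda s: quality[s])
    size, extra = divmod(len(ordered), n_bins)
    bins, start = [], 0
    for b in range(n_bins):
        # The first `extra` bins take one additional sample each.
        end = start + size + (1 if b < extra else 0)
        bins.append(ordered[start:end])
        start = end
    return bins

# Hypothetical quality values for six samples.
quality = {"a": 0.9, "b": 0.1, "c": 0.5, "d": 0.3, "e": 0.7, "f": 0.2}
bins = isometric_bins(list(quality), quality, n_bins=3)
```

If a metric predicts matching performance, the EER computed per bin should change monotonically from the lowest-quality bin to the highest-quality bin.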

In the experiment, the maximum GMS of each sample is calculated to demonstrate this observation, see Table 1.4. For instance, when MSBoz is used, the Pearson correlation coefficients of NFIQ for 00DB2A and of QMF for 02DB2 with respect to the maximum GMS are −0.4541 and 0.5127. This kind of correlation can likewise be found for the monotonically decreasing cases when MSSDK is employed. Here, we simply give the results of some opposite cases, where the Pearson coefficients of CWT for 04DB1A, NFIQ for 02DB2A, and MQF for 04DB1A with respect to the maximum GMS of MSSDK are 0.0444, −0.2596, and 0.0585, respectively. These uncorrelated values, and some negatively correlated cases such as the CWT in Fig. 1.5c, are mostly caused by outliers of either the metric or the matching algorithm. Meanwhile, taking the results in Table 1.2 together with Figs. 1.5 and 1.6, one can see that quality does not always predict matching performance linearly, as with the CWT for 04DB2A across the three sets of results. The global EERs in Table 1.2 demonstrate that the two metrics perform relatively well in determining the best cases of sample quality, while no linear relationship was found between them and either of the employed matching algorithms according to Figs. 1.5d and 1.6d; the same holds for the learning-based metrics, as in Figs. 1.5d and 1.6b.

1.4.4 Discussion via Sample Utility

To validate a biometric quality metric, an objective index [30] is used to represent the quality of a sample. This objective measure is an offline sample EER (SEER) value calculated from a set of intra-class matching scores and a set of inter-class matching scores. For a sample $S_{i,j}$, these are the $N - 1$ genuine matching scores (GMS)

$$\mathrm{GMS}_{i,j} = \{ R(S_{i,j}, S_{i,k}) \mid k \neq j \}$$

and the $(N - 1) \times (M - 1)$ impostor matching scores (IMS)

$$\mathrm{IMS}_{i,j} = \{ R(S_{i,j}, S_{l,k}) \mid l \neq i,\ k \neq j \}$$

where N and M denote the number of samples per individual and the number of individuals in a trial dataset, R is a matcher, and $S_{i,j}$ indicates the jth sample of the ith individual ($S_{l,k}$ is defined similarly).

Therefore, the $\mathrm{SEER}_{i,j}$ of a sample measures how much that sample contributes within the experimental framework made up of the employed datasets and matching algorithms. This objective measure is denoted as the sample’s Utility throughout the experiments.
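The two score sets behind a sample's utility can be assembled as in the sketch below. It assumes that the genuine set compares the sample with the other samples of the same individual, and that the impostor set compares it with samples of other individuals at a different sample index, which reproduces the stated counts when the impostor count is read as (N − 1) × (M − 1). The function names and the toy matcher are hypothetical; the SEER is then the EER computed from these two sets.

```python
def score_sets(i, j, n_individuals, n_samples, matcher):
    """Return the (GMS, IMS) lists for sample j of individual i.

    matcher(a, b) takes two (individual, sample) index pairs and returns a
    matching score; it stands in for the matcher R.
    """
    gms = [matcher((i, j), (i, k))
           for k in range(n_samples) if k != j]
    ims = [matcher((i, j), (l, k))
           for l in range(n_individuals) if l != i
           for k in range(n_samples) if k != j]
    return gms, ims

def toy_matcher(a, b):
    # Hypothetical matcher: a high score for the same individual, low otherwise.
    return 100 if a[0] == b[0] else 5

M, N = 4, 3  # M individuals, N samples per individual
gms, ims = score_sets(0, 1, M, N, toy_matcher)
```

Because every score depends on the matcher passed in, the utility matrix obtained this way is specific to one matching algorithm.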

The utility study in this part is in fact an ES operation with the objective indexes presented in Sect. 1.4.4. The objective measure of each sample reflects the behavior of the sample under one matching algorithm of a specific vendor. This measurement is used simply to explain the limitation of quality metrics that are implemented via prior knowledge of matching scores.

According to the definition given in Sect. 1.4.4, one can obtain an M-by-N matrix of sample utility for a trial database. The matrix is then used as a quality result with which enrollment selection is performed across the two matching algorithms, see the graphical results in Fig. 1.7.

Figure 1.7 plots the global EER values obtained by ES with sample utility values, where Fig. 1.7a is the result based on NBIS matching scores (MSBoz) and Fig. 1.7b is generated from the SDK’s matching scores (MSSDK). In the experiment, the utility value of each sample ($\mathrm{SEER}_{i,j}$) is first calculated with respect to each matcher. Two matrices of sample utility are thus obtained and then used for enrollment selection. The utility values corresponding to the NBIS software and the SDK are denoted as “UtilityBoz” and “UtilitySDK,” and the global EER values calculated with ES from them are plotted in the figure as blue and red points, respectively.

The enrollment selection task chooses the best sample of each individual as the enrollment according to the utility values. Consequently, the performance of a matching algorithm on a trial dataset cannot be better than this global EER value. The utility value clearly depends mostly on the performance of the matching algorithm, as illustrated by the two sets of plots. In addition, according to the results, we believe that a quality metric based on prior knowledge of matching scores is not fully able to predict matching performance in cross-use. In fact, one may ask whether two genuine samples should produce a high GMS when one of them cannot give reliable and sufficient features [29]. Moreover, it is not clear how close the prior knowledge is to the ground truth of sample quality.
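The selection step itself is simple and can be sketched as follows, given a matrix with one row per individual and one column per sample. The function name, the `higher_is_better` flag, and the toy values are assumptions for illustration; with SEER-based utility a lower value is better, whereas many quality metrics use the opposite convention.

```python
def select_enrollment(values, higher_is_better=True):
    """Pick the enrollment sample index for each individual.

    values[i][j] is the quality or utility value of sample j of individual i.
    Ties are resolved in favor of the first sample.
    """
    pick = max if higher_is_better else min
    return [pick(range(len(row)), key=row.__getitem__) for row in values]

# Hypothetical SEER-like utility values (lower is better), 2 individuals x 3 samples.
utility = [[0.12, 0.03, 0.30],
           [0.08, 0.20, 0.05]]
enrolled = select_enrollment(utility, higher_is_better=False)
```

The remaining samples of each individual then serve as queries against the selected enrollments, and the global EER is computed from the resulting genuine and impostor scores.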

1.5 Conclusion

Recent studies of fingerprint quality metrics mainly focus on reducing error rates in terms of the utility of the samples. In this study, we carry out an interoperability analysis to observe the behavior of several representative fingerprint quality metrics from the existing frameworks, and thereby reveal the limitations of these approaches. From the experimental study, one can note that it is not easy to achieve a generally good quality metric, even with multiple features. For instance, in comparison with metrics built on a single feature, some metrics based on multiple features do not show the advantage that fusion should have brought. Utility-based quality metrics, especially those related to matching scores, are more likely to be affected by a change of matching algorithm, as the experiments clearly show. The linear relationship between GMS and quality values is nevertheless a valid criterion for assessing quality. However, it is not fully appropriate when the matching circumstances differ. Finally, the offline trials also reveal that a quality metric is not an absolutely predictive measure of matching performance.

References

F. Alonso-Fernandez, J. Fierrez, J. Ortega-Garcia et al., A comparative study of fingerprint image-quality estimation methods. IEEE Trans. Inf. Forensics Secur. 2 (4), 734–743 (2007)

S. Bharadwaj, M. Vatsa, R. Singh, Biometric quality: a review of fingerprint, iris, and face. EURASIP J. Image Video Process. 2014 (1), 1–28 (2014)

R.M. Bolle, S.U. Pankanti, Y. Yao, System and method for determining the quality of fingerprint images, US Patent 5,963,656, 5 Oct 1999

C. Charrier, C. Rosenberger, Z. Yao, J.-M. Le Bars, Fingerprint quality assessment with multiple segmentation, in IEEE International Conference on Cyberworlds (CW), Gotland, Oct 2015

T.P. Chen, X. Jiang, W.Y. Yau, Fingerprint image quality analysis, in 2004 International Conference on Image Processing, 2004. ICIP ‘04, vol. 2 (2004), pp. 1253–1256

Y. Chen, S.C. Dass, A.K. Jain, Fingerprint quality indices for predicting authentication performance, in Audio-and Video-Based Biometric Person Authentication (Springer, Berlin, 2005), pp. 160–170

M. El Abed, A. Ninassi, C. Charrier, C. Rosenberger, Fingerprint quality assessment using a no-reference image quality metric, in European Signal Processing Conference (EUSIPCO) (2013), p. 6

J. Fierrez-Aguilar, J. Ortega-Garcia et al., Kernel-based multimodal biometric verification using quality signals, in Defense and Security (International Society for Optics and Photonics, Bellingham, 2004), pp. 544–554

H. Fronthaler, K. Kollreider, J. Bigun, Automatic image quality assessment with application in biometrics, in Conference on Computer Vision and Pattern Recognition Workshop. CVPRW’06 (IEEE, New York, 2006), p. 30

P. Grother, E. Tabassi, Performance of biometric quality measures. IEEE Trans. Pattern Anal. Mach. Intell. 29 (4), 531–543 (2007)

R.-L.V. Hsu, J. Shah, B. Martin, Quality assessment of facial images, in Biometric Consortium Conference, 2006 Biometrics Symposium: Special Session on Research at the (IEEE, New York, 2006), pp. 1–6

ISO/IEC 29794-1:2009. Information technology - Biometric sample quality - Part 1: Framework. August 2009

B. Lee, J. Moon, H. Kim, A novel measure of fingerprint image quality using the Fourier spectrum, in Society of Photo-Optical Instrumentation Engineers (SPIE) Conference, ed. by A.K. Jain, N.K. Ratha, vol. 5779 (2005), pp. 105–112

G. Li, B. Yang, C. Busch, Autocorrelation and DCT based quality metrics for fingerprint samples generated by smartphones, in 2013 18th International Conference on Digital Signal Processing (DSP) (IEEE, New York, 2013), pp. 1–5

E. Lim, X. Jiang, W. Yau, Fingerprint quality and validity analysis, in Proceedings. 2002 International Conference on Image Processing. 2002, vol. 1 (2002), pp. I-469–I-472

L. Nanni, A. Lumini, A hybrid wavelet-based fingerprint matcher. Pattern Recognit. 40 (11), 3146–3151 (2007)

M.A. Olsen, H. Xu, C. Busch, Gabor filters as candidate quality measure for NFIQ 2.0, in 2012 5th IAPR International Conference on Biometrics (ICB) (IEEE, New York, 2012), pp. 158–163

M.A. Olsen, E. Tabassi, A. Makarov, C. Busch, Self-organizing maps for fingerprint image quality assessment, in 2013 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), June 2013, pp. 138–145

International Organization for Standardization. ISO/IEC 19794-2:2005: Information technology - Biometric data interchange formats - Part 2: Finger minutiae data (2005)

N. Poh, J. Kittler, A unified framework for biometric expert fusion incorporating quality measures. IEEE Trans. Pattern Anal. Mach. Intell. 34 (1), 3–18 (2011)

N.K. Ratha, J.H. Connell, S. Pankanti, Big data approach to biometric-based identity analytics. IBM J. Res. Dev. 59 (2/3), 4:1–4:11 (2015)

M. Saad, A.C. Bovik, C. Charrier, Blind image quality assessment: a natural scene statistics approach in the DCT domain. IEEE Trans. Image Process. 21 (8), 3339–3352 (2012)

L. Shen, A. Kot, W. Koo, Quality measures of fingerprint images, in Proceedings of AVBPA. LNCS, vol. 2091 (Springer, Berlin, 2001), pp. 266–271

E. Tabassi, C. Wilson, C. Watson, NIST fingerprint image quality. NIST Res. Rep. NISTIR7151 (2004)

X. Tao, X. Yang, Y. Zang, X. Jia, J. Tian, A novel measure of fingerprint image quality using principal component analysis (PCA), in 2012 5th IAPR International Conference on Biometrics (ICB), March 2012, pp. 170–175

M. Vatsa, R. Singh, A. Noore, M.M. Houck, Quality-augmented fusion of level-2 and level-3 fingerprint information using DSm theory. Int. J. Approx. Reason. 50 (1), 51–61 (2009)

C.I. Watson, M.D. Garris, E. Tabassi, C.L. Wilson, R.M. Mccabe, S. Janet, K. Ko, User’s Guide to NIST Biometric Image Software (NBIS). NIST Interagency/Internal Report (NISTIR) - 7392 (2007)

Z. Yao, J.-M. LeBars, C. Charrier, C. Rosenberger, Fingerprint quality assessment combining blind image quality, texture and minutiae features, in International Conference on Information Systems Security and Privacy, Feb 2015

Z. Yao, J.-M. LeBars, C. Charrier, C. Rosenberger, Quality assessment of fingerprints with minutiae Delaunay triangulation, in International Conference on Information Systems Security and Privacy, Feb 2015

Z. Yao, J.-M. Le Bars, C. Charrier, C. Rosenberger, A literature review of fingerprint quality assessment and its evaluation. IET J. Biometrics (2016)


Copyright information

© 2017 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Yao, Z., Bars, JM.L., Charrier, C., Rosenberger, C. (2017). Fingerprint Quality Assessment: Matching Performance and Image Quality. In: Jiang, R., Al-maadeed, S., Bouridane, A., Crookes, P.D., Beghdadi, A. (eds) Biometric Security and Privacy. Signal Processing for Security Technologies. Springer, Cham. https://doi.org/10.1007/978-3-319-47301-7_1


DOI: https://doi.org/10.1007/978-3-319-47301-7_1


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-47300-0

Online ISBN: 978-3-319-47301-7

eBook Packages: Engineering, Engineering (R0)