Abstract

We recently developed a retino-morphic hardware system operating at a frame interval of 5 ms, which is short enough to simulate the graded voltage responses of neurons in the retinal circuit in a quasi-continuous manner. In the present study, we have extended this system by implementing the Izhikevich model, so that spatial spike distributions in the ganglion-cell layer can be simulated with millisecond-order timing precision. This system is useful for examining how the retina encodes natural visual scenes into spikes.



Keywords

- Neuromorphic hardware

- Retina

- Real-time simulation

- Spike timing precision

- Izhikevich model

- Analog silicon retina

- FPGA

1 Introduction

In vertebrate retinas, incoming visual images are continuously transduced into graded voltage changes in the photoreceptor-cell array, processed in parallel by the downstream neuronal circuitry, and then encoded into spike trains in the ganglion-cell array. The basic synaptic connections among the major classes of retinal neurons, and the fundamental properties of their responses, have been revealed by previous anatomical and physiological studies [1, 2]. However, little is known about the functions of retinal neurons in response to natural visual scenes, because most conventional physiological experiments used simplified or unrealistic images as visual stimuli and paid little attention to the influence of eye and/or head movements on the stimulus images. Although recent studies using more realistic visual stimuli have provided new insights into information encoding by certain ganglion-cell types [3–5], the spatio-temporal responses of the ganglion-cell array of an animal in vivo, in a natural environment, remain largely unknown. Simulating neuronal responses with a retinal model in silico is a powerful approach for estimating and predicting the functional computations and information encoding in the retinal circuit [6, 7]. Recently, we implemented a retinal model by combining an analog silicon retina [8] with digital circuits in a field-programmable gate array (FPGA), so that the model structure and parameters can be modified while the spatial response patterns of each neuronal layer are visualized at a frame interval of 5 ms [9]. In the present study, we have extended this system by implementing the Izhikevich model [10] as the spike-generation mechanism of the ganglion cells. This model enables us to visualize spatial spike distributions in the ganglion-cell array with millisecond-order timing precision, independent of the clock timing of the 5-ms frame interval. We demonstrate a real-time simulation of the spike responses of the cat X-type ganglion-cell array under natural viewing conditions.

2 Retino-Morphic Hardware System with Izhikevich Spiking Module

2.1 Neural Circuit Structure

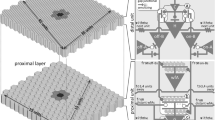

Figure 1 shows a schematic diagram of the retino-morphic hardware system developed in the present study. The system consists of the analog silicon retina [8] (left box in the figure) and digital circuits in an FPGA (right box). The figure depicts a single pixel of the retinal circuit; almost all of its components are the same as those described in our previous study [9], except for the spiking modules based on the Izhikevich model [10] (‘Izh1’ and ‘Izh2’). This model can reproduce a wide variety of neuronal spiking patterns, yet its computational cost is low enough for implementation in the digital circuits of the FPGA. It also enabled us to reproduce the spike-frequency adaptation of retinal ganglion cells [11] in real-time emulation.

In the silicon retina, an active pixel photosensor (‘APS’) connected to a resistive network (‘1st RN layer’) reproduces the spatial response profile (i.e., the spatial receptive field) of a photoreceptor cell, and a second resistive network (‘2nd RN layer’) reproduces that of a horizontal cell; both cell types are electrically coupled with their neighbors. In the FPGA, a temporal filter (‘TF0’) represents the signaling delay due to synaptic transmission from a horizontal cell to a bipolar cell. The difference between the inputs from the 1st and 2nd RN layers (‘Diff’) reproduces the spatial, center-surround antagonistic receptive field of a bipolar cell. Subsequent temporal filters, ‘TF1’ and ‘TF2’, account for the temporal response profiles of on-type and off-type bipolar cells, respectively (‘ONs-BC’ and ‘OFFs-BC’). A nonlinear gain function (‘NLG1’ or ‘NLG2’) represents the nonlinear synaptic transmission from a bipolar cell to a ganglion cell. The output of this block is fed to the Izhikevich spiking module (‘Izh1’ or ‘Izh2’), which generates spikes as the responses of a sustained on-type or off-type ganglion cell (‘ONs-GC’ or ‘OFFs-GC’).
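The per-pixel chain described above can be sketched in Python on a 1-D strip of pixels. This is a minimal illustration under explicit assumptions, not the circuit of [8, 9]: the RN layers are modelled as steady-state 1-D resistive networks with hypothetical coupling conductances, TF0, TF1 and TF2 as first-order low-pass filters with illustrative time constants, ‘Diff’ as the 1st RN layer minus the TF0-filtered 2nd RN layer, the off pathway as a sign-inverted copy of Diff, and NLG1/NLG2 as half-wave rectification. All function names are hypothetical.

```python
import numpy as np


def resistive_network(stimulus, coupling):
    """Steady state of a 1-D resistive network (stand-in for an RN layer).

    Node i has unit conductance to its input and conductance `coupling` to
    each neighbour: (1 + k*g) V_i - g * sum(neighbour V) = s_i, where k is
    the number of neighbours (1 at the ends, 2 elsewhere).
    """
    s = np.asarray(stimulus, dtype=float)
    n, g = len(s), coupling
    A = np.eye(n)
    for i in range(n):
        for j in (i - 1, i + 1):
            if 0 <= j < n:
                A[i, i] += g
                A[i, j] -= g
    return np.linalg.solve(A, s)


class LowPass:
    """First-order low-pass filter, tau dy/dt = x - y, updated once per frame."""

    def __init__(self, tau_ms, n, frame_ms=5.0):
        self.alpha = frame_ms / (tau_ms + frame_ms)  # backward-Euler coefficient
        self.y = np.zeros(n)

    def step(self, x):
        self.y = self.y + self.alpha * (x - self.y)
        return self.y


def make_pixel_chain(n, tau0=20.0, tau1=30.0, tau2=30.0):
    """TF0, TF1 and TF2 for n pixels (time constants are illustrative only)."""
    return {"tf0": LowPass(tau0, n), "tf1": LowPass(tau1, n), "tf2": LowPass(tau2, n)}


def ganglion_inputs(rn1, rn2, chain, gain=1.0):
    """One 5-ms frame: Diff -> TF1/TF2 -> NLG1/NLG2, giving I(t) for Izh1/Izh2."""
    diff = rn1 - chain["tf0"].step(rn2)   # photoreceptor minus delayed horizontal cell
    on_bc = chain["tf1"].step(diff)       # ONs-BC
    off_bc = chain["tf2"].step(-diff)     # OFFs-BC (sign inversion assumed)
    # NLG1/NLG2 assumed to be half-wave rectification with a linear gain
    return gain * np.maximum(on_bc, 0.0), gain * np.maximum(off_bc, 0.0)


edge = np.r_[np.zeros(10), np.ones(10)]     # dark | bright step edge
rn1 = resistive_network(edge, coupling=0.5)  # narrow photoreceptor coupling
rn2 = resistive_network(edge, coupling=5.0)  # wide horizontal-cell coupling
chain = make_pixel_chain(len(edge))
for _ in range(200):                         # 1 s of a static stimulus
    i_on, i_off = ganglion_inputs(rn1, rn2, chain)
```

With a static step edge, the on-pathway drive settles to a peak just on the bright side of the edge and the off-pathway drive to a peak just on the dark side, while a spatially uniform input produces no steady-state drive; this is the center-surround edge enhancement attributed to ‘Diff’.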

2.2 Approximation of the Izhikevich Model

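The original Izhikevich model is governed by two differential equations and a reset of the variables implemented by conditional branching [10]:

\[ \frac{dv(t)}{dt} = 0.04\,v(t)^2 + 5\,v(t) + 140 - u(t) + I(t) \tag{1} \]

\[ \frac{du(t)}{dt} = a\,\bigl(b\,v(t) - u(t)\bigr) \tag{2} \]

\[ \text{if } v(t) \ge 30\ \text{mV, then } v(t) \leftarrow c \ \text{ and } \ u(t) \leftarrow u(t) + d \tag{3} \]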

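Here, v(t) represents the membrane potential; u(t) is the recovery variable, which controls post-spike refractoriness; I(t) is the input current, determined by the graded voltage response of a bipolar cell (i.e., the output of ‘NLG1’ or ‘NLG2’ in Fig. 1); and \({{\varvec{a}}}\), \({{\varvec{b}}}\), \({{\varvec{c}}}\) and \({{\varvec{d}}}\) are constant parameters that define the membrane voltage dynamics. In the FPGA, the differential equations (Eqs. 1 and 2) are solved approximately by the Euler method, i.e., by using the corresponding finite-difference equations (Eqs. 4 and 5) with a calculation time step \(\varDelta t\), together with the discrete reset rule (Eq. 6):

\[ v(t+\varDelta t) = v(t) + \varDelta t \left[ 0.04\,v(t)^2 + 5\,v(t) + 140 - u(t) + I(t) \right] \tag{4} \]

\[ u(t+\varDelta t) = u(t) + \varDelta t\, a\,\bigl(b\,v(t) - u(t)\bigr) \tag{5} \]

\[ \text{if } v(t+\varDelta t) \ge 30\ \text{mV, then } v(t+\varDelta t) \leftarrow c \ \text{ and } \ u(t+\varDelta t) \leftarrow u(t+\varDelta t) + d \tag{6} \]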

In this computation, there is a trade-off between accuracy and hardware cost, which depends mainly on the bit lengths of v(t) and u(t). Figure 2 shows the results of computer simulations in which the time course of v(t) in response to step-wise changes in I(t) was calculated with different bit lengths of v(t) and u(t). As shown in Fig. 2, the time courses of v(t) were almost identical with a 16-bit fixed-point and a 64-bit floating-point representation, but not with an 8-bit or 12-bit fixed-point representation. We therefore represented v(t) and u(t) as 16-bit fixed-point values in the spiking modules of the FPGA (‘Izh1’ and ‘Izh2’ in Fig. 1).
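A bit-length comparison like that of Fig. 2 can be approximated in software by forward-Euler integration of the Izhikevich model (update of v and u, then reset when v reaches 30) with v and u rounded to a fixed-point grid after every update. The sketch below is only an emulation of quantization, not a bit-accurate model of the FPGA datapath: the split between integer and fractional bits for each word length (`FORMATS`) is our assumption, since it is not stated here, overflowing values are saturated rather than wrapped, and the initial state v = −65, u = bv is also assumed. Parameter defaults are the values given in Section 3, and dt is the 0.5-ms step of Section 2.3. Function names are hypothetical.

```python
# Hypothetical (total_bits, frac_bits) splits; None means 64-bit floating point.
FORMATS = {
    "float64": None,
    "fixed16": (16, 7),   # range about +/-256, resolution 1/128
    "fixed12": (12, 3),   # range about +/-256, resolution 1/8
    "fixed8": (8, 0),     # range -128..127, resolution 1
}


def quantize(x, total_bits, frac_bits):
    """Round x to a signed fixed-point grid and saturate at the word range."""
    scale = 1 << frac_bits
    lo = -(1 << (total_bits - 1))
    hi = (1 << (total_bits - 1)) - 1
    return min(max(round(x * scale), lo), hi) / scale


def simulate(current, fmt=None, a=0.02, b=0.25, c=-58.0, d=3.0, dt=0.5):
    """Euler-integrate the Izhikevich model and return spike times in ms.

    current: one input value I per time step of dt ms.
    If fmt = (total_bits, frac_bits), v and u are re-quantized after every
    update, emulating fixed-point storage of the two state variables.
    """
    def q(x):
        return x if fmt is None else quantize(x, *fmt)

    v = q(-65.0)
    u = q(b * v)
    spikes = []
    for k, i_in in enumerate(current):
        v_new = q(v + dt * (0.04 * v * v + 5.0 * v + 140.0 - u + i_in))
        u_new = q(u + dt * a * (b * v - u))
        if v_new >= 30.0:                  # spike: reset v and bump u
            spikes.append((k + 1) * dt)
            v_new, u_new = q(c), q(u_new + d)
        v, u = v_new, u_new
    return spikes


# Step input: I = 0 for 50 ms, then I = 10 for 450 ms (illustrative values).
step = [0.0] * 100 + [10.0] * 900
for name, fmt in FORMATS.items():
    print(name, simulate(step, fmt)[:5])
```

Comparing the first few spike times across formats shows how far each word length drifts from the floating-point reference; with saturation instead of wrap-around, an overflow during the spike upstroke simply clips v, which still triggers the reset.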

2.3 Operation of the Hardware System

As in our previous study [9], the silicon retina operates at 200 fps with a 128\(\times \)128-pixel frame, and its parallel outputs are fed to the FPGA through analog-to-digital conversion (‘ADC’ in Fig. 1) on a 5-ms frame basis. The FPGA runs at a clock rate of 40 MHz, and the graded voltage response of each neuronal module is computed every 5 ms. The spike timing of each ganglion-cell module is determined by the time stamp of the conditional branching, followed by the variable reset, in Eq. 6, so the final spike outputs carry timing tags with a 0.5-ms resolution (i.e., \(\varDelta t\) in Eqs. 4–6). The voltage distributions in the RN layers of the silicon retina and the voltage responses of the neuronal arrays in the FPGA (e.g., ONs-/OFFs-BC and ONs-/OFFs-GC) can be sent to a computer via Universal Serial Bus (USB) and visualized as image streams in a quasi-continuous, real-time manner. Figure 3 shows a photograph of the actual hardware. It comprises one printed circuit board (PCB) carrying the silicon retina and input-output (I/O) modules including the A/D converter, two PCBs with interface connectors and buffer memories, and one PCB carrying the FPGA and I/O modules including the USB terminal. As the photograph shows, the whole system is compact enough to be packed in a box to which a camera lens and a tripod can be attached directly, so that it can be used as if it were an animal’s eye gazing at an object in a natural visual environment.
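Because graded responses are updated every 5 ms while spikes are tagged at \(\varDelta t\) = 0.5 ms, each frame corresponds to 5/0.5 = 10 Euler sub-steps of a spiking module. A minimal sketch of this two-rate scheme follows. It assumes that the bipolar-cell input I is held constant within a frame and that a spike is tagged at the end of the sub-step in which v reaches 30; both are our assumptions, and `run_frames` and the constants are hypothetical names. Parameter defaults are the values given in Section 3.

```python
FRAME_MS = 5.0                      # graded responses updated once per frame (200 fps)
DT_MS = 0.5                         # Izhikevich step = spike time-tag resolution
SUBSTEPS = round(FRAME_MS / DT_MS)  # 10 Euler sub-steps per frame


def run_frames(frame_inputs, a=0.02, b=0.25, c=-58.0, d=3.0, v0=-65.0):
    """Run one spiking module over a sequence of 5-ms frames.

    frame_inputs: one input value I per frame, held constant for the whole
    frame (an assumption). Returns spike time tags in ms, on a DT_MS grid.
    """
    v, u = v0, b * v0
    tags = []
    for f, i_in in enumerate(frame_inputs):
        for s in range(SUBSTEPS):
            v_new = v + DT_MS * (0.04 * v * v + 5.0 * v + 140.0 - u + i_in)
            u_new = u + DT_MS * a * (b * v - u)
            if v_new >= 30.0:
                # tag = start of the frame + end of the current sub-step
                tags.append(f * FRAME_MS + (s + 1) * DT_MS)
                v_new, u_new = c, u_new + d
            v, u = v_new, u_new
    return tags


tags = run_frames([10.0] * 100)  # 500 ms of constant drive
print(tags[:5])
```

The point of the design is that the display rate and the spike-timing resolution are decoupled: the frame clock only sets how often I changes, while the tags keep their 0.5-ms grid.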

3 Hardware Simulation of the Neuronal Arrays in Response to a Natural Scene

Our hardware system enables us to visualize the spatial response patterns in each of the retinal neuronal arrays in real time. For the present simulations, the values of \({{\varvec{a}}}\), \({{\varvec{b}}}\), \({{\varvec{c}}}\) and \({{\varvec{d}}}\) in Eqs. 5–6, as well as other circuit parameters in the silicon retina and the FPGA, were tuned to reproduce, as an example, the intrinsic spiking properties of the cat beta-type ganglion cell [12] and the physiological light responses of the cat X-type ganglion cell [13] (\({{\varvec{a}}}=0.02\), \({{\varvec{b}}}=0.25\), \({{\varvec{c}}}=-58\), \({{\varvec{d}}}=3\); fitting data not shown). Figure 4A–C shows the voltage distribution in the 1st RN layer (A), the graded voltage responses in the ONs-BC array (B), and the spike distribution in the ONs-GC array (C) while a white horizontal bar was moved downward in front of a static object (a stuffed doll), in a situation similar to that shown in Fig. 3. Figure 4A is a spatially blurred but temporally unfiltered image of the visual scene captured by the APS array, since no temporal filtering is applied in the 1st RN layer (Fig. 1). In Fig. 4B, spatial contrast edges in the visual scene were enhanced by the center-surround antagonistic receptive field of the bipolar cells (‘ONs-BC’ in Fig. 1). Moreover, the moving bar was blurred in shape and lagged behind its actual position; as a result, the static object did not appear to be completely occluded by the bar. These effects were due to the temporal filtering properties of the outer retinal circuit (‘TF0’ and ‘TF1’ in Fig. 1). In Fig. 4C, the red dots indicate the positions of ganglion cells that fired a spike during the last 5 ms. Because the spiking simulated here was the sustained on-type response of X (beta)-type ganglion cells, spikes were induced mainly in relatively bright regions of the scene. In addition, spike firing was highly synchronized among the cells near the leading edge of the moving bar. Figure 4D superimposes panels A and C so that the locations and timing of spike firing can be examined together with the incoming images. For example, the leading edge of the moving bar (arrows in D) was blurred at the bipolar-cell stage (B) but sharpened in the ganglion-cell spikes, with a certain temporal delay (arrowheads in D).
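Panels C and D of Fig. 4 can be mimicked offline from time-tagged spike output. The sketch below assumes a hypothetical event format of (row, column, time in ms) tuples and a grayscale input image scaled to [0, 1]; it marks in red every cell whose spike tag falls within the last 5 ms. It illustrates the idea only and is not the visualization software used for Fig. 4; both function names are hypothetical.

```python
import numpy as np


def spike_map(events, shape, t_now_ms, window_ms=5.0):
    """Boolean image of cells whose spike tag lies in (t_now - window, t_now].

    events: iterable of (row, col, t_ms) tuples (hypothetical event format).
    """
    img = np.zeros(shape, dtype=bool)
    for r, c, t in events:
        if t_now_ms - window_ms < t <= t_now_ms:
            img[r, c] = True
    return img


def overlay(gray, spikes):
    """Draw spiking cells as red pixels on a grayscale image in [0, 1] (cf. Fig. 4D)."""
    rgb = np.repeat(np.asarray(gray, dtype=float)[..., None], 3, axis=2)
    rgb[spikes] = (1.0, 0.0, 0.0)
    return rgb


gray = np.full((128, 128), 0.5)                       # placeholder input frame
events = [(10, 20, 101.5), (11, 20, 103.0), (40, 40, 96.0)]
frame = overlay(gray, spike_map(events, gray.shape, t_now_ms=105.0))
```

Using a half-open window (t − 5 ms, t] ensures that each spike tag is drawn in exactly one 5-ms display frame.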

4 Discussion

In the present study, we adopted the Izhikevich model as the spiking mechanism of the retinal ganglion cell. Other models are available; for instance, the conventional integrate-and-fire (I-F) model has been widely used in computer simulations of neuronal spiking. With this model, however, when the membrane voltage approaches the predefined threshold, the time at which a spike is fired can fluctuate considerably depending on the bit precision, so that spike timing becomes effectively stochastic. Such stochastic spiking behavior makes the simple I-F model less suitable for modeling ganglion-cell spiking, whose timing precision is thought to be on the order of milliseconds [14]. In contrast, the Izhikevich model was better suited to realizing millisecond-order spike-timing precision (data not shown). Meanwhile, a generalized linear I-F model that can reproduce a variety of spiking behaviors has been proposed [15]. Compared with that model, however, the Izhikevich model has fewer state variables, and its implementation therefore requires less FPGA memory. The adaptive exponential I-F model [16] is another candidate for emulating the spiking of retinal ganglion cells. Although we selected the Izhikevich model in the present study, our hardware system was designed to be capable of testing these other models as long as FPGA resources allow, which is another benefit of our system configuration.
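The memory argument can be made concrete with simple arithmetic. The sketch below assumes 16-bit storage for every state variable (the word length used for v and u in Section 2.2) and, for the generalized linear I-F model [15], four state variables per cell (membrane potential V, threshold Θ and, for illustration, two spike-induced currents); the latter is an assumed configuration, not a figure reported in this paper.

```python
def state_memory_bytes(n_cells, n_state_vars, bits_per_var=16):
    """Bytes needed to store the per-cell state variables of one neuron array."""
    return n_cells * n_state_vars * bits_per_var // 8


N_CELLS = 128 * 128                          # one 128x128 ganglion-cell array
IZH_BYTES = state_memory_bytes(N_CELLS, 2)   # Izhikevich model: v and u
GLIF_BYTES = state_memory_bytes(N_CELLS, 4)  # GLIF, assuming V, Theta, I1, I2

print(IZH_BYTES // 1024, "KiB vs", GLIF_BYTES // 1024, "KiB per array")
# prints: 64 KiB vs 128 KiB per array
```

Under these assumptions the state memory scales linearly with the number of state variables, so each ganglion-cell array (ONs-GC and OFFs-GC) would need twice as much on-chip storage with the four-variable model.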

Visualizing ganglion-cell spiking patterns at a frame interval of 5 ms should also be possible with other technologies, such as GPUs, multi-core CPUs, or ASICs. Like simulation on GPUs or multi-core CPUs, and unlike an ASIC, the FPGA offers program-based configurability of the model circuit. In addition, its compact size enabled us to build the whole system in a relatively small box fitted with an optical lens (Fig. 3). Hence, the boxed system and a laptop or pocket computer suffice for the present visualization. Moreover, the system can be combined with a robotic gaze-control device, enabling real-time emulation of binocular vision under eye and head/body movements in a mobile environment. Our FPGA-based architecture could also serve as a building block of a distributed computation platform for sensory information, which may reduce the computational load on a host GPU or multi-core CPU and, in turn, lower the total power consumption.

5 Conclusion

The present neuromorphic hardware system makes it possible to visualize and analyze the relation between natural images of the real world and the corresponding neuronal response images, which encode information significant for perceiving the real world. Virtual in vivo experiments using our system (Fig. 4) facilitate the understanding of the visual functions and essential circuit structures of the retina.

References

1. Kolb, H., Nelson, R., Fernandez, E., Jones, B.: Webvision: The Organization of the Retina and Visual System. http://webvision.med.utah.edu/

2. Demb, J.B., Singer, J.H.: Functional circuitry of the retina. Annu. Rev. Vis. Sci. 1, 263–289 (2015)

3. Roska, B., Werblin, F.: Rapid global shifts in natural scenes block spiking in specific ganglion cell types. Nat. Neurosci. 6, 600–608 (2003)

4. Gollisch, T., Meister, M.: Rapid neural coding in the retina with relative spike latencies. Science 319, 1108–1111 (2008)

5. Zhang, Y., Kim, I.J., Sanes, J.R., Meister, M.: The most numerous ganglion cell type of the mouse retina is a selective feature detector. Proc. Natl. Acad. Sci. U.S.A. 109, E2391–E2398 (2012)

6. Mead, C.A., Mahowald, M.A.: A silicon model of early visual processing. Neural Netw. 1, 91–97 (1988)

7. Zaghloul, K.A., Boahen, K.: A silicon retina that reproduces signals in the optic nerve. J. Neural Eng. 3, 257–267 (2006)

8. Kameda, S., Yagi, T.: An analog VLSI chip emulating sustained and transient response channels of the vertebrate retina. IEEE Trans. Neural Netw. 14, 1405–1412 (2003)

9. Okuno, H., Hasegawa, J., Sanada, T., Yagi, T.: Real-time emulator for reproducing graded potentials in vertebrate retina. IEEE Trans. Biomed. Circ. Syst. 9, 284–295 (2015)

10. Izhikevich, E.M.: Simple model of spiking neurons. IEEE Trans. Neural Netw. 14, 1569–1572 (2003)

11. van Rossum, M.C., O’Brien, B.J., Smith, R.G.: Effects of noise on the spike timing precision of retinal ganglion cells. J. Neurophysiol. 89, 2406–2419 (2003)

12. O’Brien, B.J., Isayama, T., Richardson, R., Berson, D.M.: Intrinsic physiological properties of cat retinal ganglion cells. J. Physiol. 538, 787–802 (2002)

13. Enroth-Cugell, C., Robson, J.G.: Functional characteristics and diversity of cat retinal ganglion cells. Invest. Ophthalmol. Vis. Sci. 25, 250–267 (1984)

14. Berry, M.J., Warland, D.K., Meister, M.: The structure and precision of retinal spike trains. Proc. Natl. Acad. Sci. U.S.A. 94, 5411–5416 (1997)

15. Mihalaş, Ş., Niebur, E.: A generalized linear integrate-and-fire neural model produces diverse spiking behaviors. Neural Comput. 21, 704–718 (2009)

16. Brette, R., Gerstner, W.: Adaptive exponential integrate-and-fire model as an effective description of neuronal activity. J. Neurophysiol. 94, 3637–3642 (2005)

Acknowledgments

This research was partly supported by JSPS KAKENHI, Grant-in-Aid for Scientific Research (C), 16K01354 to T.Y.


Copyright information

© 2016 Springer International Publishing AG

About this paper

Cite this paper

Kudo, Y., Hayashida, Y., Ishida, R., Okuno, H., Yagi, T. (2016). A Retino-Morphic Hardware System Simulating the Graded and Action Potentials in Retinal Neuronal Layers. In: Hirose, A., Ozawa, S., Doya, K., Ikeda, K., Lee, M., Liu, D. (eds) Neural Information Processing. ICONIP 2016. Lecture Notes in Computer Science(), vol 9948. Springer, Cham. https://doi.org/10.1007/978-3-319-46672-9_37


DOI: https://doi.org/10.1007/978-3-319-46672-9_37


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-46671-2

Online ISBN: 978-3-319-46672-9

eBook Packages: Computer Science, Computer Science (R0)