Abstract

Cyberemotions refer to emotions in networks that are a complex function of emotional states in individuals. Thus, measuring cyberemotions frequently involves attempts to estimate emotional states in individuals. Yet, this is not easy, as emotions in individuals are characterized by limited cohesion of the components of response, such as expression in the face, voice, and body, central and peripheral physiological changes, changes in action readiness, as well as subjective experience. There is no gold standard that would identify any of these components by a single criterion. In consequence, modern experimental emotion research has focused on multi-modal assessment of emotions. Different components are targeted at identifying the valence of responses, or the intensity. We will describe paradigms that are particularly tailored for research in the context of cyberemotions and illustrate these with concrete examples of data recorded in our laboratory.

1 Introduction

Emotions are embodied mental processes. They are considered mental because emotions are elicited by information processing in the brain. They are considered embodied because, as a consequence of this information processing, many changes happen in the brain and in many other parts of the body. It is assumed that these changes are evolutionarily selected adaptations to classes of challenges that provide functional benefits for the procreation of the genes that shape this concerted action of systems over the life span (see also Chap. 3).

While the development of human emotions lies largely in our ancestral past, even modern-day situations, such as reading an insult in a traditional letter or in a digital blog post on a computer screen, trigger event cascades that are shaped not only by experiences made throughout our lifetime but also by systemic constraints that are ancient. It is because of this heritage that pixels on a computer screen can change the frequency with which our heart beats, change the distribution of blood from the core of the body to the periphery, or trigger metabolic cascades that ultimately will change the availability of energy for cells, providing conditions for adaptive action. It does not matter that these physiological changes are not necessary to prepare the individual in front of a computer screen for action. Typically, all it might take to respond is to write a message, change the channel, or switch off the device. None of these requires much physical action; however, the emotional reaction is not scaled to whatever is necessary in the specific situation in the here-and-now. Instead, the response might be beneficial if the opponent had to be physically threatened, or if we had to make a quick physical exit from the situation.

Because of the complexity with which these bodily systems interact, understanding emotions involves an assessment of many different systems, ranging from self-report of how people feel, to expressive behavior, to changes that are linked to affective influences on the peripheral and central nervous system of individuals. This is necessary because the cohesion between these systems is moderate to low and no single measure is seen as an absolute criterion for diagnosing a particular emotional state (Mauss and Robinson 2009; Hollenstein and Lanteigne 2014). In other words, someone might report being angry but not show any measurable change in their expression or physiology. Conversely, somebody might report not being touched by the statement that “climate change is nonsense”, but do so with the eyebrows pulled down and a marked increase in sweat on the palms of their hands. Emotion scientists would consider both of these scenarios affectively relevant, and any understanding of emotions on the Internet can also be seen in such a multi-level context.

In recent years, the Internet as a source of increasingly comprehensive and rich data about human emotional behavior at a large scale (Golder and Macy 2011) has attracted a dramatic increase of interest from researchers across disciplinary boundaries (Mayer-Schönberger and Cukier 2013). Thus, online measurements of emotional content in text, such as those obtained with sentiment-mining tools like SentiStrength (see Chap. 7), have been at the core of many publications within the CYBEREMOTIONS project (see Chap. 6; Chmiel et al. 2011; Garas et al. 2012; Paltoglou et al. 2013; Thelwall et al. 2010) and beyond (Alvarez et al. 2015; Tanase et al. 2015). However, it is not really known how emotions contained in text relate to how a reader or writer feels, and to what extent they may be associated with a full-blown affective response. In this chapter, we will consider the emotion detected by sentiment mining as a property of the text, and we will investigate how emotion-in-text relates to emotion in the person.

In contemporary laboratory research, due to the evolutionary heritage of emotions outlined earlier, components of the emotional response are frequently collected at more than one level. Bodily and expressive changes, or other variables, such as differences in response times, are given weight in addition to what subjects report verbally when they are asked or presented with specific response scales, because emotions are understood to take place not only in the spotlight of conscious subjective experience but also at more automatic levels involving the entire body (see Cacioppo et al. 1996; Scherer 1984, 2009). In fact, emotion theories generally posit the presence of a synchronized emotional response across several levels, yet empirical support for this assertion has often been weak or inconsistent (Hollenstein and Lanteigne 2014). In addition, there are social pressures that might lead participants in laboratory research to downplay or exaggerate their responses. Because of this context, we suggest in this chapter that some of these time-tested laboratory measures for emotion research can also be fruitful in the study of cyberemotions, even if much of the visible emotional online content initially appears to take the shape of plain text. Because we, as psychologists, assume that it is ultimately the people behind the keyboard who are emotional, we need to investigate critically to what extent text-based analysis tools and self-report relate to other measures of human emotions. In other words, we need to discuss the issue of cohesion between different levels of the emotional response, such as what people write when they are emotional, what they can report about their emotional feelings, and what bodily indicators can tell us about emotional states associated with cyberemotions. We will repeatedly return to this issue as we discuss the different measures and examples presented in this chapter.

A basic design decision relates to the choice of theoretical framework to operate in. This is important because it has immediate implications for the question of which indicators will be most suitable. One of the most important distinctions here is whether emotions are conceived of as discrete states, such as happiness or anger, or at a more abstract level as points in a two- or three-dimensional space. For example, sadness would be a negative state that is typically characterized by low to medium activation, whereas anger might be just as negative, but much more active. Happiness might be as active as anger, but clearly positive. We can also conceive of states in such a two-dimensional space, such as very slightly positive and very slightly activated, that have no clear categorical label (e.g., Yik et al. 2011). Related to the basic decision about the theoretical framework is the question of which measures should be used. Ideally, whatever measure or measures we use to detect cyberemotions should be economical to use, highly reliable, not interfering with the ongoing situation, and in agreement with other indicators across a wide range of experimental contexts. Unfortunately, apart from the fact that no reliable gold standard is available, there likewise is no single measure or set of measures that would be the optimal fit for every situation. Rather, each method and each individual indicator has its own strengths and weaknesses that may or may not be critical for any individual study. In consequence, emotion researchers have to make a number of careful design decisions.

In many cases, adaptation of laboratory research for the purposes of validation and comparison with computer-based assessments of emotional online texts is facilitated by the use of dimensional models rather than discrete models of basic and qualitatively different emotional states (e.g., Barrett and Russell 1999; Russell 2009). In comparison to discrete emotion perspectives, dimensional models do not have to preserve all of the assumed qualitative differences between discrete states, and this can simplify the measurement considerably. Dimensional models further aim to organize emotional states in terms of a limited number of underlying dimensions (Mauss and Robinson 2009), a property which makes it easier for dimensional models to be “understood” by a computer, and thereby minimizes the risks of cross-category misclassifications based on highly context-dependent information. For these reasons, a dimensional framework was chosen for the majority of research in the CYBEREMOTIONS project. For the purposes of research within a framework focusing on discrete emotional states, some of the considerations and examples presented in this chapter would need to be modified. However, the overarching issue of limited cohesion would remain.

One of the most reliable indicators of negative affect relates to changes in activation of the Corrugator Supercilii muscles that are involved in pulling the eyebrows together and down. In addition to being strongly activated, such as when a subject is frowning in response to exposure to a negative stimulus, these muscles can also relax beyond what would be observed for a neutral baseline. Thus, when a subject is in a positive emotional state, the Corrugator Supercilii muscles are likely to be relaxed. In consequence, the state of relative relaxation vs. activation of these muscles can provide a mapping of emotional valence that has been shown to be largely linear (Mauss and Robinson 2009). However, even this measure is not always correlated with other behavioral or physiological changes. These limits of cohesion should therefore be considered seriously, and factors that might moderate cohesion need to be understood to improve affect detection (Kappas 2010). Because no single “marker” is yet in sight that could reliably detect emotions on its own, emotion researchers from all disciplines have to make a number of informed decisions about their measurement paradigms.

Our primary concern regarding the measurement of cyberemotions is the extent to which the indicators that we use indeed measure emotions in a way that is meaningful. Arguably, if the behavior of individuals on the Internet could be used to reliably infer their emotional states, even in the wild, i.e., outside of the lab, then this would open up entirely new horizons for applied research. A basic step in this direction is the validation of our measures by tools that have been carefully tested in laboratory research on emotions. For example, in the case of sentiment analysis, automatic classifiers can be validated by ratings from independent human judges who were not involved in the initial training of the classifier. Thus, in a recent study together with Paltoglou, Thelwall and colleagues (Paltoglou et al. 2013, see Chap. 7), we compared evaluations by human judges with various automatic prediction methods. Surprisingly, the results of this study vastly exceeded our a priori expectations for the strength of this relationship. In fact, this small validation study found correlations of up to 0.89 for hedonic valence (positive to negative), and 0.42 for arousal (calm to excited). Therefore, while these results are not directly transferable to other samples and domains of cyberemotions on the Internet, they reinforce the argument that the degree of observed cohesion varies between measures and experimental contexts. As new measures are tested, and empirical designs are improved, further research may uncover more successful ways of detecting emotions online. For example, new techniques, such as infrared thermal imaging, have recently been tested successfully in the study of guilt in children (Ioannou et al. 2013), as well as in the context of facial responses toward social ostracism (Paolini et al. 2016). Yet to what extent such technological advances will indeed lead to systematic increases of cohesion with other components of the emotional response remains an empirical question. In the remainder of this chapter, we will go into more detail on different aspects of experimental measurement and design, and the question of cohesion will reappear for every measure that we are going to discuss.
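To make the validation logic concrete, the following minimal Python sketch computes a Pearson correlation between ratings by human judges and scores from an automatic classifier. The function name `pearson_r`, the rating scales, and all data values are assumptions chosen for illustration only; they are not the data or the analysis code of the study cited above.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation between two equally long sequences of numbers."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 items)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)

# Hypothetical example: one mean human valence rating and one automatic
# valence score per forum thread (values invented for illustration).
human_valence = [1.0, 2.5, 4.0, 6.5, 8.0]
classifier_valence = [-3, -2, 0, 2, 4]

r = pearson_r(human_valence, classifier_valence)
print(f"valence agreement: r = {r:.2f}")
```

The same function could be applied separately to valence and to arousal scores, which would make differences in agreement between the two dimensions directly visible.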

2 Self-report Measures of Cyberemotions as Beliefs vs. Feelings

Self-report measures of emotion capture what we can verbalize about our emotional feelings (Barrett 2004), as well as our beliefs about emotions (Robinson 2002a). While people have been shown to differ in how much they focus on different dimensions of feeling states, such as pleasantness and intensity (Barrett 2004), self-report data is generally seen as an indispensable tool. Sometimes, it is even seen as the only tool worth using. An essential observation, however, is that people often have difficulty cleanly separating emotional feelings from generalized beliefs about emotions, such as how someone is supposed to feel at a funeral (see Robinson 2002a). The study of such biases and other kinds of contextual influences on human perception is a bread-and-butter topic of social psychology.

Research has shown that generalized statements about emotions can sometimes differ dramatically from reports made during, or directly subsequent to an event. For example, Barrett et al. (1998) have shown that stereotypical sex-related differences, such as the widely held belief that women are more emotional than men, only emerge when participants are asked to provide relatively global self-descriptions. In one study, males would agree more to items such as “I rarely experience strong emotions” (Barrett et al. 1998, p. 561). Yet when they were instead asked to provide momentary ratings of their actual emotional experience during a 10-min interaction, or when they recorded their emotional responses to all of their emotional interactions during a 1-week period, none of these stereotypical sex differences could be shown. Thus, there are conditions where self-report can be assumed to reflect emotional experience relatively well, such as when we evaluate our “online” emotional experience when it happens (Robinson 2002a). However, when we have to recall emotions after the fact, we increasingly rely on more generalized beliefs rather than on episodic memory (Robinson 2002a,b). In other words, “offline” post-hoc emotional self-reports describe relatively stable beliefs about how we usually feel, whereas event-specific or time-dependent knowledge about a specific emotional experience is more likely to be retrieved if we can focus on our current feelings. What does this imply for the offline study of online cyberemotions?

For cyberemotions, current emotional feelings are likely of particular interest to researchers who, e.g., aim to improve dynamic models of the emergence of emotional states across an online community (see Chaps. 8, 10 and 11). In other cases, emotion dynamics may be of lesser interest, such as when a static corpus of text is to be analyzed for emotional content in general. The important point here, however, is that emotional self-report data may vary in the extent to which it reflects episodic emotional experiences such as feelings vs. more generalized stereotypes about what people may typically feel (Robinson 2002a; Mauss and Robinson 2009). This has implications for what types of self-report data are suitable for different types of research on cyberemotions, and how it should be collected.

We concur with Mauss and Robinson (2009) in recommending that the subjective experience of emotions be measured as closely as possible to when and where it actually occurs. Similarly, when we expose subjects to emotional statements collected from the Internet, we may either ask them to report their own emotional response to having just read these statements, or we might ask them to annotate the emotional content of the statements for us. This renders the phrasing of each question particularly sensitive. For example, if a researcher is interested in current emotional states, rather than generalized beliefs, this should be clearly reflected in the phrasing of each relevant item. To give a very simple example, we might ask a participant in the laboratory “How do you feel right now?” on a rating scale for valence, instead of asking “How positive was this picture?” What is most important here, however, is to be aware of how such differences may influence participants, and to adapt our designs and methods accordingly. Often, the answer will be that self-report is insufficient as the sole indicator of the emotional state of the individual.

Once the idea of using self-report as the uncontested gold standard is abandoned, the issue of consistency between different measures comes to the fore. For example, when we (Paltoglou et al. 2013) compared human valence ratings to SentiStrength metrics on the same corpus of forum posts, participants were asked: “How did the thread you just read make you feel?” This question was presented repeatedly after each individual forum discussion so that participants would be able to base their answers on current emotional experiences instead of memory or generalized stereotypes. As already discussed, we were rather surprised to find substantial correlations between human ratings and SentiStrength, suggesting that the algorithms were indeed able to predict episodic feeling states associated with human emotional responses to online content. Nevertheless, caution is still advised because subjects in this online study might have found it socially more desirable to provide stereotypical answers based on the content of the threads rather than their immediate emotional experience as such. This is an example where data from other components of the emotional response, such as facial activity, could have been informative. If, for example, facial responses were to show strong evidence for coherence with self-report, social desirability might be ruled out as an alternative explanation. Of course, additional measures at the same time increase complexity to the point that the entire pattern of data should be interpreted rather than any individual measure on its own. Thus, data from other levels, such as bodily indicators, themselves need to be analyzed and integrated within an appropriate multi-level analysis framework (see Cacioppo et al. 2000).

3 From Feelings to Bodily Responses: Psychophysiological Measures

The next two sections of this chapter are devoted to a selection of psychophysiological measures of emotion. In general, psychophysiological measures offer opportunities to extend the study of cyberemotions beyond self-report and automated text analyses, yet they still face certain technical limitations when taken outside of the laboratory. For example, it is generally not yet possible to record online physiological responses at a large scale across all individual members of an online community (but see Kappas et al. 2013). These, however, are primarily technical limitations at the level of large-scale or community-wide measurement that may be overcome by technological advancement of the tools. It may even become possible to record at least some of these measures reliably at a large scale in the foreseeable future using mobile devices or webcams (Picard 2010; Poh et al. 2011). Of greater importance to the present discussion, however, is how these measures, i.e., the activity they record, relate to the other components of the emotional response.

Psychophysiological measures are indicators of bodily responses that are assumed to be associated with psychological phenomena. In comparison to emotional self-report, they promise to be more objective and less influenced by certain confounding factors such as socially desirable responding (Ravaja 2004; see also Paulhus 1991, 2002). Certain peripheral bodily responses like facial activity and skin conductance have furthermore been shown to be associated with emotional self-report in the laboratory (Mauss and Robinson 2009), and they can be recorded at a considerably lower cost than most physiological measures of central nervous system (CNS) activity. Thus, while CNS measures such as electroencephalography (EEG) continue to be of great interest to laboratory research on emotions, other measures may be more likely to bridge the gap to larger scale online emotions.

Unfortunately, a comprehensive discussion of all psychophysiological measures that have been shown to be associated with emotion is well beyond the scope of this chapter. Instead, we will have to focus on a few examples, and refer the interested reader to an overview chapter (e.g., Larsen et al. 2008), or one of the standard reference volumes on psychophysiology (e.g., Andreassi 2007; Cacioppo et al. 2000, 2007). While we will include a few basic technical considerations for the measures that are discussed for the following examples, our main aims in the present discussion are to illustrate some of the basic principles, and to show how measures of bodily responses might be used to complement and validate self-report data.

3.1 Measuring Valence: Facial Electromyography

Perhaps the single most important bodily component of emotions is what we show, or fail to show, on our faces (see Darwin 1872/2005). In the psychophysiological laboratory, there are two principal ways of assessing movements of the face. First, visible facial muscle activity can be classified by trained and certified coders of highly standardized anatomically based systems, such as the Facial Action Coding System (FACS, Ekman and Friesen 1978). Second, activation of facial muscles, such as those associated with smiling and frowning, can be recorded by means of facial electromyography (EMG) using electrodes glued on the skin. While both methods have their individual strengths and weaknesses, we will focus on the latter because a particular strength of facial EMG is that even very subtle responses below the visual detection threshold can be recorded, including muscular relaxation (van Boxtel 2010). Facial EMG has further been of particular relevance for the validation of cyberemotions because it has frequently been mapped onto a valence-arousal dimensional space based on circumplex models of affect (see Russell 1980; Yik et al. 2011). As discussed above, the use of a valence-arousal model was one of the early design decisions in the CYBEREMOTIONS project. If discrete emotion states such as anger, fear, and happiness were to be measured instead, FACS-coding might be more appropriate. Facial EMG, however, is particularly suitable for measuring how pleasant vs. unpleasant certain emotional stimuli are perceived to be, i.e., the valence of an emotional response (Mauss and Robinson 2009).

Facial EMG is a technique that can be used to record muscle activity with the help of small electrodes attached to the face. These specialized, reusable electrodes are filled with conductive gel and attached to specific locations on the face following highly standardized recording procedures (Fridlund and Cacioppo 1986). While a certain degree of cleaning of the skin is required to reduce electrical resistance, this procedure is otherwise non-invasive for the participants. However, it can be perceived as relatively obtrusive (van Boxtel 2010) when compared to being filmed. Standardized recording procedures are particularly important because some of the most diagnostic information derived from facial EMG can be relatively small changes in the range of a few microvolts (Tassinary and Cacioppo 2000).

Substantial research has shown that episodic positive and negative affect can reliably be distinguished on the basis of activation at the sites of the Zygomaticus Major (smiling) and Corrugator Supercilii (frowning) muscles (Brown and Schwartz 1980; Larsen et al. 2003; van Boxtel 2010), and this property is essential for the validation of other valence-based measures, such as text-based instruments (see Küster and Kappas in press). Nevertheless, considerable caution is still needed because the magnitude of convergence with subjective report is limited (Hollenstein and Lanteigne 2014), even for the best measures of facial activity (Mauss and Robinson 2009), and the usefulness of facial activity as a readout of emotional states has been hotly debated on both empirical and theoretical grounds (Fridlund 1991, 1994; Kappas 2003). Furthermore, the most successful laboratory research on the relationship between self-reported emotions and facial EMG has typically used highly standardized emotional images or individual words (e.g., Bradley et al. 2001; Lang et al. 1993; Larsen et al. 2003) that can be judged rapidly on their general emotional content. This, however, is substantially different from evaluating full sentences, or even paragraphs of text that people read on the Internet.
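As a rough illustration of how activity at the two recording sites might be combined into a single valence indicator, the following Python sketch computes baseline-corrected change scores for each muscle. The function names, the sample values, and the simple difference index are assumptions made for this example; they do not reproduce the analysis procedures of the studies cited above.

```python
from statistics import mean

def change_score(baseline_uv, stimulus_uv):
    """Mean activity during the stimulus minus mean activity at baseline (microvolts)."""
    if not baseline_uv or not stimulus_uv:
        raise ValueError("both periods need at least one sample")
    return mean(stimulus_uv) - mean(baseline_uv)

def valence_index(zygomaticus_change, corrugator_change):
    """Hypothetical index: positive values point toward pleasant affect,
    negative values toward unpleasant affect."""
    return zygomaticus_change - corrugator_change

# Invented rectified EMG amplitudes in microvolts for one trial.
corr_base, corr_stim = [4.0, 4.2, 3.8], [6.1, 5.9, 6.0]
zyg_base, zyg_stim = [2.0, 2.1, 1.9], [2.0, 2.0, 2.0]

corr_change = change_score(corr_base, corr_stim)  # Corrugator activates
zyg_change = change_score(zyg_base, zyg_stim)     # Zygomaticus stays flat
index = valence_index(zyg_change, corr_change)
print(f"corrugator: {corr_change:+.2f} uV, zygomaticus: {zyg_change:+.2f} uV, index: {index:+.2f}")
```

Because Corrugator activity can also drop below baseline, a negative Corrugator change score in this sketch would raise the index, in line with the relaxation of this muscle during pleasant states.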

In one simple study, we (Kappas et al. 2010) recently compared emotional responses to images with responses to reading threads taken from online discussion forums. While we observed some surprisingly large correlations between facial EMG activity and subjective report that were generally on par with the coherence observed for the images (Bradley et al. 2001), there were rather large differences in reading times between participants (Fig. 5.1b). Thus, while many participants took somewhere between 10 and 20 min to read all of the texts, others took 30 min or more. Clearly, some participants will have read the forum posts more thoroughly than others, and for some participants, reading has likely been associated with emotional feelings. However, in other cases, participants may have based their emotional self-report more on generalized stereotypes as discussed by Robinson (2002b) for emotional reports made after the fact.

As this example illustrates, there is often a tradeoff between the ecological validity of responses and maintaining a level of experimental control typical for psychological laboratory research on emotions. Do we, for example, enforce equal reading times for all participants by showing each text for a predefined number of seconds? In standard paradigms of experimental psychology, such intervals are usually fixed precisely—yet in the case of longer texts, this practice would be of questionable value if some participants indeed need twice as long to process any given stimulus. Worse, even if we knew where participants were looking at the moment that a particular facial response occurred, the type of emotional story presented in a given forum thread is already so deeply embedded in contextual information that it quickly becomes surprisingly complicated to unambiguously associate the individual emotional responses with smaller units such as individual words or statements. Likewise, it can be difficult to draw conclusions from subjective report data in such cases because we cannot reasonably ask participants to rate each and every word individually.

The example of facial EMG in a reading study illustrates another more general issue associated with a multi-level measurement of cyberemotions. Psychophysiological measures, such as EMG, can provide quasi-continuous data, whereas there will usually only be a limited number of discrete data points for any measure of self-report. Thus, while continuous psychophysiological data can potentially be very useful for the study of emotion dynamics, there is a mismatch in resolution between measures such as facial EMG and self-report that precludes certain kinds of comparisons, and that may reduce the observed level of coherence in others. Unfortunately, there is no easy solution. That is, while participants could, for example, be given a control device such as a slider or a joystick to continually adjust their perceived emotional state, this is not very feasible when participants have to simultaneously process complex material such as forum posts.
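One common way of dealing with this mismatch in resolution is to reduce the continuous signal to one value per stimulus before comparing it with self-report. The sketch below assumes a hypothetical recording with a known sampling rate and known start and end times for each text; the function name `epoch_means` and all values are invented for illustration.

```python
from statistics import mean

def epoch_means(samples, sampling_rate_hz, epochs):
    """Average a continuous signal within each (start_s, end_s) epoch."""
    result = []
    for start_s, end_s in epochs:
        first = int(round(start_s * sampling_rate_hz))
        last = int(round(end_s * sampling_rate_hz))
        window = samples[first:last]
        if not window:
            raise ValueError(f"epoch {start_s}-{end_s} s contains no samples")
        result.append(mean(window))
    return result

# Invented signal sampled at 4 Hz: 2 s of low activity, then 2 s of higher activity.
emg = [1.0] * 8 + [3.0] * 8
reading_epochs = [(0.0, 2.0), (2.0, 4.0)]  # one epoch per text; lengths may differ

per_text_emg = epoch_means(emg, 4, reading_epochs)
self_report = [6, 3]  # one hypothetical valence rating per text
paired = list(zip(per_text_emg, self_report))
print(paired)
```

Averaging in this way makes the two measures comparable, but it discards exactly the temporal dynamics that make continuous recordings attractive, which is the tradeoff described above.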

For the sake of completeness, two further variants of assessing facial activity should be mentioned. Because many researchers have neither the means of a psychophysiology laboratory nor access to trained coders, for example of FACS, they might try to use lay-people as judges of emotional expressions. This cannot be recommended, as these measures are typically not very reliable. In a related vein, there is the promise of using automatic coding via software. This is indeed something to look forward to in the next few years. However, at the time of writing, none of the systems available are able to measure the full set of movements that a FACS coder would include. Systems that try to identify discrete emotions without providing output linked to specific facial regions are also not recommended because (a) these are based on rigid patterns describing stereotypical expressions, and (b) much of the action of facial movements appears to be associated with blends of Action Units and partial displays; hence a dimensional framework might be the best choice. Once the technical problems of computer coding have been solved, this might be a preferred way of assessing expressive behavior as laptops, portable devices, and increasingly stand-alone monitors tend to have cameras built in. While the spatial and temporal resolution of EMG will always be superior, no preparation of the skin is required for visual coding. Nevertheless, the possibility of large-scale remote measurement is clearly on the horizon; and the integration of such emerging technologies with advances in modeling of complex data, such as dynamic patterns in facial temperature (Jarlier et al. 2011), will likely contribute to a more reliable (remote) measurement of facial activity in a multi-disciplinary approach to the study of cyberemotions.

3.2 Measuring Physiological Arousal: Electrodermal Activity (EDA)

In many cases, research on cyberemotions will not succeed in satisfactorily representing ongoing emotional processes in users unless arousal is considered in addition to hedonic valence (positive vs. negative). Thus, while it may sometimes appear to be sufficient to determine if someone experienced a particular online situation as unpleasant, a pure valence categorization would be entirely blind to what type of unpleasant emotional state has been elicited. For example, moderately unpleasant states associated with comparatively low arousal such as sadness or boredom may differ dramatically from other unpleasant emotional states such as fear or anger. To distinguish these types of fundamentally different cases, arousal will typically have to be measured alongside valence. Valence and arousal together have been shown to account for a large portion of the variance across a wide range of emotional rating situations (Russell 2003).
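The point can be illustrated with a small Python sketch in which two states share the same valence but differ in arousal. The coordinates, the scale centered on zero, and the function name `quadrant` are assumptions made for this example and are not taken from any published norms.

```python
def quadrant(valence, arousal):
    """Describe a point in a valence-arousal space centered on zero."""
    tone = "pleasant" if valence >= 0 else "unpleasant"
    level = "high arousal" if arousal >= 0 else "low arousal"
    return f"{tone}, {level}"

# Hypothetical coordinates on scales from -1 to +1 (illustrative only).
ratings = {"sadness": (-0.6, -0.4), "anger": (-0.6, 0.7)}

valence_only = {
    name: ("pleasant" if v >= 0 else "unpleasant") for name, (v, _) in ratings.items()
}
with_arousal = {name: quadrant(v, a) for name, (v, a) in ratings.items()}

print(valence_only)   # both states fall into the same category
print(with_arousal)   # the arousal dimension separates them
```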

A comparatively simple-to-use physiological measure of arousal is electrodermal activity (EDA). EDA refers to small changes in the electrical conductivity of the skin that are associated with variation in the production of sweat by the eccrine sweat glands. Apart from eccrine sweat glands, there are the apocrine sweat glands; however, these have remained relatively unstudied with respect to skin conductance (Dawson et al. 2000). Changes in EDA have been the subject of study for well over 100 years, and they have been observed in response to a large and varied number of stimuli. Importantly, EDA has been shown to be associated with activation of the sympathetic nervous system (Wallin 1981), and has been widely used as an indicator of sympathetic arousal (Dawson et al. 2000; Boucsein et al. 2012). For the study of cyberemotions, this implies that physiological arousal can in principle be measured continuously by attaching two small electrodes to a suitable place on the skin. However, as we have already seen for the measurement of valence via facial EMG, there are still a number of limitations to be considered.

One major limitation of EDA data is the fact that electrodermal activity cannot tell us what precisely the participant is responding to at any given moment. Instead, this information has to be inferred from the experimental design in which EDA was elicited. Thus, a participant may show very similar responses when there is a sudden noise in the environment, when she is reading an emotionally activating online forum discussion, or when she suddenly remembers that she still has to make an important phone call later in the afternoon. The use of control conditions can reduce, but not eliminate, this problem.

Another characteristic of EDA is that changes can be both slow (tonic) and fast (phasic), both of which can overlap substantially but should be considered separately (Dawson et al. 2000; Boucsein 2012). For the study of emotions, the phasic changes are often of particular interest because they may be tied to a specific experimental event, e.g., the presentation of a particularly activating emotional statement in an ongoing discussion. Such phasic electrodermal responses are called skin conductance responses (SCRs), and they are typically assumed to be associated with a significant experimental event if they occur within a specific time window, such as 1–4 s after stimulus onset (Boucsein et al. 2012). This shift in time between stimulus and response has to do with the physical properties of the relevant sweat glands, and is discussed in more detail in the respective guideline literature (Boucsein 2012; Boucsein et al. 2012). However, tonic changes of skin conductance across a longer time window can likewise be informative about physiological arousal.
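The latency-window criterion described above can be illustrated with a minimal Python sketch. It assumes an already cleaned skin conductance trace sampled at a fixed rate; the function name `score_scr`, its parameters, and the 0.05 µS minimum amplitude are illustrative assumptions rather than values taken from this chapter, and real recordings would usually require filtering and artefact handling first.

```python
from typing import Optional, Sequence, Tuple


def score_scr(
    conductance: Sequence[float],
    sampling_rate_hz: float,
    stimulus_onset_s: float,
    window_s: Tuple[float, float] = (1.0, 4.0),  # latency window from the text
    min_amplitude: float = 0.05,  # assumed threshold in microsiemens
) -> Optional[float]:
    """Return the amplitude of the first SCR that starts inside the window.

    A response is taken to start at a sample where the signal begins to
    rise; its amplitude is the difference between the following local
    peak and that starting sample. Returns None if no rise of at least
    `min_amplitude` starts inside the latency window.
    """
    n = len(conductance)
    first = max(int(round((stimulus_onset_s + window_s[0]) * sampling_rate_hz)), 0)
    last = min(int(round((stimulus_onset_s + window_s[1]) * sampling_rate_hz)), n - 2)

    i = first
    while i <= last:
        if conductance[i + 1] > conductance[i]:
            # Follow the rise until the signal stops increasing.
            peak = i + 1
            while peak + 1 < n and conductance[peak + 1] >= conductance[peak]:
                peak += 1
            amplitude = conductance[peak] - conductance[i]
            if amplitude >= min_amplitude:
                return amplitude
            i = peak  # too small: keep searching after this bump
        else:
            i += 1
    return None


# Synthetic 10 s trace at 10 Hz: flat baseline, a rise starting at 3.4 s, slow decay.
trace = (
    [2.0] * 35
    + [2.0 + 0.03 * k for k in range(1, 11)]
    + [2.3 - 0.005 * k for k in range(1, 56)]
)
print(score_scr(trace, sampling_rate_hz=10, stimulus_onset_s=2.0))
```

With the stimulus at 2.0 s, the rise begins 1.4 s after onset and is scored as a stimulus-locked response; moving the stimulus to 5.0 s places the window over the decaying part of the trace, so nothing is scored.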

While EDA can, in principle, be measured relatively easily and inexpensively from a variety of different locations on the body, not all of these recording sites are equally reliable (van Dooren et al. 2012). The most frequently recommended placement for the EDA electrodes is on the distal phalanges of two fingers on the non-dominant hand (Boucsein et al. 2012). However, for the study of cyberemotions, this location is typically compromised by substantial movement artefacts when participants require both hands to control input devices such as a keyboard. Fortunately, official guidelines exist for an alternative, and comparably reliable recording site at the arches of the feet (Boucsein et al. 2012; van Dooren et al. 2012). While this location might at first glance appear inconvenient when considering the “sweaty feet”, the feet’s propensity to produce sweat that is comparable to the sweat produced at the palms of the hands is precisely the reason why the feet are generally suggested as the next best alternative to the hands (see van Dooren et al. 2012). In our laboratory, we have repeatedly used specifically this recording site because it maximizes the freedom of movement of the hands when participants have to type. By resting their feet on a comfortable footstool, for example, most typing-induced movement artefacts can be completely avoided.

Apart from the recording site, interindividual differences between subjects are another important consideration. The potentially most worrisome issue relates to the finding that up to about 25 % of subjects can be classified as electrodermal non-responders (Venables and Mitchell 1996), i.e., people who never or only rarely show significant skin conductance responses. If only self-report and EDA are measured, this limitation naturally reduces cohesion with subjective report or text analyses, which can be obtained for all subjects. Interindividual differences are furthermore not limited to the case of non-responders. A substantial body of literature has shown that there are significant effects of basic demographic factors such as age, gender, and ethnicity on electrodermal activity (Boucsein 2012). One illustrative example in this context is the observation that self-report of arousal and electrodermal activity may increasingly diverge as people get older. Specifically, Gavazzeni et al. (2008) found that older adults rated the intensity of negative images higher than younger adults, whereas the level of electrodermal activity was reduced for the older adults. In another example study, Ketterer and Smith (1977) observed significant interactions between gender and electrodermal responses to music vs. a series of advertisement paragraphs (verbal stimulus). In this study, females responded more frequently than males to the verbal stimulus, whereas the reverse was found for the musical stimulus. For the measurement of online emotions, these findings imply that researchers should aim to obtain at least minimal demographic data on participants’ age, gender, ethnicity, and handedness. None of this means that the collection of EDA cannot contribute to an estimation, or a model, of subjects’ arousal. However, it serves to emphasize our point that EDA, unfortunately, cannot serve as a simple objective gold standard because it is itself known to be influenced by a large number of factors. Rather, additional measures might be used to fill in the blanks and control some of the variance.

How then might EDA, despite its deficiencies, still contribute to the evaluation of other measures? In an example related to the production of emotional texts we could, e.g., aim to test the assumption that females, on the basis of gender-based stereotypes about themselves, should care more about correctly and tactfully responding to a controversial negative online topic than males. To address such a question, a researcher might begin by repeatedly asking participants about their self-perceived arousal while they are composing forum posts in an experiment. However, as discussed above, self-report questions can only be asked at a limited frequency without creating too much interference with the ongoing task. This means that there will usually only be a few discrete points of measurement for self-report data. In many cases, researchers will even decide to have only a single moment of self-report measurement to minimize distractions during the task. EDA, however, can be measured continuously throughout the entire experiment.

At the same time, experimental design decisions can be used to make self-report data more useful even for questions pertaining to emotion dynamics. We could, for example, ask subjects about their emotional experience when they are starting to think about what they are going to write—and then ask them again after they have actually written it. This is, in fact, what we have done in a recent writing study with 59 right-handed participants (30 female, 29 male) in our laboratory (Küster et al. 2011). These participants were asked to write online forum posts while physiological measures such as EDA and EMG were recorded, and they were asked to intermittently report about their perceived emotional state (valence, arousal).

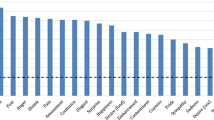

Fig. 5.2 Self-reported emotional arousal of male and female participants across different stages of composing online forum posts. Participants had to either reply to a positive vs. negative topic, or write the first post of a topic. The figure distinguishes intervals where participants were thinking about what to write and intervals where they were actually writing. Higher values on the 7-point scale represent greater intensity of self-rated arousal. Error bars denote standard error of the means

In our writing study (Fig. 5.2), it became evident that participants felt at least moderately activated by the writing task, and that female participants tended to report feeling slightly more excited than male participants—in particular when they had to compose an opening forum post on a negatively valenced topic. Likewise, when we showed the same group of participants a selection of emotional images from a set of standardized pictures taken from the International Affective Picture System (IAPS; Lang et al. 2008; Fig. 5.3), female participants generally reported somewhat higher subjective arousal than males. In comparison to the writing task, only the most extreme negative images used in this study reached roughly the same level of arousal (see the rightmost three stimuli in Fig. 5.3: A duck dying from an oil spill; a painful dental operation; a gun pointed at the subject). This suggests that participants typically perceived the writing tasks as at least somewhat more activating than watching emotional images, and that females appeared generally more responsive to both tasks than male participants who may have “played it cool”.

Fig. 5.3 Self-reported emotional arousal of male and female participants in response to a standardized set of frequently used emotional images (IAPS; Lang et al. 2008). Higher values on the 7-point scale represent greater intensity of self-rated arousal. Error bars denote standard error of the means

Next, we turn to the electrodermal activity shown by the same group of participants while looking at the images, and while engaging in the online writing task (Fig. 5.4). Here, a rather complex pattern can be observed. At first glance, engaging in the different stages of the writing task appears to have elicited a generally higher level of arousal than looking at emotional images. This suggests a certain level of cohesion that could also be observed when EDA at the feet was correlated with self-reported arousal in response to the images. Depending on gender (male vs. female) and location of measurement (left foot vs. right foot), these correlations were of medium (r = 0.44; female left foot) to large (r = 0.60; male right foot) magnitude, suggesting a substantial albeit not perfect level of cohesion between both measures for a standard picture viewing task.
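As a reminder of what such cohesion coefficients express, the following minimal sketch computes a Pearson correlation between paired self-report and EDA values. The function names and the example numbers are hypothetical, and the verbal labels follow Cohen's widely used conventions (about .10 small, .30 medium, .50 large), which is an assumption about how "medium" and "large" are meant here.

```python
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two paired samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must be paired and contain at least two values")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / sqrt(sxx * syy)


def cohen_label(r: float) -> str:
    """Verbal label for |r| following Cohen's conventions (an assumption)."""
    size = abs(r)
    if size >= 0.5:
        return "large"
    if size >= 0.3:
        return "medium"
    if size >= 0.1:
        return "small"
    return "negligible"


# Hypothetical per-image values: self-rated arousal (1-7) and EDA change.
self_report = [1, 2, 3, 4]
eda_change = [1, 3, 2, 4]
r = pearson_r(self_report, eda_change)
print(round(r, 2), cohen_label(r))
```

In practice such coefficients would be computed separately for each subgroup and recording site, as in the gender-by-foot breakdown reported above.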

Fig. 5.4 Electrodermal activity of 59 participants (30 female, 29 male) expressed as a difference to the baseline activity level across different stages of composing online forum posts. Male and female participants had to either reply to a positive vs. negative topic, or write the first post of a topic. The figure distinguishes intervals where participants were thinking about what to write, intervals where they were actually writing, and intervals where they were merely looking at a set of widely used emotional images. Error bars denote standard error of the means

On closer inspection of the data of the writing study, however, it appears that there was substantially more variance in the levels of EDA across the different phases of the task, and between both genders. Thus, while female participants appeared to have been subjectively more excited, this was not evident from the level of electrodermal activity. We can interpret this result in the context of earlier findings on gender differences in tonic EDA (Ketterer and Smith 1977) discussed above; however, it cannot be taken at face value. Rather, we should remind ourselves of the issue of timing of episodic emotional self-reports discussed, among others, by Mauss and Robinson (2009). In the case of standardized image presentation, such as the intervals of 6 s per image that have been customary and time-tested in a large volume of psychophysiological research, the object of the emotional self-report is quite clearly defined in the mind of participants. For a complex writing task spanning minutes, however, the precise object of the rating may have been much less clear. At this point, we might wonder whether participants actually report some sort of mean of their emotional experience during the writing task that would be equivalent to an averaged level of electrodermal activity, or whether they rather focus on peak experiences at the beginning or end of a task. While research from other areas of psychology suggests the latter, this is a question about the emotion dynamics of emotional writing that would likely best be studied with the aid of continuous measures.
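The difference between an averaged level and a peak-focused summary can be made concrete with a small sketch. It assumes a continuous EDA trace for one task phase and a single baseline level for the same participant; the function name, the example values, and the simple averaging of peak and end are illustrative assumptions, not the analysis used in the writing study.

```python
from typing import Dict, Sequence


def summarize_trace(trace: Sequence[float], baseline: float) -> Dict[str, float]:
    """Summarize a baseline-corrected trace in several candidate ways.

    "mean" corresponds to an averaged activity level across the phase,
    whereas "peak", "end", and "peak_end" illustrate summaries that
    weight salient moments more heavily than the rest of the phase.
    """
    if not trace:
        raise ValueError("trace must contain at least one sample")
    corrected = [value - baseline for value in trace]
    peak = max(corrected)
    end = corrected[-1]
    return {
        "mean": sum(corrected) / len(corrected),
        "peak": peak,
        "end": end,
        "peak_end": (peak + end) / 2,  # assumed simple average of both
    }


# Hypothetical phase: conductance rises early and settles before the rating.
print(summarize_trace([2.0, 2.5, 3.0, 2.5], baseline=2.0))
```

If self-reports tracked the peak-focused summaries more closely than the mean, this would point toward participants rating salient moments rather than the phase as a whole, which is the open question raised above.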

In the writing study, thinking and writing phases were experimentally separated even though they may usually take place nearly simultaneously in everyday life. The advantage was that another point of self-report could be gained. In addition, further tentative conclusions could be drawn. First, it appears that pondering what to write online may often be associated with a greater intensity of emotional excitement (arousal) than the actual act of writing itself. Second, some of the results suggest that males may have benefitted less from the writing activity than females. That is, at least in some cases, females may have enjoyed a greater post-writing relaxation effect than males. Some of these theoretically more interesting findings would require further research and replication. However, for the present purposes, they serve as an illustration of both the potential and the limitations of adding physiological measures such as EDA to the mix of measures of online emotions. Psychophysiological measures provide the study of cyberemotions with tools to address the complexity of dynamically unfolding online emotions. At the same time, they force researchers to deal with a greater level of complexity that brings along its own problems and potential confounds as part of the deal.

The interpretation of EDA as a sign of arousal is based on the notion that relevant stimuli trigger a response of the sympathetic branch of the autonomic nervous system. For completeness' sake, it should be mentioned that here, too, there is potentially an alternative to EDA assessment. For example, pupil dilation is associated with arousal in a very similar way (Bradley et al. 2008). The problem is that pupil dilation also depends on the brightness of the visual object the eye is focusing on. This makes it very difficult to use this measure when the stimuli (e.g., web sites) are quite heterogeneous with regard to brightness and contrast. Cardiac correlates of sympathetic arousal, such as the pre-ejection period (PEP) of the heart, require rather complicated recording set-ups. This means that EDA, even if recorded at the feet, is the best available measure of arousal in the context of cyberemotions (for a recent study employing both PEP and EDA, see Kreibig et al. 2013).

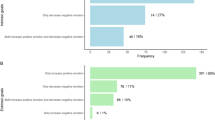

In the CYBEREMOTIONS project, as discussed above, data collected in the laboratory has been used to validate sentiment classifiers, as well as for the validation and development of models of emotion dynamics. To give a more concrete example of how this kind of data might be used within a modeling context, we will conclude this section with a brief look at the role of empirical data in an agent-based framework such as the models for online emotions developed at ETH Zurich (see Chap. 10). First, the validity of a model can be tested by simulations of the behavior of agents driven by the parameters and equations used in a model. For example, a simulation of 100,000 agents per type of thread was used at ETH Zurich for comparison with the subjective report data collected at our laboratory (Fig. 5.5). Even though certain differences between the empirical data and the model simulations might still remain, this strategy can lead to a good level of agreement. Second, validated models, such as the ETH framework, can be used to study emotion dynamics such as those evident in the facial EMG and EDA. E.g., one of the central assumptions of the ETH model has been that arousal drives user participation in online discussions: Such assumptions can be tested at more than one level when data from both subjective report and physiological response parameters are considered.

Fig. 5.5 Comparative distributions of subjective report data from real participants reading threads in our laboratory (light bars) vs. simulated agents based on the parameters and models developed at ETH Zurich (dark bars). Valence distributions are shown in the top row, arousal distributions in the bottom row
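One simple way to quantify the agreement between an empirical and a simulated rating distribution is the overlap of their normalized histograms. The sketch below is a generic illustration under stated assumptions: it is not the comparison procedure used at ETH Zurich, the 7-point scale for these ratings is assumed, and the function names and example ratings are hypothetical.

```python
from collections import Counter
from typing import List, Sequence


def rating_distribution(
    ratings: Sequence[int],
    scale: Sequence[int] = tuple(range(1, 8)),  # assumed 7-point scale
) -> List[float]:
    """Proportion of ratings falling on each point of the scale."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    if any(r not in scale for r in ratings):
        raise ValueError("all ratings must lie on the scale")
    counts = Counter(ratings)
    return [counts.get(point, 0) / len(ratings) for point in scale]


def distribution_overlap(p: Sequence[float], q: Sequence[float]) -> float:
    """Shared probability mass of two distributions (1.0 means identical)."""
    if len(p) != len(q):
        raise ValueError("distributions must use the same scale")
    return sum(min(a, b) for a, b in zip(p, q))


# Hypothetical arousal ratings from participants and from simulated agents.
empirical = rating_distribution([1, 1, 2, 7])
simulated = rating_distribution([1, 2, 2, 7])
print(distribution_overlap(empirical, simulated))
```

An overlap close to 1 would indicate that the simulated agents reproduce the shape of the participants' rating distribution; it says nothing by itself about whether the underlying dynamics match.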

4 Conclusions

In this chapter, we have argued for a multi-level approach in the study of cyberemotions that combines self-report data with information from other levels of emotional processing such as bodily responses. We have discussed strengths and limitations of a selection of indicators, with a particular focus on emotional self-report, facial electromyography, and electrodermal activity. Emotional self-report remains an important cornerstone in the study of online emotions that ties directly into sentiment analysis and the computational treatment of expressions of emotional states in written text (Chap. 6). However, to date neither self-report nor any other individual measure has been shown to reliably identify any of the other components of the emotional response by a single criterion. In some cases, computational sentiment analysis corresponds surprisingly well with emotional self-report, and this is quite encouraging for further research that is based on software such as SentiStrength (see also Chap. 6). However, self-report often correlates only weakly with other emotion measures. From a psychophysiological perspective, this is not very surprising because measures aiming at different levels of emotional functioning are understood to measure different, albeit partially overlapping, aspects of emotional processing.

We have argued that psychophysiological measures such as facial EMG or EDA can make a valuable contribution to research on cyberemotions. This applies to the continued need for basic research in the laboratory as well as the future application of large-scale remote measurement devices. It is tempting to think of these types of objective measures as a solution to the problem of obtaining a simple and continuous readout of the private emotional states of online participants. However, while each of these measures can be informative about different aspects of emotions, we emphasize that they cannot provide a readout of emotional feelings as such. That said, some physiological measures, such as a relaxation of the Corrugator Supercilii muscles associated with frowning, have been identified that correlate fairly reliably with perceived emotional valence. Nevertheless, we argue that psychophysiological measures can often be more useful if they are not primarily intended as physiological markers for emotional feeling states. As we have discussed for the example of electrodermal activity during the composition of online forum posts, an important strength of physiological measures is that emotional responses can be recorded continuously without participants having to pay special attention to their ongoing emotional feeling states. This often allows a more meaningful interpretation of the associated subjective data as well as conclusions about the dynamics of emotions while participants are engaged with an emotional online task. In the context of the interdisciplinary project from which this book emerged, we have seen how physiological data and subjective report can be used to validate and improve agent-based models (see Chap. 10).

The lack of cohesion that we have discussed throughout this chapter has, in some ways, more complex implications than the technical limitations it appears to impose upon the predictive value of any one measure of emotion. Is this entirely a matter of imprecise measurement instruments, movement artefacts, placement of electrodes, or social desirability? It appears likely that certain issues of cohesion can be reduced, or even eliminated, by technical improvements on the side of recording sensors. However, we have to keep in mind that participants’ self-report about their emotional feelings is itself likely to be influenced by bodily states (see Allen et al. 2001; Strack et al. 1988). Humans certainly do not perceive bodily responses such as facial expressions, sweating, or heartbeats in quite the same way as electrodes and amplifiers do. And yet, bodily sensations clearly play an important role in how we experience, report, and think about emotions. For example, intercultural research has shown that people from very different cultures like Belgium, Mexico, and Indonesia associate emotions with bodily sensations, such as feeling the heart beating faster (Breugelmans et al. 2005). Most people nevertheless have surprising difficulty correctly discriminating and monitoring their own heartbeats, unless trained (Katkin et al. 1982; Wiens et al. 2000).

While our main focus in this chapter has been on measures of emotion that have become established in experimental psychology and psychophysiology, it should also be noted that sentiment analysis (Chap. 7), the emerging cornerstone of such a large proportion of research on cyberemotions, still occupies a niche role in conventional emotion research. This is surprising given the potential of this measure, yet perhaps more understandable as an example of the interdisciplinary boundaries that have to be overcome in this field. An exception has been the recent development of the LIWC (Linguistic Inquiry and Word Count) by Pennebaker and colleagues (e.g., Pennebaker et al. 2007) and their previous work on therapeutic writing (e.g., Pennebaker 1993) that has captured sustained interest from clinical and social psychologists. Thus, there has been a clinical interest in text analyses involving therapeutic essays and longitudinal studies (Danner et al. 2001) but surprisingly little basic research involving these measures in the psychological laboratory. We by no means intend to downplay the partially still untapped potential of automated text analyses for emotion research. However, this exciting new measure is presented in more detail in the next chapter of this volume, and we will instead focus on a few more general issues, as well as examples of widely used measures in psychology laboratories.

Ideally, smart affective sensors and dynamic emotion models would be able to interpret on the fly how emotional responses from different levels of measurement interact to produce distinct emotional states. In addition, they would be context-sensitive. Some progress on the context-sensitivity capabilities of automated systems has already been made throughout the lifetime of the CYBEREMOTIONS project (Thelwall et al. 2013; cf. Chap. 7). However, to truly understand intriguing aspects of emotions, such as the interplay of subjective experience and bodily responses, future models would have to develop and test further assumptions on what aspects of bodily activation directly or indirectly influence other components, such as subjective experience. While some of these questions have been of interest to psychologists and psychophysiologists since the days of William James, it is only recently that some of the more complicated issues and their inherent complexity can be addressed by computational modeling. Clearly, further research is needed here. One outcome of this process may eventually be better physiological markers for subjective emotional states. However, as we have argued throughout this chapter, researchers have to be prepared for the complexity of this endeavor. For this purpose, we have to be aware that there still is no gold standard, and that emotions involve the entire body, rather than “just feelings”.

References

Allen, J.J.B., Harmon-Jones, E., Cavender, J.H.: Manipulation of frontal EEG asymmetry through biofeedback alters self-reported emotional responses and facial EMG. Psychophysiology 38 (4), 685–693 (2001). doi:10.1111/1469-8986.3840685

Alvarez, R., García, D., Moreno, Y., Schweitzer, F.: Sentiment cascades in the 15M movement. EPJ Data Sci. 4 (1), 1–13 (2015). doi:10.1140/epjds/s13688-015-0042-4

Andreassi, J.L.: Psychophysiology: Human Behavior and Physiological Response, 5th edn. Erlbaum, Mahwah, NJ (2007)

Barrett, L.F.: Feelings or words? Understanding the content in self-report ratings of experienced emotion. J. Pers. Soc. Psychol. 87 (2), 266–281 (2004). doi:10.1037/0022-3514.87.2.266

Barrett, L.F., Russell, J.A.: The structure of current affect: controversies and emerging consensus. Curr. Dir. Psychol. Sci. 8 (1), 10–14 (1999). doi:10.1111/1467-8721.00003

Barrett, L.F., Robin, L., Pietromonaco, P.R., Eyssell, K.M.: Are women the “more emotional” sex? Evidence from emotional experiences in social context. Cognit. Emot. 12 (4), 555–578 (1998). doi:10.1080/026999398379565

Boucsein, W.: Electrodermal Activity. Springer, New York (2012). doi:10.1007/978-1-4614-1126-0

Boucsein, W., Fowles, D.C., Grimnes, S., Ben-Shakhar, G., Roth, W.T., Dawson, M.E., Filion, D.L.: Publication recommendations for electrodermal measurements. Psychophysiology 49 (8), 1017–1034 (2012). doi:10.1111/j.1469-8986.2012.01384.x

Bradley, M.M., Codispoti, M., Cuthbert, B.N., Lang, P.J.: Emotion and motivation I: defensive and appetitive reactions in picture processing. Emotion 1 (3), 276–298 (2001). doi:10.1037//1528-3542.1.3.276

Bradley, M.M., Miccoli, L., Escrig, M.A., Lang, P.J.: The pupil as a measure of emotional arousal and autonomic activation. Psychophysiology 45 (4), 602–607 (2008). doi:10.1111/j.1469-8986.2008.00654.x

Breugelmans, S.M., Poortinga, Y.H., Ambadar, Z., Setiadi, B., Vaca, J.B., Widiyanto, P.: Body sensations associated with emotions in Rarámuri Indians, rural Javanese, and three student samples. Emotion 5 (2), 166–174 (2005). doi:10.1037/1528-3542.5.2.166

Brown, S.-L., Schwartz, G.E.: Relationships between facial electromyography and subjective experience during affective imagery. Biol. Psychol. 11 (1), 49–62 (1980). doi:10.1016/0301-0511(80)90026-5

Cacioppo, J.T., Berntson, G.G., Crites, S.L. Jr.: Social neuroscience: principles of psychophysiological arousal and response. In: Higgins, E.T., Kruglanski, A.W. (eds.) Social Psychology: Handbook of Basic Principles, pp. 72–101. Guilford, New York (1996)

Cacioppo, J.T., Tassinary, L.G., Berntson, G.G.: Psychophysiological science. In: Cacioppo, J.T., Tassinary, L.G., Berntson, G.G. (eds.) Handbook of Psychophysiology, 2nd edn., pp. 3–23. Cambridge University Press, Cambridge (2000)

Cacioppo, J.T., Tassinary, L.G., Berntson, G.G.: Handbook of Psychophysiology, 3rd edn. Cambridge University Press, Cambridge (2007)

Chmiel, A., Sienkiewicz, J., Thelwall, M., Paltoglou, G., Buckley, K., Kappas, A., Hołyst, J.A.: Collective emotions online and their influence on community life. PLoS ONE 6 (7), e22207 (2011). doi:10.1371/journal.pone.0022207

Danner, D.D., Snowdon, D.A., Friesen, W.V.: Positive emotions in early life and longevity: findings from the nun study. J. Pers. Soc. Psychol. 80 (5), 804–813 (2001). doi:10.1037/0022-3514.80.5.804

Darwin, C.: The Expression of the Emotions in Man and Animals. Digireads.com, Stilwell, KS (2005) (Original work published 1872)

Dawson, M.E., Schell, A.M., Filion, D.L.: The electrodermal system. In: Cacioppo, J.T., Tassinary, L.G., Berntson, G.G. (eds.) Handbook of Psychophysiology, 2nd edn., pp. 200–223. Cambridge University Press, Cambridge (2000)

Ekman, P., Friesen, W.V.: The Facial Action Coding System. Consulting Psychologists Press, Palo Alto, CA (1978)

Fridlund, A.J.: Sociality of solitary smiling: potentiation by an implicit audience. J. Pers. Soc. Psychol. 60 (2), 229–240 (1991). doi:10.1037/0022-3514.60.2.229

Fridlund, A.J.: Human Facial Expression: An Evolutionary View. Academic, San Diego, CA (1994)

Fridlund, A.J., Cacioppo, J.T.: Guidelines for human electromyographic research. Psychophysiology 23 (5), 567–589 (1986). doi:10.1111/j.1469-8986.1986.tb00676.x

Garas, A., García, D., Skowron, M., Schweitzer, F.: Emotional persistence in online chatting communities. Sci. Rep. 2, 402 (2012). doi:10.1038/srep00402

Gavazzeni, J., Wiens, S., Fischer, H.: Age effects to negative arousal differ for self-report and electrodermal activity. Psychophysiology 45 (1), 148–151 (2008). doi:10.1111/j.1469-8986.2007.00596.x

Golder, S.A., Macy, M.W.: Diurnal and seasonal mood vary with work, sleep, and daylength across diverse cultures. Science 333 (6051), 1878 (2011). doi:10.1126/science.1202775

Hollenstein, T., Lanteigne, D.: Models and methods of emotional concordance. Biol. Psychol. 98, 1–5 (2014). doi:10.1016/j.biopsycho.2013.12.012

Ioannou, S., Ebisch, S., Aureli, T., Bafunno, D., Ioannides, H.A., Cardone, D., Manini, B., Romani, G.L., Gallese, V., Merla, A.: The autonomic signature of guilt in children: a thermal infrared imaging study. PLoS ONE 8 (11), e79440 (2013). doi:10.1371/journal.pone.0079440

Jarlier, S., Grandjean, D., Delplanque, S., N’Diaye, K., Cayeux, I., Velazco, M.I., Sander, D., Vuilleumier, P., Scherer, K.R.: Thermal analysis of facial muscles contractions. IEEE Trans. Affect. Comput. 2 (1), 2–9 (2011). doi:10.1109/T-AFFC.2011.3

Kappas, A.: What facial activity can and cannot tell us about emotions. In: Katsikitis, M. (ed.) The Human Face: Measurement and Meaning, pp. 215–234. Kluwer, Dordrecht (2003)

Kappas, A.: Smile when you read this, whether you like it or not: conceptual challenges to affect detection. IEEE Trans. Affect. Comput. 1 (1), 38–41 (2010). doi:10.1109/T-AFFC.2010.6

Kappas, A., Küster, D., Theunis, M., Tsankova, E.: Cyberemotions: subjective and physiological responses to reading online discussion forums. Poster Presented at the 50th Annual Meeting of the Society for Psychophysiological Research, Portland, OR (2010)

Kappas, A., Krumhuber, E., Küster, D.: Facial behavior. In: Hall, J.A., Knapp, M.L. (eds.) Nonverbal Communication, pp. 131–166. de Gruyter/Mouton, Berlin, New York (2013)

Katkin, E.S., Morell, M.A., Goldband, S., Bernstein, G.L., Wise, J.A.: Individual differences in heartbeat discrimination. Psychophysiology 19 (2), 160–166 (1982). doi:10.1111/j.1469-8986.1982.tb02538.x

Ketterer, M.W., Smith, B.D.: Bilateral electrodermal activity, lateralized cerebral processing and sex. Psychophysiology 14 (6), 513–516 (1977). doi:10.1111/j.1469-8986.1977.tb01190.x

Kreibig, S.D., Samson, A.C., Gross, J.J.: The psychophysiology of mixed emotional states. Psychophysiology 50 (8), 799–811 (2013). doi:10.1111/psyp.12064

Küster, D., Kappas, A.: Measuring emotions in individuals and internet communities. In: Benski, T., Fisher, E. (eds.) Internet and Emotions, pp. 48–61. Routledge (2013)

Küster, D., Kappas, A., Theunis, M., Tsankova, E.: Cyberemotions: subjective and physiological responses elicited by contributing to online discussion forums. Poster Presented at the 51st Annual Meeting of the Society for Psychophysiological Research, Boston, MA (2011)

Lang, P.J., Greenwald, M.K., Bradley, M.M., Hamm, A.O.: Looking at pictures: affective, facial, visceral, and behavioral reactions. Psychophysiology 30 (3), 261–273 (1993). doi:10.1111/j.1469-8986.1993.tb03352.x

Lang, P.J., Bradley, M.M., Cuthbert, B.N.: International affective picture system (IAPS): affective ratings of pictures and instruction manual. Technical Report A-8. University of Florida, Gainesville, FL (2008)

Larsen, J.T., Norris, C.J., Cacioppo, J.T.: Effects of positive and negative affect on electromyographic activity over zygomaticus major and corrugator supercilii. Psychophysiology 40 (5), 776–785 (2003). doi:10.1111/1469-8986.00078

Larsen, J.T., Berntson, G.G., Poehlmann, K.M., Ito, T.A., Cacioppo, J.T.: The psychophysiology of emotion. In: Lewis, M., Haviland-Jones, J.M., Barrett, L.F. (eds.) The Handbook of Emotions, 3rd edn., pp. 180–195. Guilford, New York (2008)

Mauss, I.B., Robinson, M.D.: Measures of emotion: a review. Cognit. Emot. 23 (2), 209–237 (2009). doi:10.1080/02699930802204677

Mayer-Schönberger, V., Cukier, K.: Big Data: A Revolution That Will Transform How We Live, Work, and Think. Houghton Mifflin Harcourt, New York (2013)

Paltoglou, G., Theunis, M., Kappas, A., Thelwall, M.: Predicting emotional responses to long informal text. IEEE Trans. Affect. Comput. 4 (1), 107–115 (2013). doi:10.1109/T-AFFC.2012.26

Paolini, D., Alparone, F.R., Cardone, D., van Beest, I., Merla, A.: “The face of ostracism”: the impact of the social categorization on the thermal facial responses of the target and the observer. Acta Psychol. 163, 65–73 (2016). doi:10.1016/j.actpsy.2015.11.001

Paulhus, D.L.: Measurement and control of response bias. In: Robinson, J.P., Shaver, P.R., Wrightsman, L.S. (eds.) Measures of Personality and Social Psychological Attitudes. Academic, San Diego, CA (1991)

Paulhus, D.L.: Socially desirable responding: the evolution of a construct. In: Braun, H.I., Jackson, D.N., Wiley, D.E. (eds.) The Role of Constructs in Psychological and Educational Measurement, pp. 49–69. Erlbaum, Mahwah, NJ (2002)

Pennebaker, J.W.: Putting stress into words: health, linguistic and therapeutic implications. Behav. Res. Ther. 31 (6), 539–548 (1993). doi:10.1016/0005-7967(93)90105-4

Pennebaker, J.W., Booth, R.J., Francis, M.E.: Linguistic Inquiry and Word Count: LIWC 2007. LIWC, Austin, TX (2007). www.liwc.net

Picard, R.W.: Emotion research by the people, for the people. Emot. Rev. 2 (3), 250–254 (2010). doi:10.1177/1754073910364256

Poh, M.-Z., McDuff, D.J., Picard, R.W.: Advancements in noncontact, multiparameter physiological measurements using a webcam. IEEE Trans. Biomed. Eng. 58 (1), 7–11 (2011). doi:10.1109/TBME.2010.2086456

Ravaja, N.: Contributions of psychophysiology to media research: review and recommendations. Media Psychol. 6 (2), 193–235 (2004). doi:10.1207/s1532785xmep0602_4

Robinson, M.D., Clore, G.L.: Episodic and semantic knowledge in emotional self-report: evidence for two judgment processes. J. Pers. Soc. Psychol. 83 (1), 198–215 (2002a). doi:10.1037//0022-3514.83.1.198

Robinson, M.D., Clore, G.L.: Belief and feeling: evidence for an accessibility model of emotional self-report. Psychol. Bull. 128 (6), 934–960 (2002b). doi:10.1037//0033-2909.128.6.934

Russell, J.A.: A circumplex model of affect. J. Pers. Soc. Psychol. 39 (6), 1161–1178 (1980). doi:10.1037/h0077714

Russell, J.A.: Core affect and the psychological construction of emotion. Psychol. Rev. 110 (1), 145–172 (2003). doi:10.1037/0033-295X.110.1.145

Russell, J.A.: Emotion, core affect, and psychological construction. Cognit. Emot. 23 (7), 1259–1283 (2009). doi:10.1080/02699930902809375

Scherer, K.R.: On the nature and function of emotion: a component process approach. In: Scherer, K.R., Ekman, P. (eds.) Approaches to Emotion, pp. 293–317. Lawrence Erlbaum Associates, Hillsdale, NJ (1984)

Scherer, K.R.: The dynamic architecture of emotion: evidence for the component process model. Cognit. Emot. 23 (7), 1307–1351 (2009). doi:10.1080/02699930902928969

Strack, F., Martin, L.L., Stepper, S.: Inhibiting and facilitating conditions of the human smile: a nonobtrusive test of the facial feedback hypothesis. J. Pers. Soc. Psychol. 54 (5), 768–777 (1988). doi:10.1037/0022-3514.54.5.768

Tanase, D., Garcia, D., Garas, A., Schweitzer, F.: Emotions and activity profiles of influential users in product reviews communities. Front. Phys. 3, 87 (2015). doi:10.3389/fphy.2015.00087

Tassinary, L.G., Cacioppo, J.T.: The skeletomotor system: surface electromyography. In: Cacioppo, J.T., Tassinary, L.G., Berntson, G.G. (eds.) Handbook of Psychophysiology, 2nd edn., pp. 3–23. Cambridge University Press, Cambridge (2000)

Thelwall, M., Buckley, K., Paltoglou, G., Cai, D., Kappas, A.: Sentiment strength detection in short informal text. J. Am. Soc. Inf. Sci. Technol. 61 (12), 2544–2558 (2010). doi:10.1002/asi.21416

Thelwall, M., Buckley, K., Paltoglou, G., Skowron, M., García, D., Gobron, S., Ahn, J., Kappas, A., Küster, D., Hołyst, J.A.: Damping sentiment analysis in online communication: discussions, monologs and dialogs. In: Computational Linguistics and Intelligent Text Processing. Lecture Notes in Computer Science, vol. 7817, pp. 1–12 (2013). doi:10.1007/978-3-642-37256-8_1

van Boxtel, A.: Facial EMG as a tool for inferring affective states. In: Spink, A.J., Grieco, F., Krips, O.E., Loijens, L.W.W., Noldus, L.P.J.J., Zimmerman, P.H. (eds.) Proceedings of Measuring Behavior 2010, pp. 104–108. Eindhoven, The Netherlands (2010). Retrieved from Measuring Behavior 2012. http://www.measuringbehavior.org/files/ProceedingsPDF(website)/Boxtel_Symposium6.4.pdf

van Dooren, M., de Vries, J.J.G., Janssen, J.H.: Emotional sweating across the body: comparing 16 different skin conductance measurement locations. Physiol. Behav. 106, 298–304 (2012). doi:10.1016/j.physbeh.2012.01.020

Venables, P.H., Mitchell, D.A.: The effects of age, sex and time of testing on skin conductance activity. Biol. Psychol. 43 (2), 87–101 (1996). doi:10.1016/0301-0511(96)05183-6

Wallin, B.G.: Sympathetic nerve activity underlying electrodermal and cardiovascular reactions in man. Psychophysiology 18 (4), 470–476 (1981). doi:10.1111/j.1469-8986.1981.tb02483.x

Wiens, S., Mezzacappa, E.S., Katkin, E.S.: Heartbeat detection and the experience of emotions. Cognit. Emot. 14 (3), 417–427 (2000). doi:10.1080/026999300378905

Yik, M., Russell, J.A., Steiger, J.H.: A 12-point circumplex structure of core affect. Emotion 11 (4), 705–731 (2011). doi:10.1037/a0023980

© 2017 Springer International Publishing Switzerland

Cite this chapter

Küster, D., Kappas, A. (2017). Measuring Emotions Online: Expression and Physiology. In: Holyst, J. (eds) Cyberemotions. Understanding Complex Systems. Springer, Cham. https://doi.org/10.1007/978-3-319-43639-5_5

DOI: https://doi.org/10.1007/978-3-319-43639-5_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-43637-1

Online ISBN: 978-3-319-43639-5

eBook Packages: Physics and Astronomy, Physics and Astronomy (R0)