Abstract

Sparse subspace clustering is a well-known algorithm, and it is widely used in many research field nowadays, and a lot effort has been contributed to improve it. In this paper, we propose a novel approach to obtain the coefficient matrix. Compared with traditional sparse subspace clustering (SSC) approaches, the key advantage of our approach is that it provides a new perspective of the self-expressive property. We call it rigidly self-expressive (RSE) property. This new formulation captures the rigidly self-expressive property of the data points in the same subspace, and provides a new formulation for sparse subspace clustering. Extensions to traditional SSC could also be cooperating with this new formulation. We present a first-order algorithm to solve the nonconvex optimization, and further prove that it converges to a KKT point of the nonconvex problem under certain standard assumptions. Extensive experiments on the Extended Yale B dataset, the USPS digital images dataset, and the Columbia Object Image Library shows that for images with up to 30 % missing pixels the clustering quality achieved by our approach outperforms the original SSC.

L. Qiao—The work was partially supported by the National Natural Science Foundation of China under Grant No. 61303264 and Grant No. 61202482.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Subspace clustering naturally arises with the emergence of high-dimensional data. It refers to the problem of finding multiple low-dimensional subspaces underlying a collection of data points sampled from a high-dimensional space and simultaneously partitioning these data into subspaces. Making use of the low intrinsic dimension of input data and the assumption of data lying in a union of linear or affine subspaces, subspace clustering assigns each data point to a subspace, in which data points residing in the same subspace belong to the same cluster. Subspace clustering has been applied to various areas such as computer vision [11], signal processing [16], and bioinformatics [13]. In the past two decades, numerous algorithms to subspace clustering have been proposed, including K-plane [3], GPCA [24], Spectral Curvature Clustering [4], Low Rank Representation (LRR) [15], Sparse Subspace Clustering (SSC) [7], etc. Among these algorithms, the recent work of Sparse Subspace Clustering (SSC) has been recognized to enjoy promising empirical performance.

In this paper, we propose a novel approach beyond SSC to obtain the coefficient matrix. Compared with the approaches mentioned above, the key advantage of our approach is that it provides a new perspective of the self-expressive property. We call it rigidly self-expressive (RSE) property. The model that we build for subspace clustering incorporates rigidly self-expressive property to obtain the coefficient matrix. This formulation generalizes traditional SSC, and captures the expressive property of the data points in the same subspace. We present a first-order algorithm to solve the nonconvex optimization, and further prove that it converges to a KKT point of the nonconvex problem under certain standard assumptions. Extensive experiments on the Extended Yale B dataset [14], the USPS digital images dataset [12], and the Columbia Object Image Library (COIL20) [17] show that for images with up to 30 % missing pixels the clustering quality achieved by our approach outperforms the original SSC.

2 Sparse Subspace Clustering

Assume there are N data points \(y_i \in \mathbb R^{d_i}, i=1,\cdots ,N\) lying in the union of the linear or affine subspaces \(S_i,i=1,...,n\), each of dimension \(d_i \le M\) for \(i = 1,\cdots ,n\). The observed data is reshaped as an matrix with each data point as one column in it

where \(Y \in \mathbb R^{M \times N}\) with each data point as one column of the matrix and \(\varGamma \in \mathbb R^{N\times N}\) is an unknown permutation matrix. The subspace clustering focus on the number of subspaces and the membership of each data point to its corresponding subspace.

The SSC algorithm [8] assumes the data can be sparsely represented by the data in a union of subspaces, which is called self-expressive. Based on the observation, we can write each data point as

where \(c_i=[c_{i1} \; c_{i2} \; \cdots \; c_{iN}]\) and the constraint \(c_{ii} = 0\) eliminates the trivial solution of writing a point as a linear combination of itself. When the data points are well distributed inside each subspace, the relationship among the data points is theoretically analyzed in [9], which formulate the problem as

where \(C=[c_1 \; c_2 \; \cdots \; c_N] \in \mathbb R^{N \times N}\) is the matrix whose i-th column corresponds to the sparse representation of \(y_i,c_i\), and \(\text {diag}(C) \in \mathbb R^N\) is the vector of the diagonal elements of C.

Considering there are outliers and noise in real world data, the model is extended as a more practical one

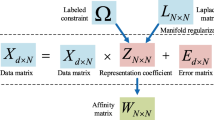

where E denotes the matrix form of outlying entries which has only a few large non-zero elements, and Z denotes the matrix form of noise,the formulation (3) is extended to handle the real-world problems with considering

In the formulation, \(Y \in R^{M\times N}\) is the original data set without any noise and outliers, Z and E correspond to noise and sparse outliers respectively, \(\lambda _e>0\), \(\lambda _z>0\) are two trade-off parameters balancing the three terms in the objective function, and the diag(C) is a vector whose elements are the diagonal entries of the matrix C.

For the case that the data corrupted by outliers, Candes et al. [22, 23] give geometric insights which show that the method would succeed when the dataset is corrupted with possibly overwhelmingly many outliers. For the case that the data is corrupted by noise Z Wang and Xu [25] show that when the amount of noise is small enough, the subspaces are sufficiently separated, and the data are well distributed, the matrix of coefficients gives the correct clustering with high probability.

3 Rigidly Self-Expressive SSC

The sparse subspace clustering algorithm mainly takes advantage of the self-expressiveness property of the data. Specifically speaking, each data point in a union of subspaces can be efficiently expressed as a combination of other points in the same dataset.

However, in many real-world problems, data are always corrupted by noise and sparse outlying entries at the same time due to measurement noise or data collection techniques. For instance, in clustering of human faces, images can be corrupted by errors due to speculation, cast shadow, and occlusion [26]. Similarly, in the motion segmentation problem, because of the malfunctioning of the tracker, feature trajectories can be corrupted by noise or can have entries with large errors [28]. In such cases, the data do not lie perfectly in a union of subspaces, which means that the noise and outlier free data \(\hat{Y}\) does not exactly lie in the union of the subspaces of observed data Y, and the observed data points Y lie in a space contaminated with outlier E and noise Z. Considering the self-expressiveness in the formulation (5), it is not appropriate to use the linear combination YC to represent the data points \(\hat{Y}\).

In this paper we improve the SSC formulation in [9], and present a rigidly self-expressive formulation, named Rigidly Self-Expressive Sparse Subspace Clustering (RSE-SSC), using a sparse linear combination \(\hat{Y}C\) of the original subspaces to represent the original data points \(\hat{Y}\) without noise and outliers, and the observed data set is expressed as the sum of the original data set and noise and outliers. Here we consider the optimization problem with the same objective value function with the original SSC, but totally different constraints on data points. Letting \(\hat{Y}\) the data points lie in the original subspaces without outliers nor noise, and the constraints

are used to express the sparse representation. The observed data Y is naturally expressed as the sum of the exactly original data set and noise and outliers. And the appropriate sparse subspace clustering (RSE-SSC) is formulated as (7)

In the formulation, \(\hat{Y} \in R^{M\times N}\) is the original data set without any noise and outliers, Z and E correspond to noise and sparse outliers respectively, \(\lambda _e>0\), \(\lambda _z>0\) are two trade-off parameters balancing the three terms in the objective function, and the diag(C) is a vector whose elements are the diagonal entries of the matrix C.

The sparse coefficients again encode information about memberships of data to subspaces, which are used in a spectral clustering framework, as before. The algorithm for RSE-SSC is shown in the following section.

4 Solving the Sparse Optimization Problem

The proposed convex programs can be solved via generic convex solvers. However, the computational costs of generic solvers typically is high and these solvers do not scale well with the dimension and the number of data points. In this section, we study efficient implementations of the proposed sparse optimizations using an Alternating Direction Method of Multipliers (ADMM) method [1, 2]. We first consider the most general optimization problem

Utilizing the equality constraint \(Y = \hat{Y} + E + Z\), we can eliminate Z from the optimization problem (7) to generate a concise formulation without Z which is equivalent to solve the original optimization problem. The optimization problem is formulated as

where Z is eliminated from the optimization program, and there are fewer variables and this will cut down the computational cost in each iteration as each variable shall be updates in each iteration of ADMM.

However there is no simple way to update C in each iteration, in order to obtain efficient updates on the optimization variables, we introduce an auxiliary matrix \(A \in \mathbb R^{N\times N}\), and equivalently transform the optimization problem (9) to the optimization problem

It should be noted that the solution \((\hat{Y};C;E)\) of optimization problem (10) concides with the solution of optimization problem (9), also concides with the solution of optimization problem (7). As shown in the Algorithm 1, the updating of variable C is much simpler in each iteration. Following the ADMM framework, we add those two constraints \(\hat{Y} = \hat{Y}A\) and \(A = C - \text {diag}(C)\) of (10) as two penalty terms with parameter \(\rho >0\) to the objective function, and also introduce Lagrange multipliers for the two equality constraints, then we can write the augmented Lagrangian function of (10) as

where the matrix \(\varDelta _1, \varDelta _2 \in \mathbb R^{N \times N}\) is the Lagrange multipliers for the two equality constraints in (10), \(\text {tr}(\cdot )\) denotes the trace operator of a given matrix. The algorithm then iteratively updates the variables \(A, C, E, \hat{Y}\) and the Lagrange multipliers \(\varDelta _1, \varDelta _2\).

In the k-th iteration, we denote the variables by \(A^k\), \(C^k\), \(E^k\), \(\hat{Y}^k\), and the Lagrange multipliers by \(\varDelta _1^k\), \(\varDelta _2^k\). Given \(A^k\), \(C^k\), \(E^k\), \(\hat{Y}^k\),\(\varDelta _1^k\), \(\varDelta _2^k\), ADMM iterates as follows

Then we show how to solve the six subproblems in (12a), (12b), (12c), (12d), (12e) and (12f) in the ADMM algorithm. After all these subproblems solved, we will give the framework of the algorithm that summarize our ADMM algorithm for solving (10) in Algorithm 1.

These three steps are repeated until convergence is achieved or the number of iterations exceeds a maximum iteration number. The algorithm will stop when these conditions satisfied \(\Vert \hat{Y}A^k-\hat{Y}\Vert _F^2 \le \epsilon \),\(\Vert A^k-C^k\Vert _F^2 \le \epsilon \),\(\Vert A^k -A^{k-1}\Vert \le \epsilon \),\(\Vert C^k -C^{k-1}\Vert \le \epsilon \),\(\Vert E^k -E^{k-1}\Vert \le \epsilon \),\(\Vert \hat{Y}^k -\hat{Y}^{k-1}\Vert \le \epsilon \), where \(\epsilon \) denotes the error tolerance for the primal and dual residuals. In practice, the choice of = \(10^4\) works well in real experiments. In summary, Algorithm 1 shows the updates for the ADMM implementation of the optimization problem (10).

5 Convergence Analysis

Similar to [21, 27], in this section, we provide a convergence analysis for the proposed ADMM algorithm showing that under certain standard conditions, any limit point of the iteration sequence generated by Algorithm 1 is a KKT point of (10).

Theorem 1

Let \(X:=(\hat{Y},C,W,E)\) and \(\{X^k\}_{k=1}^{\infty }\) be generated by Algorithm 1. Assume that \(\{X^k\}_{k=1}^{\infty }\) is bounded and \(\lim _{k\rightarrow \infty }(X^{k+1}-X^k)=0\). Then any accumulation point of \(\{X^k\}_{k=1}^{\infty }\) is a KKT point of problem (10).

For ease of presentation, we define \(S_1:=\{C|\text {diag}(C)=0\}\), and use \(\mathcal {P}_{S}(\cdot )\) to denote the projection operator onto set S. It is easy to verify that the KKT conditions for (10) are:

where \(\partial f(x)\) is the subdifferential of function f at x.

Proof

Assume \(\hat{X}:=(\hat{Y},\hat{C},\hat{W},\hat{E})\) is a limit point of \(\{X^k\}_{k=1}^{\infty }\). We will show that \(\hat{X}\) satisfies the KKT conditions in (13). As (12a) and (12b) are guaranteed by the algorithm construction, it directly implies that \(\hat{X}\) satisfies (13a) and (13b).

We rewrite the updating rules 12e and 12f in Algorithm 1 as

The assumption \(\lim _{k\rightarrow \infty }(X^{k+1}-X^k)=0\) implies that the left hand sides in (15) all go to zero. Therefore,

Hence, we only need to verify that \(\hat{X}\) satisfies the other two conditions in (13).

For convenience, let us first ignore the projection in (13e). Then for \(\tau _2>0\), (13e) is equivalent to

with the scalar function \(\mathcal {Q}_{\tau _2}(t):= \tau _2 \partial (|t|_1)+t\) applied element-wise to C. It is easy to verify that \(\mathcal {Q}_{\tau _2}(t)\) is monotone and \(\mathcal {Q}^{-1}_{\tau _2}(t)=\mathrm {shrink}_1(t,\tau _2)\). By applying \(\mathcal {Q}^{-1}_{\tau _2}(\cdot )\) to both sides of (16), we get

By invoking the definition of \(g_C^k\) leads to

which implies that \(\hat{X}\) satisfies (13c) and (13d) when the projection function is considered.

In summary, \(\hat{X}\) satisfies the KKT conditions (13). Thus, any accumulation point of \(\{X^k\}_{k=1}^\infty \) is a KKT point of problem (10).

6 Experiments

In this section, we apply our RSE-SSC approach to three different data sets. We will mainly focus on the comparison of our approach with SSC proposed in [9]. The MATLAB codes of SSC were downloaded from http://www.cis.jhu.edu/~ehsan/code.htme.

6.1 The Dataset

We applied our Algorithm 2 to three public datasets: the Extended Yale B datasetFootnote 1, the USPS digital images datasetFootnote 2, and the COIL20 datasetFootnote 3.

The Extended Yale B dataset is a well-known dataset for face clustering, which consists of images taken from 38 human subjects, and 64 frontal images for each subject were acquired under different illumination conditions and a fixed pose. To reduce the computational cost and memory requirements of the algorithms, we downsample the raw images into the size of \(48\times 42\). Thus, each image is in dimension of 2, 016.

The USPS dataset is relatively difficult to handle, in which there are 7, 291 labeled observations and each observation is a digit of \(16\times 16\) grayscale image and of different orientations. The number of each digit varies from 542 to 1, 194. To reduce the time and memory cost of the expriement, we randomly chose 100 images of each digit in our experiment.

The COIL20 dataset is a database consisting of 1, 440 grayscale images of 20 objects. Images of the 20 objects were taken to pose intervals of 5 degrees, which results in 72 images per object. All 1, 440 normalized images of 20 objects are used in our experiment.

6.2 Post-Processing and Spectral Clustering

After solving optimization problem (7), we obtain the clustered images and the sparse coefficient matrix C. Similar to [6], we perform some post-processing procedure on C. For each coefficient vector \(C_i\), we keep its largest T coefficients in absolute magnitude and set the remaining coefficients to zeros. The affinity matrix W associated with the weighted graph \(\mathcal {G}\) is then constructed as \(W=|C|+|C|^T\). To obtain the final clustering result, we apply the normalized spectral clustering approach proposed by Ng et al. [18]. Thus, the whole procedure of our RSE-SSC based clustering approach can be described as in Algorithm 2.

For SSC [7], we find that it performs better without the normalization of data and post-processing of the coefficient matrix C. As a result, we ran the codes provided by the authors directly. Note that the same spectral clustering algorithm was applied to the coefficient matrix obtained by SSC.

6.3 Implementation Details

Considering that the number of subspaces affects the clustering and recovery performance of algorithms, we applied algorithms under cases of \(L = 3, 5, 8\), where L denotes the number of subspaces, i.e., the number of different subjects. To shorten the testing time, L subjects were chosen in the following way. In the Extended Yale B dataset, all the 38 subjects were divided into four groups, where the four groups correspond to subjects 1 to 10, 11 to 20, 21 to 30, and 31 to 38, respectively. L subjects were chosen from the same group. For example, when \(L=5\), the number of possible 5 subjects is \(3\left( {\begin{array}{c}10\\ 5\end{array}}\right) +\left( {\begin{array}{c}8\\ 5\end{array}}\right) =812\). Among these 812 possible choices, 20 trials were randomly chosen to test the proposed algorithms under the condition of L subspaces. To study the effect of the fraction of missing entries in clustering and recovery performance of algorithms, we artificially corrupted images into 3 missing rate levels 10 %, 20 % to 30 %. To make the comparison of different algorithms as fair as possible, we randomly generated the missing images first, and then all algorithms were applied to the same randomly corrupted images to cluster the images and recover the missing pixels. To generate corrupted images with a specified missing fraction range from, we randomly removed squares whose size is no larger than \(10\,\times \,10\), repeatedly until the total fraction of missing pixels is no less than the specified fraction.

In Algorithm 2 (RSE-SSC), we initialise Y by filling in each missing pixel with the average value of corresponding pixels in other images with known pixel value. We implement SSC proposed in [7] in two different ways. In Algorithm “SSC-AVE”, we fill in the missing entries in the same way as “RSE-SSC”, and in Algorithm “SSC-0”, we fill in the missing entries by 0. We use the subspace clustering error (SCE), which is defined as

to demonstrate the clustering quality. For each set of L with different percentage of missing pixels, the averaged SCE over 20 random trials are calculated.

In Algorithm 1, we choose \(\lambda _e=\lambda _z=5\times 10^{-2}\), total loss threshold \(tol= 5\times 10^{-6}\), maximum iterations \(maxIter = 5\times 10^3\) in all experiments. We set \(\rho =1000/\mu \), where \(\mu :=\displaystyle \min _i \max _{j\ne i}|Y_i^TY_j|\) to avoid trivial solutions. It should be noted that \(\rho \) actually changes in each iteration, because matrix Y is updated in each iteration. To accelerate the convergence of Algorithm 1, we adopt the following stopping criterion to terminate the algorithm \(\Vert \hat{Y}A^k-\hat{Y}\Vert _F^2 + \Vert A^k-C^k\Vert _F^2 + \Vert A^k -A^{k-1}\Vert + \Vert C^k -C^{k-1}\Vert + \Vert E^k -E^{k-1}\Vert + \Vert \hat{Y}^k -\hat{Y}^{k-1}\Vert < tol\) or the iteration number exceeds the maxIter.

6.4 Results

We report the experimental results in Table 1. It shows the SCE of three algorithms, where “SCE-mean” represent the mean of the SCE in percentage over 20 random trials. From Table 1, we can see that when the images are incomplete, for example when \(L= 3\), the mean of SCE is usually smaller than 7 %. This means that the percentage of misclassified images is smaller than 7 %. It can been seen that our Algorithm 2 gives better results on clustering errors in most situations. Especially, when \(spa=20\,\%\) and \(30\,\%\), the mean clustering errors are much smaller than the ones given by SSC-AVE and SSC-0, this phenomena is more obvious with the increase of number of subjects. These comparison results show that our RSE-SSC model can cluster images very robustly and greatly outperforms SSC.

The subfigures (a) and (b) of Fig. 1 show the clustering results of one instance of \(L=5\) using Algorithm 2 for the Extended Yale B dataset and the COIL dataset. After applying our algorithm, each image is labled with a class ID, and comparing to the original class ID we could calculate the True Positive Rate and False Positive Rate of each original individual. The misclassified images in each cluster are labeled with colored rectangles and the true positive rate (TP) and false positive rate are also given as the title of the subfigures. Most of the misclassified images, as we can see, are not in good illumination conditions and they are thus difficult to be classified. Removing these illumination conditions will improve the experimental results a lot. We only show the results of one instance of \(L=5\) for the Extended Yale B dataset and the COIL20 dataset. The results on all datasets will be fully displayed in a longer version.

It should be noted that, due to the limited space, we just present results on the Extended Yale B dataset and the COIL dataset with 10 % entries missing, the other two missing rate level will be provided in a longer version or in the form of supplementary material. In the supplementary material, Fig. 1, Fig. 2 and Fig. 3 show the results for the Extended Yale B dataset, the COIL dataset and the USPS dataset with \(L=5\) using Algorithm 2. Figure 4, Fig. 5 and Fig. 6 show the accordingly clustering results of \(L=8\) using Algorithm 2 for these datasets.

7 Conclusions

Sparse subspace clustering is a well-known algorithm, and it is widely used in many research field nowadays, and a lot effort has been contributed to improve it. In this paper, we propose a novel approach to obtain the coefficient matrix. Compared with traditional sparse subspace clustering (SSC) approaches, the key advantage of our approach is that it provides a new perspective of the self-expressive property. We call it rigidly self-expressive (RSE) property. This new formulation captures the rigidly self-expressive property of the data points in the same subspace, and provides a new formulation for sparse subspace clustering. Extensions to traditional SSC could also be cooperate with this new formulation, and this could lead to a serial of approaches based on rigidly self-expressive property. We present a first-order algorithm to solve the nonconvex optimization, and further prove that it converges to a KKT point of the nonconvex problem under certain standard assumptions. Extensive experiments on the Extended Yale B dataset, the USPS digital images dataset, and the Columbia Object Image Library show that for images with up to 30 % missing pixels the clustering quality achieved by our approach outperforms the original SSC.

References

Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends in Mach. Learn. 3(1), 1–122 (2011)

Boyd, S.P., Vandenberghe, L.: Convex Optimization. Cambridge University Press, Cambridge (2004)

Bradley, P.S., Mangasarian, O.L.: K-plane clustering. J. Global Optim. 16(1), 23–32 (2000)

Chen, G.L., Lerman, G.: Spectral curvature clustering (SCC). Int. J. Comput. Vis. 81(3), 317–330 (2009)

Daubechies, I., Defrise, M., De Mol, C.: An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 57(11), 1413–1457 (2004)

Dyer, E.L., Sankaranarayanan, A.C., Baraniuk, R.G.: Greedy feature selection for subspace clustering. J. Mach. Learn. Res. 14(1), 2487–2517 (2013)

Elhamifar, E.: Sparse modeling for high-dimensional multi-manifold data analysis. Ph.D. thesis, The Johns Hopkins University (2012)

Elhamifar, E., Vidal, R.: Sparse subspace clustering. In: Proceedings of CVPR, vol. 1–4, pp. 2782–2789 (2009)

Elhamifar, E., Vidal, R.: Sparse subspace clustering: algorithm, theory, and applications. IEEE Trans. Pattern Anal. Mach. Intell. 35(11), 2765–2781 (2013)

Grant, M., Boyd, S., Ye, Y.: CVX: matlab software for disciplined convex programming (2008)

Ho, J., Yang, M.-H., Lim, J., Lee, K.-C., Kriegman, D.: Clustering appearances of objects under varying illumination conditions. In: Proceedings 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 1, pp. I-11–I-18. IEEE (2003)

Hull, J.J.: A database for handwritten text recognition research. IEEE Trans. Pattern Anal. Mach. Intell. 16(5), 550–554 (1994)

Kriegel, H.-P., Krger, P., Zimek, A.: Clustering high-dimensional data: a survey on subspace clustering, pattern-based clustering, and correlation clustering. ACM Trans. Knowl. Discov. Data (TKDD) 3(1), 1 (2009)

Lee, K.-C., Ho, J., Kriegman, D.J.: Acquiring linear subspaces for face recognition under variable lighting. IEEE Trans. Pattern Anal. Mach. Intell. 27(5), 684–698 (2005)

Liu, G., Xu, H., Yan, S.: Exact subspace segmentation and outlier detection by low-rank representation. In: Proceedings of AISTATS, pp. 703–711 (2012)

Lu, Y.M., Do, M.N.: Sampling signals from a union of subspaces. IEEE Sig. Proc. Mag. 25(2), 41–47 (2008)

Nene, S.A., Nayar, S.K., Murase, H.: Columbia object image library (coil-20). Technical report CUCS-005-96 (1996)

Ng, A.Y., Jordan, M.I., Weiss, Y.: On spectral clustering: analysis and an algorithm. Proc. NIPS 14, 849–856 (2002)

Ramamoorthi, R.: Analytic PCA construction for theoretical analysis of lighting variability in images of a lambertian object. IEEE Trans. Pattern Anal. Mach. Intell. 24(10), 1322–1333 (2002)

Rao, S.R., Tron, R., Vidal, R., Ma, Y.: Motion segmentation via robust subspace separation in the presence of outlying, incomplete, or corrupted trajectories. In: IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2008, pp. 1–8. IEEE (2008)

Shen, Y., Wen, Z., Zhang, Y.: Augmented lagrangian alternating direction method for matrix separation based on low-rank factorization. Optim. Methods Softw. 29(2), 239–263 (2014)

Soltanolkotabi, M., Candes, E.J.: A geometric analysis of subspace clustering with outliers. Ann. Stat. 40(4), 2195–2238 (2012)

Soltanolkotabi, M., Elhamifar, E., Candes, E.J.: Robust subspace clustering. Ann. Stat. 42(2), 669–699 (2014)

Vidal, R.E.: Generalized principal component analysis (GPCA): an algebraic geometric approach to subspace clustering and motion segmentation. Ph.D. thesis, University of California at Berkeley (2003)

Wang, Y.-X., Xu, H.: Noisy sparse subspace clustering. In: Proceedings of ICML, pp. 89–97 (2013)

Wright, J., Ganesh, A., Rao, S., Peng, Y., Ma, Y.: Robust principal component analysis: exact recovery of corrupted low-rank matrices via convex optimization. In: Proceedings of NIPS, pp. 2080–2088 (2009)

Xu, Y., Yin, W., Wen, Z., Zhang, Y.: An alternating direction algorithm for matrix completion with nonnegative factors. Front. Math. China 7(2), 365–384 (2012)

Zappella, L., Llado, X., Salvi, J.: Motion segmentation: a review. Artif. Intell. Res. Dev. 184, 398–407 (2008)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Qiao, L., Zhang, B., Sun, Y., Su, J. (2016). Rigidly Self-Expressive Sparse Subspace Clustering. In: Cao, H., Li, J., Wang, R. (eds) Trends and Applications in Knowledge Discovery and Data Mining. PAKDD 2016. Lecture Notes in Computer Science(), vol 9794. Springer, Cham. https://doi.org/10.1007/978-3-319-42996-0_9

Download citation

DOI: https://doi.org/10.1007/978-3-319-42996-0_9

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-42995-3

Online ISBN: 978-3-319-42996-0

eBook Packages: Computer ScienceComputer Science (R0)