Abstract

Partial distance correlation measures association between two random vectors with respect to a third random vector, analogous to, but more general than, (linear) partial correlation. Distance correlation characterizes independence of random vectors in arbitrary dimension. Motivation for the definition is discussed. We introduce a Hilbert space of U-centered distance matrices in which squared distance covariance is the inner product. Simple computation of the sample partial distance correlation and definitions of the population coefficients are presented. Power of the test for zero partial distance correlation is compared with power of the partial correlation test and the partial Mantel test.

1 Introduction

Distance correlation is a multivariate measure of dependence between random vectors in arbitrary, not necessarily equal, dimensions. Distance covariance (dCov) and the standardized coefficient, distance correlation (dCor), are nonnegative coefficients that characterize independence of random vectors; both are zero if and only if the random vectors are independent. Defining a partial distance correlation coefficient analogous to the linear partial correlation coefficient had been an open problem since distance correlation was introduced in 2007 [11]. For the definition of partial distance correlation, we introduce a new Hilbert space where the squared distance covariance is the inner product [15]. Our intermediate results include methods for applying distance correlation to dissimilarity matrices.

For background, we first review the definitions of population and sample dCov and dCor coefficients. In what follows, we suppose that X and Y take values in \(\mathbb R^p\) and \(\mathbb R^q\), respectively.

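The distance covariance, \({\mathcal {V}}(X, Y)\), of two random vectors X and Y is a scalar coefficient defined by a weighted \(L_2\) norm measuring the distance between the joint characteristic function \(\phi _{{}_{X,Y}}\) of X and Y, and the product \(\phi _{{}_{X}}\phi _{{}_{Y}}\) of the marginal characteristic functions of X and Y. \({\mathcal {V}}(X, Y)\) is defined as the nonnegative square root of

$$ {\mathcal {V}}^2(X, Y) = \Vert \phi _{{}_{X,Y}}(t, s) - \phi _{{}_{X}}(t)\phi _{{}_{Y}}(s)\Vert _w^2 := \int _{\mathbb R^{p+q}} |\phi _{{}_{X,Y}}(t, s) - \phi _{{}_{X}}(t)\phi _{{}_{Y}}(s)|^2\, w(t, s)\, dt\, ds, \tag{1} $$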

where \(w(t, s) := (|t|_p^{1+p} |s|_q^{1+ q})^{-1}\). The above integral exists if |X| and |Y| have finite first moments. In general the choice of weight function is not unique, but it can be shown to be unique under certain invariance properties that one would require of a measure of dependence [13]. This particular weight function may have first appeared in this context in 1993, when Feuerverger [3] proposed a bivariate test of independence based on an \(L_2\) norm (1).

The distance covariance coefficient can also be expressed in terms of expected distances, based on the following identity established in Székely and Rizzo [12, Theorem 8, p. 1250]. The notation \(X'\) indicates that \(X'\) is an independent and identically distributed (iid) copy of X. If (X, Y), \((X', Y')\), and \((X'', Y'')\) are iid, each with joint distribution (X, Y), then

$$ {\mathcal {V}}^2(X, Y) = E|X-X'||Y-Y'| + E|X-X'|\, E|Y-Y'| - E|X-X'||Y-Y''| - E|X-X''||Y-Y'|, \tag{2} $$

provided that X and Y have finite first moments. Definition (2) can be extended to X and Y taking values in a separable Hilbert space. With that extension and our intermediate results, we can define and apply partial distance covariance (pdcov) and partial distance correlation (pdcor).

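Distance correlation (dCor) \({\mathcal {R}}(X, Y)\) is the standardized coefficient

$$ {\mathcal {R}}(X, Y) = \begin{cases} \dfrac{{\mathcal {V}}(X, Y)}{\sqrt{{\mathcal {V}}(X, X)\, {\mathcal {V}}(Y, Y)}}, & {\mathcal {V}}(X, X)\, {\mathcal {V}}(Y, Y) > 0; \\ 0, & {\mathcal {V}}(X, X)\, {\mathcal {V}}(Y, Y) = 0. \end{cases} $$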

The distance covariance and distance correlation statistics are functions of the double-centered distance matrices of the samples. For an observed random sample \(\{(x_{i}, y_{i}):i=1,\dots ,n\} \) from the joint distribution of random vectors X and Y, let \((a_{ij})=(|x_i-x_j|_p)\) and \((b_{ij})=(|y_i-y_j|_q)\) denote the Euclidean distance matrices of the X and Y samples, respectively.

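Define the double-centered distance matrix of the X sample by

$$ \widehat{A}_{ij}= a_{ij} -\bar{a}_{i .}- \bar{a}_{. \,j} + \bar{a}_{..}, \qquad i,j=1,\dots ,n, \tag{3} $$

where

$$ \bar{a}_{i .} = \frac{1}{n} \sum _{j=1}^n a_{ij}, \qquad \bar{a}_{. \,j} = \frac{1}{n} \sum _{i=1}^n a_{ij}, \qquad \bar{a}_{..} = \frac{1}{n^2} \sum _{i,j=1}^n a_{ij}. $$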

Similarly, define the double-centered distance matrix of the Y sample by \(\widehat{B}_{ij}= b_{ij} -\bar{b}_{i .}- \bar{b}_{. \,j} + \bar{b}_{..}\), for \(i,j=1,\dots ,n\).

A double-centered distance matrix \(\widehat{A}_{ij}\) has the property that all rows and columns sum to zero. Below we will introduce a modified definition \(\mathcal U\)-centering (“U” for unbiased) such that a \(\mathcal U\)-centered distance matrix \({\widetilde{A}}_{ij}\) has zero expected values of its elements \(E[{\widetilde{A}}_{ij}] = 0\) for all i, j.

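Sample distance covariance \({\mathcal {V}}_n ({\mathbf {X}}, {\mathbf {Y}})\) is the square root of

$$ {\mathcal {V}}_n^2 ({\mathbf {X}}, {\mathbf {Y}}) = \frac{1}{n^2} \sum _{i,j=1}^n \widehat{A}_{ij} \widehat{B}_{ij}, \tag{4} $$

and the squared sample distance correlation is the standardized sample coefficient

$$ {\mathcal {R}}_n^2 ({\mathbf {X}}, {\mathbf {Y}}) = \begin{cases} \dfrac{{\mathcal {V}}_n^2 ({\mathbf {X}}, {\mathbf {Y}})}{\sqrt{{\mathcal {V}}_n^2 ({\mathbf {X}}, {\mathbf {X}})\, {\mathcal {V}}_n^2 ({\mathbf {Y}}, {\mathbf {Y}})}}, & {\mathcal {V}}_n^2 ({\mathbf {X}}, {\mathbf {X}})\, {\mathcal {V}}_n^2 ({\mathbf {Y}}, {\mathbf {Y}}) > 0; \\ 0, & {\mathcal {V}}_n^2 ({\mathbf {X}}, {\mathbf {X}})\, {\mathcal {V}}_n^2 ({\mathbf {Y}}, {\mathbf {Y}}) = 0. \end{cases} \tag{5} $$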

The distance covariance test of multivariate independence is consistent against all dependent alternatives. Large values of the statistic \(n \mathcal V^2_n(\mathbf X, \mathbf Y)\) support the alternative hypothesis that X and Y are dependent (see [11, 12]). The test is implemented in the energy package [9] for R [8].
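The double centering and the sample coefficients described above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not the implementation in the energy package; the function names `dist_matrix`, `double_center`, `dcov2`, and `dcor` are hypothetical, and each observation is assumed to be given as a tuple of coordinates.

```python
import math

def dist_matrix(points):
    """Euclidean distance matrix of a sample (list of coordinate tuples)."""
    return [[math.dist(p, q) for q in points] for p in points]

def double_center(a):
    """Subtract row and column means and add back the grand mean."""
    n = len(a)
    row = [sum(r) / n for r in a]
    col = [sum(a[i][j] for i in range(n)) / n for j in range(n)]
    grand = sum(row) / n
    return [[a[i][j] - row[i] - col[j] + grand for j in range(n)]
            for i in range(n)]

def dcov2(x, y):
    """Squared sample distance covariance: mean of the entrywise products."""
    n = len(x)
    A = double_center(dist_matrix(x))
    B = double_center(dist_matrix(y))
    return sum(A[i][j] * B[i][j] for i in range(n) for j in range(n)) / n**2

def dcor(x, y):
    """Sample distance correlation (square root of the standardized dCov^2)."""
    denom = math.sqrt(dcov2(x, x) * dcov2(y, y))
    if denom <= 0:
        return 0.0
    # max(..., 0) guards against tiny negative values caused by rounding
    return math.sqrt(max(dcov2(x, y), 0.0) / denom)

# Usage: an exact linear relation gives a distance correlation close to 1.
x = [(0.0,), (1.0,), (2.0,), (3.0,)]
y = [(2 * v[0] + 1,) for v in x]
print(dcor(x, y))
```

The quadratic-time, quadratic-memory layout is chosen for readability; it mirrors the formulas directly rather than aiming for efficiency.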

2 Partial Distance Correlation

To generalize distance correlation to partial distance correlation we require that essential properties of distance correlation are preserved, that pdcor has a meaningful interpretation as a population coefficient and as a sample coefficient, that inference is possible, and that the methods are practical to apply. This generalization is not straightforward.

For example, one could try to follow the definition of partial correlation based on orthogonal projections in a Euclidean space, but this approach does not succeed. For partial distance covariance orthogonality means independence, but the orthogonal projection of a random variable onto the condition variable has a remainder that is typically not independent of the condition.

Alternately, because the product moment type of computing formula for the sample distance covariance (4) may suggest an inner product, one may consider defining the Hilbert space of double-centered distance matrices (3), where the inner product is (4). However, this approach also presents a problem, because in general it is not clear what the projection objects in this space actually represent. The difference of double-centered distance matrices is not a double-centered distance matrix of any sample except in some special cases. Although one could make the formal definitions, inference is not possible unless the sample coefficients have a meaningful interpretation as objects that arise from centering distance matrices of samples.

The sample coefficient \(\mathcal V^2_n(\mathbf X, \mathbf Y)\) is a biased estimator of \(\mathcal V^2(X,Y)\), so in a sense we could consider double centering to be a biased operation. We modify the inner product approach by first replacing double centering with \(\mathcal U\)-centering (1). The Hilbert space is the linear span of \(n \times n\) “\(\mathcal U\)-centered” matrices. The inner product in this space is unbiased dCov; it is an unbiased estimator of \(\mathcal V^2(X,Y)\). An important property of this space is that all linear combinations, and in particular all projections, are \(\mathcal U\)-centered matrices. (The corresponding property does not hold when we work with double-centered matrices.)

A representation theorem ([15]) connects the orthogonal projections to random samples in Euclidean space. With this representation result, methods for inference based on the inner product are defined and implemented. To obtain this representation we needed results for dissimilarity matrices. In many applications, such as community ecology or psychology, one has dissimilarity matrices rather than the sample points available, and the dissimilarities are often not Euclidean distances. Our intermediate results on dissimilarity matrices also extend the definitions, computing formulas, and inference to data represented by any symmetric, zero diagonal dissimilarity matrices.

2.1 The Hilbert Space of Centered Distance Matrices

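Let \(A=(a_{ij})\) be an \(n \times n\) zero diagonal, symmetric matrix, \(n > 2\) (a dissimilarity matrix). The \(\mathcal U\)-centered matrix \({\widetilde{A}}\) is defined by

$$ {\widetilde{A}}_{i,j}= \begin{cases} a_{i,j} - \dfrac{1}{n-2} \sum \limits _{\ell =1}^n a_{i,\ell } - \dfrac{1}{n-2} \sum \limits _{k=1}^n a_{k,j} + \dfrac{1}{(n-1)(n-2)} \sum \limits _{k,\ell =1}^n a_{k,\ell }, & i \ne j; \\ 0, & i=j. \end{cases} $$

Then, for the \(\mathcal U\)-centered distance matrices \({\widetilde{A}}\) and \({\widetilde{B}}\) of the X and Y samples, \(n > 3\),

$$ ({\widetilde{A}} \cdot {\widetilde{B}}) := \frac{1}{n(n-3)} \sum _{i \ne j} {\widetilde{A}}_{i,j}{\widetilde{B}}_{i,j} $$

is an unbiased estimator of squared population distance covariance \(\mathcal V^2(X, Y)\).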

The Hilbert space of \(\mathcal U\)-centered matrices is defined as follows. Let \(\mathscr {H}_{n}\) denote the linear span of \(\mathcal U\)-centered \(n \times n\) distance matrices, and for each pair of elements (C, D) in \(\mathscr {H}_n\), define their inner product by

$$ (C \cdot D) = \frac{1}{n(n-3)} \sum _{i \ne j} C_{ij}D_{ij}. $$

It can be shown that every matrix \(C \in \mathscr {H}_n\) is the \(\mathcal U\)-centered distance matrix of some n points in \(\mathbb R^p\), where \(p \le n-2\).

The linear span of all \(n \times n\) \(\mathcal U\)-centered matrices is a Hilbert space \(\mathscr {H}_n\) with inner product defined by (3) [15].

2.2 Sample PdCov and PdCor

The projection operator (4) can now be defined in the Hilbert space \(\mathscr {H}_n\), \(n \ge 4\). Then partial distance covariance can be defined using projections in \(\mathscr {H}_n\). Suppose that x, y, and z are samples of size n and \({\widetilde{A}}, {\widetilde{B}},\) \({\widetilde{C}}\) are their \({\mathcal {U}}\)-centered distance matrices, respectively. Define the orthogonal projection

$$ P_{z^\perp }(x) := {\widetilde{A}} - \frac{({\widetilde{A}} \cdot {\widetilde{C}})}{({\widetilde{C}} \cdot {\widetilde{C}})}\, {\widetilde{C}} $$

of \({\widetilde{A}}(x)\) onto \(({\widetilde{C}}(z))^\perp \), and

$$ P_{z^\perp }(y) := {\widetilde{B}} - \frac{({\widetilde{B}} \cdot {\widetilde{C}})}{({\widetilde{C}} \cdot {\widetilde{C}})}\, {\widetilde{C}}, $$

the orthogonal projection of \({\widetilde{B}}(y)\) onto \(({\widetilde{C}}(z))^\perp \). If \(({\widetilde{C}} \cdot \widetilde{C})=0\), define \(P_{z^\perp }(x) := {\widetilde{A}}\) and \(P_{z^\perp }(y) := {\widetilde{B}}\). Then \(P_{z^\perp }(x)\) and \(P_{z^\perp }(y)\) are elements of \(\mathscr {H}_n\). Their inner product is as defined in (3).

Definition 1

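(Partial distance covariance) The sample partial distance covariance is defined by

$$ \mathop {\mathrm{{pdCov}}}\nolimits (x, y; z) = (P_{z^\perp }(x) \cdot P_{z^\perp }(y)), $$

where \(P_{z^\perp }(x)\) and \(P_{z^\perp }(y)\) are defined by (4) and (5), and

$$ (P_{z^\perp }(x) \cdot P_{z^\perp }(y)) = \frac{1}{n(n-3)} \sum _{i \ne j} (P_{z^\perp }(x))_{i,j} (P_{z^\perp }(y))_{i,j}. $$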

Definition 2

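(Partial distance correlation) Sample partial distance correlation is defined as the cosine of the angle \(\theta \) between the ‘vectors’ \(P_{z^\perp }(x)\) and \(P_{z^\perp }(y)\) in the Hilbert space \(\mathscr {H}_n\):

$$ R^*(x, y; z) := \cos \theta = \frac{(P_{z^\perp }(x) \cdot P_{z^\perp }(y))}{|P_{z^\perp }(x)|\, |P_{z^\perp }(y)|}, \qquad |P_{z^\perp }(x)|\, |P_{z^\perp }(y)| \ne 0, $$

and otherwise \(R^*(x, y;z):=0\).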

2.3 Representation in Euclidean Space

If it is true that the projection matrices \(P_{z^\perp }(x)\) and \(P_{z^\perp }(y)\) are the \(\mathcal U\)-centered Euclidean distance matrices of samples of points in Euclidean spaces, then the sample partial distance covariance (7) is in fact the distance covariance (2) of those samples.

Our representation theorem [15] holds that given an arbitrary element H of \(\mathscr {H}_n\), there exists a configuration of points \({\mathbf U}=[u_1,\dots ,u_n]\) in some Euclidean space \(\mathbb R^q\), for some \(q \ge 1\), such that the \(\mathcal U\)-centered Euclidean distance matrix of sample \({\mathbf U}\) is exactly equal to the matrix H. In general, every element in \(\mathscr {H}_n\), and in particular any orthogonal projection matrix, is the \(\mathcal U\)-centered distance matrix of some sample of n points in a Euclidean space.

The proof uses properties of \(\mathcal U\)-centered distance matrices and results from classical multidimensional scaling.

Lemma 1

Let \({\widetilde{A}}\) be a \({\mathcal {U}}\)-centered distance matrix. Then

(i) Rows and columns of \({\widetilde{A}}\) sum to zero.

(ii) \(\widetilde{({\widetilde{A}})} = \widetilde{A}\). That is, if B is the matrix obtained by \(\mathcal U\)-centering an element \({\widetilde{A}} \in {\mathscr {H}}_n\), then \(B = {\widetilde{A}}\).

(iii) \({\widetilde{A}}\) is invariant to double centering. That is, if B is the matrix obtained by double centering the matrix \({\widetilde{A}}\), then \(B={\widetilde{A}}\).

(iv) If c is a constant and B denotes the matrix obtained by adding c to the off-diagonal elements of \({\widetilde{A}}\), then \({\widetilde{B}} = {\widetilde{A}}\).

Lemma 1(iv) is essential to the proof of our representation results. It also shows why we cannot apply double centering as in the original (biased) definition of distance covariance: invariance with respect to the additive constant c in (iv) does not hold for double-centered matrices.
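The four properties of Lemma 1 are easy to check numerically. The following Python sketch does so for a small sample on the real line; the helper names (`u_center`, `double_center`, `add_offdiag`, `max_abs_diff`), the sample points, and the constant 2.5 are illustrative choices, not part of the original development.

```python
def u_center(a):
    """U-center a symmetric, zero-diagonal n x n matrix (n > 2)."""
    n = len(a)
    row = [sum(r) for r in a]                                  # row sums
    col = [sum(a[i][j] for i in range(n)) for j in range(n)]   # column sums
    total = sum(row)                                           # grand sum
    return [[0.0 if i == j else
             a[i][j] - row[i] / (n - 2) - col[j] / (n - 2)
             + total / ((n - 1) * (n - 2))
             for j in range(n)] for i in range(n)]

def double_center(a):
    """Classical double centering with row, column, and grand means."""
    n = len(a)
    row = [sum(r) / n for r in a]
    col = [sum(a[i][j] for i in range(n)) / n for j in range(n)]
    grand = sum(row) / n
    return [[a[i][j] - row[i] - col[j] + grand for j in range(n)]
            for i in range(n)]

def add_offdiag(a, c):
    """Add the constant c to every off-diagonal entry."""
    n = len(a)
    return [[a[i][j] + (c if i != j else 0.0) for j in range(n)]
            for i in range(n)]

def max_abs_diff(a, b):
    """Largest entrywise absolute difference between two matrices."""
    return max(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))

pts = [0.0, 1.0, 3.0, 7.0, 12.0]
A = [[abs(p - q) for q in pts] for p in pts]
At = u_center(A)

row_sums = [sum(r) for r in At]                                 # (i): near 0
gap_ii = max_abs_diff(u_center(At), At)                         # (ii): near 0
gap_iii = max_abs_diff(double_center(At), At)                   # (iii): near 0
gap_iv = max_abs_diff(u_center(add_offdiag(At, 2.5)), At)       # (iv): near 0
# Double centering is NOT invariant to the additive constant:
gap_dc = max_abs_diff(double_center(add_offdiag(A, 2.5)), double_center(A))
print(row_sums, gap_ii, gap_iii, gap_iv, gap_dc)
```

The last quantity, `gap_dc`, is clearly nonzero, which illustrates the remark that invariance to the additive constant fails for double-centered matrices.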

Our representation theorem applies certain results from classical MDS and Cailliez [1, Theorem 1].

Theorem 1

Let H be an arbitrary element of the Hilbert space \(\mathscr {H}_n\) of \(\mathcal U\)-centered distance matrices. Then there exists a sample \(v_1,\dots ,v_n\) in a Euclidean space of dimension at most \(n-2\), such that the \(\mathcal U\)-centered distance matrix of \(v_1,\dots ,v_n\) is exactly equal to H.

For details of the proof and an illustration see [15]. The details of the proof reveal why the simpler idea of a Hilbert space of double-centered matrices is not applicable here. The diagonals of \(\widehat{A}\) are not zero, so we cannot get an exact solution by MDS. The inner product would depend on the additive constant c. Another problem is that \(\mathcal V_n^2 \ge 0\), but the inner product of projections in that space can be negative.

An application of our representation theorem also provides methods for zero diagonal symmetric non-Euclidean dissimilarities. There exist samples in Euclidean space such that their \(\mathcal U\)-centered Euclidean distance matrices are equal to the dissimilarity matrices. There are existing software implementations of classical MDS that can obtain these sample points. The R function cmdscale, for example, includes options to apply the additive constant of Cailliez [1] and to specify the dimension.

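Using the inner product (3), we can define a bias corrected distance correlation statistic

$$ R^*_{x,y} := \begin{cases} \dfrac{({\widetilde{A}} \cdot {\widetilde{B}})}{|{\widetilde{A}}|\, |{\widetilde{B}}|}, & |{\widetilde{A}}|\, |{\widetilde{B}}| > 0; \\ 0, & |{\widetilde{A}}|\, |{\widetilde{B}}| = 0, \end{cases} $$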

where \({\widetilde{A}}={\widetilde{A}}(x), {\widetilde{B}}={\widetilde{B}}(y)\) are the \({\mathcal {U}}\)-centered distance matrices of the samples x and y, and \(|{\widetilde{A}}|=({\widetilde{A}} \cdot {\widetilde{A}})^{1/2}\).

Here we should note \(R^*\) is a bias corrected statistic for the squared distance correlation (5) rather than the distance correlation.

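An equivalent computing formula for pdCor(x, y; z) is

$$ R^*_{x, y ; z} = \frac{R^*_{x, y} - R^*_{x, z}R^*_{y, z}}{\sqrt{1-(R^*_{x,z})^2}\sqrt{1-(R^*_{y,z})^2}}, $$

provided that \((1-(R^*_{x,z})^2)(1-(R^*_{y,z})^2) \ne 0\).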
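The step from the projection definition to a correlation-type formula can be made explicit by expanding inner products. The following is a sketch that assumes \(({\widetilde{C}} \cdot {\widetilde{C}}) \ne 0\) and \(|{\widetilde{A}}|\,|{\widetilde{B}}| \ne 0\); the shorthand \(P_x = {\widetilde{A}} - \frac{({\widetilde{A}} \cdot {\widetilde{C}})}{({\widetilde{C}} \cdot {\widetilde{C}})}{\widetilde{C}}\) and \(P_y = {\widetilde{B}} - \frac{({\widetilde{B}} \cdot {\widetilde{C}})}{({\widetilde{C}} \cdot {\widetilde{C}})}{\widetilde{C}}\) for the two projections is introduced here only for brevity.

$$\begin{aligned} (P_x \cdot P_y) &= ({\widetilde{A}} \cdot {\widetilde{B}}) - \frac{({\widetilde{A}} \cdot {\widetilde{C}})({\widetilde{B}} \cdot {\widetilde{C}})}{({\widetilde{C}} \cdot {\widetilde{C}})} = |{\widetilde{A}}|\,|{\widetilde{B}}| \left( R^*_{x,y} - R^*_{x,z}R^*_{y,z}\right) , \\ |P_x|^2 &= |{\widetilde{A}}|^2 - \frac{({\widetilde{A}} \cdot {\widetilde{C}})^2}{({\widetilde{C}} \cdot {\widetilde{C}})} = |{\widetilde{A}}|^2 \left( 1-(R^*_{x,z})^2\right) , \\ |P_y|^2 &= |{\widetilde{B}}|^2 - \frac{({\widetilde{B}} \cdot {\widetilde{C}})^2}{({\widetilde{C}} \cdot {\widetilde{C}})} = |{\widetilde{B}}|^2 \left( 1-(R^*_{y,z})^2\right) . \end{aligned}$$

Dividing the first line by \(|P_x|\,|P_y|\) cancels the factor \(|{\widetilde{A}}|\,|{\widetilde{B}}|\) and leaves \(R^*_{x,y} - R^*_{x,z}R^*_{y,z}\) over \(\sqrt{1-(R^*_{x,z})^2}\sqrt{1-(R^*_{y,z})^2}\), whenever both factors under the square roots are nonzero.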

2.4 Algorithm to Compute Partial Distance Correlation \(R^*_{x,y;z}\) from Euclidean Distance Matrices

Equation (10) provides a simple and familiar form of computing formula for the partial distance correlation. The following algorithm summarizes the calculations for distance matrices \(A=(|x_i-x_j|)\), \(B=(|y_i-y_j|)\), and \(C=(|z_i-z_j|)\).

(i) Compute \(\mathcal U\)-centered distance matrices \({\widetilde{A}}\), \({\widetilde{B}}\), and \({\widetilde{C}}\) using

$$\begin{aligned} {\widetilde{A}}_{i,j}= a_{i,j} - \frac{a_{i.}}{n-2} - \frac{a_{.j}}{n-2} + \frac{a_{..}}{(n-1)(n-2)}, \qquad i \ne j, \end{aligned}$$

and \({\widetilde{A}}_{i,i}=0\), where \(a_{i.}\), \(a_{.j}\), and \(a_{..}\) denote the row, column, and grand sums of A.

(ii) Compute inner products and norms using

$$ ({\widetilde{A}} \cdot {\widetilde{B}})=\frac{1}{n(n-3)} \sum _{i \ne j} {\widetilde{A}}_{i,j}{\widetilde{B}}_{i,j}, \qquad |{\widetilde{A}}|=({\widetilde{A}} \cdot {\widetilde{A}})^{1/2} $$

and \(R^*_{x,y}\), \(R^*_{x,z}\), and \(R^*_{y,z}\) using \( R^*_{x,y}=\frac{({\widetilde{A}} \cdot {\widetilde{B}})}{|\widetilde{A}| |{\widetilde{B}}|}.\)

(iii) If \((R^*_{x,z})^2\ne 1\) and \((R^*_{y,z})^2 \ne 1\), compute

$$ R^*_{x, y ; z} = \frac{R^*_{x, y} - R^*_{x, z}R^*_{y, z}}{\sqrt{1-(R^*_{x,z})^2}{\sqrt{1-(R^*_{y,z})^2}}}, $$

otherwise apply the definition (8).

In the above algorithm, it is typically not necessary to explicitly compute the projections, when (10) is applied. This algorithm has a straightforward translation into code. An implementation is provided in the pdcor package [10] (available upon request) or in the energy package for R.
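As an illustration of how directly the algorithm translates into code, here is a minimal Python sketch using only the standard library. It is not the implementation in the pdcor or energy packages; the function names (`rstar`, `pdcor`, `project`, `pdcor_by_projection`) and the sample data are hypothetical. The second routine computes the same coefficient from explicit projections, as a cross-check.

```python
import math

def dist_matrix(points):
    """Euclidean distance matrix of a sample (list of coordinate tuples)."""
    return [[math.dist(p, q) for q in points] for p in points]

def u_center(a):
    """Step (i): U-centering; row sums equal column sums by symmetry."""
    n = len(a)
    row = [sum(r) for r in a]
    total = sum(row)
    return [[0.0 if i == j else
             a[i][j] - (row[i] + row[j]) / (n - 2)
             + total / ((n - 1) * (n - 2))
             for j in range(n)] for i in range(n)]

def inner(a, b):
    """Step (ii): inner product over off-diagonal entries, n >= 4."""
    n = len(a)
    s = sum(a[i][j] * b[i][j] for i in range(n) for j in range(n) if i != j)
    return s / (n * (n - 3))

def rstar(a, b):
    """Bias corrected statistic R* for two U-centered matrices."""
    denom = math.sqrt(inner(a, a) * inner(b, b))
    return inner(a, b) / denom if denom > 0 else 0.0

def pdcor(x, y, z):
    """Step (iii): partial distance correlation of x and y given z."""
    if len(x) < 4:
        raise ValueError("need at least 4 observations")
    A, B, C = (u_center(dist_matrix(s)) for s in (x, y, z))
    rxy, rxz, ryz = rstar(A, B), rstar(A, C), rstar(B, C)
    if 1 - rxz**2 <= 0 or 1 - ryz**2 <= 0:
        return 0.0   # a projection has zero norm, so the definition gives 0
    return (rxy - rxz * ryz) / math.sqrt((1 - rxz**2) * (1 - ryz**2))

def project(a, c):
    """Orthogonal projection of a onto the complement of c."""
    cc = inner(c, c)
    if cc == 0:
        return a
    k = inner(a, c) / cc
    return [[p - k * q for p, q in zip(ra, rc)] for ra, rc in zip(a, c)]

def pdcor_by_projection(x, y, z):
    """Same coefficient as the cosine of the angle between projections."""
    A, B, C = (u_center(dist_matrix(s)) for s in (x, y, z))
    return rstar(project(A, C), project(B, C))

x = [(0.0,), (1.0,), (2.0,), (4.0,), (7.0,), (11.0,)]
y = [(1.0,), (3.0,), (2.0,), (8.0,), (5.0,), (13.0,)]
z = [(2.0, 0.0), (0.0, 1.0), (3.0, 3.0), (1.0, 5.0), (6.0, 2.0), (4.0, 4.0)]
print(pdcor(x, y, z), pdcor_by_projection(x, y, z))
```

The two printed values should agree up to rounding. Note that in the degenerate case the projection route is sensitive to rounding error, which is one practical reason to prefer the correlation-type formula.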

3 Population Coefficients

Definition 3

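(Population partial distance covariance) Introduce the scalar coefficients

$$ \alpha := \frac{\mathcal V^2(X,Z)}{\mathcal V^2(Z,Z)}, \qquad \beta := \frac{\mathcal V^2(Y,Z)}{\mathcal V^2(Z,Z)}. $$

If \(\mathcal V^2(Z,Z)=0\) define \(\alpha =\beta =0\). The double-centered projections of \( A_X\) and \( B_Y\) onto the orthogonal complement of \( C_Z\) in Hilbert space \(\mathscr {H}\) are defined

$$ P_{Z^\perp }(X) := A_X(x,x') - \alpha C_Z(z,z'), \qquad P_{Z^\perp }(Y) := B_Y(y,y') - \beta C_Z(z,z'), $$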

or in short \(P_{Z^\perp }(X)= A_X - \alpha C_{Z}\) and \(P_{Z^\perp }(Y)= B_Y - \beta C_Z\), where \( C_Z\) denotes double centered with respect to the random variable Z.

The population partial distance covariance is defined by the inner product

$$ \mathop {\mathrm{{pdCov}}}\nolimits (X,Y;Z) := (P_{Z^\perp }(X) \cdot P_{Z^\perp }(Y)). $$

Definition 4

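(Population pdCor) Population partial distance correlation is defined

$$ \mathcal R^*(X, Y;Z) := \frac{(P_{Z^\perp }(X) \cdot P_{Z^\perp }(Y))}{|P_{Z^\perp }(X)|\, |P_{Z^\perp }(Y)|}, $$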

where \(|P_{Z^\perp }(X)|=(P_{Z^\perp }(X) \cdot P_{Z^\perp }(X))^{1/2}.\) If \(|P_{Z^\perp }(X)||P_{Z^\perp }(Y)| = 0\) we define \(\mathcal R^*(X, Y;Z) = 0\).

Theorem 2

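(Population pdCor) The following definition of population partial distance correlation is equivalent to Definition 4.

$$ \mathcal R^*(X, Y;Z) = \begin{cases} \dfrac{{\mathcal {R}}^2(X, Y) - {\mathcal {R}}^2(X, Z){\mathcal {R}}^2(Y, Z)}{\sqrt{1-{\mathcal {R}}^4(X, Z)}\sqrt{1-{\mathcal {R}}^4(Y, Z)}}, & {\mathcal {R}}(X, Z) \ne 1 \text{ and } {\mathcal {R}}(Y, Z) \ne 1; \\ 0, & {\mathcal {R}}(X, Z) = 1 \text{ or } {\mathcal {R}}(Y, Z) = 1, \end{cases} $$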

where \({\mathcal {R}}(X, Y)\) denotes the population distance correlation.

We have proved that projections can be represented as the \(\mathcal U\)-centered distance matrix of some configuration of n points \(\mathbf U\) in a Euclidean space \(\mathbb R^p\), \(p \le n-2\). Hence a test for \(\mathop {\mathrm{{pdCov}}}\nolimits (X,Y;Z)=0\) (or similarly a test for \(\mathop {\mathrm{{pdCor}}}\nolimits (X,Y;Z)=0\)) can be defined by applying the distance covariance test statistic \(\mathcal V^2_n(\mathbf U, \mathbf V)\), where \(\mathbf U\) and \(\mathbf V\) are Euclidean representations of the two projections, which exist by Theorem 1. This test can be applied to \(\mathbf U\), \(\mathbf V\) using the dcov.test function of the energy package [9], or one can apply a test based on the inner product (6), which is implemented in the pdcor package [10].

4 Power Comparison

The tests for zero partial distance correlation are implemented as permutation (randomization) tests of the hypothesis of zero partial distance covariance. In these examples we used the dcov.test method described above, although in extensive simulations the two methods of testing this hypothesis are equivalent in their average power over 10,000 tests. The simulation design for the following examples used \(R=999\) replicates for the permutation tests and the estimated p-value is computed as

$$ \widehat{p} = \frac{1 + \sum _{k=1}^R I(T^{(k)} \ge T_0)}{1+R}, $$

where \(I(\cdot )\) is the indicator function, \(T_0\) is the observed value of the test statistic, and \(T^{(k)}\) is the statistic for the k-th sample. In each example 10,000 tests are summarized at each point in the plot, and the significance level is 10 %.
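The estimated p-value is straightforward to compute once a test statistic is available. The Python sketch below assumes that replicates are generated by permuting the sample indices of the second sample, and it uses a simple absolute-covariance statistic (`abs_cov`) purely as a stand-in; both the function names and the stand-in statistic are illustrative and are not the statistics used in the paper.

```python
import random

def permutation_pvalue(stat, x, y, R=999, seed=None):
    """Estimated p-value (1 + #{T_k >= T_0}) / (1 + R) from R permutations."""
    rng = random.Random(seed)
    t0 = stat(x, y)                 # observed statistic T_0
    exceed = 0
    for _ in range(R):
        perm = list(y)
        rng.shuffle(perm)           # permute the indices of the y sample
        if stat(x, perm) >= t0:
            exceed += 1
    return (1 + exceed) / (1 + R)

def abs_cov(x, y):
    """Stand-in statistic: absolute sample covariance of two number lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    return abs(sum((a - mx) * (b - my) for a, b in zip(x, y))) / n

x = list(range(10))
p_dep = permutation_pvalue(abs_cov, x, x, R=999, seed=1)
print(p_dep)
```

Adding one to both numerator and denominator counts the observed statistic among the replicates, so the estimate can never be smaller than \(1/(1+R)\).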

Example 1

In this example, power of tests is compared for correlated trivariate normal data with standard normal marginal distributions. The variables X, Y, and Z are each correlated standard normal. The pairwise correlations are \(\rho (X,Y)=\rho (X,Z)=\rho (Y,Z)=0.5\). The power comparison summarized in Fig. 1 shows that pdcov has higher power than pcor or partial Mantel tests.

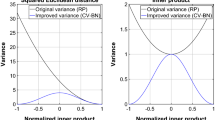

Example 2

This example presents a power comparison for correlated non-normal data. The variables X, Y, and Z are correlated; X is standard lognormal, while Y and Z are each standard normal. The pairwise correlations are \(\rho (\log X,Y)=\rho (\log X,Z)=\rho (Y,Z)=0.5\). The power comparison summarized in Fig. 2 shows that pdcov has higher power than pcor or partial Mantel tests.
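Data of the kind described in Examples 1 and 2 can be generated as in the following Python sketch. It assumes that (X, Y, Z) in Example 1, and (log X, Y, Z) in Example 2, are jointly normal with all pairwise correlations equal to 0.5; joint normality is an assumption made here for illustration, and the function names `cholesky`, `simulate`, and `corr` are hypothetical.

```python
import math
import random

def cholesky(m):
    """Lower-triangular L with L L^T = m, for a small positive definite m."""
    n = len(m)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(m[i][i] - s)
            else:
                L[i][j] = (m[i][j] - s) / L[j][j]
    return L

def simulate(n, rho=0.5, lognormal_x=False, seed=None):
    """Draw n triples (x, y, z); optionally exponentiate the first coordinate."""
    rng = random.Random(seed)
    sigma = [[1.0 if i == j else rho for j in range(3)] for i in range(3)]
    L = cholesky(sigma)
    rows = []
    for _ in range(n):
        e = [rng.gauss(0.0, 1.0) for _ in range(3)]
        # correlated standard normals: row i of L times the iid vector e
        x, y, z = (sum(L[i][k] * e[k] for k in range(i + 1)) for i in range(3))
        rows.append((math.exp(x) if lognormal_x else x, y, z))
    return rows

def corr(u, v):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    suv = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    suu = sum((a - mu) ** 2 for a in u)
    svv = sum((b - mv) ** 2 for b in v)
    return suv / math.sqrt(suu * svv)

normal_data = simulate(20000, seed=1)                       # Example 1 setting
lognormal_data = simulate(2000, lognormal_x=True, seed=2)   # Example 2 setting
xs, ys, zs = zip(*normal_data)
print(corr(xs, ys), corr(xs, zs), corr(ys, zs))
```

The printed sample correlations should each be close to 0.5, up to sampling error.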

References

Cailliez, F.: The analytical solution of the additive constant problem. Psychometrika 48, 343–349 (1983)

Cox, T.F., Cox, M.A.A.: Multidimensional Scaling, 2nd edn. Chapman and Hall (2001)

Feuerverger, A.: A consistent test for bivariate dependence. Int. Stat. Rev. 61, 419–433 (1993)

Lyons, R.: Distance covariance in metric spaces. Ann. Probab. 41(5), 3284–3305 (2013)

Mantel, N.: The detection of disease clustering and a generalized regression approach. Cancer Res. 27, 209–220 (1967)

Mardia, K.V.: Some properties of classical multidimensional scaling. Commun. Stat. Theory and Methods 7(13), 1233–1241 (1978)

Mardia, K.V., Kent, J.T., Bibby, J.M.: Multivariate Analysis. Academic Press, London (1979)

R Core Team: R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria (2013). http://www.R-project.org/

Rizzo, M.L., Székely, G.J.: energy: E-statistics (energy statistics). R package version 1.6.1 (2014). http://CRAN.R-project.org/package=energy

Rizzo, M.L., Székely, G.J.: pdcor: Partial distance correlation. R package version 1, (2013)

Székely, G.J., Rizzo, M.L., Bakirov, N.K.: Measuring and testing independence by correlation of distances. Ann. Stat. 35(6), 2769–2794 (2007). doi:10.1214/009053607000000505

Székely, G.J., Rizzo, M.L.: Brownian distance covariance. Ann. Appl. Stat. 3(4), 1236–1265 (2009). doi:10.1214/09-AOAS312

Székely, G.J., Rizzo, M.L.: On the uniqueness of distance covariance. Stat. Probab. Lett. 82(12), 2278–2282 (2012). doi:10.1016/j.spl.2012.08.007

Székely, G.J., Rizzo, M.L.: Energy statistics: statistics based on distances. J. Stat. Plan. Inference 143(8), 1249–1272 (2013). doi:10.1016/j.jspi.2013.03.018

Székely, G.J., Rizzo, M.L.: Partial distance correlation with methods for dissimilarities. Ann. Stat. 42(6), 2382–2412 (2014). doi:10.1214/14-AOS1255

Acknowledgments

Research of the first author was supported by the National Science Foundation, while working at the Foundation.

© 2016 Springer International Publishing Switzerland

Cite this paper

Székely, G.J., Rizzo, M.L. (2016). Partial Distance Correlation. In: Cao, R., González Manteiga, W., Romo, J. (eds) Nonparametric Statistics. Springer Proceedings in Mathematics & Statistics, vol 175. Springer, Cham. https://doi.org/10.1007/978-3-319-41582-6_13

DOI: https://doi.org/10.1007/978-3-319-41582-6_13

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-41581-9

Online ISBN: 978-3-319-41582-6

eBook Packages: Mathematics and Statistics (R0)