Abstract

In this paper, a modification of the well-known Silhouette validity index is proposed. This index, which can be regarded as a measure of the accuracy of a data set partitioning, is very popular and widely used by researchers. The proposed modification adds a component to the original index. This improves the performance of the index and yields better results during a clustering process, especially when cluster separability varies greatly. The new version of the index is called the SILA index, and its maximum value identifies the best clustering scheme. The performance of the new index is demonstrated on several data sets, with a popular algorithm, namely the Complete-linkage algorithm, applied as the underlying clustering technique. The results demonstrate the superiority of the new approach over the original Silhouette validity index.

A. Krzyżak carried out this research at WUT during his sabbatical leave from Concordia University.

1 Introduction

Clustering partitions data into homogeneous subsets (called clusters), such that elements within a cluster are similar to each other and different from elements in other clusters. It is also called unsupervised learning or unsupervised classification. A large number of clustering algorithms now exist and are used in various fields such as data mining, bioinformatics and data exploration. Clustering methods can also be applied to the design of neural networks and neuro-fuzzy systems [2–10, 16–18, 30–32, 37, 44]. However, the results of clustering algorithms strongly depend on the right choice of input parameters, so for the same data a clustering algorithm can produce different results for different input parameters. In particular, the number of clusters is a significant input parameter of many clustering algorithms and is often selected in advance. Thus, the key issue is how to properly evaluate the results of data clustering. In the literature, three main approaches are used to evaluate the partitioning of data sets: external, internal and relative [13, 38]. Relative methods are very popular and widely used. In this approach, a clustering algorithm partitions the data for different values of its input parameters, and the resulting partitioning schemes are then compared to find the best one. Cluster validity indices are used for this purpose, and a great number of such indices have been introduced so far, e.g., [1, 11, 12, 14, 22, 39, 40, 45–47].

In this paper, a cluster validity index called the SILA index, which is a modification of the Silhouette index, is proposed. This modification improves the performance of the index. The Silhouette index is widely used to evaluate clustering results; unfortunately, in some cases it fails to detect the correct partitioning of a data set. This problem is explained in detail in Sect. 2. The proposed SILA index contains a component which corrects the index value when cluster separability changes considerably during the partitioning process (see Eq. (10)). To demonstrate the effectiveness of the new validity index, several experiments were performed on various data sets. The paper is organized as follows: Sect. 2 presents the Silhouette index and a detailed description of its properties. Section 3 describes the new validity index, which is a modification of the Silhouette index. Section 4 presents experimental results on artificial and real-life data sets. Finally, Sect. 5 presents conclusions.

2 Description of the Silhouette Index

Let us denote a K-partition scheme of a data set X by \(C = \{C_1, C_2, \dots, C_K\}\), where \(C_k\) indicates the \(k\)-th cluster, \(k = 1, \dots, K\). Moreover, the mean of within-cluster distances, denoted \(a(\mathbf{x})\), is defined as the average distance between a pattern \(\mathbf{x}\) belonging to \(C_k\) and the remaining patterns \(\mathbf{x}_k\) of this cluster:
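\[ a(\mathbf{x}) = \frac{1}{n_k - 1} \sum_{\mathbf{x}_k \in C_k} d(\mathbf{x}, \mathbf{x}_k) \tag{1} \]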
where \(n_k\) is the number of patterns in \(C_k\) and \(d(\mathbf{x}, \mathbf{x}_k)\) is the distance between \(\mathbf{x}\) and \(\mathbf{x}_k\). Furthermore, the mean distance \(\delta(\mathbf{x}, \mathbf{x}_l)\) of \(\mathbf{x}\) to the patterns \(\mathbf{x}_l\) belonging to the cluster \(C_l\), where \(l = 1, \dots, K\) and \(l \ne k\), can be written as:
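\[ \delta(\mathbf{x}, \mathbf{x}_l) = \frac{1}{n_l} \sum_{\mathbf{x}_l \in C_l} d(\mathbf{x}, \mathbf{x}_l) \tag{2} \]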
where \(n_l\) is the number of patterns in \(C_l\). Thus, the smallest of these mean distances, \(b(\mathbf{x})\), can be defined as:
\[ b(\mathbf{x}) = \min_{l = 1, \dots, K,\; l \ne k} \delta(\mathbf{x}, \mathbf{x}_l) \tag{3} \]
The so-called silhouette width of the pattern \({\mathbf{x}}\) can be expressed as follows:
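\[ S(\mathbf{x}) = \frac{b(\mathbf{x}) - a(\mathbf{x})}{\max\{a(\mathbf{x}), b(\mathbf{x})\}} \tag{4} \]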
Finally, the Silhouette index is defined as:
\[ SIL = \frac{1}{n} \sum_{\mathbf{x} \in X} S(\mathbf{x}) \tag{5} \]
where \(n\) is the number of patterns in the data set \(X\). The index can also be written as:
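\[ SIL = \frac{1}{n} \sum_{\mathbf{x} \in X} \frac{b(\mathbf{x}) - a(\mathbf{x})}{\max\{a(\mathbf{x}), b(\mathbf{x})\}} \tag{6} \]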
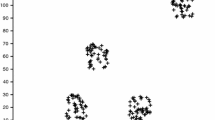
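Eqs. (1)–(5) can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation. It assumes the Euclidean distance for \(d(\cdot,\cdot)\) (the distance used in Sect. 4) and patterns given as coordinate tuples. For singleton clusters, where Eq. (1) is undefined, it sets \(S(\mathbf{x}) = 0\), following Rousseeuw's convention; this choice is an assumption not stated in the paper. The names `silhouette_widths` and `sil_index` are hypothetical.

```python
import math
from collections import defaultdict


def silhouette_widths(points, labels):
    """Return S(x) for every pattern, following Eqs. (1)-(4).

    points: list of equal-length coordinate tuples; labels: cluster id per pattern.
    Euclidean distance is assumed for d(x, y).
    """
    clusters = defaultdict(list)
    for idx, lab in enumerate(labels):
        clusters[lab].append(idx)
    if len(clusters) < 2:
        raise ValueError("the silhouette needs at least two clusters")

    widths = []
    for i, x in enumerate(points):
        own = clusters[labels[i]]
        if len(own) == 1:
            # Singleton: a(x) is undefined (n_k - 1 = 0). Assumed convention: S(x) = 0.
            widths.append(0.0)
            continue
        # Eq. (1): mean distance to the other n_k - 1 members of the same cluster.
        a = sum(math.dist(x, points[j]) for j in own if j != i) / (len(own) - 1)
        # Eqs. (2)-(3): mean distance to each other cluster, then the smallest one.
        b = min(
            sum(math.dist(x, points[j]) for j in members) / len(members)
            for lab, members in clusters.items()
            if lab != labels[i]
        )
        # Eq. (4): silhouette width, always in [-1, 1].
        denom = max(a, b)
        widths.append((b - a) / denom if denom > 0 else 0.0)
    return widths


def sil_index(points, labels):
    """Eq. (5): SIL is the mean silhouette width over the data set."""
    widths = silhouette_widths(points, labels)
    return sum(widths) / len(widths)
```

For example, `sil_index([(0, 0), (0, 1), (10, 0), (10, 1)], [0, 0, 1, 1])` is about 0.90, whereas the mislabelled partition `[0, 1, 0, 1]` gives a negative value (about −0.45), in line with the interpretation of \(S(\mathbf{x})\) given below.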

The Silhouette index is also called the SIL index. Unlike most validity indices, the SIL index can be used for clusters of arbitrary shapes. The index is based on two components, \(b(\mathbf x)\) and \(a(\mathbf x)\). As given above, the first component is the smallest of the mean distances of \(\mathbf{x}\) to the patterns belonging to other clusters, and \(a(\mathbf x)\) is the average distance between \(\mathbf x\) and the remaining patterns of the same cluster. Thus, \(a(\mathbf x)\) can be considered a measure of cluster compactness, whereas the numerator of \(S(\mathbf x)\), i.e., the difference between \(b(\mathbf x)\) and \(a(\mathbf x)\), can be considered a measure of cluster separability (see Eq. (4)). The silhouette width takes values in the interval \([-1, 1]\): the pattern \(\mathbf x\) is assigned to the right cluster when \(S(\mathbf x)\) is close to 1, and it is located in a wrong cluster when \(S(\mathbf x)\) is close to \(-1\). Hence, the maximum value of the Silhouette index indicates the right partitioning scheme. However, the measure of cluster separability (the numerator in Eq. (6)) strongly influences the value of this index, and in some cases the index can fail to detect the correct partitioning. This can happen, for example, when the distances between clusters differ greatly. Figure 1 presents an example of a 2-dimensional data set containing three clusters, labelled 1, 2 and 3, each with several elements and with very different distances between them: the distance between clusters 1 and 2 is about d1, between clusters 2 and 3 it is d2, and between clusters 3 and 1 it is d3, where d1 (or d3) is much larger than d2.
Let us denote by \(c^*\) the correct number of clusters in the data set, so here \(c^*=3\). When the number of clusters K is greater than \(c^*\), the naturally existing compact clusters are subdivided into smaller ones by the clustering algorithm. In this case, the minimum distance between clusters is small, which also makes the index value small (see Eq. (4)). When \(K=c^*\), \(b(\mathbf x)\) is about d1 for \(\mathbf x\) belonging to cluster 1, whereas it is about d2 for \(\mathbf x\) belonging to cluster 2 (or 3). Consequently, the large distance between clusters 1 and 2 (or 1 and 3) makes \(b(\mathbf x)\) much higher than \(a(\mathbf x)\) for the patterns of cluster 1, and the Silhouette index is high (see Eq. (6)). However, when \(K<c^*\), the value of the index can be even higher than for \(K=c^*\). This is because clusters 2 and 3 are merged, and the two resulting clusters are far from each other. This means that \(b(\mathbf x)\) is large for both clusters in comparison to \(a(\mathbf x)\), which increases only moderately. Consequently, the sum of the silhouette widths can be higher for \(K<c^*\) than for \(K=c^*\). Thus, due to large differences between cluster distances, the index can indicate an incorrect number of clusters. In the next section, a modification of the index is proposed to overcome this drawback.
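The effect described above can be reproduced numerically. The sketch below uses a hypothetical 1-dimensional data set, not the data of Fig. 1: three clusters of two patterns each, with cluster 1 about 100 units from cluster 2 (playing the role of d1) and clusters 2 and 3 about 10 units apart (playing the role of d2). It compares SIL for the correct partition (\(K=c^*=3\)) with the partition in which clusters 2 and 3 are merged (\(K=2\)). It assumes the Euclidean distance and that every cluster has at least two patterns.

```python
import math


def sil_index(points, labels):
    """SIL (Eq. (5)) with Euclidean distance; assumes every cluster has >= 2 patterns."""
    groups = {}
    for p, lab in zip(points, labels):
        groups.setdefault(lab, []).append(p)
    total = 0.0
    for x, lab in zip(points, labels):
        own = groups[lab]
        # Eq. (1): d(x, x) = 0, so summing over the whole cluster is safe.
        a = sum(math.dist(x, y) for y in own) / (len(own) - 1)
        # Eqs. (2)-(3): smallest mean distance to another cluster.
        b = min(sum(math.dist(x, y) for y in g) / len(g)
                for other, g in groups.items() if other != lab)
        total += (b - a) / max(a, b)  # Eq. (4)
    return total / len(points)


# Hypothetical data: cluster 1 far from clusters 2 and 3, which are close together.
points = [(0.0,), (1.0,), (100.0,), (101.0,), (110.0,), (111.0,)]
correct = [1, 1, 2, 2, 3, 3]  # K = c* = 3
merged = [1, 1, 2, 2, 2, 2]   # K = 2: clusters 2 and 3 merged

print(f"SIL(K=3) = {sil_index(points, correct):.3f}")  # SIL(K=3) = 0.930
print(f"SIL(K=2) = {sil_index(points, merged):.3f}")   # SIL(K=2) = 0.952
```

Although \(K=3\) is the correct partition, the merged partition scores higher: merging clusters 2 and 3 raises \(b(\mathbf{x})\) for their patterns from roughly 10 to roughly 100, while \(a(\mathbf{x})\) grows only from 1 to about 7.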

3 Modification of the Silhouette Index

The modification introduces an additional component which corrects the values of the index. The new index, called the SILA index, is defined as follows:

where \(S(\mathbf{x})\) is the silhouette width given by Eq. (4), and the additional component \(A(\mathbf{x})\) is expressed as:

Thus, the new index can be represented in the following way:

or

In the next section the results of the experimental studies are presented to confirm the effectiveness of this approach.
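The sketch below only illustrates the structure of the SILA index under stated assumptions. It assumes that the index averages the product \(S(\mathbf{x})\,A(\mathbf{x})\) over all patterns, and it treats \(A(\mathbf{x})\) as a function of the compactness measure \(a(\mathbf{x})\), in line with the description in Sect. 5. The default `placeholder_correction`, \(1/(1+a(\mathbf{x}))\), is a hypothetical stand-in that merely decreases as \(a(\mathbf{x})\) grows; it is not taken from Eq. (8) and should be replaced by the published formula. All function names are hypothetical.

```python
import math


def silhouette_terms(points, labels):
    """Return a list of (S(x), a(x)) pairs per Eqs. (1)-(4).

    Euclidean distance; every cluster is assumed to have at least two patterns.
    """
    groups = {}
    for p, lab in zip(points, labels):
        groups.setdefault(lab, []).append(p)
    terms = []
    for x, lab in zip(points, labels):
        own = groups[lab]
        a = sum(math.dist(x, y) for y in own) / (len(own) - 1)  # Eq. (1)
        b = min(sum(math.dist(x, y) for y in g) / len(g)        # Eqs. (2)-(3)
                for other, g in groups.items() if other != lab)
        terms.append(((b - a) / max(a, b), a))                    # Eq. (4) and a(x)
    return terms


def placeholder_correction(a):
    """HYPOTHETICAL stand-in for A(x): decreases as the compactness measure a(x) grows.

    Not taken from the paper's Eq. (8); substitute the published formula.
    """
    return 1.0 / (1.0 + a)


def sila_index(points, labels, correction=placeholder_correction):
    """ASSUMED structure: mean over all patterns of S(x) * A(x)."""
    terms = silhouette_terms(points, labels)
    return sum(s * correction(a) for s, a in terms) / len(terms)


pts = [(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0)]
labs = [0, 0, 1, 1]
# With A(x) = 1 the sketch reduces to the plain Silhouette index.
print(sila_index(pts, labs, correction=lambda a: 1.0))  # about 0.900
# Here a(x) = 1 for every pattern, so the placeholder gives A(x) = 0.5.
print(sila_index(pts, labs))  # about 0.450
```

Passing the correction as a parameter keeps the silhouette part fixed, so the published \(A(\mathbf{x})\) can be dropped in without touching the rest of the code.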

4 Experimental Results

Several experiments were carried out to verify the effectiveness of the new index. They concern determining the number of clusters in artificial and real-life data sets, with the Complete-linkage algorithm applied as the underlying clustering method. In all the experiments, the Euclidean distance and min-max data normalization were used. This approach is often applied, e.g., in the Weka machine learning toolkit [43].
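The preprocessing described above can be sketched as follows: each feature is rescaled to \([0, 1]\) with min-max normalization, and patterns are then compared with the Euclidean distance. This is a minimal stand-alone illustration, not Weka's implementation; mapping a constant feature to 0 is an assumption made for this sketch, since the text does not say how such features are handled. The function name `min_max_normalize` is hypothetical.

```python
import math


def min_max_normalize(points):
    """Rescale each feature to [0, 1]: (v - min) / (max - min).

    Constant features are mapped to 0.0 (an assumption made for this sketch).
    """
    dims = range(len(points[0]))
    lows = [min(p[d] for p in points) for d in dims]
    highs = [max(p[d] for p in points) for d in dims]
    return [
        tuple((p[d] - lows[d]) / (highs[d] - lows[d]) if highs[d] > lows[d] else 0.0
              for d in dims)
        for p in points
    ]


data = [(0.0, 10.0), (5.0, 20.0), (10.0, 30.0)]
normalized = min_max_normalize(data)
print(normalized)  # [(0.0, 0.0), (0.5, 0.5), (1.0, 1.0)]
# Euclidean distance between the first and last normalized patterns.
print(math.dist(normalized[0], normalized[-1]))  # about 1.414, i.e. sqrt(2)
```

Normalizing first keeps features with large numeric ranges from dominating the Euclidean distance, and therefore \(a(\mathbf{x})\) and \(b(\mathbf{x})\).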

4.1 Data Sets

Figures 2 and 3 show the randomly generated artificial data sets used in the experiments, and Table 1 presents their detailed description. These sets contain various numbers of clusters and elements per cluster. The first three, called Data 1, Data 2 and Data 3, are 2-dimensional with 3, 5 and 8 clusters, respectively. The next three, called Data 4, Data 5 and Data 6, are 3-dimensional with 4, 7 and 9 clusters, respectively. As can be observed in Figs. 2 and 3, the clusters are mostly circular and located at various distances from each other, some of them being quite close. For example, in Fig. 2 the cluster sizes and the distances between clusters are very different, and the clusters generally form two groups. Figure 3 presents the 3-dimensional data sets, which contain large clusters of various sizes; here, too, the distances between clusters are very different and the clusters form several groups. The real-life data sets were drawn from the UCI repository [20], and their detailed description is presented in Table 2. In all experiments, the Complete-linkage method was used as the underlying clustering algorithm. The number of clusters K was varied from \(K_{max} = \sqrt{n}\) to \(K_{min} = 1\); setting \(K_{max} = \sqrt{n}\) is an accepted rule in the clustering literature [23]. Figures 4, 5 and 6 compare the variations of the Silhouette and SILA indices with respect to the number of clusters. It can be seen that the SILA index provides the correct number of clusters for all the artificial data sets. In contrast, the Silhouette index selects incorrect partitioning schemes, mostly showing a high, distinct peak at \(K=2\).
This means that when the clustering algorithm merges clusters into larger ones that are far apart, the separability measure has a dominant influence and, consequently, the Silhouette index provides incorrect results. On the other hand, even though the distances between clusters differ considerably, the SILA index produces clear peaks corresponding to the correct partitioning of these data. For the real-life data sets, both indices found the right number of clusters for the Iris data. However, for the Ecoli and Glass data the Silhouette index indicates \(K=2\), whereas the SILA index provides a better result for the Glass data, i.e., \(K=5\). Thus, for these sets the number of clusters is determined more precisely by the SILA index. Note that when \(K>c^*\), the component \(A(\mathbf{x})\) reduces the index values only slightly, because the clusters are relatively small.
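The experimental protocol (partitioning the data with Complete-linkage for each K and keeping the K that maximizes a validity index) can be sketched as below. This is a naive \(O(n^3)\) illustration for small data sets, not the authors' code. Two assumptions differ from the text: \(K_{max}\) is taken as \(\lfloor\sqrt{n}\rfloor\) (at least 2), and the sweep stops at \(K=2\), because the silhouette width is undefined for a single cluster (there is no other cluster from which to compute \(b(\mathbf{x})\)). Singleton clusters contribute \(S(\mathbf{x})=0\) by convention. The `index` argument allows a SILA implementation to be plugged in instead of `sil_index`; all names and the data are hypothetical.

```python
import math


def complete_linkage(points, k_min, k_max):
    """Naive agglomerative clustering; returns {K: labels} for k_min <= K <= k_max."""
    clusters = [[i] for i in range(len(points))]
    partitions = {}
    while True:
        if len(clusters) <= k_max:
            labels = [0] * len(points)
            for c, members in enumerate(clusters):
                for i in members:
                    labels[i] = c
            partitions[len(clusters)] = labels
        if len(clusters) <= k_min:
            return partitions
        best = None
        for p in range(len(clusters)):
            for q in range(p + 1, len(clusters)):
                # Complete linkage: distance of the farthest pair of patterns.
                dist = max(math.dist(points[i], points[j])
                           for i in clusters[p] for j in clusters[q])
                if best is None or dist < best[0]:
                    best = (dist, p, q)
        _, p, q = best
        clusters[p].extend(clusters[q])  # merge the closest pair of clusters
        del clusters[q]                  # q > p, so index p stays valid


def sil_index(points, labels):
    """SIL of Eq. (5); singleton clusters contribute S(x) = 0 (convention)."""
    groups = {}
    for i, lab in enumerate(labels):
        groups.setdefault(lab, []).append(i)
    total = 0.0
    for i, lab in enumerate(labels):
        own = groups[lab]
        if len(own) == 1:
            continue
        a = sum(math.dist(points[i], points[j]) for j in own) / (len(own) - 1)
        b = min(sum(math.dist(points[i], points[j]) for j in g) / len(g)
                for other, g in groups.items() if other != lab)
        total += (b - a) / max(a, b)
    return total / len(points)


def select_k(points, index=sil_index):
    """Sweep K from floor(sqrt(n)) down to 2 and return the K maximizing the index."""
    k_max = max(2, math.isqrt(len(points)))
    partitions = complete_linkage(points, 2, k_max)
    scores = {k: index(points, labels) for k, labels in partitions.items()}
    return max(scores, key=scores.get), scores


# Hypothetical data: three clusters, the first one far from the other two.
points = [(0.0,), (1.0,), (2.0,), (100.0,), (101.0,), (102.0,),
          (110.0,), (111.0,), (112.0,)]
best_k, scores = select_k(points)
print(best_k, {k: round(s, 3) for k, s in scores.items()})  # 2 {3: 0.906, 2: 0.954}
```

On this toy set Complete-linkage recovers the three clusters at \(K=3\), yet SIL prefers \(K=2\), reproducing the failure discussed in Sect. 2.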

5 Conclusions

In this paper, a new cluster validity index called the SILA index was proposed. This index is a modification of the Silhouette index, which is very often used to evaluate the partitioning of data. Like the Silhouette index, and unlike most other indices, the SILA index can be used for arbitrarily shaped clusters. As mentioned above, the Silhouette index can indicate an incorrect partitioning scheme when the distances between clusters in a data set differ greatly. Therefore, the new index contains an additional component which improves its performance and overcomes this drawback. This component uses a measure of cluster compactness, which increases considerably when clusters become much larger, and thereby reduces the high index values caused by large differences between cluster distances. To investigate the behaviour of the proposed validity index, the Complete-linkage algorithm was used as the underlying clustering algorithm. All the presented results confirm the high effectiveness of the SILA index. Cluster validity indices can also be used in the design of various neuro-fuzzy structures [15, 19, 21, 24–29, 41, 42] and stream data mining algorithms [33–36].

References

Baskir, M.B., Türksen, I.B.: Enhanced fuzzy clustering algorithm and cluster validity index for human perception. Expert Syst. Appl. 40, 929–937 (2013)

Bas, E.: The training of multiplicative neuron model based artificial neural networks with differential evolution algorithm for forecasting. J. Artif. Intell. Soft Comput. Res. 6(1), 5–11 (2016)

Bertini, J.R., Nicoletti, M.C.: Enhancing constructive neural network performance using functionally expanded input data. J. Artif. Intell. Soft Comput. Res. 6(2), 119–131 (2016)

Bilski, J., Smolag, J.: Parallel architectures for learning the RTRN and Elman dynamic neural networks. IEEE Trans. Parallel Distrib. Syst. 26(9), 2561–2570 (2015)

Bilski, J., Smoląg, J., Galushkin, A.I.: The parallel approach to the conjugate gradient learning algorithm for the feedforward neural networks. In: Rutkowski, L., Korytkowski, M., Scherer, R., Tadeusiewicz, R., Zadeh, L.A., Zurada, J.M. (eds.) ICAISC 2014, Part I. LNCS, vol. 8467, pp. 12–21. Springer, Heidelberg (2014)

Bilski, J., Smoląg, J.: Parallel approach to learning of the recurrent Jordan neural network. In: Rutkowski, L., Korytkowski, M., Scherer, R., Tadeusiewicz, R., Zadeh, L.A., Zurada, J.M. (eds.) ICAISC 2013, Part I. LNCS, vol. 7894, pp. 32–40. Springer, Heidelberg (2013)

Bilski, J., Smolag, J.: Parallel Realisation of the Recurrent Multi Layer Perceptron Learning. In: Rutkowski, L., Korytkowski, M., Scherer, R., Tadeusiewicz, R., Zadeh, L.A., Zurada, J.M. (eds.) ICAISC 2012, Part I. LNCS, vol. 7267, pp. 12–20. Springer, Heidelberg (2012)

Cpalka, K., Rutkowski, L.: Flexible Takagi-Sugeno fuzzy systems. In: Proceedings of the International Joint Conference on Neural Networks (IJCNN), vols. 1–5, pp. 1764–1769 (2005)

Cpałka, K., Rebrova, O., Nowicki, R., Rutkowski, L.: On design of flexible neuro-fuzzy systems for nonlinear modelling. Int. J. Gen Syst 42(6), 706–720 (2013)

Duch, W., Korbicz, J., Rutkowski, L., Tadeusiewicz, R. (eds.): Biocybernetics and Biomedical Engineering 2000. Neural Networks, vol. 6. Akademicka Oficyna Wydawnicza EXIT (2000)

Fränti, P., Rezaei, M., Zhao, Q.: Centroid index: cluster level similarity measure. Pattern Recognit. 47(9), 3034–3045 (2014)

Fred, A.L.N., Leitão, J.M.N.: A new cluster isolation criterion based on dissimilarity increments. IEEE Trans. Pattern Anal. Mach. Intell. 25(8), 944–958 (2003)

Jain, A., Dubes, R.: Algorithms for Clustering Data. Prentice-Hall, Englewood Cliffs (1988)

Kim, M., Ramakrishna, R.S.: New indices for cluster validity assessment. Pattern Recogn. Lett. 26(15), 2353–2363 (2005)

Korytkowski, M., Rutkowski, L., Scherer, R.: Fast image classification by boosting fuzzy classifiers. Inf. Sci. 327, 175–182 (2016)

Korytkowski, M., Rutkowski, L., Scherer, R.: From ensemble of fuzzy classifiers to single fuzzy rule base classifier. In: Rutkowski, L., Tadeusiewicz, R., Zadeh, L.A., Zurada, J.M. (eds.) ICAISC 2008. LNCS (LNAI), vol. 5097, pp. 265–272. Springer, Heidelberg (2008)

Koshiyama, A.S., Vellasco, M., Tanscheit, R.: GPFIS-Control: a genetic fuzzy system for control tasks. J. Artif. Intell. Soft Comput. Res. 4(3), 167–179 (2014)

Laskowski, L., Jelonkiewicz, J.: Self-correcting neural network for stereo-matching problem solving. Fundamenta Informaticae 138(4), 457–482 (2015)

Li, X., Er, M.J., Lim, B.S., et al.: Fuzzy regression modeling for tool performance prediction and degradation detection. Int. J. Neural Syst. 20(5), 405–419 (2010)

Lichman, M.: UCI Machine Learning Repository. University of California, School of Information and Computer Science, Irvine (2013). http://archive.ics.uci.edu/ml

Miyajima, H., Shigei, N., Miyajima, H.: Performance comparison of hybrid electromagnetism-like mechanism algorithms with descent method. J. Artif. Intell. Soft Comput. Res. 5(4), 271–282 (2015)

Ozkan, I., Türksen, I.B.: MiniMax \(\varepsilon \)-stable cluster validity index for Type-2 fuzziness. Inf. Sci. 184(1), 64–74 (2012)

Pal, N.R., Bezdek, J.C.: On cluster validity for the fuzzy c-means model. IEEE Trans. Fuzzy Syst. 3(3), 370–379 (1995)

Patgiri, C., Sarma, M., Sarma, K.K.: A class of neuro-computational methods for Assamese fricative classification. J. Artif. Intell. Soft Comput. Res. 5(1), 59–70 (2015)

Rigatos, G., Siano, P.: Flatness-based adaptive fuzzy control of spark-ignited engines. J. Artif. Intell. Soft Comput. Res. 4(4), 231–242 (2014)

Rutkowski, L., Cpalka, K.: Flexible neuro-fuzzy systems. IEEE Trans. Neural Networks 14(3), 554–574 (2003)

Rutkowski, L., Przybyl, A., Cpalka, K.: Novel online speed profile generation for industrial machine tool based on flexible neuro-fuzzy approximation. IEEE Trans. Industr. Electron. 59(2), 1238–1247 (2012)

Rutkowski, L., Cpalka, K.: Designing and learning of adjustable quasi-triangular norms with applications to neuro-fuzzy systems. IEEE Trans. Fuzzy Syst. 13(1), 140–151 (2005)

Rutkowski, L., Cpalka, K.: A general approach to neuro-fuzzy systems. In: 10th IEEE International Conference on Fuzzy Systems, vols. 1–3: Meeting the Grand Challenge: Machines that Serve People, pp. 1428–1431 (2001)

Rutkowski, L., Cpalka, K.: A neuro-fuzzy controller with a compromise fuzzy reasoning. Control Cybern. 31(2), 297–308 (2002)

Rutkowski, L., Przybył, A., Cpałka, K., Er, M.J.: Online Speed Profile Generation for Industrial Machine Tool Based on Neuro-fuzzy Approach. In: Rutkowski, L., Scherer, R., Tadeusiewicz, R., Zadeh, L.A., Zurada, J.M. (eds.) ICAISC 2010, Part II. LNCS, vol. 6114, pp. 645–650. Springer, Heidelberg (2010)

Rutkowski, L., Cpalka, K.: Compromise approach to neuro-fuzzy systems. Technol. Book Ser. Frontiers Artif. Intell. Appl. 76, 85–90 (2002)

Rutkowski, L., Pietruczuk, L., Duda, P., Jaworski, M.: Decision trees for mining data streams based on the McDiarmid's bound. IEEE Trans. Knowl. Data Eng. 25(6), 1272–1279 (2013)

Rutkowski, L., Jaworski, M., Pietruczuk, L., Duda, P.: Decision trees for mining data streams based on the Gaussian approximation. IEEE Trans. Knowl. Data Eng. 26(1), 108–119 (2014)

Rutkowski, L., Jaworski, M., Pietruczuk, L., Duda, P.: A new method for data stream mining based on the misclassification error. IEEE Trans. Neural Networks Learn. Syst. 26(5), 1048–1059 (2015)

Rutkowski, L., Jaworski, M., Pietruczuk, L., Duda, P.: The CART decision tree for mining data streams. Inf. Sci. 266, 1–15 (2014)

Saitoh, D., Hara, K.: Mutual learning using nonlinear perceptron. J. Artif. Intell. Soft Comput. Res. 5(1), 71–77 (2015)

Sameh, A.S., Asoke, K.N.: Development of assessment criteria for clustering algorithms. Pattern Anal. Appl. 12(1), 79–98 (2009)

Shieh, H.-L.: Robust validity index for a modified subtractive clustering algorithm. Appl. Soft Comput. 22, 47–59 (2014)

Starczewski, A.: A new validity index for crisp clusters. Pattern Anal. Appl. (2015). doi:10.1007/s10044-015-0525-8

Starczewski, J., Rutkowski, L.: Interval type 2 neuro-fuzzy systems based on interval consequents. In: Rutkowski, L., Kacprzyk, J. (eds.) Neural Networks and Soft Computing. Advances in Soft Computing, pp. 570–577. Springer-Verlag, Physica-Verlag HD, Heidelberg (2003)

Starczewski, J.T., Rutkowski, L.: Connectionist structures of type 2 fuzzy inference systems. In: Wyrzykowski, R., Dongarra, J., Paprzycki, M., Waśniewski, J. (eds.) PPAM 2001. LNCS, vol. 2328, p. 634. Springer, Heidelberg (2002)

Weka 3: Data mining software in Java. University of Waikato, New Zealand. http://www.cs.waikato.ac.nz/ml/weka

Wozniak, M., Polap, D., Nowicki, R., Napoli, C., Pappalardo, G., Tramontana, E.: Novel approach toward medical signals classifier. In: 2015 International Joint Conference on Neural Networks (IJCNN), Killarney, Ireland (2015). doi:10.1109/IJCNN.2015.7280556

Wu, K.L., Yang, M.S., Hsieh, J.N.: Robust cluster validity indexes. Pattern Recogn. 42, 2541–2550 (2009)

Zalik, K.R.: Cluster validity index for estimation of fuzzy clusters of different sizes and densities. Pattern Recogn. 43, 3374–3390 (2010)

Zhang, D., Ji, M., Yang, J., Zhang, Y., Xie, F.: A novel cluster validity index for fuzzy clustering based on bipartite modularity. Fuzzy Sets Syst. 253, 122–137 (2014)

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Starczewski, A., Krzyżak, A. (2016). A Modification of the Silhouette Index for the Improvement of Cluster Validity Assessment. In: Rutkowski, L., Korytkowski, M., Scherer, R., Tadeusiewicz, R., Zadeh, L., Zurada, J. (eds) Artificial Intelligence and Soft Computing. ICAISC 2016. Lecture Notes in Computer Science, vol. 9693. Springer, Cham. https://doi.org/10.1007/978-3-319-39384-1_10

DOI: https://doi.org/10.1007/978-3-319-39384-1_10

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-39383-4

Online ISBN: 978-3-319-39384-1

eBook Packages: Computer Science, Computer Science (R0)