Abstract

The growing need for dealing with big data has made it necessary to find computationally efficient methods for identifying important factors to be considered in statistical modeling. In the linear model, the Lasso is an effective way of selecting variables using penalized regression. It has spawned substantial research in the area of variable selection for models that depend on a linear combination of predictors. However, work addressing the lack of optimality of variable selection when the model errors are not Gaussian and/or when the data contain gross outliers is scarce. We propose the weighted signed-rank Lasso as a robust and efficient alternative to least absolute deviations and least squares Lasso. The approach is appealing for use with big data since one can use data augmentation to perform the estimation as a single weighted L 1 optimization problem. Selection and estimation consistency are theoretically established and evaluated via simulation studies. The results confirm the optimality of the rank-based approach for data with heavy-tailed and contaminated errors or data containing high-leverage points.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

2.1 Introduction

The growing need for dealing with ‘big data’ has made it necessary to find ways of determining the few important factors to consider in the statistical modeling. In the linear and generalized linear models, this translates to identifying the covariates that are most needed in the prediction of the outcome. In this regard, the Lasso method introduced in Tibshirani (1996) has garnered significant attention in the past two decades. The Lasso method takes advantage of the singularity of the L 1 penalty to effectively select variables via the penalized least squares procedure. This work has been refined and extended in various directions. See, for example, Fan and Li (2001), Zou and Hastie (2005), Zou (2006), Wang and Leng (2008), and references therein. Much of the focus has been in establishing the so-called “Oracle” property Fan and Li (2001) that consists of selection consistency and estimation efficiency. These are both asymptotic properties where selection consistency refers to ones ability to correctly identify the zero regression coefficients while estimation efficiency refers to ones ability to provide a \(\sqrt{n}\)-consistent estimator of the non-zero coefficients.

However, there are not too many results that address the lack of optimality of these variable selection procedures when the model errors are not Gaussian and/or when the data contain gross outliers. An approach based on penalized Jaeckel-type rank-regression was discussed in Johnson and Peng (2008), Johnson et al. (2008), Johnson (2009), Leng (2010) and Xu et al. (2010). The computation is complicated and, as in unpenalized rank-regression, the approach used in these papers will only result in robustness in the response space. For variable selection, however, getting a handle on leverage is crucial. One paper that discussed this issue and tried to address the influence of high leverage points is Wang and Li (2009), where they considered penalized weighted Wilcoxon estimation. Our proposed approach based on minimization of a penalized weighted signed-rank norm is much simpler to compute and provides protection against outliers and high-leverage points. It also allows one flexibility through choice of score generating functions. One limitation of our proposed approach is that it requires symmetry of the error density. In this case, the estimates are equivalent to Jaeckel-type rank-regression estimates.

Consider the linear regression model given by

where \(\boldsymbol{\beta }_{0} \in \mathcal{B}\subset \mathbb{R}^{d}\) is a vector of parameters, \(\boldsymbol{x}_{i}\) is a vector of independent variables in a vector space \(\mathbb{X}\), and the errors e i are assumed to be i.i.d. with a distribution function F. Let \(\mathbf{V}_{n} =\{ (y_{1},\boldsymbol{x}_{1}),\ldots,(y_{n},\boldsymbol{x}_{n})\}\) be the set of sample data points. Note that \(\mathbf{V}_{n} \subset \mathbb{V} \equiv \mathbb{R} \times \mathbb{X}\). We shall assume that \(\mathcal{B}\) is a compact subspace of \(\mathbb{R}^{d}\), \(\boldsymbol{\beta }_{0}\) is an interior point of \(\mathcal{B}\).

Rank-based approaches have been shown to possess a high breakdown property resulting on robust and efficient estimators. The rank-based approach considered in this paper is based on the so-called the weighted signed-rank (WSR) norm proposed in Bindele and Abebe (2012) for estimation of coefficients of general nonlinear models. Here we consider WSR with added penalty for simultaneous estimation and variable selection in linear models. That is, we obtained an estimator \(\hat{\boldsymbol{\beta }}_{n}\) of \(\boldsymbol{\beta }_{0}\) satisfying

where \(Q(\boldsymbol{\beta })\) is a penalized WSR objective function

and \(D_{n}(\mathbf{V}_{n},w,\boldsymbol{\beta })\) is the WSR dispersion function defined by

Here \(z_{i}(\boldsymbol{\beta }) = y_{i} -\boldsymbol{ x}_{i}^{{\prime}}\boldsymbol{\beta }\), \(\vert z(\boldsymbol{\beta })\vert _{(i)}\) is the ith ordered value among \(\vert z_{1}(\boldsymbol{\beta })\vert,\ldots,\vert z_{n}(\boldsymbol{\beta })\vert \), and the numbers a n (i) are scores generated as \(a_{n}(i) =\varphi ^{+}(i/(n + 1))\), for some bounded and non-decreasing score function \(\varphi ^{+}: (0,1) \rightarrow \mathbb{R}^{+}\) that has at most a finite number of discontinuities. The function \(w: \mathbb{X} \rightarrow \mathbb{R}^{+}\) is a continuous weight function. The penalty function \(P_{\lambda _{j}}(\cdot )\) is defined on \(\mathbb{R}^{+}\). When the penalty function is the Lasso penalty Tibshirani (1996) \(P_{\lambda _{j}}(\vert t\vert ) =\lambda \vert t\vert \) for all j, we will refer to the resulting estimator as the WSR-Lasso (WSR-L), and when the penalty function is the adaptive Lasso Zou (2006) \(P_{\lambda _{j}}(\vert t\vert ) =\lambda _{j}\vert t\vert \), we will refer to the estimator as WSR-Adaptive Lasso (WRS-AL) estimator. We should point out that for φ + ≡ 1, the objective function in (2.3) reduces to the WLAD-Lasso discussed in Arslan (2012). If additionally w ≡ 1, then we obtain the LAD-lasso discussed in Wang et al. (2007). While these LAD based estimators are easy to compute and provide robust estimators, they lack efficiency especially when the error density at zero is small (Hettmansperger and McKean 2011; Leng 2010). Note that, while not stressed in our notation, \(\hat{\boldsymbol{\beta }}_{n}\) depends on the tuning parameter \(\boldsymbol{\lambda } = (\lambda _{1},\ldots,\lambda _{d})^{{\prime}}\).

Using the same idea in Wang et al. (2007), either under WSR-L or WSR-AL, one can write \(Q(\boldsymbol{\beta })\) as

where \(z_{i}^{{\ast}}(\boldsymbol{\beta }) = y_{i}^{{\ast}}-\boldsymbol{ x}_{i}^{{\ast}{\prime}}\boldsymbol{\beta }\) with

and

Here e i is the d-dimensional vector with ith component equal to 1 and all the others equal to 0. To this end, Eq. (2.5) can be seen as the weighted L 1 objective function. In Eq. (2.6) the WSR-L objective function is obtained by putting λ i = λ for all i. To avoid any possible confusion, we will use Q ℓ w(⋅ ) and Q aℓ w(⋅ ) for WSR-L and WSR-AL objective functions, respectively.

Remark 2.1.

Considering the unpenalized objective function \(D_{n}(\mathbf{V}_{n},w,\boldsymbol{\beta })\) defined in Eq. (2.4), asymptotic properties (consistency and \(\sqrt{n}\)-asymptotic normality) of the WSR estimator with w ≡ 1 were established under mild regularity conditions in Hössjer (1994). Considering the weighted case, analogous asymptotic results were obtained by Bindele and Abebe (2012) for general nonlinear regression model.

2.2 Asymptotics

In this section, we provide the asymptotic properties of the WSR-AL estimator defined in (2.2) under regularity conditions. Consider the following assumptions

- (I 1):

-

\(P\big(\boldsymbol{x}^{{\prime}}\boldsymbol{\beta } =\boldsymbol{ x}^{{\prime}}\boldsymbol{\beta }_{0}\big) <\alpha\) for all \(\boldsymbol{\beta }\neq \boldsymbol{\beta }_{0}\), 0 < α ≤ 1, and \(E_{G}[\vert \boldsymbol{x}\vert ^{r}] < \infty \) for some r > 1, G being the distribution of \(\boldsymbol{x}\).

- (I 2):

-

The density f of ɛ is symmetric about zero, strictly decreasing on \(\mathbb{R}^{+}\), and absolutely continuous with finite Fisher information. Its derivative f′ is bounded and E F ( | ɛ | r) < ∞ for some r > 1.

These two assumptions ensure the strong consistency of \(\tilde{\boldsymbol{\beta }}_{n}=\mathop{\mathrm{Argmin}}\limits _{\boldsymbol{\beta }}D_{n}(\mathbf{V}_{n},w,\boldsymbol{\beta })\).

2.2.1 Consistency and Asymptotic Normality

We shall assume that p 0 ≤ d of the true regression parameters are nonzero. Thus, without loss of generality, we assume β 0j ≠ 0 for j ≤ p 0 and β 0j = 0 for j > p 0. Thus \(\boldsymbol{\beta }_{0}\) can be partitioned as \(\boldsymbol{\beta }_{0} = (\boldsymbol{\beta }_{0a}^{{\prime}},\boldsymbol{\beta }_{0b}^{{\prime}})^{{\prime}}\) with \(\boldsymbol{\beta }_{0b} = \mathbf{0}\). Also, \(\hat{\boldsymbol{\beta }}_{n}\) can be similarly partitioned as \(\hat{\boldsymbol{\beta }}_{n} = (\hat{\boldsymbol{\beta }}_{na}^{{\prime}},\hat{\boldsymbol{\beta }}_{nb}^{{\prime}})^{{\prime}}\) with \(\hat{\boldsymbol{\beta }}_{na} = (\hat{\beta }_{n,1},\ldots,\hat{\beta }_{n,p_{0}})^{{\prime}}\), and \(\hat{\boldsymbol{\beta }}_{nb} = (\hat{\beta }_{n,p_{0}+1},\ldots,\hat{\beta }_{n,d})^{{\prime}}\).

Following Johnson and Peng (2008), we define

Also, under Eq. (2.5), taking the negative gradient with respect to \(\boldsymbol{\beta }\), we obtain

where \(S_{n}(\boldsymbol{\beta }) = -\nabla _{\boldsymbol{\beta }}D_{n}(\mathbf{V}_{n},w,\boldsymbol{\beta })\). In addition to (I 1) − (I 2), we will need the following assumption:

- (I 3):

-

Define \(a_{n} =\max _{1\leq j\leq p_{0}}H_{\lambda _{j}}(\vert t\vert )\quad \mbox{ and}\quad b_{n} =\min _{j>p_{0}}H_{\lambda _{j}}(\vert t\vert ),\;\;\forall \ t\ \mbox{ fixed}\), and assume that

-

(i)

\(\sqrt{n}a_{n} \rightarrow 0\) and \(\sqrt{n}b_{n} \rightarrow \infty \) as n → ∞

-

(i i)

\(\lim _{n\rightarrow \infty }\inf _{\vert t\vert \leq c/\sqrt{n}}\{\lambda _{n}^{-1}H_{\lambda _{ j}}(\vert t\vert )\} > 0\) for any c > 0.

-

(i)

Remark 2.2.

Note that for the adaptive Lasso case where \(P_{\lambda _{j}}(\vert t\vert ) =\lambda _{j}\vert t\vert \), and in assumption (I 3), a n and b n are reduced to \(a_{n} =\max _{1\leq j\leq p_{0}}\lambda _{j}\) and \(b_{n} =\min _{p_{0}+1\leq j\leq d}\lambda _{j}\), as \(H_{\lambda _{j}}(\vert t\vert ) =\lambda _{j}\). It is worth pointing out the Lasso penalty does not satisfy assumption (I 3) which is not surprising as it is well-known that the Lasso estimator does not have the oracle property, and (I 3) is key to ensuring the oracle property of the resulting estimator.

Theorem 2.1.

Under assumptions (I 1 ) − (I 3 ), \(\hat{\boldsymbol{\beta }}_{n}\) exists and is a \(\sqrt{n}\) -consistent estimator of \(\boldsymbol{\beta }_{0}\).

The proof this theorem is provided in Appendix.

Next consider the following assumption commonly imposed in the framework of signed-rank estimation, see Hössjer (1994) and Abebe et al. (2012):

- (I 4):

-

φ + ∈ C 2((0, 1)∖ E) with bounded derivatives, where E is a finite set of discontinuities.

Following Hössjer (1994), set

where \(h_{F}(u) = -f'(F^{-1}(u))/f(F^{-1}(u))\). As it is pointed out in Hössjer (1994), (I 1) and (I 2) imply that \(\zeta _{\varphi ^{+ }} > 0\). Also, letting J denote the joint distribution of \((y,\boldsymbol{x})\) and by symmetry of f, one can define a corresponding symmetric distribution as follows:

Now setting \(F_{\boldsymbol{\beta },i}(t) = \frac{1} {2}E_{G}\{\boldsymbol{x}_{i}F(t +\boldsymbol{ x}^{\tau }\boldsymbol{\beta })\}\) and \(\boldsymbol{\xi }(\boldsymbol{\beta }) = (\xi _{1}(\boldsymbol{\beta }),\ldots,\xi _{n}(\boldsymbol{\beta }))^{\tau }\), where

it is shown under (I 1) − (I 3) in Hössjer (1994) that \(S_{n}(\boldsymbol{\beta }) -\boldsymbol{\xi }(\boldsymbol{\beta }) \rightarrow 0\quad a.s.\) as n → ∞. Let \(W(\boldsymbol{x}) = diag\{w_{1}(\boldsymbol{x}),\ldots,w_{n}(\boldsymbol{x})\}\) and define the expected weighted Gram matrix \(\varSigma = E_{G}[\boldsymbol{x}^{{\prime}}W(\boldsymbol{x})\boldsymbol{x}]\). Now partition \(\boldsymbol{x}\) as \(\boldsymbol{x} = (\boldsymbol{x}_{a},\boldsymbol{x}_{b})\), according to nonzero and zero coefficients, and let Σ a denote the top left p 0 × p 0 sub-matrix of Σ. We will assume that Σ a is positive definite. The following main result gives the asymptotic properties (oracle property) of the penalized WSR estimator given in (2.2). Its proof is provided in Appendix.

Theorem 2.2.

Under assumptions (I 1 ) to (I 4 ), we have \(\lim _{n\rightarrow \infty }P(\hat{\boldsymbol{\beta }}_{nb} = \mathbf{0}) = 1\) , and

where Σ a is a p 0 × p 0 positive definite matrix.

Remark 2.3.

From the two theorems above, (i) and (ii) in assumption (I 3) together with (I 1), I 2 and (I 4) are imposed to ensure the \(\sqrt{n}\)-consistency, the oracle property and the \(\sqrt{n}\)-asymptotic normality of the proposed estimator. Note that although Theorem 2.2 is similar to that of Johnson and Peng (2008), the definitions of a n and b n given here are more general and the assumptions needed for the asymptotic normality of the gradient function \(S_{n}(\boldsymbol{\beta })\) are very different.

2.3 Some Practical Considerations

2.3.1 Estimation of the Tuning Parameter \(\boldsymbol{\lambda }\)

Another important issue in the estimation of \(\boldsymbol{\beta }_{0}\) in model (2.1), is the choice of the λ j ’s in Eq. (2.3). As proposed by Johnson et al. (2008) \(\boldsymbol{\lambda }\) can be estimated as follows

where \(e(\boldsymbol{\lambda }) = tr\big[\mathbf{X}\{\mathbf{X}^{{\prime}}\mathbf{X} +\varSigma _{\boldsymbol{\lambda },\hat{\boldsymbol{\beta }}_{n}(\boldsymbol{\lambda })}\}^{-1}\mathbf{X}^{{\prime}}\big]\) and X is the n × d matrix with column vectors \(\boldsymbol{x}_{i}\) and \(\varSigma _{\boldsymbol{\lambda },\hat{\boldsymbol{\beta }}_{n}(\boldsymbol{\lambda })}\) a diagonal matrix with entries

This cross validation procedure was considered by Johnson et al. (2008) and was shown to have advantage over the least squares cross valuation criterion that is obtained by replacing the numerator of the right hand side of Eq. (2.8) by the least squares objective function. Note that although the idea similar, the objective function \(D_{n}(\mathbf{V}_{n},w,\boldsymbol{\beta })\) considered in this paper is very different to the one considered in Johnson et al. (2008). If we restrict ourselves to WSR-AL, another alternative to estimating \(\boldsymbol{\lambda }\) is to consider the AIC and BIC approaches discussed in Wang et al. (2007) based on the considered objective function. That is, obtain \(\hat{\boldsymbol{\lambda }}\) as,

which leads to \(\hat{\lambda }_{j} = 1/(n\vert \tilde{\beta }_{nj}\vert )\), and

which leads to \(\hat{\lambda }_{j} =\log n/(n\vert \tilde{\beta }_{nj}\vert )\), where \(\tilde{\boldsymbol{\beta }}_{n} =\mathop{ \mathrm{Argmin}}\limits _{\boldsymbol{\beta }\in \mathcal{B}}D_{n}(\mathbf{V}_{n},w,\boldsymbol{\beta })\).

2.3.2 Choice of Weights

In our analysis, we choose the weight function \(w(\boldsymbol{x})\) to be

where \(d(\boldsymbol{x}) = (\boldsymbol{x} -_{\boldsymbol{x}})^{{\prime}}\mathbf{C}_{\boldsymbol{x}}^{-1}(\boldsymbol{x} -_{\boldsymbol{x}})\) is a robust Mahalanobis distance, with \(_{\boldsymbol{x}}\) and \(\mathbf{C}_{\boldsymbol{x}}\) being robust estimates of location and covariance of \(\boldsymbol{x}\), respectively and η being some positive constant usually set at χ 0. 95 2 in practice. Under this choice, it is shown in Bindele and Abebe (2012) that the resulting estimator has a bounded influence function.

2.3.3 Computational Algorithm

For computation purposes, the following steps can be followed:

-

1.

Obtain the unpenalized (W)SR estimator \(\hat{\boldsymbol{\beta }}_{\varphi ^{+}}\).

-

2.

Use \(\hat{\boldsymbol{\beta }}_{\varphi ^{+}}\).

-

3.

Form \(z^{{\ast}}(\boldsymbol{\beta },\hat{\boldsymbol{\lambda }}) = y^{{\ast}}-\boldsymbol{ x}_{\hat{\boldsymbol{\lambda }}}^{{\ast}{\prime}}\boldsymbol{\beta }\), where \(\boldsymbol{x}_{\hat{\boldsymbol{\lambda }}}^{{\ast}}\) is as defined in Eq. (2.6) with \(\boldsymbol{\lambda } =\hat{ \boldsymbol{\lambda }}\).

-

4.

Find

$$\displaystyle{\mathop{\mathrm{Argmin}}\limits _{\boldsymbol{\beta }}\sum _{i=1}^{n+d}\hat{v}_{ i}\vert z_{i}^{{\ast}}(\boldsymbol{\beta },\hat{\boldsymbol{\lambda }})\vert }$$using any weighted LAD software (e.g. quanteg, rfit in R).

2.4 Simulation and Real Data Studies

To demonstrate the performance of our proposed method, several simulation scenarios and a real data set are considered.

2.4.1 Low Dimensional Simulation

The setting for the low-dimensional simulation is taken from Tibshirani (1996). We take a sample of size n = 50 where the number of predictor variables is d = 8 and \(\boldsymbol{\beta }_{0}\) is set at \(\boldsymbol{\beta }_{0} = (3,1.5,0,0,2,0,0,0)^{{\prime}}\). Thus p 0 = 3. To study the effect of tail thickness, contamination, and leverage, we considered three different scenarios:

- Scenario 1: :

-

The vector of predictor variables \(\boldsymbol{x}\) is generated as \(\boldsymbol{x} \sim N_{8}(\mathbf{0},V )\), where V = (v ij ) and \(v_{ij} = 0.5^{\vert i-j\vert }\). The error distributions are t and contaminated normal. That is, the errors are generated as e ∼ t df for several degrees of freedom (df) and \(e \sim (1-\epsilon )N(0,1) +\epsilon N(0,3^{2})\) for several levels of contamination ε. These distributions allow us to investigate the effect of tail thickness and the rate of contamination on the proposed method.

- Scenario 2: :

-

The vector of predictors \(\boldsymbol{x}\) is generated as \(\boldsymbol{x} \sim (1-\epsilon )N_{8}(\mathbf{0},V ) +\epsilon N_{8}(\mathbf{1}\mu,V )\), with μ = 5 and the errors are generated as e ∼ N(0, 1). This enables us to study the effect of contamination (such us gross outliers and leverage points) in the design space.

- Scenario 3: :

-

This scenario considers a partial model misspecification similar to the one in Arslan (2012). In this case, we take \(\boldsymbol{\beta }_{0} = (3,1.5,0,0,2,0,0,0)^{{\prime}}\) and \(\boldsymbol{\beta }_{0}^{{\ast}} = (3,\ldots,3)^{{\prime}}\). Then \(\boldsymbol{x}\) and y are generated as follows: for \(i = 1,\ldots,n -\left [n\epsilon \right ]\), \(\boldsymbol{x}_{i} \sim N_{8}(\mathbf{0},V )\) and \(y_{i} =\boldsymbol{ x}_{i}^{{\prime}}\boldsymbol{\beta }_{0} + N(0,1)\), for \(i = n -\left [n\epsilon \right ] + 1,\ldots,n\), \(\boldsymbol{x}_{i} \sim N_{8}(\mathbf{1}\mu,V )\), μ = 5, and \(y_{i} =\boldsymbol{ x}_{i}^{{\prime}}\boldsymbol{\beta }_{0}^{c} + N(0,1)\). Varying ε in [0, 1) allows us to study the effect of various levels of model contamination.

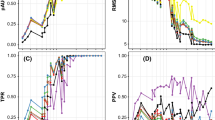

In all cases, we considered the adaptive lasso penalty where the tuning parameter is computed using the BIC criterion. The estimators studied were least squares (LS-AL), least absolute deviations (LAD-AL), signed-rank (SR-AL), weighted LAD (WLAD-AL), and weighted SR (WSR-AL). The weights were computed as discussed above using minimum covariance determinant (MCD) of Rousseeuw (1984). We performed 1000 replications and calculated the average number of correct zeros (true negatives), the average number of incorrect zeros (false negatives), the percentage of correct models identified, and relative efficiencies versus LS-AL of the proposed estimators for estimating β 1 based on estimated MSEs. The results of Scenario 1 are given in Figs. 2.1 and 2.2 while the results of Scenarios 2 and 3 are given in Figs. 2.3 and 2.4, respectively.

Average number of correct and incorrect zeroes, Relative model error, Percentage of correct fit, relative efficiencies (RE) against t distribution df (Scenario 1). The symbols in the plots are LS-AL (open triangle), LAD-AL (open square), SR-AL (open circle), WLAD-AL (filled square) and WSR-AL (filled circle)

Average number of correct and incorrect zeroes, Relative model error, Percentage of correct fit, relative efficiencies (RE) against contamination proportion (ε) of the contaminated normal distribution (Scenario 1). The symbols in the plots are LS-AL (open triangle), LAD-AL (open square), SR-AL (open circle), WLAD-AL (filled square) and WSR-AL (filled circle)

Average number of correct and incorrect zeroes, Relative model error, Percentage of correct fit, relative efficiencies (RE) against contamination proportion (ε) of the distribution of the predictor \(\boldsymbol{x}\) (Scenario 2). The symbols in the plots are LS-AL (open triangle), LAD-AL (open square), SR-AL (open circle), WLAD-AL (filled square) and WSR-AL (filled circle)

Average number of correct and incorrect zeroes, Relative model error, Percentage of correct fit, relative efficiencies (RE) against contamination proportion (ε) of the model contamination (Scenario 3). The symbols in the plots are LS-AL (open triangle), LAD-AL (open square), SR-AL (open circle), WLAD-AL (filled square) and WSR-AL (filled circle)

Figure 2.1 shows that LAD-AL and SR-AL (unweighted) estimators are not very good at identifying zeroes (left panels) compared to WLAD-AL and WSR-AL. They are, slightly more efficient than their weighted counterpart in estimating nonzero coefficients. Their relative efficiencies versus LS-AL stabilize towards the theoretical relative efficiencies of 0.955 and 0.63 as the tails of the t distribution approach the tails of the standard normal distribution.

Figure 2.2 shows that with the exception of LS-AL, the performance in detecting true zeroes of all other estimators deteriorates as the proportion of contamination increases (left panels). On the other hand, the false negatives of LS-AL increase with increasing contamination (top right panel). Taken together, these indicate that LS-AL increasingly over-penalizes when the proportion of outliers in the data increases. and SR-AL (unweighted) estimators are not very good at identifying zeros (left panels) compared to WLAD-AL and WSR-AL. Once again the unweighted LAD and SR are slightly more efficient in estimating nonzero coefficients than their weighted counterparts while the relative efficiencies of both weighted and unweighted estimators increases with increasing proportion of error contamination.

Figure 2.3 shows that, even when the model is correctly specified, high leverage points have a detrimental effect on model selection. While the number of true positives decrease, the weighted cases appear to provide some resistance for low percentage of high-leverage points. With respect to the estimation of nonzero coefficients, the false negative rates of LS-AL increase sharply compare to all other estimators (top right panel). Once again, LS-AL is increasingly over-penalizing the model with increasing proportion of high-leverage points. It is not surprising that LS-AL is also inefficient in the estimation of nonzero coefficients, especially compared to WLAD-AL and WSR-AL, especially for moderate proportion (4–8 %) of high-leverage points.

Our observations remain similar to the above for model misspecification (Scenario 3). In this case, the performance of all the estimators deteriorates quite rapidly with increasing contamination. LS-AL is once again the worst offender and WLAD-AL and WSR-AL provide the highest relative efficiency. The unweighted forms are much less efficient in comparison.

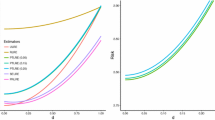

2.4.2 High-Dimensional Simulation

Again as in Tibshirani (1996), consider the linear model (2.1), where \(\boldsymbol{x}\) is a 100 × 40 matrix with entries \(x_{ij} = z_{ij} + z_{i}\) such that z ij and z i are independent and generated from standard normal distributions. This setting makes the x ij ’s to be pairwise correlated with correlation coefficient of about 0.5. The random error in Eq. (2.1) is generated from two different distributions: the contaminated normal distribution with different rates of contamination and the t distribution with different degrees of freedom. The regression coefficient vector is set at \(\boldsymbol{\beta }= (0,\ldots,0,2,\ldots,2,0,\ldots,0,2,\ldots,2)\), where there are ten repeats in each block. From 1000 replications, average numbers of correct zeroes, average number of incorrect zeroes and percentage of correct fit are reported. The simulation results are displayed in Fig. 2.5, where for clarity of presentation we only report results of LS-AL, SR-AL, and WSR-AL fits.

Average number of correct and incorrect zeroes and percentage of correct fit for the high-dimensional simulation. The symbols in the plots are LS-AL (open triangle), SR-AL (times) and WSR-AL (plus). First row represents t distributed errors, second row represents contaminated normal, third row represents high-leverage points, and the last row represents model misspecification

Our observations are quite similar to the low-dimensional case. LS-AL over-penalizes with increasing proportion of high leverage points, even when the model is correctly specified. SR-AL and WSR-AL provide superior performance in high leverage situations (rows three and four of Fig. 2.5). WSR-AL is clearly the best among the three for heavier tailed errors (top row). The percentage of correctly estimated models deteriorates with increasing error contamination (second row) for all the methods.

2.4.3 Boston Housing Data

The data considered here is the Boston Housing dataset which contains median values of housing in 506 census tracts and 13 predictors comprised of characteristics of the census tract. The full description of the data can be found in Leng (2010) and the dataset is available in the R library MASS. So, for sake of brevity, the description will not be included here. We first fit unpenalized regression models using the LS and SR procedures. The results are given in Table 2.1. We then fit penalized regression models using LS-AL, SR-AL, and WSR-AL. These results are displayed in Table 2.2.

The results in Table 2.1 indicate that both LS and SR find the variables INDUS and AGE insignificant while ZN is marginally significant. However, the LS and SR estimated coefficients are quite different in some cases outside of two standard errors of each other. Also, the residual plot given in Fig. 2.6 indicates the presence of heavy tails casting doubt on the LS results. In fact, observing the plot of studentized residuals of LS and SR in Fig. 2.6 plotted on the same scale, it is clear that the SR fit identifies many more outlying observations than the LS estimator. The results of penalized regressions given in Table 2.2 show that LS-AL eliminates the two insignificant variables (INDUS, AGE) from the model while SR-AL and WSR-AL eliminate a third variable (ZN) from the model. Thus, our observations are in line with those of Leng (2010).

The obvious question is if this reduction in model is associated with loss in prediction accuracy. To evaluate this, we performed cross validation where we randomly split the data into a training set containing approximately 90% of the data and a testing set containing the remaining 10 %. We fit the models using the training sets and calculated the absolute error for the test sets \(\vert y -\hat{\alpha }-\boldsymbol{x}^{{\prime}}\hat{\boldsymbol{\beta }}\vert \), where \(\hat{\alpha }\) is estimated using the mean (for LS) and median (for LAD and SR) of the training set residuals \(y -\boldsymbol{ x}^{{\prime}}\hat{\boldsymbol{\beta }}\). Table 2.3 gives the mean absolute error and the median model size over 100 iterations. The estimators considered all use the adaptive lasso penalty. Weights were computed using three different versions of the Mahalanobis distance: classic (Mah), minimum volume ellipsoid (MVE) of Rousseeuw (1984), and minimum covariance determinant (MCD) of Rousseeuw (1984).

It is evident from Table 2.3 that while the model performances remain relatively similar, the median model sizes of the MCD and MVE weighted adaptive lasso estimation required far fewer variables. For comparable model sizes, SR-AL estimator provides lower absolute error than LS-AL, LAD-AL, WLAD-AL (Mah), and WSR-AL (Mah). Also a comparison of WLAD-AL (Arslan 2012) and WSR-AL shows that on average WSR-AL achieves a lower mean absolute error using a slightly smaller model.

2.5 Discussion

This paper considered variable selection for linear models using penalized weighted signed-rank objective functions. It is demonstrated that the method provides selection and estimation consistency in the presence of outliers and high-leverage points. Our simulation study considered both low and high-dimensional data. In both cases, it was shown that compared to penalized least squares, penalized rank-based estimators provided more accurate true negative and false negatives identification while providing higher efficiency in estimating true positives when the error distribution is heavy tailed or contaminated. The weighted versions of the rank-based estimators provided protection against high leverage points, even when the model is incorrectly specified for the high-leverage points as long as the proportion of high-leverage points is moderate.

While the results are encouraging, an interesting extension involves regression when the data are ultra-high dimensional; that is, the dimension of the predictor also goes to infinity. This is currently under consideration by the authors. Another interesting extension involves generalized linear and single index models or even functional data analysis. Variable selection remains a valid exercise in these cases, where the last case is usually dealt with using group-selection methods.

References

Abebe, A., McKean, J. W., & Bindele, H. F. (2012). On the consistency of a class of nonlinear regression estimators. Pakistan Journal of Statistics and Operation Research, 8(3), 543–555.

Arslan, O. (2012). Weighted LAD-LASSO method for robust parameter estimation and variable selection in regression. Computational Statistics & Data Analysis, 56(6), 1952–1965.

Bindele, H. F., & Abebe, A. (2012). Bounded influence nonlinear signed-rank regression. Canadian Journal of Statistics, 40(1), 172–189.

Fan, J., & Li, R. (2001). Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association, 96(456), 1348–1360.

Hettmansperger, T. P., & McKean, J. W. (2011). Robust nonparametric statistical methods. In Monographs on statistics and applied probability (Vol. 119, 2nd ed.). Boca Raton, FL: CRC Press.

Hössjer, O. (1994). Rank-based estimates in the linear model with high breakdown point. Journal of the American Statistical Association, 89(425), 149–158.

Johnson, B. A. (2009). Rank-based estimation in the ℓ 1-regularized partly linear model for censored outcomes with application to integrated analyses of clinical predictors and gene expression data. Biostatistics, 10(4), 659–666.

Johnson, B. A., Lin, D., & Zeng, D. (2008). Penalized estimating functions and variable selection in semiparametric regression models. Journal of the American Statistical Association, 103(482), 672–680.

Johnson, B. A., & Peng, L. (2008). Rank-based variable selection. Journal of Nonparametric Statistics, 20(3), 241–252.

Leng, C. (2010). Variable selection and coefficient estimation via regularized rank regression. Statistica Sinica, 20(1), 167.

Rousseeuw, P. J. (1984). Least median of squares regression. Journal of the American Statistical Association, 79(388), 871–880.

Tibshirani, R. (1996). Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society, Series B, 58, 267–288.

Wang, H., & Leng, C. (2008). A note on adaptive group lasso. Computational Statistics & Data Analysis, 52(12), 5277–5286.

Wang, H., Li, G., & Jiang, G. (2007). Robust regression shrinkage and consistent variable selection through the lad-lasso. Journal of Business & Economic Statistics, 25(3), 347–355.

Wang, L., & Li, R. (2009). Weighted Wilcoxon-type smoothly clipped absolute deviation method. Biometrics, 65(2), 564–571.

Wu, C. F. (1981). Asymptotic theory of nonlinear least squares estimation. Annals of Statistics, 9(3), 501–513.

Xu, J., Leng, C., & Ying, Z. (2010). Rank-based variable selection with censored data. Statistics and Computing, 20(2), 165–176.

Zou, H. (206). The adaptive lasso and its oracle properties. Journal of the American Statistical Association, 101(476), 1418–1429.

Zou, H., & Hastie, T. (2005). Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 67(2), 301–320.

Acknowledgements

We dedicate this work to Joseph W. McKean on the occasion of his 70th birthday. We are thankful for his mentorship and guidance over the years. We also thank the anonymous referee for suggestions that improved the presentation.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendix

Appendix

This Appendix provides some lemmas and the proofs of the main results (Theorems 2.1 and 2.2). In the proofs we have taken W = I to simplify notation. The general case follows by taking \(W^{1/2}\boldsymbol{x}\) in place of \(\boldsymbol{x}\) in the proofs.

2.1.1 Proofs

The following three lemmas, whose proofs follow from slight modifications of those given in Hössjer (1994) and Hettmansperger and McKean (2011), are key to deriving the proof of the main results.

Lemma 2.1.

Under assumptions (I 1 ) and (I 2 ), we have \(\tilde{\boldsymbol{\beta }}_{n} \rightarrow \boldsymbol{\beta }_{0}\;a.s.\)

The proof of this lemma is given in Hössjer (1994) for w ≡ 1 and in Abebe et al. (2012) for any positive w, and a more general regression model. Also, as in Wu (1981), the proof of this lemma is obtained by showing that

where B is an open subset of \(\mathcal{B}\) and \(\boldsymbol{\beta }_{0} \in Int(B)\).

Lemma 2.2.

Putting \(U_{n}(\boldsymbol{\gamma },\boldsymbol{\beta }) = \frac{\|S_{n}(\boldsymbol{\gamma }) - S_{n}(\boldsymbol{\beta }) -\boldsymbol{\xi }(\boldsymbol{\gamma }) + \boldsymbol{\xi }(\boldsymbol{\beta })\|_{1}} {n^{-1/2} +\| \boldsymbol{\xi }(\boldsymbol{\gamma })\|_{1}}\) , we have for small enough δ > 0 that

This lemma ensures that \(n^{-1/2}S_{n}(\boldsymbol{\beta }_{0})\) converges in distribution to a multivariate normal distribution with mean zero and covariance matrix \(\gamma _{\varphi ^{+}}\varSigma\). It also results in the following asymptotic linearity established in Hettmansperger and McKean (2011).

Lemma 2.3.

Under the assumption of the errors having a finite Fisher information, we have for all ε > 0 and C > 0

From this asymptotic linearity follows that for all \(\boldsymbol{\beta }\) such that \(\|\boldsymbol{\beta }-\boldsymbol{\beta }_{0}\|_{1} \leq C/\sqrt{n}\), we have

Proof of Theorem 2.1.

Set \(B =\{\boldsymbol{\beta } _{0} + n^{-1/2}\mathbf{u}:\;\;\| \mathbf{u}\|_{1} < C\}\). Clearly B is an open neighborhood of \(\boldsymbol{\beta }_{0}\) and therefore B c is a closed subset of \(\mathcal{B}\) not containing \(\boldsymbol{\beta }_{0}\). To complete the proof, it is then sufficient to show that

which from Lemma 1 of Wu (1981) will result in the \(\sqrt{n}\)-consistency of \(\hat{\boldsymbol{\beta }}_{n}\). Indeed,

Now by the mean value theorem, assuming without loss of generality the | β 0j | < | β j | , there exits α j ∈ ( | β 0j | , | β j | ) such that

and therefore

This together with Eq. (2.13) imply that

as \(\boldsymbol{\beta }\in B^{c}\) implies that \(\boldsymbol{\beta }\) can be written as \(\boldsymbol{\beta }=\boldsymbol{\beta } _{0} + n^{-1/2}\mathbf{u}\) with \(\|\mathbf{u}\|_{1} \geq C\). Being a closed subset of a compact space, B c is compact, and hence, is closed and bounded. Then, there exists a constant M such that \(C \leq \|\mathbf{u}\|_{1} \leq M\). From the last term of equation (2.14), note that \(\sum _{j=1}^{p_{0} }\vert u_{j}\vert \leq \|\mathbf{u}\|_{1} \leq M\) from which, we have \(-\sqrt{n}a_{n}\sum _{j=1}^{p_{0} }\vert u_{j}\vert \geq -\sqrt{n}a_{n}M\). Thus,

and so,

By assumption (I 3), \(\lim _{n\rightarrow \infty }\Big[\sqrt{n}a_{n}M\Big] = 0\), and by Lemma 2.1, we have

Proof of Theorem 2.2.

From the proof of Theorem 2.1 to obtain the oracle property, it is sufficient to show that for any \(\boldsymbol{\beta }^{{\ast}}\) satisfying \(\|\boldsymbol{\beta }_{a}^{{\ast}}-\boldsymbol{\beta }_{0a}\|_{1} = O_{p}(n^{-1/2})\) and \(\vert \beta _{j}^{{\ast}}\vert < Cn^{-1/2}\) for \(j = p_{0} + 1,\ldots,d\), \(\frac{\partial Q(\boldsymbol{\beta })} {\partial \beta _{j}} \Big\vert _{\boldsymbol{\beta }=\boldsymbol{\beta }^{{\ast}}}\) and β j ∗ have the same sign. Indeed,

where \(S_{n}^{j}(\boldsymbol{\beta }_{0})\) is the j th component of \(S_{n}(\boldsymbol{\beta }_{0})\). Note that by assumption (I 3), \(\sqrt{n}H_{ \lambda _{j}}(\vert \beta _{j}^{{\ast}}\vert ) \geq \sqrt{n}b_{ n} \rightarrow \infty \) as n → ∞, and thus the sign of \(\frac{\partial Q(\boldsymbol{\beta })} {\partial \beta _{j}} \Big\vert _{\boldsymbol{\beta }=\boldsymbol{\beta }^{{\ast}}}\) is fully determined by that of β j ∗ for n large enough. This together with Theorem 2.1 implies that \(\lim _{n\rightarrow \infty }P(\hat{\boldsymbol{\beta }}_{nb} = \mathbf{0}) = 1\).

Moreover, by definition of \(\hat{\boldsymbol{\beta }}_{n}\), it is obtained in a straightforward manner that \(\frac{\partial Q(\boldsymbol{\beta })} {\partial \boldsymbol{\beta }_{a}} \Big\vert _{\boldsymbol{\beta }=(\hat{\boldsymbol{\beta }}_{a},0)} = o_{P}(1)\). From this, partitioning \(S_{n}(\boldsymbol{\beta }_{0})\) as \((S_{n,a}(\boldsymbol{\beta }_{0}),S_{n,b}(\boldsymbol{\beta }_{0}))\), it follows from Eq. (2.12) that

and \(\vert \sqrt{n}\sum _{j=1}^{p_{0}}H_{\lambda _{ j}}(\vert \hat{\beta }_{na,j}\vert )\mbox{ sgn}(\hat{\beta }_{na,j})\vert \leq p_{0}\sqrt{n}a_{n} \rightarrow 0\) as n → ∞ by assumption (I 3). Hence,

As \(n^{-1/2}S_{n,a}(\boldsymbol{\beta }_{0})\mathop{\longrightarrow}\limits_{}^{\mathcal{D}}N\big(0,\ \gamma _{\varphi ^{+}}\varSigma _{a}\big)\), we have

Rights and permissions

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Abebe, A., Bindele, H.F. (2016). Robust Signed-Rank Variable Selection in Linear Regression. In: Liu, R., McKean, J. (eds) Robust Rank-Based and Nonparametric Methods. Springer Proceedings in Mathematics & Statistics, vol 168. Springer, Cham. https://doi.org/10.1007/978-3-319-39065-9_2

Download citation

DOI: https://doi.org/10.1007/978-3-319-39065-9_2

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-39063-5

Online ISBN: 978-3-319-39065-9

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)