Abstract

One of the most critical environmental issues confronting mankind remains the ominous spectre of climate change, in particular, the pace at which impacts will occur and our capacity to adapt. Sea level rise is one of the key artefacts of climate change that will have profound impacts on global coastal populations. Although extensive research has been undertaken into this issue, there remains considerable scientific debate about the temporal changes in mean sea level and the climatic and physical forcings responsible for them. This research has specifically developed a complex synthetic data set to test a wide range of time series methodologies for their utility to isolate a known non-linear, non-stationary mean sea level signal. This paper provides a concise summary of the detailed analysis undertaken, identifying Singular Spectrum Analysis (SSA) and multi-resolution decomposition using short length wavelets as the most robust, consistent methods for isolating the trend signal across all length data sets tested.



1 Introduction

Sea level rise is one of the key artefacts of climate change that will have profound impacts on global coastal populations [1, 2]. Understanding how and when impacts will occur and change is critical to developing robust strategies to adapt and to minimise risks.

Although the body of mean sea level research is extensive, professional debate around the characteristics of the trend signal and its causes remains intense [3]. In particular, significant scientific debate has centred on the issue of a measurable acceleration in mean sea level [4–9], a feature central to projections based on the current knowledge of climate science [10].

Monthly and annual average ocean water level records used by sea level researchers are a complex composite of numerous dynamic influences of largely oceanographic, atmospheric or gravitational origin, operating on differing temporal and spatial scales and superimposed on a comparatively low amplitude signal of sea level rise driven by climate change (see [3] for more detail). The mean sea level (or trend) signal results directly from a change in the volume of the ocean, attributable principally to the melting of snow and ice reserves held above sea level (directly adding water) and the thermal expansion of the ocean water mass. This low amplitude, non-linear, non-stationary signal is quite distinct from all other known dynamic processes that influence the ocean water surface, which are considered to be stationary; that is, they cause the water surface to respond on differing scales and frequencies, but do not change the volume of the water mass. In reality, improved real-time knowledge of velocity and acceleration rests entirely on improving the temporal resolution of the mean sea level signal.

Over recent decades, the emergence and rapid improvement of data adaptive approaches for isolating trends from non-linear, non-stationary and comparatively noisy environmental data sets, such as Empirical Mode Decomposition (EMD) [11, 12], Singular Spectrum Analysis (SSA) [13–15] and wavelet analysis [16–18], has been theoretically encouraging. The continued development of data adaptive and other spectral techniques [19] has given rise to recent variants such as CEEMD [20, 21] and the Synchrosqueezed Wavelet Transform (SWT) [22, 23].

An innovative way to identify the most efficient method for estimating the trend is to test against a “synthetic” (or custom-built) data set with a known, fixed mean sea level signal [3]. In this study, a broad range of analysis techniques has been applied to the synthetic data set to directly compare their utility for isolating the embedded mean sea level signal from individual time series. Various quantitative metrics and associated qualitative criteria have been used to compare the relative performance of the techniques tested.

2 Method

The approach used to determine the most robust time series method for isolating mean sea level with improved temporal accuracy is relatively straightforward and is based on three key steps, namely:

1. development of synthetic data sets to test;
2. application of a broad range of analytical methods to isolate the mean sea level trend from the synthetic data set; and
3. comparative assessment of the performance of each analytical method using a multi-criteria analysis (MCA) based on some key metrics and a range of additional qualitative criteria relevant to its applicability for broad, general use on conventional ocean water level data worldwide.

2.1 Step 1: Development of Synthetic Data Sets for Testing Purposes

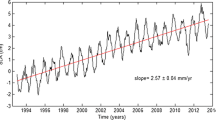

The core synthetic data set developed for this research has been specifically designed to mimic the key physical characteristics embedded within real-world ocean water level data, comprising six key known dynamic components added to a non-linear, non-stationary time series of mean sea level [3]. The fixed mean sea level signal has been generated by applying a broad cubic smoothing spline to a range of points over the 1850–2010 time horizon that reflect the general characteristics of the global mean sea level trend [24], accentuating the key positive and negative “inflexion” points evident in the majority of long ocean water level data sets [25].
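The spline construction used in [3] is not reproduced here. The following is a minimal sketch, assuming hypothetical control points and an arbitrary smoothing factor, of how a broad cubic smoothing spline turns a handful of points into a smooth monthly trend whose velocity and acceleration are known exactly. It uses SciPy's `UnivariateSpline`; the point values are illustrative only.

```python
import numpy as np
from scipy.interpolate import UnivariateSpline

# HYPOTHETICAL control points (decimal year, mean sea level in mm). These are
# illustrative only and are not the points used to build the data set in [3].
control_years = np.array([1850, 1880, 1910, 1940, 1970, 1990, 2010], dtype=float)
control_msl = np.array([-150.0, -140.0, -110.0, -60.0, -20.0, 0.0, 40.0])

# Broad cubic (k=3) smoothing spline; the smoothing factor s is an assumed value.
# Larger s gives a broader, smoother curve.
spline = UnivariateSpline(control_years, control_msl, k=3, s=200.0)

# Evaluate at monthly mid-points over 1850-2010 (160 years x 12 = 1920 values).
t_monthly = 1850 + (np.arange(160 * 12) + 0.5) / 12.0
msl_trend = spline(t_monthly)  # fixed "true" trend, mm

# Because the signal is analytic, its velocity and acceleration are known exactly,
# which is what makes a synthetic signal useful for scoring trend extraction methods.
velocity = spline.derivative(1)(t_monthly)      # mm/yr
acceleration = spline.derivative(2)(t_monthly)  # mm/yr^2
```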

This data set has been designed as a monthly average time series spanning a 160-year period (from 1850 to 2010) to reflect the predominant date range for the longer records in the Permanent Service for Mean Sea Level (PSMSL), which consolidates the world’s ocean water level data holdings.

The synthetic data set contains 20,000 separate time series, each generated by successively adding a randomly sampled signal from each of the six key dynamic components to the fixed mean sea level signal. The choice of 20,000 time series represents a reasonable balance between capturing the widest possible set of complex combinations of real-world signals and the extensive computing time required to analyse the synthetic data set. Further, the 20,000 trend outputs generated by each analysis provide a robust means of statistically identifying the better performing techniques for extracting the trend [3].
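The “one sample from each component bin” construction can be sketched as follows, assuming six hypothetical bins built from simple sinusoids and Gaussian noise. The real bins in [3] are considerably richer (for example, they include ARIMA-derived time-varying seasonal signals), so the component labels, periods and amplitudes below are illustrative assumptions only, as is the quadratic stand-in trend.

```python
import numpy as np

rng = np.random.default_rng(seed=1)
n_months = 160 * 12
t = np.arange(n_months) / 12.0  # time in years from the start of the record

# HYPOTHETICAL stand-in for the fixed mean sea level signal (mm).
trend = 0.5 * t + 0.002 * t**2

def make_bin(n_signals, period_range, amp_range):
    """HYPOTHETICAL component bin: sinusoids with random period, amplitude and phase."""
    periods = rng.uniform(*period_range, size=(n_signals, 1))
    amps = rng.uniform(*amp_range, size=(n_signals, 1))
    phases = rng.uniform(0.0, 2 * np.pi, size=(n_signals, 1))
    return amps * np.sin(2 * np.pi * t / periods + phases)

# Six illustrative bins; periods in years, amplitudes in mm (all assumed values).
bins = [
    make_bin(50, (1.0, 1.0), (50, 150)),     # annual cycle
    make_bin(50, (0.5, 0.5), (10, 40)),      # semi-annual cycle
    make_bin(50, (2.0, 7.0), (20, 80)),      # interannual variability
    make_bin(50, (10.0, 30.0), (10, 40)),    # decadal variability
    make_bin(50, (18.61, 18.61), (5, 20)),   # lunar nodal cycle
    rng.normal(0.0, 30.0, size=(50, n_months)),  # residual noise
]

def synthesise(n_series):
    """Add one randomly sampled signal from each bin to the fixed trend."""
    out = np.tile(trend, (n_series, 1))
    for b in bins:
        idx = rng.integers(0, b.shape[0], size=n_series)
        out += b[idx]
    return out

series = synthesise(200)  # the paper uses 20,000; 200 keeps this sketch light
```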

Additionally, the core 160-year monthly average data set has been subdivided into 2 × 80-year and 4 × 40-year subsets, and each of the seven resulting monthly data sets has also been annualised, creating 14 separate data sets with which to consider the influence of record length and the issues associated with annual versus monthly records.
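The bookkeeping behind the 14 data sets can be sketched as below, assuming the monthly data are held in a NumPy array of shape (number of series, 1920) that starts in January 1850. The function name and the dictionary keys are hypothetical.

```python
import numpy as np

def subdivide_and_annualise(monthly, start_year=1850):
    """Split a 160-year monthly array (..., 1920) into 1 x 160, 2 x 80 and 4 x 40
    year segments and add an annual-average copy of each: 7 monthly + 7 annual = 14."""
    datasets = {}
    n_years = monthly.shape[-1] // 12
    for seg_years in (160, 80, 40):
        for k in range(n_years // seg_years):
            y0 = start_year + k * seg_years
            label = f"{y0}-{y0 + seg_years}"
            seg = monthly[..., k * seg_years * 12:(k + 1) * seg_years * 12]
            datasets[(label, "monthly")] = seg
            # Annual average = mean of the 12 calendar-year monthly values.
            datasets[(label, "annual")] = seg.reshape(*seg.shape[:-1], seg_years, 12).mean(axis=-1)
    return datasets

demo = np.random.default_rng(0).normal(size=(5, 160 * 12))  # 5 stand-in series
sets = subdivide_and_annualise(demo)
```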

2.2 Step 2: Application of Analysis Methods to Extract Trend from Synthetic Data Sets

The time series analysis methods that have been applied to the synthetic data set to estimate the trend are summarised in Table 1. This research has not been designed to consider every time series analysis tool available. Rather, the testing regime aims to appraise the wide range of tools currently used specifically for mean sea level trend detection in individual records, with a view to improving generalised tools for sea level researchers. Some additional, more recently developed data adaptive methods such as CEEMD [21] and SWT [22, 23] have also been included in the analysis to consider their utility for sea level research. It is acknowledged that various methods permit a wide range of parameterisation that can critically affect trend estimation. In these circumstances, broad sensitivity testing has been undertaken to identify the better performing combination and range of parameters for a particular method when applied specifically to ocean water level records (as represented by the synthetic data sets).

For methods such as SSA and SWT, it has been necessary to develop auto detection routines to isolate the specific elements of the decomposed time series whose characteristics resemble low frequency trends. Direct consultation with leading time series analysts and developers of method-specific analysis tools has also helped to optimise the sensitivity testing.

2.3 Step 3: Multi-Criteria Assessment of Analytical Methods for Isolating Mean Sea Level

In addition to identifying the analytic that provides the greatest temporal precision in resolving the trend, the intention is to use this analytic to underpin the development of tools with wide applicability for sea level researchers. The techniques identified in Table 1 have been assessed across a relevant range of quantitative and qualitative criteria, including:

- Measured accuracy (Criterion A1). This criterion is based upon the cumulative sum of the squared differences between the fixed mean sea level signal and the trend derived from a particular analytic for each time series in the synthetic data set. This metric has then been normalised per data point for direct comparison between the different length synthetic data sets (40, 80 and 160 years) as follows:

$$ A_1=\frac{1}{n}\sum_{i=1}^{20\,000}\sum_{j=1}^{n}\left(x_{i,j}-X_j\right)^2 \qquad (1) $$

where $X_j$ represents the fixed mean sea level signal at data point $j$, which is embedded within each time series; $x_{i,j}$ represents the trend at data point $j$ derived from the analysis of time series $i$ of the synthetic data set using a particular analytical approach; and $n$ represents the number of data points within each of the respective synthetic data sets (or the smaller number of outputs in the case of moving averages).

It is imperative to note that particular combinations of key parameters used as part of the sensitivity testing regime for particular methods (refer Table 1) resulted in no (or limited) outputs for various time series analysed. This occurred either because the analytic did not resolve a signal within the limits established for a trend (particularly for the auto detection routines necessary for SSA and SWT) or because internal thresholds/convergence protocols were not met for a particular algorithm and the analysis terminated. In such circumstances, the determined A1 metric was prorated to equate to 20,000 time series for direct comparison across methods. Where the outputs of an analysis resolved a trend signal in fewer than 75 % (or 15,000) of the time series of a particular synthetic data set, the result was not included in the comparative analysis.

- Maximum standard deviation (Criterion A2). This straightforward statistical measure is based on the trends output from the application of a particular analytical method to the synthetic data sets, providing a measure of the spread of the trend estimates. Intuitively, the better performing analytic will minimise both criteria A1 and A2.

- Computational expense (Criterion A3). This criterion provides a comparative assessment of the average processing time to isolate the trend from the longest synthetic data set (160 years). This metric provides an intuitive appraisal of the value of some of the more computationally demanding analytical approaches when weighed against, in particular, the measured accuracy (Criterion A1).

- Consistency across differing length data sets (Criterion A4). This criterion is based on a qualitative assessment of the consistency in the performance of the respective method across the three key length data sets (40, 80 and 160 years), which cover the contemporary length of global data used by sea level researchers. It is important to understand how (if at all) the relative accuracy of trend extraction changes from shorter to longer data sets. A simple tick indicates general consistency in the level of accuracy across all data sets. A cross indicates that the analytic may not have been able to consistently isolate a signal with “trend-like” characteristics across all length data sets within the limits established through the sensitivity testing regime.

- Capacity to improve temporal resolution of trend characteristics (Criterion A5). This criterion is similarly based on a qualitative assessment of the capacity of the isolated trend to inform changes in the associated real-time velocity and acceleration, which are of great contemporary importance to sea level and climate change researchers.

- Resolution of trend over full data record (Criterion A6). This criterion relates to the ability of a particular analytic to resolve the trend over the full length of the data record. It has become increasingly important for sea level researchers to gain a real-time understanding of any temporal changes in the characteristics of the mean sea level (or trend) signal in the latter portion of the record.

- Ease of application by non-expert practitioners (Criterion A7). Several of the analytical approaches considered require extensive expert judgement to optimise performance. Despite the sensitivity analyses undertaken to broadly identify the optimal settings of a specific analytic in relation to the signals within the synthetic data sets, performance can be highly sensitive to key parameters. Where limited (or no) specific knowledge of the analytic is required to optimise its performance, the analytic has been denoted with a tick.
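Criteria A1 and A2 can be sketched as follows. Two conventions here are assumptions not stated in the text: a time series for which no trend was resolved is stored as a row of NaN values, and A2 is read as the largest across-series standard deviation at any single data point.

```python
import numpy as np

def criterion_a1(trends, true_signal, n_total=20_000, min_fraction=0.75):
    """Sketch of Criterion A1 (Eq. 1) with the prorating and 75 % completeness rules.

    trends      : array (n_series, n_points) of trend estimates. ASSUMPTION: a series
                  for which no trend was resolved is stored as a row of NaN values.
    true_signal : array (n_points,) holding the fixed mean sea level signal X.
    Returns None when fewer than min_fraction * n_total series were resolved.
    """
    resolved = ~np.all(np.isnan(trends), axis=1)
    n_resolved = int(resolved.sum())
    if n_resolved < min_fraction * n_total:
        return None  # "incomplete": excluded from the comparative analysis
    n_points = trends.shape[1]
    # Cumulative squared error over all resolved series and data points.
    sq_err = np.nansum((trends[resolved] - true_signal) ** 2)
    # Prorate to the full n_total series, then normalise per data point.
    return sq_err * (n_total / n_resolved) / n_points


def criterion_a2(trends):
    """ASSUMED reading of Criterion A2: the largest across-series standard
    deviation of the trend estimates at any single data point."""
    resolved = ~np.all(np.isnan(trends), axis=1)
    return float(np.nanmax(np.nanstd(trends[resolved], axis=0)))
```

For moving averages, the trimmed outputs would be passed together with the matching slice of the true signal, so that the per-point normalisation counts only the points actually produced.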

3 Results

In total, 1450 separate analyses have been undertaken as part of the testing regime, translating to 29 million (1450 × 20,000) individual time series analyses. Figure 1 provides a pictorial summary of the complete analysis of all monthly and annual data sets (40-, 80- and 160-year synthetic data sets) plotted against the key metric, Criterion A1. Equivalent scales for each panel provide direct visual and quantitative comparison between monthly and annual data sets and between differing length data sets. For completeness, a further 36 monthly analysis results lie beyond the limit of the chosen scale and are therefore not depicted on the chart. Where an analysis resolves a trend signal across at least 75 % (or 15,000 time series) of a synthetic data set, the output is used for comparative purposes and depicted in Fig. 1 as “complete”.

Fig. 1. Analysis overview based on Criterion A1. Notes: This chart provides a summary of all analysis undertaken (refer Table 1). Scales for both axes are equivalent for direct comparison between respective analyses conducted on the monthly (top panel) and annual (bottom panel) synthetic data sets. The vertical dashed lines demarcate the results of each method on the 160-, 80- and 40-year length data sets, moving from left to right across each panel. Where the analysis permitted the resolution of a trend signal across a minimum of 75 % (or 15,000 time series) of a synthetic data set, this has been represented as “complete”. Those analyses resolving trends over less than 75 % of a synthetic data set are represented as “incomplete”.

From Fig. 1, it is evident that the cumulative errors of the estimated trend (Criterion A1) are appreciably lower for the annual data sets when considered across the totality of the analysis undertaken. More specifically, of the 579 “complete” monthly outputs, 408 (or 70 %) fall below an A1 threshold level of 30 × 10⁶ mm² (where the optimum methods reside). By comparison, of the 632 “complete” annual outputs, 566 (or 90 %) are below this threshold level.

The key reason for this is that annualising not only provides a natural low frequency smoothing (through averaging the calendar-year monthly values) but also largely removes the seasonal influence (at monthly frequency), noting that the bin of seasonal signals sampled to create the synthetic data set also contains numerous time-varying seasonal signals derived using ARIMA.
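A quick numerical illustration of why annualising helps, assuming a pure 12-month sinusoid for the seasonal signal: averaging each calendar year cancels a fixed annual cycle exactly, whereas a cycle with a slowly growing amplitude (a crude stand-in for the ARIMA-derived time-varying seasonal signals, not the signals used in [3]) leaves only a small residual.

```python
import numpy as np

months = np.arange(40 * 12)  # 40 years of monthly values starting in January
# Fixed annual cycle (amplitude 100 mm, arbitrary phase): averages to zero each year.
seasonal = 100.0 * np.sin(2 * np.pi * months / 12 + 0.7)
annual_mean = seasonal.reshape(-1, 12).mean(axis=1)

# Annual cycle with a linearly growing amplitude (ASSUMED form of time variation):
# annual averaging leaves only a small residual.
varying = (50.0 + 0.2 * months) * np.sin(2 * np.pi * months / 12 + 0.7)
varying_annual = varying.reshape(-1, 12).mean(axis=1)
```

Here every element of `annual_mean` is zero to rounding error, and `varying_annual` stays below 0.4 mm in magnitude even though the monthly amplitude grows to about 146 mm.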

Based on visual inspection of Fig. 1, it is difficult to distinguish the influence of record length on the capacity to isolate the trend component. However, detailed examination of the “complete” monthly outputs indicates that 77 % of those for the 160-year data set lie below the A1 threshold level of 30 × 10⁶ mm², falling to 62 % for the 40-year data sets. Similarly, for the “complete” annual outputs, 98 % of those for the 160-year data set lie below this threshold, falling to 85 % for the 40-year data sets. These results provide strong evidence that estimates of mean sea level are generally improved by using longer, annual average ocean water level data.

Based upon the appreciably reduced error in the estimate of the trend when using annual rather than monthly average ocean water level data, the multi-criteria assessment of the various methodologies listed in Table 1 has been limited to analysis outputs based solely on the annual synthetic data sets. Table 2 provides a summary of the multi-criteria assessment of the better performing methods, based on optimisation of relevant parameters for each specific analytic. From this assessment, multi-resolution decomposition using the maximal overlap discrete wavelet transform (MODWT) and short length wavelets has proven the optimal analytic over the broad range of criteria outlined in Sect. 2.3, requiring limited expert judgment to optimise performance.
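A MODWT multiresolution analysis can be sketched with the Haar filter, the shortest wavelet filter available. The reflection boundary handling and the number of levels are assumptions for illustration, and this is not the implementation used in the testing regime. The smooth $S_J$ acts as the trend estimate, and the decomposition is exactly additive.

```python
import numpy as np

def haar_modwt_mra(x, levels):
    """Additive multiresolution decomposition x = S_J + D_1 + ... + D_J using the
    Haar MODWT with reflection boundary handling (ASSUMED choices).
    Returns (smooth, details) where details[j - 1] is D_j."""
    x = np.asarray(x, dtype=float)
    n = x.size
    xx = np.concatenate([x, x[::-1]])  # reflect to reduce wrap-around end effects

    # Forward pyramid with lag k = 2**(j-1):
    # V_j[t] = (V_{j-1}[t] + V_{j-1}[t-k]) / 2,  W_j[t] = (V_{j-1}[t] - V_{j-1}[t-k]) / 2
    v, w_list = xx, []
    for j in range(1, levels + 1):
        k = 2 ** (j - 1)
        shifted = np.roll(v, k)  # shifted[t] = v[t - k] (circular)
        w_list.append((v - shifted) / 2)
        v = (v + shifted) / 2

    def inverse(v_j, w_j, j):
        # V_{j-1}[t] = (W_j[t] - W_j[t+k] + V_j[t] + V_j[t+k]) / 2
        k = 2 ** (j - 1)
        return (w_j - np.roll(w_j, -k) + v_j + np.roll(v_j, -k)) / 2

    def reconstruct(v_top, w_top, j_top):
        """Invert from level j_top down to level 0 with all other coefficients zero."""
        v_cur = inverse(v_top, w_top, j_top)
        for j in range(j_top - 1, 0, -1):
            v_cur = inverse(v_cur, np.zeros_like(v_cur), j)
        return v_cur

    zeros = np.zeros_like(xx)
    smooth = reconstruct(v, zeros, levels)[:n]  # trend estimate S_J
    details = [reconstruct(zeros, w_list[j - 1], j)[:n] for j in range(1, levels + 1)]
    return smooth, details
```

The additivity check (smooth plus all details returns the original series) is a convenient sanity test; longer Daubechies-type filters would follow the same pyramid with more filter taps.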

In addition to the results discussed above, some other interesting observations can be gleaned from the weight of analysis undertaken as part of this work. Of all methods considered in Table 1, the comparatively simple structural models applied to the monthly data sets provided the least utility in extracting the mean sea level trend component. This is not unexpected, given that these general models force the range of complex signals within the synthetic data set to be resolved into trend, seasonal and noise components only.

Similarly, methods such as EMD, with inherent limitations associated with mode mixing and splitting, aliasing and end effects [41], performed comparatively poorly across the range of synthetic data sets and across the range of parameters varied to optimise performance. The EEMD variant [12], which effectively combines EMD with noise stabilisation to offset the propensity for mode mixing and aliasing [19], exhibited substantially enhanced performance compared to EMD. Across all 14 monthly and annual average synthetic data sets, EEMD exhibited more stable and consistent results across all sensitivity tests, with the best performing EEMD combination on average reducing the squared error by 15 % compared to the best performing EMD combination.

A further advancement, CEEMD [21], was developed to overcome a nuance of EEMD whereby the sum of the intrinsic mode functions determined by the algorithm does not necessarily reconstruct the original signal [19]. When similarly averaged across all synthetic data sets, the best performing combination of CEEMD parameterisation reduced the squared error by less than 5 % compared to the best performing EMD combination. Further, the CEEMD algorithm was not able to resolve a trend for every time series, because internal thresholds/convergence protocols were not always met.

Based on the testing regime performed on the synthetic data sets, EEMD outperformed CEEMD. Under the sensitivity analysis described, both ensemble variants of EMD proved the most computationally expensive of all the algorithms tested. Both were substantially outperformed by MODWT and SSA and, importantly, required processing times of the order of 3000–4000 times those of these better performing analytics.

Clearly, for these particularly complex ocean water level time series, the excessive computational expense of these algorithms has not proven beneficial.

One of the more inconsistent performers proved to be the SWT. This algorithm proved highly sensitive to the combination of wavelet filter and generalisation parameter. Certain combinations of parameters provided exceptional performance on individual synthetic data sets but were less capable of consistently resolving low frequency “trend-like” signals across differing length data sets. Of the analytics tested, this algorithm proved the most complex to optimise in order to isolate and reconstruct trends from the ridge-extracted components. Auto detection routines were specifically developed to test and isolate the low frequency components based on first differences. However, a significant portion of the sensitivity analyses for SWT had difficulty isolating the low frequency signals across the majority of the data sets tested.

SSA has also been demonstrated to be a superior analytical tool for trend extraction across the range of synthetic data sets. However, like SWT, SSA requires an elevated level of expertise to select appropriate parameters and internal methods to optimise performance. Auto detection routines were also developed to isolate the key SSA eigentriple groupings with low frequency “trend-like” characteristics, based on first differences. With this approach, not all time series could be resolved to isolate a trend within the limits established. Auto detection routines based on frequency contribution [42] were also provided for testing by Associate Professor Nina Golyandina (St Petersburg State University, Russia) and proved comparable to the first-differences technique.
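A compact sketch of basic SSA (embedding, SVD, diagonal averaging) with an automatic grouping step is shown below. The grouping rule is a hypothetical low-frequency contribution test in the spirit of [42], with the cut-off frequency `omega0` and the power `threshold` chosen arbitrarily; it is neither the first-differences routine developed for this research nor the routine supplied by Golyandina.

```python
import numpy as np

def ssa_trend(x, window, omega0=0.05, threshold=0.75):
    """Basic SSA trend extraction with an automatic grouping step.

    ASSUMED grouping rule (a stand-in, not the routines used in this research):
    keep every elementary reconstructed component whose periodogram power at
    frequencies <= omega0 (cycles per sample) is at least `threshold` of its total.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    k = n - window + 1
    # 1. Embedding: window x k trajectory (Hankel) matrix, column j = x[j:j+window].
    traj = np.column_stack([x[j:j + window] for j in range(k)])
    # 2. Singular value decomposition into eigentriples (s_i, u_i, v_i).
    u, s, vt = np.linalg.svd(traj, full_matrices=False)
    freqs = np.fft.rfftfreq(n)  # 0 ... 0.5 cycles per sample
    trend = np.zeros(n)
    for i in range(s.size):
        elem = s[i] * np.outer(u[:, i], vt[i])
        # 3. Diagonal averaging: average each anti-diagonal (row + col = t);
        #    flipping the rows turns anti-diagonals into ordinary diagonals.
        flipped = elem[::-1]
        comp = np.array([flipped.diagonal(d).mean() for d in range(-(window - 1), k)])
        # 4. Automatic grouping by low-frequency contribution.
        power = np.abs(np.fft.rfft(comp)) ** 2
        total = power.sum()
        if total > 0 and power[freqs <= omega0].sum() / total >= threshold:
            trend += comp
    return trend
```

For a 160-year annual record, the L/4 to L/2 guidance discussed in Sect. 4 corresponds to windows of roughly 40–80 years, whereas the sensitivity testing described here used fixed windows.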

4 Discussion

With so much reliance on improving the temporal resolution of the mean sea level signal, given its role as a key climate change indicator, it is imperative to maximise the information that can be extracted from the extensive global data holdings of the PSMSL. Numerous techniques have been applied to these data sets to extract trends and infer accelerations based on local, basin or global scale studies. Ocean water level data sets, like any environmental time series, are complex amalgams of physical processes and influences operating on different spatial scales and frequencies. Further, these data sets will invariably also contain influences and signals that might not yet be well understood (if at all).

With so many competing and sometimes controversial findings in the scientific literature concerning trends and, more particularly, accelerations in mean sea level (refer Sect. 1), it is difficult to definitively separate sound conclusions from those that might unwittingly be influenced by the analytical methodology applied (and to what extent). This research has been specifically designed as a necessary starting point to alleviate some of this uncertainty and improve knowledge of the better performing trend extraction methods for individual long ocean water level records. Identification of the better performing methods enables the temporal resolution of mean sea level to be improved, enhancing the knowledge that can be gleaned from long records, including the associated real-time velocities and accelerations. In turn, key physically driven changes can be identified with improved precision and confidence, which is critical not only to sea level research, but also to climate change research more generally at increasingly finer (or localised) scales.

The importance of resolving trends from complex environmental and climatic records has led to the application of increasingly sophisticated, so-called data adaptive spectral and empirical techniques [12, 19, 43, 44] over comparatively recent times. In this regard, it is readily acknowledged that whilst the testing undertaken within this research has indeed been extensive, not every time series method for trend extraction has been examined. The methods tested are principally those that have been applied to individual ocean water level data sets within the literature to estimate the trend of mean sea level.

Therefore, spatial trend coherence and multiple time series decomposition techniques such as PCA/EOF, SVD, MC-SSA, M-SSA and XWT, some of which are used in various regional and global scale sea level studies [45–51], are beyond the scope of this work and have not been considered. In any case, the synthetic data sets developed for this work have not been configured with the spatially dependent patterns needed to facilitate rigorous testing of these methods. In developing the synthetic data sets for this research, Watson [3] noted specifically that a natural extension (or refinement) of the work might be to fine tune the core synthetic data set to reflect the more regionally specific signatures of combined dynamic components.

Other key factors for consideration include identifying the method(s) that prove robust over the differing length time series available whilst resolving trends efficiently, with little pre-conditioning or site specificity. Whilst various studies investigating mean sea level trends at long gauge sites have constructed comparatively detailed, site-specific generalised additive models, these models have little direct applicability or transferability to other sites and have not been considered further for this work.

Of the analysis methods considered, the comparatively simple 30-year moving (or rolling) average filter proved the optimal performer against the key A1 criterion when averaged across all length data sets. Although it does not isolate and remove high amplitude signals or contaminating noise, the sheer width of the averaging window proves very efficient in dampening their influence for ocean water level time series. However, the resulting mean sea level trend finishes 15 years inside either end of each data set, providing no temporal understanding of the signal for the most important part of the record: the recent history, which is keenly desired to better inform the trajectory of the climate-related signal. Although the method performs well on a range of criteria, this is a critical shortcoming of the approach. Whilst triple and quadruple moving averages were shown to marginally lower the A1 criterion compared to the equivalent single moving average, the loss of data from the ends of the record was further amplified by these methods.
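The end-loss arithmetic can be checked with a short sketch, assuming a boxcar filter applied in NumPy's `"valid"` mode and, for the repeated filters, the same 30-point window on every pass; the window lengths actually used for the triple and quadruple averages are not stated, so that choice is an assumption.

```python
import numpy as np

def moving_average(x, window):
    """Boxcar moving average; 'valid' mode drops window - 1 points in total."""
    return np.convolve(x, np.ones(window) / window, mode="valid")

def repeated_moving_average(x, window, passes):
    """Apply the same moving average `passes` times (ASSUMED: equal window each pass)."""
    for _ in range(passes):
        x = moving_average(x, window)
    return x

years = np.arange(1850, 2010)  # 160 annual values
x = np.random.default_rng(0).normal(size=years.size)
for passes in (1, 3, 4):
    out = repeated_moving_average(x, 30, passes)
    lost_each_end = (years.size - out.size) / 2
    print(passes, out.size, lost_each_end)
# prints:
# 1 131 14.5
# 3 73 43.5
# 4 44 58.0
```

With an even 30-point window the centred outputs fall on half-year positions, so 14.5 values are lost at each end, matching the roughly 15 years quoted above; each additional pass removes a further 14.5 values from each end.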

It is also noted that simple linear regression performed exceptionally well against the A1 criterion when averaged across all data sets. Given the comparatively limited amplitude and curvature of the mean sea level trend signal embedded within the synthetic data set, it is perhaps not surprising that linear regression performs well. But, like the moving average approach, its simplicity brings with it a profound shortcoming: it provides limited temporal information on the trend other than its general direction (increasing or decreasing). No information on how (or when) this signal might be accelerating is possible from this technique, which, regrettably, is a facet of critical focus for contemporary sea level research.
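The contrast can be illustrated with a hypothetical accelerating (quadratic) trend: linear regression returns a single rate equal to the record-average velocity, whereas differentiating a smooth trend estimate recovers the velocity and acceleration at each time step. In this sketch the known trend itself stands in for an SSA or MODWT output.

```python
import numpy as np

t = np.arange(1850, 2010, dtype=float)  # annual time axis
# HYPOTHETICAL accelerating trend (mm): 0.5 mm/yr initial rate, 0.008 mm/yr^2 acceleration.
trend = 0.5 * (t - 1850) + 0.004 * (t - 1850) ** 2

# Linear regression: one rate for the whole record; acceleration is implicitly zero.
slope, intercept = np.polyfit(t, trend, 1)

# A smooth time-varying trend estimate yields velocity and acceleration at every step
# (central differences in the interior, one-sided differences at the two ends).
velocity = np.gradient(trend, t)         # mm/yr
acceleration = np.gradient(velocity, t)  # mm/yr^2
```

For this trend the regression slope is 1.136 mm/yr, the mean of a velocity that actually rises from 0.5 to about 1.77 mm/yr over the record.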

It has been noted that, unfortunately, many studies using wavelet analysis have suffered from an apparent lack of quantitative results. The wavelet transform has been regarded by many as an interesting diversion that produces colourful pictures, yet purely qualitative results [52]. The initial use of this particular multi-resolution decomposition technique (MODWT) for application to a long ocean water level record can be found in the work of Percival and Mofjeld [53]. There is no question from this current research that wavelet analysis has proven a “star performer”, producing measured quantitative accuracy exceeding that of other methods, with comparable consistency across all length synthetic data sets and with minimal computational expense.

Importantly, it is worth noting that the sensitivity testing and MCA used to differentiate the utility of the various methods unduly disadvantage the SSA method. In reality, the SSA method performs optimally with a window length of between L/4 and L/2 (where L is the length of the time series). Varying the window length permits the necessary optimisation of separability between the trend, oscillatory and noise components [54]. However, in the sensitivity analysis of SSA, only fixed window lengths were compared across all data sets. Although SSA (with a fixed 30-year window) performed comparably with MODWT for the key A1 criterion (refer Table 2), a method that optimises the window length parameter automatically would, in all likelihood, have further improved this result. Only a modest improvement of less than 4 % would be required to put SSA on parity with the accuracy of MODWT. In addition, auto detection routines designed to select “trend-like” SSA components are unlikely to perform as well as the interactive visual inspection (VI) techniques commonly employed by experienced practitioners decomposing individual time series [43]. Clearly, VI techniques were not an option for the testing regime described herein, which involved processing 14 separate data sets each containing 20,000 time series.

It is important that both the intent and the limitations of the research work presented here are clearly understood. The process of creating a detailed synthetic ocean water level data set, embedded with a fixed non-linear, non-stationary mean sea level signal, to test the utility of trend extraction methods is unique for sea level research. Despite the broad sensitivity testing undertaken herein, this work should be viewed as a starting point rather than a fait accompli in providing a transparent appraisal of the utility of currently used techniques for isolating the mean sea level trend from individual ocean water level time series. The author warmly welcomes the opportunity to work further with analysts on refining parameters of tested methods and alternative methods of trend extraction to optimise performance of these tools for sea level research.

5 Conclusion

The monthly and annual average ocean water level data sets used to estimate mean sea level are, like any environmental or climatic time series data, ubiquitously “contaminated” by numerous complex dynamic processes operating across differing spatial and frequency scales, often with a very high noise to signal ratio. Whilst the primary physical processes and their scale of influence are generally known [3], not all processes in nature are fully understood, and the quantitative attribution of these associated influences will always have a degree of imprecision, despite improvements in the sophistication of time series analysis methods [44]. In an ideal world, with all contributory factors implicitly known and accommodated, the extraction of a trend signal would be straightforward.

In recent years, the controversy surrounding the conclusions of various published works, particularly concerning measured accelerations from long, individual ocean water level records, has necessitated a more transparent, qualitative discussion around the utility of various analytical methods to isolate the mean sea level signal with improved accuracy. The synthetic data set developed by Watson [3] was specifically designed for long individual records, providing a robust and unique framework within which to test a range of time series methods to augment sea level research.

The testing and analysis regime summarised in this paper is extensive, involving 1450 separate analyses across monthly and annual data sets of length 40, 80 and 160 years. In total, 29 million individual time series were analysed. From this work, there are some broad general conclusions to be drawn concerning the extraction of the mean sea level signal from individual ocean water level records with improved temporal accuracy:

- Precision is enhanced by the use of longer, annual average data sets;
- The analytic producing the optimal measured accuracy (Criterion A1) across all length annual data sets was the simple 30-year moving average filter. However, the outputted trend finishes half the width of the averaging filter inside either end of the data record, providing no temporal understanding of the trend signal for the most important part of the record: the recent history;
- The best general purpose analytic, requiring minimum expert judgment and parameterisation to optimise performance, was multi-resolution decomposition using MODWT; and
- The optimum performing analytic is most likely to be SSA where interactive visual inspection (VI) techniques are used by experienced practitioners to optimise window length and component separability.

This work provides a very strong argument for the utility of SSA and multi-resolution decomposition using MODWT to isolate mean sea level with improved temporal resolution from long individual ocean water level records, using a unique, robust and measurable approach. Nevertheless, there remains scope to improve several of the data adaptive approaches through more extensive tuning of alternative parameters, optimising their performance for mean sea level research.
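The core of basic SSA (embedding, singular value decomposition, grouping and diagonal averaging) can be sketched in a few lines of Python with NumPy. This is a minimal illustration under our own assumptions, not the study's configuration: the window length and the choice of which components form the trend are left to the caller, which is precisely the step the conclusions assign to experienced practitioners using visual inspection. Dedicated implementations such as the R package Rssa provide far more complete tooling.

```python
import numpy as np


def ssa_reconstruct(x, window, components):
    """Basic SSA: reconstruct the series from the selected eigentriples.

    x          : 1-D series
    window     : embedding (window) length L, with 1 < L < len(x)
    components : indices of the eigentriples to group (e.g. [0] for a trend)
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    if not 1 < window < n:
        raise ValueError("window must satisfy 1 < window < len(x)")
    k = n - window + 1

    # 1. Embedding: L x K trajectory (Hankel) matrix, column j = x[j : j + L]
    traj = np.column_stack([x[j:j + window] for j in range(k)])

    # 2. Singular value decomposition of the trajectory matrix
    u, s, vt = np.linalg.svd(traj, full_matrices=False)

    # 3. Grouping: sum the elementary matrices of the chosen eigentriples
    approx = np.zeros_like(traj)
    for i in components:
        approx += s[i] * np.outer(u[:, i], vt[i])

    # 4. Diagonal averaging: entry (r, j) maps back to time index r + j
    recon = np.zeros(n)
    counts = np.zeros(n)
    for j in range(k):
        recon[j:j + window] += approx[:, j]
        counts[j:j + window] += 1
    return recon / counts
```

For a series `y`, `ssa_reconstruct(y, window=40, components=[0])` returns the reconstruction from the leading eigentriple only; whether that alone represents the trend, or further components must be grouped with it, depends on inspecting the singular values and eigenvectors, which is where separability and window length matter.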

References

[1] McGranahan, G., Balk, D., Anderson, B.: The rising tide: assessing the risks of climate change and human settlements in low elevation coastal zones. Environ. Urban. 19(1), 17–37 (2007)

[2] Nicholls, R.J., Cazenave, A.: Sea-level rise and its impact on coastal zones. Science 328(5985), 1517–1520 (2010)

[3] Watson, P.J.: Development of a unique synthetic data set to improve sea level research and understanding. J. Coast. Res. 31(3), 758–770 (2015)

[4] Baart, F., van Koningsveld, M., Stive, M.: Trends in sea-level trend analysis. J. Coast. Res. 28(2), 311–315 (2012)

[5] Donoghue, J.F., Parkinson, R.W.: Discussion of: Houston, J.R. and Dean, R.G., 2011. Sea-level acceleration based on U.S. tide gauges and extensions of previous global-gauge analyses. J. Coast. Res. 27(3), 409–417; J. Coast. Res., 994–996 (2011)

[6] Houston, J.R., Dean, R.G.: Sea-level acceleration based on U.S. tide gauges and extensions of previous global-gauge analyses. J. Coast. Res. 27(3), 409–417 (2011)

[7] Houston, J.R., Dean, R.G.: Reply to: Rahmstorf, S. and Vermeer, M., 2011. Discussion of: Houston, J.R. and Dean, R.G., 2011. Sea-level acceleration based on U.S. tide gauges and extensions of previous global-gauge analyses. J. Coast. Res. 27(3), 409–417; J. Coast. Res., 788–790 (2011)

[8] Rahmstorf, S., Vermeer, M.: Discussion of: Houston, J.R. and Dean, R.G., 2011. Sea-level acceleration based on U.S. tide gauges and extensions of previous global-gauge analyses. J. Coast. Res. 27(3), 409–417; J. Coast. Res., 784–787 (2011)

[9] Watson, P.J.: Is there evidence yet of acceleration in mean sea level rise around Mainland Australia. J. Coast. Res. 27(2), 368–377 (2011)

[10] IPCC: Summary for policymakers. In: Stocker, T.F., Qin, D., Plattner, G.-K., Tignor, M., Allen, S.K., Boschung, J., Nauels, A., Xia, Y., Bex, V., Midgley, P.M. (eds.) Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge (2013)

[11] Huang, N.E., Shen, Z., Long, S.R., Wu, M.C., Shih, E.H., Zheng, Q., Tung, C.C., Liu, H.H.: The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proc. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 454(1971), 903–995 (1998)

[12] Wu, Z., Huang, N.E.: Ensemble empirical mode decomposition: a noise-assisted data analysis method. Adv. Adapt. Data Anal. 1(01), 1–41 (2009)

[13] Broomhead, D.S., King, G.P.: Extracting qualitative dynamics from experimental data. Physica D 20(2), 217–236 (1986)

[14] Golyandina, N., Nekrutkin, V., Zhigljavsky, A.A.: Analysis of Time Series Structure: SSA and Related Techniques. Chapman and Hall/CRC, Boca Raton, FL (2001)

[15] Vautard, R., Ghil, M.: Singular spectrum analysis in nonlinear dynamics, with applications to paleoclimatic time series. Physica D 35(3), 395–424 (1989)

[16] Daubechies, I.: Ten Lectures on Wavelets. Society for Industrial and Applied Mathematics (SIAM), Philadelphia (1992)

[17] Grossmann, A., Morlet, J.: Decomposition of Hardy functions into square integrable wavelets of constant shape. SIAM J. Math. Anal. 15(4), 723–736 (1984)

[18] Grossmann, A., Kronland-Martinet, R., Morlet, J.: Reading and understanding continuous wavelet transforms. In: Combes, J.-M., Grossmann, A., Tchamitchian, P. (eds.) Wavelets, pp. 2–20. Springer, Heidelberg (1989)

[19] Tary, J.B., Herrera, R.H., Han, J., van der Baan, M.: Spectral estimation—What is new? What is next? Rev. Geophys. 52, 723–749 (2014)

[20] Han, J., van der Baan, M.: Empirical mode decomposition for seismic time-frequency analysis. Geophysics 78(2), O9–O19 (2013)

[21] Torres, M.E., Colominas, M.A., Schlotthauer, G., Flandrin, P.: A complete ensemble empirical mode decomposition with adaptive noise. In: Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), May 22–27, 2011, Prague Congress Center, Prague, Czech Republic, pp. 4144–4147 (2011)

[22] Daubechies, I., Lu, J., Wu, H.T.: Synchrosqueezed wavelet transforms: an empirical mode decomposition-like tool. Appl. Comput. Harmon. Anal. 30(2), 243–261 (2011)

[23] Thakur, G., Brevdo, E., Fučkar, N.S., Wu, H.T.: The synchrosqueezing algorithm for time-varying spectral analysis: robustness properties and new paleoclimate applications. Signal Process. 93(5), 1079–1094 (2013)

[24] Bindoff, N.L., Willebrand, J., Artale, V., Cazenave, A., Gregory, J., Gulev, S., Hanawa, K., Le Quéré, C., Levitus, S., Nojiri, Y., Shum, C.K., Talley, L.D., Unnikrishnan, A.: Observations: oceanic climate change and sea level. In: Solomon, S., Qin, D., Manning, M., Chen, Z., Marquis, M., Averyt, K.B., Tignor, M., Miller, H.L. (eds.) Climate Change 2007: The Physical Science Basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge (2007)

[25] Woodworth, P.L., White, N.J., Jevrejeva, S., Holgate, S.J., Church, J.A., Gehrels, W.R.: Review—evidence for the accelerations of sea level on multi-decade and century timescales. Int. J. Climatol. 29, 777–789 (2009)

[26] Wood, S.: Generalized Additive Models: An Introduction with R. CRC, Boca Raton, FL (2006)

[27] O’Sullivan, F.: A statistical perspective on ill-posed inverse problems. Stat. Sci. 1(4), 502–527 (1986)

[28] O’Sullivan, F.: Fast computation of fully automated log-density and log-hazard estimators. SIAM J. Sci. Stat. Comput. 9(2), 363–379 (1988)

[29] Eilers, P.H.C., Marx, B.D.: Flexible smoothing with B-splines and penalties. Stat. Sci. 11(2), 89–102 (1996)

[30] Zeileis, A., Grothendieck, G.: Zoo: S3 infrastructure for regular and irregular time series. J. Stat. Softw. 14(6), 1–27 (2005)

[31] Cleveland, R.B., Cleveland, W.S., McRae, J.E., Terpenning, I.: STL: a seasonal-trend decomposition procedure based on loess. J. Off. Stat. 6(1), 3–73 (1990)

[32] Durbin, J., Koopman, S.J.: Time Series Analysis by State Space Methods (No. 38). Oxford University Press, Oxford (2012)

[33] GRETL: Gnu Regression, Econometrics and Time series Library (GRETL). http://www.gretl.sourceforge.net/ (2014)

[34] Golyandina, N., Korobeynikov, A.: Basic singular spectrum analysis and forecasting with R. Comput. Stat. Data Anal. 71, 934–954 (2014)

[35] Kim, D., Oh, H.S.: EMD: a package for empirical mode decomposition and Hilbert spectrum. R J. 1(1), 40–46 (2009)

[36] Kim, D., Kim, K.O., Oh, H.S.: Extending the scope of empirical mode decomposition by smoothing. EURASIP J. Adv. Signal Process. 2012(1), 1–17 (2012)

[37] Bowman, D.C., Lees, J.M.: The Hilbert–Huang transform: a high resolution spectral method for nonlinear and nonstationary time series. Seismol. Res. Lett. 84(6), 1074–1080 (2013)

[38] Percival, D.B., Walden, A.T.: Wavelet Methods for Time Series Analysis (Cambridge Series in Statistical and Probabilistic Mathematics). Cambridge University Press, Cambridge (2000)

[39] Daubechies, I.: Orthonormal bases of compactly supported wavelets. Commun. Pure Appl. Math. 41(7), 909–996 (1988)

[40] R Core Team: R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. ISBN 3-900051-07-0. http://www.R-project.org/ (2014)

[41] Mandic, D.P., Rehman, N.U., Wu, Z., Huang, N.E.: Empirical mode decomposition-based time-frequency analysis of multivariate signals: the power of adaptive data analysis. IEEE Signal Process. Mag. 30(6), 74–86 (2013)

[42] Alexandrov, T., Golyandina, N.: Automatic extraction and forecast of time series cyclic components within the framework of SSA. In: Proceedings of the 5th St. Petersburg Workshop on Simulation, June, St. Petersburg, pp. 45–50 (2005)

[43] Ghil, M., Allen, M.R., Dettinger, M.D., Ide, K., Kondrashov, D., Mann, M.E., Yiou, P.: Advanced spectral methods for climatic time series. Rev. Geophys. 40(1), 3–1 (2002)

[44] Moore, J.C., Grinsted, A., Jevrejeva, S.: New tools for analyzing time series relationships and trends. Eos Trans. AGU 86(24), 226–232 (2005)

[45] Church, J.A., White, N.J., Coleman, R., Lambeck, K., Mitrovica, J.X.: Estimates of the regional distribution of sea level rise over the 1950–2000 period. J. Clim. 17(13), 2609–2625 (2004)

[46] Church, J.A., White, N.J.: A 20th century acceleration in global sea-level rise. Geophys. Res. Lett. 33(1), L01602 (2006)

[47] Church, J.A., White, N.J.: Sea-level rise from the late 19th to the early 21st century. Surv. Geophys. 32(4-5), 585–602 (2011)

[48] Domingues, C.M., Church, J.A., White, N.J., Gleckler, P.J., Wijffels, S.E., Barker, P.M., Dunn, J.R.: Improved estimates of upper-ocean warming and multi-decadal sea-level rise. Nature 453(7198), 1090–1093 (2008)

[49] Hendricks, J.R., Leben, R.R., Born, G.H., Koblinsky, C.J.: Empirical orthogonal function analysis of global TOPEX/POSEIDON altimeter data and implications for detection of global sea level rise. J. Geophys. Res. Oceans 101(C6), 14131–14145 (1996)

[50] Jevrejeva, S., Moore, J.C., Grinsted, A., Woodworth, P.L.: Recent global sea level acceleration started over 200 years ago? Geophys. Res. Lett. 35(8), L08715 (2008)

[51] Meyssignac, B., Becker, M., Llovel, W., Cazenave, A.: An assessment of two-dimensional past sea level reconstructions over 1950–2009 based on tide-gauge data and different input sea level grids. Surv. Geophys. 33(5), 945–972 (2012)

[52] Torrence, C., Compo, G.P.: A practical guide to wavelet analysis. Bull. Am. Meteorol. Soc. 79(1), 61–78 (1998)

[53] Percival, D.B., Mofjeld, H.O.: Analysis of subtidal coastal sea level fluctuations using wavelets. J. Am. Stat. Assoc. 92(439), 868–880 (1997)

[54] Hassani, H., Mahmoudvand, R., Zokaei, M.: Separability and window length in singular spectrum analysis. Comptes Rendus Mathematique 349(17), 987–990 (2011)

Acknowledgements

The computing resources required for this research were considerable. The testing program would not have been possible without access to high performance cluster computing systems. In this regard, I am indebted to John Zaitseff and Dr Zavier Barthelemy for facilitating access to the “Leonardi” and “Manning” systems at the Faculty of Engineering, University of NSW, and the Water Research Laboratory, respectively.

Further, this component of the research has benefitted significantly from direct consultation with some of the world’s leading time series experts and developers of method-specific analysis tools. I would like to thank the following individuals, whose contributions, ranging from specific and general expert advice to guidance and review, have helped considerably to shape the final product (in alphabetical order): Daniel Bowman (Department of Geological Sciences, University of North Carolina at Chapel Hill); Dr Eugene Brevdo (Research Department, Google Inc, USA); Emeritus Professor Dudley Chelton (College of Earth, Ocean and Atmospheric Sciences, Oregon State University, USA); Associate Professor Nina Golyandina (Department of Statistical Modelling, Saint Petersburg State University, Russia); Professor Rob Hyndman (Department of Econometrics and Business Statistics, Monash University, Australia); Professor Donghoh Kim (Department of Applied Statistics, Sejong University, South Korea); Associate Professor Anton Korobeynikov (Department of Statistical Modelling, Saint Petersburg State University, Russia); Emeritus Professor Stephen Pollock (Department of Economics, University of Leicester, UK); Dr Natalya Pya (Department of Mathematical Sciences, University of Bath, UK); Dr Andrew Robinson (Department of Mathematics and Statistics, University of Melbourne, Australia); Professor Ashish Sharma (School of Civil and Environmental Engineering, University of New South Wales, Australia); and Alexander Shlemov (Department of Statistical Modelling, Saint Petersburg State University, Russia).


Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Watson, P.J. (2016). Identifying the Best Performing Time Series Analytics for Sea Level Research. In: Rojas, I., Pomares, H. (eds) Time Series Analysis and Forecasting. Contributions to Statistics. Springer, Cham. https://doi.org/10.1007/978-3-319-28725-6_20


DOI: https://doi.org/10.1007/978-3-319-28725-6_20


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-28723-2

Online ISBN: 978-3-319-28725-6

eBook Packages: Mathematics and Statistics (R0)