Abstract

A gender classification system uses an image of a human face to determine the gender of the person shown. An effective gender classification approach can improve the performance of many other applications, including image and video retrieval, security monitoring, and human-computer interaction. In this paper, we present an effective method for gender classification in frontal facial images based on convolutional neural networks (CNNs). Our experiments show that the CNN-based method is effective and achieves higher classification accuracy than other methods on the FERET and CAS-PEAL-R1 facial datasets. Finally, we built a gender classification demo whose input is each scene frame captured by a camera and whose output is the original scene image with the detected facial areas marked.

1 Introduction

Visual information is critically important when we communicate with others. When we look at a person's face, we can not only identify who he or she is but also infer other information, for instance gender, age, ethnicity, and even mental state. This is not a difficult task for human beings, but it is a big challenge for machines. Gender classification plays a prominent role in many research areas, including image and video retrieval, human-computer interaction, robot vision, security monitoring, and demographic studies.

A computer system capable of gender classification has broad prospects in basic and applied research. Although face recognition has been explored by a great many researchers, only a few studies on gender classification have been reported. Gender classification can halve the search space in face recognition, which makes it a useful preprocessing step for face identification.

This paper presents an effective and feasible method for gender classification in facial images that achieves better precision on this task. In addition, other methods for gender classification in face images, covering both feature extraction and classification, are presented and compared. Our experiments show that the proposed method achieves higher accuracy on the gender classification task.

2 Related Works

Xia et al. [1] combined shape and texture features in their experiment. They used Random Forest on the FRGC-2.0 dataset with 10-fold cross-validation and achieved an accuracy of 93.27 %. Timotius et al. [2] built a gender classifier with the edge orientation histogram, which is estimated on image pixels, and achieved an accuracy of 84.2 % on the VISiO Lab face database. Shan [3] adopted a compact Adaboost algorithm to learn a better description from local binary pattern (LBP) histogram features and obtained an accuracy of \(94.40 \pm 0.86\)% on the LFW database [4]. Jabid et al. [5] proposed a novel texture descriptor, the local directional pattern (LDP), for gender recognition from facial images and achieved an accuracy of 95.05 % on the FERET facial dataset. Hadid et al. [6], who relied on the motion across video frame sequences, presented a gender classification algorithm based on LBP features with boosting in the spatio-temporal domain and achieved high precision on the CRIM, VidTIMIT, and Cohn-Kanade video face databases. Kumari et al. [7] applied principal component analysis (PCA), which represents images as eigenvectors in a low-dimensional subspace, together with a non-linear support vector machine (SVM) to gender classification and achieved an accuracy of 92 % on the Indian face database.

In China, Li et al. [8] proposed an effective method based on the AdaBoost algorithm that combines facial and hair information for gender recognition, achieving accuracies of \(95.1 \pm 0.5\)% on the FERET dataset and \(95.0 \pm 0.8\)% on the BCMI dataset. Zhang [9] used the VG-TSA algorithm with SVM to recognize gender from face images in videos. Chu et al. [10] randomly cropped the detected face image and distinguished gender with support subspaces, achieving an accuracy of 91.13 % on the FERET dataset. Chen et al. [11] proposed an iterative learning algorithm that combines active appearance models (AAM) with SVM and obtained a great improvement on the IMM face database [12].

3 Convolutional Neural Networks

This section describes the feature extraction, the architecture of the classification model, and the dropout regularization used in our experiments.

3.1 Architecture

Convolutional neural networks (CNNs) consist of a sequence of convolutional and subsampling layers followed by one or more fully connected layers, as in a multi-layer perceptron network. CNNs follow the multi-stage Hubel-Wiesel architecture, which extracts local features at high resolution and combines them into more complex features at lower resolution.

The lower layers alternate between convolutional layers and pooling layers. Each convolutional layer performs a two-dimensional discrete convolution on its source image with a given kernel (filter) and applies a nonlinear transfer function. The objective of the pooling layers is to reduce the size of the input map by averaging or summarizing neurons from a small spatial neighborhood. The upper layers, however, are fully connected and correspond to a traditional multi-layer perceptron network, as illustrated in Fig. 1.

In CNNs such as LeNet-5, proposed by LeCun et al. [13], shift invariance is implemented through subsampling layers. Small non-overlapping receptive fields in these layers receive inputs from neurons of the previous layer. Each neuron computes the sum of its inputs, multiplies it by a trainable coefficient, and adds a bias. Finally, the intermediate result is passed through a nonlinear transfer function.

3.2 Convolutional Layers

The purpose of the convolutional layer is to extract the underlying patterns found within local regions of the input images throughout the dataset. It slides a filter over the input image pixels and computes the inner product of the kernel and the pixels at every position of the image.

The input to a convolutional layer is \(\mathbf {x}_i\), where \(\mathbf {x}_i \in \mathbb {R}^{w \times h}\) is the \(w \times h\) matrix corresponding to the pixels of input map i (an image with width w and height h).

The convolutional layer has k kernels of size \(m \times n\), where m and n are smaller than the width and height of the image, respectively. The kernels are convolved with the image to produce k feature maps of size \((w-m+1) \times (h-n+1)\), and their small size gives rise to the locally connected structure.

In a convolutional layer, the feature maps of the previous layer are convolved with learnable kernels and passed through the activation function to form the output feature maps. Each output map may combine convolutions with multiple input maps. We have

\[\mathbf {a}^{(l)}_j = f\left( b_j + \sum _{i} \mathbf {k}^{(l)}_{ij} * \mathbf {a}^{(l-1)}_i \right) \]

where \(*\) is the convolution operator, l is the index of the layer in the network, \(\mathbf {a}^{(l-1)}_i = \mathbf {x}_i\) when \(l=2\) (the 1st layer in the network is the data input layer, and the 2nd layer is a convolutional layer), \(\mathbf {k}^{(l)}_{ij} \in \mathbb {R}^{m \times n}\) is the kernel of the l-th layer between the i-th input map and the j-th output map, and \(f( \cdot )\) is a non-linear transfer function applied to the activations, such as the hyperbolic tangent function.

An additive bias \(b_j\) is given to each output map. For a specific output map, each input map is convolved with its own distinct kernel. In other words, if both the \(j_1\)-th output map and the \(j_2\)-th output map sum over the i-th input map, then the kernels applied to the i-th input map are different for the \(j_1\)-th and \(j_2\)-th output maps.
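The convolutional layer described in this subsection can be sketched in a few lines of plain Python. This is a minimal illustration rather than the implementation used in the experiments: the function names `conv2d_valid` and `conv_layer` are hypothetical, the kernel is slid without flipping (the inner-product reading given above; a strict convolution would flip it), and the first matrix dimension is treated as w and the second as h.

```python
import math


def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` and take the inner product at every position.

    An image of size w x h and a kernel of size m x n give a feature map of
    size (w - m + 1) x (h - n + 1). The kernel is not flipped (assumption).
    """
    w, h = len(image), len(image[0])
    m, n = len(kernel), len(kernel[0])
    out = []
    for r in range(w - m + 1):
        row = []
        for c in range(h - n + 1):
            s = 0.0
            for u in range(m):
                for v in range(n):
                    s += image[r + u][c + v] * kernel[u][v]
            row.append(s)
        out.append(row)
    return out


def conv_layer(input_maps, kernels, biases, f=math.tanh):
    """Compute every output map j as f(b_j + sum_i conv(input_i, kernels[i][j])).

    kernels[i][j] is the kernel between input map i and output map j, so each
    (input map, output map) pair has its own kernel.
    """
    outputs = []
    for j, b_j in enumerate(biases):
        acc = None
        for i, x in enumerate(input_maps):
            fm = conv2d_valid(x, kernels[i][j])
            if acc is None:
                acc = fm
            else:
                acc = [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(acc, fm)]
        outputs.append([[f(v + b_j) for v in row] for row in acc])
    return outputs
```

Passing `f=lambda v: v` disables the nonlinearity, which makes the summation over input maps easy to check by hand.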

3.3 Pooling Layers

The objective of pooling [14] layers is to achieve spatial invariance by reducing the resolution of the feature maps. Each map is subsampled, typically with mean or max pooling over \(p \times q\) adjoining regions, where p and q are the width and height of the patches in the pooling layer. In general, we have:

where \(R_j\) is the j-th pooling region with \(p \times q\) size in the i-th input map.

Two conventional operators can be chosen for \(g( \cdot )\): average and max. The former takes the arithmetic mean of the elements in each pooling region:

whereas the max operator picks the largest element in each pooling region:

Both pooling operators have their own disadvantages when training deep convolutional networks. With average pooling, all elements in a pooling region are taken into account, even though many of them have low magnitude. Max pooling does not suffer from this weakness, but in practice it easily overfits the training set, which makes it difficult to generalize well to test instances. In forward propagation, each pooling region of size \(p \times q\) is reduced to a single value. During back-propagation, this single value receives an error from the layer above, and that error is passed back only to the position the value came from. Because only one position within each \(p \times q\) region receives the error, the errors back-propagated through max-pooling layers are considerably sparse.
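The two pooling operators and the sparse error routing of max pooling can be illustrated with the following sketch. It assumes non-overlapping \(p \times q\) regions that tile the map; the names `pool2d`, `mean_op`, `max_op`, and `max_pool_backward` are hypothetical and are not taken from the implementation used in the experiments.

```python
def pool2d(feature_map, p, q, g):
    """Reduce each non-overlapping p x q region to one value using g."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - p + 1, p):
        out_row = []
        for c in range(0, cols - q + 1, q):
            region = [feature_map[r + u][c + v] for u in range(p) for v in range(q)]
            out_row.append(g(region))
        pooled.append(out_row)
    return pooled


def mean_op(region):
    """Average pooling: arithmetic mean of all elements in the region."""
    return sum(region) / len(region)


def max_op(region):
    """Max pooling: the largest element in the region."""
    return max(region)


def max_pool_backward(feature_map, p, q, grad_out):
    """Pass each pooled error back only to the arg-max position of its region.

    All other positions keep a zero gradient, which is why the result is sparse.
    """
    rows, cols = len(feature_map), len(feature_map[0])
    grad_in = [[0.0] * cols for _ in range(rows)]
    for i, r in enumerate(range(0, rows - p + 1, p)):
        for j, c in enumerate(range(0, cols - q + 1, q)):
            offsets = [(u, v) for u in range(p) for v in range(q)]
            best = max(offsets, key=lambda uv: feature_map[r + uv[0]][c + uv[1]])
            grad_in[r + best[0]][c + best[1]] = grad_out[i][j]
    return grad_in
```

With \(2 \times 2\) regions, only one in four entries of the back-propagated error map is non-zero.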

3.4 Regularization

For regularization, we apply dropout [15] to the hidden layer together with a constraint on the \(l_2\)-norms of the weight vectors. Dropout prevents overfitting by randomly dropping units out of the network with probability p, that is, by setting hidden units to zero with probability p during forward and backward propagation. Given the hidden layer, also called the perceptron layer (after the pooling layer), \(\mathbf {a}^{(l-1)} = [a_1, \dots , a_h]\), instead of using

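\[\mathbf {a}^{(l)} = \mathbf {w} \cdot \mathbf {a}^{(l-1)} + b\]

for output unit \(\mathbf {a}^{(l)}\) in feedforward propagation, dropout uses

\[\mathbf {a}^{(l)} = \mathbf {w} \cdot \left( \mathbf {a}^{(l-1)} \circ r \right) + b\]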

where \(\circ \) is the element-wise multiplication operator and \(r \in \mathbb {R}^{h}\) is a masking vector of Bernoulli random variables with probability p of being 1. At training time, gradients are back-propagated only through the unmasked units. At test time, the learned weight vectors are scaled by p such that \(\hat{\mathbf {w}}=p\mathbf {w}\), and \(\hat{\mathbf {w}}\) is used without dropout. In addition, we constrain the \(l_2\)-norms of the weight vectors by rescaling \(\mathbf {w}\) so that \(||\mathbf {w}||_2=s\) whenever \(||\mathbf {w}||_2 > s\) after a gradient descent step.
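A minimal sketch of the three operations in this subsection is given below: the masked forward pass, the test-time weight scaling, and the \(l_2\)-norm constraint. It follows the definition of the mask given above, so p is the probability that a mask entry is 1. The function names and the linear form of the output unit (a dot product plus a bias, with no activation) are assumptions made for illustration.

```python
import math
import random


def dropout_forward(a_prev, w, b, p, rng):
    """Training-time output unit w . (a_prev o r) + b with r_k ~ Bernoulli(p)."""
    r = [1.0 if rng.random() < p else 0.0 for _ in a_prev]
    masked = [a * r_k for a, r_k in zip(a_prev, r)]
    output = sum(w_k * a_k for w_k, a_k in zip(w, masked)) + b
    return output, r


def scale_weights_for_inference(w, p):
    """Test-time weights w_hat = p * w, used without any masking."""
    return [p * w_k for w_k in w]


def clip_l2_norm(w, s):
    """Rescale w so that ||w||_2 = s whenever ||w||_2 > s."""
    norm = math.sqrt(sum(w_k * w_k for w_k in w))
    if norm > s:
        return [w_k * s / norm for w_k in w]
    return list(w)
```

In a training loop, `clip_l2_norm` would be applied to each weight vector after every gradient descent step, and `scale_weights_for_inference` once after training.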

4 Experiments

This section describes the datasets, data preprocessing steps, detailed hyperparameters, as well as the training procedure in our experiments.

4.1 Datasets and Computers

Our experiments were carried out on the FERET [16] dataset and the CAS-PEAL-R1 [17] formal subsets, which we labeled ourselves, as described in Table 1. The FERET face dataset consists of 1351 8-bit grayscale images of 193 people (108 males and 85 females, 7 images each), with head views ranging from frontal to left and right profiles. The CAS-PEAL-R1 formal subsets comprise 1040 Chinese face images of 208 people (119 males and 89 females, 5 images each).

We used a workstation running Ubuntu 14.04 for our experiments. It has 2 Intel Core CPUs with 8 cores each (Intel(R) Core(TM) i7-5960X CPU at 3.00 GHz), 32 GB of memory, and a GeForce GTX TITAN Z GPU (6 GB memory). The experiments below are carried out on the GPU with Theano 0.7.0.

4.2 Data Preprocessing

The facial images in the datasets are preprocessed before model training. The preprocessing steps are as follows.

Gray-Scale. First, color images with 3 color channels are converted into grayscale images as follows.

where \(x^{(R)}_{ij}\), \(x^{(G)}_{ij}\) and \(x^{(B)}_{ij}\) are the pixel values of the R, G, and B channels.

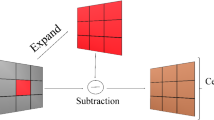

Face Detection. Second, histogram equalization is used to adjust the image intensities and enhance contrast. We then detect the face area in the image using Haar-like features with the Adaboost method [18] and crop the detected area. The cropped images are then resized to \(80 \times 80\) on the FERET dataset and \(100 \times 100\) on the CAS-PEAL-R1 dataset.

Normalization. Finally, data normalization, a mapping from the range 0\(\sim \)255 to the range 0\(\sim \)1, is applied to these datasets. The formula is as follows:

where \(\hat{x}_{ij}\) is the normalized value of the original pixel \(x_{ij}\) of \(\mathbf {x}\).

Visualized samples of facial images from the FERET and CAS-PEAL-R1 datasets after preprocessing are shown in Fig. 2.
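The pixel-level steps of this pipeline (grayscale conversion, histogram equalization, and normalization) might look as follows in plain Python. Several details are assumptions rather than facts from the experiments: the grayscale weights are the common ITU-R BT.601 luma coefficients, the normalization is a division by 255, and the function names are hypothetical. Face detection and resizing are left out because they depend on an external detector.

```python
def to_gray(rgb):
    """Convert rows of (R, G, B) tuples in 0..255 to gray values.

    The weights 0.299, 0.587, 0.114 are an assumption (BT.601 luma).
    """
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def equalize_histogram(gray):
    """Spread 8-bit intensities over 0..255 using the cumulative histogram."""
    flat = [int(round(v)) for row in gray for v in row]
    hist = [0] * 256
    for v in flat:
        hist[v] += 1
    cdf, running = [0] * 256, 0
    for level in range(256):
        running += hist[level]
        cdf[level] = running
    cdf_min = next(c for c in cdf if c > 0)
    total = len(flat)
    if total == cdf_min:
        # Constant image: there is no contrast to enhance.
        return [[int(round(v)) for v in row] for row in gray]
    return [
        [round((cdf[int(round(v))] - cdf_min) / (total - cdf_min) * 255) for v in row]
        for row in gray
    ]


def normalize(gray):
    """Map pixel values from 0..255 to 0..1 (division by 255 is an assumption)."""
    return [[v / 255.0 for v in row] for row in gray]
```

Chaining the three functions as `normalize(equalize_histogram(to_gray(image)))` yields a matrix with values between 0 and 1 that could be fed to the input layer.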

4.3 Hyperparameters and Training

We implemented the CNN architecture with dropout regularization [15] and two learning algorithms ourselves with Theano. For all datasets, we randomly split the data 20 %–80 % into a training set and a test set. Training is carried out with mini-batch Stochastic Gradient Descent (SGD) [19] using a learning-rate-decay update rule, and with the ADADELTA [20] method.

Networks Structure. The exact network architectures for the FERET and CAS-PEAL-R1 datasets in our experiments are listed in Tables 2 and 3. Taking the FERET dataset as an example, the input layer has size \(80 \times 80\) and is followed by several stages of convolution with max-pooling, with the number of kernels increasing and the patch size decreasing. Finally, the output of the previous layer is flattened, passed through fully connected layers with dropout regularization, and classified by a softmax layer. The fourth column in Tables 2 and 3, #params, gives the number of parameters to be learned; for instance, 0.4K corresponds to \(16 \times 5 \times 5\).
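The arithmetic behind the #params column and the shrinking feature-map sizes can be checked with a small helper. The sketch assumes that biases are not counted and that the first layer has a single input map, which is what \(16 \times 5 \times 5 = 400\) suggests; the pooling size of 2 in the usage note is purely illustrative, and both function names are hypothetical.

```python
def conv_weight_count(num_kernels, kernel_h, kernel_w, input_maps=1):
    """Number of kernel weights in a convolutional layer (biases excluded)."""
    return num_kernels * input_maps * kernel_h * kernel_w


def valid_output_size(size, kernel, pool=1):
    """Side length after a valid convolution followed by non-overlapping pooling."""
    return (size - kernel + 1) // pool
```

For example, `conv_weight_count(16, 5, 5)` gives 400, the 0.4K quoted above, and `valid_output_size(80, 5, pool=2)` gives 38 for an \(80 \times 80\) input.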

For convolutional layers, the number of kernels increases as the network goes deeper. For pooling layers, we achieve spatial invariance by reducing the size of the feature maps with max-pooling over given contiguous regions. The loss function of our network is the negative log-likelihood at the output layer, as follows.

where \(\theta \) denotes the parameters to be learned in this network, \(W^i\) and \(b^i\) are the weight matrix and bias of the i-th hidden layer, respectively, and \(\mathcal {D}\) is the training dataset, so that \(|\mathcal {D}|\) is the number of training samples.
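A negative log-likelihood over a softmax output layer can be sketched as below. Whether the loss is summed or averaged over \(\mathcal {D}\) is an assumption here: the sketch returns the sum, and dividing by the number of samples gives the mean. The function names are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw output-layer scores into class probabilities."""
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def negative_log_likelihood(batch_scores, labels):
    """Sum of -log P(Y = y_i | x_i, theta) over the samples in the batch."""
    total = 0.0
    for scores, y in zip(batch_scores, labels):
        total -= math.log(softmax(scores)[y])
    return total
```

For a two-class problem such as gender classification, a sample with equal scores for both classes contributes \(\log 2\) to the loss.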

Learning Algorithm. In our experiments, two learning algorithms, SGD [19] and ADADELTA [20], are used to optimize the loss function and obtain the optimal parameters in the training step.

A stochastic gradient trainer with momentum \(\mu \) and learning rate \(\alpha \) updates parameter \(\theta \) at step t by blending the current velocity v with the current gradient \(\frac{\partial \mathcal {L}}{\partial \theta }\). In addition, we decrease the learning rate gradually at every step, so the learning decay rate \(\gamma \) is no more than one, and the gradient update rule is given by Eqs. (10)–(12). (For more training details, please refer to [19].)
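One common way to write such a trainer is sketched below, assuming the classical momentum form (velocity = \(\mu v - \alpha \, \partial \mathcal {L} / \partial \theta \), then \(\theta \) moves by the velocity) and a multiplicative decay \(\alpha \leftarrow \gamma \alpha \). These forms and the function names are assumptions; the exact update rule used in the experiments may differ in detail.

```python
def sgd_momentum_step(theta, velocity, grad, alpha, mu):
    """Blend the previous velocity with the current gradient, then move theta."""
    new_velocity = [mu * v - alpha * g for v, g in zip(velocity, grad)]
    new_theta = [t + v for t, v in zip(theta, new_velocity)]
    return new_theta, new_velocity


def decay_learning_rate(alpha, gamma):
    """Shrink the learning rate by the decay rate gamma (gamma <= 1)."""
    return alpha * gamma
```

Starting from a learning rate of 0.01 and a decay rate of 0.998, one call to `decay_learning_rate` gives approximately 0.00998.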

The ADADELTA method uses the same general strategy as all first-order stochastic gradient methods, in that it makes small parameter adjustments iteratively using local derivative information. The difference is that, as gradients are computed during each parameter update, ADADELTA also maintains an exponentially-weighted moving average (EWMA) of the gradient values as well as an EWMA of recent parameter steps. The update rule is given by Eqs. (13)–(16). (For more training details, please refer to [20].)
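The update can be sketched as follows, based on the standard formulation of ADADELTA in [20]: an EWMA of squared gradients, a step scaled by the ratio of the two running root-mean-square values, and an EWMA of squared steps. The function name and the list-based parameter layout are illustrative assumptions.

```python
import math


def adadelta_step(theta, grad, avg_sq_grad, avg_sq_step, rho=0.95, eps=1e-6):
    """One ADADELTA update for a list of scalar parameters."""
    new_theta, new_sq_grad, new_sq_step = [], [], []
    for t, g, eg2, ed2 in zip(theta, grad, avg_sq_grad, avg_sq_step):
        # EWMA of squared gradients
        eg2 = rho * eg2 + (1.0 - rho) * g * g
        # Step scaled by RMS of past steps over RMS of gradients
        step = -math.sqrt(ed2 + eps) / math.sqrt(eg2 + eps) * g
        # EWMA of squared parameter steps
        ed2 = rho * ed2 + (1.0 - rho) * step * step
        new_theta.append(t + step)
        new_sq_grad.append(eg2)
        new_sq_step.append(ed2)
    return new_theta, new_sq_grad, new_sq_step
```

Both running averages start at zero, so the first steps are small and grow as the average of squared steps accumulates.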

Parameters Settings. In our experiments, the \(\tanh (\cdot )\) activation function is used for the convolutional and fully connected layers, and the dropout rates (p) are set to 0.2 and 0.5 for the flatten layers and fully connected layers, respectively. The initial bias b is set to 0, and the initial weight matrix is drawn as \(W \sim U \left[ -\sqrt{\frac{6}{I+O}}, \sqrt{\frac{6}{I+O}} \right) \) for each layer, where I and O are determined by the input and output feature maps of the hidden layer, respectively.
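The weight initialization above can be sketched as follows, treating I and O as the input and output sizes (fan-in and fan-out) of a layer, which is an assumption about how they are counted. The function name is hypothetical, and `random.Random.uniform` may in rare cases return the upper bound itself, so the half-open interval is only approximated.

```python
import math
import random


def uniform_init(fan_in, fan_out, rng):
    """Draw a fan_in x fan_out weight matrix from U[-limit, limit).

    limit = sqrt(6 / (fan_in + fan_out)); biases would be set to 0 separately.
    """
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_out)] for _ in range(fan_in)]
```

For instance, a layer with 4 inputs and 2 outputs has a limit of exactly 1, so every weight is drawn from the interval between -1 and 1.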

For the SGD trainer, we use an initial learning rate \(\alpha _0\) of 0.01, a learning decay rate \(\gamma \) of 0.998, and a mini-batch size of 30. For the ADADELTA trainer, we set the decay constant \(\rho \) to 0.95 and the constant \(\epsilon \) to \(10^{-6}\). These hyperparameters were selected through a grid search on the FERET and CAS-PEAL-R1 datasets.

4.4 Results and Comparison

As shown in Fig. 3, with the learning-rate-decay method the cost declines smoothly and gradually stabilizes on all datasets as the number of epochs increases. In contrast, with the ADADELTA method the cost fluctuates strongly in the first few epochs and then gradually stabilizes on all datasets.

On the other hand, the accuracy increases smoothly as the number of epochs increases. With the SGD learning algorithm, the misclassification rate on the test data, as defined by Eq. (17), reaches 4.07 % at the 259th epoch on FERET and 8.33 % at the 825th epoch on CAS-PEAL-R1. With the ADADELTA learning algorithm, the error rate reaches 2.23 % at the 82nd epoch on FERET and 6.67 % at the 75th epoch on CAS-PEAL-R1.

\[E = \frac{1}{|\mathcal {D}|} \sum _{i=1}^{|\mathcal {D}|} I\{\hat{y}_i \ne y_i\} \tag{17}\]

where \(I(\cdot )\) is the indicator function, with \(I\{a\;false\;statement\} = 0\) and \(I\{a\,true\,statement\} = 1\); \(y_i\) is the label of image \(\mathbf {x}_i\), \(\hat{y}_i\) is the corresponding predicted label, and \(|\mathcal {D}|\) is the number of test samples.
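The misclassification rate is simply the fraction of test samples whose predicted label differs from the true label. A minimal sketch, with a hypothetical function name:

```python
def misclassification_rate(y_true, y_pred):
    """Fraction of samples where the predicted label differs from the true one."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("label lists must be non-empty and of equal length")
    errors = sum(1 for y, y_hat in zip(y_true, y_pred) if y != y_hat)
    return errors / len(y_true)
```

Multiplying the result by 100 gives the percentage form used when reporting results.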

When such networks are trained with ADADELTA, the cost stops decreasing after a few epochs and remains at a level lower than that of the learning-rate-decay method, giving strong performance until convergence.

Accordingly, we compared our method with the main alternative methods for gender classification on the FERET and CAS-PEAL-R1 datasets, as described in Table 4. Logistic regression on image pixels was chosen as our baseline. Compared with Logistic Regression, RBF-SVM, C.H.O, Adaboost, and Stacked-Autoencoders, our method achieves misclassification rates of 2.23 % on FERET and 6.67 % on CAS-PEAL-R1, which are lower than those of previous works. Our method thus achieves a clear improvement in classification accuracy.

In addition, training time is greatly reduced in GPU mode compared with CPU mode, as shown in Tables 5 and 6. Regardless of the dataset, training in GPU mode is approximately \(150 \times \) faster than in CPU mode. The method is therefore very competitive and performs significantly better than its contemporaries.

5 Application to Gender Recognition from Camera

Finally, we used the CNN model, which we trained and whose hyperparameters we tuned ourselves, to build an application for gender classification from facial images.

The natural scene is captured by a camera. The whole image is then preprocessed to extract the human face area, as described in Sect. 4.2. Next, the facial area is fed as a 2D signal into the CNN model with its previously trained parameters and settings, including the weight matrix and bias of each layer, the kernels of each convolutional layer, the patch size of each pooling layer, and the dropout rates of the perceptron layers. Finally, the model output, which distinguishes male from female faces, is used to label the original image. A rectangle with black lines on a face denotes male, and a white one denotes female, as illustrated in Fig. 4.

6 Conclusion

An effective and feasible method for gender classification in facial images has been presented in this paper. The underlying gender descriptors of a face need to be general and invariant to pose, illumination, expression, and image quality. Our work demonstrates that a CNN model can learn from many samples to distinguish gender effectively in facial images. The ADADELTA method, which tunes the learning rate automatically, converges rapidly and achieves much lower misclassification rates in our work.

The remarkable improvement in gender classification attests to the potential of this approach in other vision domains as well. Finally, the method has been applied in a gender recognition system to build an application that predicts gender from facial images captured by a camera.

As a next step, we will further improve classification accuracy and focus on high-precision recognition under different face positions and orientations.

References

1. Xia, B., Ben Amor, B., Huang, D., Daoudi, M., Wang, Y., Drira, H.: Enhancing gender classification by combining 3D and 2D face modalities. In: 2013 Proceedings of the 21st European Signal Processing Conference (EUSIPCO), pp. 1–5, September 2013

2. Timotius, I.K., Setyawan, I.: Using edge orientation histograms in face-based gender classification. In: 2014 International Conference on Information Technology Systems and Innovation (ICITSI), pp. 93–98, November 2014

3. Shan, C.: Learning local binary patterns for gender classification on real-world face images. Pattern Recogn. Lett. 33(4), 431–437 (2012). Intelligent Multimedia Interactivity

4. Huang, G.B., Mattar, M., Lee, H., Learned-Miller, E.: Learning to align from scratch. In: NIPS (2012)

5. Jabid, T., Kabir, M.H., Chae, O.: Gender classification using local directional pattern (LDP). In: 2010 20th International Conference on Pattern Recognition (ICPR), pp. 2162–2165, August 2010

6. Hadid, A., Pietikainen, M.: Combining appearance and motion for face and gender recognition from videos. Pattern Recogn. 42(11), 2818–2827 (2009)

7. Kumari, S., Sa, P.K., Majhi, B.: Gender classification by principal component analysis and support vector machine. In: Proceedings of the 2011 International Conference on Communication, Computing and Security, ICCCS 2011, pp. 339–342. ACM, New York (2011)

8. Li, B., Lian, X.-C., Lu, B.-L.: Gender classification by combining clothing, hair and facial component classifiers. Neurocomputing 76(1), 18–27 (2012). Seventh International Symposium on Neural Networks (ISNN 2010) Advances in Web Intelligence

9. Zhang, M.: Design and implementation of video-based face gender recognition system using manifold learning. Master’s thesis, University of Electronic Science and Technology (2013)

10. Chu, W.-S., Huang, C.-R., Chen, C.-S.: Gender classification from unaligned facial images using support subspaces. Inf. Sci. 221, 98–109 (2013)

11. Chen, H., Wei, W.: Support vector AAM based iterative learning algorithm for gender classification. J. Zhejiang Univ. (Eng. Sci.) 39(12), 1989–2011 (2005)

12. Nordstrøm, M.M., Larsen, M., Sierakowski, J., Stegmann, M.B.: The IMM face database - an annotated dataset of 240 face images. Technical report, Informatics and Mathematical Modelling, Technical University of Denmark, DTU, Richard Petersens Plads, Building 321, DK-2800 Kgs. Lyngby, May 2004

13. LeCun, Y., Bottou, L., Bengio, Y., Haffner, P.: Gradient-based learning applied to document recognition. Proc. IEEE 86(11), 2278–2324 (1998)

14. Zeiler, M.D., Fergus, R.: Stochastic pooling for regularization of deep convolutional neural networks. CoRR, abs/1301.3557 (2013)

15. Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I., Salakhutdinov, R.: Dropout: a simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 15, 1929–1958 (2014)

16. The defense advanced research team: the facial recognition technology (FERET) database. Technical report, United States Department of Defense (1996)

17. Gao, W., Cao, B., Shan, S., Chen, X., Zhou, D., Zhang, X., Zhao, D.: The CAS-PEAL large-scale Chinese face database and baseline evaluations. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 38, 149–161 (2008)

18. Lienhart, R., Maydt, J.: An extended set of Haar-like features for rapid object detection. In: Proceedings of the 2002 International Conference on Image Processing, vol. 1, pp. I-900–I-903 (2002)

19. Rumelhart, D.E., Hinton, G.E., Williams, R.J.: Learning representations by back-propagating errors. In: Neurocomputing: Foundations of Research, pp. 696–699. MIT Press, Cambridge (1988)

20. Zeiler, M.D.: ADADELTA: an adaptive learning rate method. CoRR, abs/1212.5701 (2012)

21. Lu, L.: A study of gender classification and age estimation based on face image. Ph.D. thesis, Shanghai Jiao Tong University (2010)

22. Zhang, H., Zhu, Q.: Gender classification in face images based on stacked-autoencoders method. In: 2014 7th International Congress on Image and Signal Processing (CISP), pp. 486–491, October 2014

Acknowledgments

This research is partially supported by Beijing Natural Science Foundation (Grant 4152008). Hao is supported by the Foundation of Science and Technology of Beijing University of Technology (Grant ykj-2013-9341). We sincerely thank the anonymous reviewers for their thorough reviewing and valuable suggestions. In addition, the authors warmly thank Yutong Yu for the data labelling work, and Lei Wang and Zhiqiang Wang for their comments and discussions.

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Zhang, H., Zhu, Q., Jia, X. (2015). An Effective Method for Gender Classification with Convolutional Neural Networks. In: Wang, G., Zomaya, A., Martinez, G., Li, K. (eds) Algorithms and Architectures for Parallel Processing. ICA3PP 2015. Lecture Notes in Computer Science(), vol 9529. Springer, Cham. https://doi.org/10.1007/978-3-319-27122-4_6

DOI: https://doi.org/10.1007/978-3-319-27122-4_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-27121-7

Online ISBN: 978-3-319-27122-4

eBook Packages: Computer Science, Computer Science (R0)